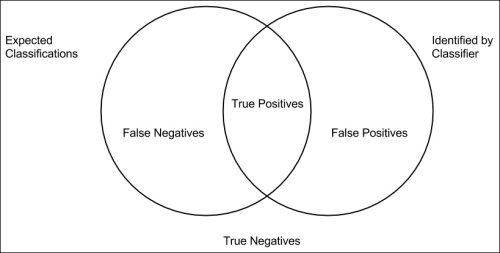

To calculate the error rates on classification algorithms, we'll keep count of several things. We'll track how many positives are correctly and incorrectly identified as well as how many negatives are correctly and incorrectly identified. These values are usually called true positives, false positives, true negatives, and false negatives. The relationship of these values to the expected values and the classifier's outputs and to each other can be seen in the following diagram:
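As a quick sketch of what these four counts look like, the following snippet pairs some made-up expected ratings with made-up classifier outputs and tallies them with Clojure's built-in frequencies function, rather than the reduce-based accumulator described below. It assumes "+" and "-" are the only possible ratings; the names expected, actual, tallies, and counts are purely illustrative.

```clojure
;; Hypothetical sample data: expected ratings and a classifier's outputs.
(def expected ["+" "+" "-" "-" "+" "-"])
(def actual   ["+" "-" "-" "+" "+" "-"])

;; Pair each expected rating with the classifier's output and count how
;; often each distinct [expected actual] combination occurs.
(def tallies (frequencies (map vector expected actual)))

;; Give each of the four possible combinations its usual name,
;; defaulting to 0 for combinations that never occur.
(def counts
  {:true-pos  (get tallies ["+" "+"] 0)   ; expected +, classified +
   :true-neg  (get tallies ["-" "-"] 0)   ; expected -, classified -
   :false-neg (get tallies ["+" "-"] 0)   ; expected +, classified -
   :false-pos (get tallies ["-" "+"] 0)}) ; expected -, classified +
```

Here the classifier gets four of the six items right, so two items fall into each "true" category and one into each "false" category.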

From these numbers, we'll first calculate the precision of the algorithm: the ratio of true positives to all items identified as positive (true positives plus false positives). This tells us what fraction of the items the classifier labeled positive actually are positive.
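As a formula, writing TP for the number of true positives and FP for the number of false positives, followed by a worked example on made-up counts (TP = 8, FP = 2):

$$
\text{precision} = \frac{TP}{TP + FP}
\qquad\text{e.g.}\qquad
\frac{8}{8 + 2} = 0.8
$$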

We'll then calculate the recall: the ratio of true positives to all actual positives (true positives plus false negatives). This tells us what fraction of the actual positives the classifier finds, and therefore how many it's missing.
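As a formula, writing TP for the number of true positives and FN for the number of false negatives, followed by a worked example on made-up counts (TP = 8, FN = 4):

$$
\text{recall} = \frac{TP}{TP + FN}
\qquad\text{e.g.}\qquad
\frac{8}{8 + 4} = \frac{2}{3} \approx 0.667
$$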

To tally these counts, we'll use a standard reduce loop. First, we'll write its accumulator function. This takes a map of the counts we're tallying and a pair of ratings, the expected one and the actual one. Depending on what the two ratings are and whether they match, it increments one of the counts:

```clojure
(defn accum-error [error-counts pair]
  ;; Map each [expected actual] pair of ratings to the count it affects.
  (let [get-key {["+" "+"] :true-pos
                 ["-" "-"] :true-neg
                 ["+" "-"] :false-neg
                 ["-" "+"] :false-pos}
        k (get-key pair)]
    ;; Increment that count.
    (assoc error-counts k (inc (error-counts k)))))
```

Once we have the counts for a test set, we'll need to summarize them as precision and recall figures:

```clojure
(defn summarize-error [error-counts]
  (let [{:keys [true-pos false-pos true-neg false-neg]} error-counts]
    ;; Precision is TP / (TP + FP); recall is TP / (TP + FN).
    {:precision (float (/ true-pos (+ true-pos false-pos)))
     :recall    (float (/ true-pos (+ true-pos false-neg)))}))
```

With these two defined, the function that actually calculates the error is standard Clojure:

```clojure
(defn compute-error [expecteds actuals]
  (let [start {:true-neg 0, :false-neg 0, :true-pos 0, :false-pos 0}]
    ;; Pair up expected and actual ratings, tally them, then summarize.
    (summarize-error
      (reduce accum-error start (map vector expecteds actuals)))))
```

We can do something similar to find the mean error of a collection of precision/recall maps. We could compute the mean of each key separately, but that would walk over the collection once per key. Instead, we'll walk over it once, summing both keys as we go, and then divide each sum by the number of items:

```clojure
(defn mean-error [coll]
  (let [start {:precision 0, :recall 0}
        ;; Add one map's precision and recall to the running sums.
        accum (fn [a b]
                {:precision (+ (:precision a) (:precision b))
                 :recall    (+ (:recall a) (:recall b))})
        ;; Divide each sum by the number of maps.
        summarize (fn [n a]
                    {:precision (/ (:precision a) n)
                     :recall    (/ (:recall a) n)})]
    (summarize (count coll) (reduce accum start coll))))
```

These functions will give us a good grasp of how well our classifiers perform at identifying the sentiments expressed in the data.
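The mean-error function spells out :precision and :recall explicitly. As a sketch of a more generic single-pass alternative, assuming every map in the collection has the same numeric keys, Clojure's merge-with can sum the values of matching keys; the name mean-by-key is hypothetical.

```clojure
;; Hypothetical generic variant: averages every key, assuming all maps
;; in coll share the same numeric keys.
(defn mean-by-key [coll]
  (let [n    (count coll)
        ;; merge-with + sums the values of matching keys across all maps.
        sums (apply merge-with + coll)]
    ;; Divide each summed value by the number of maps.
    (into {} (for [[k v] sums] [k (/ v n)]))))

(mean-by-key [{:precision 0.5 :recall 1.0}
              {:precision 1.0 :recall 0.5}])
;; => {:precision 0.75, :recall 0.75}
```

This version works for any set of keys. Unlike mean-error, it returns an empty map for an empty collection instead of throwing a divide-by-zero exception.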