Safety Instrumentation Functions and System (Including Fire and Gas System)

Abstract

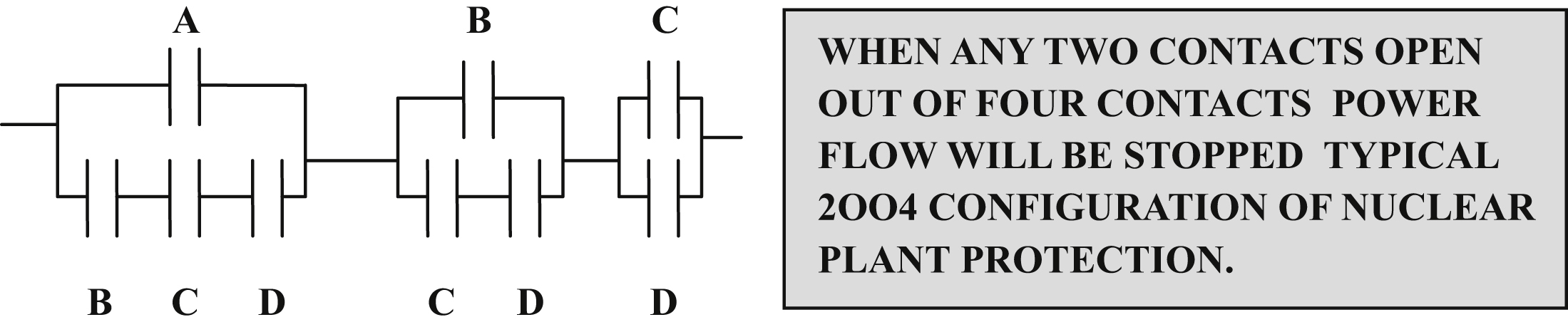

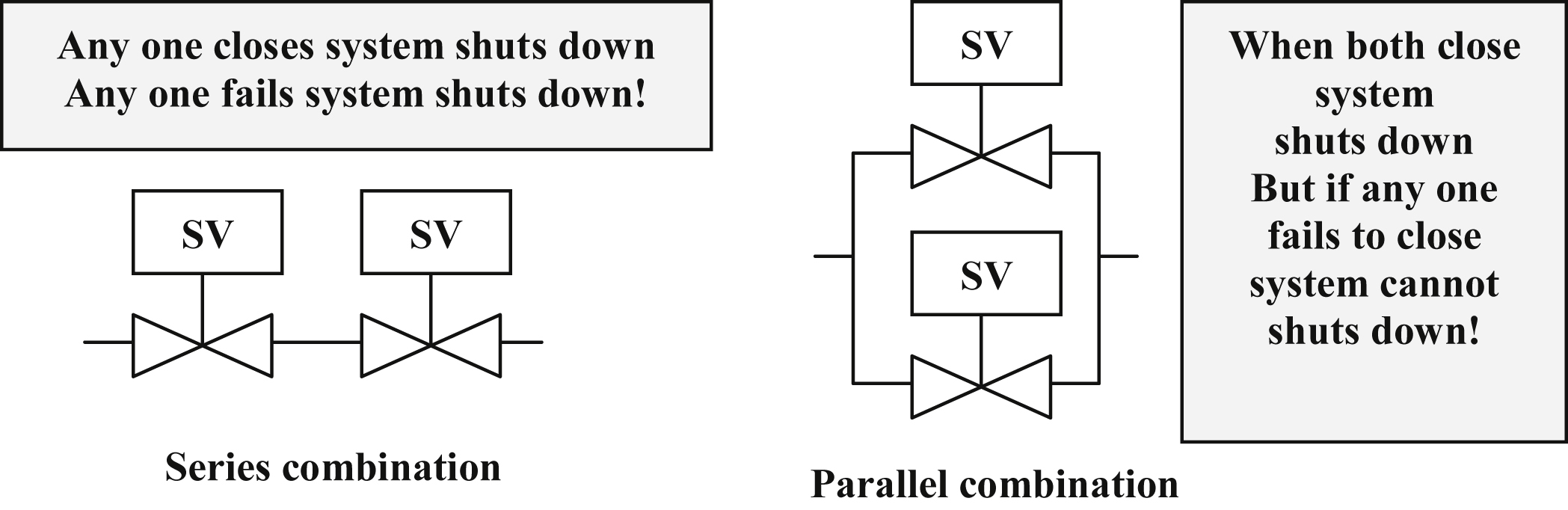

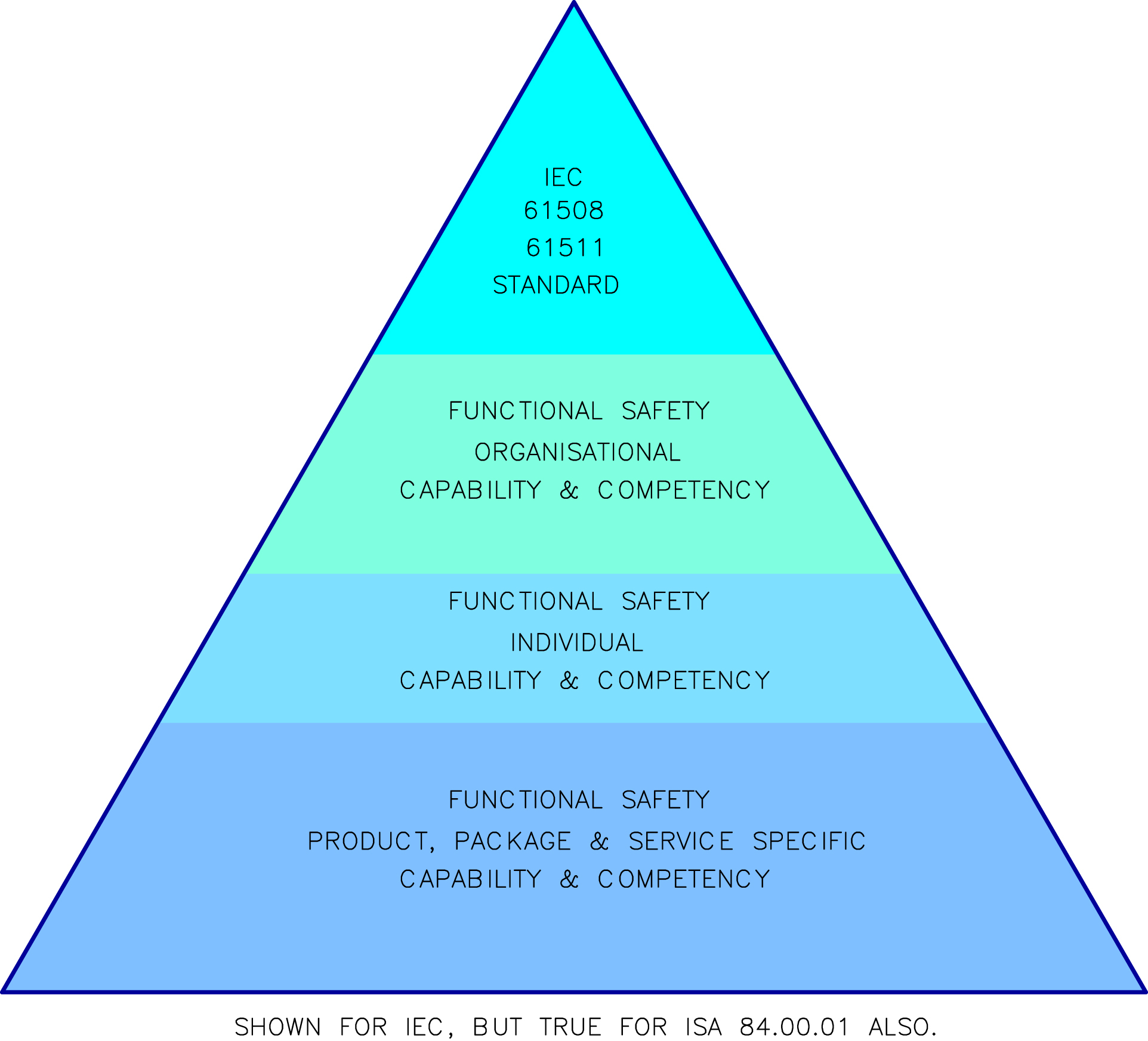

This chapter builds on the discussion of safety instrumented systems (SISs) based on IEC 61508 in the previous chapter and distinguishes among the terms SIS, safety instrumented function (SIF), and safety integrity level (SIL), which are often used interchangeably. The competency structure for SIS calls for a detailed discussion of reliability theory and its relationship to SIS. The bathtub curve and the Weibull distribution are applied to SIS reliability calculations. Of the various failure types, random, systematic, and common cause failures in instrumentation systems need special attention. Special requirements for sensors, logic solvers, and final elements are also covered, including a short discussion of the safety programmable logic controller. Redundancy and voting logic play an important role and are treated accordingly. SIS requirements differ for machinery systems, process plants, and nuclear plants, so these are treated separately. The fire and gas system (FGS) is an important issue in all plants, so it is discussed in detail, with special attention to the philosophy document, detection technology, and area coverage. Opinion is divided on prescriptive versus performance-based regulation for FGS, so the pros and cons of both are discussed.

Keywords

1.0. Safety Instrumented Function, Safety Instrumented System, and Safety Integrity Level Discussions

1.0.1. Risk Reduction

1.0.2. Safety Barriers for Risks

1.0.3. Safety Instrumented System Discussions

1.1. Safety Instrumented Function and Safety Instrumented System Functional Aspects – Discussions

1.1.1. Various Terms with Application Notes

1.1.2. Basic Principle Discussions

1.1.3. Scope, Boundary and Safety Requirement Specification

1.2. Failure and Failure Classes

1.2.1. Introductory Discussions

1.2.2. Bathtub Curve Discussions

1.2.3. Weibull Distribution

(VII/1.2.3-1)

1.2.4. Failure Types and Random Failure

1.2.5. Systematic Failure

1.2.6. Common Cause Failure

1.3. Reliability Theory and Safety Instrumented System Issues (Including Reliability Block Diagram, Risk Graph)

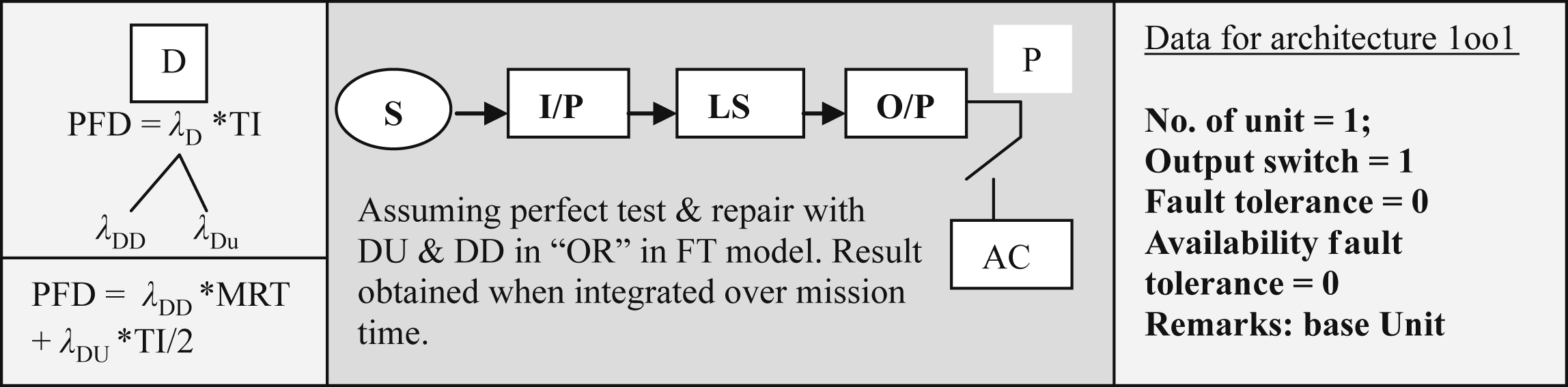

1.3.1. Common Terms in Reliability and SIS with Explanations

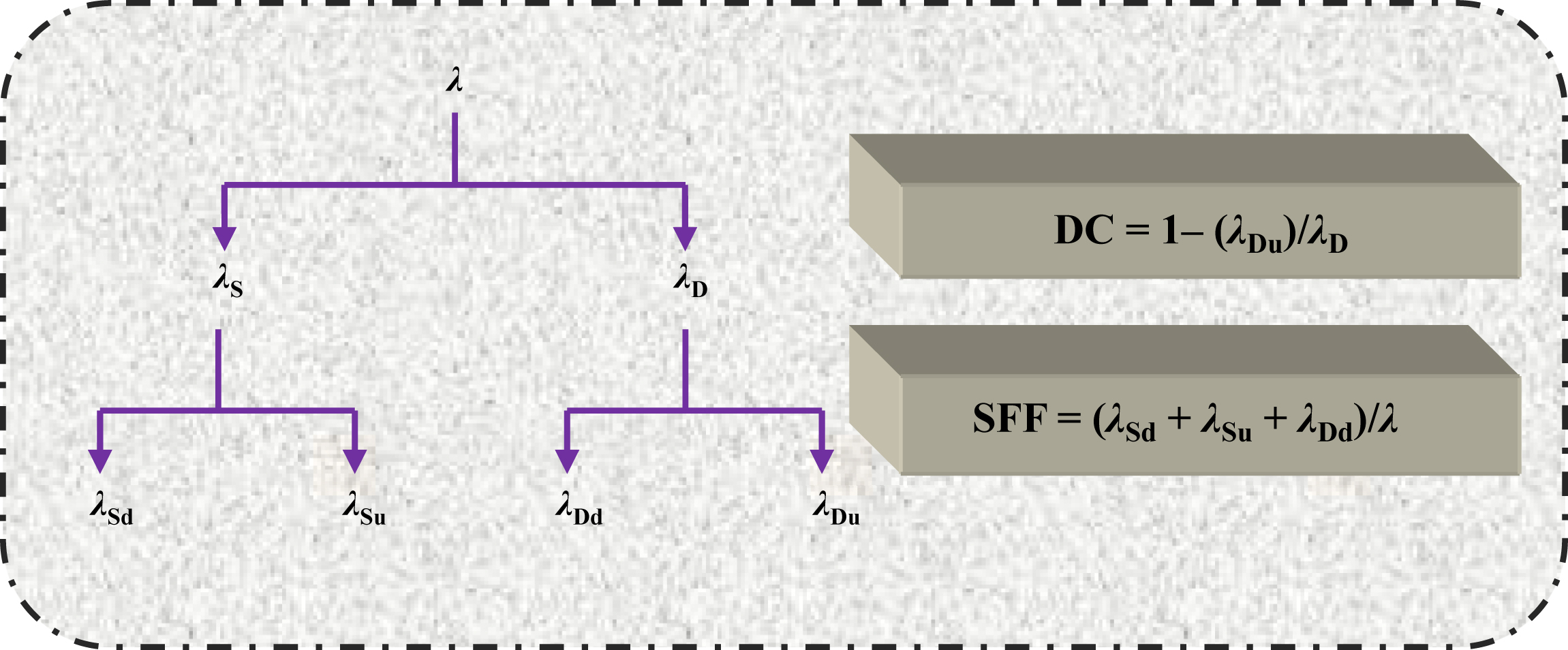

Table VII/1.3.1-1

Lists of Symbols for Failure Rate and Related Terms

| Various Terms | Symbol (Detected) | Symbol (Undetected) | Symbol (Others) |
| --- | --- | --- | --- |
| Safe failure (λS) | λSd | λSu | NA |
| Dangerous failure (λD) | λDd | λDu | NA |
| Safe failure fraction | NA | NA | SFF |
| Diagnostic coverage | NA | NA | DC |
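The symbols in the table can be tied together with a short numerical sketch. It assumes the commonly used IEC 61508 style definitions, DC = λDd / λD and SFF = (λS + λDd) / (λS + λD), which are not stated in this outline; the class name, function names, and failure-rate values are hypothetical and serve only as illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureRates:
    """Failure rates (per hour), split as in Table VII/1.3.1-1."""
    lam_sd: float  # safe, detected (λSd)
    lam_su: float  # safe, undetected (λSu)
    lam_dd: float  # dangerous, detected (λDd)
    lam_du: float  # dangerous, undetected (λDu)

    @property
    def lam_s(self) -> float:
        """Total safe failure rate λS = λSd + λSu."""
        return self.lam_sd + self.lam_su

    @property
    def lam_d(self) -> float:
        """Total dangerous failure rate λD = λDd + λDu."""
        return self.lam_dd + self.lam_du


def diagnostic_coverage(r: FailureRates) -> float:
    """DC = λDd / λD (assumed definition, see lead-in)."""
    if r.lam_d <= 0:
        raise ValueError("total dangerous failure rate must be positive")
    return r.lam_dd / r.lam_d


def safe_failure_fraction(r: FailureRates) -> float:
    """SFF = (λS + λDd) / (λS + λD) (assumed definition, see lead-in)."""
    total = r.lam_s + r.lam_d
    if total <= 0:
        raise ValueError("total failure rate must be positive")
    return (r.lam_s + r.lam_dd) / total


# Hypothetical device data, for illustration only.
rates = FailureRates(lam_sd=2e-7, lam_su=3e-7, lam_dd=4e-7, lam_du=1e-7)
print(f"DC  = {diagnostic_coverage(rates):.2f}")
print(f"SFF = {safe_failure_fraction(rates):.2f}")
```

Only the dangerous undetected rate λDu lowers the SFF, which is why diagnostics that move failures from λDu to λDd raise both DC and SFF.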

1.3.2. Reliability Theory and Measurements

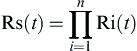

(VII/1.3.2-1)

(VII/1.3.2-2)

(VII/1.3.2-3)

Table VII/1.3.2-1

Major Distribution in Reliability

| Distribution | Formula | Remarks |
| --- | --- | --- |
| Exponential | The survival function: $R(t) = e^{-\lambda t}$ | Failures occur at random intervals and the expected number of failures is the same for equally long periods. The hazard function h(t) is a constant λ, and 1/λ is the MTBF (θ), so 63.2% of items will have failed by time t = θ. |
| Weibull | The survival function: $R(t) = \exp\left[-\left(\frac{t}{\eta}\right)^{\beta}\right]$ | Compare with Eq. (VII/1.2.3-1) in Clause 1.2.3, where the influencing factors have been elaborated (here γ = 0). Also refer to Fig. VII/1.2-1, the bathtub curve, for the following: when β = 1, the hazard function is constant and can be modeled with η = 1/λ; when β < 1, the hazard function decreases; when β > 1, the hazard function increases. |
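The remarks in the table can be checked numerically. The sketch below assumes the standard two-parameter Weibull form (γ = 0) with survival function R(t) = exp[-(t/η)^β] and hazard h(t) = (β/η)(t/η)^(β-1); the function names and the numerical values are hypothetical.

```python
import math


def exponential_reliability(t: float, lam: float) -> float:
    """Survival function R(t) = exp(-λt) for a constant hazard λ."""
    return math.exp(-lam * t)


def weibull_reliability(t: float, beta: float, eta: float) -> float:
    """Two-parameter Weibull survival function (γ = 0 assumed)."""
    return math.exp(-((t / eta) ** beta))


def weibull_hazard(t: float, beta: float, eta: float) -> float:
    """Weibull hazard h(t) = (β/η)(t/η)^(β-1), defined here for t > 0."""
    if t <= 0:
        raise ValueError("t must be positive")
    return (beta / eta) * (t / eta) ** (beta - 1)


lam = 2e-6          # hypothetical constant failure rate, per hour
theta = 1.0 / lam   # MTBF for the exponential case

# Fraction failed by t = θ is 1 - exp(-1), about 63.2%.
print(f"failed by MTBF: {1 - exponential_reliability(theta, lam):.3f}")

# β = 1 with η = 1/λ reproduces the constant hazard λ.
print(f"hazard at beta=1: {weibull_hazard(1000.0, 1.0, theta):.2e}")

# β < 1 gives a falling hazard (early life), β > 1 a rising one (wear-out).
for beta in (0.5, 2.0):
    h_early = weibull_hazard(1e4, beta, theta)
    h_late = weibull_hazard(1e5, beta, theta)
    trend = "decreasing" if h_late < h_early else "increasing"
    print(f"beta={beta}: hazard is {trend}")
```

The three β regimes correspond to the three regions of the bathtub curve: early failures, the constant-rate useful life, and wear-out.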

(VII/1.3.2-4)

(VII/1.3.2-5)

(VII/1.3.2-6)

1.3.3. Reliability of Safety Instrumentation Systems

1.3.4. Risk Graph

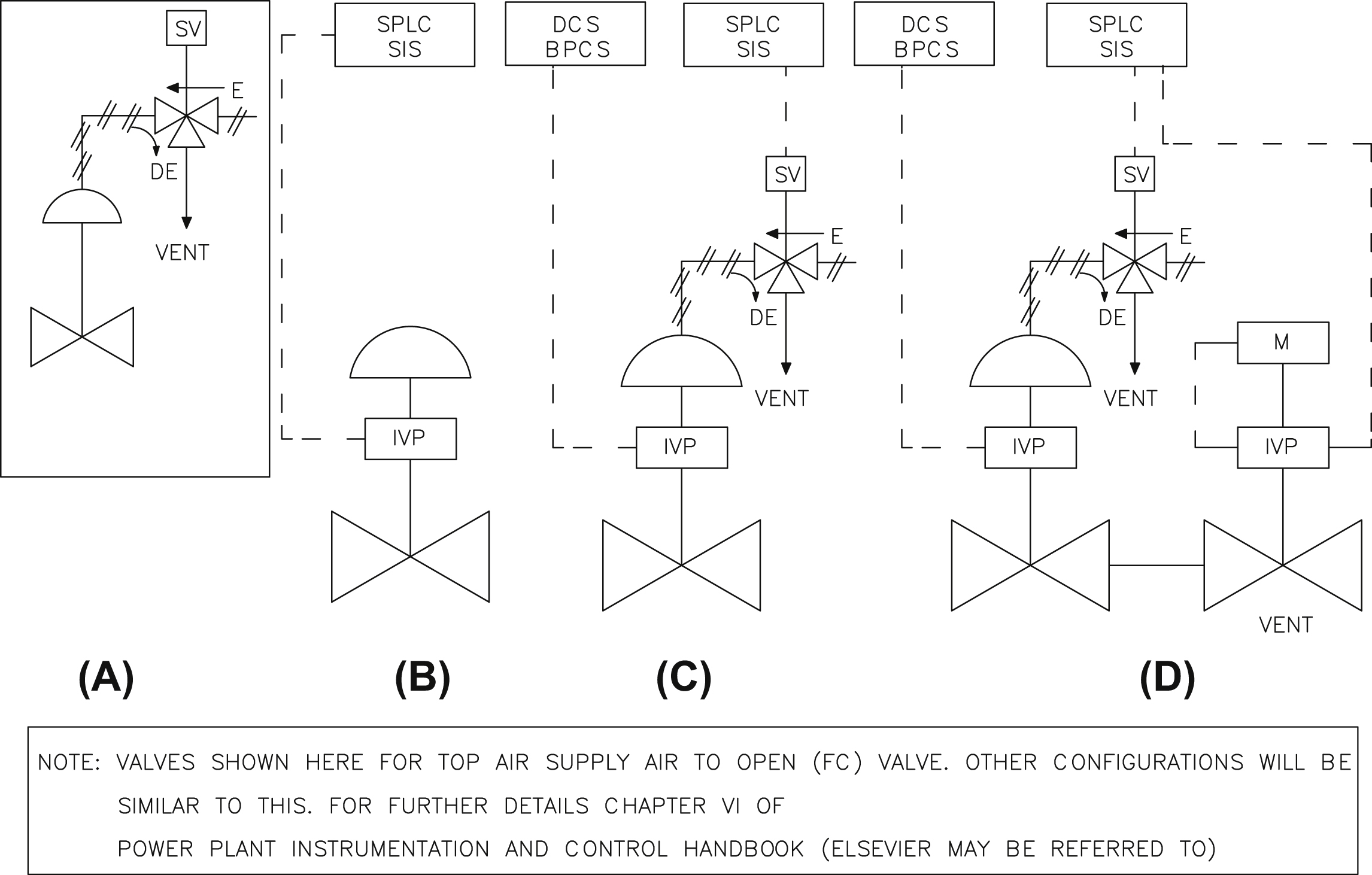

1.4. Component Functional Safety (Safety Instrumented System Components)

1.4.1. Sensor and Final Element (non PE) Electronic Device Details