Fault Tolerance, Protection Layer, and System Security

Abstract

A safety instrumentation system (SIS) demands a fault tolerant design to ensure high availability and system integrity. The discussion of fault tolerance covers various fault tolerant measures, including fault tolerant characteristics, redundancy, and hardware and software techniques. It starts with fault and failure types, along with related issues such as availability, maintainability, and the countermeasures suitable for each, and concludes with fault tolerant networks, including fault tolerant Ethernet. Independent protection layer (IPL) characteristics and their effect on the SIS are covered in detail. Firewalls and demilitarized zones play a very important role in combating cyber attacks, and both are discussed. With the current demand for commercial off-the-shelf (COTS) products and integrated networks, cyber security has become extremely important, and it needs separate treatment because the cyber security of industrial automation and control systems (IACSs) differs from information technology (IT) cyber security. Dedicated discussions focus on zones and conduits and on Open Platform Communications (OPC) firewalls, in line with the upcoming ISA/IEC 62443 series of international standards.

Keywords

1.0. Fault Tolerance

1.0.1. Definition of Terms

1.0.2. Dependability

(XI/1.0.2-1)

(XI/1.0.2-2)

1.1. General Discussions on Fault Tolerance

1.1.1. Characteristics

1.1.2. Fault and Failure Types

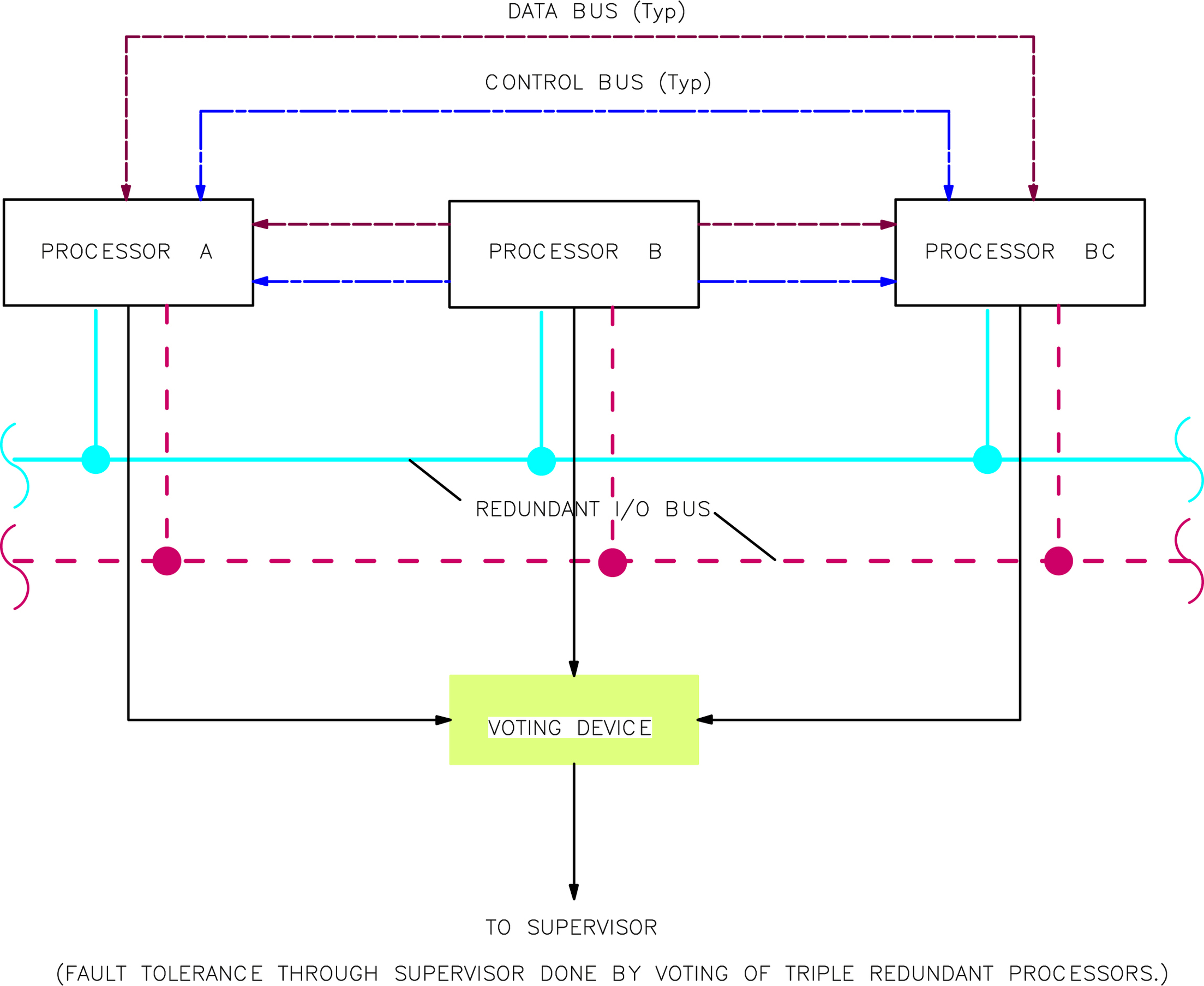

1.1.3. Redundancy

1.1.4. Availability

(XI/1.1.4-1)
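
For a single repairable unit, the textbook steady-state availability is A = MTBF/(MTBF + MTTR). Whether equation (XI/1.1.4-1) takes exactly this form cannot be confirmed from this text, so the sketch below should be read as that common relation, not a reconstruction. It also shows how 1oo2 redundancy raises availability: assuming independent failures and repairs (no common cause failure, CCF), the pair is unavailable only when both units are down at once. The MTBF and MTTR figures are hypothetical.

```python
def availability(mtbf_h: float, mttr_h: float) -> float:
    """Steady-state availability of one repairable unit: MTBF / (MTBF + MTTR)."""
    if mtbf_h <= 0 or mttr_h < 0:
        raise ValueError("MTBF must be positive and MTTR non-negative")
    return mtbf_h / (mtbf_h + mttr_h)


def parallel_availability(unit_availabilities: list[float]) -> float:
    """Availability of N parallel units where any single unit suffices (1ooN).

    Assumes independent failures and repairs, i.e. no common cause failure.
    """
    unavailability = 1.0
    for a in unit_availabilities:
        unavailability *= 1.0 - a  # all units must be down for the group to be down
    return 1.0 - unavailability


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    single = availability(mtbf_h=10_000.0, mttr_h=8.0)
    redundant = parallel_availability([single, single])
    print(f"single unit: {single:.6f}")
    print(f"1oo2 pair:   {redundant:.9f}")
```

In practice CCF limits the benefit, so the independent-failure result is an upper bound on what redundancy can deliver.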

1.1.5. Fault Tolerant Techniques (Computing System)

1.1.6. Validation of Fault Tolerance

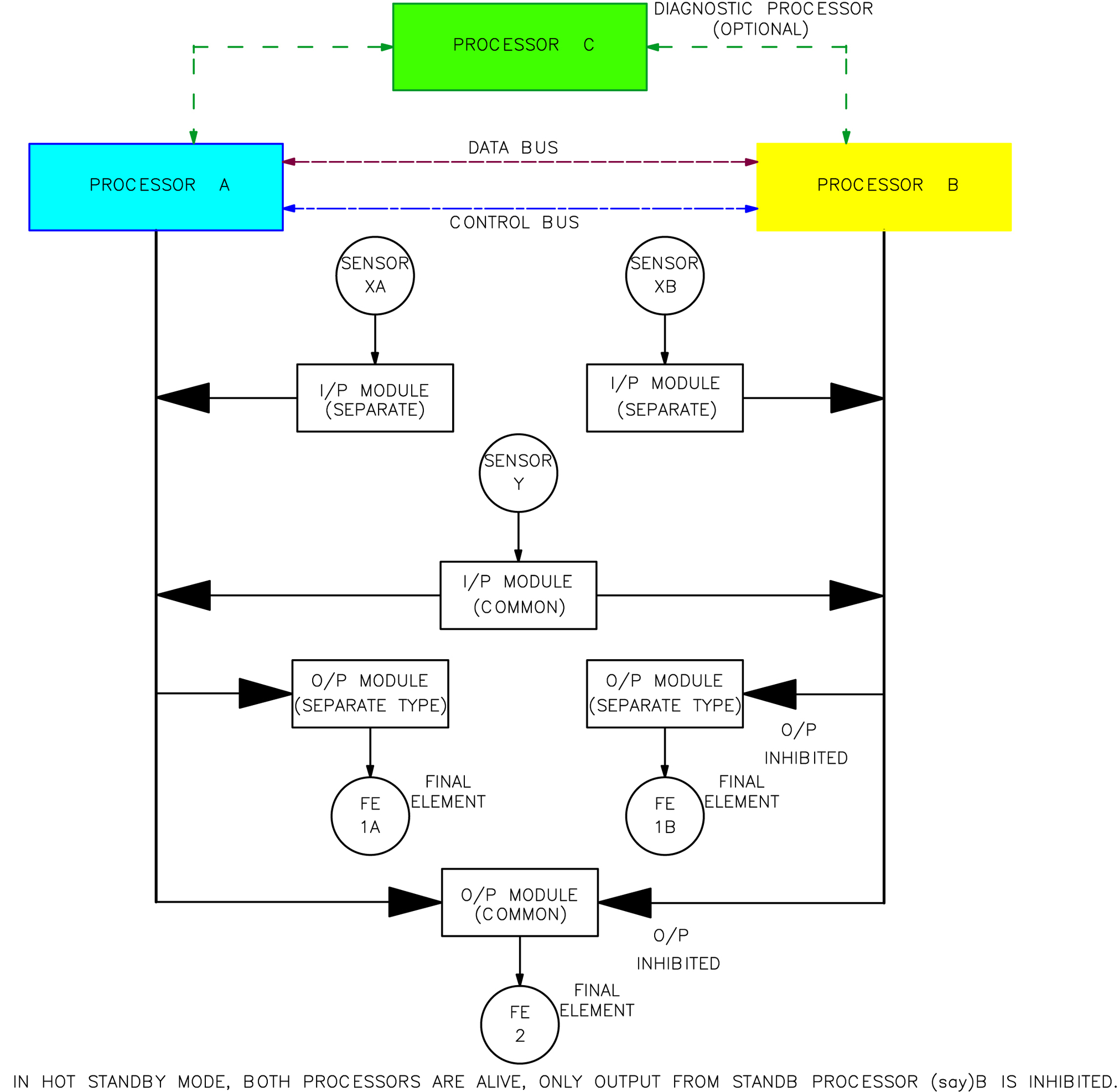

1.2. Fault Tolerance in Control Systems

1.2.1. Frequently Used Terms in Fault Tolerant Control

1.2.2. Ways and Means for Fault Tolerance

1.2.3. Practical Application

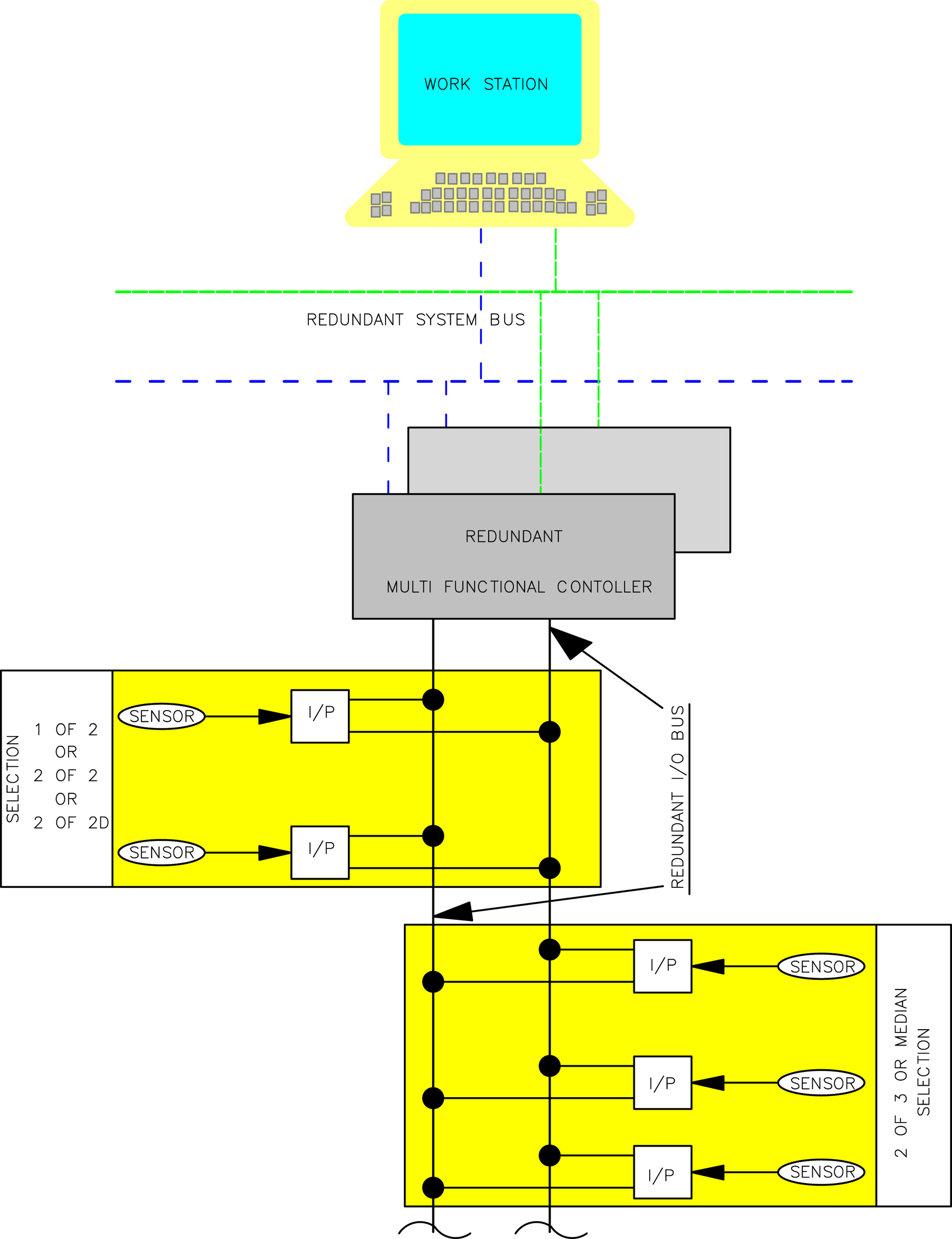

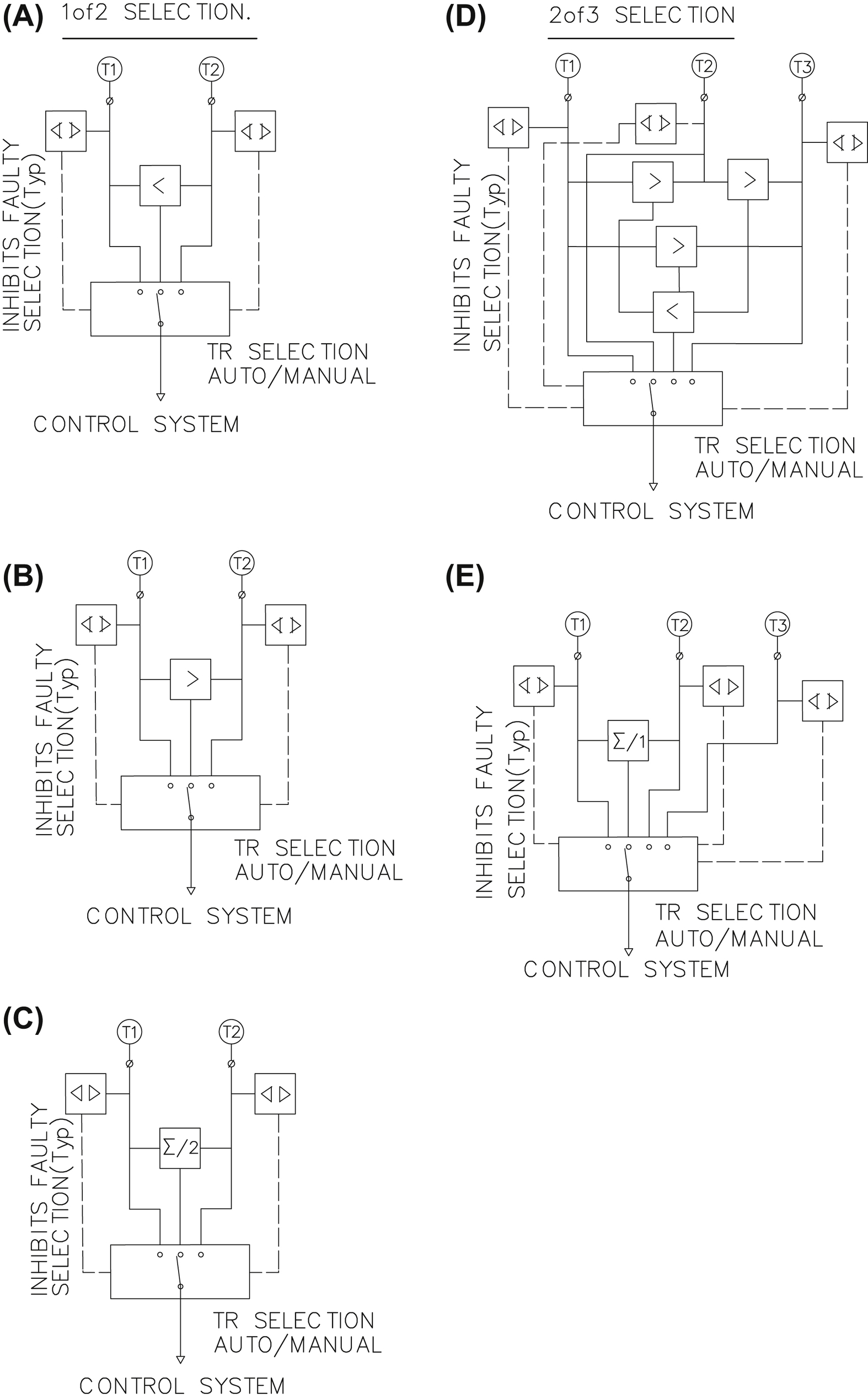

1.3. Redundancy and Voting in Field Instrumentation

1.3.1. Field Instrument Redundancy Selection Details

1.3.2. Input Redundancy Interface at Intelligent Control

1.3.3. Final Element Redundancy
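
Redundant field inputs are usually combined by M-out-of-N (MooN) voting, for example 2oo3 for triple modular redundancy (TMR). A minimal sketch, assuming that True means "trip demanded" for each channel and that analog inputs use mid-value (median) selection with a simple deviation check; the function names, the 110 bar setpoint, and the 5 bar deviation limit are hypothetical and not taken from any specific controller.

```python
def moon_vote(trip_demands: list[bool], m: int) -> bool:
    """MooN voting: trip when at least m of the N channels demand a trip."""
    n = len(trip_demands)
    if not 1 <= m <= n:
        raise ValueError(f"invalid {m}oo{n} configuration")
    return sum(trip_demands) >= m


def median_select(readings: tuple[float, float, float]) -> float:
    """Mid-value selection for three redundant analog transmitters."""
    return sorted(readings)[1]


def deviating_channels(readings: tuple[float, float, float], limit: float) -> list[int]:
    """Indices of channels that differ from the median by more than `limit`.

    Median selection is unaffected by a single failed channel; the deviating
    channel is flagged so that a diagnostic alarm can be raised.
    """
    mid = median_select(readings)
    return [i for i, r in enumerate(readings) if abs(r - mid) > limit]


if __name__ == "__main__":
    # Hypothetical pressure transmitters A/B/C (bar); channel C has drifted high.
    readings = (101.0, 100.5, 130.0)
    trip_setpoint = 110.0  # hypothetical high-pressure trip setpoint
    demands = [r > trip_setpoint for r in readings]
    print("2oo3 trip:", moon_vote(demands, m=2))  # one drifted channel cannot trip alone
    print("selected value:", median_select(readings))
    print("deviating channels:", deviating_channels(readings, limit=5.0))
```

The same `moon_vote` covers 1oo2 (m=1, safety-biased) and 2oo2 (m=2, availability-biased) arrangements by changing `m` and the number of channels.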

1.4. Fault Tolerant Network

1.4.1. Media Redundancy

1.4.2. Network Node Redundancy

1.4.3. Communication Diagnostics

1.4.4. Fault Tolerant Ethernet

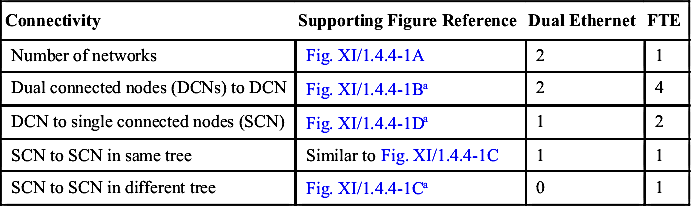

Table XI/1.4.4-1

Comparison Between Dual Ethernet and Fault Tolerant Ethernet (FTE)

| Connectivity | Supporting Figure Reference | Dual Ethernet | FTE |
|---|---|---|---|
| Number of networks | Fig. XI/1.4.4-1A | 2 | 1 |
| Communication paths: dual connected node (DCN) to DCN | Fig. XI/1.4.4-1Ba | 2 | 4 |
| Communication paths: DCN to single connected node (SCN) | Fig. XI/1.4.4-1Da | 1 | 2 |
| Communication paths: SCN to SCN in same tree | Similar to Fig. XI/1.4.4-1C | 1 | 1 |
| Communication paths: SCN to SCN in different trees | Fig. XI/1.4.4-1Ca | 0 | 1 |
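
The path counts in Table XI/1.4.4-1 follow from one rule: every combination of a source port and a destination port is a usable path if the two ports sit on connected networks. In dual Ethernet the two networks are isolated; in FTE the A and B trees are joined at the top, so any port can reach any other. The sketch below uses a hypothetical model in which a node is simply the set of trees it has a port on, and it reproduces the table values.

```python
def path_count(src_trees: set[str], dst_trees: set[str], trees_linked: bool) -> int:
    """Count (source port, destination port) pairs that can communicate.

    src_trees / dst_trees: trees ("A", "B") on which each node has a port.
    trees_linked: True for FTE (trees A and B joined at the top),
                  False for dual Ethernet (two independent networks).
    """
    count = 0
    for s in src_trees:
        for d in dst_trees:
            if s == d or trees_linked:
                count += 1
    return count


DCN = {"A", "B"}  # dual connected node: one port on each tree
SCN_A = {"A"}     # single connected node on tree A
SCN_B = {"B"}     # single connected node on tree B

CASES = {
    "DCN to DCN": (DCN, DCN),
    "DCN to SCN": (DCN, SCN_A),
    "SCN to SCN, same tree": (SCN_A, SCN_A),
    "SCN to SCN, different trees": (SCN_A, SCN_B),
}

if __name__ == "__main__":
    for name, (src, dst) in CASES.items():
        dual = path_count(src, dst, trees_linked=False)
        fte = path_count(src, dst, trees_linked=True)
        print(f"{name:28s} dual Ethernet: {dual}  FTE: {fte}")
```

Treating a path as a port pair is a simplification: it ignores redundant switches and links inside each tree, which add further resilience in a real installation.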

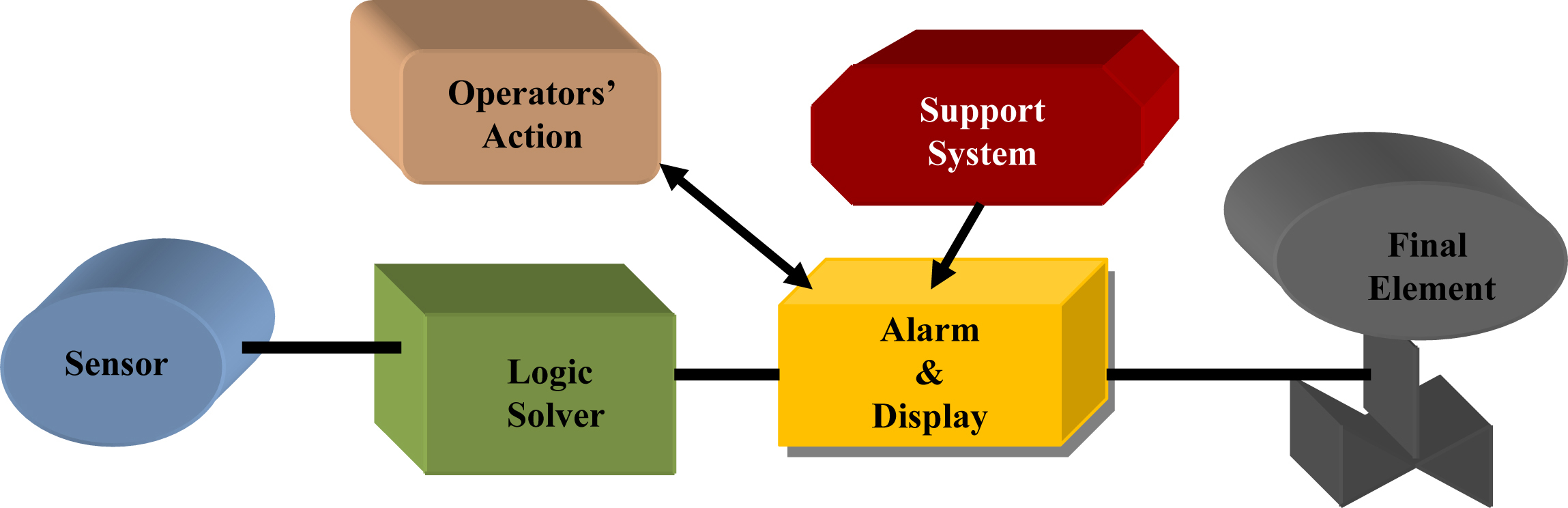

2.0. Protection Layers

2.1. IPL Characteristics

2.1.1. Specificity

2.1.2. Independence

2.1.3. Functionality

2.1.4. Integrity

2.1.5. Dependability (Reliability)

2.1.6. Auditability

2.1.7. Access Security

2.1.8. Management of Change

2.2. Impact and PFD Guidelines

2.2.1. Initiating Event Validation

2.2.2. Human Error

2.2.3. Human Failure During Fabrication

2.2.4. Advanced LOPA

2.3. Protection Layer Effectiveness

Table XI/2.3-1

Typical Protection Layer Probabilities of Failure on Demand (PFDs)

| Protection Layer | PFD |
|---|---|
| Control loop | 1 × 10⁻¹ |
| Human performance (trained, no stress) | 1 × 10⁻² to 1 × 10⁻⁴ |
| Human performance (under stress) | 0.5–1.0 |
| Operator response to alarm | 1 × 10⁻¹ |
| Vessel pressure rating above maximum challenge from internal and external pressure sources | 10⁻⁴ or better, when vessel integrity is maintained |

(XI/2.3-1)
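
In layer of protection analysis (LOPA), the frequency of a mitigated consequence is commonly estimated by multiplying the initiating event frequency by the PFD of each credited IPL. Whether equation (XI/2.3-1) has exactly this form cannot be confirmed from this text, so treat the sketch as the usual CCPS-style relation. The initiating event frequency and tolerable frequency below are hypothetical; the PFD credited for operator response to alarm is taken from Table XI/2.3-1.

```python
from math import prod


def mitigated_frequency(initiating_per_year: float, ipl_pfds: list[float]) -> float:
    """Initiating event frequency times the PFD of every credited IPL."""
    if initiating_per_year < 0:
        raise ValueError("initiating event frequency cannot be negative")
    for pfd in ipl_pfds:
        if not 0.0 < pfd <= 1.0:
            raise ValueError(f"PFD must be in (0, 1], got {pfd}")
    return initiating_per_year * prod(ipl_pfds)  # prod([]) == 1: no IPL, no reduction


def additional_rrf(mitigated_per_year: float, tolerable_per_year: float) -> float:
    """Extra risk reduction factor (RRF = 1/PFD) still needed to reach the target."""
    return max(1.0, mitigated_per_year / tolerable_per_year)


if __name__ == "__main__":
    initiating = 0.1  # hypothetical initiating event frequency, per year
    tolerable = 1e-4  # hypothetical tolerable frequency, per year
    # Credit only the operator response to alarm (PFD 1 x 10^-1, Table XI/2.3-1).
    f = mitigated_frequency(initiating, [0.1])
    rrf = additional_rrf(f, tolerable)
    print(f"mitigated frequency: {f:.1e} per year")
    print(f"additional RRF needed: {rrf:.0f} (PFD <= {1 / rrf:.0e})")
```

A layer such as human performance under stress (PFD 0.5–1.0) contributes almost nothing to the product, which is why it is rarely credited as an IPL.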

2.4. Operator Action: Protection Layer and Risk Reduction

2.4.1. Operator Action in Protection Layers

2.4.2. Operator Action in BPCS

2.4.3. Operator Action in SIS

3.0. BPCS and SIS Integration: Architectural Issues

3.1. Major Issues Behind Separate Systems

3.1.1. Impact

3.1.2. Flexibility

3.1.3. Facilitation

3.1.4. Analysis Time

3.1.5. Supports

3.2. BPCS and SIS Architectures

3.2.1. Integration Approach

3.2.2. Integration Guidelines

3.2.3. Salient Issues

4.0. Security Issues in SIS

4.1. Security Issues: General Discussions

4.1.1. Vulnerability Check

4.1.2. Probable Checklist to Prevent Cyber Attacks

4.1.3. Architectural Aspects

Table XI/4.1.3-1

Differences in Security Handling in Information Technology (IT) and Industrial Automation and Control Systems (IACSs) [19]

| Security Issue | IT | IACS |
|---|---|---|
| Antivirus and mobile code | Easy to implement and update | Difficult to implement because of the impact on the control system |
| Time criticality | Delay may be allowed | No delay allowed (real time) |
| Security awareness | Moderate | Poorly developed, except for physical measures |
| Patch management | Easily defined and automatic | Original equipment manufacturer-specific (takes a long time to manage) |
| Technology support | 2–3 years, multivendor | 10–20 years, single vendor |
| Test and audit | Easy, using modern methods | Modern methods not suitable |
| Change management | Regular scheduling | Strategic scheduling, considering the impact on the control system |
| Incident response | Easily developed and deployed | Uncommon beyond system resumption activities |
| Physical and environmental security | Poor to excellent | Excellent, but remote sites may be unmanned |
| Security system development | Integral part of system development | Not an integral part of development |
| Compliance | Limited regulatory | Specific regulatory |

4.1.4. Major Cyber Attacks

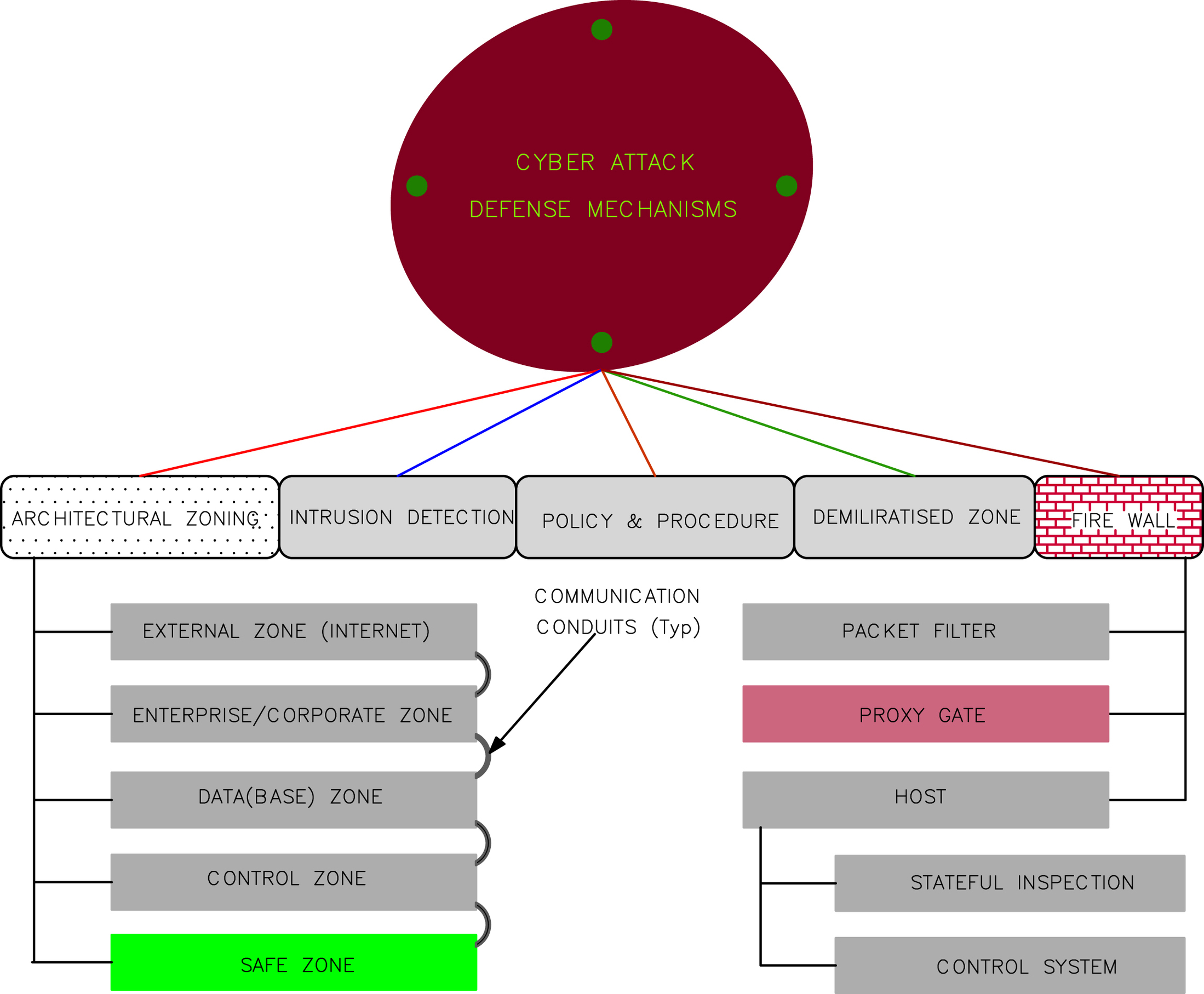

4.1.5. Cyber Attack Defense Mechanisms

4.1.6. Operational Issues

4.2. Firewall

4.2.1. Category and Classification of a Firewall

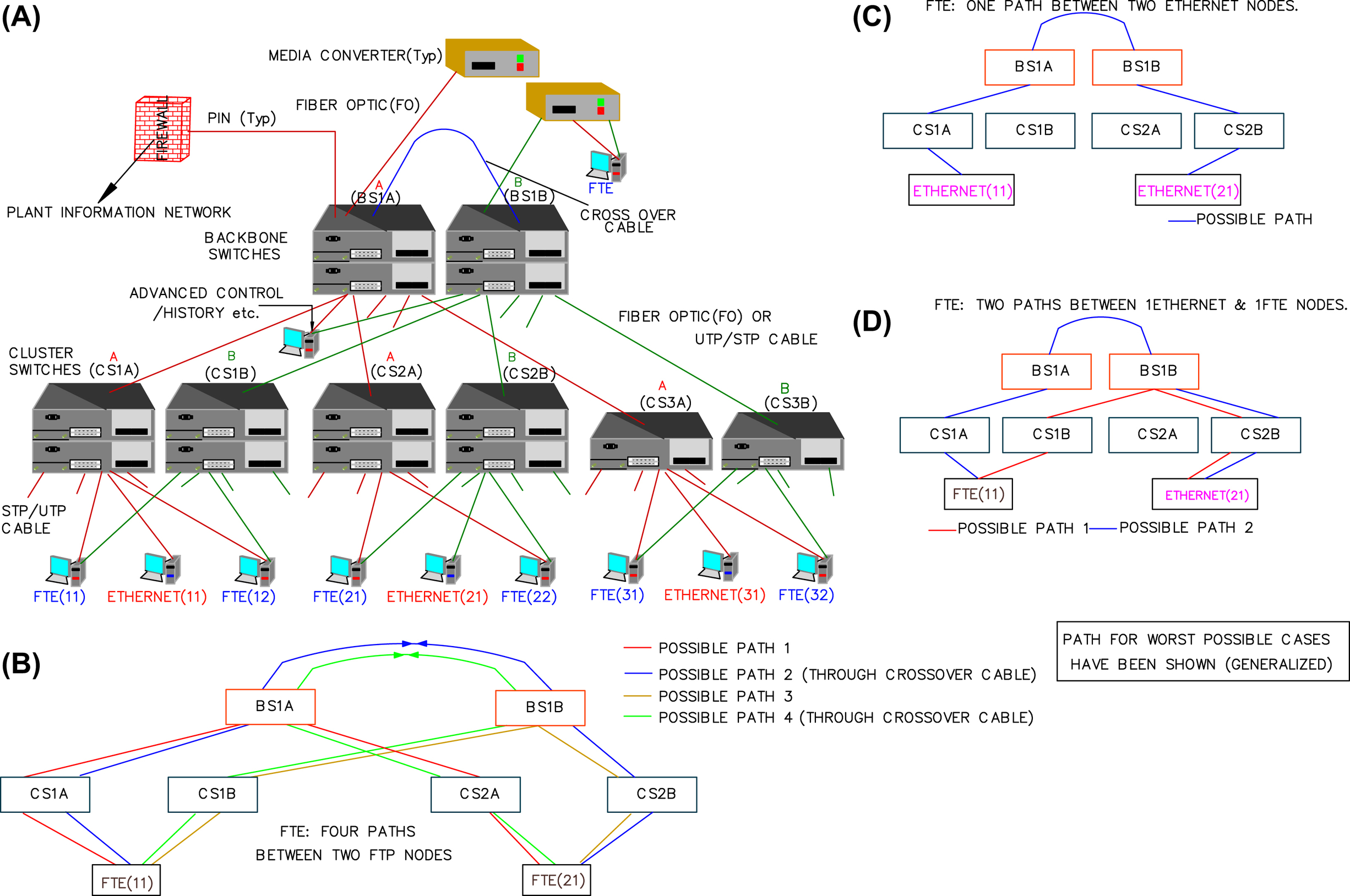

Table XI/4.2.1-1

Hardware Versus Software Firewall [2]

4.2.2. Short Discussions on Various Firewalls

Table XI/4.2.2-1

| Types | Details |
|---|---|
| Stateless packet | Also known as a static IP filtering firewall. It does not remember any information about previously passed packets, so it is not a smart filter and can be fooled easily. It is vulnerable to user datagram protocol (UDP) packets. It has very high throughput but is costly. It is included with router configuration software and with most open-source operating systems. Its security is weak. |
| IP packet | IP packet filtering firewall: every packet is handled individually. Previously forwarded packets belonging to a connection have no bearing on the filter's decision to forward or drop the packet. |
| Stateful packet | This is a pure packet filtering environment. It is known as a smart firewall or dynamic packet filtering firewall because it remembers information about previously passed packets. |
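
The practical difference between the stateless and stateful types in Table XI/4.2.2-1 is whether a decision can depend on earlier packets. The sketch below assumes a simplified packet (addresses, ports, protocol) and a hypothetical rule that lets an engineering workstation open TCP sessions to a controller on port 502 (the registered Modbus/TCP port, chosen only as an example). It shows a stateless first-match rule list with default deny, and a stateful variant that admits reply traffic only for connections it has seen being opened. TCP flags, timeouts, and connection teardown, which a real firewall tracks, are omitted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    proto: str  # "tcp" or "udp"


@dataclass(frozen=True)
class Rule:
    src_ip: str    # "*" matches any source address
    dst_ip: str    # "*" matches any destination address
    dst_port: int  # 0 matches any destination port
    proto: str
    allow: bool

    def matches(self, p: Packet) -> bool:
        return (self.src_ip in ("*", p.src_ip)
                and self.dst_ip in ("*", p.dst_ip)
                and self.dst_port in (0, p.dst_port)
                and self.proto == p.proto)


def stateless_decide(rules: list[Rule], p: Packet) -> bool:
    """Stateless filter: first matching rule wins; unmatched packets are dropped."""
    for rule in rules:
        if rule.matches(p):
            return rule.allow
    return False


class StatefulFilter:
    """Stateful filter: remembers connections opened through the rule set."""

    def __init__(self, rules: list[Rule]) -> None:
        self.rules = rules
        self.connections: set[tuple[str, int, str, int, str]] = set()

    def decide(self, p: Packet) -> bool:
        # A reply is admitted if its reversed 5-tuple matches a recorded connection.
        if (p.dst_ip, p.dst_port, p.src_ip, p.src_port, p.proto) in self.connections:
            return True
        if stateless_decide(self.rules, p):
            self.connections.add((p.src_ip, p.src_port, p.dst_ip, p.dst_port, p.proto))
            return True
        return False


if __name__ == "__main__":
    # Hypothetical addresses: workstation 10.0.1.5, controller 10.0.2.10.
    rules = [Rule("10.0.1.5", "10.0.2.10", 502, "tcp", allow=True)]
    request = Packet("10.0.1.5", "10.0.2.10", 49152, 502, "tcp")
    reply = Packet("10.0.2.10", "10.0.1.5", 502, 49152, "tcp")
    print("stateless:", stateless_decide(rules, request), stateless_decide(rules, reply))
    fw = StatefulFilter(rules)
    print("stateful: ", fw.decide(request), fw.decide(reply))
```

The stateless filter drops the reply unless a broad return rule is added, and such a rule would also pass spoofed packets; the stateful filter admits only replies that belong to a connection it recorded.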

4.2.3. Firewall Functionality

Table XI/4.2.3-1

| Type Name | Feature |
|---|---|
| Packet filter (see Fig. XI/4.2-1A and D) | • First line of defense (Fig. XI/4.2-1A). • Internet and other digital network data travel in packets of limited size. Each packet contains data, acknowledgment, request or command, protocol information, source and destination IP addresses, port, error-checking code, etc. • Filtering consists of comparing incoming and outgoing packets with a set of rules that allow or disallow transmission or acceptance. • Rather fast, because it checks nothing in the packet except the IP header. Works at the network layer of the Open Systems Interconnection (OSI) model (the internet layer in TCP/IP terms). • Fast but not foolproof: IP addresses can be spoofed. |
| Circuit relay/gateway (see Fig. XI/4.2-1B and D) | • One step above the packet filter; commonly known as “stateful packet inspection,” it checks the legitimacy of the connection between the two ends (in addition to packet filtering) based on the following: • Source and destination IP address/port number • Time of day • Protocol • Username and password • Operates at the transport layer. Stateful inspection makes the connection decision based on the data listed above. |
| Application gateway (application proxies) (see Fig. XI/4.2-1C and D) | • Acts as a proxy for the application at the application layer of the OSI model. See also Fig. XI/4.2.2-1. • Authorizes packets differently for each protocol. • Follows specific rules and may allow some commands to a server but not others, or may limit access to certain types based on the authenticated user. • Setup is quite complex: every client program needs to be set up, and each protocol must have its own proxy. • A true proxy is much safer. |

From S. Basu, A.K. Debnath, Power Plant Instrumentation and Control Handbook, Elsevier, November 2014; http://store.elsevier.com/Power-Plant-Instrumentation-and-Control-Handbook/Swapan-Basu/isbn-9780128011737/. Courtesy Elsevier.
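
An application gateway parses the application protocol itself, which is what lets it "allow some commands to a server but not others." As an illustration only, the sketch assumes Modbus/TCP as the protected protocol (it is not named in the source) and a hypothetical read-only policy: read function codes are forwarded, and write and all other codes are refused. It checks only the MBAP header and the function code; a real proxy would also validate register ranges, unit identifiers, and the authenticated user.

```python
import struct

# Modbus public function codes treated as read-only in this hypothetical policy.
READ_ONLY_CODES = {
    0x01: "Read Coils",
    0x02: "Read Discrete Inputs",
    0x03: "Read Holding Registers",
    0x04: "Read Input Registers",
}

MBAP_LEN = 7  # transaction id (2) + protocol id (2) + length (2) + unit id (1)


def inspect_request(frame: bytes) -> tuple[bool, str]:
    """Decide whether a Modbus/TCP request may be forwarded to the server."""
    if len(frame) < MBAP_LEN + 1:
        return False, "frame too short"
    _tid, proto_id, length, _unit = struct.unpack(">HHHB", frame[:MBAP_LEN])
    if proto_id != 0:
        return False, "not Modbus (protocol id must be 0)"
    # The length field counts the unit id plus the PDU, i.e. bytes after itself.
    if length != len(frame) - 6:
        return False, "length field mismatch"
    function_code = frame[MBAP_LEN]
    if function_code in READ_ONLY_CODES:
        return True, READ_ONLY_CODES[function_code]
    return False, f"function code 0x{function_code:02X} blocked by read-only policy"


if __name__ == "__main__":
    # Read 10 holding registers from address 0, then write 1234 to register 0.
    read = struct.pack(">HHHBBHH", 1, 0, 6, 1, 0x03, 0, 10)
    write = struct.pack(">HHHBBHH", 2, 0, 6, 1, 0x06, 0, 1234)
    print(inspect_request(read))
    print(inspect_request(write))
```

Because each protocol needs its own parser of this kind, the setup complexity noted for application gateways grows with every protocol admitted through them.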

4.2.4. General Discussions

4.3. Cyber Security Standards

4.3.1. Categories and Divisions in Standards

4.3.2. ISA/IEC 62443 Series Standards and Technical Reports

Table XI/4.3.2-1

62443 Series Standards (Standard Number IEC/ISA 62443-x-y)

| IEC | ISA | 62443-x-y | Title | Status |
|---|---|---|---|---|
| IEC/TS | ISA | 62443-1-1 | Terminology, concepts and models | P, UR |
| IEC/TR | ISA/TR | 62443-1-2 | Master glossary of terms and abbreviations | D |
| IEC | ISA | 62443-1-3 | System security compliance metrics | D |
| IEC/TR | ISA/TR | 62443-1-4 | IACS security life cycle and use case | PL |
| IEC | ISA | 62443-2-1 | IACS security management system requirements | P, UR |
| IEC | ISA | 62443-2-2 | IACS security management system implementation guidance | PL |
| IEC/TR | ISA/TR | 62443-2-3 | Patch management in the IACS environment | P |
| IEC | ISA | 62443-2-4 | Requirements for IACS solution suppliers | P |
| IEC/TR | ISA/TR | 62443-3-1 | Security technologies for IACS | P, UR |
| IEC | ISA | 62443-3-2 | Security assurance levels for zones and conduits | V |
| IEC | ISA | 62443-3-3 | System security requirements and security assurance levels | P |
| IEC | ISA | 62443-4-1 | Product development requirements | V |
| IEC | ISA | 62443-4-2 | Technical security requirements for IACS components | V |

4.3.3. Objective and a Few Definitions of Terms

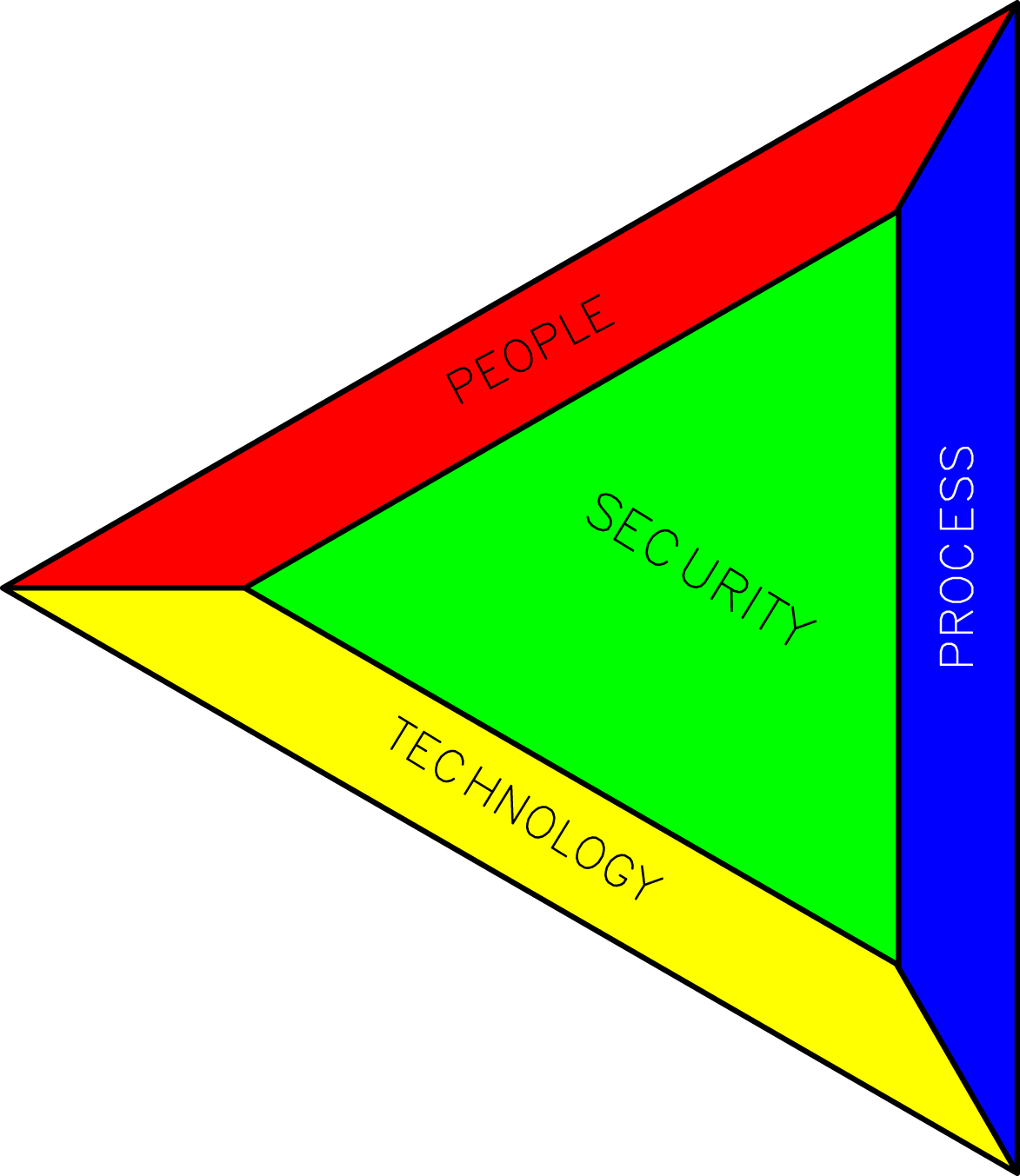

Table XI/4.3.3-1

Connection Between Various Elements (Based on ISA 62443-1-1)

| Element | Connections |
|---|---|
| People | Roles: asset owner/operator, system integrator, product supplier, service provider, compliance authority |
| People | Beyond the scope of the standard but indirectly connected to security: resourcing, relationships, intent, decision support, awareness |
| Process | Security policy, organization of security, asset management, human resource security, physical and environmental security, access control, communication/operation/business management, incident management, system acquisition, and maintenance management |
| Technology | Use control, system integrity, data confidentiality, restriction of data flow, timely response to events, resource availability |

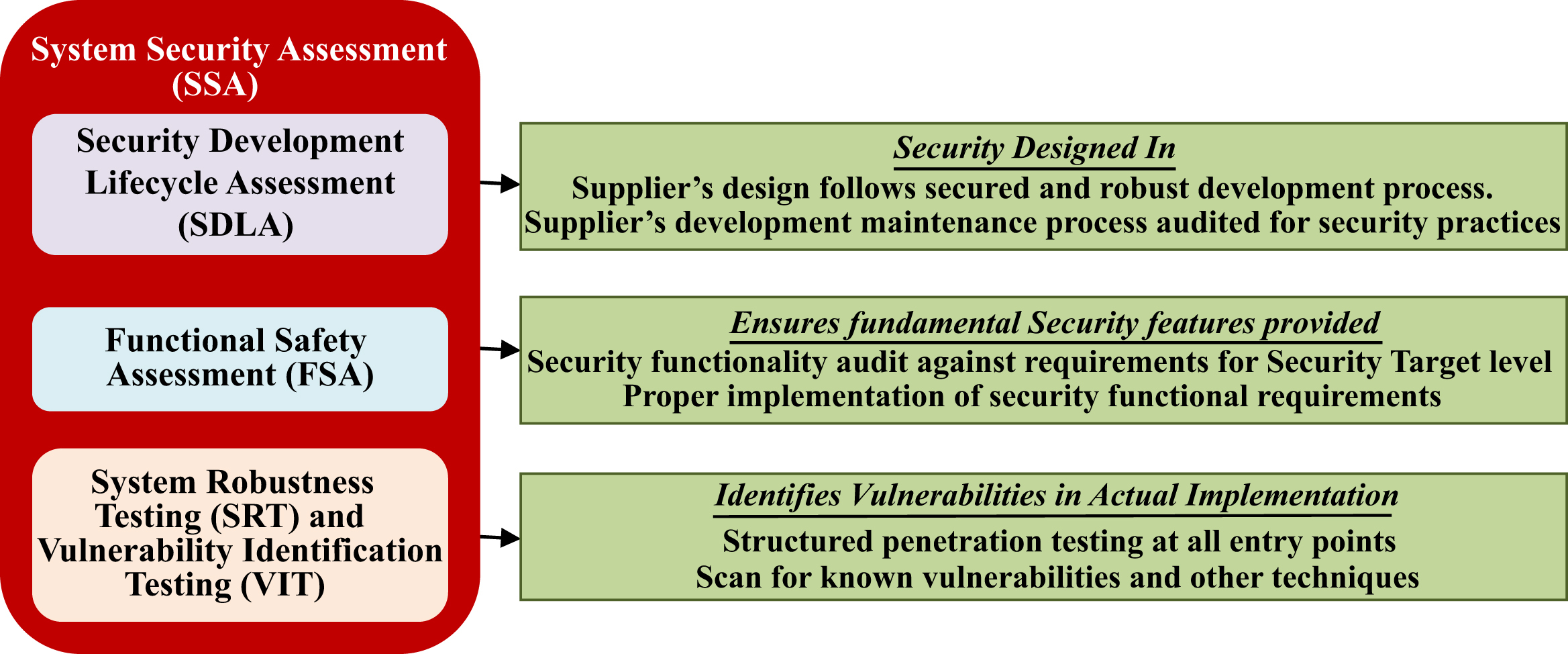

4.3.4. Conformity Assessment as Per IEC

4.3.5. Security Development Life Cycle Assessment

4.3.6. System Security Assessment

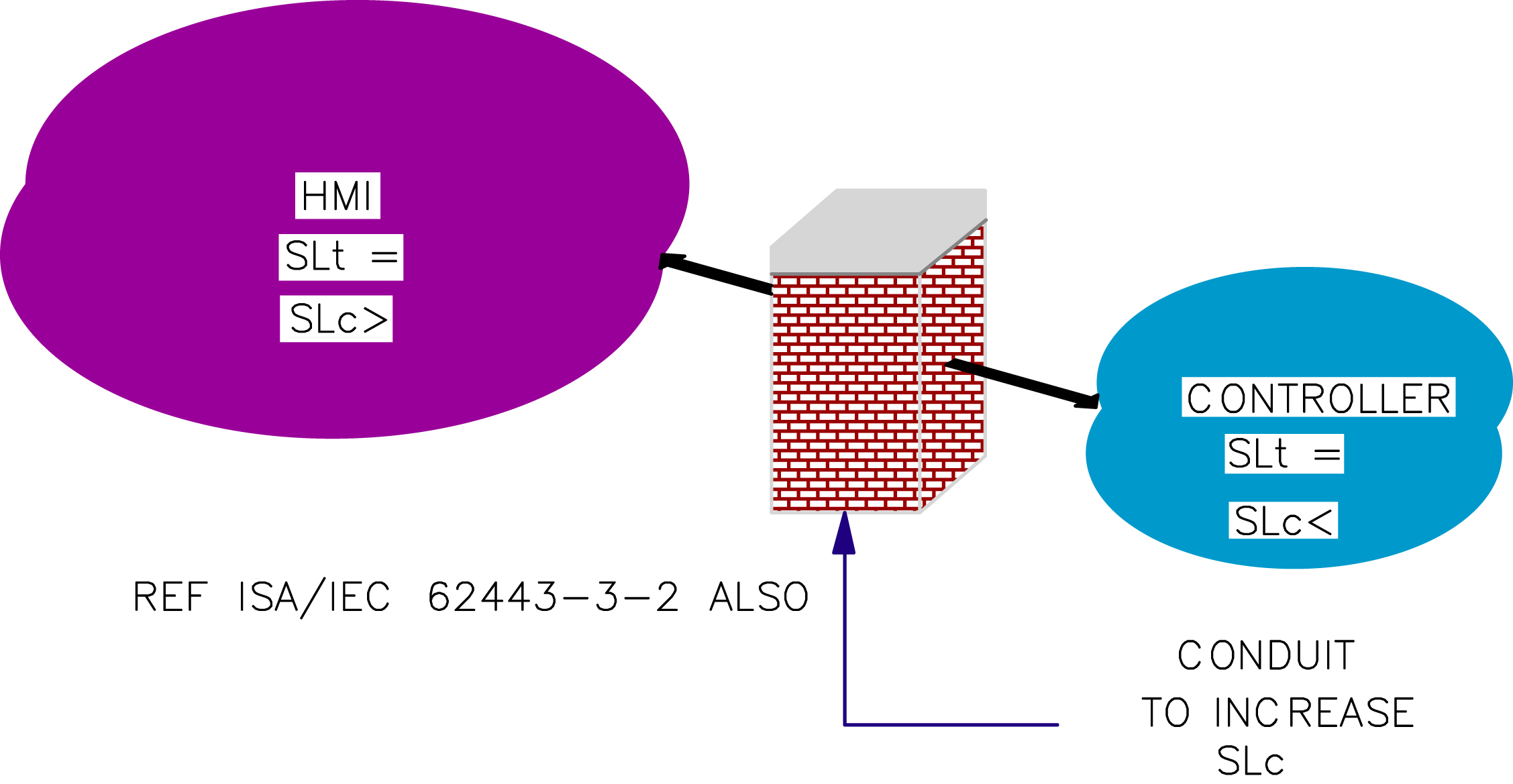

4.4. Zone and Conduit Concept

4.4.1. Discussions on Zone

Table XI/4.4.1-1

| Characteristic Issue | Related Issues |
|---|---|
| Security attributes | Zone: scope and risk; security: level, target, strategy, and policy; permitted activities and communication |
| Asset inventory | Hardware: external, computer, and development; access: authentication and authorization; spares, monitoring, and control; simulation, training, and reference manuals |
| Access | Access and control requirements of the zone |
| Threat and vulnerability | Identification and evaluation of vulnerabilities for risk assessment, with the necessary documentation; suggestion of suitable countermeasures for vulnerabilities in the zone |
| Consequence: Security breach | As part of the risk assessment, the consequences shall be analyzed to suggest necessary countermeasures |
| Authorized technology | IACS technologies are evolving to meet business requirements in better and more efficient ways, but they carry a number of vulnerabilities. Proper selection is therefore important to minimize security vulnerabilities while keeping the system efficient enough to meet the challenges |
| Change management | Formal process to maintain the accuracy of the zone definition and to change the security policy to meet the security goal without any compromise |

4.4.2. Conduit Details

Table XI/4.4.2-1

| Characteristic Issue | Related Issues |
|---|---|
| Security attributes | Conduit: scope and risk; security: level, target, strategy, and policy; permitted channels; documentation |
| Asset inventory | Similar to zone; accurate lists of communication channels |
| Access | Access limited to defined sets of entities; access and control requirements |
| Threat and vulnerability | Identification and evaluation of vulnerabilities for risk assessment of assets within conduits that fail to meet business requirements, with the necessary documentation; suggestion of suitable countermeasures for vulnerabilities in the conduit |
| Consequence: Security breach | As part of the risk assessment, the consequences shall be analyzed to suggest necessary countermeasures |
| Authorized technology | IACS technologies are evolving to meet business requirements in better and more efficient ways, but conduits carry a number of vulnerabilities. Proper selection is therefore important to minimize security vulnerabilities in conduits while keeping the system efficient enough to meet the challenges |
| Change management | Formal process to maintain the accuracy of the conduit's policy and to change the security policy to meet the security goal without any compromise |
| Connected zones | Description in terms of the zones to which the conduit is connected |

4.4.3. Security Level
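
ISA/IEC 62443-3-3 groups its system requirements under seven foundational requirements (FRs): identification and authentication control (IAC), use control (UC), system integrity (SI), data confidentiality (DC), restricted data flow (RDF), timely response to events (TRE), and resource availability (RA). A security level from 0 to 4 can be stated for each FR, giving an SL vector for a zone or conduit, and a common design check is that the achieved level (SL-A) meets the target level (SL-T) for every FR. The sketch performs that comparison; the example target and achieved vectors are hypothetical.

```python
FOUNDATIONAL_REQUIREMENTS = ("IAC", "UC", "SI", "DC", "RDF", "TRE", "RA")


def sl_gaps(target: dict[str, int], achieved: dict[str, int]) -> dict[str, int]:
    """Return {FR: shortfall} for every FR where SL-A is below SL-T.

    FRs missing from either vector are treated as level 0.
    """
    gaps: dict[str, int] = {}
    for fr in FOUNDATIONAL_REQUIREMENTS:
        t = target.get(fr, 0)
        a = achieved.get(fr, 0)
        for level in (t, a):
            if not 0 <= level <= 4:
                raise ValueError(f"{fr}: security level {level} outside 0..4")
        if a < t:
            gaps[fr] = t - a
    return gaps


if __name__ == "__main__":
    # Hypothetical SL-T and SL-A vectors for a control zone.
    sl_t = {"IAC": 2, "UC": 2, "SI": 2, "DC": 1, "RDF": 3, "TRE": 1, "RA": 2}
    sl_a = {"IAC": 2, "UC": 1, "SI": 2, "DC": 1, "RDF": 2, "TRE": 1, "RA": 2}
    print("gaps to close with countermeasures:", sl_gaps(sl_t, sl_a))
```

Each reported gap points to a foundational requirement where additional countermeasures, either in the zone itself or in the conduits serving it, are needed before the target is met.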

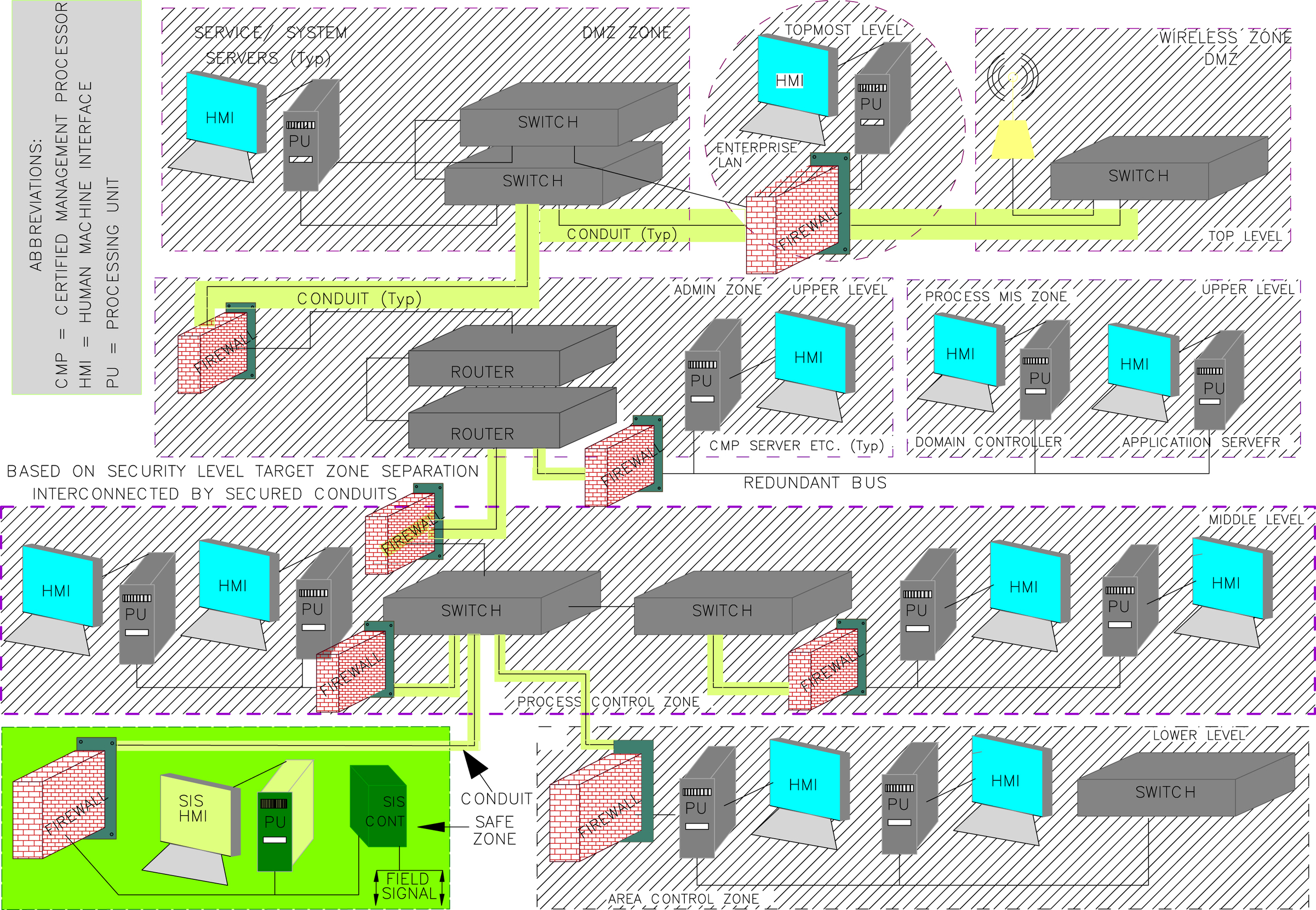

4.4.4. Integrated Network With Zones and Conduits
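
In an integrated network, communication between zones is expected to pass only through a defined conduit, and each conduit lists the channels it permits. A minimal sketch of that design check follows, with hypothetical zone names, conduits, and channels given as (protocol, port) pairs; port 4840, the IANA port for OPC UA over TCP, is used only as an example. It models design intent and says nothing about how a firewall enforces it.

```python
from dataclasses import dataclass, field


@dataclass
class Conduit:
    """A conduit joining two zones and the channels it is allowed to carry."""
    name: str
    zones: frozenset[str]
    channels: set[tuple[str, int]] = field(default_factory=set)  # (protocol, port)


def flow_permitted(conduits: list[Conduit], src_zone: str, dst_zone: str,
                   channel: tuple[str, int]) -> bool:
    """True if some conduit joins the two zones and lists the channel.

    Assumptions: conduits are treated as bidirectional, and traffic inside a
    single zone is left to that zone's own policy, so it is not checked here.
    """
    if src_zone == dst_zone:
        return True
    pair = frozenset({src_zone, dst_zone})
    return any(c.zones == pair and channel in c.channels for c in conduits)


if __name__ == "__main__":
    # Hypothetical architecture: a DMZ sits between the enterprise and control zones.
    conduits = [
        Conduit("C1", frozenset({"Enterprise", "DMZ"}), {("tcp", 443)}),
        Conduit("C2", frozenset({"DMZ", "Control"}), {("tcp", 4840)}),
    ]
    print(flow_permitted(conduits, "DMZ", "Control", ("tcp", 4840)))
    print(flow_permitted(conduits, "Enterprise", "Control", ("tcp", 443)))
```

The second check fails because no conduit joins the enterprise and control zones directly, which reflects the DMZ's role of breaking direct paths between them.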

4.5. Discussions on Security

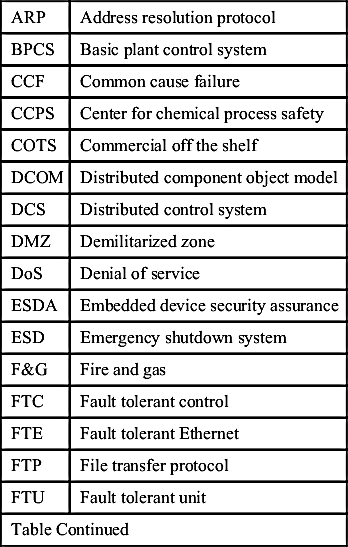

List of Abbreviations

| Abbreviation | Definition |
|---|---|
| ARP | Address resolution protocol |
| BPCS | Basic plant control system |
| CCF | Common cause failure |
| CCPS | Center for Chemical Process Safety |
| COTS | Commercial off-the-shelf |
| DCOM | Distributed component object model |
| DCS | Distributed control system |
| DMZ | Demilitarized zone |
| DoS | Denial of service |
| ESD | Emergency shutdown system |
| ESDA | Embedded device security assurance |
| F&G | Fire and gas |
| FTC | Fault tolerant control |
| FTE | Fault tolerant Ethernet |
| FTP | File transfer protocol |
| FTU | Fault tolerant unit |
| HART | Highway addressable remote transducer |
| HMI | Human–machine interface |
| IACS | Industrial automation and control systems |
| IEC | International Electrotechnical Commission |
| I/O | Input/output |
| IP | Internet protocol |
| IPL | Independent protection layer |
| IT | Information technology |
| LOPA | Layer of protection analysis |
| MDT | Mean downtime |
| MIS | Management information system |
| MTBF | Mean time between failures |
| MTTR | Mean time to repair |
| NMR | N-modular redundancy |
| OPC | Open Platform Communications |
| OSI | Open Systems Interconnection |
| PFD | Probability of failure on demand |
| PLC | Programmable logic controller |
| RSTP | Rapid spanning tree protocol |
| SDLA | Security development life cycle assessment |
| SIF | Safety instrumented function |
| SIL | Safety integrity level |
| SIS | Safety instrumentation system/supervisory information system (in case of DCS) [16] |
| SQL | Structured query language |
| STP | Spanning tree protocol |
| TCM | Tricon communication module |
| TCP | Transmission control protocol |
| TMR | Triple modular redundancy |
| VPN | Virtual private network |