Do you remember the days of physical servers? Generally, the rule of thumb was to have one major role or application per server. That was mainly because many applications and services just did not play well together. They had to be separated, so that they couldn’t fight among themselves and cause problems for users on the network. The unfortunate side effect of this practice was that all of these physical servers were, on average, utilizing 20% of their resources, meaning 80% of those resources were being wasted. We don’t pour a glass of water only to drink 20% of it and waste the rest, do we? We don’t live in only 20% of our homes, do we? Clearly, resource utilization in IT needed a makeover. This is where virtualization technology comes in.

Virtualization has solved our resource utilization problems, by enabling us to make more effective use of the hardware available. This means that we can now host more workloads using less space inside of our datacenters. We can dynamically move workloads from one piece of physical hardware to another, without any interruption to the running workload. We can even provide high-availability functionality to workloads using these virtualization technologies.

It’s not difficult to see how virtualization is changing the IT management landscape. System administrators should now be looking to add this knowledge to their toolbox, to help them manage ever-increasing IT complexity, as virtualization continues to be a staple of IT departments everywhere.

While there are many different virtualization technologies on the market today, this book focuses on Microsoft’s Hyper-V, primarily in Windows Server 2019 and Windows Server 2016, with some mention of Windows Server 2012 R2.

We’ll start this chapter by considering the fundamental nature of Hyper-V. We’re going to be discussing Hyper-V, and virtualization in general, from the standpoint of the fictional company XYZ Corp. This will allow us to take a difficult conceptual topic—Hyper-V—and apply it to solve everyday business issues. This will make it easier to see where this technology could fit in your organization. Once we understand the “why,” we’ll explain how this technology works from the ground up. We’ll continue to build on this foundation throughout the book.

Let’s dig in.

What Is Hyper-V?

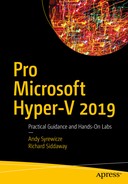

Figure 1-1. Logical layout of a hypervisor

In Figure 1-1, we have a physical server that contains 8 physical CPUs and 16GB of memory. The hypervisor layer gets installed directly on top of the physical hardware and acts as a broker for the resources contained in the host machine. Figure 1-1 shows that two “virtualized” servers have been created, and each is being given a portion of the host’s physical resources. Each of these virtual machines (VMs) contains a fully functional OS and acts just like a physical server on your network. The VMs are also isolated and separated from one another, just like physical servers. This is the most basic function of a hypervisor such as Hyper-V.

The hypervisor is truly the core of any virtualization platform. Hyper-V itself performs the core functions of Microsoft’s virtualization platform, but other technologies, such as System Center Virtual Machine Manager and Failover Clustering, help create a more holistic virtualization solution. We cover these technologies later in the book, after you’ve learned about the core functions of Hyper-V itself.

Sometimes, it’s not enough just to know what a technology does. We also need to understand how it benefits us and how it can help us solve our business problems. With that in mind as you read the text, picture the fictional company XYZ Corp., which is a company with aging IT infrastructure that is badly in need of updating. As you work through the first couple of chapters, imagine this fictional company as an example of how this technology benefits you and your organization.

The Business Benefits of Hyper-V

Hypervisors have been around for some time. Widespread adoption started more than ten years ago, when IT professionals saw the inherent benefits of virtualized workloads. Some variant of VMware’s ESXi product has been around for the last ten years or so, and some mainframe systems have been utilizing this type of technology since the 1970s!

Let’s look at this from a business perspective, using the example of the fictional company XYZ Corp., which is approaching a hardware refresh cycle. This approach will enable you to see why the industry has transitioned to virtualized workloads.

Setting the Stage

XYZ Corp. is a company that is getting ready to make some expenditures on technology as part of a three-year refresh cycle. Its existing infrastructure has been in place for some time, and no virtualization technologies are in use today. Cost is a major concern, as well as stability. The IT department has been given a limited budget for upgrades, but several of the physical machines are out of support and warranty, and a significant investment would be needed to replace each existing physical machine with another, more current model.

The Physical Computing Model

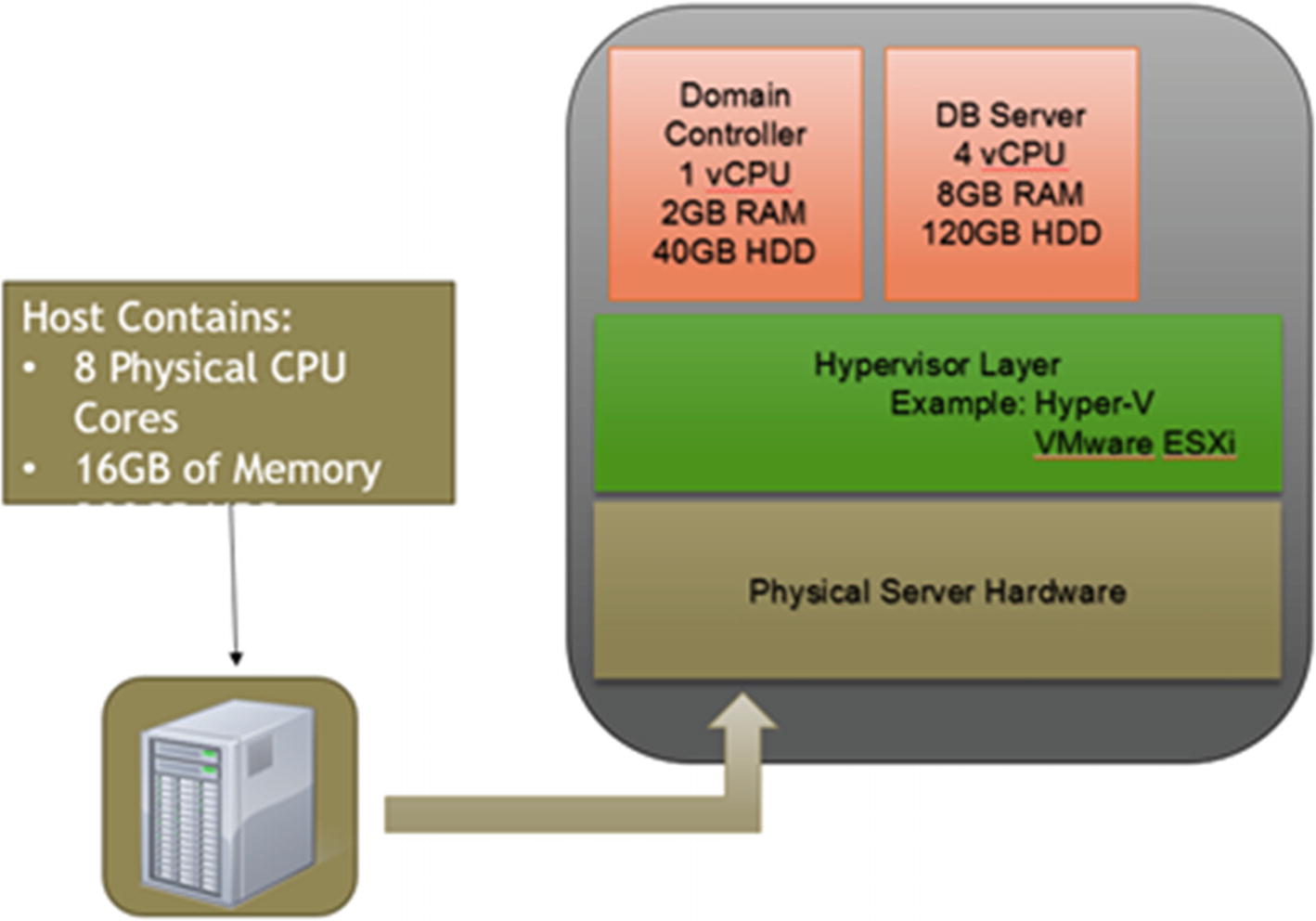

Figure 1-2. A list of physical servers owned and operated by XYZ Corp.

Figure 1-2 shows that XYZ Corp. has five physical servers and that each server generally follows the rule of one role/feature per physical box. Sadly, this method was not conducive to efficient use of computing resources. Historically, most systems would go through life running at only 20% utilization (on average), leaving the remaining 80% of the system with nothing to do.

One role per server was never an official standard; it was an unspoken rule that most system admins adopted. In reality, there are so many bits of software on any given system that, once in a while, issues are bound to arise wherein some of that code doesn’t play nicely with other code on the system. This can cause system outages or degrade performance. Downtime equals money lost to the business. The unspoken rule of one role per machine helped prevent this from occurring.

SERVER CONSOLIDATION

Before the widespread introduction of virtualization, many organizations ran projects to reduce the number of servers in their environment. This was essentially a cost-cutting exercise to free up hardware for new applications.

Consolidation projects would attempt to match workloads, to enable multiple applications to run on the same server, with varying levels of success.

If your management suggests that a server consolidation project would be a good thing to attempt, you should immediately start talking up the benefits of virtualization. Virtualization gives you the benefits of server consolidation but retains the application isolation inherent in the one-application-per-server concept.

Don’t consolidate—virtualize.

The increase in cost to buy additional physical servers to fill all the roles needed on the network was an acceptable cost, because at the time, there wasn’t a mainstream technology that would allow system admins to make better use of the available hardware. Nor was there any software available that would ensure the separation of workloads to prevent those situations in which two pieces of code are in conflict and create issues. Virtualization technology has now solved this computing dilemma, in the form of the hypervisor.

Making the Change to Virtualized Computing

Virtualization technology enables XYZ Corp. to purchase one or two new, powerful physical servers and place the workloads shown in Figure 1-2 into isolated “virtual” instances on top of a hypervisor running on the new equipment. More powerful servers will be more expensive than a similar though less powerful system, but reducing the number of physical servers and maintaining warranty and support on them usually allows you to have a much lower overall cost.

Table 1-1. Total Physical Computing Resources Configured for XYZ Corp.

| Workload | Number of Physical CPU Cores | Amount of Physical Memory in GB | Amount of Storage in GB |
|---|---|---|---|
| Domain Controller | 2 | 2 | 80 |
| Domain Controller | 2 | 2 | 80 |
| Database Server | 4 | 8 | 300 |
| Mail Server | 4 | 8 | 300 |
| File/Print Server | 2 | 4 | 500 |
| Total | 14 | 24 | 1260 |

Table 1-1 shows that the company needs significant resources. These are currently physical resources in their existing infrastructure that are underutilized. XYZ Corp. will have to do some performance checking on each of the systems, to obtain a more precise measure as to how much the systems are underutilized. Once they have the actual demand and some hard data, they can start sizing the new physical servers on which they’ll be placing their hypervisors.

Note

You can use such tools as Perfmon, Task Manager, and others to discover and capture this type of information.
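Once utilization samples have been captured, summarizing them is simple arithmetic. The following is a minimal sketch that assumes the samples have already been exported as a list of percentages. The function name `summarize_utilization` and the sample values are hypothetical and are not measurements from XYZ Corp.

```python
from statistics import mean


def summarize_utilization(samples):
    """Return (average, peak) for a list of utilization percentages."""
    if not samples:
        raise ValueError("at least one sample is required")
    return mean(samples), max(samples)


# Hypothetical CPU samples (percent busy) captured at regular intervals.
cpu_samples = [12.0, 18.0, 25.0, 15.0, 30.0]
average, peak = summarize_utilization(cpu_samples)
print(f"average {average:.1f}%, peak {peak:.1f}%")
```

Looking at the peak as well as the average is usually the safer choice when sizing, because a workload sized only for its average may struggle during busy periods.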

Table 1-2. Projected Resource Demand per Virtualized Workload for XYZ Corp.

| Workload | Number of Physical CPU Cores | Amount of Physical Memory in GB | Amount of Storage in GB |
|---|---|---|---|
| Domain Controller | 1 | 2 | 50 |
| Domain Controller | 1 | 1 | 50 |
| Database Server | 2 | 6 | 200 |
| Mail Server | 2 | 6 | 150 |
| File/Print Server | 1 | 2 | 250 |
| Total | 7 | 17 | 700 |

Comparing Table 1-2 to Table 1-1 shows that virtualizing the workloads reduces the requirement by 7 CPU cores and 7GB of RAM and potentially releases 560GB of storage space.
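The totals and savings can be checked with a few lines of arithmetic. This sketch copies the figures from Table 1-1 and Table 1-2. The numbered domain controller labels and the helper name `totals` are introduced here only for illustration.

```python
# (CPU cores, memory in GB, storage in GB) per workload, from Table 1-1.
physical = {
    "Domain Controller 1": (2, 2, 80),
    "Domain Controller 2": (2, 2, 80),
    "Database Server": (4, 8, 300),
    "Mail Server": (4, 8, 300),
    "File/Print Server": (2, 4, 500),
}

# Projected demand once virtualized, from Table 1-2.
virtual = {
    "Domain Controller 1": (1, 2, 50),
    "Domain Controller 2": (1, 1, 50),
    "Database Server": (2, 6, 200),
    "Mail Server": (2, 6, 150),
    "File/Print Server": (1, 2, 250),
}


def totals(workloads):
    """Sum each resource column across all workloads."""
    return tuple(sum(column) for column in zip(*workloads.values()))


physical_total = totals(physical)
virtual_total = totals(virtual)
savings = tuple(p - v for p, v in zip(physical_total, virtual_total))

print("physical:", physical_total)
print("virtual: ", virtual_total)
print("savings: ", savings)
```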

MORE TO LEARN

You may find that a server appears to be using a very small portion of the resources allocated to it, and you'll want to downsize the workload accordingly, once virtualized. You still need to take into account the minimum software requirements for the OS and the software running inside the virtualized OS, to maintain a supported configuration with the associated vendor.

For example, you may have a Microsoft SQL Server system that has a memory demand of 256MB of memory (unlikely, but it could happen). While you could size the workload in that manner once it's virtualized, the minimum system requirements for Microsoft SQL Server state that 1024MB of memory is the minimum required. Therefore, when sizing the memory for that workload, you wouldn't drop below 1024MB of memory for that VM.
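The rule in this example amounts to taking the larger of the observed demand and the vendor's stated minimum. A minimal sketch, using the figures from the SQL Server example; the function name `size_memory_mb` is hypothetical.

```python
def size_memory_mb(observed_demand_mb, vendor_minimum_mb):
    """Never size a VM below the vendor's supported minimum."""
    return max(observed_demand_mb, vendor_minimum_mb)


# Observed demand of 256MB against a stated minimum of 1024MB.
sql_vm_memory = size_memory_mb(256, 1024)
print(f"Assign {sql_vm_memory}MB to the VM")
```

When the observed demand is higher than the minimum, the same function simply returns the observed demand.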

Without going too deeply into the sizing math at this point, XYZ Corp. can take the resource requirements listed in Table 1-2, add the resource overhead required to run Hyper-V itself, and determine how powerful a server (or servers) to procure. The new physical server(s) will be more powerful individually than the previous five physical servers and will perform the work that all five of them performed previously.

Additionally, it would be wise for XYZ Corp. to allocate an additional 20%–40% of resources to account for future growth. This is by no means an industry-defined standard. The needs of the organization will dictate this more than anything. Many organizations will add the maximum number of CPUs and maximize the RAM in a new virtualization host, as it’s cheaper to do that than to add components at a later date. This approach ensures that there is room for future growth in the virtualized environment.
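As a rough illustration of the headroom calculation, the sketch below applies 20% and 40% growth to the 17GB memory total from Table 1-2. The 2GB allowance for Hyper-V itself is purely an assumption made for this example, as is the helper name `with_headroom`. Substitute figures appropriate to your own environment.

```python
def with_headroom(demand, overhead, growth_percent):
    """Add hypervisor overhead, then growth headroom, rounding up."""
    base = demand + overhead
    # Integer ceiling division avoids floating-point rounding surprises.
    return (base * (100 + growth_percent) + 99) // 100


PROJECTED_MEMORY_GB = 17  # total from Table 1-2
ASSUMED_OVERHEAD_GB = 2   # hypothetical allowance, not from the text

memory_low = with_headroom(PROJECTED_MEMORY_GB, ASSUMED_OVERHEAD_GB, 20)
memory_high = with_headroom(PROJECTED_MEMORY_GB, ASSUMED_OVERHEAD_GB, 40)
print(f"Plan for roughly {memory_low} to {memory_high} GB of host memory")
```

The same helper can be applied to CPU cores or storage by passing in the relevant totals.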

The Virtualized Computing Model

Once the new hardware is purchased and arrives, Hyper-V will be installed and the workloads migrated in their existing state into a VM, or they may be migrated in a more traditional manner, if the OS and/or installed software is being upgraded as well. During this process, XYZ Corp. can allocate each of its core computing resources (CPU, memory, storage, and networking) to each virtualized workload, using the projected resource demand they determined earlier in Table 1-2.

Once migration has been completed, each workload will be running on one of the new servers, each in its own isolated virtualized environment. In this new configuration, every entity on the XYZ Corp. network that was able to talk to the (now virtualized) workloads can still do so. Nothing has changed from a communications perspective. These workloads are still reachable on the network, and the end points won’t even know that the workloads have been virtualized. Everything is business as usual for XYZ Corp.’s end users.

Figure 1-3. XYZ Corp.’s now virtualized computing workload. The virtualization host’s specifications are higher than the calculations from Table 1-2 would indicate, to allow for future growth of the environment.

A question that probably will arise during a virtualization project is whether all the company’s workloads are compatible with virtualization. While there may be workloads that shouldn’t be virtualized, such as some specialized manufacturing software, they are becoming fewer and farther between. Software vendors are noting the mass adoption of virtualization, and they are working to make sure that their products can be virtualized. If you are unsure, check with each individual software vendor on a case-by-case basis.

Now that you have a view of what a virtualized environment looks like, it’s time to review the Hyper-V architecture in more detail.

Hyper-V Architecture

Figure 1-4. High-level Hyper-V architecture

Hardware Layer

Hyper-V expects that the machine will have hardware-assisted virtualization, such as Intel VT-x or AMD-V.

SLAT (second-level address translation) is also a requirement.

These are computing technologies that are contained within the CPU on your Hyper-V host. Hardware-assisted virtualization and SLAT both provide enhanced computational capabilities when dealing with virtualized workloads. Most new hardware supports these requirements, but some options may still have to be enabled in the system’s BIOS. Check your system’s documentation for details.
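On a Windows machine, the `systeminfo` command typically reports a "Hyper-V Requirements" section that covers these hardware features. The sketch below parses text of that shape. The sample report is made up for illustration, the exact wording can vary by Windows version and language, and the function name `parse_hyperv_requirements` is hypothetical.

```python
REQUIREMENTS = (
    "VM Monitor Mode Extensions",
    "Virtualization Enabled In Firmware",
    "Second Level Address Translation",
    "Data Execution Prevention Available",
)


def parse_hyperv_requirements(report):
    """Map each requirement found in the report text to True or False."""
    results = {}
    for line in report.splitlines():
        for name in REQUIREMENTS:
            marker = name + ":"
            if marker in line:
                value = line.split(marker, 1)[1].strip()
                results[name] = value.lower() == "yes"
    return results


# Made-up sample resembling the relevant part of a systeminfo report.
sample_report = """\
Hyper-V Requirements:      VM Monitor Mode Extensions: Yes
                           Virtualization Enabled In Firmware: Yes
                           Second Level Address Translation: No
                           Data Execution Prevention Available: Yes
"""

results = parse_hyperv_requirements(sample_report)
ready = len(results) == len(REQUIREMENTS) and all(results.values())
for name, supported in results.items():
    print(f"{name}: {'OK' if supported else 'missing'}")
print("Ready for Hyper-V:", ready)
```

In this sample, the host would not be ready, because SLAT is reported as unavailable.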

Hypervisor Layer

The hypervisor controls access to CPU and memory and allocates them as resources to the various partitions (or VMs) running on top of it. When the Hyper-V role is installed, the OS of the physical server is virtualized during the process and becomes the parent partition shown in Figure 1-4.

VMBus

This is a virtual bus that enables communication between the host system and the VMs running on the host. If a virtual machine has the Hyper-V integration services installed, it will be able to utilize this bus for information exchanges with the host.

Parent Partition

This partition (or VM) is a “hidden” VM that acts as the management OS and controls the hypervisor. After you install the Hyper-V role on a Windows server, the hypervisor layer is placed between the hardware and the OS. The installed OS effectively becomes a VM running on Hyper-V, which is used to provide device drivers, power management services, storage, and networking functionality to the VMs running on the host.

Virtualization Service Provider (VSP)

The VSP exists only in the parent partition. It communicates over the VMBus to support the virtualized devices, with full driver capabilities, contained within the child VMs. In other words, it provides child VMs with optimized access to hardware resources.

Virtual Machine Worker Process (VMWP)

The VM worker process (one separate process for each running VM on the Hyper-V host) provides management functionality and access from the parent partition to each of the child partitions. These processes run in user mode.

Virtual Machine Management Service (VMMS)

The VMMS is responsible for managing the state of all virtual machines (or child partitions) running on the Hyper-V host. The VMMS runs in user mode, within the parent partition.

Child Partition

Child partitions are the VMs hosted on your Hyper-V installation that are given access to CPU and memory by the hypervisor. Access to storage and networking resources is granted through the parent partition’s VSP. There are two different types of child partitions: enlightened and unenlightened. An enlightened VM will have the integration tools installed, have full synthetic device support, and utilize the VMBus for enhanced support and features. An unenlightened VM will not have the integration tools and will have emulated virtual devices instead of synthetic. Emulated devices do not perform as well as synthetic devices. We’ll be talking about enlightened and unenlightened VMs later on in this book.

Virtualization Service Client (VSC)

The VSC in each child VM utilizes the hardware resources that are provided by the parent partition. Communication is via the VMBus.

Integration Components (ICs)

These components, also known as integration tools, allow the child partition to communicate with the hypervisor and provide a number of other functions and benefits. We’ll be discussing integration components in a few chapters.

Note

The use of the term partitions can be confusing at times. In the case of Hyper-V, the word partition can be used interchangeably with the term virtual machine, and you can expect to see this terminology throughout the text.
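The difference between enlightened and unenlightened child partitions described earlier can be summarized in a small model. This is only a conceptual sketch of the relationships in the text, not a representation of any Hyper-V API. The class name `ChildPartition` and the VM names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ChildPartition:
    name: str
    integration_components: bool  # True for an enlightened VM

    @property
    def device_model(self):
        """Enlightened VMs get synthetic devices; others get emulated ones."""
        return "synthetic" if self.integration_components else "emulated"

    @property
    def uses_vmbus(self):
        """Only VMs with the integration components use the VMBus."""
        return self.integration_components


modern_vm = ChildPartition("app01", integration_components=True)
legacy_vm = ChildPartition("legacy01", integration_components=False)

for vm in (modern_vm, legacy_vm):
    print(f"{vm.name}: {vm.device_model} devices, VMBus={vm.uses_vmbus}")
```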

As you can see, there are a lot of components in this technology. You may not grasp the Hyper-V architecture right away, but don’t fret! As you read the book, understanding the underlying architecture, and having an idea of how things are laid out, will help you comprehend why some functions and features in Hyper-V are set up and used the way they are.

We mentioned, at the top of the chapter, that we’re concentrating on Windows Server 2019 and Windows Server 2016 in this book. Microsoft has complicated the picture regarding Windows Server versions, as we’ll explain next.

Windows Server Versions

Windows Server 2008

Windows Server 2008 R2

Windows Server 2012

Windows Server 2012 R2

Windows Server 2016

Microsoft has a ten-year support policy (though the support period is often extended), so support for Windows Server 2008 and 2008 R2 ends at the start of 2020. Windows Server 2012 and 2012 R2 will no longer be supported after October 2023.

This model changed with Windows Server 2016. Microsoft has moved to a continuous support policy similar to that introduced for Windows 10. There are now two release channels for Windows Server customers.

The first channel is the Long-Term Servicing Channel (LTSC). This is effectively the historical model used by Microsoft, with new major versions of Windows Server released every two to four years. These releases have the same ten-year support life cycle that Microsoft has used for previous versions of Windows Server. The current LTSC version (as of this writing) is Windows Server 2016, with Windows Server 2019 expected toward the end of 2018. Releases from this channel are suited to scenarios requiring functional stability, for instance, Exchange servers or large database servers. LTSC releases can receive updates (security and other) but won’t get new features or functionality during their lifetime. LTSC releases are available as Server Core (though installation calls it Server, and it is the default option!) and Server with Desktop Experience (GUI).

The new channel is the Semi-Annual Channel (SAC). Releases in this channel occur every six months (March and September) and are designated as Windows Server, version YYMM, in which YY is the two-digit year and MM is the two-digit month. The first release in this channel was Windows Server, version 1709 (September 2017) followed by Windows Server, version 1803 (March 2018).
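The YYMM naming scheme is easy to decode. The following sketch turns a version string into a year and month. The function names `parse_sac_version` and `describe` are hypothetical.

```python
import calendar
import re


def parse_sac_version(version):
    """Split a YYMM version string into (four-digit year, month)."""
    if not re.fullmatch(r"[0-9]{4}", version):
        raise ValueError(f"expected four digits, got {version!r}")
    year = 2000 + int(version[:2])
    month = int(version[2:])
    if not 1 <= month <= 12:
        raise ValueError(f"invalid month in {version!r}")
    return year, month


def describe(version):
    """Return a readable month and year for a YYMM version string."""
    year, month = parse_sac_version(version)
    return f"{calendar.month_name[month]} {year}"


for version in ("1709", "1803"):
    print(f"Windows Server, version {version}: {describe(version)}")
```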

SAC releases have a supported lifetime of 18 months, as opposed to the 10 years for the LTSC releases. New functionality and features are introduced through SAC releases for customers who wish to innovate on a quicker cadence than the LTSC release. New features and functionality introduced through SAC releases should be rolled into the next LTSC release. SAC releases are currently only available as Server Core to Software Assurance and cloud customers.

The advantage of using SAC releases is that you get new functionality much quicker. The disadvantage is that your servers have a maximum life cycle of 18 months. SAC releases are currently recommended for containerized applications, container hosts, and applications requiring fast innovation.
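To see what an 18-month life cycle means in practice, the sketch below adds 18 months to a release month. This is only the arithmetic. The actual end-of-support dates are published by Microsoft and depend on the exact availability date of each release. The helper name `add_months` is hypothetical.

```python
SAC_SUPPORT_MONTHS = 18


def add_months(year, month, months):
    """Return the (year, month) that falls `months` after the given month."""
    index = year * 12 + (month - 1) + months
    return index // 12, index % 12 + 1


# Release months for versions 1709 and 1803, as given in the text.
releases = {"1709": (2017, 9), "1803": (2018, 3)}

support_ends = {
    version: add_months(year, month, SAC_SUPPORT_MONTHS)
    for version, (year, month) in releases.items()
}

for version, (year, month) in support_ends.items():
    print(f"version {version}: support window ends around {year}-{month:02d}")
```

A calculation like this can help when planning upgrade cycles for servers running SAC releases.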

We’ll give a brief summary of the changes in the Windows Server versions, concentrating on features that may affect your Hyper-V environment.

Nano Server is now only for container images and is 80% smaller.

Server Core container image is 60% smaller.

Linux containers are now supported.

VM start order can be controlled.

Virtualized persistent memory

Server Core base container image reduced by 30% over version 1709

Curl, tar, and SSH support

Windows Subsystem for Linux

Container networking enhancements and increased Kubernetes functionality

Shielded VMs can now be Windows or Linux installs

Network encryption

Extending Windows Defender into the OS kernel

Further shrinkage of the Server Core container image

Increased support for Kubernetes

Hyper-converged infrastructure

Windows Server, version 1809, will be the SAC release that corresponds to the feature set of Windows Server 2019.

Nano Server

Nano Server is a very small footprint installation option introduced with Windows Server 2016. It is a headless server that has to be managed remotely. In Windows Server 2016, Nano Server supports a limited number of OS features, including container host, Hyper-V, IIS, file server, and clustering.

In Windows Server, version 1709, Nano Server was reduced to a container image OS only. You can’t create a Nano Server VM or install it on a physical machine.

We recommend that you use Nano Server only as a container image, owing to this change.

Hyper-V Server

There is also the option of running Microsoft Hyper-V Server. This is a low-footprint version of the server OS that is optimized for use as a Hyper-V host. It contains the Windows hypervisor, the driver model, and virtualization components. You can’t install other server features, such as file server or IIS.

Hyper-V Server machines don’t provide a GUI and have to be managed remotely. They are an ideal virtualization platform, as minimum resources are consumed by the host, which maximizes what is available to the VM workloads.

Windows 10

Hyper-V has been available in the Windows client since Windows 8 and continues in Windows 10. Hyper-V on a Windows client machine has a subset of the features present in the server version. Using a client machine as a virtualization platform is good for development or demonstration purposes but can’t be used in production.

You’ve likely had enough of the theoretical discussions by now, so we’ve provided some lab questions as a refresher. In the next chapter, you’ll discover the Hyper-V management utilities that you’ll be working with, as you get deeper into the Microsoft virtualization stack.

Lab Work

1. What is a hypervisor?
2. What are the key benefits of the virtualized computing model?
3. What is the parent partition, and how is it created?
4. What are the key differences between a parent partition and a child partition?
5. What is the primary purpose of the VMBus?
6. What is the primary purpose of the integration components?