5.3 Frame-Compatible Stereo Coding

To deploy two-view stereo video over the existing content delivery infrastructure, which normally supports a single 2D video stream, the stereo video should be processed so that it is frame- and format-compatible during the transmission phase. There are two types of frame-compatible stereo coding, distinguished by picture resolution. They are discussed separately in the following two sections.

5.3.1 Half-Resolution Frame-Compatible Stereo Coding

To deploy stereo video through the current broadcasting infrastructure, which was originally designed for 2D video coding and transmission, almost all current 3D broadcasting solutions multiplex the stereo video into a single coded frame via spatial subsampling. That is, the original left and right views are subsampled to half resolution and then merged into a single video frame for compression and transmission over the infrastructure widely used for 2D video programs. At the decoder side, de-multiplexing and interpolation are conducted to reconstruct the two views [13]. As a result, the frame merged from the left and right views is frame-compatible with existing video codecs.

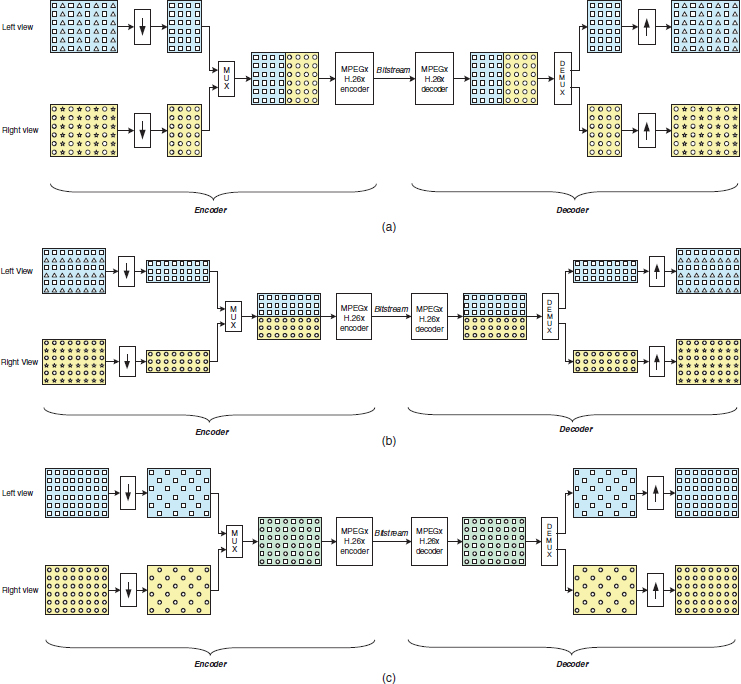

The half-resolution frame-compatible encoder consists of three components: (a) spatial resampling, (b) resampled pixel repacking, and (c) compression of packed frames. For the spatial resampling component, there are three common methods: (a) the side-by-side format, which downsamples the original full-resolution video frame in each view by a factor of two horizontally, (b) the over/under format, which downsamples the original full-resolution video frame in each view by a factor of two vertically, and (c) the checkerboard format, which downsamples the original full-resolution video frame in each view on a quincunx grid. It has been demonstrated that the side-by-side and over/under formats have very similar compression performance, and that the checkerboard format has the worst compression performance. The generic codec structure is depicted in Figure 5.9.

The downsampling process should be applied to both the luma and chroma components. The decimation can take pixels at the same positions in each view (e.g., odd columns from the left view and odd columns from the right view for the side-by-side format) or at different positions in each view (e.g., odd columns from the left view and even columns from the right view for the side-by-side format). The former is referred to as common decimation and the latter is often called complementary decimation. The downsampling may also involve filtering to reduce aliasing and improve quality.

After the downsampling, the downsampled pixels are packed (multiplexed) to fit into one full-resolution video frame. The packed frame can then be compressed by any video codec, such as MPEG-x or H.26x. At the decoder side, the corresponding video decoder first decodes the packed video frame. The de-multiplexing module then unpacks the pixels and puts them back at their original sampling coordinates in the associated views. Finally, an upsampling procedure interpolates the missing pixels so that each frame is restored to its original size.

Figure 5.9 (a) Side-by-side format. (b) Over/under format. (c) Checkerboard (quincunx) format.
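As a minimal sketch of the side-by-side case, the following Python code packs two views into one frame with either common or complementary decimation and then restores them at the decoder side. The function names are hypothetical, frames are assumed to be plain lists of rows with an even width, no anti-aliasing filter is applied before decimation, and simple neighbour averaging stands in for whatever interpolation filter a real system would use. The compression step between packing and unpacking is omitted.

```python
def decimate_columns(frame, offset):
    """Keep every second column of a frame, starting at column `offset` (0 or 1)."""
    return [row[offset::2] for row in frame]


def upsample_columns(half_frame, offset):
    """Put samples back at columns offset, offset + 2, ... and fill each
    missing column with the average of its available horizontal neighbours."""
    restored = []
    for row in half_frame:
        width = 2 * len(row)
        full = [None] * width
        full[offset::2] = row  # original sampling positions
        for x in range(width):
            if full[x] is None:
                neighbours = [full[n] for n in (x - 1, x + 1) if 0 <= n < width]
                full[x] = sum(neighbours) / len(neighbours)
        restored.append(full)
    return restored


def pack_side_by_side(left, right, complementary=False):
    """Merge two full-width views into one frame of the same width.
    Common decimation samples the same columns in both views;
    complementary decimation shifts the right view by one column."""
    right_offset = 1 if complementary else 0
    left_half = decimate_columns(left, 0)
    right_half = decimate_columns(right, right_offset)
    return [l + r for l, r in zip(left_half, right_half)]


def unpack_side_by_side(packed, complementary=False):
    """De-multiplex the packed frame and interpolate both views to full width."""
    half = len(packed[0]) // 2
    right_offset = 1 if complementary else 0
    left_half = [row[:half] for row in packed]
    right_half = [row[half:] for row in packed]
    return (upsample_columns(left_half, 0),
            upsample_columns(right_half, right_offset))


left = [[0, 1, 2, 3], [4, 5, 6, 7]]
right = [[10, 11, 12, 13], [14, 15, 16, 17]]
packed = pack_side_by_side(left, right, complementary=True)
left_rec, right_rec = unpack_side_by_side(packed, complementary=True)
```

The packed frame has the same dimensions as a single view, which is what allows it to pass through a 2D codec unchanged. The reconstructed views only approximate the originals, since half of the columns in each view are interpolated rather than transmitted.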

The temporal multiplexing approach, which interleaves the left and right views into one video sequence, can also be adopted in frame-compatible video coding. However, this approach doubles the frame refresh rate and thus the required bandwidth. Given the channel bandwidth available in the existing infrastructure, the temporal multiplexing scheme has to run at half the frame rate in each view, which often cannot provide satisfactory visual quality.
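The frame-count trade-off can be illustrated with a short Python sketch. The function names are hypothetical, frames are represented by labels only, and the half-rate variant simply keeps every second time instant in both views, which is one possible assumption about how the frame rate would be halved.

```python
def interleave_views(left_frames, right_frames):
    """Temporal multiplexing: emit L0, R0, L1, R1, ... as one sequence."""
    sequence = []
    for left, right in zip(left_frames, right_frames):
        sequence.extend([left, right])
    return sequence


def interleave_half_rate(left_frames, right_frames):
    """Keep the original number of frames per second by dropping
    every second time instant in each view before interleaving."""
    return interleave_views(left_frames[0::2], right_frames[0::2])


left_frames = ["L0", "L1", "L2", "L3"]
right_frames = ["R0", "R1", "R2", "R3"]

full_rate = interleave_views(left_frames, right_frames)      # 8 frames for 4 time instants
half_rate = interleave_half_rate(left_frames, right_frames)  # 4 frames for 4 time instants
```

With full-rate interleaving the channel carries twice as many frames as a 2D program of the same duration. The half-rate variant fits the original frame budget, but each view is refreshed only half as often.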

5.3.2 Full-Resolution Frame-Compatible Layer Approach

Although half-resolution frame-compatible stereo video coding provides a convenient way to meet the requirements of today's infrastructure, the end user's viewing quality still suffers from the reduced spatial resolution. Building on the existing half-resolution frame-compatible stereo video coding, one can apply layered video coding technology and send additional information in enhancement layers to improve the final reconstructed full-resolution frame [14].

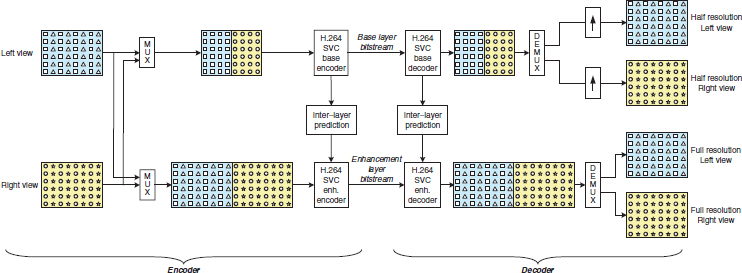

One straightforward method is to apply the spatial scalability coding tools [15] provided by the scalable video coding (SVC) extension of H.264/AVC [16]. An example using the side-by-side format is illustrated in Figure 5.10. The base layer of the spatial scalability codec works as the legacy half-resolution frame-compatible video codec. To utilize the tools provided by SVC, the encoder also constructs the reference frames in a side-by-side format, but with dimension 2W × H, where W × H is the dimension of the base-layer frame. The base layer is first upsampled to the same dimension, 2W × H, and the encoder performs inter-layer prediction from the upsampled base layer to generate the residual for encoding. When the decoder receives the enhancement layer, it performs the same upsampling operation and adds the enhancement layer information back to improve the overall video quality.
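The inter-layer prediction idea can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not the SVC algorithm itself: the function names are hypothetical, pixel repetition stands in for the actual upsampling filter, and the residual is carried without transform, quantization, or entropy coding, so the reconstruction here is exact.

```python
def upsample_horizontal(frame):
    """Double the frame width by repeating each sample
    (a stand-in for a real interpolation filter)."""
    return [[pixel for pixel in row for _ in range(2)] for row in frame]


def encode_enhancement(full_sbs, base_sbs):
    """Residual between the 2W x H side-by-side frame and the
    prediction obtained by upsampling the W x H base layer."""
    prediction = upsample_horizontal(base_sbs)
    return [[f - p for f, p in zip(full_row, pred_row)]
            for full_row, pred_row in zip(full_sbs, prediction)]


def decode_enhancement(base_sbs, residual):
    """Repeat the same upsampling at the decoder and add the residual back."""
    prediction = upsample_horizontal(base_sbs)
    return [[p + r for p, r in zip(pred_row, res_row)]
            for pred_row, res_row in zip(prediction, residual)]


left = [[1, 2, 3, 4]]
right = [[5, 6, 7, 8]]
full_sbs = [l + r for l, r in zip(left, right)]              # 2W x H reference
base_sbs = [l[0::2] + r[0::2] for l, r in zip(left, right)]  # W x H base layer
residual = encode_enhancement(full_sbs, base_sbs)
reconstructed = decode_enhancement(base_sbs, residual)
```

A legacy decoder would use only `base_sbs`, while a decoder that also receives the residual recovers the full-resolution side-by-side frame. In a real codec the residual is quantized, so the enhancement improves the quality rather than reproducing the source exactly.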

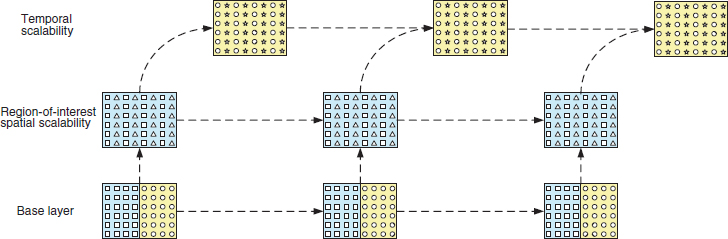

Another approach based on SVC utilizes both spatial and temporal scalability, as shown in Figure 5.11. The enhancement layer of the left view is carried in a region-of-interest spatial scalability layer, obtained by upsampling the left-view portion of the reconstructed base layer, and the enhancement layer of the right view is encoded through temporal scalability, using the enhanced left view as reference.

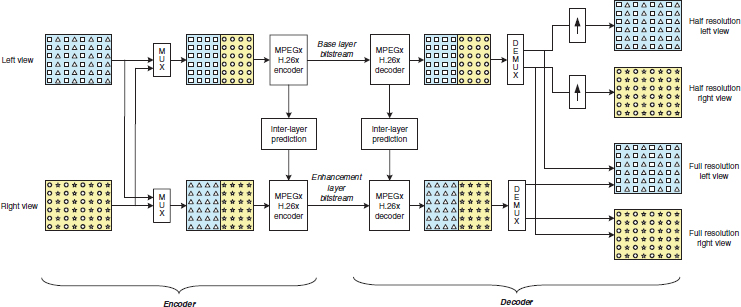

There are other layered full-resolution frame-compatible approaches based on the multi-view video coding (MVC) extension of H.264/AVC [17], which will be discussed later. One realization is shown in Figure 5.12. Note that although MVC provides a way to compress multiple views in a single stream, it does not by itself support the full-resolution frame-compatible format. It does, however, provide a container for delivering multiple video sequences in one bit stream. Similar to the SVC-based solution, the base layer of the MVC-based codec operates as the required half-resolution frame-compatible video codec and generates the base view. The pixels that are not on the sampling grid of the half-resolution base view are filtered and encoded in the enhancement view. Note that the resampling in the base view and the enhancement view should be performed properly to alleviate aliasing artifacts. An alternative that reduces the resampling artifacts uses three-view MVC [18], in which the base layer provides the half-resolution frame-compatible sequence and the other two views carry the full-resolution sequences for the left view and the right view, respectively.
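The split between base view and enhancement view can be sketched as follows. This is a simplified illustration with hypothetical function names: the base view carries the even columns of both views in side-by-side form, the enhancement view carries the remaining odd columns, and the filtering mentioned above as well as the actual MVC compression and inter-view prediction are left out.

```python
def split_views(left, right):
    """Base view: even columns of both views, side by side.
    Enhancement view: the columns that the base view does not sample."""
    base = [l[0::2] + r[0::2] for l, r in zip(left, right)]
    enhancement = [l[1::2] + r[1::2] for l, r in zip(left, right)]
    return base, enhancement


def interleave_columns(even, odd):
    """Rebuild one full-width row from its even and odd columns."""
    row = [None] * (len(even) + len(odd))
    row[0::2] = even
    row[1::2] = odd
    return row


def merge_views(base, enhancement):
    """Recombine base and enhancement views into full-resolution left and right views."""
    half = len(base[0]) // 2
    left, right = [], []
    for base_row, enh_row in zip(base, enhancement):
        left.append(interleave_columns(base_row[:half], enh_row[:half]))
        right.append(interleave_columns(base_row[half:], enh_row[half:]))
    return left, right


left = [[0, 1, 2, 3], [4, 5, 6, 7]]
right = [[10, 11, 12, 13], [14, 15, 16, 17]]
base, enhancement = split_views(left, right)
left_rec, right_rec = merge_views(base, enhancement)
```

A legacy receiver would decode only `base` and interpolate the missing columns, whereas a receiver that also decodes `enhancement` obtains every column of both views.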

Figure 5.10 Full-resolution frame-compatible format based on SVC spatial scalability.

Figure 5.11 Full-resolution frame-compatible format based on SVC spatial plus temporal scalability.

Figure 5.12 Full-resolution frame-compatible format based on MVC.