Requirements and Challenges for Big Data Architectures

Abstract

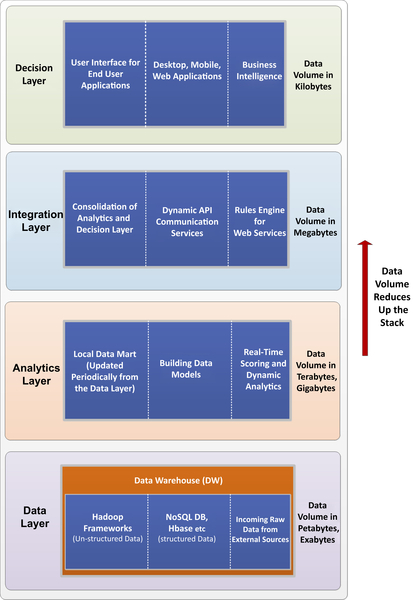

Deploying and using big data analytics in practice requires integrating a range of techniques. Big data processing spans multiple machines and depends on the seamless integration of many subsystems operating in a distributed fashion. Because the processing requirements of big data exceed the capabilities of most current machines, building such a large processing architecture poses challenges in both hardware and software deployment. Understanding how analytics assets relate to the underlying core technologies adds value and helps drive innovation and insight from big data. This chapter presents the challenges and requirements of a big data processing infrastructure and gives an overview of the core technologies and considerations involved in big data processing.

Keywords

Acquisition; Analysis; Deployment; Extract; Integration; Organization

What Are the Challenges Involved in Big Data Processing?

Deployment Concept

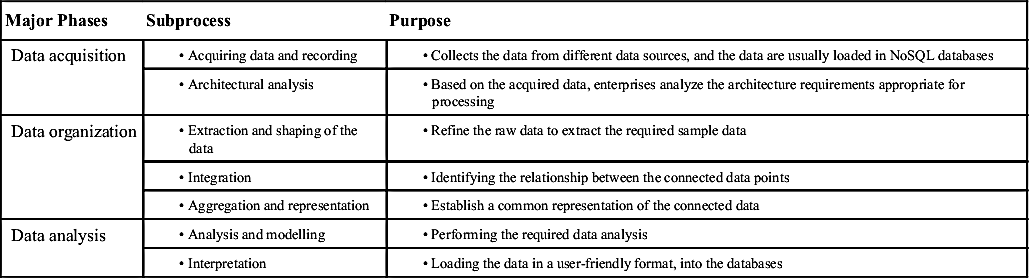

Table 9.1