Big Data Concerns in Autonomous AI Systems

Abstract

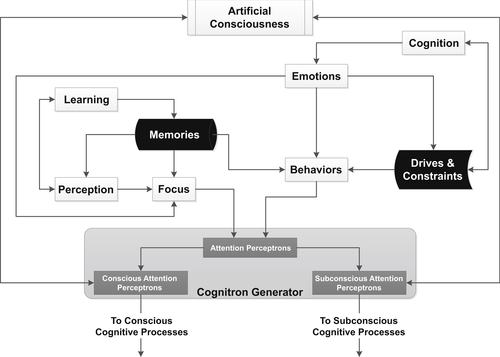

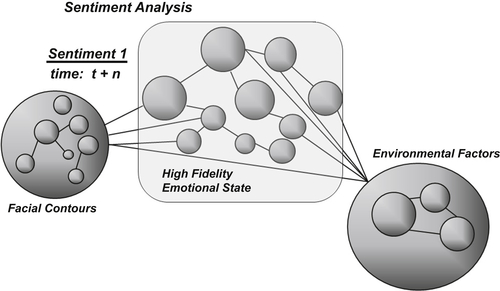

Current and future space, air, and ground systems are growing in complexity and capability, creating serious challenges for operators who monitor, maintain, and use systems in an ever-growing network of assets. Growing interest in autonomous systems with cognitive skills to monitor, analyze, diagnose, and predict behaviors in real time makes this problem even more challenging. Systems today continue to struggle to obtain actionable knowledge from an ever-increasing and inherently duplicative store of non-context-specific, multidisciplinary information content. In addition, increased automation is the norm, and truly autonomous systems are the growing future for atomic/subatomic exploration and for operation within challenging environments unfriendly to the physical human condition. Simultaneously, the size, speed, and complexity of systems continue to increase rapidly to improve timely generation of actionable knowledge. However, the density of valuable, readily consumable knowledge and the quality of its context continue to improve more slowly and incrementally. New concepts, mechanisms, and implements are required to facilitate the development and competency of complex systems capable of autonomous operation, self-healing, and thus critical management of their knowledge economy and higher-fidelity self-awareness of their real-time internal and external operational environments. Presented here are new concepts and notional architectures to solve the problem of how to take the fuzziness of information content and drive it toward context-specific topical knowledge development. We believe this is necessary to facilitate real-time cognition-based information discovery, decomposition, reduction, normalization, encoding, memory recall (recombinant knowledge construction), and, most important, enhanced/improved decision making for autonomous artificially intelligent systems.

Keywords

Artificial intelligence; Autonomous decision making; Autonomous robotics; Newtonian mechanics; Quantum mechanics; Real-time autonomous systems

Introduction

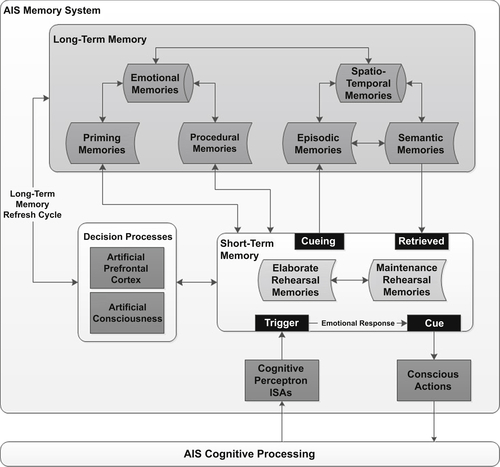

Artificially Intelligent System Memory Management

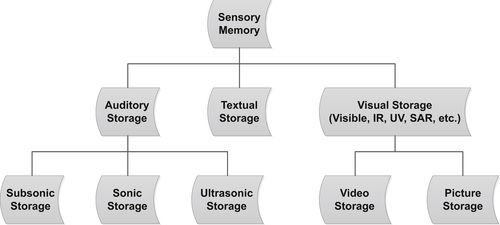

Sensory Memories

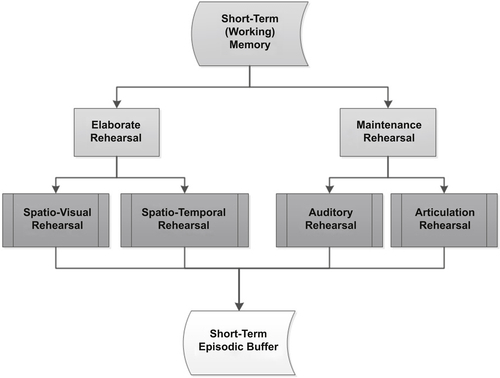

Short-term Artificial Memories

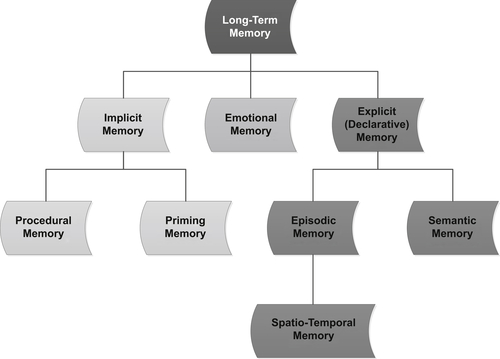

Long-term Artificial Memories

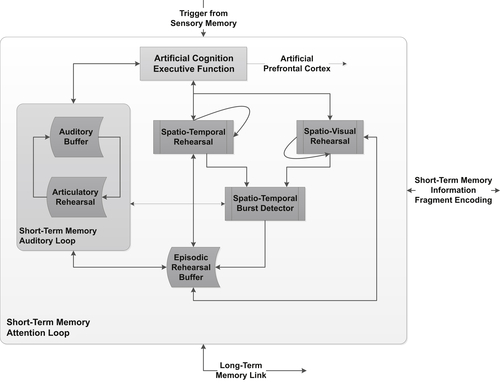

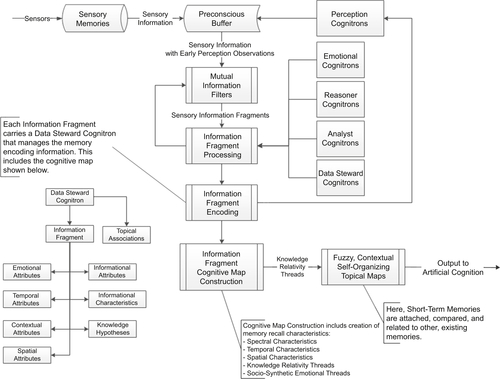

Artificial Memory Processing and Encoding

Short-term Artificial Memory Processing

Long-term Artificial Memory Processing

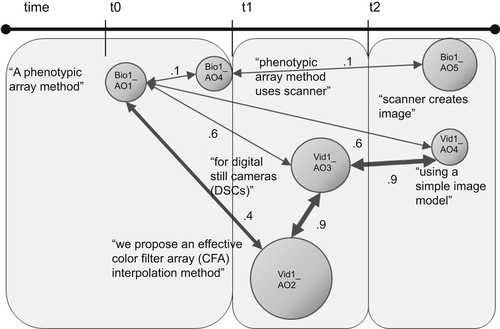

Implicit Biographical Memory Recall/Reconstruction Using Spectral Decomposition Mapping

Constructivist Learning

Hans Vaihinger (1852–1933) asserted that people develop “workable fictions,” such as mathematical infinity or God; this is his philosophy of “As if.” Alfred Korzybski’s (1879–1950) system of semantics focused on the role of the speaker in assigning meaning to events. Constructivists thus held that human beings operate on the basis of symbolic or linguistic constructs that help them navigate the world without contacting it in any simple or direct way. Postmodern thinkers assert that constructions are viable to the extent that they help us live our lives meaningfully and find validation in the shared understandings of others. We live in a world constituted by multiple social realities, no one of which can claim to be “objectively” true across persons, cultures, or historical epochs. Instead, the constructions on the basis of which we live are at best provisional ways of organizing our “selves” and our activities, which could under other circumstances be constituted quite differently.