Tools and Technologies for the Implementation of Big Data

Abstract

This chapter uses the five V’s of Big Data (volume, velocity, variety, veracity, and value) as the basis for considering the current status of, and issues relating to, the introduction of Big Data analysis into organizations. The first three are critical to understanding the implications and consequences of the available choices of techniques, tools, and technologies, choices that must be based on an understanding of the nature of the data sources and their content. All five V’s are invoked to evaluate some of the most critical issues involved in the choices made during the early stages of implementing a Big Data analytics project. Big Data analytics is a comparatively new field; as such, it is important to recognize that elements of it are already well along the Gartner hype cycle toward productive use. The concept of the planning fallacy is used, together with reference class data on information technology project success created by the Standish Group, to help improve the success rates of Big Data projects. International Organization for Standardization 27002 provides a basis for considering critical issues raised by data protection regimes in relation to the sources and locations of data and the processing of Big Data.

Keywords

Analysis; Data protection; Governance; Hype cycle; Implementation; Paradigms; Project success factors; Techniques; Technologies; Tools; Trust; Value; Veracity

Introduction

Techniques

Representation, Storage, and Data Management

Distributed Databases

Massively Parallel Processing Databases

Data-Mining Grids

Distributed File Systems

Cloud-Based Databases

Analysis

A/B Testing

Association Rule Learning

Classification

Crowdsourcing

Data Mining

Natural Language Processing and Text Analysis

Sentiment Analysis

Signal Processing

Visualization

Computational Tools

Hadoop

MapReduce

Apache Cassandra

Implementation

Implementation Issues

New Technology Introduction and Expectations

Project Initiation and Launch

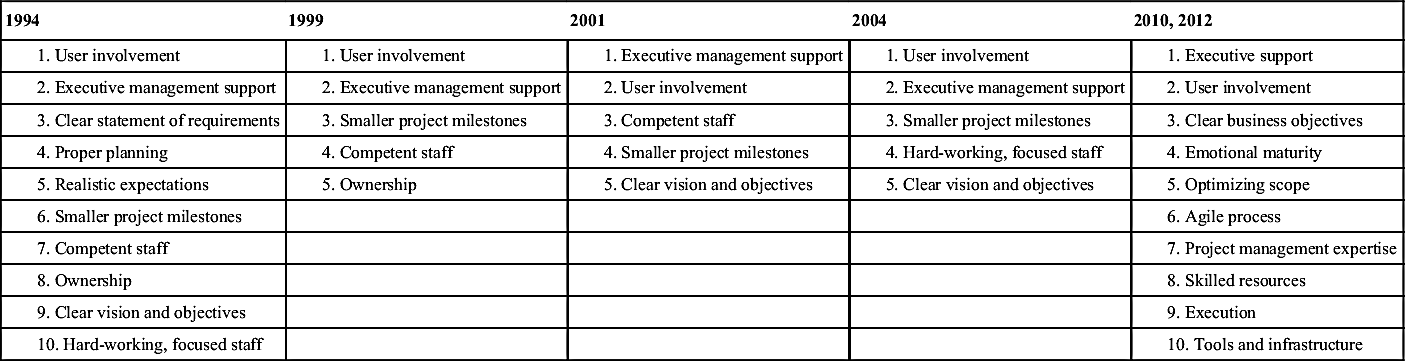

Table 10.1

Rising to the Peak of Inflated Expectations

| Technologies on the Rise | Time to Deliver, in Years | At the Peak of Inflated Expectations | Time to Deliver, in Years |
| --- | --- | --- | --- |
| Information valuation | >10 | Dynamic data masking | 5–10 |
| High-performance message infrastructure | 5–10 | Social content | 2–5 |
| Predictive modeling solutions | 2–5 | Claims analytics | 2–5 |
| Internet of things | >10 | Content analytics | 5–10 |
| Search-based data discovery tools | 5–10 | Context-enriched services | 5–10 |
| Video search | 5–10 | Logical data warehouse | 5–10 |
| NoSQL database management systems | 2–5 | | |
| Social network analysis | 5–10 | | |
| Advanced fraud detection and analysis technologies | 2–5 | | |
| Open SCADA (Supervisory Control And Data Acquisition) | 5–10 | | |
| Complex-event processing | 5–10 | | |
| Social analytics | 2–5 | | |
| Semantic web | >10 | | |
| Cloud-based grid computing | 2–5 | | |
| Cloud collaboration services | 5–10 | | |
| Cloud parallel processing | 5–10 | | |
| Geographic information systems for mapping, visualization, and analytics | 5–10 | | |
| Database platform as a service | 2–5 | | |
| In-memory database management systems | 2–5 | | |
| Activity streams | 2–5 | | |
| IT service root cause analysis tools | 5–10 | | |
| Open government data | 2–5 | | |

Sourced from Gartner (2012).

Table 10.2

Falling from the Peak to Reality

| Sliding into the Trough of Disillusionment | Climbing the Slope of Enlightenment | Entering the Plateau of Productivity |
| --- | --- | --- |
| Typically 2–5 years delivery | Typically >2 years delivery | |
| Telematics | Intelligent electronic devices (2–5) | Web analytics |
| In-memory data grids | Supply chain analytics (obsolete) | Column-store DBMS |
| Web experience analytics | Social media monitors (<2) | Predictive analytics |
| Cloud computing | Speech recognition (2–5) | |
| Sales analytics (5–10) | | |
| MapReduce and alternatives | | |
| Database software as a service | | |
| In-memory analytics | | |
| Text analytics | | |

Sourced from Gartner (2012).

Information Technology Project Reference Class

Mitigating Factors

User Factors and Change Management

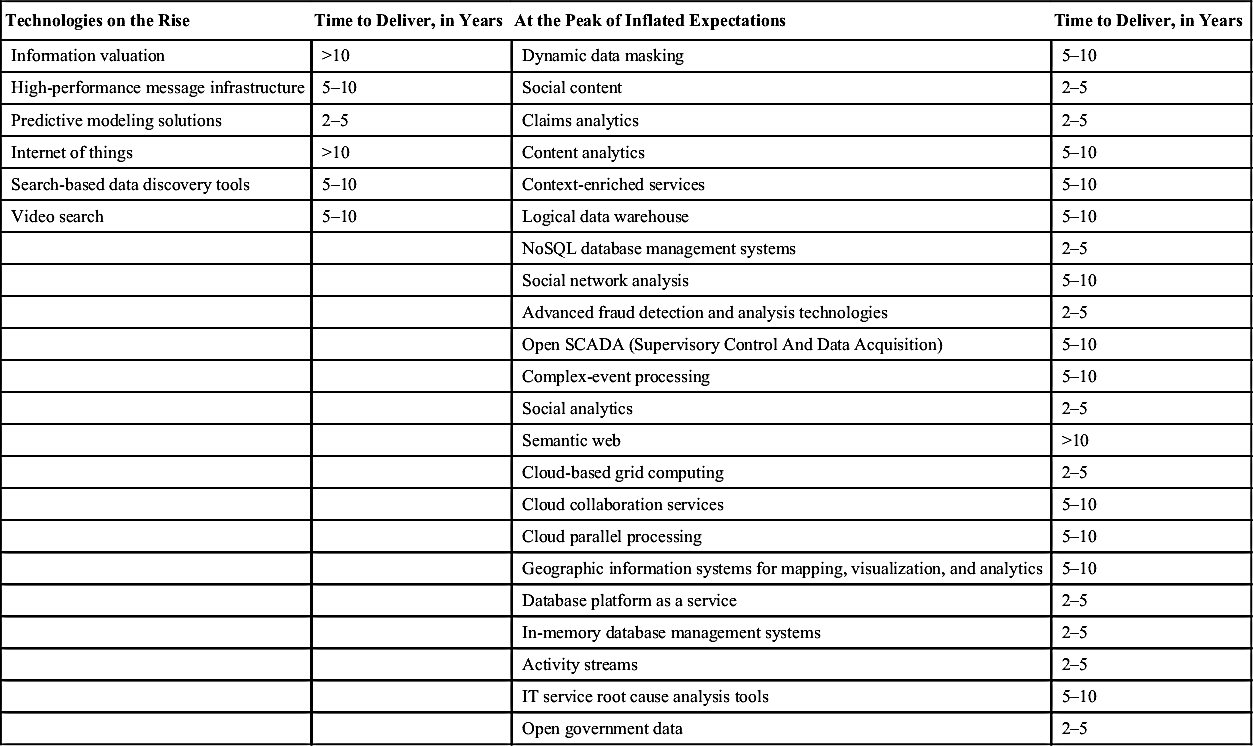

Table 10.3

Standish Group Success Factors