Chapter 43. Parallel Programming and Parallel LINQ

Modern computers ship with multicore architectures, meaning that a single machine has more than one processing core. Even the simplest home computer has at least a dual-core architecture, so you almost certainly have a machine with multiple processors. Unless you write code that says otherwise, a managed application does its work on a single processor. This makes things easier, but it leaves system resources unused: all the processing relies on one processor, which is overloaded and takes more time. Scaling the application execution over all available processors uses system resources better and speeds up execution, for a simple reason: instead of having only one processor doing the work, you have all the available processors doing the work concurrently. Scaling applications across multiple processors is known as parallel computing, which is nothing new in the programming world. The word parallel means that multiple tasks are executed concurrently. The .NET Framework 4.5 includes a library dedicated to parallel computing for the Microsoft platform. This library is called the Task Parallel Library, and it has been dramatically improved in the new version of .NET, meaning that code can run up to 400% faster than in .NET 4.0. It also includes a specific implementation of Language Integrated Query, known as Parallel LINQ. In this chapter you learn what the library is, how it is structured, and how you can use it to write parallel code in your applications, starting from basic concepts, passing through useful objects such as the concurrent collections, and ending with parallel queries written with LINQ.

Introducing Parallel Computing

The .NET Framework 4.5 (as well as its predecessor) provides support for parallel computing through the Task Parallel Library (TPL), which is a set of Application Programming Interfaces (APIs) that, in the .NET Framework 4.x, live mainly in the core mscorlib.dll assembly. This assembly is always referenced in new projects, so you do not need to add a reference manually. The TPL is reachable via the System.Threading and System.Threading.Tasks namespaces, which provide objects for scaling work execution over multiple processors. You write small units of work known as tasks. Tasks are scheduled for execution by the TPL’s Task Scheduler, which is responsible for assigning tasks to available threads. This is possible because the Task Scheduler is integrated with the .NET Thread Pool. The good news is that the .NET Framework can automatically use all available processors on the target machines without the need to recompile code.

Note

Parallel computing makes it easier to scale applications over multiple processors, but it remains conceptually complex. This is because you will face some threading concepts, such as synchronization locks, deadlocks, and so on. Therefore, you should have at least a basic knowledge of threading issues before writing parallel code. Another important consideration is figuring out when you should use parallel computing. The answer is not easy because you are the only one who knows how your applications consume resources. The general rule is that parallel computing gives the best advantage when you have intensive processing scenarios. For simpler computations, parallel computing is not necessarily the best choice and can cause performance loss. Use it when your applications run CPU-intensive loops.

Most of the parallel APIs are available through the System.Threading.Tasks.Task and System.Threading.Tasks.Parallel classes. The first one is described in detail later; now we provide coverage of the most important classes for parallelism.

What’s New in .NET 4.5: Custom Task Scheduling

If you already have good experience with parallel computing in .NET 4.0, you might be interested in knowing that .NET 4.5 adds new options for custom task scheduling. The new version exposes the ConcurrentExclusiveSchedulerPair class, which provides support for coordinating work in various ways. This class exposes two instances of the TaskScheduler class via the ConcurrentScheduler and ExclusiveScheduler properties. Together they provide asynchronous support for reader/writer-style locking: tasks scheduled on the concurrent scheduler can run as long as there are no executing exclusive tasks. After an exclusive task is scheduled, no more concurrent tasks are allowed to start. Only when all running concurrent tasks have completed are the exclusive tasks allowed to run, one at a time. The ConcurrentExclusiveSchedulerPair class also supports restricting the number of tasks that can run concurrently. You use the scheduler returned by the ExclusiveScheduler property, instead, to run queued tasks sequentially. This topic is for readers with advanced experience with the TPL. You can find further information in the MSDN documentation: http://msdn.microsoft.com/en-us/library/system.threading.tasks.concurrentexclusiveschedulerpair(v=vs.110).aspx
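
The reader/writer behavior described above can be sketched as follows. This is a minimal illustration rather than a recommended pattern: the module, method, and variable names are made up for the example, and it assumes a plain Console application.

```vb
Imports System
Imports System.Collections.Generic
Imports System.Threading.Tasks

Module SchedulerPairSketch

    ' Queues four "writer" tasks on the exclusive scheduler and four
    ' "reader" tasks on the concurrent scheduler, then returns the counter.
    Function RunWritersAndReaders() As Integer
        Dim pair As New ConcurrentExclusiveSchedulerPair()
        Dim readers As New TaskFactory(pair.ConcurrentScheduler)
        Dim writers As New TaskFactory(pair.ExclusiveScheduler)
        Dim counter As Integer = 0
        Dim pending As New List(Of Task)

        For i As Integer = 1 To 4
            ' Exclusive tasks never overlap with any other task of the pair,
            ' so this unsynchronized increment is not raced
            pending.Add(writers.StartNew(Sub() counter += 1))
            ' Concurrent tasks may overlap with each other, but not with writers
            pending.Add(readers.StartNew(Sub() Console.WriteLine("Counter is {0}", counter)))
        Next

        Task.WaitAll(pending.ToArray())
        Return counter
    End Function

    Sub Main()
        Console.WriteLine("Final value: {0}", RunWritersAndReaders())
    End Sub

End Module
```

The values printed by the reader tasks depend on how the scheduler interleaves the work, so they can differ from run to run; only the final counter value is fixed.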

Introducing Parallel Classes

Parallelism in the .NET Framework 4.5 is possible due to a number of classes, some responsible for maintaining the architecture of the TPL and some for performing operations in a concurrent fashion. The following subsections briefly cover the most important classes and describe their purpose.

The Parallel Class

The System.Threading.Tasks.Parallel class is one of the most important classes in parallel computing because it provides shared methods for running concurrent tasks and for executing parallel loops. In this chapter, you can find several examples of usage of this class; for now, you just need to know that it provides the Invoke, For, and ForEach shared methods. The first one enables running multiple tasks concurrently, and the other ones enable you to execute loops in parallel.

The TaskScheduler Class

The System.Threading.Tasks.TaskScheduler class is responsible for the low-level work of queuing tasks onto threads. When you start a new concurrent task, the task is sent to the scheduler, which checks for thread availability in the .NET thread pool. If a thread is available, the task is assigned to that thread and executed. You normally do not interact with the task scheduler directly, although the class exposes some members that you can use to get information about scheduling. The first one is the Current shared property, which returns the task scheduler associated with the currently executing task. For example, you can read the concurrency level via the MaximumConcurrencyLevel property as follows:

Console.WriteLine("The maximum concurrency level is {0}",

TaskScheduler.Current.MaximumConcurrencyLevel)

There are also some protected methods that deal with queuing and executing tasks (such as QueueTask and TryDequeue), but these are accessible only when you create your own custom task scheduler, which is beyond the scope of this chapter.

The TaskFactory Class

The System.Threading.Tasks.TaskFactory class provides support for generating and running new tasks and is exposed as a shared property of the Task class, as explained in the next section. The most important member is the StartNew method, which enables creating a new task and automatically starting it.

The ParallelOptions Class

The System.Threading.Tasks.ParallelOptions class provides a way of setting options for operations started via the Parallel class. Specifically, it provides properties for setting the cancellation token (CancellationToken), the instance of the scheduler (TaskScheduler), and the maximum number of concurrent tasks that an operation can use (MaxDegreeOfParallelism).
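
As a minimal sketch of how these options are applied, the following code passes a ParallelOptions instance to Parallel.For (parallel loops are covered later in this chapter) and records how many iterations were observed running at the same time. The module name, the RunLimitedLoop method, and the counters are hypothetical and exist only for illustration.

```vb
Imports System
Imports System.Threading
Imports System.Threading.Tasks

Module ParallelOptionsSketch

    ' Runs 20 iterations with a capped degree of parallelism and returns
    ' the highest number of iterations seen running at the same time.
    Function RunLimitedLoop(ByVal maxParallel As Integer) As Integer
        Dim options As New ParallelOptions With {.MaxDegreeOfParallelism = maxParallel}
        Dim running As Integer = 0
        Dim peak As Integer = 0
        Dim gate As New Object

        Parallel.For(0, 20, options,
                     Sub(i As Integer)
                         Dim current As Integer = Interlocked.Increment(running)
                         SyncLock gate
                             If current > peak Then peak = current
                         End SyncLock
                         Thread.SpinWait(100000)   ' Simulates some work
                         Interlocked.Decrement(running)
                     End Sub)
        Return peak
    End Function

    Sub Main()
        Console.WriteLine("Peak concurrency: {0}", RunLimitedLoop(2))
    End Sub

End Module
```

With MaxDegreeOfParallelism set to 2, the observed peak never exceeds 2, regardless of how many cores the machine has.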

Understanding and Using Tasks

Parallel computing in the .NET Framework relies on the concept of tasks. This section therefore covers the core of parallel computing, and you learn to use tasks for scaling units of work across multiple threads and processors.

Read this Section Carefully

This section is particularly important not only with regard to the current chapter, but also because the concept of tasks returns in Chapter 44, “Asynchronous Programming.” For this reason it is very important that you carefully read the concepts about tasks; you will reuse them in the next chapter.

What Is a Task?

Chapter 42, “Processes and Multithreading,” discusses multithreading and illustrates how a thread is a unit of work that you can use to split a big job across multiple units of work. Unlike in the pure threading world, in parallel computing the most important concept is the task, which is the basic unit of work and can be scheduled on any of the available processors. A task is not a thread: the scheduler assigns tasks to threads, so a single thread can run many tasks one after another, and which thread runs a given task depends on available resources. The task is therefore the most basic unit of work for operations executed in parallel. In terms of code, a task is nothing but an instance of the System.Threading.Tasks.Task class that holds a reference to a delegate, pointing to a method that does some work. The implementation is similar to what you do with Thread objects but with the differences previously discussed. You have two alternatives for executing operations with tasks: The first one is calling the Parallel.Invoke method; the second one is manually creating and managing instances of the Task class. The following subsections cover both scenarios.

Running Tasks with Parallel.Invoke

The first way of running tasks in parallel is calling the Parallel.Invoke shared method. This method can receive an array of System.Action objects as parameters, so each Action is translated by the runtime into a task. If possible, tasks are executed in parallel. The following example demonstrates how to perform three calculations concurrently:

'Requires Imports System.Threading and Imports System.Threading.Tasks directives

Dim angle As Double = 150

Dim sineResult As Double

Dim cosineResult As Double

Dim tangentResult As Double

Parallel.Invoke(Sub()

Console.WriteLine(Thread.CurrentThread.

ManagedThreadId)

Dim radians As Double = angle * Math.PI / 180

sineResult = Math.Sin(radians)

End Sub,

Sub()

Console.WriteLine(Thread.CurrentThread.

ManagedThreadId)

Dim radians As Double = angle * Math.PI / 180

cosineResult = Math.Cos(radians)

End Sub,

Sub()

Console.WriteLine(Thread.CurrentThread.

ManagedThreadId)

Dim radians As Double = angle * Math.PI / 180

tangentResult = Math.Tan(radians)

End Sub)

In the example, the code uses statement lambdas; each of them is translated into a task by the runtime, which is also responsible for creating and scheduling threads and for scaling tasks across all available processors. If you run the code, you can see how the tasks run within separate threads, automatically created for you by the TPL. As an alternative, you can supply AddressOf clauses pointing to methods performing the required operations, instead of using statement lambdas. Although this approach is the quickest way to run tasks in parallel, it does not enable you to take control over the tasks themselves. This is something that requires explicit instances of the Task class, as explained in the next subsection.
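
The AddressOf alternative mentioned above might look like the following sketch. The module, the CalculateSine and CalculateCosine methods, and the module-level fields are hypothetical names chosen for the example.

```vb
Imports System
Imports System.Threading.Tasks

Module InvokeWithAddressOf

    Private Const AngleInDegrees As Double = 150
    Private sineResult As Double
    Private cosineResult As Double

    Private Sub CalculateSine()
        sineResult = Math.Sin(AngleInDegrees * Math.PI / 180)
    End Sub

    Private Sub CalculateCosine()
        cosineResult = Math.Cos(AngleInDegrees * Math.PI / 180)
    End Sub

    ' Runs both methods through Parallel.Invoke and returns {sine, cosine}.
    Function Calculate() As Double()
        ' Each AddressOf clause is converted to a System.Action
        Parallel.Invoke(AddressOf CalculateSine, AddressOf CalculateCosine)
        Return New Double() {sineResult, cosineResult}
    End Function

    Sub Main()
        Dim results = Calculate()
        Console.WriteLine("Sine: {0}, cosine: {1}", results(0), results(1))
    End Sub

End Module
```

Because methods referenced with AddressOf cannot capture local variables the way lambdas do, the results are stored in module-level fields here; each method writes only to its own field, so no synchronization is needed.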

Creating, Running, and Managing Tasks: The Task Class

The System.Threading.Tasks.Task class represents the unit of work in parallel computing with the .NET Framework. Unlike when you call Parallel.Invoke, when you create an instance of the Task class you get fine-grained control over the task itself, such as starting it, waiting for its completion, and canceling it. The constructor of the class requires you to supply a delegate or a lambda expression to provide a method containing the code to be executed within the task. The following code demonstrates how you create a new task and then start it:

Dim simpleTask As New Task(Sub()

'Do your work here...

End Sub)

simpleTask.Start()

You supply the constructor with a lambda expression or with a delegate and then invoke the Start instance method. The Task class also exposes a Factory property of type TaskFactory that offers members for interacting with tasks. For example, you can use this property for creating and starting a new task all in one as follows:

Dim factoryTask = Task.Factory.StartNew(Sub()

'Do your work here

End Sub)

This has the same result as the first code snippet. The logic is that you can create instances of the Task class, each with some code that will be executed in parallel.

Getting the Thread ID

When you launch a new task, the task is executed within a managed thread. If you want to get information on the thread, in the code for the task you can access it via the System.Threading.Thread.CurrentThread shared property. For example, the CurrentThread.ManagedThreadId property will return the thread ID that is hosting the task.

Creating Tasks That Return Values

The Task class also has a generic counterpart that you can use for creating tasks that return a value. Consider the following code snippet that creates a task returning a value of type Double, which is the result of calculating the tangent of an angle:

Dim taskWithResult = Task(Of Double).

Factory.StartNew(Function()

Dim radians As Double _

= 120 * Math.PI / 180

Dim tan As Double = _

Math.Tan(radians)

Return tan

End Function)

Console.WriteLine(taskWithResult.Result)

You use a Function, which represents a System.Func(Of T), so that you can return a value from your operation. The result is accessed via the Task.Result property; reading this property blocks the caller until the task has completed. In the preceding example, the Result property contains the result of the tangent calculation. The problem is that the start value on which the calculation is performed is hard-coded. If you want to pass a value as an argument, you need to approach the problem differently. The following code demonstrates how to implement a method that receives an argument that can be reached from within the new task:

Private Function CalcTan(ByVal angle As Double) As Double

Dim t = Task(Of Double).Factory.

StartNew(Function()

Dim radians As Double = angle * Math.PI / 180

Dim tangentResult As Double = Math.Tan(radians)

Return tangentResult

End Function)

Return t.Result

End Function

The result of the calculation is returned from the task. This result is wrapped by the Task.Result instance property, which is then returned as the method result.
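
Capturing the method argument in the lambda is one way to get a value into a task. Another option, sketched here as an alternative and not as the approach used in this chapter, is the StartNew overload that accepts a state object and hands it to the delegate. The CalcTanWithState name is hypothetical.

```vb
Imports System
Imports System.Threading.Tasks

Module StateArgumentSketch

    Function CalcTanWithState(ByVal angle As Double) As Double
        ' The second argument of StartNew is handed to the lambda as "state"
        Dim t = Task(Of Double).Factory.StartNew(
            Function(state As Object) As Double
                Dim radians As Double = CDbl(state) * Math.PI / 180
                Return Math.Tan(radians)
            End Function, angle)

        ' Reading Result waits for the task to finish
        Return t.Result
    End Function

    Sub Main()
        Console.WriteLine(CalcTanWithState(45))
    End Sub

End Module
```

The state object is typed as Object, so the lambda has to convert it back to Double; the closure-based version avoids that conversion, which is one reason to prefer it in simple cases.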

Waiting for Tasks to Complete

You can explicitly wait for a task to complete by invoking the Task.Wait method. The following code waits until the task completes:

Dim simpleTask = Task.Factory.StartNew(Sub()

'Do your work here

End Sub)

simpleTask.Wait()

You can alternatively pass a number of milliseconds to the Wait method so that you can also check for a timeout. The following code demonstrates this:

simpleTask.Wait(1000)

If simpleTask.IsCompleted Then

'completed

Else

'timeout

End If

Notice how the IsCompleted property enables you to check whether the task is marked as completed by the runtime. You should enclose Wait inside a Try..Catch block because, if the task throws an exception or is canceled, Wait rethrows the failure wrapped in an AggregateException. This is an example:

Try

simpleTask.Wait(1000)

If simpleTask.IsCompleted Then

'completed

Else

'timeout

End If

Catch ex As AggregateException

'parallel exception

End Try

Exception Handling

Handling exceptions is a crucial topic in parallel programming. The problem is that multiple tasks that run concurrently could raise more than one exception concurrently, and you need to understand what the actual problem is. The .NET Framework 4.5 offers the System.AggregateException class that wraps all exceptions that occurred concurrently into one instance. In addition to the usual exception properties, this class exposes an InnerExceptions collection that you can iterate for checking which exceptions occurred. The following code demonstrates how you catch an AggregateException and how you iterate the instance:

Dim aTask = Task.Factory.StartNew(Sub() Console.

WriteLine("A demo task"))

Try

aTask.Wait()

Catch ex As AggregateException

For Each fault In ex.InnerExceptions

If TypeOf (fault) Is InvalidOperationException Then

'Handle the exception here..

ElseIf TypeOf (fault) Is NullReferenceException Then

'Handle the exception here..

End If

Next

Catch ex As Exception

End Try

Each item in InnerExceptions is an exception whose type you can verify with TypeOf. Another problem arises when tasks run nested tasks that throw exceptions. In this case the nested failures arrive as AggregateException instances inside the outer one, and you can use the AggregateException.Flatten method to unwrap them into a single-level collection. The following code demonstrates how to accomplish this:

Dim aTask = Task.Factory.StartNew(Sub() Console.

WriteLine("A demo task"))

Try

aTask.Wait()

Catch ex As AggregateException

For Each fault In ex.Flatten.InnerExceptions

If TypeOf (fault) Is InvalidOperationException Then

'Handle the exception here..

ElseIf TypeOf (fault) Is NullReferenceException Then

'Handle the exception here..

End If

Next

Catch ex As Exception

End Try

Flatten returns a new AggregateException instance whose InnerExceptions collection also includes the errors coming from nested tasks.
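
The demo task in the preceding snippets never throws, so the Catch blocks are never entered. The following sketch, with hypothetical names, makes both a parent task and an attached child task fail so that you can see what Flatten collects.

```vb
Imports System
Imports System.Threading.Tasks

Module FlattenSketch

    ' Starts a parent task with an attached child, lets both fail,
    ' and returns the number of exceptions found after flattening.
    Function CountFaults() As Integer
        Dim parent = Task.Factory.StartNew(
            Sub()
                ' Attached child: its failure propagates to the parent,
                ' wrapped in its own AggregateException
                Task.Factory.StartNew(
                    Sub()
                        Throw New InvalidOperationException("Child failed")
                    End Sub, TaskCreationOptions.AttachedToParent)

                Throw New NullReferenceException("Parent failed")
            End Sub)

        Try
            parent.Wait()
        Catch ex As AggregateException
            Dim count As Integer = 0
            For Each fault In ex.Flatten().InnerExceptions
                Console.WriteLine("{0}: {1}", fault.GetType().Name, fault.Message)
                count += 1
            Next
            Return count
        End Try

        Return 0
    End Function

    Sub Main()
        Console.WriteLine("Faults found: {0}", CountFaults())
    End Sub

End Module
```

Without the call to Flatten, the loop would see the parent’s own exception plus a nested AggregateException for the child, and a TypeOf check against InvalidOperationException would miss the child’s error.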

Canceling Tasks

In some situations you want to cancel task execution. To programmatically cancel a task, you need to enable tasks for cancellation, which requires some lines of code. You need an instance of the System.Threading.CancellationTokenSource class; this instance tells a System.Threading.CancellationToken that it should be canceled. The CancellationToken class provides notifications for cancellation. The following lines declare both objects:

Dim tokenSource As New CancellationTokenSource()

Dim token As CancellationToken = tokenSource.Token

.NET 4.5 Improvements: Setting Time for Cancellation

The .NET Framework 4.5 now enables you to create an instance of the CancellationTokenSource class and specify on such an instance how much time will have to pass before a cancellation occurs:

Dim tokenSource As New CancellationTokenSource(TimeSpan.FromSeconds(5))

You specify a TimeSpan value directly in the constructor.

Then you can start a new task using an overload of the TaskFactory.StartNew method that takes the cancellation token as an argument. The following line accomplishes this:

Dim aTask = Task.Factory.StartNew(Sub() DoSomething(token), token)

You still pass a delegate as an argument; in the preceding example the lambda invokes a method that takes an argument of type CancellationToken, which is useful for checking the state of cancellation during the task execution. The following code snippet provides a demonstrative implementation of the DoSomething method:

Sub DoSomething(ByVal cancelToken As CancellationToken)

'Check if cancellation was requested before

'the task starts

If cancelToken.IsCancellationRequested = True Then

cancelToken.ThrowIfCancellationRequested()

End If

For i As Integer = 0 To 1000

'Simulates some work

Thread.SpinWait(10000)

If cancelToken.IsCancellationRequested Then

'Cancellation was requested

cancelToken.ThrowIfCancellationRequested()

End If

Next

End Sub

The IsCancellationRequested property returns True if cancellation over the current task was requested. The ThrowIfCancellationRequested method throws an OperationCanceledException to communicate to the caller that the task was canceled. In the preceding code snippet, the Thread.SpinWait method simulates some work inside a loop. Notice how checking for cancellation is performed at each iteration so that an exception can be thrown if the task is actually canceled. The next step is to request cancellation in the main code. This is accomplished by invoking the CancellationTokenSource.Cancel method, as demonstrated in the following code:

tokenSource.Cancel()

Try

aTask.Wait()

Catch ex As AggregateException

'Handle concurrent exceptions here...

Catch ex As Exception

End Try
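
Putting the pieces together, the following self-contained sketch starts a cancelable task, requests cancellation, and then inspects the outcome. The module and method names are hypothetical, and the worker loops until it is canceled so that the result does not depend on timing.

```vb
Imports System
Imports System.Threading
Imports System.Threading.Tasks

Module CancellationSketch

    Private Sub DoWork(ByVal cancelToken As CancellationToken)
        ' Loops until cancellation is requested
        Do
            Thread.SpinWait(10000)   ' Simulates some work
            cancelToken.ThrowIfCancellationRequested()
        Loop
    End Sub

    ' Returns True when the task ended in the Canceled state.
    Function RunAndCancel() As Boolean
        Dim tokenSource As New CancellationTokenSource()
        Dim token As CancellationToken = tokenSource.Token
        Dim aTask = Task.Factory.StartNew(Sub() DoWork(token), token)

        tokenSource.Cancel()

        Try
            aTask.Wait()
        Catch ex As AggregateException
            ' Waiting on a canceled task surfaces the cancellation here
            For Each fault In ex.InnerExceptions
                Console.WriteLine("Caught: {0}", fault.GetType().Name)
            Next
        End Try

        tokenSource.Dispose()
        Return aTask.IsCanceled
    End Function

    Sub Main()
        Console.WriteLine("Task canceled: {0}", RunAndCancel())
    End Sub

End Module
```

Passing the same token both to the worker and to StartNew is what lets the runtime mark the task as canceled instead of faulted when the OperationCanceledException is thrown.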

Disable “Just My Code”

The OperationCanceledException is handled as expected if Just My Code is disabled (refer to Chapter 5, “Debugging Visual Basic 2012 Applications,” for details on Just My Code). If it is enabled, the debugger breaks with a message saying that an OperationCanceledException was unhandled by user code. This is benign, so you can go on running your code by pressing F5 again.

The Barrier Class

The System.Threading namespace in .NET 4.5 exposes a class named Barrier. The goal of this class is to bring a number of tasks that work concurrently to a common point before they take further steps. Tasks work across multiple phases and signal that they arrived at the barrier, waiting for all other tasks to arrive. The constructor of the class offers several overloads, and all of them take the number of tasks participating in the concurrent work. You can also specify the action to take after they arrive at the common point (that is, they reach the barrier and complete the current phase). Notice that the same instance of the Barrier class can be used multiple times, for representing multiple phases. The following code demonstrates how three tasks reach the barrier after their work, signaling the work completion and waiting for other tasks to finish:

Sub BarrierDemo()

' Create a barrier with three participants

' The Sub lambda provides an action that will be taken

' at the end of the phase

Dim myBarrier As New Barrier(3,

Sub(b)

Console.

WriteLine("Barrier has been " & _

"reached (phase number: {0})",

b.CurrentPhaseNumber)

End Sub)

' This is the sample work made by all participant tasks

Dim myAction As Action =

Sub()

For i = 1 To 3

Dim threadId As Integer =

Thread.CurrentThread.ManagedThreadId

Console.WriteLine("Thread {0} before wait.", threadId)

'Waits for other tasks to arrive at this same point:

myBarrier.SignalAndWait()

Console.WriteLine("Thread {0} after wait.", threadId)

Next

End Sub

' Starts three tasks, representing the three participants

Parallel.Invoke(myAction, myAction, myAction)

' Once done, disposes the Barrier.

myBarrier.Dispose()

End Sub

The code performs these steps:

1. Creates an instance of the Barrier class adding three participants and specifying the action to take when the barrier is reached.

2. Declares a common job for the three tasks (the myAction object), which runs a loop that simulates some work across three phases. At each iteration, when a task has done its work, it invokes the Barrier.SignalAndWait method. This makes the task wait until the other participants have also signaled, before any of them goes on to the next phase.

3. Launches the three concurrent tasks and disposes of the myBarrier object at the appropriate time.

The code also reuses the same Barrier instance to work across multiple phases. Such a class also exposes interesting members, such as these:

• AddParticipant and AddParticipants—These methods enable you to add one or the specified number of participant tasks to the barrier, respectively.

• RemoveParticipant and RemoveParticipants—These methods enable you to remove one or the specified number of participant tasks from the barrier, respectively.

• CurrentPhaseNumber property of type Long—This returns the current phase number.

• ParticipantCount property of type Integer—This returns the number of tasks involved in the operation.

• ParticipantsRemaining property of type Integer—This returns the number of tasks that have not invoked the SignalAndWait method yet.

Each round in which all participants invoke SignalAndWait represents a single phase in the process; reusing the same Barrier instance across rounds, like in the preceding code, represents multiple phases.

Parallel Loops

The Task Parallel Library offers the ability to scale loops such as For and For Each. This is possible due to the implementation of the shared Parallel.For and Parallel.ForEach methods. Both methods can use a multicore architecture for the parallel execution of loops, as explained in the next subsections. Now create a new Console application with Visual Basic. The goal of the next example is to simulate intensive processing, both to demonstrate the advantage of parallel loops and to show how the Task Parallel Library manages threads for you. With that said, write the following code:

'Requires an Imports System.Threading directive

Private Sub SimulateProcessing()

Threading.Thread.SpinWait(80000000)

End Sub

Private Function GetThreadId() As String

Return "Thread ID: " + Thread.CurrentThread.

ManagedThreadId.ToString

End Function

Tip

The Thread.SpinWait method makes the current thread spin in a tight loop for the specified number of iterations, keeping the processor busy instead of yielding it. You might often find this method in the code samples about parallel computing with the .NET Framework.

The SimulateProcessing method simulates intensive processing against fictitious data. GetThreadId helps demonstrate how the TPL manages threads. Now in the Sub Main of the main module, write the following code, which uses a Stopwatch object for measuring elapsed time:

Dim sw As New Stopwatch

sw.Start()

'This comment will be replaced by

'the method executing the loop

sw.Stop()

Console.WriteLine("Elapsed: {0}", sw.Elapsed)

Console.ReadLine()

This code helps to measure time in both classic and parallel loops as explained soon.

Note

Remember that parallel loops pay off only in particular circumstances, such as intensive processing. If you need to iterate a collection without heavy CPU work, parallel loops will probably not be helpful, and you should use classic loops. Choose parallel loops only when your processing is intensive enough to require the work of all the processors on your machine.

Parallel.For Loop

Writing a parallel For loop is easy, although it is important to remember that parallelism pays off only with intensive and time-consuming operations. Imagine you want to invoke the code defined at the beginning of this section a finite number of times to simulate intensive processing. This is how you would do it with a classic For loop:

Sub ClassicForTest()

For i = 0 To 15

Console.WriteLine(i.ToString + GetThreadId())

SimulateProcessing()

Next

End Sub

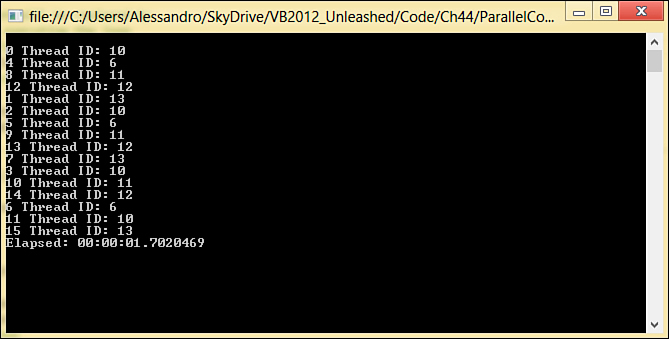

Nothing new here; the code writes the thread identifier at each step and simulates an intensive processing. If you run the code, you get the result shown in Figure 43.1, where you can see how all the work relies on a single thread and how the loop result is ordered.

Figure 43.1. Running a classic For loop to demonstrate single threading.

The next code snippet is how you write a parallel loop that accomplishes the same thing:

'Requires an Imports System.Threading.Tasks directive

Sub ParallelForTest()

Parallel.For(0, 16, Sub(i)

Console.WriteLine(i.ToString + _

GetThreadId())

SimulateProcessing()

End Sub)

End Sub

Parallel.For receives three arguments: The first one is the “from” part of the For loop, the second one is the “to” part of the For loop (and it is exclusive, so the preceding call iterates from 0 to 15), and the third is the action to take at each step. Such action is represented by a System.Action(Of Integer) that you write in the form of a statement lambda that takes a variable (i in the previous example) representing the loop counter. To put it simply, the Sub..End Sub block in the statement lambda of the parallel loop contains the same code as the For..Next block in the classic loop. If you run the code, you can see how things change, as shown in Figure 43.2.

Figure 43.2. The parallel loop runs multiple threads and takes less time.

You immediately notice two things: The first one is that Parallel.For automatically splits the loop execution across multiple threads, unlike the classic loop in which the execution relied on a single thread. These threads are distributed across the cores of your machine, thus speeding up the loop. The second thing you notice is the speed of execution. Running the previous example with a parallel loop on my machine took about 5 seconds less than the classic loop. Because the loop execution is split across multiple threads, such threads run in parallel. This means that maintaining a sequential execution order is not possible with Parallel.For loops. As you can see from Figure 43.2, the iterations are not executed sequentially, and this is appropriate because it means that multiple operations are executed concurrently, which is the purpose of the TPL. Just be aware of this when architecting your code.

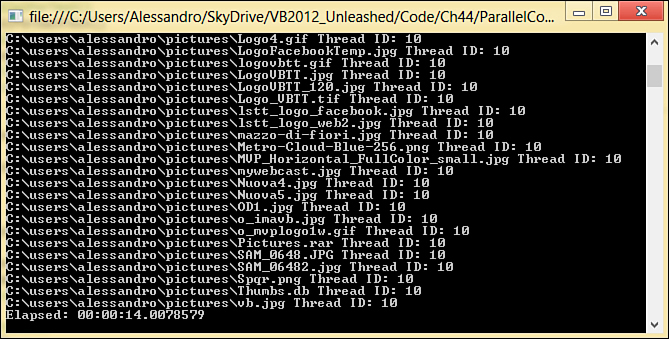
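
Although iterations run in no particular order, each one still receives its own index. When the order of the results matters, one option is to have every iteration write to its own slot of a preallocated array, as in this minimal sketch (the ComputeSquares name and the squaring work are placeholders for illustration).

```vb
Imports System
Imports System.Threading.Tasks

Module OrderedResultsSketch

    ' Computes i * i for each index in parallel; results stay in index order
    ' because every iteration writes only to its own array element.
    Function ComputeSquares(ByVal count As Integer) As Integer()
        Dim results(count - 1) As Integer
        Parallel.For(0, count,
                     Sub(i As Integer)
                         results(i) = i * i
                     End Sub)
        Return results
    End Function

    Sub Main()
        Dim squares = ComputeSquares(16)
        For i As Integer = 0 To squares.Length - 1
            Console.WriteLine("{0} squared is {1}", i, squares(i))
        Next
    End Sub

End Module
```

No locking is needed because no two iterations touch the same element; the classic For loop in Sub Main then reads the results sequentially after the parallel loop has finished.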

Parallel.ForEach Loop

Similarly to For loops, the Parallel class offers an implementation of For..Each loops for iterating items within a collection in parallel. You can reuse the methods shown at the beginning of this section for retrieving thread information, simulating intensive processing, and measuring elapsed time. Imagine you want to retrieve the list of image files in the user-level Pictures folder, simulating intensive processing over each filename. This task can be accomplished via a classic For..Each loop as follows:

Sub ClassicForEachTest()

Dim allFiles = IO.Directory.

EnumerateFiles("C:\users\alessandro\pictures")

For Each fileName In allFiles

Console.WriteLine(fileName + GetThreadId())

SimulateProcessing()

Next

End Sub

The intensive processing simulation still relies on a single thread and on a single processor; thus, it will be expensive in terms of time and system resources. Figure 43.3 shows the result of the loop.

Figure 43.3. Iterating items in a collection under intensive processing is expensive with a classic For..Each loop.

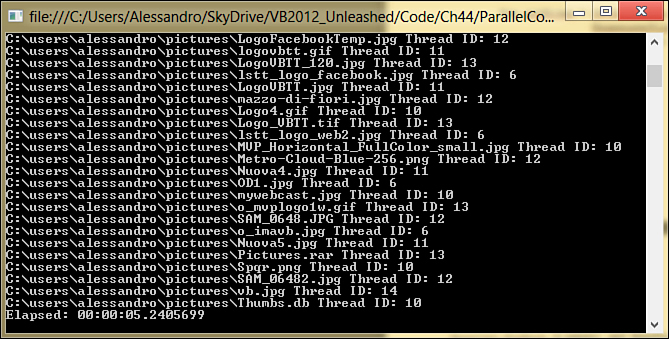

Fortunately, the Task Parallel Library enables you to iterate items in a collection concurrently. This is accomplished with the Parallel.ForEach method, which is demonstrated in the following code:

Sub ParallelForEachTest()

Dim allFiles = IO.Directory.

EnumerateFiles("C:\users\alessandro\pictures")

Parallel.ForEach(Of String)(allFiles, Sub(fileName)

Console.WriteLine( _

fileName + GetThreadId())

SimulateProcessing()

End Sub)

End Sub

Parallel.ForEach is generic and therefore requires specifying the type of items in the collection. In this case the collection is an IEnumerable(Of String), so ForEach takes (Of String) as the generic parameter. Talking about arguments, the first one is the collection to iterate, whereas the second one is an Action(Of T). Therefore, it’s a reference to a delegate or a statement lambda like in the preceding example, representing the action to take over each item in the collection. If you run the code snippet, you get the result shown in Figure 43.4.

Figure 43.4. Performing a Parallel.ForEach loop speeds up intensive processing over items in the collection.

The difference is evident. The parallel loop completes processing in almost 10 seconds less than the classic loop on my machine; this is because the parallel loop automatically runs multiple threads for splitting the operation across multiple units of work. Specifically, it takes full advantage of the multicore architecture of the running machine to get the most from system resources.

Partitioning Improvements in .NET 4.5

When a Parallel.ForEach loop (and PLINQ queries as well) processes an IEnumerable, the runtime uses a default partitioning scheme that involves chunking. This means that every time a thread goes back to the IEnumerable to fetch more items, the thread can take multiple items at a time. This usually gives good performance but involves buffering items, which is not appropriate for every situation. For instance, if you think of data going over networks, you might prefer to process a single data item as soon as it is received rather than waiting for multiple items to be received and processed concurrently. The .NET Framework 4.5 introduces a new enumeration called System.Collections.Concurrent.EnumerablePartitionerOptions that enables you to tell the runtime not to use the default partitioning scheme and, consequently, to avoid the buffering. The following code provides an example with the Parallel.ForEach loop that you saw previously:

'With no buffering

Parallel.ForEach(Of String)(Partitioner.Create(allFiles,

EnumerablePartitionerOptions.NoBuffering),

Sub(fileName)

Console.WriteLine(fileName + GetThreadId())

SimulateProcessing()

End Sub)

You invoke the Create shared method of the System.Collections.Concurrent.Partitioner class to specify both the IEnumerable source and the partitioning scheme. EnumerablePartitionerOptions has two values: NoBuffering, which avoids buffering, and None, which is the default partitioning scheme (and which you do not need to specify explicitly, because you get it with the normal syntax described previously in the “Parallel.ForEach Loop” section).

The ParallelLoopState Class

The System.Threading.Tasks.ParallelLoopState class enables you to get information on the state of parallel loops such as Parallel.For and Parallel.ForEach. For example, you can find out whether a loop has been stopped via the Boolean property IsStopped or whether an iteration threw an unhandled exception via the IsExceptional property. Moreover, you can end a loop early with the Break and Stop methods. Break asks the runtime to stop, as soon as possible, starting iterations beyond the current one; iterations with a lower index that have not run yet are still executed. Stop asks the runtime to cease the whole loop as soon as possible, regardless of index. In both cases, iterations that are already running are allowed to finish. You need to pass a variable of type ParallelLoopState to the delegate invoked for the loop or let the compiler infer the type as in the following example:

'The compiler infers ParallelLoopState

'for the loopState identifier

Parallel.For(0, 16, Sub(i, loopState)

Console.WriteLine(i.ToString + _

GetThreadId())

SimulateProcessing()

If loopState.IsExceptional Then

'an exception occurred

End If

'Breaks the loop when the index reaches 10

If i = 10 Then

loopState.Break()

End If

End Sub)
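To see how Break and Stop differ in practice, you can inspect the ParallelLoopResult structure that Parallel.For returns. The following is only a minimal sketch: the LoopStateDemo module and its two functions are illustrative names, not part of the chapter's sample project.

```vb
Imports System
Imports System.Threading.Tasks

Module LoopStateDemo
    'Runs a loop that calls Break at index 10 and returns the loop result
    Function RunWithBreak() As ParallelLoopResult
        Return Parallel.For(0, 16,
            Sub(i, loopState)
                If i = 10 Then loopState.Break()
            End Sub)
    End Function

    'Runs a loop that calls Stop at index 10 and returns the loop result
    Function RunWithStop() As ParallelLoopResult
        Return Parallel.For(0, 16,
            Sub(i, loopState)
                If i = 10 Then loopState.Stop()
            End Sub)
    End Function

    Sub Main()
        Dim broken = RunWithBreak()
        'IsCompleted is False because the loop ended early;
        'LowestBreakIteration holds the index at which Break was called
        Console.WriteLine(broken.IsCompleted)
        Console.WriteLine(broken.LowestBreakIteration)

        Dim stopped = RunWithStop()
        'After Stop, LowestBreakIteration has no value
        Console.WriteLine(stopped.IsCompleted)
        Console.WriteLine(stopped.LowestBreakIteration.HasValue)
    End Sub
End Module
```

Checking LowestBreakIteration is a simple way to tell, after the loop returns, which of the two methods ended it.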

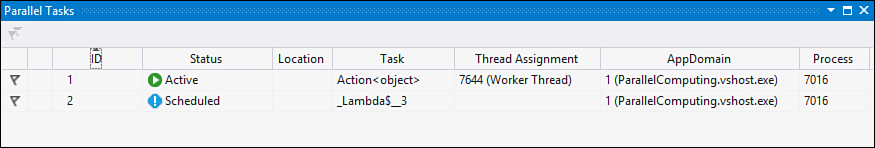

Debugging Tools for Parallel Tasks

Visual Studio 2012 offers two useful tool windows that you can use for debugging purposes when working on both parallel tasks and loops. To understand how such tooling works, consider the following code that creates and starts three tasks:

Sub CreateSomeTasks()

Dim taskA = Task.Factory.StartNew(Sub() Console.WriteLine("Task A"))

Dim taskB = Task.Factory.StartNew(Sub() Console.WriteLine("Task B"))

Dim taskC = Task.Factory.StartNew(Sub() Console.WriteLine("Task C"))

End Sub

Place a breakpoint on the End Sub statement and run the code. Because the tasks work in parallel, some of them may be running at this point and others may not be. To understand what is happening, you can open the Parallel Tasks tool window (select Debug, Windows, Parallel Tasks if not already visible). This window shows the state of each task, as represented in Figure 43.5.

Figure 43.5. The Parallel Tasks tool window.

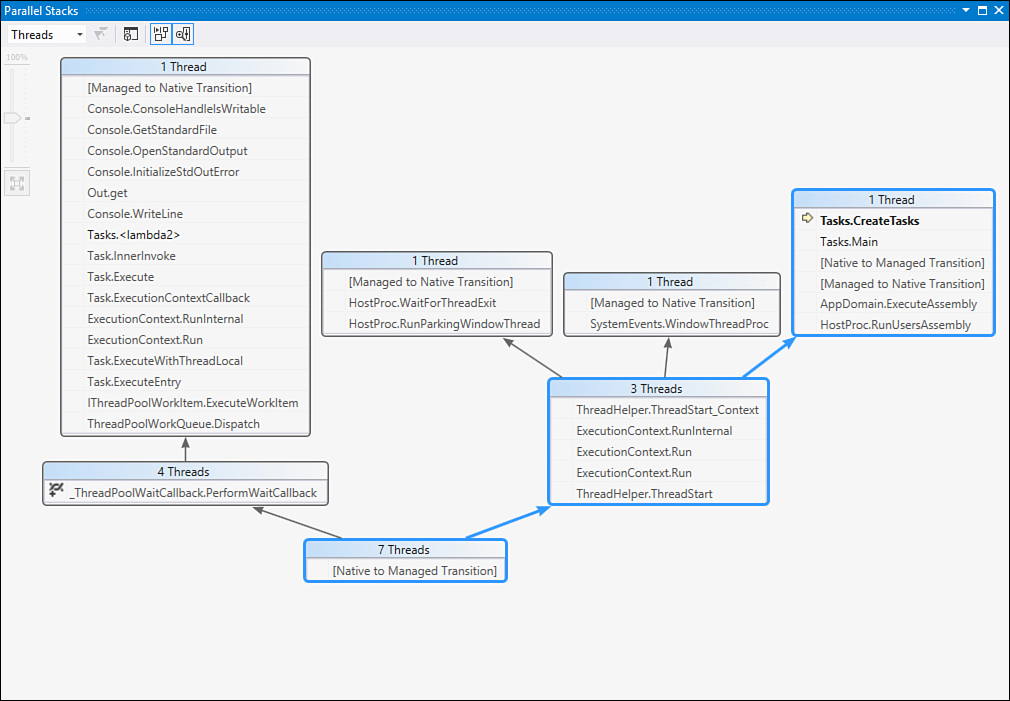

Among other information, this window shows the task ID, the status (that is, whether it is running, scheduled, waiting, deadlocked, or completed), the delegate that is doing the actual work (in the Task column), and the actual thread that refers to the task. Next, you can use the Parallel Stacks tool window (which can be enabled via Debug, Windows, Parallel Stacks), which shows the call stack for threads and their relationships. Figure 43.6 shows an example.

Figure 43.6. The Parallel Stacks window.

For each thread the window shows information that you can investigate by right-clicking each row.

Concurrent Collections

Parallel computing relies on multithreading, although with some particular specifications for taking advantage of multicore architectures. The real problem arises when you need to work with collections because, in a multithreaded environment, multiple threads could access a collection and attempt edits that need to be coordinated. The .NET Framework 4.5 inherits from its predecessor a number of thread-safe concurrent collections, exposed by the System.Collections.Concurrent namespace, which are useful in parallel computing because they allow safe concurrent access to their members from multiple threads.

What Does Thread-Safe Mean?

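A collection is thread-safe when multiple threads can access its members concurrently without corrupting its state. The collection coordinates access internally, for example by allowing only one thread at a time (or a few threads in particular cases) to change a given member.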

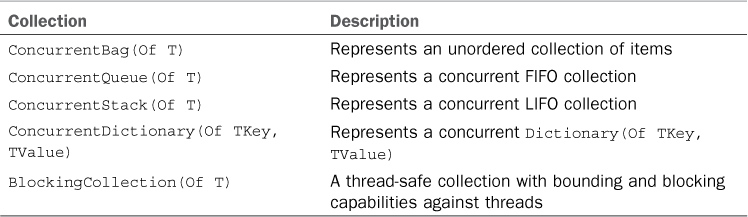

Table 43.1 summarizes concurrent collections in .NET 4.5.

Table 43.1. Available Concurrent Collections

The first four listed collections are essentially thread-safe implementations of generic collections you already learned in Chapter 16, “Working with Collections and Iterators.” BlockingCollection is a little bit more complex but interesting.

ConcurrentBag(Of T)

The ConcurrentBag(Of T) is the most basic concurrent collection, in that it is just an unordered collection of items. The following code demonstrates how you use it for adding, iterating, counting, and removing items:

'Creating an instance

Dim cb As New ConcurrentBag(Of String)

'Adding some items

cb.Add("String one")

cb.Add("String two")

cb.Add("String three")

'Showing items count

Console.WriteLine(cb.Count)

'Listing items in the collection

For Each item In cb

Console.WriteLine(item)

Next

'Removing an item

Dim anItem As String = String.Empty

cb.TryTake(anItem)

Console.WriteLine(anItem)

You add items to the collection by invoking the Add method. The Count property gets the number of items in the collection, and the IsEmpty property tells you whether the collection is empty. To remove an item, you invoke TryTake, which picks an item, assigns it to the result variable (in this case anItem), and then removes it from the collection. It returns True if removing succeeds; otherwise, it returns False. Keep in mind that this collection offers no order for items; therefore, you cannot rely on the order in which items are iterated or taken.
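The previous snippet runs on a single thread, so it does not show why the collection is useful. The following minimal sketch, in which the ConcurrentBagDemo module and the CollectSquares function are illustrative names, adds items from a Parallel.For loop without any explicit locking.

```vb
Imports System
Imports System.Collections.Concurrent
Imports System.Threading.Tasks

Module ConcurrentBagDemo
    'Adds the squares of 0..count-1 to a bag from multiple threads
    Function CollectSquares(count As Integer) As ConcurrentBag(Of Integer)
        Dim bag As New ConcurrentBag(Of Integer)
        'No explicit lock is needed: Add is safe to call concurrently
        Parallel.For(0, count, Sub(i) bag.Add(i * i))
        Return bag
    End Function

    Sub Main()
        Dim squares = CollectSquares(1000)
        'Every item was stored, although in no particular order
        Console.WriteLine(squares.Count)
    End Sub
End Module
```

Doing the same with a List(Of Integer) would require a SyncLock around every Add call to avoid losing items or corrupting the list.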

ConcurrentQueue(Of T)

The ConcurrentQueue(Of T) collection is just a thread-safe implementation of the Queue(Of T) collection, so it takes the logic of FIFO (first in, first out), where the first element in the collection is the first to be removed. The following code shows an example:

'Creating an instance

Dim cq As New ConcurrentQueue(Of Integer)

'Adding items

cq.Enqueue(1)

cq.Enqueue(2)

'Removing an item from the queue

Dim item As Integer

cq.TryDequeue(item)

Console.WriteLine(item)

'Returns "1":

Console.WriteLine(cq.Count)

The main difference from Queue is how items are removed from the queue. In this concurrent implementation, you invoke TryDequeue, which passes the removed item to a result variable by reference. The method returns True in case of success; otherwise, it returns False. The Count property still returns the number of items in the queue.

ConcurrentStack(Of T)

ConcurrentStack(Of T) is the thread-safe implementation of the Stack(Of T) generic collection and works according to the LIFO (last in, first out) logic. The following code shows an example of using this collection:

'Creating an instance

Dim cs As New ConcurrentStack(Of Integer)

'Adding an item

cs.Push(1)

'Adding an array

cs.PushRange(New Integer() {10, 5, 10, 20})

Dim items() As Integer = New Integer(3) {}

'Removing an array

cs.TryPopRange(items, 0, 4)

'Iterating the array

Array.ForEach(Of Integer)(items, Sub(i)

Console.WriteLine(i)

End Sub)

'Removing an item

Dim anItem As Integer

cs.TryPop(anItem)

Console.WriteLine(anItem)

The big difference between this collection and its thread-unsafe counterpart is that you can also add an array of items by invoking PushRange, but you still invoke Push to add a single item. To remove an array from the stack, you invoke TryPopRange, which takes three arguments: the target array that will store the removed items, the start index, and the number of items to remove. PushRange returns no value, whereas TryPopRange returns an Integer indicating how many items were actually removed. The Array.ForEach loop in the preceding code is just an example demonstrating that the items were actually removed from the collection. Finally, you invoke TryPop to remove a single item from the stack; this item is assigned to a result variable, passed by reference, and the method returns a Boolean value indicating whether it succeeded.
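The value returned by TryPopRange matters when the stack holds fewer items than you ask for. The following short sketch (ConcurrentStackDemo and PopUpToFive are illustrative names) pushes three items and then requests five.

```vb
Imports System
Imports System.Collections.Concurrent

Module ConcurrentStackDemo
    'Pushes three items, asks for up to five, and returns how many were popped
    Function PopUpToFive() As Integer
        Dim stack As New ConcurrentStack(Of Integer)
        stack.PushRange(New Integer() {1, 2, 3})

        'Room for five items (indexes 0 to 4)
        Dim buffer(4) As Integer

        'TryPopRange returns the number of items actually copied into buffer
        Return stack.TryPopRange(buffer, 0, 5)
    End Function

    Sub Main()
        Console.WriteLine(PopUpToFive())
    End Sub
End Module
```

Because only three items are available, only the first three slots of the buffer are filled, and the return value tells you how many slots to read.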

ConcurrentDictionary(Of TKey, TValue)

The ConcurrentDictionary collection has the same purpose as its thread-unsafe counterpart, but it differs in how methods work. The basic methods for adding, retrieving, and removing items return a Boolean value indicating success or failure, and their names all start with Try. The following code shows an example:

'Where String is for names and Integer for ages

Dim cd As New ConcurrentDictionary(Of String, Integer)

Dim result As Boolean

'Adding some items

result = cd.TryAdd("Alessandro", 35)

result = cd.TryAdd("Nadia", 31)

result = cd.TryAdd("Robert", 38)

'Removing an item; the removed value is returned in removedAge

Dim removedAge As Integer

result = cd.TryRemove("Nadia", removedAge)

'Getting a value for the specified key

Dim value As Integer

result = cd.TryGetValue("Alessandro", value)

Console.WriteLine(value)

The logic of the collection is then the same as Dictionary, so refer to this one for details.
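Besides the Try methods, ConcurrentDictionary exposes AddOrUpdate and GetOrAdd, which combine a lookup and a write into one call so that you do not need to check and then add in two separate steps. The following is a minimal sketch of counting words in parallel; the ConcurrentDictionaryDemo module and the CountWords function are illustrative names.

```vb
Imports System
Imports System.Collections.Concurrent
Imports System.Threading.Tasks

Module ConcurrentDictionaryDemo
    'Counts how many times each word occurs, updating the dictionary in parallel
    Function CountWords(words As String()) As ConcurrentDictionary(Of String, Integer)
        Dim counts As New ConcurrentDictionary(Of String, Integer)
        Parallel.ForEach(Of String)(words,
            Sub(word)
                'Stores 1 for a new key; otherwise increments the stored value
                counts.AddOrUpdate(word, 1,
                                   Function(existingKey, current) current + 1)
            End Sub)
        Return counts
    End Function

    Sub Main()
        Dim counts = CountWords(New String() {"a", "b", "a", "c", "a"})
        Console.WriteLine(counts("a"))

        'GetOrAdd returns the existing value or stores the supplied one
        Console.WriteLine(counts.GetOrAdd("d", 0))
    End Sub
End Module
```

Note that the update delegate can be invoked more than once under contention, so it should be free of side effects.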

BlockingCollection(Of T)

The BlockingCollection(Of T) is a special concurrent collection. At the highest level such a collection has two characteristics. The first is that if a thread attempts to retrieve items from the collection while it is empty, the thread is blocked until some items are added to the collection. The second is that if a thread attempts to add items to the collection after it has reached its maximum number of items, the thread is blocked until some space is freed in the collection. Another interesting feature is completion. You can mark the collection as complete so that no other items can be added. This is accomplished via the CompleteAdding instance method. After you invoke this method, if a thread attempts to add items, an InvalidOperationException is thrown. The following code shows how to create a BlockingCollection for strings:

Dim bc As New BlockingCollection(Of String)

bc.Add("First")

bc.Add("Second")

bc.Add("Third")

bc.Add("Fourth")

'Marks the collection as complete

bc.CompleteAdding()

'Throws an InvalidOperationException

'bc.Add("Fifth")

'Removes an item from the collection (FIFO)

Dim result = bc.Take()

Console.WriteLine(result)

You add items by invoking the Add method, and you mark the collection complete with CompleteAdding. To remove an item, you invoke Take. This method removes the first item added to the collection, according to the FIFO approach. This is because the BlockingCollection is not itself a storage collection; by default it creates a ConcurrentQueue behind the scenes and adds blocking logic to it. The class also exposes some properties:

• BoundedCapacity—Returns the bounded capacity for the collection. You can provide the capacity via the constructor. If not, the property returns -1 as the value indicating that it’s a growing collection.

• IsCompleted—Indicates whether the collection has been marked with CompleteAdding, and it is also empty.

• IsAddingCompleted—Indicates whether the collection has been marked with CompleteAdding.

The class has other interesting characteristics. The beginning of the discussion explained why it is considered blocking, but it also offers methods whose names begin with Try, such as TryAdd and TryTake, which provide overloads that do their respective work without blocking indefinitely. The last feature of the BlockingCollection is a number of shared methods that add an item to, or take an item from, any one of several BlockingCollection instances, in both blocking and nonblocking versions. These methods are AddToAny, TakeFromAny, TryAddToAny, and TryTakeFromAny. The following code shows an example of adding a string to whichever of two collections can accept it (AddToAny returns the index of the collection that received the item):

Dim collection1 As New BlockingCollection(Of String)

Dim collection2 As New BlockingCollection(Of String)

Dim colls(1) As BlockingCollection(Of String)

colls(0) = collection1

colls(1) = collection2

BlockingCollection(Of String).AddToAny(colls, "anItem")

All the mentioned methods take an array of collections; this is the reason for the code implementation as previously illustrated.
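The blocking behavior and the completion feature are typically combined in a producer/consumer arrangement. The following is a minimal sketch under stated assumptions: the ProducerConsumerDemo module and the ProduceAndSum function are illustrative names, and the capacity of 5 is arbitrary. The consumer uses GetConsumingEnumerable, which blocks while the collection is empty and ends once adding is complete and all items have been taken.

```vb
Imports System
Imports System.Collections.Concurrent
Imports System.Threading.Tasks

Module ProducerConsumerDemo
    'Produces the numbers 1..count on one task and sums them on another
    Function ProduceAndSum(count As Integer) As Integer
        'Bounded to 5 items: the producer blocks while the collection is full
        Using bc As New BlockingCollection(Of Integer)(5)
            Dim producer = Task.Factory.StartNew(
                Sub()
                    For i = 1 To count
                        bc.Add(i)
                    Next
                    'Signals consumers that no more items will arrive
                    bc.CompleteAdding()
                End Sub)

            Dim consumer = Task(Of Integer).Factory.StartNew(
                Function()
                    Dim total = 0
                    'Blocks while empty; ends when adding is complete
                    'and the collection has been drained
                    For Each item In bc.GetConsumingEnumerable()
                        total += item
                    Next
                    Return total
                End Function)

            Task.WaitAll(producer, consumer)
            Return consumer.Result
        End Using
    End Function

    Sub Main()
        Console.WriteLine(ProduceAndSum(100))
    End Sub
End Module
```

The bounded capacity keeps a fast producer from running far ahead of a slow consumer, which limits memory usage.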

Introducing Parallel LINQ

Parallel LINQ, also known as PLINQ, is a special LINQ implementation provided by .NET Framework 4.5 that enables developers to query data using the LINQ syntax while taking advantage of multicore and multiprocessor architectures through the Task Parallel Library. Creating “parallel” queries is an easy task, although there are some architectural differences from classic LINQ (or, more generally, from classic programming). These are discussed in the rest of this chapter. To create a parallel query, you just need to invoke the AsParallel extension method on the data source you are querying. The following code provides an example:

Dim range = Enumerable.Range(0, 1000)

'Just add "AsParallel"

Dim query = From num In range.AsParallel

Where (IsOdd(num))

Select num

Parallel LINQ and the Task Parallel Library pay off only in particular scenarios, such as intensive calculations or large amounts of data. Because of this, to give you an idea of how PLINQ can improve performance, the code presented in this chapter simulates intensive work with simple code so that you can focus on PLINQ instead of other code.

Simulating an Intensive Work

Parallel LINQ provides benefits when you work in demanding situations such as intensive work or large amounts of data. In other situations, PLINQ is not necessarily better than classic LINQ. To understand how PLINQ works, first we need to write code that simulates intensive work. After creating a new Console project, write the following method that determines whether a number is odd but first keeps the current thread busy for a while by invoking the System.Threading.Thread.SpinWait shared method (SpinWait does not suspend the thread; it makes the processor spin for the specified number of iterations):

'Checks if a number is odd

Private Function IsOdd(ByVal number As Integer) As Boolean

'Simulate an intensive work

System.Threading.Thread.SpinWait(1000000)

Return (number Mod 2) <> 0

End Function

Now that we have some intensive work to do, we can compare the classic and parallel LINQ queries.

Measuring Performances of a Classic LINQ Query

The goal of this paragraph is to explain how you can execute a classic LINQ query over intensive processing and measure its performance in milliseconds. Consider the following code:

Private Sub ClassicLinqQuery()

Dim range = Enumerable.Range(0, 1000)

Dim query = From num In range

Where (IsOdd(num))

Select num

'Measuring performance

Dim sw As Stopwatch = Stopwatch.StartNew

'Linq query is executed when invoking Count

Console.WriteLine("Total odd numbers: " + query.Count.ToString)

sw.Stop()

Console.WriteLine(sw.ElapsedMilliseconds.ToString)

Console.ReadLine()

End Sub

Given a range of predefined numbers (Enumerable.Range), the code looks for odd numbers and collects them into an IEnumerable(Of Integer). To measure performance, we use the Stopwatch class, which starts a timer (Stopwatch.StartNew). Because, as you already know, LINQ queries are executed only when you consume them, the query actually runs when the code invokes the Count extension method to show how many odd numbers the query returns. When done, the timer is stopped so that we can get the number of milliseconds needed to perform the query. Measuring time is not enough, though. The real goal is to understand how the CPU is used and how a LINQ query impacts performance. To accomplish this, on Windows 8 right-click the Windows taskbar and start Task Manager. Then click the Performance tab, and then click Open Resource Monitor. This provides a way of looking at the CPU usage while running your code. If you are running Windows 7 or Windows Vista with Service Pack 2, you do not need to open any resource monitor because Task Manager provides all you need in its main window (just select the Always on Top option for a better view). The previous code, which you can run by invoking ClassicLinqQuery from the Main method, produces the following result on my dual-core machine:

Total odd numbers: 500

3667

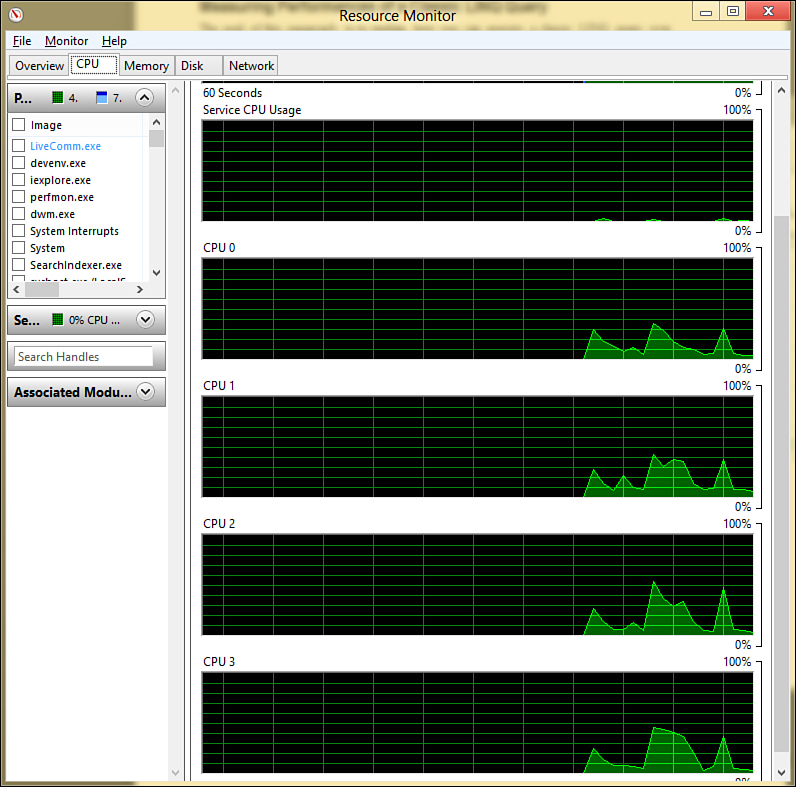

This means that executing the query over the intensive processing took a little more than 3 1/2 seconds. The other interesting thing is the CPU usage. Figure 43.7 shows that during the processing, the CPU was used for a medium percentage of resources. Obviously, this percentage can vary depending on the machine and on the running processes and applications.

Figure 43.7. CPU usage during a classic LINQ query.

The fact that CPU usage was not full is not necessarily good: It means that all the work relies on a single thread, as if it were running on a single processor. Therefore, some resources are overloaded with work while other resources are idle. To scale the work over multiple threads and multiple processors, a Parallel LINQ query is more efficient.

Measuring Performances of a PLINQ Query

To create a parallel query, you need to invoke the AsParallel extension method for the data source you want to query. Copy the method shown in the previous paragraph and rename it as PLinqQuery; then change the first line of the query as follows:

Dim query = From num In range.AsParallel

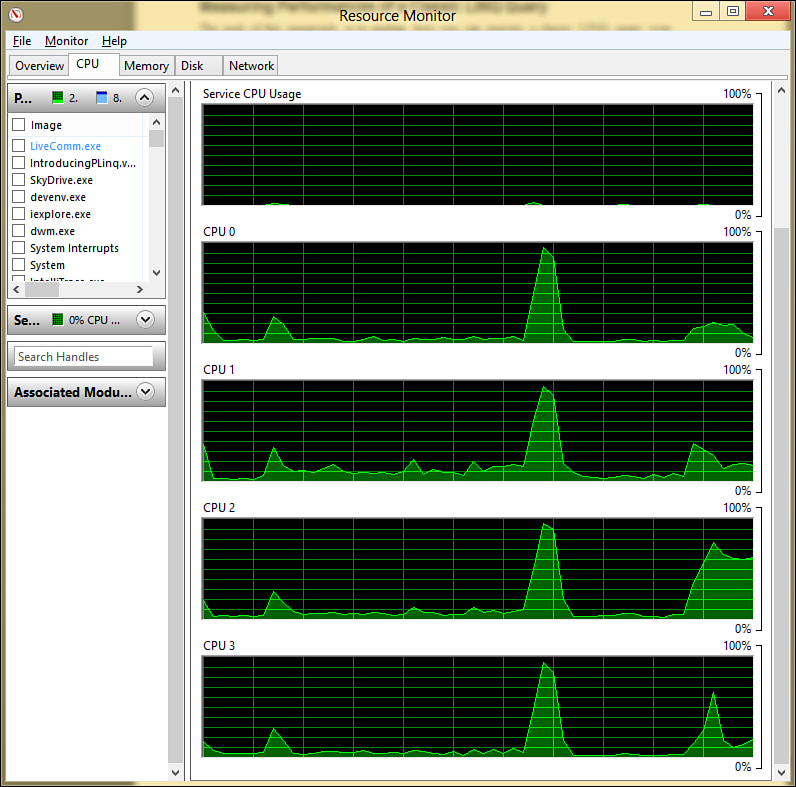

Unlike a classic LINQ query, AsParallel returns a ParallelQuery(Of T), which is exposed by the System.Linq namespace and is specific to PLINQ. It works like an IEnumerable(Of T) but enables you to scale data processing over multicore processors. Now edit Sub Main so that it invokes the PLinqQuery method and run the code again. Figure 43.8 shows what you should see when the application is processing data.

Figure 43.8. CPU usage during a Parallel LINQ query.

It is worth noticing how all processors are being used, which is demonstrated by the peaks of CPU usage. Processing was scaled along all available processors. On my dual-core machine, the previous code produces the following result:

Total odd numbers: 500

2512

The PLINQ query took only 2 1/2 seconds, which is less than the classic LINQ query result. So, PLINQ can dramatically improve your code performance, although there are some other considerations, as discussed in the next paragraphs.

Converting to Sequential Queries

PLINQ queries are evaluated in parallel, meaning that they use multicore architectures, thanks to the ParallelQuery(Of T) class. If you want to convert such a result into an IEnumerable(Of T) and have the rest of the query evaluated sequentially, you can invoke the AsSequential extension method on the query result variable.

Ordering Sequences

One of the most important consequences of Parallel LINQ (and, more generally, of parallel computing) is that processing is not done sequentially as it would happen on single-threaded code. This is because multiple threads run concurrently. To understand this, the following is an excerpt from the iteration on the result of the parallel query:

295

315

297

317

299

319

You would probably expect something like the following instead, which is produced by the classic LINQ query:

295

297

299

301

303

305

If you need to work in sequential order but do not want to lose the capabilities of a PLINQ query, you can invoke the AsOrdered extension method, which preserves the sequential order of the result, as demonstrated by the following code snippet:

Dim query = From num In range.AsParallel.AsOrdered

If you now run the code again, you get an ordered set of odd numbers.
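AsOrdered can be combined with the AsSequential method described in the previous section: the expensive filter runs in parallel, and the remaining operators run sequentially on the ordered result. The following is a minimal sketch; the OrderingDemo module and the FirstOddSquares function are illustrative names.

```vb
Imports System
Imports System.Linq

Module OrderingDemo
    'Filters in parallel (keeping source order), then finishes sequentially
    Function FirstOddSquares(howMany As Integer) As Integer()
        Return Enumerable.Range(0, 1000).
            AsParallel().AsOrdered().
            Where(Function(n) n Mod 2 <> 0).
            AsSequential().
            Select(Function(n) n * n).
            Take(howMany).
            ToArray()
    End Function

    Sub Main()
        'The squares of the first three odd numbers, in source order
        For Each value In FirstOddSquares(3)
            Console.WriteLine(value)
        Next
    End Sub
End Module
```

Preserving order has a cost, so it is worth using AsOrdered only when the order of results actually matters.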

AsParallel and Binary Operators

In some situations you use operators that take two data sources; among such operators, there are the following binary operators: Join, GroupJoin, Except, Concat, Intersect, Union, Zip, and SequenceEqual. To use parallelism with binary operators on two data sources, you need to invoke AsParallel on both collections, as demonstrated by the following code:

Dim result = firstSource.AsParallel.Except(secondSource.AsParallel)

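The following code compiles (with a warning, because that overload is marked as obsolete), but it does not fall back to a non-parallel query: The overload throws a NotSupportedException when called, so always invoke AsParallel on the second source as well: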

Dim result = firstSource.AsParallel.Except(secondSource)

Using ParallelEnumerable

The System.Linq namespace for .NET 4.5 provides the ParallelEnumerable class, which is the parallel counterpart of Enumerable and provides extension methods specific to parallelism, such as AsParallel. You can use ParallelEnumerable members instead of invoking AsParallel because both return a ParallelQuery(Of T). For example, the PLINQ query in the first example could be rewritten as follows:

Dim range = ParallelEnumerable.Range(0, 1000)

'No AsParallel needed: the range is already a parallel query

Dim query = From num In range

Where (IsOdd(num))

Select num

In this case the range variable is of type ParallelQuery(Of Integer), and therefore you do not need to invoke AsParallel. Some differences in how data is handled do exist, which can often lead AsParallel to be faster. Explaining the ParallelEnumerable architecture in detail is beyond the scope of this introductory chapter, but if you are curious you can take a look at this blog post from the Task Parallel Library Team, which is still valid today: http://blogs.msdn.com/pfxteam/archive/2007/12/02/6558579.aspx.

Controlling PLINQ Queries

PLINQ offers additional extension methods and features to provide more control over tasks that run queries, all exposed by the System.Linq.ParallelEnumerable class. In this section you get an overview of extension methods and learn how you can control your PLINQ queries.

Setting the Maximum Tasks Number

As explained previously in this chapter, the Task Parallel Library relies on tasks instead of threads, although working with tasks means scaling processing over multiple threads. You can specify the maximum number of tasks that execute a query concurrently by invoking the WithDegreeOfParallelism extension method and passing the number as an argument. The following code demonstrates how you can get the list of running processes with a PLINQ query that runs a maximum of three concurrent tasks:

Dim processes = Process.GetProcesses.

AsParallel.WithDegreeOfParallelism(3)

Forcing Parallelism in Every Query

Not all code can benefit from parallelism and PLINQ. The technology is intelligent enough to determine whether a query can benefit from PLINQ according to its shape. The shape of a query consists of the operators it uses and the algorithms or delegates that are involved. PLINQ analyzes the shape and determines where to apply a parallel algorithm. You can force a query to be completely parallelized, regardless of its shape, by invoking the WithExecutionMode extension method, which receives an argument of type ParallelExecutionMode, an enumeration exposing two self-explanatory members: ForceParallelism and Default. The following code demonstrates how you can force a query to be completely parallelized:

Dim processes = Process.GetProcesses.

AsParallel.WithExecutionMode( _

ParallelExecutionMode.ForceParallelism)

.NET 4.5 Improvements

Due to some internal implementation details, in the first version of PLINQ in .NET 4.0 the runtime determined that some parallel queries would run with better performance if executed sequentially. This was the case for queries involving some particular operators such as OrderBy and Take. In .NET 4.5, many improvements have been made to Parallel LINQ, and most of these issues have been solved so that most queries can run in parallel.

Merge Options

PLINQ automatically partitions query sources so that it can use multiple threads that can work on each part concurrently. You can control how parts are handled by invoking the WithMergeOptions method that receives an argument of type ParallelMergeOptions. Such enumeration provides the following specifications:

• NotBuffered—Returns elements composing the result as soon as they are available

• FullyBuffered—Returns the complete result, meaning that query operations are buffered until every one has been completed

• AutoBuffered—Lets the system choose the size of the output buffers for that particular situation

You invoke WithMergeOptions as follows:

Dim processes = Process.GetProcesses.

AsParallel.WithMergeOptions( _

ParallelMergeOptions.FullyBuffered)

With the exception of the ForAll method that is always NotBuffered and OrderBy that is always FullyBuffered, other extension methods/operators can support all merge options. The full list of operators is described in the following page of the MSDN Library: http://msdn.microsoft.com/en-us/library/dd547137(v=vs.110).aspx.
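The control methods can be chained on the same query. The following minimal sketch (MergeOptionsDemo and CountOddsNotBuffered are illustrative names) limits the query to two concurrent tasks and asks for results to be handed to the consuming loop without buffering.

```vb
Imports System
Imports System.Linq

Module MergeOptionsDemo
    'Counts odd numbers with a query that streams results without buffering
    Function CountOddsNotBuffered() As Integer
        Dim query = Enumerable.Range(0, 100).AsParallel().
            WithDegreeOfParallelism(2).
            WithMergeOptions(ParallelMergeOptions.NotBuffered).
            Where(Function(n) n Mod 2 <> 0)

        Dim seen = 0
        'Each element becomes available here as soon as a worker produces it
        For Each n In query
            seen += 1
        Next
        Return seen
    End Function

    Sub Main()
        Console.WriteLine(CountOddsNotBuffered())
    End Sub
End Module
```

NotBuffered minimizes the delay before the first result arrives, usually at the price of lower overall throughput; FullyBuffered makes the opposite trade-off.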

Canceling PLINQ Queries

If you need to provide a way for canceling a PLINQ query, you can invoke the WithCancellation method. You first need to implement a method to be invoked when you need to cancel the query. The method receives a CancellationTokenSource argument (which sends notices that a query must be canceled) and can be implemented as follows:

Dim cs As New CancellationTokenSource

Private Sub DoCancel(ByVal cs As CancellationTokenSource)

'Ensures that query is cancelled when executing

Thread.Sleep(500)

cs.Cancel()

End Sub

When you have a method of this kind, you need to start a new task by pointing to this method as follows:

Tasks.Task.Factory.StartNew(Sub()

DoCancel(cs)

End Sub)

When a PLINQ query is canceled, an OperationCanceledException is thrown so that you can handle cancellation, as demonstrated in the following code snippet:

Private Sub CancellationDemo()

Try

Dim processes = Process.GetProcesses.

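AsParallel.WithCancellation(cs.Token)

'The query runs (and can be canceled) only when it is enumerated

For Each p In processes

Console.WriteLine(p.ProcessName)

Next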

Catch ex As OperationCanceledException

Console.WriteLine(ex.Message)

Catch ex As Exception

End Try

End Sub

To cancel a query, invoke the DoCancel method.
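Putting the pieces together, the following is a minimal, self-contained sketch of a cancelable query. The QueryCancellationDemo module and the RunAndCancel function are illustrative names, and the delay and spin values are arbitrary; the query is made deliberately slow so that the cancellation request arrives while it is still running.

```vb
Imports System
Imports System.Linq
Imports System.Threading
Imports System.Threading.Tasks

Module QueryCancellationDemo
    'Returns True if the query was canceled before it could finish
    Function RunAndCancel() As Boolean
        Using cts As New CancellationTokenSource
            'Requests cancellation from another task shortly after the start
            Dim canceller = Task.Factory.StartNew(
                Sub()
                    Thread.Sleep(100)
                    cts.Cancel()
                End Sub)
            Try
                Dim query = Enumerable.Range(0, Integer.MaxValue).AsParallel().
                    WithCancellation(cts.Token).
                    Where(Function(n)
                              'Simulates intensive work
                              Thread.SpinWait(100000)
                              Return n Mod 2 <> 0
                          End Function)

                'Invoking Count starts the query
                Dim total = query.Count()
                Return False
            Catch ex As OperationCanceledException
                Return True
            Finally
                'Makes sure the canceling task is done before disposing
                canceller.Wait()
            End Try
        End Using
    End Function

    Sub Main()
        Console.WriteLine(RunAndCancel())
    End Sub
End Module
```

PLINQ checks the token periodically rather than after every element, so a short delay between the call to Cancel and the exception is normal.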

Handling Exceptions

In single-core scenarios, LINQ queries are executed sequentially. This means that if your code encounters an exception, the exception occurs at a specific point, and the code can handle it normally. In multicore scenarios, multiple exceptions could occur because more threads are running on multiple processors concurrently. Because of this, PLINQ uses the AggregateException class, which is specific to exceptions within parallelism and has already been discussed in this chapter. With PLINQ, you might find two members useful: Flatten is a method that collapses any nested AggregateException instances into a single AggregateException, and InnerExceptions is a property storing a collection of Exception objects, each representing one of the exceptions that occurred.

Disable “Just My Code”

As you did for Task Parallel Library samples shown previously, to correctly catch an AggregateException you need to disable the Just My Code debugging in the debug options; otherwise, the code execution will break on the query and will not enable you to investigate the exception.

Consider the following code, in which an array of strings stores some null values and causes NullReferenceException at runtime:

Private Sub HandlingExceptions()

Dim strings() As String = New String() {"Test",

Nothing,

Nothing,

"Test"}

'Just add "AsParallel"

Try

Dim query = strings.AsParallel.

Where(Function(s) s.StartsWith("T")).

Select(Function(s) s)

For Each item In query

Console.WriteLine(item)

Next

Catch ex As AggregateException

For Each problem In ex.InnerExceptions

Console.WriteLine(problem.ToString)

Next

Catch ex As Exception

Finally

Console.ReadLine()

End Try

End Sub

In a single-core scenario, a single NullReferenceException is caught and handled the first time the code encounters the error. In multicore scenarios, an AggregateException could happen due to multiple threads running on multiple processors; therefore, you cannot control where and how many exceptions can be thrown. Consequently, the AggregateException stores information on such exceptions. The previous code shows how you can iterate the InnerExceptions property.
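If you also want to use the Flatten method mentioned earlier, the following minimal sketch shows one way to do it. The AggregateDemo module and the CollectFailureTypes function are illustrative names; the function returns the distinct type names of the exceptions raised by a query similar to the previous one.

```vb
Imports System
Imports System.Linq

Module AggregateDemo
    'Runs a failing parallel query and returns the names
    'of the exception types that were caught
    Function CollectFailureTypes() As String()
        Dim strings() As String = {"Test", Nothing, Nothing, "Test"}
        Try
            'ToArray forces the query to run
            Dim result = strings.AsParallel().
                Where(Function(s) s.StartsWith("T")).
                ToArray()
            Return New String() {}
        Catch ex As AggregateException
            'Flatten unwraps any nested AggregateException instances
            Return ex.Flatten().InnerExceptions.
                Select(Function(e) e.GetType().Name).
                Distinct().
                ToArray()
        End Try
    End Function

    Sub Main()
        For Each name In CollectFailureTypes()
            Console.WriteLine(name)
        Next
    End Sub
End Module
```

How many inner exceptions you get can vary between runs, which is why the sketch reports distinct type names rather than a count.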

Summary

Parallel computing enables you to use multicore architectures for scaling operation execution across all available processors on the machine. In this chapter, you learned how parallel computing in .NET Framework 4.5 relies on the concept of task; for this, you learned how to create and run tasks via the System.Threading.Tasks.Task class to generate units of work that run in parallel. You also learned how to handle concurrent exceptions and request task cancellation. Another important topic in parallel computing is loops. Here you learned how the Parallel.For and Parallel.ForEach loops enable multithreaded iterations that are scaled across all available processors. Next, you took a tour of the concurrent collections in .NET Framework 4.5, a set of thread-safe collections you can use to share information across tasks. Finally, you got information about a specific LINQ implementation known as Parallel LINQ, which enables you to scale query execution over multiple threads and processors to improve the performance of your code. Specifically, you learned how to invoke the AsParallel method for creating parallelized queries and how to compare them to classic LINQ queries. You also saw how to control queries by forcing parallelism, implementing cancellation, setting the maximum number of tasks, and handling the AggregateException exception.