Chapter 2. The Four MPLS Builders

Depending on the function of a Multiprotocol Label Switching (MPLS) label, it can receive many names: transport label, service label, VPN label, entropy label, and so on. This chapter focuses on the original and primary function of MPLS labels: the transport of data packets through a labeled tunnel.

Chapter 1 describes how MPLS tunnels are provisioned by using a static label-mapping technique. However, this approach is limited in terms of scalability, operability, failure detection, and redundancy. There is fortunately a classic solution at hand: signaling the tunnels with protocols that create MPLS paths in a dynamic manner. What protocols? There are actually a few of them, each with their pros and cons.

This chapter covers the following alternatives:

- Two pure MPLS signaling protocols: Label Distribution Protocol (LDP) and Resource Reservation Protocol with Traffic Engineering (RSVP-TE)

- The modern MPLS extensions of classic IP routing protocols: Border Gateway Protocol (BGP), Intermediate System–to–Intermediate System (IS-IS), and Open Shortest Path First (OSPF)

BGP has had MPLS extensions since the early times, and they keep evolving. As for IS-IS and OSPF, their MPLS extensions have come more recently with a technology called SPRING or Segment Routing. SPRING, which was still in IETF draft state at the time of the publication of this book, also has extensions for BGP.

The four MPLS Builders are therefore: LDP, RSVP-TE, BGP, and the Interior Gateway Protocol (IGP). LDP was already proposed in the 1990s, so why are there so many other MPLS signaling protocols? First, LDP did not cover the Traffic Engineering use case, so RSVP-TE was soon proposed for that purpose. And because neither LDP nor RSVP-TE nicely solved the interdomain use case, new BGP extensions were defined to achieve it. Some scenarios are a good fit for LDP, or for RSVP-TE, or for BGP, or for a combination of them. As for SPRING, most of its use cases can be covered by a combination of other protocols (LDP, RSVP-TE, and BGP), but it is a recent technology whose applications are diversifying, it brings deterministic labels to the table, and it is very interesting to see how you can use the IGP to build MPLS LSPs.

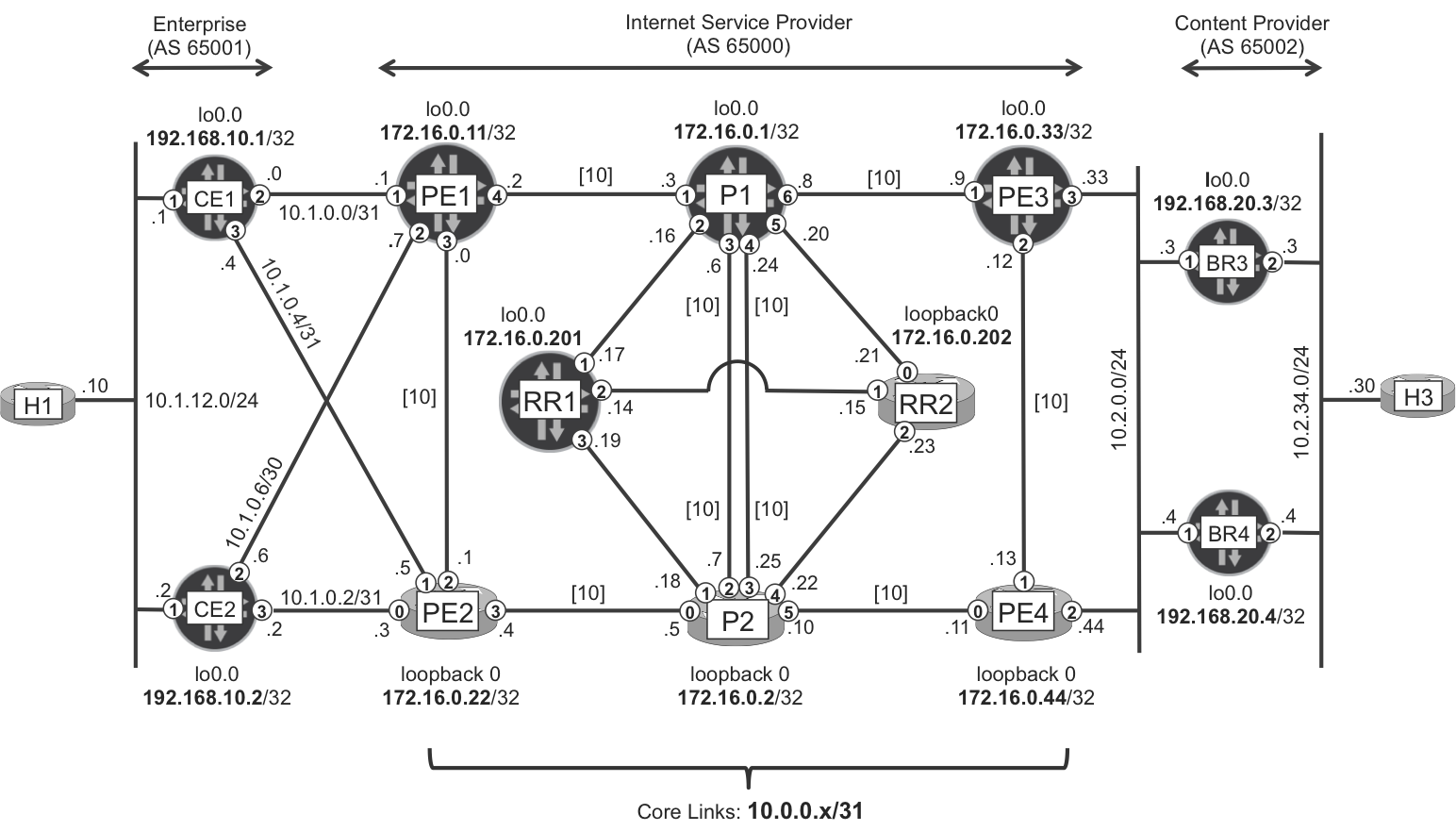

Let’s begin with LDP, probably the most classic and widespread of them all. The baseline topology is borrowed from Chapter 1. For convenience, it is also displayed here in Figure 2-1.

Figure 2-1. Basic MPLS topology

Note

In this chapter, all the IGP core link IS-IS metrics are set to the default value (10). This makes internal load-balancing scenarios more interesting.

LDP

Despite its simple appearance, LDP (RFC 5036) is not that easy to understand. Indeed, LDP can signal three types of transport Label-Switched Paths (LSPs): multipoint-to-point (MP2P), point-to-multipoint (P2MP), and multipoint-to-multipoint (MP2MP). Unlike its fellow RSVP-TE, LDP does not signal the LSP type that happens to be the most intuitive of them all: point-to-point (P2P) LSPs. This chapter focuses on unicast traffic, which in the context of LDP is transported in MP2P LSPs. These go from any ingress provider edge (PE) to a given egress PE. Last but not least, LDP does not implement Traffic Engineering.

So, why is LDP such a popular MPLS transport protocol? Several characteristics make it highly scalable and operationally attractive. First, label signaling takes place on TCP connections, achieving reliable delivery with minimal refresh. Second, MP2P LSPs involve a significant state reduction. And finally, when it comes to configuring transport LSPs, LDP is plug-and-play. You just enable LDP on the core interfaces, and the magic is done.

Example 2-1. LDP configuration at PE1 (Junos)

protocols {

ldp {

track-igp-metric;

interface ge-0/0/3.0;

interface ge-0/0/4.0;

}

}

The track-igp-metric knob couples LDP to the IGP and it is a best practice for loop avoidance. Remember that throughout this entire book, it is assumed that all the MPLS interfaces are declared under [edit protocols mpls] and have family mpls enabled, as in Chapter 1.

Following is a basic LDP configuration in IOS XR.

Example 2-2. LDP configuration at PE2 (IOS XR)

mpls ldp

 interface GigabitEthernet0/0/0/3

 interface GigabitEthernet0/0/0/4

Note

In IOS XR, MPLS often relies on LDP being globally enabled. If the network runs a different MPLS label signaling protocol, you don’t need to configure any interfaces under mpls ldp, but the global mpls ldp statement is typically still needed.

LDP Discovery and LDP Sessions

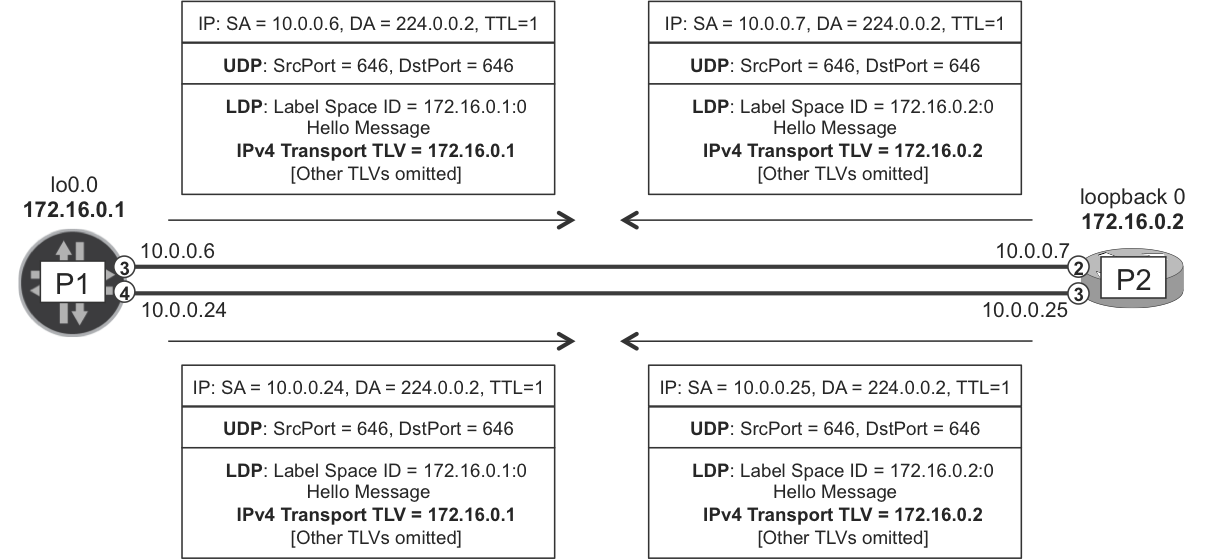

As soon as LDP is enabled on an interface, a process called basic discovery begins: the LSR starts to send and receive LDP hello messages on each of the configured interfaces. Let’s focus on the message exchange between P1 and P2, which is illustrated in Figure 2-2.

Figure 2-2. LDP hello messages

In the basic discovery process, LDP hello messages are encapsulated as follows:

- First, in a UDP header, with source and destination port 646

- Then, in an IPv4 header with TTL=1 and destination address 224.0.0.2, the all-routers link-local multicast address

These packets are not routable, and their purpose is to establish adjacencies between directly connected neighbors only. Note that there is another method called extended discovery, also known as targeted LDP, whereby the LDP hellos are unicast and multihop (TTL>1). This is described later in this chapter.
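To make the hello contents concrete, the following Python sketch encodes a link Hello PDU using the RFC 5036 layout. The PDU has four parts:

- a PDU header carrying the LDP identifier (<LSR ID>:<label space ID>)
- a Hello message
- a Common Hello Parameters TLV
- an IPv4 Transport Address TLV, which carries the TCP session endpoint discussed shortly

This is a minimal illustration, not an LDP implementation. The 15-second hold time (the value P2 reports in Example 2-4) and the message ID are illustrative choices. The sketch does not send the PDU, which would go over UDP port 646 to 224.0.0.2 with TTL=1.

```python
import socket
import struct

# Type codes and version from RFC 5036
LDP_VERSION = 1
HELLO_MSG_TYPE = 0x0100
COMMON_HELLO_PARAMS_TLV = 0x0400
IPV4_TRANSPORT_ADDR_TLV = 0x0401


def encode_tlv(tlv_type: int, value: bytes) -> bytes:
    """Encode a TLV with the U and F bits cleared: type, length, value."""
    return struct.pack("!HH", tlv_type, len(value)) + value


def ldp_hello_pdu(lsr_id: str, label_space: int, transport_addr: str,
                  hold_time: int = 15, msg_id: int = 1) -> bytes:
    """Build a link Hello PDU (T=0, R=0) as used by basic discovery."""
    # Common Hello Parameters: 2-octet hold time, then T/R bits + reserved (all zero)
    common = encode_tlv(COMMON_HELLO_PARAMS_TLV, struct.pack("!HH", hold_time, 0))
    # IPv4 Transport Address: the endpoint of the LDP-over-TCP session
    transport = encode_tlv(IPV4_TRANSPORT_ADDR_TLV, socket.inet_aton(transport_addr))
    # Message Length covers the Message ID plus all parameters
    body = struct.pack("!I", msg_id) + common + transport
    message = struct.pack("!HH", HELLO_MSG_TYPE, len(body)) + body
    # LDP Identifier = 4-octet LSR ID + 2-octet label space ID
    ldp_id = socket.inet_aton(lsr_id) + struct.pack("!H", label_space)
    # PDU Length excludes the Version and PDU Length fields themselves
    pdu_length = len(ldp_id) + len(message)
    return struct.pack("!HH", LDP_VERSION, pdu_length) + ldp_id + message


if __name__ == "__main__":
    # P1: LSR ID 172.16.0.1, platform label space 0, transport address = loopback
    pdu = ldp_hello_pdu("172.16.0.1", 0, "172.16.0.1")
    print(len(pdu), pdu.hex())
```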

The basic discovery process builds LDP hello adjacencies. There is one per LDP-enabled interface, so P1 and P2 establish two hello adjacencies.

Example 2-3. LDP hello adjacencies at P1 (Junos)

juniper@P1> show ldp neighbor

Address            Interface          Label space ID         Hold time

10.0.0.2           ge-2/0/1.0         172.16.0.11:0            13

10.0.0.7           ge-2/0/3.0         172.16.0.2:0             12

10.0.0.25          ge-2/0/4.0         172.16.0.2:0             12

10.0.0.9           ge-2/0/6.0         172.16.0.33:0            14

Example 2-4. LDP hello adjacencies at P2 (IOS XR)

RP/0/0/CPU0:P2#show mpls ldp discovery brief

Local LDP Identifier: 172.16.0.2:0

Discovery Source   VRF Name         Peer LDP Id      Holdtime  Session

-----------------  ---------------  ---------------  --------  -------

Gi0/0/0/0          default          172.16.0.22:0    15        Y

Gi0/0/0/2          default          172.16.0.1:0     15        Y

Gi0/0/0/3          default          172.16.0.1:0     15        Y

Gi0/0/0/5          default          172.16.0.44:0    15        Y

The LDP hello messages originated by P1 have two key pieces of information:

- The label space 172.16.0.1:0, whose format is <LSR ID>:<label space ID>. The <LSR ID> is simply P1’s router ID.

- The transport address 172.16.0.1, which is P1’s loopback address.

But what do the label space and the transport address stand for?

Let’s begin with the transport address. LDP discovery triggers the establishment of one LDP-over-TCP session between each pair of neighboring LSRs. The endpoints of these multihop TCP sessions are precisely the transport addresses encoded in the UDP-based hellos, as shown in Example 2-5.

Example 2-5. LDP-over-TCP sessions at P1 (Junos)

juniper@P1> show system connections | match "proto|646"

Proto Recv-Q Send-Q  Local Address          Foreign Address        (state)

tcp4       0      0  172.16.0.1.646         172.16.0.2.51596       ESTABLISHED

tcp4       0      0  172.16.0.1.646         172.16.0.33.50368      ESTABLISHED

tcp4       0      0  172.16.0.1.646         172.16.0.11.49804      ESTABLISHED

tcp4       0      0  *.646                  *.*                    LISTEN

udp4       0      0  *.646                  *.*

It is important to configure the router ID to the same value as a reachable loopback address; otherwise, the LDP session cannot be established.

Note

Even though P1 and P2 have more than one LDP hello adjacency, they only establish one LDP session, from loopback to loopback.
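Which end of the session opens the TCP connection? RFC 5036 resolves this by comparing the two transport addresses as unsigned integers. The LSR with the higher address plays the active role and connects to port 646 of its peer.

The sketch below applies that rule to P1. The helper names are hypothetical. All three peers have higher transport addresses than P1, so P1 is passive toward all of them. This matches Example 2-5, where P1's side of every session uses port 646 and the peers use ephemeral ports.

```python
import ipaddress


def plays_active_role(local_addr: str, peer_addr: str) -> bool:
    """RFC 5036: compare transport addresses as unsigned integers; higher is active."""
    return int(ipaddress.IPv4Address(local_addr)) > int(ipaddress.IPv4Address(peer_addr))


def session_roles(local_addr: str, peer_addrs: list[str]) -> dict[str, str]:
    """Role the local LSR plays toward each peer during TCP session setup."""
    return {
        peer: "active" if plays_active_role(local_addr, peer) else "passive"
        for peer in peer_addrs
    }


if __name__ == "__main__":
    # P1 and the transport addresses of its LDP peers (Examples 2-5 and 2-6)
    roles = session_roles("172.16.0.1", ["172.16.0.2", "172.16.0.11", "172.16.0.33"])
    print(roles)  # every value is 'passive': the peers open the connections
```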

After they establish the TCP connection via the classic three-way handshake, P1 and P2 exchange LDP initialization messages and finally the label information. Let’s have a look at the LDP sessions.

Example 2-6. LDP sessions at P1 (Junos)

juniper@P1> show ldp session

Address            State          Connection     Hold time    Adv. Mode

172.16.0.2         Operational    Open           24           DU

172.16.0.11        Operational    Open           21           DU

172.16.0.33        Operational    Open           20           DU

Example 2-7. LDP sessions at P2 (IOS XR)

RP/0/0/CPU0:P2#show mpls ldp neighbor brief

Peer               GR  NSR  Up Time    Discovery  Address  IPv4 Label

-----------------  --  ---  ---------  ---------  -------  ----------

172.16.0.22:0      N   N    1d04h      1          6        25

172.16.0.44:0      N   N    1d04h      1          5        23

172.16.0.1:0       N   N    00:02:02   2          6        10

The terminology becomes a bit confusing across vendors, so we’ve summarized the concepts. This book uses the RFC terms.

| RFC 5036 | LDP hello adjacencies (UDP) | LDP sessions (TCP) |
|---|---|---|
| Junos | show ldp neighbor | show ldp session |
| IOS XR | show mpls ldp discovery | show mpls ldp neighbor |

There are two types of heartbeat mechanisms in LDP:

- LDP-over-UDP hello messages to maintain LDP hello adjacencies

- LDP-over-TCP keepalives to maintain LDP sessions (TCP already provides a keepalive mechanism, but LDP keepalives are more frequent and hence more robust)

LDP Label Mapping

As soon as two neighbors establish an LDP session, they begin to exchange label mapping messages that associate IPv4 prefixes with MPLS labels. These label mappings make up a Label Information Base (LIB).

IPv4 prefixes are one example of Forwarding Equivalence Class (FEC) elements. According to RFC 5036, “The FEC associated with an LSP specifies which packets are ‘mapped’ to that LSP.”

Translated to this chapter’s example topology, PE1 needs an LSP terminated at PE3 in order to send packets beyond PE3. And the FEC associated with that LSP is represented by 172.16.0.33/32, PE3’s loopback address. Although it is not the most precise expression, you could say that 172.16.0.33/32 is a FEC. The ingress PE (in this example, PE1) does not necessarily tunnel traffic destined to the FEC itself. Most typically, the packet matches a route at PE1 whose BGP next hop is 172.16.0.33. This is the association between the packet and the FEC. Good old MPLS logic!

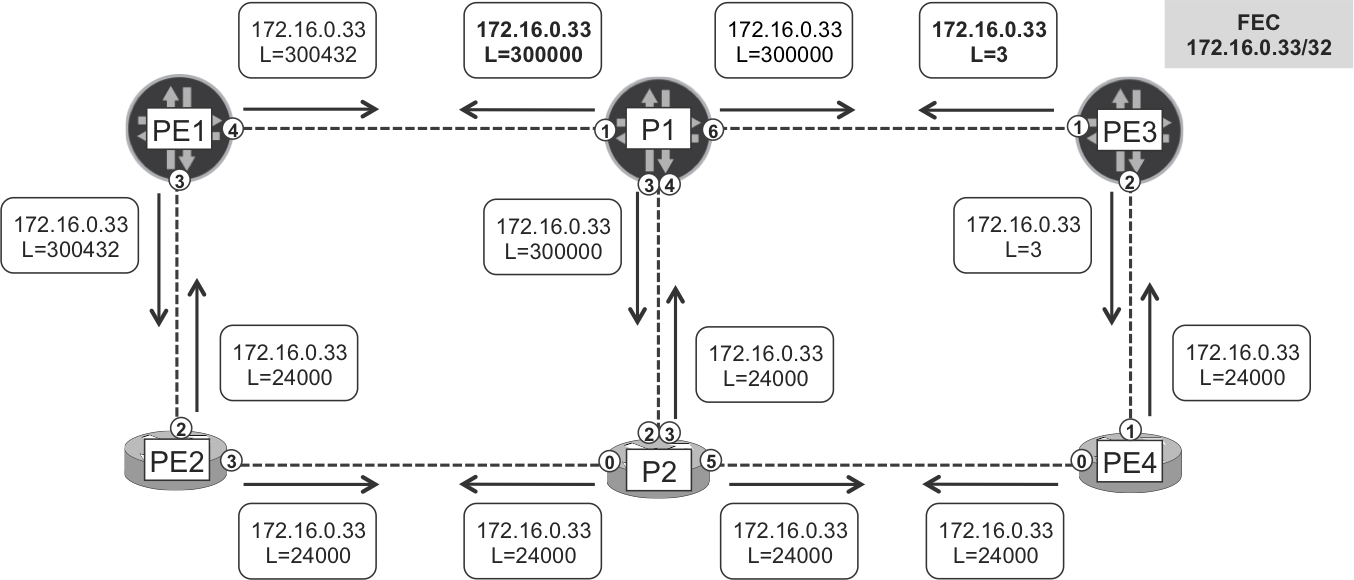

Probably the best way to understand LDP is to see it at work. Let’s focus on one IPv4 prefix or FEC: the loopback address of PE3 (172.16.0.33/32).

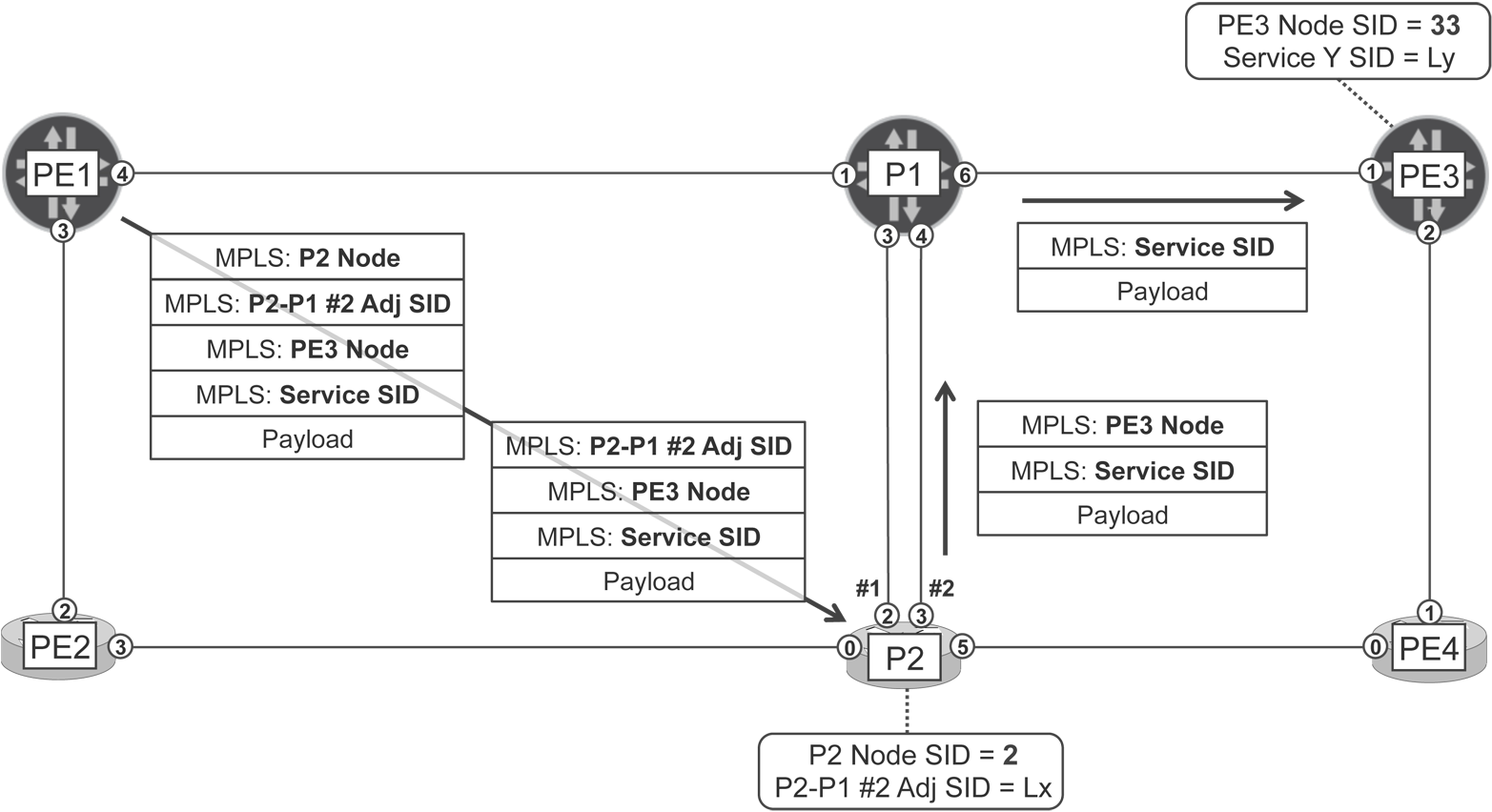

In Figure 2-3, you can see that all of the core routers in the network advertise a label mapping for this prefix. This is a bit surprising because PE3 receives from its neighbors label mappings for its own loopback address! As its name implies, LDP is just that, a label distribution protocol, not a routing protocol. It simply distributes label mappings and does not care about whether these announcements make topological sense.

Looking carefully at Figure 2-3, you can see that each router advertises the same label mapping on every LDP session. For example, P1 advertises the mapping [FEC element 172.16.0.33/32, label 300000] to all its neighbors. This is a local label binding at P1. Indeed, P1 locally binds the label 300000 to 172.16.0.33/32, and it’s telling its LDP peers: if you want me to tunnel a packet toward PE3, send it to me with a topmost MPLS header containing label 300000.

Figure 2-3. LDP label mapping messages for 172.16.0.33

This assignment has only local significance and must be interpreted in the context of label space 172.16.0.1:0. How is the label space decoded? The first field is P1’s router ID, and the second field (zero) denotes a platform label space. What does this mean? Label lookup takes place in P1 regardless of the interface on which the MPLS packet arrives. If P1 receives a packet whose outer MPLS label is 300000, no matter the input interface, P1 places it on an LSP toward PE3. The mapping (172.16.0.33/32, 300000) has platform-wide significance within P1.

Note

Both Junos and IOS XR use a platform label space.

RFC 3031 also defines per-interface label spaces, wherein each input interface has its own LIB: an incoming MPLS packet’s label is interpreted in the context of the input interface. Although Junos and IOS XR do not implement per-interface label spaces, Chapter 21 covers a more generic concept: context-specific label spaces, defined in RFC 5331.

Back to Figure 2-3. Because MPLS labels have local significance, each router typically advertises a different label mapping for a given FEC. However, no rule requires the labels to be different. For example, PE2, P2, and PE4 happen to all be advertising the same label for 172.16.0.33/32. This is completely fine because each label belongs to a different platform (LSR) label space. It’s a simple coincidence.

Note

LDP label mappings are dynamic and may change upon route flap.

LDP signaling and MPLS forwarding in the Junos plane

Example 2-8 gives us a chance to look at a live demonstration; in this case, a loopback-to-loopback traceroute from CE1 to BR3 traversing the Junos plane (PE1, P1, PE3).

Example 2-8. Traceroute through the Junos LDP plane

juniper@CE1> traceroute 192.168.20.3 source 192.168.10.1

traceroute to 192.168.20.3 (192.168.20.3) from 192.168.10.1 [...]

1 PE1 (10.1.0.1) 7.962 ms 4.506 ms 5.145 ms

2 P1 (10.0.0.3) 16.347 ms 10.390 ms 10.131 ms

MPLS Label=300000 CoS=0 TTL=1 S=1

3 PE3 (10.0.0.9) 9.755 ms 7.490 ms 7.409 ms

4 BR3 (192.168.20.3) 8.266 ms 10.196 ms 6.466 ms

Let’s interpret the output step by step. As you saw in Chapter 1, PE1 has a BGP route toward BR3’s loopback, and the BGP next hop of this route is PE3. Then, PE1 resolves this BGP next hop by looking at the inet.3 auxiliary table, and this is how the Internet route (to BR3) gets a labeled forwarding next hop.

Tip

If an IPv4 BGP route does not have a BGP next hop in inet.3, Junos tries to find it in inet.0. You can disable this second lookup and make inet.3 the only resolution Routing Information Base (RIB) for IPv4 routes by using this command: set routing-options resolution rib inet.0 resolution-ribs inet.3

Let’s see the BGP next-hop resolution process in detail.

Example 2-9. MPLS forwarding at ingress PE1 (Junos)

juniper@PE1> show route 192.168.20.3 active-path detail

[...]

Protocol next hop: 172.16.0.33

juniper@PE1> show route table inet.3 172.16.0.33

inet.3: 9 destinations, 9 routes (9 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

172.16.0.33/32 *[LDP/9] 11:00:49, metric 20

> to 10.0.0.3 via ge-2/0/4.0, Push 300000

juniper@PE1> show route forwarding-table destination 192.168.20.3

Routing table: default.inet

Internet:

Destination Type Next hop Type Index NhRef Netif

192.168.20.3/32 user indr 1048574 3

10.0.0.3 Push 300000 593 2 ge-2/0/4.0

Note

This double table lookup takes place only at the control plane. Transit packets are processed according to the forwarding table, which already has the resolved forwarding next hop.
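The two-step resolution, including the inet.0 fallback mentioned in the Tip, can be modeled with a few dictionaries. This is a hypothetical Python sketch whose tables mimic Example 2-9. They are not Junos data structures, and real route selection involves much more, such as route preferences and multiple paths. The function returns the flattened forwarding next hop, which is what ends up in the forwarding table.

```python
import ipaddress
from typing import Optional

# Hypothetical tables mimicking Example 2-9 (illustrative, not Junos internals)
Entry = tuple[str, str, Optional[int]]  # (next hop, interface, label to push)
BGP_ROUTES: dict[str, str] = {"192.168.20.3/32": "172.16.0.33"}  # prefix -> protocol next hop
INET3: dict[str, Entry] = {"172.16.0.33/32": ("10.0.0.3", "ge-2/0/4.0", 300000)}
INET0: dict[str, Entry] = {"172.16.0.33/32": ("10.0.0.3", "ge-2/0/4.0", None)}


def longest_match(table: dict, address: str):
    """Return the value of the most specific prefix that contains address, or None."""
    addr = ipaddress.IPv4Address(address)
    matches = [(ipaddress.IPv4Network(p), v) for p, v in table.items()
               if addr in ipaddress.IPv4Network(p)]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


def resolve(destination: str, inet0_fallback: bool = True) -> Optional[Entry]:
    """Resolve a BGP route's protocol next hop into a forwarding next hop."""
    protocol_nh = longest_match(BGP_ROUTES, destination)
    if protocol_nh is None:
        return None
    entry = longest_match(INET3, protocol_nh)      # labeled resolution first
    if entry is None and inet0_fallback:
        entry = longest_match(INET0, protocol_nh)  # default second lookup
    return entry


if __name__ == "__main__":
    print(resolve("192.168.20.3"))  # ('10.0.0.3', 'ge-2/0/4.0', 300000)
```

Calling resolve() with inet0_fallback=False mimics the resolution-ribs inet.3 setting from the Tip. With that setting, a BGP route whose next hop is missing from inet.3 stays unresolved.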

PE1 pushes an MPLS header with label 300000 and sends the packet to the forwarding next hop P1. Why label 300000? The answer is in Figure 2-3 and in Example 2-10. This is the label that P1 maps to FEC 172.16.0.33/32.

Example 2-10. Label Mappings at ingress PE1 (Junos)

juniper@PE1> show ldp database | match "put|172.16.0.33"

Input label database, 172.16.0.11:0--172.16.0.1:0

300000 172.16.0.33/32

Output label database, 172.16.0.11:0--172.16.0.1:0

300432 172.16.0.33/32

Input label database, 172.16.0.11:0--172.16.0.22:0

24000 172.16.0.33/32

Output label database, 172.16.0.11:0--172.16.0.22:0

300432 172.16.0.33/32

This is an interesting command. It lets you know the label mappings that PE1 is learning (Input label database) and advertising (Output label database). This usage of the input and output keywords is sometimes a bit confusing:

- The Input label database contains MPLS labels that PE1 must add to a packet when sending it out to a neighbor. This is input for the control or signaling plane (LDP), but output for the forwarding (MPLS) plane.

- The Output label database contains MPLS labels that PE1 expects to receive from its neighbors. This is output for the control or signaling plane (LDP), but it’s input for the forwarding (MPLS) plane.

With this point clarified, let’s answer the most important question of this LDP section. If PE1 learns label 300000 from label space 172.16.0.1:0, and label 24000 from label space 172.16.0.22:0, why does it choose the first mapping to program the forwarding plane? The answer lies in the IGP. Although most of the example topologies in this book use IS-IS, OSPF is an equally valid option and (unless specified otherwise) every statement henceforth applies equally to IS-IS and OSPF.

The shortest path to go from PE1 to PE3 is via P1, so among the several label mappings available for 172.16.0.33/32, PE1 chooses the one advertised by P1. This tight coupling with the IGP is the conceptual key to understanding LDP.
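The selection rule fits in a few lines of Python. The input label database below is copied from Example 2-10.

The sketch makes one simplifying assumption: IGP next hops are given as the neighbors' LSR IDs. In a real router, LDP peers learn each other's interface addresses through LDP Address messages, and that is how an IGP next-hop address gets mapped to an LDP session. With several equal-cost next hops, more than one mapping survives.

```python
# PE1's Input label database for FEC 172.16.0.33/32 (Example 2-10):
# LDP peer (LSR ID) -> label that the peer advertised
INPUT_LABELS = {"172.16.0.1": 300000, "172.16.0.22": 24000}


def select_labels(input_labels: dict[str, int], igp_next_hops: list[str]) -> dict[str, int]:
    """Keep only the mappings learned from peers that are IGP next hops for the FEC.

    Simplification: IGP next hops are given as LDP peer LSR IDs. A real router
    maps next-hop interface addresses to peers using the addresses each peer
    advertises in LDP Address messages.
    """
    return {peer: input_labels[peer] for peer in igp_next_hops if peer in input_labels}


if __name__ == "__main__":
    # The IGP shortest path from PE1 to PE3 goes through P1 (172.16.0.1)
    print(select_labels(INPUT_LABELS, ["172.16.0.1"]))  # {'172.16.0.1': 300000}
```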

Let’s move on to P1, a pure LSR or P-router.

Example 2-11. LDP signaling and MPLS forwarding at P1 (Junos)

juniper@P1> show ldp database | match "put|172.16.0.33"

Input label database, 172.16.0.1:0--172.16.0.2:0

24000 172.16.0.33/32

Output label database, 172.16.0.1:0--172.16.0.2:0

300000 172.16.0.33/32

Input label database, 172.16.0.1:0--172.16.0.11:0

300432 172.16.0.33/32

Output label database, 172.16.0.1:0--172.16.0.11:0

300000 172.16.0.33/32

Input label database, 172.16.0.1:0--172.16.0.33:0

3 172.16.0.33/32

Output label database, 172.16.0.1:0--172.16.0.33:0

300000 172.16.0.33/32

juniper@P1> show route table mpls.0 label 300000

mpls.0: 12 destinations, 12 routes (12 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

300000 *[LDP/9] 00:47:20, metric 10

> to 10.0.0.9 via ge-2/0/6.0, Pop

300000(S=0) *[LDP/9] 00:47:20, metric 10

> to 10.0.0.9 via ge-2/0/6.0, Pop

juniper@P1> show route forwarding-table label 300000 table default

Routing table: default.mpls

MPLS:

Destination Type RtRef Next hop Index NhRef Netif

300000 user 0 10.0.0.9 Pop 605 2 ge-2/0/6.0

300000(S=0) user 0 10.0.0.9 Pop 614 2 ge-2/0/6.0

The IGP tells P1 that the next router in the path toward PE3 is PE3 itself. Naturally! And PE3 maps label 3 to FEC 172.16.0.33/32, its own loopback. This is a reserved label value called implicit null. It is not a real label, but a forwarding instruction that translates to “pop the label.” In other words, an MPLS packet never carries the label value 3, which is simply a signaling artifact. So, the IPv4 packet arrives at PE3 unlabeled, and PE3 has the BGP route to reach BR3. The traceroute trip finishes here. This behavior is called Penultimate Hop Popping (PHP).

There is no label swap operation in a two-hop LSP with PHP. For a longer LSP such as PE1-P1A-P1B-PE3, P1A would perform a label swap.
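The LFIB logic behind PHP and label swapping can be sketched in Python. P1's entry comes from Example 2-11: incoming label 300000, with outgoing label 3 learned from PE3.

The entries for P1A and P1B in the usage lines use hypothetical label values for the longer LSP mentioned above. Because the label space is per platform, the table is keyed by label only, with no input interface.

```python
IMPLICIT_NULL = 3  # signaling-only value; never carried in a real MPLS header


def lfib_action(out_label: int) -> str:
    """Translate the label advertised by the downstream LSR into an LFIB action."""
    if out_label == IMPLICIT_NULL:
        return "pop"  # Penultimate Hop Popping
    return f"swap {out_label}"


def switch(stack: list[int], lfib: dict[int, int]) -> list[int]:
    """Apply one LSR's LFIB to the top label (index 0) of the label stack.

    The LFIB is keyed by label only: a platform label space ignores the
    input interface.
    """
    top, rest = stack[0], stack[1:]
    out_label = lfib[top]
    if out_label == IMPLICIT_NULL:
        return rest  # pop: an empty result means the packet leaves unlabeled
    return [out_label] + rest  # swap


if __name__ == "__main__":
    # Two-hop LSP PE1-P1-PE3: P1 pops, PE3 receives a plain IPv4 packet
    print(switch([300000], {300000: IMPLICIT_NULL}))  # []
    # Longer LSP PE1-P1A-P1B-PE3 with hypothetical labels: P1A swaps, P1B pops
    at_p1b = switch([300100], {300100: 300200})
    print(at_p1b, switch(at_p1b, {300200: IMPLICIT_NULL}))  # [300200] []
```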

Note

You can disable PHP and configure explicit null (value 0 for IPv4, value 2 for IPv6), therefore making a real transport MPLS header arrive at the egress PE. One of the applications of explicit null is to keep independent class of service policies for IP and MPLS.

So, is this an LSP? Yes, it is a Label-Switched Path; there are MPLS labels after all. But it is signaled in a particular way. The Label Mapping messages depicted in Figure 2-3 allow any router in the network to send MPLS-labeled traffic toward PE3. This is a many-to-one or, in other words, an MP2P LSP.

Let’s finish with a useful toolset described in RFC 4379: MPLS ping and traceroute. These tools don’t require any specific configuration in Junos and they inject UDP-over-IPv4 data packets in an LSP. In that sense, they are very useful to test an LSP’s forwarding plane. The destination IPv4 address of these packets is in the range 127/8, which is reserved for loopback use and is not routable. The appropriate MPLS labels are pushed in order to reach the destination PE, in this case 172.16.0.33. Following is an MPLS traceroute.

Example 2-12. MPLS LDP traceroute (Junos)

juniper@PE1> traceroute mpls ldp 172.16.0.33

Probe options: ttl 64, retries 3, wait 10, paths 16, exp 7 [...]

ttl Label Protocol Address Previous Hop Probe Status

1 300000 LDP 10.0.0.3 (null) Success

FEC-Stack-Sent: LDP

ttl Label Protocol Address Previous Hop Probe Status

2 3 LDP 10.0.0.9 10.0.0.3 Egress

FEC-Stack-Sent: LDP

Path 1 via ge-2/0/4.0 destination 127.0.0.64

LDP signaling and MPLS forwarding in the IOS XR plane

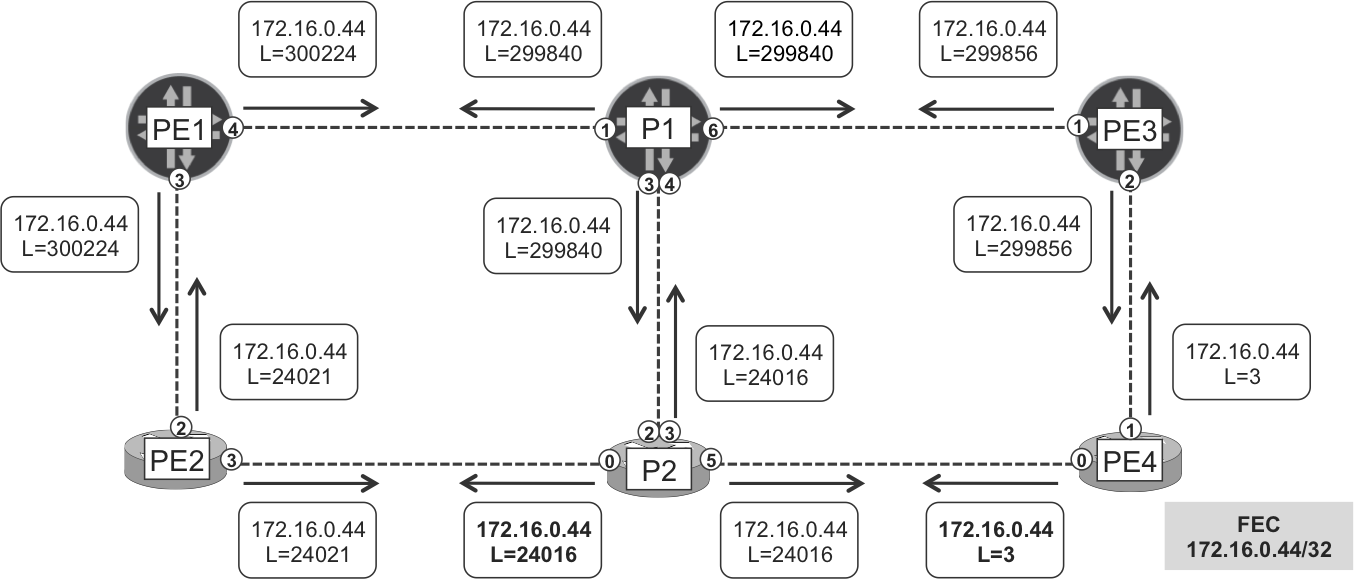

Figure 2-4 presents a similar example, this time focusing on the IOS XR plane (PE2, P2, PE4). The logic is practically identical.

Figure 2-4. LDP label mapping messages for 172.16.0.44

Following is an IPv4 (non-MPLS) traceroute from CE2 to BR4.

Example 2-13. Traceroute through the IOS XR Plane

juniper@CE2> traceroute 192.168.20.4 source 192.168.10.2

traceroute to 192.168.20.4 (192.168.20.4) from 192.168.10.2 [...]

1 PE2 (10.1.0.3) 4.358 ms 2.560 ms 5.822 ms

2 P2 (10.0.0.5) 9.627 ms 8.049 ms 9.261 ms

MPLS Label=24016 CoS=0 TTL=1 S=1

3 PE4 (10.0.0.11) 8.869 ms 7.833 ms 9.193 ms

4 BR4 (192.168.20.4) 10.627 ms 11.592 ms 11.593 ms

PE2 has a BGP route toward BR4’s loopback, and the BGP next hop of this route is PE4. As explained in Chapter 1, IOS XR does not have an auxiliary table such as inet.3 in Junos. The actual forwarding is determined by the Cisco Express Forwarding (CEF) entry for 172.16.0.44/32.

Example 2-14. MPLS forwarding at ingress PE2 (IOS XR)

RP/0/0/CPU0:PE2#show route 192.168.20.4

Routing entry for 192.168.20.4/32

Known via "bgp 65000", distance 200, metric 0

Tag 65002, type internal

Installed Nov 17 08:32:32.941 for 00:30:58

Routing Descriptor Blocks

172.16.0.44, from 172.16.0.201

Route metric is 0

No advertising protos.

RP/0/0/CPU0:PE2#show cef 172.16.0.44

172.16.0.44/32, version 91, internal [...]

local adjacency 10.0.0.5

Prefix Len 32, traffic index 0, precedence n/a, priority 3

via 10.0.0.5, GigabitEthernet0/0/0/3, 6 dependencies [...]

path-idx 0 NHID 0x0 [0xa0eb34a4 0x0]

next hop 10.0.0.5

local adjacency

local label 24021 labels imposed {24016}

PE2 pushes an MPLS header with label 24016 and sends the packet to the forwarding next hop P2. Why label 24016? As you can see in Figure 2-4 and in Example 2-15, this is the label that P2 maps to FEC 172.16.0.44/32.

Example 2-15. Label mappings at ingress PE2 (IOS XR)

RP/0/0/CPU0:PE2# show mpls ldp bindings 172.16.0.44/32

172.16.0.44/32, rev 85

Local binding: label: 24021

Remote bindings: (2 peers)

Peer Label

----------------- ---------

172.16.0.2:0 24016

172.16.0.11:0 300224

Now, let’s see the LDP signaling and the forwarding state on P2, the next hop LSR.

Example 2-16. LDP signaling and MPLS forwarding at P2 (IOS XR)

RP/0/0/CPU0:P2# show mpls ldp bindings 172.16.0.44/32

172.16.0.44/32, rev 36

Local binding: label: 24016

Remote bindings: (3 peers)

Peer Label

----------------- ---------

172.16.0.1:0 299840

172.16.0.22:0 24021

172.16.0.44:0 ImpNull

RP/0/0/CPU0:P2#show mpls forwarding labels 24016

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ --------- -------------- ---------- ------------ ----------

24016 Pop 172.16.0.44/32 Gi0/0/0/5 10.0.0.11 379266

Unlike Junos, IOS XR uses MPLS forwarding to reach internal IPv4 prefixes. So, a plain IPv4 traceroute from PE2 to PE4 shows the label, too (although it provides less information than MPLS traceroute).

Example 2-17. IPv4 Traceroute from PE2 to PE4 (IOS XR)

RP/0/0/CPU0:PE2#traceroute ipv4 172.16.0.44

[...]

 1  p2 (10.0.0.5) [MPLS: Label 24016 Exp 0] 9 msec  0 msec  0 msec

 2  pe4 (10.0.0.11) 0 msec  *  0 msec

LDP and Equal-Cost Multipath

According to the IGP metric, there is no single shortest path from PE1 to PE4. Instead, there are four possible equal-cost paths: PE1-PE2-P2-PE4, PE1-P1-PE3-PE4, and two times PE1-P1-P2-PE4 (there are two parallel links between P1 and P2). This condition is called Equal-Cost Multipath (ECMP). With ECMP, each next hop is distinct from a Layer 3 (L3) perspective.

Similarly, a popular technology called Link Aggregation Group (LAG), or Link Bundling, also results in several equal-cost paths. Some common LAG variants are Aggregated Ethernet (AE) and Aggregated SONET (AS). In this case, a single L3 interface can span several physical links that are bundled together. Finally, you can achieve complex equal-cost topologies by combining ECMP and LAG together (e.g., one of the P1-P2 connections could be a LAG).

As soon as there are equal-cost paths to a destination, a natural question arises: which path do the packets follow? Well, they are load balanced, according to a certain logic that is explained later in this section.

Let’s step back for a moment and revisit LDP. Because LDP is coupled to the IGP, it implements ECMP natively. You can check this easily by using MPLS traceroute from PE1 to PE4 (different 127/8 destination IPv4 addresses are automatically used to trigger load balancing); see Example 2-18.

Example 2-18. LDP ECMP (Junos)

juniper@PE1> traceroute mpls ldp 172.16.0.44/32

Probe options: ttl 64, retries 3, wait 10, paths 16, exp 7 [...]

ttl Label Protocol Address Previous Hop Probe Status

1 24021 LDP 10.0.0.1 (null) Success

FEC-Stack-Sent: LDP

ttl Label Protocol Address Previous Hop Probe Status

2 24016 Unknown 10.0.0.5 10.0.0.1 Success

FEC-Stack-Sent: LDP

ttl Label Protocol Address Previous Hop Probe Status

3 3 Unknown 10.0.0.11 10.0.0.5 Egress

FEC-Stack-Sent: LDP

Path 1 via ge-2/0/3.0 destination 127.0.0.64

ttl Label Protocol Address Previous Hop Probe Status

1 299840 LDP 10.0.0.3 (null) Success

FEC-Stack-Sent: LDP

ttl Label Protocol Address Previous Hop Probe Status

2 299856 LDP 10.0.0.9 10.0.0.3 Success

FEC-Stack-Sent: LDP

ttl Label Protocol Address Previous Hop Probe Status

3 3 LDP 10.0.0.13 10.0.0.9 Egress

FEC-Stack-Sent: LDP

Path 2 via ge-2/0/4.0 destination 127.0.1.64

ttl Label Protocol Address Previous Hop Probe Status

2 24016 LDP 10.0.0.25 10.0.0.3 Success

FEC-Stack-Sent: LDP

ttl Label Protocol Address Previous Hop Probe Status

3 3 Unknown 10.0.0.11 10.0.0.25 Egress

FEC-Stack-Sent: LDP

Path 3 via ge-2/0/4.0 destination 127.0.1.65

ttl Label Protocol Address Previous Hop Probe Status

2 24016 LDP 10.0.0.7 10.0.0.3 Success

FEC-Stack-Sent: LDP

ttl Label Protocol Address Previous Hop Probe Status

3 3 Unknown 10.0.0.11 10.0.0.7 Egress

FEC-Stack-Sent: LDP

Path 4 via ge-2/0/4.0 destination 127.0.1.69

Note

You must explicitly enable MPLS Operations, Administration, and Maintenance (OAM) in IOS XR by using the global configuration command mpls oam.

The LSP from PE1 to PE4 has four possible equal-cost paths. So not only are LDP LSPs MP2P, they are also ECMP-aware. This makes fault isolation more challenging in highly meshed LDP networks.

Here’s what happens from the point of view of a given LSR:

- When a packet arrives at a specific interface with a given MPLS label, is it easy to determine the interface out of which the LSR will switch the packet? If there is just one shortest path to the egress PE, it’s easy. But if there is ECMP toward the destination FEC, only advanced vendor-specific tools (beyond the scope of this book) can help to predict the result of the load-balancing decision.

- When the LSR switches a packet out of an interface with a given MPLS label, it is not easy to guess the previous history of that packet. Which ingress PE injected it into the MPLS core? At which interface did the packet arrive at the LSR? It is tricky to answer these questions because these LSPs are MP2P and the LDP label space is per platform.

Note that in the previous example, the TTL=1 entry for paths 3 and 4 is the same as in path 2; therefore, in the interest of brevity, Junos does not display it. All of these paths traverse P-routers at both planes: Junos (P1) and IOS XR (P2). With the software versions used in this book, MPLS OAM has an interoperability issue that causes the Protocol field to be displayed as Unknown. This issue is specific to MPLS OAM: as far as plain transport LDP is concerned, interoperability is perfect.

In practice, load balancing in LDP networks takes place on a hop-by-hop basis. PE1 has two equal-cost next hops to reach PE4: P1 and PE2. In turn, P1 has three equal-cost next hops to reach PE4: PE3 and twice P2. And so on.
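The hop-by-hop behavior follows directly from the IGP. The Python sketch below makes these assumptions:

- The core topology is reconstructed from the LDP outputs in this chapter (Examples 2-3, 2-4, 2-15, and 2-18), including the two parallel P1-P2 links.
- Every link uses the uniform metric of 10 from the Note at the beginning of the chapter.
- Routers and links not visible in those outputs are ignored.

The code computes, at each router, the links that lie on a shortest path toward PE4, and then counts the resulting end-to-end paths.

```python
import heapq
from collections import defaultdict

METRIC = 10  # all core IS-IS metrics use the default value in this chapter

# Core links reconstructed from this chapter's outputs; P1-P2 appears twice
LINKS = [
    ("PE1", "P1"), ("PE1", "PE2"), ("P1", "P2"), ("P1", "P2"),
    ("P1", "PE3"), ("PE2", "P2"), ("P2", "PE4"), ("PE3", "PE4"),
]


def build_graph(links: list[tuple[str, str]]) -> dict:
    """Adjacency list: node -> list of (neighbor, link index); links are bidirectional."""
    graph = defaultdict(list)
    for i, (a, b) in enumerate(links):
        graph[a].append((b, i))
        graph[b].append((a, i))
    return graph


def distances_to(graph: dict, dest: str) -> dict[str, int]:
    """Dijkstra run from the destination (valid because metrics are symmetric)."""
    dist = {dest: 0}
    heap = [(0, dest)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue  # stale heap entry
        for neighbor, _ in graph[node]:
            nd = d + METRIC
            if nd < dist.get(neighbor, float("inf")):
                dist[neighbor] = nd
                heapq.heappush(heap, (nd, neighbor))
    return dist


def ecmp_next_hops(graph: dict, dist: dict[str, int], node: str) -> list[tuple[str, int]]:
    """Links from node that lie on a shortest path toward the destination."""
    return [(nbr, i) for nbr, i in graph[node] if dist[nbr] + METRIC == dist[node]]


def count_paths(graph: dict, dist: dict[str, int], node: str) -> int:
    """Number of distinct equal-cost paths (parallel links count separately)."""
    if dist[node] == 0:
        return 1
    return sum(count_paths(graph, dist, nbr) for nbr, _ in ecmp_next_hops(graph, dist, node))


if __name__ == "__main__":
    graph = build_graph(LINKS)
    dist = distances_to(graph, "PE4")
    for node in ("PE1", "P1", "PE2"):
        print(node, [nbr for nbr, _ in ecmp_next_hops(graph, dist, node)])
    # PE1 has 2 next hops, P1 has 3 (P2 twice, PE3): 4 paths in total
    print("equal-cost paths PE1->PE4:", count_paths(graph, dist, "PE1"))
```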

Load-balancing hash algorithm

Load balancing is a complex topic that is intimately related to the hardware implementation of each platform. The good news is that Junos and IOS XR are both capable of per-flow load balancing of IP and MPLS traffic. Unlike stateful firewalls, LSRs perform packet-based (not flow-based) forwarding, so what is a flow in the context of an LSR?

A flow is a set of packets with common values in their headers. For example, all the packets of a TCP connection from a client to a server (or of a voice stream between two endpoints) have several fields in common: source and destination address, transport protocol, source and destination ports, and so on. To guarantee that all the packets of a given flow arrive at the destination in the correct order, they should all follow exactly the same path; indirectly, this means that they share the same MPLS label values, hop by hop.

Note

The set of fields that are selected from the packet headers depends on the platform and on the configuration. These fine-tuning details are beyond the scope of this book.

On the other hand, different flows should be evenly distributed across equal-cost next hops such as ECMP, LAG, and so on. Otherwise, some links would not be utilized and others would quickly saturate. This phenomenon is commonly called traffic polarization.

Let’s see how routers achieve per-flow load balancing. For every single packet, the router selects some header fields (plus a fixed local randomization seed) and applies to them a mathematical function called a hash, which is very sensitive to small variations of its input values. The hash result, taken modulo the number of equal-cost next hops, determines the actual forwarding next hop to which the packet is mapped. All the packets of a given flow produce the same hash value and are hence forwarded out to the same next hop.
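The following is a minimal Python sketch of per-flow hashing, under these assumptions:

- CRC32 from the standard library stands in for the vendor-specific hardware hash.
- The chosen fields (a five-tuple plus the label stack) are one plausible selection. As the earlier Note says, the real field set depends on platform and configuration.
- The default seed value is hypothetical.

The next hops are P1's three equal-cost choices toward PE4, and label 299840 is the one PE1 pushes toward P1 in Example 2-18.

```python
import zlib
from typing import NamedTuple


class FlowKey(NamedTuple):
    """Header fields shared by every packet of a flow (a classic five-tuple)."""
    src: str
    dst: str
    proto: int
    sport: int
    dport: int


def select_next_hop(flow: FlowKey, labels: tuple[int, ...],
                    next_hops: list[str], seed: int = 0x5EED) -> str:
    """Hash the selected fields plus a local seed, then take the result modulo N."""
    key = repr((seed, labels, tuple(flow))).encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]


if __name__ == "__main__":
    p1_next_hops = ["PE3", "P2-link1", "P2-link2"]
    flow = FlowKey("192.168.10.1", "192.168.20.4", 6, 49152, 80)
    # Every packet of the same flow maps to the same next hop
    print(select_next_hop(flow, (299840,), p1_next_hops))
    # Different flows (here, different source ports) spread across next hops
    spread: dict[str, int] = {}
    for sport in range(49152, 49252):
        nh = select_next_hop(flow._replace(sport=sport), (299840,), p1_next_hops)
        spread[nh] = spread.get(nh, 0) + 1
    print(spread)
```

Changing the seed reshuffles the flow-to-next-hop mapping. Using a different seed on each router keeps successive hops from making correlated choices.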

Basic per-flow load balancing is enabled by default in IOS XR, but it requires explicit configuration in Junos, which performs per-destination route hashing by default.

Example 2-19. Enabling per-flow load balancing in Junos

policy-options {

policy-statement PL-LB {

then load-balance per-packet;

}

}

routing-options {

forwarding-table export PL-LB;

}

Note

The per-packet syntax remains for historical reasons, but the way it is implemented in modern Junos versions is per-flow (hash based).

Let’s forget for a moment that the topology has two vendor-specific planes. This is a vendor-agnostic analysis of an IP flow from CE1 to BR4:

- The ingress PE1 receives plain IPv4 packets from CE1 and applies a hash to them. Because all the packets belong to the same flow, the result of the hash is the same and they are all forwarded to the same next hop: P1 or PE2. If the next hop is PE2, there is only one shortest path remaining and the load-balancing discussion stops here.

- Let’s suppose that the next hop is P1. So, P1 receives MPLS packets and applies a hash to them. This hash takes into account the MPLS label value(s), and it might also consider the inner (e.g., IPv4) headers. As a result, all the packets of this flow are sent out to one and only one of the available next hops: PE3, P2-link1, or P2-link2.

MPLS hash and Entropy Labels

Many LSRs in the industry are able to include MPLS packet payload fields (like IP addresses, TCP/UDP ports) into the load-balancing hash algorithm. But some low-end (or old) platforms from different vendors cannot do that. This can be an issue if the number of active FECs is low. For example, in a domestic Internet Service Provider (ISP) that sends all the upstream traffic up to only two big Internet gateways, most of the packets carry either label L1 (mapped to FEC gateway_1) or label L2 (mapped to FEC gateway_2). Two different label values are clearly not enough to spread traffic across multiple equal-cost paths.

To ensure that there is enough randomness to achieve good load balancing on these devices, RFC 6790 introduces the concept of Entropy Labels. These labels have a per-flow random value and do not have any forwarding significance. In other words, they are not mapped to any FEC. Their only goal is to ensure smooth load balancing along the available equal-cost paths. You can read more about Entropy Labels in Chapter 6.
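The effect can be sketched in Python. The sketch assumes the following:

- A transit LSR hashes only on the label stack, with CRC32 again standing in for the hardware hash.
- Two hypothetical transport labels represent gateway_1 and gateway_2.
- There are four equal-cost links.
- The subscriber flows are hypothetical.

Per RFC 6790, the ingress inserts the Entropy Label Indicator (reserved label 7) followed by the entropy label below the transport label. Here the entropy label value is simply derived from a hash of the flow fields, kept outside the reserved range 0 to 15.

```python
import zlib

ELI = 7                        # Entropy Label Indicator, reserved value (RFC 6790)
FIRST_UNRESERVED = 16          # labels 0-15 are reserved
MAX_LABEL = 2**20 - 1          # MPLS labels are 20-bit values
FEC_LABELS = (300016, 300032)  # hypothetical labels for gateway_1 and gateway_2


def entropy_label(flow: tuple) -> int:
    """Ingress: derive a per-flow label value that has no forwarding meaning."""
    span = MAX_LABEL - FIRST_UNRESERVED + 1
    return FIRST_UNRESERVED + zlib.crc32(repr(flow).encode()) % span


def transit_link(stack: tuple[int, ...], n_links: int) -> int:
    """Transit LSR that can only hash on the label stack, not on the payload."""
    return zlib.crc32(repr(stack).encode()) % n_links


def links_used(n_flows: int, n_links: int, with_entropy: bool) -> set[int]:
    """Which of the equal-cost links carry at least one flow."""
    used = set()
    for i in range(n_flows):
        # Hypothetical subscriber flows: (source address, protocol, sport, dport)
        flow = (f"10.1.{i // 256}.{i % 256}", 6, 1024 + i, 443)
        transport = FEC_LABELS[i % 2]
        if with_entropy:
            stack = (transport, ELI, entropy_label(flow))
        else:
            stack = (transport,)
        used.add(transit_link(stack, n_links))
    return used


if __name__ == "__main__":
    print("without entropy labels:", sorted(links_used(1000, 4, False)))
    print("with entropy labels:   ", sorted(links_used(1000, 4, True)))
```

Without entropy labels, the transit LSR sees only two distinct stacks, so at most two of the four links carry traffic.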

There is a similar technology called Flow-Aware Transport (FAT, RFC 6391), but it is specific to Layer 2 (L2) services. Chapter 6 also covers this in greater detail.

LDP Implementation Details

Although Junos and IOS XR have behaved similarly in the examples so far, their LDP implementation is actually quite different. Let’s follow the LDP advertising flow, starting at the egress PE.

Local FEC label binding/allocation

As shown earlier, PE3 and PE4 both advertise their own loopback mapped to the implicit null label. The following command shows all of the local (or egress) FECs that PE3 and PE4 advertise.

Example 2-20. Default label bindings for local routes (Junos, IOS XR)

juniper@PE3> show ldp database session 172.16.0.44 | match "put| 3"

Input label database, 172.16.0.33:0--172.16.0.44:0

3 10.0.0.10/31

3 10.0.0.12/31

3 10.2.0.0/24

3 10.255.0.0/16

3 172.16.0.44/32

Output label database, 172.16.0.33:0--172.16.0.44:0

3 172.16.0.33/32

The only local FEC that PE3 (Junos) advertises via LDP is its primary lo0.0 address. This is a default behavior that you can change by applying an egress-policy at the [edit protocols ldp] hierarchy. A common use case covered in Chapter 3 is the advertisement of nonprimary lo0.0 IP addresses. Additionally, LDP export policies provide granular per-neighbor FEC advertisement.

On the other hand, PE4 (IOS XR) advertises label mappings for all its directly connected routes by default. Most services, though, use LSPs whose endpoints are loopback addresses. For that reason, you can configure IOS XR to do the following:

-

Only advertise /32 FECs, by using the mpls ldp address-family ipv4 label local allocate for host-routes command.

-

Perform granular label binding and advertisement, with policies applied at mpls ldp address-family ipv4 label.

The benefit is less state to keep and exchange in the LIBs.

What about remote (nonlocal) FECs? By default, both Junos and IOS XR advertise label mappings for IGP routes, regardless of their mask. Again, the previously listed knobs make it possible to change this default behavior.

Label advertisement modes

Figure 2-3 and Figure 2-4 illustrate the Downstream Unsolicited (DU) LDP label advertisement (or distribution) mode that both Junos and IOS XR use by default. This elicits two questions:

-

Why downstream? When it advertises label mapping (172.16.0.33/32, 300000), P1 is telling its neighbors: if you want to use me as a downstream LSR to reach 172.16.0.33/32, send me the packets with this label. So, P1 becomes a potential downstream LSR for that FEC.

-

Why unsolicited? P1’s neighbors do not request any label mappings from P1; P1 sends the messages anyway.

Chapter 16 briefly mentions another label distribution method called Downstream on Demand (DoD), which is also used by RSVP-TE.

Label distribution control modes

There are two label distribution control modes: ordered and independent. Junos implements the ordered mode, whereas IOS XR implements the independent mode.

In the ordered mode, the following events take place in strict chronological order (see Figure 2-3):

-

PE3 advertises the label mapping (172.16.0.33/32, 3) to its neighbors.

-

P1 receives this label mapping from PE3, the egress LSR, and the shortest-path next hop from P1 to 172.16.0.33 is precisely the direct link P1→PE3.

-

P1 binds label 300000 to this FEC, installs the forwarding entry (300000→ pop to 10.0.0.9) in its Label Forwarding Information Base (LFIB) and advertises the Label Mapping (172.16.0.33/32, 300000) to its neighbors.

-

PE1 receives the label mapping from P1, and the shortest-path next hop from PE1 to 172.16.0.33 is precisely P1.

-

PE1 binds label 300432 to the FEC, installs the forwarding entry (300432→ swap 300000 to 10.0.0.3) in its LFIB and advertises the label mapping (172.16.0.33/32, 300432) to its neighbors.

In a nutshell, before binding a label to a remote FEC, Junos LSRs first need to receive a label mapping from the shortest-path downstream LSR en route to the FEC. Likewise, if it loses the downstream labeled state to the FEC (due to an LDP event or to a topology change), after some time the Junos LSR removes the label binding and sends a Label Withdraw message out to its neighbors.

The ordered mode guarantees strong consistency between the control and the forwarding planes; on the other hand, it can take longer to establish the LSPs.

How about independent mode? P2 (IOS XR) binds and announces label mappings regardless of the FEC’s downstream label state.

Suppose that P2 has not established any LDP session yet. Nevertheless, P2 binds labels to local and remote FECs. Then, suppose that the LDP session between P2 and PE2 (and only this session) comes up. At this point, P2 advertises all the label mappings to PE2. These mappings include (172.16.0.33/32, 24000) and (172.16.0.44/32, 24016). As you can see in Example 2-21, the resulting LFIB entries at P2 are marked as Unlabelled.

Example 2-21. Unlabeled bindings in independent mode (IOS XR)

RP/0/0/CPU0:P2#show mpls forwarding

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- --------------- ---------- ---------- ---------

[...]

24000 Unlabelled 172.16.0.33/32 Gi0/0/0/2 10.0.0.6 25110

Unlabelled 172.16.0.33/32 Gi0/0/0/3 10.0.0.24 2664

Unlabelled 172.16.0.33/32 Gi0/0/0/5 10.0.0.11 2664

24016 Unlabelled 172.16.0.44/32 Gi0/0/0/5 10.0.0.11 134

[...]

What if P2 receives a packet whose outer MPLS label is 24000? The Unlabelled instruction means pop all the labels and forward to the next hop(s) in the LFIB. This is different from the Pop instruction, which just pops the outer label.
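A small Python sketch of how the three LFIB instructions act on a label stack (top label first). The VPN label 16 is hypothetical; 24000 and 300000 come from Example 2-21 and Example 2-22. It shows why an Unlabelled entry is harmful for multilabel packets: the next hop receives the bare payload, with no VPN label left to identify the service.

```python
from typing import List, Optional


def apply_lfib_action(stack: List[int], action: str,
                      out_label: Optional[int] = None) -> List[int]:
    """Return the label stack (top of stack first) as sent to the next hop."""
    if action == "Unlabelled":
        return []                          # strip every label: only the payload remains
    if action == "Pop":
        return stack[1:]                   # remove only the outer label
    if action == "Swap":
        if out_label is None:
            raise ValueError("Swap needs an outgoing label")
        return [out_label] + stack[1:]     # replace the outer label, keep the rest
    raise ValueError(f"unknown LFIB action: {action}")


vpn_packet = [24000, 16]   # transport label from Example 2-21 + hypothetical VPN label
print(apply_lfib_action(vpn_packet, "Unlabelled"))      # []
print(apply_lfib_action(vpn_packet, "Pop"))             # [16]
print(apply_lfib_action(vpn_packet, "Swap", 300000))    # [300000, 16]
```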

The outcome depends on the traffic flows:

-

Internet traffic from CE2 to BR4 successfully reaches its destination.

-

Internet traffic from CE2 to BR3 is forwarded by P2 across three equal-cost next hops. Two of them point to P1, which has no route toward the destination and thus drops the packets.

-

VPN traffic with several labels in the stack might be mapped to the master routing instance (and likely discarded) by the next hop.

When all the LDP sessions come up and P2 receives all the label mapping messages from its neighbors, P2’s LFIB is programmed with the appropriate Swap (to a given label) and Pop instructions.

Example 2-22. Labeled bindings in independent mode (IOS XR)

RP/0/0/CPU0:P2#show mpls forwarding

Local Outgoing Prefix Outgoing Next Hop Bytes

Label Label or ID Interface Switched

------ ----------- --------------- ---------- ---------- ---------

[...]

24000 300000 172.16.0.33/32 Gi0/0/0/2 10.0.0.6 25110

300000 172.16.0.33/32 Gi0/0/0/3 10.0.0.24 2664

24000 172.16.0.33/32 Gi0/0/0/5 10.0.0.11 2664

24016 Pop 172.16.0.44/32 Gi0/0/0/5 10.0.0.11 134

[...]

The ordered and independent label distribution control modes are radically different and each has its pros and cons in terms of control and delay. The final state after LDP converges is the same, regardless of the implemented mode.
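The following Python sketch models both control modes on the PE1-P1-PE3 chain for FEC 172.16.0.33/32, reusing the labels from the ordered-mode walkthrough. It is a simplification under stated assumptions: message timing is abstracted into synchronous rounds, only one FEC and one shortest path are modeled, and running this chain in independent mode is hypothetical (in the lab, P1 and PE1 run Junos and therefore use ordered mode).

```python
from dataclasses import dataclass
from typing import Optional

IMPLICIT_NULL = 3


@dataclass
class Lsr:
    name: str
    local_label: int                   # label this LSR binds to FEC 172.16.0.33/32
    next_hop: Optional[str]            # shortest-path downstream LSR (None at the egress)
    advertised: bool = False
    out_label: Optional[int] = None    # label learned from the next hop

    def lfib_entry(self):
        if self.next_hop is None or not self.advertised:
            return None
        if self.out_label is None:
            return (self.local_label, "Unlabelled", self.next_hop)
        if self.out_label == IMPLICIT_NULL:
            return (self.local_label, "Pop", self.next_hop)
        return (self.local_label, f"Swap {self.out_label}", self.next_hop)


def build_chain(mode: str) -> dict:
    lsrs = {
        "PE3": Lsr("PE3", IMPLICIT_NULL, None),
        "P1": Lsr("P1", 300000, "PE3"),
        "PE1": Lsr("PE1", 300432, "P1"),
    }
    for lsr in lsrs.values():
        # The egress always advertises; in independent mode every LSR does, right away.
        lsr.advertised = lsr.next_hop is None or mode == "independent"
    return lsrs


def exchange_round(lsrs: dict) -> bool:
    """Deliver the mappings advertised at the start of the round; True if anything changed."""
    advertisers = {name for name, lsr in lsrs.items() if lsr.advertised}
    changed = False
    for lsr in lsrs.values():
        if lsr.next_hop in advertisers and lsr.out_label is None:
            lsr.out_label = lsrs[lsr.next_hop].local_label
            lsr.advertised = True      # ordered mode: binding and advertising happen only now
            changed = True
    return changed


def lfib(lsrs: dict) -> dict:
    return {n: l.lfib_entry() for n, l in lsrs.items() if l.lfib_entry() is not None}


def converge(mode: str) -> list:
    """Return the LFIB snapshots of the whole chain, one per round, until nothing changes."""
    lsrs = build_chain(mode)
    snapshots = [lfib(lsrs)]
    while exchange_round(lsrs):
        snapshots.append(lfib(lsrs))
    return snapshots


for mode in ("ordered", "independent"):
    for snapshot in converge(mode):
        print(mode, snapshot)
```

In ordered mode the LFIB fills in from the egress upstream, one round per hop, and never contains an Unlabelled entry; in independent mode every LSR programs an entry at once, initially Unlabelled. Both runs end with the same LFIB.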

Label retention modes

Both Junos and IOS XR implement Liberal Label Retention Mode (as opposed to Conservative) by default, meaning that the LSRs accept and store all the incoming label mapping messages. For example, PE1 receives label mappings for FEC 172.16.0.33/32 from both P1 and PE2. Even though the forwarding next hop is P1, PE1 decides to store both label mappings. Why? Potentially, a topology change in the future might turn PE2 into the next hop. Therefore, PE1 keeps all the label mappings, just in case.
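A minimal Python sketch of the two retention modes as seen from PE1. The label advertised by P1 (300000) comes from the text; the label from PE2 (24099) and the function names are hypothetical, and the sketch ignores how a conservative LSR would eventually obtain the new mapping.

```python
# Label mappings PE1 receives for FEC 172.16.0.33/32, keyed by advertising neighbor.
# P1's label comes from the text; PE2's value is hypothetical.
MAPPINGS = {"P1": 300000, "PE2": 24099}


def retain(mappings: dict, next_hop: str, mode: str) -> dict:
    """Liberal mode keeps every mapping; conservative keeps only the next hop's."""
    if mode == "liberal":
        return dict(mappings)
    if mode == "conservative":
        return {n: lbl for n, lbl in mappings.items() if n == next_hop}
    raise ValueError(f"unknown retention mode: {mode}")


def outgoing_label(lib: dict, new_next_hop: str):
    """Label usable right after the IGP moves the next hop (None: a new mapping is needed)."""
    return lib.get(new_next_hop)


for mode in ("liberal", "conservative"):
    lib = retain(MAPPINGS, next_hop="P1", mode=mode)
    print(mode, outgoing_label(lib, "PE2"))   # liberal 24099, then conservative None
```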

FEC aggregation

Looking back at Example 2-20, PE4 advertises five different local FECs to PE3, all of them mapped to the implicit null label. Let’s focus on two of them: 172.16.0.44/32 and 10.0.0.10/31. By default, PE3 advertises them with the same label to P1.

This default behavior in Junos is called FEC aggregation, and you can disable it by configuring set protocols ldp deaggregate. Here is the outcome:

Example 2-23. Default FEC aggregation (Junos)

juniper@PE3> show ldp database | match "put|172.16.0.44|10.0.0.10"

[...]

Output label database, 172.16.0.33:0--172.16.0.1:0

299856 10.0.0.10/31

299856 172.16.0.44/32

Input label database, 172.16.0.33:0--172.16.0.44:0

3 10.0.0.10/31

3 172.16.0.44/32

Example 2-24. FEC de-aggregation (Junos)

juniper@PE3> show ldp database | match "put|172.16.0.44|10.0.0.10"

[...]

Output label database, 172.16.0.33:0--172.16.0.1:0

299920 10.0.0.10/31

299856 172.16.0.44/32

Input label database, 172.16.0.33:0--172.16.0.44:0

3 10.0.0.10/31

3 172.16.0.44/32

Note

IOS XR does not perform FEC aggregation by default. In other words, it performs FEC de-aggregation by default.
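The following Python sketch illustrates the idea behind FEC aggregation at PE3, under an assumption about the exact criterion: FECs that share the same downstream binding (next hop and outgoing label) receive a single local label. The allocator and its label values are hypothetical, so they do not reproduce the values in Example 2-23 and Example 2-24.

```python
from itertools import count

# FECs that PE3 learns from PE4 (Example 2-20), with their downstream binding
# (next hop, outgoing label).
REMOTE_FECS = {
    "10.0.0.10/31": ("PE4", 3),
    "172.16.0.44/32": ("PE4", 3),
}


def bind_local_labels(fecs: dict, aggregate: bool, first_label: int = 299856) -> dict:
    """Return {prefix: local label}; the allocator step of 16 is an arbitrary assumption."""
    labels = count(first_label, 16)
    per_binding = {}
    local = {}
    for prefix, binding in fecs.items():
        if aggregate:
            # One label per distinct downstream binding, shared by all its FECs
            if binding not in per_binding:
                per_binding[binding] = next(labels)
            local[prefix] = per_binding[binding]
        else:
            # De-aggregation: every FEC gets its own label
            local[prefix] = next(labels)
    return local


print(bind_local_labels(REMOTE_FECS, aggregate=True))
print(bind_local_labels(REMOTE_FECS, aggregate=False))
```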

LDP Inter-Area

Looking back at Figure 2-1, let’s suppose the following:

-

PE1 and PE2 are L2-only IS-IS routers in Area 49.0001.

-

PE3 and PE4 are L1-only IS-IS routers in Area 49.0002.

-

P1 and P2 are IS-IS L1-L2 routers, present in both Areas.

In this scenario, PE3 and PE4 only have a default route to reach PE1 and PE2, and the same would happen with OSPF totally stubby areas. A default route is not specific enough for PE3 and PE4 to process the LDP label mappings for 172.16.0.11/32 and 172.16.0.22/32. This breaks MPLS forwarding.

RFC 5283 proposes a clean solution to this problem, but it was not implemented at the time of this writing. Is there a workaround? Yes: selective IS-IS L2-to-L1 route leaking, or non-stub OSPF areas. However, this approach has an impact on routing scalability. Chapter 16 covers a clean solution to this challenge, called Seamless MPLS.
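The difference between the default LDP rule, which requires an exact RIB match for the FEC, and the longest-match procedure defined in RFC 5283 can be sketched in Python as follows. PE3's RIB is a hypothetical reduction to a default route via P1 plus one intra-area route, and the check that the mapping comes from the route's next hop is a simplification of the usual LDP behavior.

```python
import ipaddress

# Hypothetical RIB of PE3 (L1-only router): prefix -> IGP next hop
RIB = {
    ipaddress.ip_network("0.0.0.0/0"): "P1",
    ipaddress.ip_network("172.16.0.44/32"): "PE4",
}


def exact_match(fec: str, neighbor: str) -> bool:
    """Default rule: the FEC must be in the RIB, via the advertising neighbor."""
    return RIB.get(ipaddress.ip_network(fec)) == neighbor


def longest_match(fec: str, neighbor: str) -> bool:
    """RFC 5283-style rule: the longest RIB prefix covering the FEC, via that neighbor."""
    net = ipaddress.ip_network(fec)
    covering = [prefix for prefix in RIB if net.subnet_of(prefix)]
    if not covering:
        return False
    best = max(covering, key=lambda prefix: prefix.prefixlen)
    return RIB[best] == neighbor


print(exact_match("172.16.0.11/32", "P1"))     # False: only a default route exists
print(longest_match("172.16.0.11/32", "P1"))   # True: 0.0.0.0/0 via P1 covers the FEC
```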

Protecting LDP Networks from Traffic Blackholing

Because it is tightly coupled to the IGP but it is not the IGP, plain LDP builds fragile MPLS networks that can easily cause traffic blackholing. Let’s see why, and how to make it more robust.

LDP IGP Synchronization (RFC 5443)

What happens if PE1 and P1 bring up an IS-IS adjacency but, for whatever reason (routing/filtering issue, misconfiguration, etc.), do not establish an LDP session with each other? From the point of view of PE1, the shortest path to PE3 is still PE1-P1-PE3. Unfortunately, this path is unlabeled, so P1 discards the customer traffic. In other words, CE1 can no longer ping BR3.

The LDP IGP Synchronization feature increases the IGP metric of a link if LDP is down on it. This way, the network dynamically skips unlabeled links and restores the service. Following is the syntax for IS-IS, which is very similar to the one for OSPF.

Example 2-25. LDP IGP Synchronization in Junos and IOS XR

/* Junos sample configuration */
protocols {
    isis {
        interface ge-0/0/4.0 ldp-synchronization;
    }
}

/* IOS XR sample configuration */
router isis mycore
 interface GigabitEthernet0/0/0/3
  address-family ipv4 unicast
   mpls ldp sync

In the following example, the LDP IGP Synchronization feature is turned on for all the network core links, and all the LDP sessions are up except for the one between PE1 and P1. The customer traffic finds its way through a longer yet labeled path. So the end-to-end service is fine.

Example 2-26. LDP IGP Synchronization in action

juniper@PE1> show isis database level 2 PE1.00-00 extensive

[...]

IS extended neighbor: P1.00, Metric: default 16777214

IS extended neighbor: PE2.00, Metric: default 10

[...]

juniper@PE1> show isis database level 2 P1.00-00 extensive

[...]

IS extended neighbor: PE1.00, Metric: default 16777214

IS extended neighbor: P2.00, Metric: default 10

IS extended neighbor: P2.00, Metric: default 10

IS extended neighbor: PE3.00, Metric: default 10

[...]

juniper@CE1> traceroute 192.168.20.3 source 192.168.10.1

traceroute to 192.168.20.3 (192.168.20.3) from 192.168.10.1 [...]

1 PE1 (10.1.0.1) 7.577 ms 3.113 ms 3.478 ms

2 PE2 (10.0.0.1) 14.778 ms 13.087 ms 11.303 ms

MPLS Label=24000 CoS=0 TTL=1 S=1

3 P2 (10.0.0.5) 11.723 ms 12.630 ms 14.843 ms

MPLS Label=24000 CoS=0 TTL=1 S=1

4 P1 (10.0.0.24) 14.599 ms 15.018 ms 23.803 ms

MPLS Label=300032 CoS=0 TTL=1 S=1

5 PE3 (10.0.0.9) 13.564 ms 20.615 ms 25.406 ms

6 BR3 (192.168.20.3) 18.587 ms 15.589 ms 19.322 ms

Both Junos and IOS XR support this feature on IGP interfaces configured as point-to-point, which is the recommended mode for core links. IOS XR also supports it on broadcast links.
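To see why raising the metric is enough, the following Python sketch runs an equal-cost-aware Dijkstra over the core topology of the lab (as it appears in the TEDs of Example 2-34 and Example 2-35), with all metrics set to 10. The value 16777214 (2**24 - 2) is the one PE1 and P1 advertise in Example 2-26; the function names and the graph representation are hypothetical.

```python
import heapq

MAX_METRIC = 16777214  # 2**24 - 2, as advertised in Example 2-26

LINKS = [
    ("PE1", "P1", 10), ("PE1", "PE2", 10), ("PE2", "P2", 10),
    ("P1", "PE3", 10), ("P1", "P2", 10), ("P1", "P2", 10),   # two parallel P1-P2 links
    ("P2", "PE4", 10), ("PE3", "PE4", 10),
]


def build_graph(links, ldp_down=frozenset()):
    """Adjacency list; links listed in ldp_down get the maximum metric on both ends."""
    adj = {}
    for a, b, metric in links:
        if frozenset((a, b)) in ldp_down:
            metric = MAX_METRIC
        adj.setdefault(a, []).append((b, metric))
        adj.setdefault(b, []).append((a, metric))
    return adj


def shortest_paths(adj, src, dst):
    """Dijkstra that keeps every equal-cost predecessor; returns (cost, list of paths)."""
    dist, preds = {src: 0}, {src: set()}
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist[node]:
            continue  # stale heap entry
        for nbr, metric in adj[node]:
            nd = d + metric
            if nd < dist.get(nbr, float("inf")):
                dist[nbr], preds[nbr] = nd, {node}
                heapq.heappush(heap, (nd, nbr))
            elif nd == dist[nbr]:
                preds[nbr].add(node)

    def expand(node):
        if node == src:
            return [[src]]
        return [path + [node] for p in sorted(preds[node]) for path in expand(p)]

    return dist[dst], expand(dst)


normal = shortest_paths(build_graph(LINKS), "PE1", "PE3")
synced = shortest_paths(build_graph(LINKS, {frozenset(("PE1", "P1"))}), "PE1", "PE3")
print(normal)   # (20, [['PE1', 'P1', 'PE3']])
print(synced)   # (40, [['PE1', 'PE2', 'P2', 'P1', 'PE3'], ['PE1', 'PE2', 'P2', 'PE4', 'PE3']])
```

With the raised metric, both remaining shortest paths bypass the PE1-P1 link, which is consistent with the traceroute in Example 2-26 going through PE2, P2, and P1. Which of the two equal-cost paths a given flow takes depends on the hash at each hop.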

LDP Session Protection

Session Protection is another LDP robustness enhancement, based on the Targeted Hello functionality defined in RFC 5036. With this feature, two directly connected LDP peers exchange two kinds of LDP-over-UDP Hello packets:

-

LDP Link Hellos: single-hop (TTL=1) multicast packets sourced at the link address, destined to 224.0.0.2 and sent independently on each link. These packets achieve basic discovery (see Figure 2-2).

-

LDP Targeted Hellos: multihop (TTL>1) loopback-to-loopback unicast packets, enabled by the Session Protection feature.

Note

LDP-over-UDP Targeted Hellos are not the same thing as LDP-over-TCP keepalive messages; they coexist.

LDP Session Protection, as its name implies, keeps the LDP session up during a link flap. Both the LDP-over-TCP session and the LDP-over-UDP targeted hello adjacency are multihop, so even if the direct PE1-P1 link goes down, their packets are routed across the alternate PE1-PE2-P2-P1 path. In this way, the LDP session and the LDP hello adjacency between PE1 and P1 both remain up. The routers keep all the LDP label mappings, which adds forwarding plane robustness to the network.
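A minimal Python model of the rule that makes Session Protection work: an LDP session stays up as long as at least one hello adjacency toward the peer remains, and tearing the session down flushes the label mappings learned over it. Hold timers and TCP behavior are deliberately left out, and the class and function names are hypothetical; the interface, LSR ID, and label values come from the examples in this chapter.

```python
from dataclasses import dataclass, field


@dataclass
class LdpPeer:
    lsr_id: str
    adjacencies: set = field(default_factory=set)        # {("link", ifname), ("targeted", lsr_id)}
    label_mappings: dict = field(default_factory=dict)   # FEC -> label learned from this peer

    @property
    def session_up(self) -> bool:
        return bool(self.adjacencies)

    def lose_adjacency(self, adjacency) -> None:
        self.adjacencies.discard(adjacency)
        if not self.session_up:
            self.label_mappings.clear()   # session torn down: learned mappings are flushed


def link_failure(peer: LdpPeer, interface: str, loopback_still_reachable: bool) -> None:
    """The link hello adjacency dies; the targeted one survives if the loopback is rerouted."""
    peer.lose_adjacency(("link", interface))
    if not loopback_still_reachable:
        peer.lose_adjacency(("targeted", peer.lsr_id))


LEARNED = {"172.16.0.33/32": 300000}
protected = LdpPeer("172.16.0.1", {("link", "ge-2/0/4.0"), ("targeted", "172.16.0.1")},
                    dict(LEARNED))
unprotected = LdpPeer("172.16.0.1", {("link", "ge-2/0/4.0")}, dict(LEARNED))

for name, peer in (("protected", protected), ("unprotected", unprotected)):
    link_failure(peer, "ge-2/0/4.0", loopback_still_reachable=True)  # via PE1-PE2-P2-P1
    print(name, peer.session_up, peer.label_mappings)
```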

Let’s look at the configuration and its outcome in Junos:

Example 2-27. LDP Session Protection in Junos (PE1)

protocols {
    ldp {
        interface lo0.0;
        session-protection;
    }
}

juniper@PE1> show ldp session

Address State Connection Hold time Adv. Mode

172.16.0.1 Operational Open 26 DU

172.16.0.22 Operational Open 29 DU

juniper@PE1> show ldp neighbor

Address Interface Label space ID Hold time

10.0.0.1 ge-2/0/3.0 172.16.0.22:0 13

10.0.0.3 ge-2/0/4.0 172.16.0.1:0 14

172.16.0.1 lo0.0 172.16.0.1:0 44

172.16.0.22 lo0.0 172.16.0.22:0 41

Because PE1 does not have parallel links to any neighboring router, there are two hello adjacencies to each peer (identified by a common Label space ID): the link hello adjacency and the targeted hello adjacency.

Finally, let’s see it on IOS XR:

Example 2-28. LDP Session Protection in IOS XR (PE2)

mpls ldp session protection

RP/0/0/CPU0:PE2#show mpls ldp discovery brief
Local LDP Identifier: 172.16.0.22:0
Discovery Source     VRF Name   Peer LDP Id      Holdtime Session
-------------------- ---------- ---------------- -------- -------
Gi0/0/0/2            default    172.16.0.11:0    15       Y
Gi0/0/0/3            default    172.16.0.2:0     15       Y
Tgt:172.16.0.2       default    172.16.0.2:0     90       Y
Tgt:172.16.0.11      default    172.16.0.11:0    45       Y

RSVP-TE

RSVP was initially defined in RFC 2205 as a protocol to make resource reservations along paths in the Internet. Although this original specification did not have much success in terms of industry adoption and real deployments, RSVP later evolved into the popular RSVP-TE (RFC 3209, Extensions to RSVP for LSP Tunnels). RSVP-TE is the most flexible and powerful of all the MPLS signaling protocols, but it also requires more state in the network. The TE in the acronym RSVP-TE stands for Traffic Engineering, yet RSVP-TE has its own place in the MPLS world, and it is a valid deployment choice even for scenarios in which TE is not required. This section covers basic RSVP-TE and leaves Traffic Engineering to Chapter 13, Chapter 14, and Chapter 15. Very often, this book refers to RSVP-TE simply as RSVP.

RSVP-TE is easier to understand than LDP. It builds two types of LSPs: P2P and P2MP. IP unicast traffic is tunneled in P2P LSPs. Unlike the MP2P LSPs (from-any-to-one) signaled with LDP, RSVP-TE P2P LSPs (from-one-to-one) have a clear head-end. Conceptually, they are very similar to the static LSPs of Chapter 1, except that this time they are dynamically signaled with a protocol: RSVP-TE.

On the other hand, RSVP-TE is not as plug-and-play as LDP. The first necessary (but not sufficient) step is to enable Traffic Engineering in the IGP (IS-IS, in this example) and to configure RSVP on the core interfaces, except for the links to the RRs.

Example 2-29. RSVP-TE configuration at PE1 (Junos)

protocols {
    isis {
        level 2 wide-metrics-only;
    }
    rsvp {
        interface ge-0/0/3.0;
        interface ge-0/0/4.0;
    }
}

Tip

In Junos, IS-IS Traffic Engineering extensions are turned on by default. OSPF TE extensions require explicit configuration by using the set protocols ospf traffic-engineering command.

Example 2-30. RSVP-TE configuration at PE2 (IOS XR)

1 router isis mycore
2  address-family ipv4 unicast
3   metric-style wide
4   mpls traffic-eng level-2-only
5   mpls traffic-eng router-id Loopback0
6 !
7 rsvp
8  interface GigabitEthernet0/0/0/3
9  interface GigabitEthernet0/0/0/4
10 !
11 mpls traffic-eng
12  interface GigabitEthernet0/0/0/3
13  interface GigabitEthernet0/0/0/4

Note

Lines 7 through 9 are actually not needed for basic RSVP-TE operation, but it is a good practice to add them.

The configuration in Example 2-29 and Example 2-30 does not bring up any RSVP-TE neighbors or LSPs. As you can see in Example 2-31, it just enables the RSVP protocol on the interfaces.

Example 2-31. RSVP-TE baseline state at PE1 and PE2

juniper@PE1> show rsvp neighbor

RSVP neighbor: 0 learned

juniper@PE1> show rsvp interface

RSVP interface: 2 active

Active Subscr- Static Available Reserved[...]

Interface State resv iption BW BW BW [...]

ge-2/0/3.0 Up 0 100% 1000Mbps 1000Mbps 0bps [...]

ge-2/0/4.0 Up 0 100% 1000Mbps 1000Mbps 0bps [...]

RP/0/0/CPU0:PE2#show rsvp neighbors

RP/0/0/CPU0:PE2#show rsvp interface

RDM: Default I/F B/W %: 75% [default] (max resv/bc0), 0% [default]

Interface MaxBW (bps) MaxFlow (bps) Allocated (bps) MaxSub (bps)

----------- ------------ ------------- --------------- ------------

Gi0/0/0/2 0 0 0 ( 0%) 0

Gi0/0/0/3 0 0 0 ( 0%) 0

The lack of neighbors is expected. Unlike LDP and IGPs, the role of hello packets in RSVP-TE is quite secondary. RSVP-TE LSPs have their own refresh mechanism and it is not mandatory to have hello adjacencies on the interfaces. RSVP hello adjacencies are typically established when at least one RSVP-TE LSP traverses the link.

RSVP-TE LSP Fundamentals

Unless you use a central controller (see Chapter 15), you need to configure RSVP LSPs explicitly at the ingress PE. There are basically two ways of doing it: defining LSPs one by one, or enabling a certain level of endpoint autodiscovery. Let’s begin with the first approach, which provides more control and flexibility for each individual LSP. Although this approach is powerful, the need for manual LSP configuration is one of the reasons why some designers prefer LDP to RSVP and reserve RSVP for scenarios in which Traffic Engineering is required.

RSVP-TE Tunnels, LSPs, and Sessions

Table 2-2 summarizes the different terminology used by RFC 3209, Junos, and IOS XR.

| RFC 3209 | Tunnel | LSP |
|---|---|---|
| Junos | LSP | Session |
| IOS XR | Tunnel | Path, Session |

In the terms of RFC 3209, you configure tunnels on the ingress PE. A tunnel is incarnated through one or more LSPs. There are several reasons why you may have more than one LSP per tunnel, for example:

-

A tunnel has a primary LSP protected by a standby LSP. This topic is discussed in Chapter 19. This type of tunnel has two persistent LSPs.

-

A tunnel has only one primary LSP but it is being resignaled upon failure, reoptimization, or a change in TE constraints such as bandwidth. In these cases, the tunnel may transitorily have more than one LSP.

You can view an LSP as an incarnation of a tunnel. Two LSPs that belong to the same tunnel share the Tunnel ID value but have different LSP IDs.

In this book, the different vendor terminologies are used and you might see the words tunnel and LSP used in a relatively relaxed and interchangeable manner. This chapter uses the Junos terminology.

RSVP-TE LSP configuration

RSVP-TE LSPs are configured at the head-end (ingress) PE. This makes sense for P2P LSPs, because MPLS LSPs in general—with the exception of MP2MP—are unidirectional. So, even with no specific LSP configuration at PE3 and PE4, Example 2-32 and Example 2-33 are enough to signal the following LSPs.

From PE1 (Junos) to: PE2, PE3, and PE4.

Example 2-32. RSVP-TE LSP configuration at PE1 (Junos)

groups {
    GR-LSP {
        protocols {
            mpls label-switched-path <*> adaptive;
        }
    }
}
protocols {
    mpls {
        apply-groups GR-LSP;
        label-switched-path PE1--->PE2 to 172.16.0.22;
        label-switched-path PE1--->PE3 to 172.16.0.33;
        label-switched-path PE1--->PE4 to 172.16.0.44;
    }
}

From PE2 (IOS XR) to: PE1, PE3, and PE4.

Example 2-33. RSVP-TE LSP configuration at PE2 (IOS XR)

group GR-LSP
 interface 'tunnel-te.*'
  ipv4 unnumbered Loopback0
  autoroute announce
  record-route
  path-option 1 dynamic
end-group
!
interface tunnel-te11
 apply-group GR-LSP
 signalled-name PE2--->PE1
 destination 172.16.0.11
!
interface tunnel-te33
 apply-group GR-LSP
 signalled-name PE2--->PE3
 destination 172.16.0.33
!
interface tunnel-te44
 apply-group GR-LSP
 signalled-name PE2--->PE4
 destination 172.16.0.44

Bidirectional end-to-end traffic (such as a successful ping between CE1 and BR3) also requires right-to-left LSPs for the return traffic. As a result, unless another MPLS flavor such as LDP or SPRING is enabled in the core, you also need to configure RSVP-TE LSPs rooted at PE3 and at PE4.

In this way, the network has a full mesh of PE→PE RSVP LSPs.

In “RSVP-TE in Action”, you will see that PE1 (Junos) automatically installs 172.16.0.33/32 in the inet.3 auxiliary table, pointing to LSP PE1--->PE3. On the other hand, PE2 (IOS XR) needs the autoroute announce command to make the CEF entry 172.16.0.44/32 point to interface tunnel-te44 (LSP PE2--->PE4). But this command has more implications, as you can see at the end of Chapter 3.

The Traffic Engineering Database

What happens directly after you configure an RSVP-TE LSP? By default, the ingress PE doesn’t leave anything to fate. It decides in advance the LSP’s exact itinerary by building an ordered list of the hops that the LSP should go through. This list is encoded in an Explicit Route Object (ERO). But where does the ingress PE find the information to compute the ERO? It finds it in the Traffic Engineering Database (TED).

Let’s have a sneak peek at a Junos router’s TED.

Example 2-34. TED at PE1 (Junos)

juniper@PE1> show ted database PE1.00

TED database: 7 ISIS nodes 7 INET nodes

ID Type Age(s) LnkIn LnkOut Protocol

PE1.00(172.16.0.11) Rtr 198 2 2 IS-IS(2)

To: P1.00(172.16.0.1), Local: 10.0.0.2, Remote: 10.0.0.3

To: PE2.00(172.16.0.22), Local: 10.0.0.0, Remote: 10.0.0.1

juniper@PE1> show ted database P1.00

TED database: 7 ISIS nodes 7 INET nodes

ID Type Age(s) LnkIn LnkOut Protocol

P1.00(172.16.0.1) Rtr 92 4 4 IS-IS(2)

To: PE1.00(172.16.0.11), Local: 10.0.0.3, Remote: 10.0.0.2

To: PE3.00(172.16.0.33), Local: 10.0.0.8, Remote: 10.0.0.9

To: P2.00(172.16.0.2), Local: 10.0.0.6, Remote: 10.0.0.7

To: P2.00(172.16.0.2), Local: 10.0.0.24, Remote: 10.0.0.25

juniper@PE1> show ted database PE3.00

TED database: 7 ISIS nodes 7 INET nodes

ID Type Age(s) LnkIn LnkOut Protocol

PE3.00(172.16.0.33) Rtr 133 2 2 IS-IS(2)

To: P1.00(172.16.0.1), Local: 10.0.0.9, Remote: 10.0.0.8

To: PE4.00(172.16.0.44), Local: 10.0.0.12, Remote: 10.0.0.13

Similarly, PE2 (IOS XR) also has a TED (Example 2-35).

Example 2-35. TED at PE2 (IOS XR)

RP/0/0/CPU0:PE2#show mpls traffic-eng topology brief 172.16.0.22
[...]
IGP Id: 1720.1600.0022.00, MPLS TE Id: 172.16.0.22 Router Node (IS-IS mycore level-2)
 Link[0]:Point-to-Point, Nbr IGP Id:1720.1600.0002.00 [...]
 Link[1]:Point-to-Point, Nbr IGP Id:1720.1600.0011.00 [...]

RP/0/0/CPU0:PE2#show mpls traffic-eng topology brief 172.16.0.2
[...]
IGP Id: 1720.1600.0002.00, MPLS TE Id: 172.16.0.2 Router Node (IS-IS mycore level-2)
 Link[0]:Point-to-Point, Nbr IGP Id:1720.1600.0022.00 [...]
 Link[1]:Point-to-Point, Nbr IGP Id:1720.1600.0001.00 [...]
 Link[2]:Point-to-Point, Nbr IGP Id:1720.1600.0001.00 [...]
 Link[3]:Point-to-Point, Nbr IGP Id:1720.1600.0044.00 [...]

RP/0/0/CPU0:PE2#show mpls traffic-eng topology brief 172.16.0.44
[...]
IGP Id: 1720.1600.0044.00, MPLS TE Id: 172.16.0.44 Router Node (IS-IS mycore level-2)
 Link[0]:Point-to-Point, Nbr IGP Id:1720.1600.0002.00 [...]
 Link[1]:Point-to-Point, Nbr IGP Id:1720.1600.0033.00 [...]

Note

Although not shown here due to space restrictions, the TEDs for PE1 and PE2 also contain the nodes from the other vendor’s plane.

The TED looks very much like a Link State Database (LSDB). Indeed, protocols such as IS-IS or OSPF feed the information to build the TED. In addition, both the LSDB and the TED contain per-link Traffic Engineering information that you can see by using the extensive keyword.

Here are the main differences between the IS-IS (or OSPF) LSDB and the TED:

-

The TED is protocol agnostic. It can be populated by IS-IS, OSPF, or even BGP (with a special address family).

-

There is a single TED, whereas there is one separate LSDB per IGP (OSPF, IS-IS) instance or process.

-

The IS-IS (or OSPF) LSDB has information about MPLS and non-MPLS interfaces, whereas the TED only contains MPLS interfaces.

And how can you tell from the LSDB whether a link has MPLS turned on? Let’s temporarily remove family mpls from PE1’s interface ge-2/0/4 (connected to P1). Or, alternatively, delete ge-2/0/4 from protocols rsvp | mpls. Example 2-36 shows PE1’s Link State Packet.

Example 2-36. Link State Packet with MPLS and non-MPLS interfaces (Junos)

juniper@PE1> show isis database PE1 extensive

[...]

TLVs:

IS extended neighbor: PE2.00, Metric: default 10

IP address: 10.0.0.0

Neighbor's IP address: 10.0.0.1

Local interface index: 336, Remote interface index: 0

Current reservable bandwidth:

Priority 0 : 1000Mbps

Priority 1 : 1000Mbps

Priority 2 : 1000Mbps

Priority 3 : 1000Mbps

Priority 4 : 1000Mbps

Priority 5 : 1000Mbps

Priority 6 : 1000Mbps

Priority 7 : 1000Mbps

Maximum reservable bandwidth: 1000Mbps

Maximum bandwidth: 1000Mbps

Administrative groups: 0 <none>

IS extended neighbor: P1.00, Metric: default 10

IP address: 10.0.0.2

Neighbor's IP address: 10.0.0.3

Local interface index: 337, Remote interface index: 0

juniper@PE1> show ted database PE1

TED database: 11 ISIS nodes 7 INET nodes

ID Type Age(s) LnkIn LnkOut Protocol

PE1.00(172.16.0.11) Rtr 601 2 1 IS-IS(2)

To: PE2.00(172.16.0.22), Local: 10.0.0.0, Remote: 10.0.0.1

Local interface index: 336, Remote interface index: 0

Note

The acronym LSP can stand for Label-Switched Path or for Link State Packet. In this book, it typically has the first meaning.

Only the MPLS link (PE1-PE2) contains Traffic Engineering sub-Type Length Values (sub-TLVs), and as a result this is the only interface at PE1 that makes it to the TED. Let’s enable MPLS and RSVP on the PE1-P1 interface again and move on.
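Before moving on, the filtering just described and the unconstrained CSPF computation that the next section introduces can be sketched together in Python. The model keeps PE1's link to P1 without TE sub-TLVs, as in Example 2-36. The 1000 Mbps figure on links other than PE1-PE2, the bandwidth constraint, and the lexicographic tie-breaking between equal-cost paths are assumptions of the sketch, not Junos or IOS XR behavior.

```python
import heapq

# Per-router IS-IS view: (neighbor, metric, reservable bandwidth in Mbps or None).
# None = no TE sub-TLVs advertised (MPLS removed from PE1's link to P1).
LSDB = {
    "PE1": [("PE2", 10, 1000), ("P1", 10, None)],
    "PE2": [("PE1", 10, 1000), ("P2", 10, 1000)],
    "P1":  [("PE1", 10, 1000), ("PE3", 10, 1000), ("P2", 10, 1000), ("P2", 10, 1000)],
    "P2":  [("PE2", 10, 1000), ("P1", 10, 1000), ("P1", 10, 1000), ("PE4", 10, 1000)],
    "PE3": [("P1", 10, 1000), ("PE4", 10, 1000)],
    "PE4": [("P2", 10, 1000), ("PE3", 10, 1000)],
}


def build_ted(lsdb: dict) -> dict:
    """Keep only the links that carry TE sub-TLVs, that is, MPLS-enabled links."""
    return {router: [(nbr, metric, bw) for nbr, metric, bw in links if bw is not None]
            for router, links in lsdb.items()}


def cspf(ted: dict, src: str, dst: str, min_bw_mbps: int = 0):
    """Prune links that violate the constraint, then run plain SPF on what is left."""
    heap = [(0, [src])]
    settled = set()
    while heap:
        cost, path = heapq.heappop(heap)   # equal costs: lexicographically smaller path first
        node = path[-1]
        if node == dst:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for nbr, metric, bw in ted.get(node, []):
            if bw >= min_bw_mbps and nbr not in settled:
                heapq.heappush(heap, (cost + metric, path + [nbr]))
    return None   # no path satisfies the constraints


ted = build_ted(LSDB)
print(len(ted["PE1"]))                  # 1: only the PE1-PE2 link made it to the TED
print(cspf(ted, "PE1", "PE3"))          # (40, ['PE1', 'PE2', 'P2', 'P1', 'PE3'])
print(cspf(ted, "PE1", "PE3", 2000))    # None: no link offers 2,000 Mbps
```

With no constraint, CSPF degenerates to SPF on the TED; with a 2,000 Mbps constraint, every link is pruned and no path exists.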

Constrained Shortest-Path First

To compute the ERO for the PE1→PE3 LSP, PE1 runs an algorithm called Constrained Shortest-Path First (CSPF), which finds the best path to PE3 in the TED. Although this book explores a wide variety of TE constraints in Chapter 13 through Chapter 15, the LSPs in Example 2-32 and Example 2-33 are so simple that they impose no constraints at all. And without constraints, CSPF looks very much like the traditional Shortest-Path First (SPF). Here is the outcome of the CSPF calculation that preceded the signaling of the PE1→PE3 LSP from PE1:

Example 2-37. CSPF computation for PE1→PE3 LSP (Junos)

juniper@PE1> show rsvp session name PE1--->PE3 detail
[...]
Explct route: 10.0.0.1 10.0.0.5 10.0.0.24 10.0.0.9

Surprise! The PE1→PE3 LSP is now signaled via PE2, and it has four hops instead of two. Why? Remember that MPLS was temporarily disabled on the PE1→P1 link. This brought down the RSVP-TE LSP and triggered a CSPF computation through a longer alternate path. Yet, now that PE1→P1 is fine again from the point of view of MPLS, why is the LSP still following a longer path?

In both Junos and IOS XR, simple RSVP-TE LSPs tend to stay away from links that have flapped. After they are signaled, RSVP LSPs can remain on their current path indefinitely. If there is a failure (e.g., in one of the path’s links or nodes), the ingress PE runs CSPF again and resignals the LSP.

Thus, the PE1→PE3 LSP has a suboptimal ERO. How can you reoptimize this LSP, or in other words, how can you trigger a CSPF recalculation? Manually flapping a link is not a good idea. There are better ways.

First, you can manually reoptimize an LSP by executing the following operational commands:

-

Junos: clear mpls lsp name PE1--->PE3 optimize

-

IOS XR: mpls traffic-eng reoptimize 44 (tunnel-te 44)

However, this is not scalable from an operational perspective. In both Junos and IOS XR, it is recommended that you configure a reoptimization timer. When the timer expires, the ingress PE runs CSPF again, and if the result is better than the current path, the LSP is resignaled.

Tip

If the network service requirements (latency, bandwidth, etc.) allow it, try to use high timer values. Staying on stable links is a good thing!

You can configure reoptimization timers in Junos either globally or on a per-LSP basis, and they are global in IOS XR. Let’s call this timer T1 (in seconds):

-

Junos: protocols mpls [label-switched-path <name>] optimize-timer <T1>

-

IOS XR: mpls traffic-eng reoptimize <T1>

LSP optimization takes place in a make-before-break fashion. Before tearing down the original path, PE1 signals a new PE1→PE3 path and gracefully switches the traffic to it. In that sense, the change is not disruptive and does not cause any transit packet loss. In scaled environments, it is wise to delay this switchover, allowing time for the LSP’s forwarding plane to be ready before the routes point to the new path. Let’s call this timer T2 (in seconds):

-

Junos: protocols mpls optimize-switchover-delay <T2>

-

IOS XR: mpls traffic-eng optimize timers delay installation <T2>

How do T1 and T2 relate to each other? Let’s see an example, by using the Junos terminology from Table 2-2.

The PE1→PE3 LSP is initially mapped to RSVP session A, which follows the shortest IGP path PE1-P1-PE3. Then, the PE1-P1 link experiences a short flap (up→down→up).

Directly after the up→down transition, RSVP session A goes down, and PE1 signals a new RSVP session B through a (longer) available path—for example, PE1-PE2-P2-PE4-PE3. PE1 quickly activates the LSP on RSVP session B and starts timer T1. At this point, the user traffic is restored.

While T1 is ticking down, the link comes back up and IS-IS converges. That’s orthogonal to T1, which just keeps ticking down. When T1 expires, PE1 signals a new RSVP session C through the shortest path PE1-P1-PE3, and starts timer T2.

While T2 is ticking down, PE1 keeps both RSVP sessions B and C up, but the LSP and the user traffic remain on session B. Only when T2 expires does PE1 switch the LSP and the user traffic to session C.
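The T1/T2 walkthrough can be condensed into a small Python function that returns, for a given time, which RSVP session carries the traffic and which sessions are up. It also reflects the RFC 3209 identifiers discussed earlier: all three sessions share one Tunnel ID and get increasing LSP IDs. The concrete times, the ID values, and the assumption that signaling is instantaneous (so T1 starts at the moment of the failure) are hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RsvpSession:
    tunnel_id: int     # shared by every incarnation of the LSP
    lsp_id: int        # incremented each time the LSP is resignaled
    path: tuple


def state_at(t, down_at, up_at, t1, t2, tunnel_id=1):
    """Return (session carrying traffic, set of sessions up) at time t, in seconds."""
    if not down_at < up_at < down_at + t1:
        raise ValueError("this sketch assumes the link recovers before T1 expires")
    a = RsvpSession(tunnel_id, 1, ("PE1", "P1", "PE3"))
    b = RsvpSession(tunnel_id, 2, ("PE1", "PE2", "P2", "PE4", "PE3"))
    c = RsvpSession(tunnel_id, 3, ("PE1", "P1", "PE3"))
    t1_expiry = down_at + t1       # reoptimization: CSPF finds the shorter path again
    t2_expiry = t1_expiry + t2     # switchover delay elapsed
    if t < down_at:
        return a, {a}
    if t < t1_expiry:
        return b, {b}              # A is gone; B restored the traffic
    if t < t2_expiry:
        return b, {b, c}           # C is signaled but does not carry traffic yet
    return c, {c}                  # make-before-break done; B is torn down


for t in (0, 10, 330, 400):
    active, up = state_at(t, down_at=5, up_at=8, t1=300, t2=60)
    print(t, active.lsp_id, sorted(s.lsp_id for s in up))
# 0 1 [1]
# 10 2 [2]
# 330 2 [2, 3]
# 400 3 [3]
```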

RSVP-TE messages

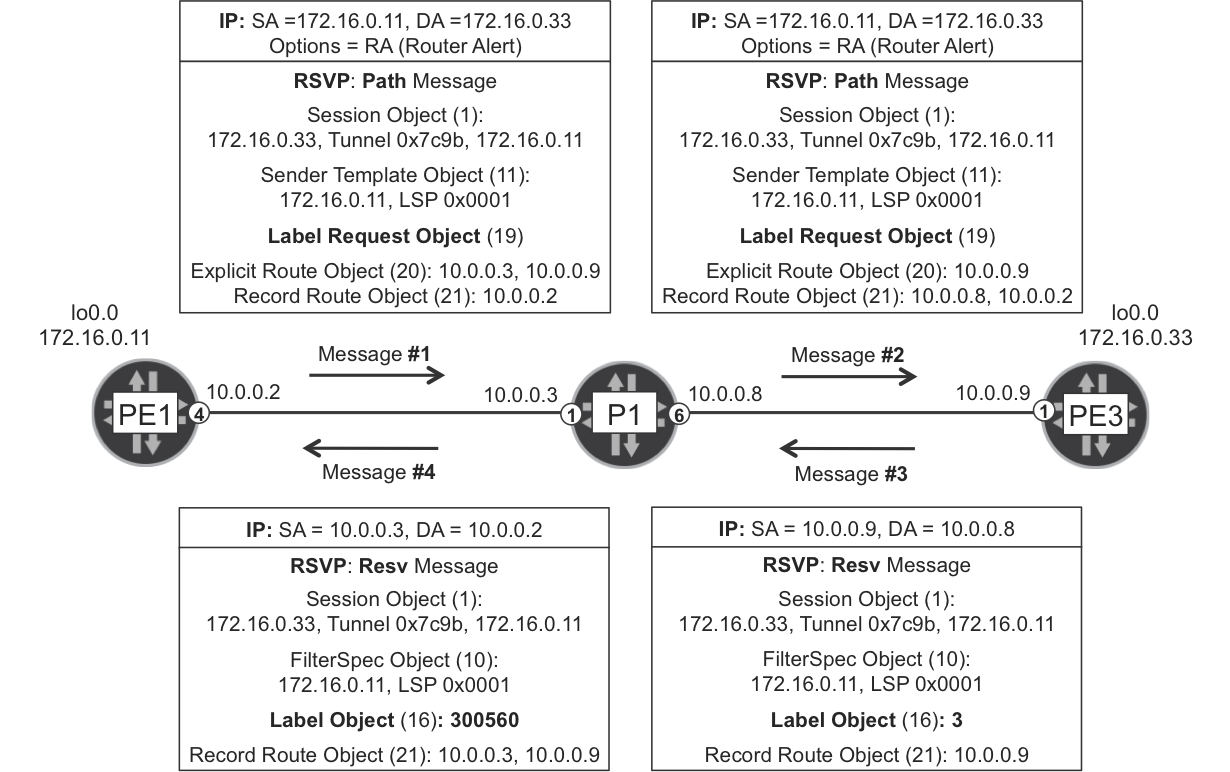

After the ingress PE computes the ERO, it begins to signal the LSP. Let’s focus on the PE1→PE3 example. As shown in Figure 2-5, the ingress PE (PE1) sends Path messages and the egress PE (PE3) answers with Resv messages. These RSVP messages are encapsulated directly on top of IP (RSVP = IPv4 protocol 46).

Figure 2-5. RSVP-TE Path and Resv messages

In addition to the ERO, a Path message contains several objects, including the Record Route Object (RRO). The ERO and the RRO have symmetrical roles: whereas the ERO shrinks hop by hop (as there are fewer hops to go), the RRO grows hop by hop (as there are more hops left behind).
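A short Python sketch of the ERO/RRO symmetry for the PE1→PE3 LSP, using the explicit route from Example 2-37. As a simplification, each hop records in the RRO the same address it removes from the ERO, and the ingress starts with an empty RRO; real implementations may record a different address (for example, an outgoing interface address), and an ERO can also carry loose hops.

```python
def propagate_path_message(ero):
    """Yield (ERO, RRO) as carried by the Path message on each link, ingress first."""
    remaining, recorded = list(ero), []
    yield tuple(remaining), tuple(recorded)
    while remaining:
        hop = remaining.pop(0)    # the LSR owning this address consumes its ERO entry
        recorded.append(hop)      # simplification: it records the same address in the RRO
        if remaining:             # the egress (last entry) does not forward the Path message
            yield tuple(remaining), tuple(recorded)


for ero, rro in propagate_path_message(["10.0.0.1", "10.0.0.5", "10.0.0.24", "10.0.0.9"]):
    print("ERO:", ero, "RRO:", rro)
```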

Note

Try to spot the Tunnel ID and the LSP ID in Figure 2-5. When the LSP is resignaled (upon failure, reoptimization, or a change in TE requirements), the Tunnel ID remains the same and the LSP ID is incremented.

RSVP Path messages have a destination IPv4 address equal to the egress PE’s loopback (and not to the transit LSR). For this reason, the ingress PE sets the Router Alert (RA) option in the IPv4 header. This allows the transit LSRs (P1) to intercept and process the Path messages at the control plane, thereby creating dynamic local LSP state and updating both the ERO and the RRO on a hop-by-hop basis.

The Resv messages flow in the opposite direction (upstream) and contain label information. First, the egress PE (PE3) signals the implicit null label; then, each upstream LSR assigns a locally unique label bound to the LSP.

Note

In RSVP-TE, a label is locally bound to an LSP, not to an FEC. If PE1 signals 1,000 LSPs toward PE3 with the same ERO, P1 assigns 1,000 different MPLS labels, one per LSP.

Because Resv messages are triggered by Path messages, the RSVP-TE label distribution method is Downstream on Demand (DoD), as opposed to the default LDP mode, Downstream Unsolicited (DU).
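The per-LSP label binding and the DoD behavior can be illustrated with a short Python sketch. `LabelPool`, `signal_lsp`, and the pool start value 300000 are hypothetical. The sketch only encodes what the text states: nothing is allocated until a Path message triggers a Resv, the egress advertises implicit null, and each upstream LSR allocates a fresh label per LSP.

```python
import itertools
from typing import Dict, List

IMPLICIT_NULL = 3


class LabelPool:
    """Per-router dynamic label allocator (start value is hypothetical)."""

    def __init__(self, start: int) -> None:
        self._labels = itertools.count(start)

    def allocate(self) -> int:
        return next(self._labels)


def signal_lsp(path: List[str], pools: Dict[str, LabelPool]) -> Dict[str, int]:
    """Simulate one Path/Resv exchange along path (ingress first).

    Returns {router: label it advertises upstream}. The egress advertises
    implicit null, and each transit LSR allocates a new label bound to
    this LSP only, even if other LSPs follow the very same ERO.
    """
    advertised = {path[-1]: IMPLICIT_NULL}
    for lsr in reversed(path[1:-1]):        # walk upstream from the egress
        advertised[lsr] = pools[lsr].allocate()
    return advertised


pools = {"P1": LabelPool(300000)}
lsps = [signal_lsp(["PE1", "P1", "PE3"], pools) for _ in range(1000)]
p1_labels = {lsp["P1"] for lsp in lsps}
print(len(p1_labels))   # one distinct P1 label per LSP
```

The ingress PE then pushes the label advertised by its downstream neighbor, which in this model is `lsps[i]["P1"]` for the i-th LSP.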

RSVP-TE LSPs are maintained by periodic Path/Resv message refresh. This per-LSP message exchange is often called an RSVP session. You can view an RSVP session as a control plane incarnation of an LSP. The nuance is subtle, so in the RSVP world the terms LSP and session are often used interchangeably (see Table 2-3).

After it is configured to do so, PE3 also signals a PE3→PE1 LSP by sending Path messages to PE1 and receiving Resv messages from PE1. This enables bidirectional end-to-end traffic.

LSRs send Path and Resv messages periodically in order to keep the RSVP-TE sessions alive. Chapter 16 covers some possible optimizations.
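As a worked example of this soft-state behavior, the base RSVP specification (RFC 2205, Section 3.7) ties the refresh period R to the state lifetime L through L >= (K + 0.5) × 1.5 × R, and randomizes each refresh interval within [0.5R, 1.5R]. The Python sketch below uses the RFC's suggested defaults (R = 30 seconds, K = 3); vendor defaults and configuration knobs may differ, so treat the numbers as illustrative.

```python
import random


def min_state_lifetime(refresh_period: float, k: int = 3) -> float:
    """Minimum lifetime L = (K + 0.5) * 1.5 * R from RFC 2205, Section 3.7.

    With this lifetime, in the worst case K - 1 consecutive refreshes
    can be lost before the state is deleted.
    """
    return (k + 0.5) * 1.5 * refresh_period


def next_refresh_interval(refresh_period: float, rng: random.Random) -> float:
    """Refresh intervals are randomized within [0.5 R, 1.5 R]."""
    return rng.uniform(0.5 * refresh_period, 1.5 * refresh_period)


print(min_state_lifetime(30))   # 157.5 (seconds, with R = 30 s and K = 3)
```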

There is also a set of messages (PathErr, PathTear, ResvErr, and ResvTear) that signal LSP error conditions or tear down RSVP-TE LSPs.

RSVP-TE in Action

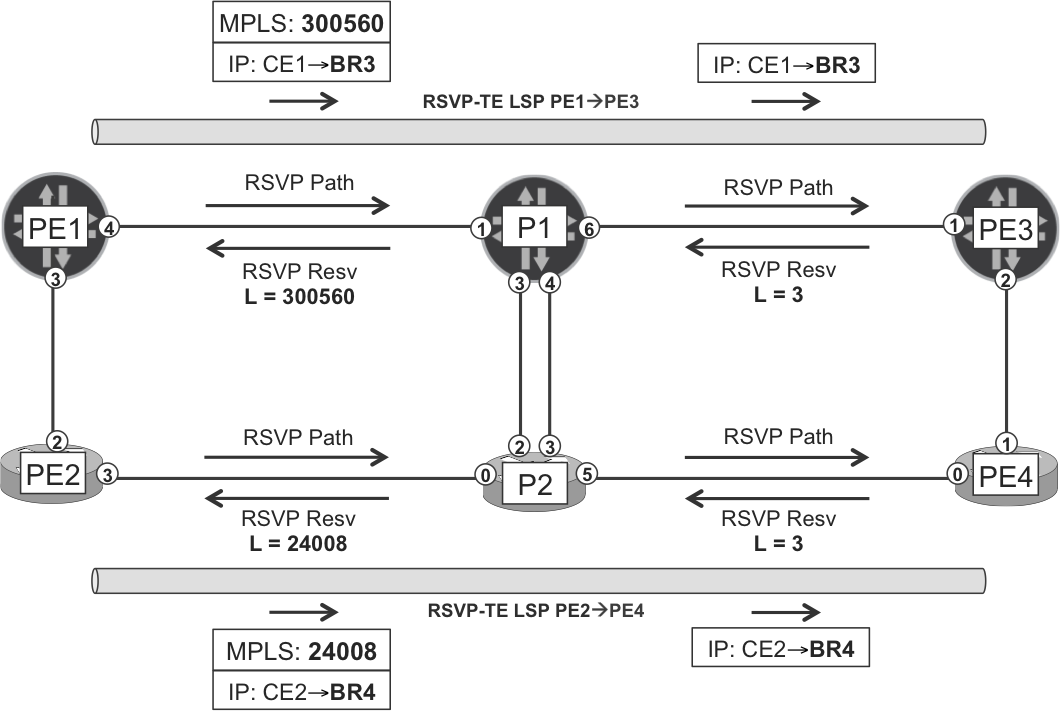

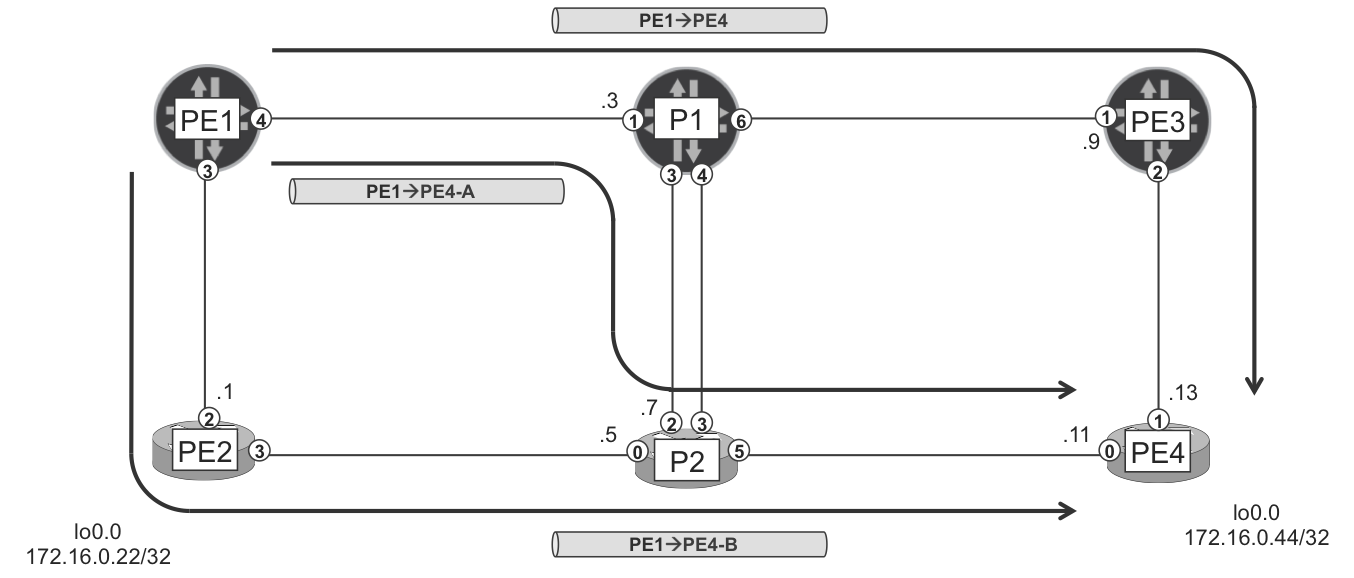

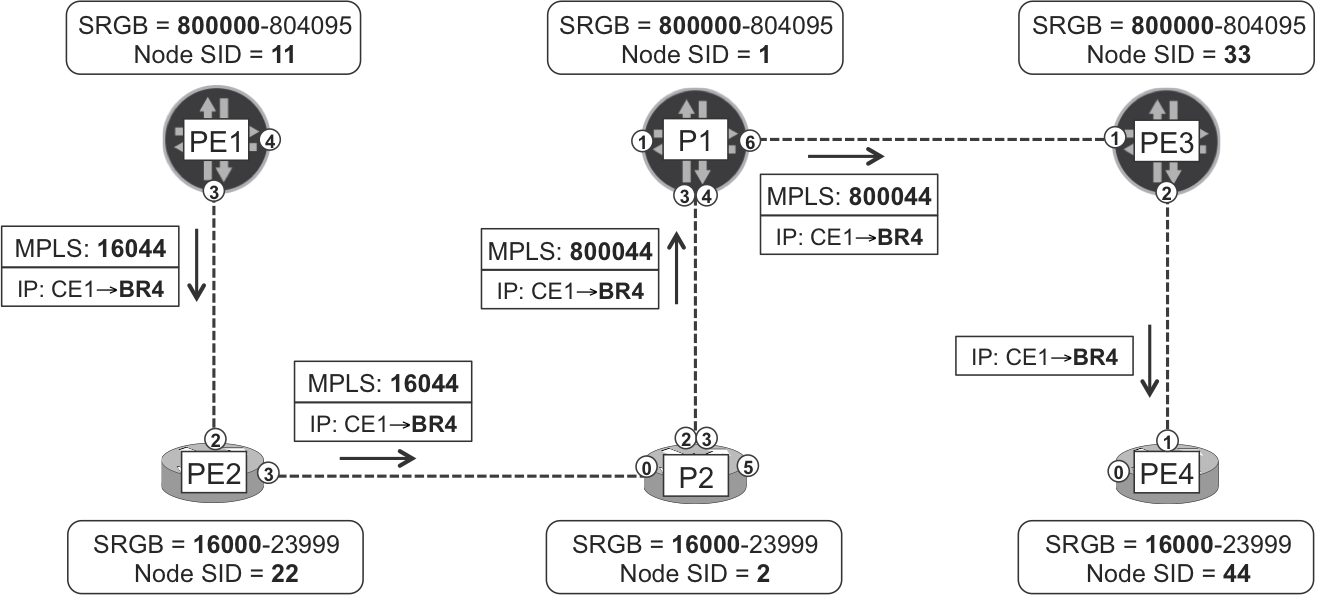

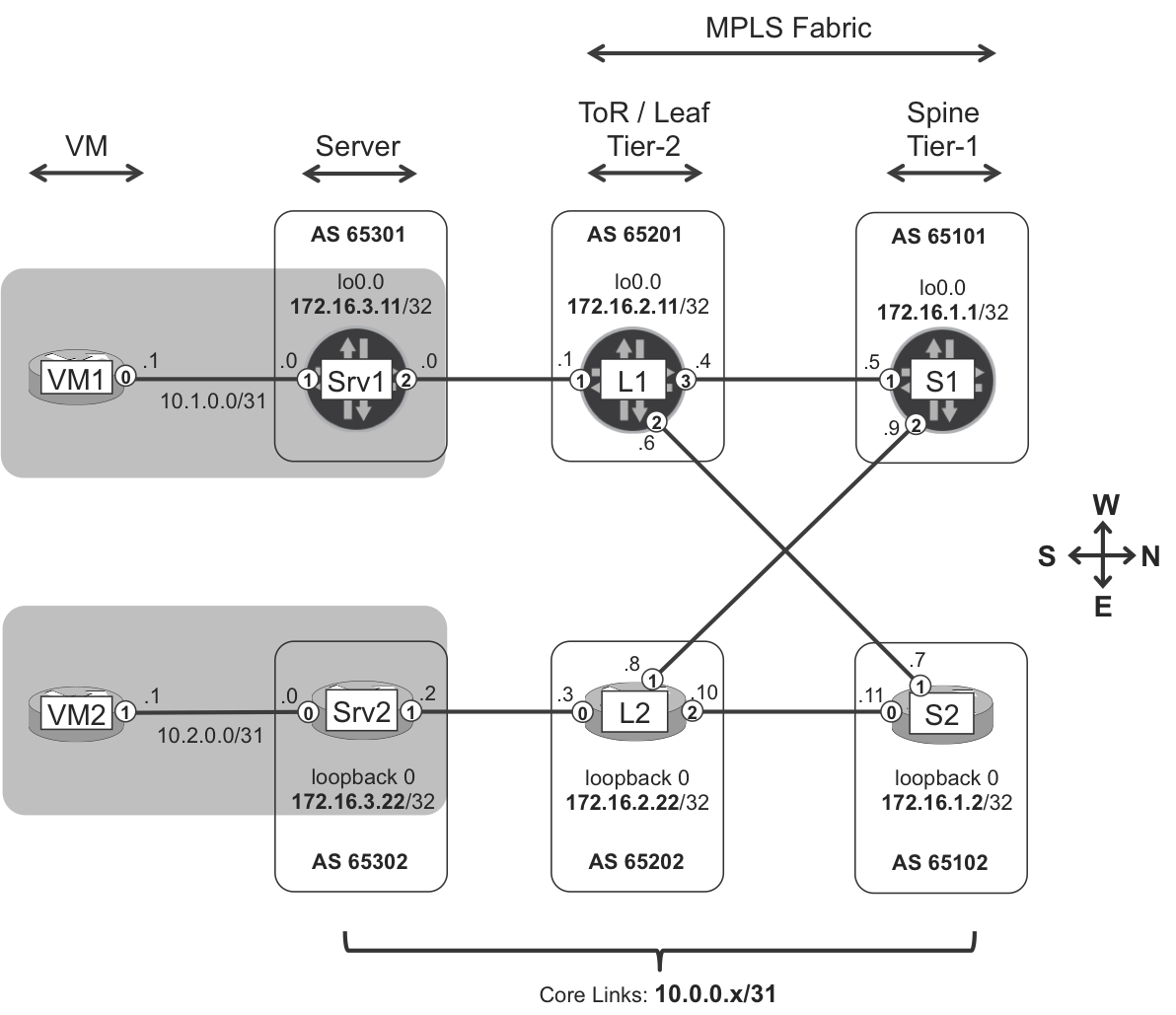

Let’s see two end-to-end traffic examples, first on the Junos plane (LSP PE1→PE3) and then on the IOS XR plane (PE2→PE4). Figure 2-6 illustrates the RSVP signaling involved in both examples.

Figure 2-6. RSVP-TE LSPs on Junos and IOS XR planes

RSVP-TE signaling and MPLS forwarding in the Junos plane

The first example (Example 2-38) is a loopback-to-loopback traceroute from CE1 to BR3 traversing the Junos plane (PE1, P1, PE3).

Example 2-38. Traceroute through the Junos plane

juniper@CE1> traceroute 192.168.20.3 source 192.168.10.1

traceroute to 192.168.20.3 (192.168.20.3) from 192.168.10.1 [...]

1 PE1 (10.1.0.1) 21.468 ms 8.833 ms 4.311 ms

2 P1 (10.0.0.3) 20.169 ms 33.771 ms 137.208 ms

MPLS Label=300560 CoS=0 TTL=1 S=1

3 PE3 (10.0.0.9) 14.305 ms 13.516 ms 12.845 ms

4 BR3 (192.168.20.3) 23.651 ms 10.378 ms 11.674 ms

Let’s interpret the output step by step. As you saw in Chapter 1, PE1 has a BGP route toward BR3’s loopback, and the BGP next hop of this route is PE3. Then, PE1 resolves this BGP next hop by looking at the inet.3 auxiliary table, and this is how the Internet route (to BR3) gets a labeled forwarding next hop.

Example 2-39. MPLS forwarding at ingress PE1 (Junos)

juniper@PE1> show route 192.168.20.3 active-path detail

[...]

Protocol next hop: 172.16.0.33

juniper@PE1> show route table inet.3 172.16.0.33

inet.3: 3 destinations, 3 routes (3 active, 0 holddown, 0 hidden)

+ = Active Route, - = Last Active, * = Both

172.16.0.33/32 *[RSVP/7/1] 05:01:26, metric 20

> to 10.0.0.3 via ge-2/0/4.0, label-switched-path PE1--->PE3

juniper@PE1> show route forwarding-table destination 192.168.20.3

Routing table: default.inet

Internet:

Destination Type Next hop Type Index NhRef Netif

192.168.20.3/32 user indr 1048576 2

10.0.0.3 Push 300560 595 2 ge-2/0/4.0

PE1 pushes an MPLS header with label 300560 and sends the packet to the forwarding next hop P1. Why label 300560? The answer is in Figure 2-5, Figure 2-6, and Example 2-40: because this is the label that P1 maps to the LSP PE1→PE3.
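The two-step lookup that PE1 performs (the BGP route first, then its protocol next hop in inet.3) can be sketched in Python with longest-prefix matching from the standard `ipaddress` module. The table contents come from Examples 2-39 and 2-40; the dictionary layout and the `resolve` function are hypothetical. A real router does not repeat this per packet: it precomputes the resolution into the forwarding table, as the indirect (indr) next hop in Example 2-39 suggests.

```python
import ipaddress
from typing import Dict, Tuple

# Hypothetical tables populated with values from Examples 2-39 and 2-40.
BGP_ROUTES: Dict[str, str] = {               # prefix -> protocol next hop
    "192.168.20.3/32": "172.16.0.33",
}
INET3: Dict[str, Tuple[int, str, str]] = {   # prefix -> (label, next hop, interface)
    "172.16.0.33/32": (300560, "10.0.0.3", "ge-2/0/4.0"),
}


def longest_match(table: Dict[str, object], address: str):
    """Return the entry of the most specific prefix covering address."""
    ip = ipaddress.ip_address(address)
    candidates = [ipaddress.ip_network(p) for p in table
                  if ip in ipaddress.ip_network(p)]
    if not candidates:
        raise LookupError(f"no route to {address}")
    best = max(candidates, key=lambda net: net.prefixlen)
    return table[str(best)]


def resolve(destination: str) -> Tuple[str, int, str, str]:
    """BGP route -> protocol next hop -> RSVP LSP in inet.3 -> push label."""
    protocol_next_hop = longest_match(BGP_ROUTES, destination)
    label, next_hop, interface = longest_match(INET3, protocol_next_hop)
    return ("push", label, next_hop, interface)


print(resolve("192.168.20.3"))   # ('push', 300560, '10.0.0.3', 'ge-2/0/4.0')
```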

Example 2-40. RSVP sessions at PE1 (Junos)

juniper@PE1> show rsvp session
Ingress RSVP: 3 sessions
To              From            State  Style  Labelin  Labelout  LSPname
172.16.0.22     172.16.0.11     Up     SE     -        3         PE1--->PE2
172.16.0.33     172.16.0.11     Up     FF     -        300560    PE1--->PE3
172.16.0.44     172.16.0.11     Up     SE     -        300256    PE1--->PE4
Total 3 displayed, Up 3, Down 0

Egress RSVP: 3 sessions
To              From            State  Style  Labelin  Labelout  LSPname
172.16.0.11     172.16.0.22     Up     SE     3        -         PE2--->PE1
172.16.0.11     172.16.0.44     Up     SE     3        -         PE4--->PE1
172.16.0.11     172.16.0.33     Up     FF     3        -         PE3--->PE1
Total 3 displayed, Up 3, Down 0

Transit RSVP: 2 sessions
To              From            State  Style  Labelin  Labelout  LSPname
172.16.0.22     172.16.0.33     Up     SE     299952   3         PE3--->PE2
172.16.0.33     172.16.0.22     Up     SE     299968   300144    PE2--->PE3
Total 2 displayed, Up 2, Down 0

juniper@PE1> show rsvp session name PE1--->PE3 detail
[...]
  PATH sentto: 10.0.0.3 (ge-2/0/4.0) 4226 pkts
  RESV rcvfrom: 10.0.0.3 (ge-2/0/4.0) 4235 pkts[...]
  Explct route: 10.0.0.3 10.0.0.9
  Record route: <self> 10.0.0.3 10.0.0.9

Note

The first two columns in the previous output are To and From. The order is important: first comes the tail-end of the LSP, then the head-end. This is not always intuitive, because the LSPs are signaled the other way around.

From the perspective of PE1, there are three types of RSVP sessions:

-

Ingress RSVP sessions correspond to LSPs originated at PE1 (head-end). They have PE1’s router ID in the second column (From).

-

Egress RSVP sessions correspond to LSPs that terminate at PE1 (tail-end). They have PE1’s router ID in the first column (To).

-

Transit RSVP sessions correspond to LSPs that go through PE1, but whose two endpoints are both outside PE1.
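The classification rule can be expressed in a few lines of Python. The rows are the To, From, and LSPname columns of Example 2-40, and 172.16.0.11 is PE1's router ID as shown there; the function name `classify` is hypothetical.

```python
from collections import Counter

# (To, From, LSPname) rows from Example 2-40
ROWS = [
    ("172.16.0.22", "172.16.0.11", "PE1--->PE2"),
    ("172.16.0.33", "172.16.0.11", "PE1--->PE3"),
    ("172.16.0.44", "172.16.0.11", "PE1--->PE4"),
    ("172.16.0.11", "172.16.0.22", "PE2--->PE1"),
    ("172.16.0.11", "172.16.0.44", "PE4--->PE1"),
    ("172.16.0.11", "172.16.0.33", "PE3--->PE1"),
    ("172.16.0.22", "172.16.0.33", "PE3--->PE2"),
    ("172.16.0.33", "172.16.0.22", "PE2--->PE3"),
]


def classify(to: str, frm: str, router_id: str) -> str:
    """To is the tail-end and From is the head-end of the LSP."""
    if frm == router_id:
        return "ingress"     # LSP originated here (head-end)
    if to == router_id:
        return "egress"      # LSP terminates here (tail-end)
    return "transit"         # both endpoints are elsewhere


counts = Counter(classify(to, frm, "172.16.0.11") for to, frm, _ in ROWS)
print(counts)
```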

The Style column can show two different values: Shared Explicit (SE) and Fixed Filter (FF). SE is the recommended mode because it makes sure that bandwidth reservations (if any) are not double counted when the old and new instances of an LSP share links, for example during reoptimization. It is the default in IOS XR and requires explicit configuration in Junos, as you can see in Example 2-32, line 4 (adaptive keyword).
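A deliberately simplified Python model shows why the style matters when the old and the new instance of the same LSP cross the same link. The 100-unit bandwidth values are hypothetical, and the model ignores everything except how each style counts reservations on that one link.

```python
from typing import Sequence


def reserved_on_link(style: str, requests: Sequence[float]) -> float:
    """Bandwidth held on one link by instances of the same RSVP session.

    FF (Fixed Filter): one distinct reservation per sender, so the
    requests add up. SE (Shared Explicit): a single reservation shared by
    the listed senders, so only the largest request is held.
    """
    if style == "FF":
        return sum(requests)
    if style == "SE":
        return max(requests, default=0)
    raise ValueError(f"unknown reservation style: {style}")


old_and_new = [100, 100]   # hypothetical: both LSP instances request 100 units
print(reserved_on_link("FF", old_and_new), reserved_on_link("SE", old_and_new))
```

With FF, the link appears to carry twice the bandwidth that the LSP actually needs while both instances exist, which can make the new instance fail admission control on a tight link.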

Now, let’s see how to interpret the Labelin and Labelout columns:

-

If PE1 needs to send a packet through LSP PE1→PE3, PE1 pushes label 300560 onto the packet before sending it out to the next hop.

-

If PE1 receives an incoming packet with outermost label 299968, PE1 maps the packet to LSP PE2→PE3 and swaps its label to 300144.

-

If PE1 receives an incoming packet with outermost label 299952, PE1 maps the packet to LSP PE3→PE2 and pops the label.
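This forwarding-plane reading of Labelin and Labelout can be sketched as a tiny label table built from the transit rows of Example 2-40. The function names are hypothetical; the only rules encoded are the ones above: the Labelin value selects the LSP, and a Labelout of 3 (implicit null) means pop instead of swap.

```python
from typing import Dict, List, Tuple

IMPLICIT_NULL = 3

# (Labelin, Labelout, LSPname) transit rows from Example 2-40 at PE1
TRANSIT_ROWS = [
    (299952, 3, "PE3--->PE2"),
    (299968, 300144, "PE2--->PE3"),
]


def build_label_table(rows) -> Dict[int, Tuple[str, int]]:
    """Map each incoming label to (LSP name, outgoing label)."""
    return {label_in: (lsp, label_out) for label_in, label_out, lsp in rows}


def forward(table: Dict[int, Tuple[str, int]],
            stack: List[int]) -> Tuple[str, List[int]]:
    """Process the outermost label of stack (outermost label first)."""
    lsp, label_out = table[stack[0]]
    if label_out == IMPLICIT_NULL:
        return lsp, stack[1:]                 # pop: expose the next header
    return lsp, [label_out] + stack[1:]       # swap the outermost label


table = build_label_table(TRANSIT_ROWS)
print(forward(table, [299968]))   # ('PE2--->PE3', [300144])
print(forward(table, [299952]))   # ('PE3--->PE2', [])
```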

As you can see, RSVP’s Labelin and Labelout are forwarding-plane concepts. MPLS data packets are received by using Labelin and sent by using Labelout. In this sense, show rsvp session and show ldp database have opposite interpretations of what input and output mean. Indeed, LDP’s input and output label databases contain labels learned and advertised, respectively. But MPLS packets flow in the reverse direction!

Back to RSVP: let’s compare two similar (but not identical) commands in Junos.

Example 2-41. RSVP session versus MPLS LSP (Junos)

juniper@PE1> show rsvp session ingress name PE1--->PE3
Ingress RSVP: 3 sessions
To              From            State  Style  Labelin  Labelout  LSPname
172.16.0.33     172.16.0.11     Up     FF     -        300560    PE1--->PE3

juniper@PE1> show mpls lsp ingress name PE1--->PE3
Ingress LSP: 3 sessions
To              From            State  P  ActivePath  LSPname
172.16.0.33     172.16.0.11     Up     *              PE1--->PE3
Total 1 displayed, Up 1, Down 0

If the LSP is up and stable, the first command provides more information (namely, the labels). However, the second command is very useful in other situations: for example, if the LSP cannot be established due to a CSPF failure (no RSVP session), if the LSP is being reoptimized, or if it has path protection (two RSVP sessions for the same LSP). The two commands are complementary.

Note

You can see the Tunnel ID by looking at the port number in the show rsvp session extensive output.

Let’s move on to P1, a pure LSR or P-router (Example 2-42).

Example 2-42. RSVP signaling and MPLS forwarding at P1 (Junos)

juniper@P1> show rsvp session transit name PE1--->PE3
Transit RSVP: 6 sessions
To              From            State  Style  Labelin  Labelout  LSPname
172.16.0.33     172.16.0.11     Up     FF     300560   3         PE1--->PE3

juniper@P1> show route forwarding-table label 300560 table default
Routing table: default.mpls
MPLS:
Destination    Type  RtRef  Next hop  Index  NhRef  Netif
300560         user  0      10.0.0.9  Pop    586    2   ge-2/0/6.0
300560(S=0)    user  0      10.0.0.9  Pop    588    2   ge-2/0/6.0

The forwarding table has two routes for label 300560, one for each value of the Bottom of Stack (BoS) bit in the external MPLS header. Which one is relevant for the CE1-to-BR3 traceroute packets? These arrive at P1 with just one MPLS label. In single-label stacks, the Top of Stack (ToS) label is at the same time the BoS label, so the BoS bit is set to 1 (S=1) and the first route applies.
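The S bit lives in each 32-bit label stack entry, whose layout (20-bit label, 3-bit Traffic Class shown as CoS in the traceroute output, 1-bit S, 8-bit TTL) is defined in RFC 3032 and RFC 5462. The Python sketch below encodes the entry that traceroute reported at P1 in Example 2-38 and shows that only the innermost entry of a stack has S=1. The function names and the default TTL of 64 are hypothetical.

```python
import struct
from typing import Dict, List


def encode_lse(label: int, tc: int, s: int, ttl: int) -> bytes:
    """Pack one label stack entry: Label(20) | TC(3) | S(1) | TTL(8)."""
    if not (0 <= label < 2**20 and 0 <= tc < 8 and s in (0, 1) and 0 <= ttl < 256):
        raise ValueError("field out of range")
    return struct.pack("!I", (label << 12) | (tc << 9) | (s << 8) | ttl)


def decode_lse(entry: bytes) -> Dict[str, int]:
    """Unpack the first 4 bytes of entry into its four fields."""
    (word,) = struct.unpack("!I", entry[:4])
    return {"label": word >> 12, "tc": (word >> 9) & 0x7,
            "s": (word >> 8) & 0x1, "ttl": word & 0xFF}


def encode_stack(labels: List[int], tc: int = 0, ttl: int = 64) -> bytes:
    """Encode a stack (outermost first); only the last entry gets S=1."""
    return b"".join(encode_lse(label, tc, int(i == len(labels) - 1), ttl)
                    for i, label in enumerate(labels))


# The entry P1 received: a single label, so ToS is also BoS (S=1).
print(decode_lse(encode_lse(300560, 0, 1, 1)))
```

A forwarding lookup keyed on the pair (label, S) would then select either the first or the second route of the P1 forwarding table.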

As you saw in the LDP section, label 3 is a reserved label value called implicit null, and it translates into popping the label. So the IPv4 packet arrives unlabeled at PE3, and PE3 has the BGP route to reach BR3.

Let’s wrap up by looking at an RSVP-TE LSP traceroute.

Example 2-43. MPLS RSVP-TE traceroute from PE1 to PE3 (Junos)

juniper@PE1> traceroute mpls rsvp PE1--->PE3

Probe options: retries 3, exp 7

ttl Label Protocol Address Previous Hop Probe Status

1 300560 RSVP-TE 10.0.0.3 (null) Success

FEC-Stack-Sent: RSVP

ttl Label Protocol Address Previous Hop Probe Status

2 3 RSVP-TE 10.0.0.9 10.0.0.3 Egress

FEC-Stack-Sent: RSVP

Path 1 via ge-2/0/4.0 destination 127.0.0.64

RSVP-TE signaling and MPLS forwarding in the IOS XR plane

Example 2-44 is an end-to-end traceroute from CE2 to BR4 that goes through the IOS XR plane (PE2, P2, PE4).

Example 2-44. Traceroute through the IOS XR plane

juniper@CE2> traceroute 192.168.20.4 source 192.168.10.2

traceroute to 192.168.20.4 (192.168.20.4) from 192.168.10.2 [...]

1 PE2 (10.1.0.3) 2.833 ms 3.041 ms 2.441 ms

2 P2 (10.0.0.5) 10.465 ms 8.480 ms 9.311 ms

MPLS Label=24008 CoS=0 TTL=1 S=1

3 PE4 (10.0.0.11) 8.461 ms 8.757 ms 7.982 ms

4 BR4 (192.168.20.4) 9.109 ms 10.427 ms 9.248 ms

PE2 has a BGP route toward BR4’s loopback, and the BGP next hop of this route is PE4. The key here is the CEF entry for 172.16.0.44/32. Let’s have a look at it.

Example 2-45. MPLS forwarding at ingress PE2 (IOS XR)

1 RP/0/0/CPU0:PE2#show cef 172.16.0.44

2 172.16.0.44/32, version 91, internal [...]

3 local adjacency 10.0.0.5

4 Prefix Len 32, traffic index 0, precedence n/a, priority 1

5 via 172.16.0.44, tunnel-te44, 4 dependencies [...]

6 path-idx 0 NHID 0x0 [0xa0db3250 0x0]

7 next hop 172.16.0.44

8 local adjacency

9 local label 24016 labels imposed {ImplNull}

The label operation for this LSP is to push a real label, not implicit null, yet the real label does not show in line 9. In fact, seeing ImplNull there is a sign that everything is OK.

What is tunnel-te44? This is an explicitly configured interface, and it pushes an MPLS label with a value (24008) that matches traceroute’s output, as shown in Example 2-44 and in Example 2-46 (line 7):

Example 2-46. RSVP-TE LSP at PE2 (IOS XR)

1 RP/0/0/CPU0:PE2#show mpls traffic-eng tunnels name tunnel-te44 detail
2 Name: tunnel-te44 Destination: 172.16.0.44 Ifhandle:0x580
3 Signalled-Name: PE2--->PE4
4 Status:
5 Admin: up Oper: up Path: valid Signalling: connected
6 [...]
7 Outgoing Interface: GigabitEthernet0/0/0/3, Outgoing Label: 24008
8 Path Info:
9 Outgoing:
10 Explicit Route:
11 Strict, 10.0.0.5
12 Strict, 10.0.0.11
13 Strict, 172.16.0.44
14 Resv Info:
15 Record Route:
16 IPv4 10.0.0.5, flags 0x0
17 IPv4 10.0.0.11, flags 0x0
18
19 RP/0/0/CPU0:PE2#show rsvp session tunnel-name PE2--->PE4
20 Type Destination     Add   DPort Proto/ExtTunID  PSBs  RSBs  Reqs
21 ---- --------------- ----- --------------- ----- ----- -----
22 LSP4 172.16.0.44     44    172.16.0.22     1     1     0

Now, let’s look at the RSVP-TE session and forwarding entries on P2, the next hop LSR.

Example 2-47. RSVP signaling and MPLS forwarding at P2 (IOS XR)

RP/0/0/CPU0:P2#show rsvp session tunnel-name PE2--->PE4 detail

SESSION: IPv4-LSP Addr: 172.16.0.44, TunID: 44, ExtID: 172.16.0.22

Tunnel Name: PE2--->PE4 [...]

RSVP Path Info:

InLabel: GigabitEthernet0/0/0/0, 24008

Incoming Address: 10.0.0.5

Explicit Route:

Strict, 10.0.0.5/32

Strict, 10.0.0.11/32

Strict, 172.16.0.44/32

Record Route:

IPv4 10.0.0.4, flags 0x0

Tspec: avg rate=0, burst=1K, peak rate=0

RSVP Resv Info:

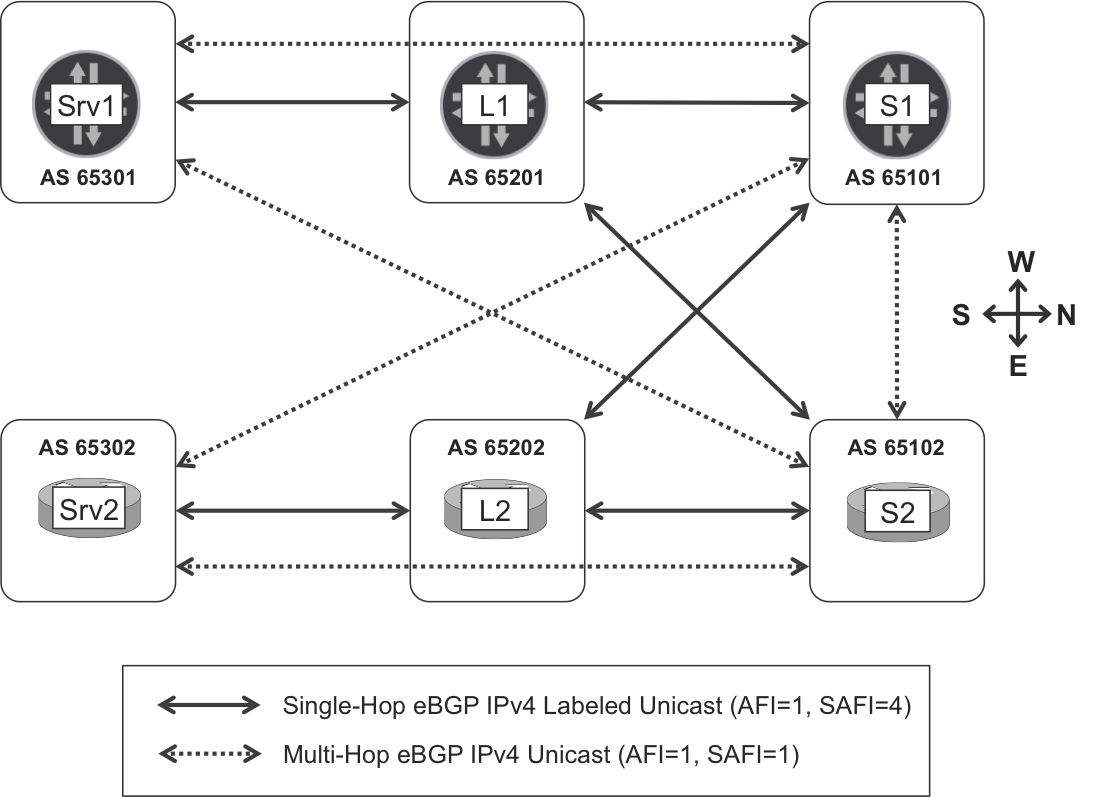

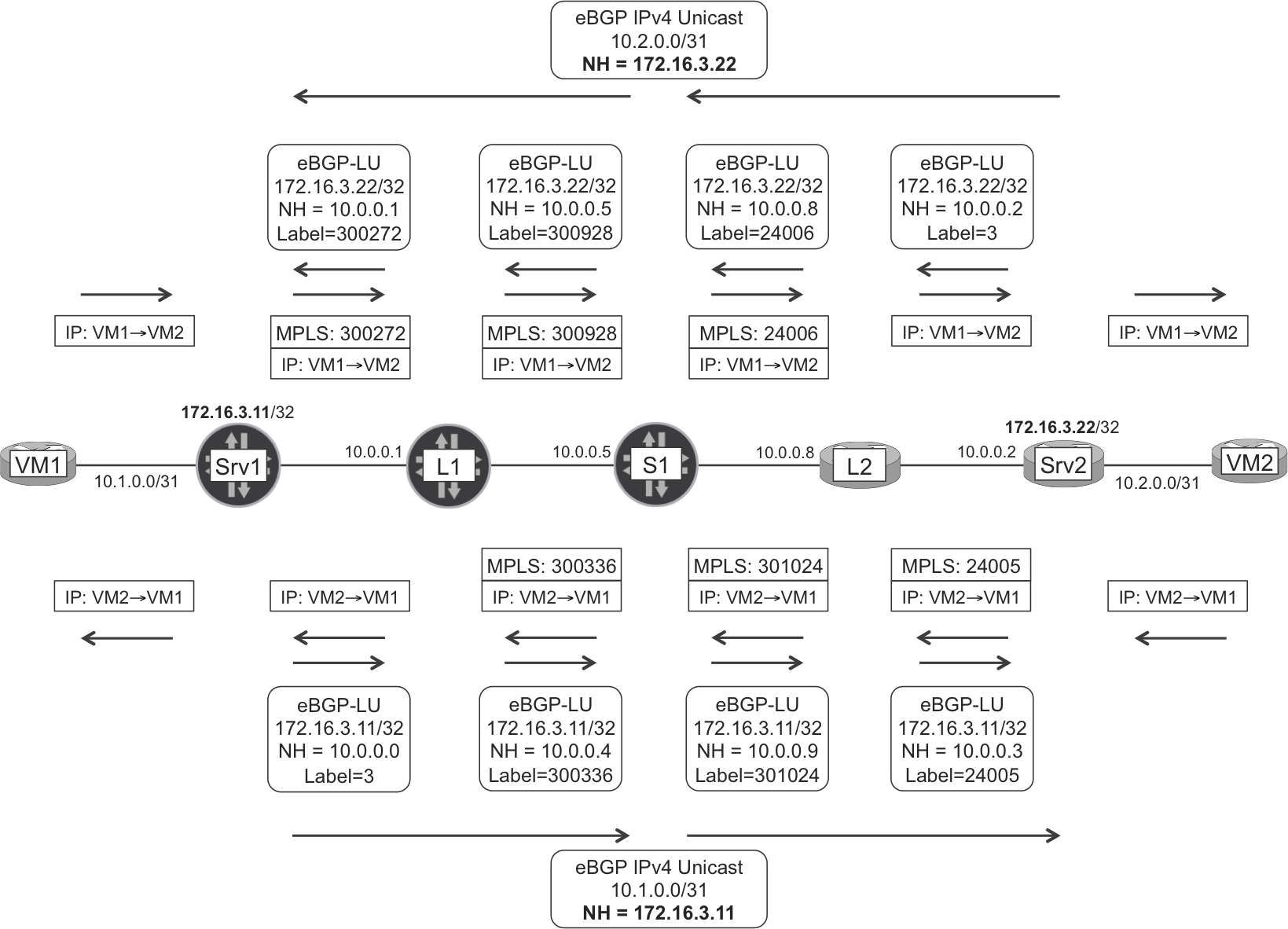

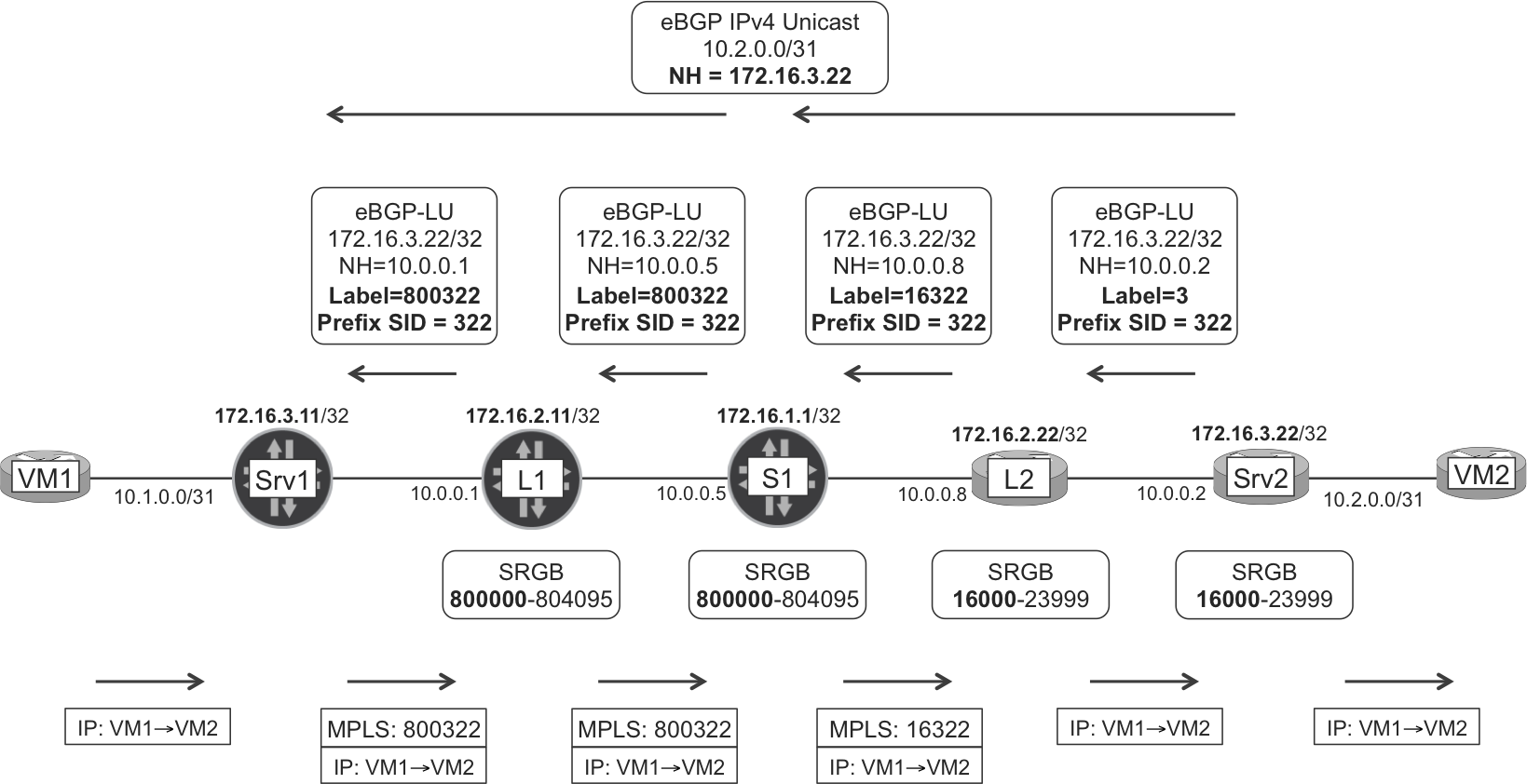

OutLabel: GigabitEthernet0/0/0/5, 3