A fast test suite avoids connecting to remote systems such as databases and LDAP servers. Verifying only the core units and avoiding external systems can result in a faster-running test suite that still covers a large part of the system. This can lead to a useful smoke test that gives developers confidence in the system without running all the tests.

With these steps, we will see how to cut out test cases that interact with external systems.

- Create a test module called `recipe60_test.py` for writing some tests against our network application.

```python
import logging
from network import *
import unittest
from springpython.database.factory import *
from springpython.database.core import *
```

- Create a test case that removes the database connection and stubs out the data access functions.

```python
class EventCorrelatorUnitTests(unittest.TestCase):
    def setUp(self):
        db_name = "recipe60.db"
        factory = Sqlite3ConnectionFactory(db=db_name)
        self.correlator = EventCorrelator(factory)

        # We "unplug" the DatabaseTemplate so that
        # we don't talk to a real database.
        self.correlator.dt = None

        # Instead, we create a dictionary of
        # canned data to return back.
        self.return_values = {}

        # For each sub-function of the network app,
        # we replace it with a stub which returns our
        # canned data.
        def stub_store_event(event):
            event.id = self.return_values["id"]
            return event, self.return_values["active"]
        self.correlator.store_event = stub_store_event

        def stub_impact(event):
            return (self.return_values["services"],
                    self.return_values["equipment"])
        self.correlator.impact = stub_impact

        def stub_update_service(service, event):
            return service + " updated"
        self.correlator.update_service = stub_update_service

        def stub_update_equip(equip, event):
            return equip + " updated"
        self.correlator.update_equipment = stub_update_equip
```

- Create a test method that creates a set of canned data values, invokes the application's process method, and then verifies the values.

```python
    def test_process_events(self):
        # For this test case, we can preload the canned data,
        # and verify that our process function is working
        # as expected without touching the database.
        self.return_values["id"] = 4668
        self.return_values["active"] = True
        self.return_values["services"] = ["service1", "service2"]
        self.return_values["equipment"] = ["device1"]

        evt1 = Event("pyhost1", "serverRestart", 5)

        stored_event, is_active, updated_services, updated_equipment = \
            self.correlator.process(evt1)

        self.assertEqual(4668, stored_event.id)
        self.assertTrue(is_active)
        self.assertEqual(2, len(updated_services))
        self.assertEqual(1, len(updated_equipment))
```

- Create another test case that preloads the database using a SQL script (see Chapter 7, Building a network management application, for details about the SQL script).

```python
class EventCorrelatorIntegrationTests(unittest.TestCase):
    def setUp(self):
        db_name = "recipe60.db"
        factory = Sqlite3ConnectionFactory(db=db_name)
        self.correlator = EventCorrelator(factory)

        # Preload the database by running each statement
        # of the Chapter 7 SQL script.
        dt = DatabaseTemplate(factory)
        with open("network.sql") as sql_file:
            sql = sql_file.read().split(";")
        for statement in sql:
            # Skip the empty fragment left after the final ";"
            if statement.strip():
                dt.execute(statement + ";")
```

- Write a test method that calls the network application's process method, and then prints out the results.

def test_process_events(self): evt1 = Event("pyhost1", "serverRestart", 5) stored_event, is_active, updated_services, updated_equipment = self.correlator.process(evt1) print "Stored event: %s" % stored_event if is_active: print "This event was an active event." print "Updated services: %s" % updated_services print "Updated equipment: %s" % updated_equipment print "---------------------------------" - Create a module called

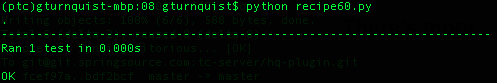

recipe60.pythat only imports the unit test that avoids making SQL calls.from recipe60_test import EventCorrelatorUnitTests if __name__ == "__main__": import unittest unittest.main() - Run the test module.
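The `network` module from Chapter 7 is not reproduced here, so the steps above cannot be run on their own. The following is a minimal, self-contained sketch of the same stubbing technique. It assumes a hypothetical `FakeCorrelator` whose `process` method calls `store_event`, `impact`, `update_service`, and `update_equipment` in roughly the way the recipe's `EventCorrelator` appears to; `FakeCorrelator`, its method bodies, and the `Event` stand-in are illustrative assumptions, not the Chapter 7 code.

```python
import unittest


class Event(object):
    """Hypothetical stand-in for network.Event."""
    def __init__(self, hostname, condition, severity):
        self.hostname = hostname
        self.condition = condition
        self.severity = severity
        self.id = None


class FakeCorrelator(object):
    """Hypothetical correlator; each data-access method raises to
    simulate code that would touch a real database."""
    def __init__(self, dt):
        self.dt = dt

    def store_event(self, event):
        raise RuntimeError("would hit the database")

    def impact(self, event):
        raise RuntimeError("would hit the database")

    def update_service(self, service, event):
        raise RuntimeError("would hit the database")

    def update_equipment(self, equip, event):
        raise RuntimeError("would hit the database")

    def process(self, event):
        # Assumed orchestration: store, look up impact, update each item.
        stored_event, is_active = self.store_event(event)
        services, equipment = self.impact(event)
        updated_services = [self.update_service(s, event) for s in services]
        updated_equipment = [self.update_equipment(e, event) for e in equipment]
        return stored_event, is_active, updated_services, updated_equipment


class StubbedCorrelatorTest(unittest.TestCase):
    def setUp(self):
        # Unplug the data access layer entirely.
        self.correlator = FakeCorrelator(dt=None)
        self.return_values = {}

        def stub_store_event(event):
            event.id = self.return_values["id"]
            return event, self.return_values["active"]

        # Instance attributes shadow the class methods, so process()
        # calls these stubs (without a bound self) instead of the
        # database-backed versions.
        self.correlator.store_event = stub_store_event
        self.correlator.impact = lambda event: (
            self.return_values["services"], self.return_values["equipment"])
        self.correlator.update_service = lambda s, event: s + " updated"
        self.correlator.update_equipment = lambda e, event: e + " updated"

    def test_process_events(self):
        self.return_values.update(id=4668, active=True,
                                  services=["service1", "service2"],
                                  equipment=["device1"])
        stored, active, services, equipment = self.correlator.process(
            Event("pyhost1", "serverRestart", 5))
        self.assertEqual(4668, stored.id)
        self.assertTrue(active)
        self.assertEqual(["service1 updated", "service2 updated"], services)
        self.assertEqual(["device1 updated"], equipment)
```

Saved as a module, this can be run with `python -m unittest <module_name>`. Assigning plain functions to instance attributes is the same tactic the recipe uses; it needs no mocking library, at the cost of having to restore nothing because each test builds a fresh correlator in `setUp`.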

This test suite exercises the unit tests and avoids running the test case that integrates with a live database. It uses Python import statements to decide which test cases to include.
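The selection works because `unittest.main()` loads tests from the `__main__` module's namespace: any `TestCase` subclass bound to a name there, including one pulled in with `from ... import`, is collected, and anything that was not imported is never seen. The sketch below mimics what happens in `recipe60.py` by calling `TestLoader.loadTestsFromModule` on a throwaway module object; the class names and the module name `smoke` are illustrative only.

```python
import types
import unittest


class FastUnitTests(unittest.TestCase):
    def test_fast(self):
        self.assertEqual(4, 2 + 2)


class SlowIntegrationTests(unittest.TestCase):
    def test_slow(self):
        self.assertTrue(True)  # imagine a live database call here


def build_smoke_module():
    # Stands in for recipe60.py: only the names we "import" exist here.
    smoke = types.ModuleType("smoke")
    smoke.FastUnitTests = FastUnitTests
    return smoke


loader = unittest.TestLoader()
suite = loader.loadTestsFromModule(build_smoke_module())
print(suite.countTestCases())  # 1: only FastUnitTests was collected
```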

In our contrived scenario, there is little performance gain. But in a real project, far more computer cycles are probably spent on integration testing due to the extra cost of talking to external systems.

The idea is to create a subset of tests that verify to some degree that our application works by covering a big chunk of it in a smaller amount of time.

The trick with smoke testing is deciding what is a good enough test. Automated testing cannot completely confirm that our application has no bugs. When a test suite passes, we cannot tell whether a particular bug doesn't exist or whether we simply haven't written a test case that exposes it. To engage in smoke testing, we decide to use a subset of these tests for a quick pulse read. Again, deciding which subset gives us a good enough pulse may be more art than science.

This recipe focuses on the idea that unit tests will probably run quicker, and that cutting out the integration tests will remove the slower test cases. If all the unit tests pass, then there is some confidence that our application is in good shape.

I must point out that test cases don't always fall neatly into the category of unit test or integration test. It is more of a continuum. In this recipe's sample code, we wrote one unit test and one integration test, and picked the unit test for our smoke test suite.

Does this appear arbitrary and perhaps contrived? Sure it does. That is why smoke testing isn't cut-and-dried, but instead requires some analysis and judgment about what to pick. And as development proceeds, there is room for fine-tuning.

Tip

I once developed a system that ingested invoices from different suppliers. I wrote unit tests that set up empty database tables, ingested files of many formats, and then examined the contents of the database to verify processing. The test suite took over 45 minutes to run. This discouraged me from running the test suite as often as I wanted. I crafted a smoke test suite that involved only running the unit tests that did NOT talk to the database (since they were quick) combined with ingesting one supplier invoice. It ran in less than five minutes, and provided a quicker means to assure myself that fundamental changes to the code did not break the entire system. I could run this many times during the day, and only run the comprehensive suite about once a day.

Does this code appear very similar to that shown in the recipe Defining a subset of test cases using import statements? Yes it does. So why include it in this recipe? Because what is picked for the smoke test suite is just as critical as the tactics used to make it happen. The other recipe decided to pick up an integration test while cutting out the unit tests to create a smaller, faster running test suite.

This recipe shows that another possibility is to cut out the lengthier integration tests and instead run as many unit tests as possible, since they are probably faster.

As stated earlier, smoke testing isn't cut-and-dried. It involves picking the best representation of tests without taking up too much time in running them. It is quite possible that none of the tests written up to this point precisely targets the idea of capturing the pulse of the system. A good smoke test suite may involve mixing together a subset of unit and integration tests.
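One way to mix the two kinds of tests is to assemble a `unittest.TestSuite` by hand: load every test from the fast unit-test classes, then add just one representative integration test method by name, much like the tip's combination of database-free unit tests plus a single supplier-invoice ingest. This is a sketch of an alternative tactic, not the recipe's import-based approach; `ParserUnitTests`, `IngestIntegrationTests`, and their methods are hypothetical placeholders.

```python
import unittest


class ParserUnitTests(unittest.TestCase):
    """Hypothetical fast tests that never touch external systems."""
    def test_parse_header(self):
        self.assertEqual("ACME", "acme".upper())

    def test_parse_total(self):
        self.assertEqual(10.5, float("10.5"))


class IngestIntegrationTests(unittest.TestCase):
    """Hypothetical slow tests that would talk to a real database."""
    def test_ingest_supplier_a(self):
        self.assertTrue(True)  # placeholder for one representative ingest

    def test_ingest_supplier_b(self):
        self.assertTrue(True)

    def test_ingest_supplier_c(self):
        self.assertTrue(True)


def smoke_suite():
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    # All of the quick unit tests...
    suite.addTests(loader.loadTestsFromTestCase(ParserUnitTests))
    # ...plus a single integration test chosen as a pulse check.
    suite.addTest(IngestIntegrationTests("test_ingest_supplier_a"))
    return suite


if __name__ == "__main__":
    unittest.TextTestRunner(verbosity=2).run(smoke_suite())
```

Naming the integration test explicitly keeps the choice visible in one place, which makes it easy to revisit as the judgment about what belongs in the smoke suite changes.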

- Chapter 7, Building a network management application

- Defining a subset of test cases using import statements