In this recipe we will create an interactive GUI controlled with a Kinect sensor.

This example uses the InteractiveObject class that we covered in the recipe Creating an interactive object that responds to the mouse from Chapter 10, Interacting with the User.

Download and install the Kinect for Windows SDK from http://www.microsoft.com/en-us/kinectforwindows/.

Download the KinectSDK CinderBlock from GitHub at https://github.com/BanTheRewind/Cinder-KinectSdk, and unpack it to the blocks directory.

We will now create a Cinder application controlled with hand gestures.

- Include the necessary header files:

#include "cinder/Rand.h"
#include "cinder/gl/Texture.h"
#include "cinder/Utilities.h"
#include "Kinect.h"
#include "InteractiveObject.h"

- Add the using statement for the Kinect SDK namespace:

using namespace KinectSdk;

- Implement a class that recognizes a hand-waving gesture as follows:

class WaveHandGesture {
public:
    enum GestureCheckResult { Fail, Pausing, Suceed };
private:
    GestureCheckResult checkStateLeft( const Skeleton & skeleton ) {
        // hand above elbow
        if (skeleton.at(JointName::NUI_SKELETON_POSITION_HAND_RIGHT).y > skeleton.at(JointName::NUI_SKELETON_POSITION_ELBOW_RIGHT).y) {
            // hand right of elbow
            if (skeleton.at(JointName::NUI_SKELETON_POSITION_HAND_RIGHT).x > skeleton.at(JointName::NUI_SKELETON_POSITION_ELBOW_RIGHT).x) {
                return Suceed;
            }
            return Pausing;
        }
        return Fail;
    }
    GestureCheckResult checkStateRight( const Skeleton & skeleton ) {
        // hand above elbow
        if (skeleton.at(JointName::NUI_SKELETON_POSITION_HAND_RIGHT).y > skeleton.at(JointName::NUI_SKELETON_POSITION_ELBOW_RIGHT).y) {
            // hand left of elbow
            if (skeleton.at(JointName::NUI_SKELETON_POSITION_HAND_RIGHT).x < skeleton.at(JointName::NUI_SKELETON_POSITION_ELBOW_RIGHT).x) {
                return Suceed;
            }
            return Pausing;
        }
        return Fail;
    }
    int currentPhase;
public:
    WaveHandGesture() {
        currentPhase = 0;
    }
    GestureCheckResult check( const Skeleton & skeleton ) {
        GestureCheckResult res;
        switch(currentPhase) {
        case 0: // start on left
        case 2:
            res = checkStateLeft(skeleton);
            if( res == Suceed ) {
                currentPhase++;
            } else if( res == Fail ) {
                currentPhase = 0;
                return Fail;
            }
            return Pausing;
            break;
        case 1: // to the right
        case 3:
            res = checkStateRight(skeleton);
            if( res == Suceed ) {
                currentPhase++;
            } else if( res == Fail ) {
                currentPhase = 0;
                return Fail;
            }
            return Pausing;
            break;
        case 4: // to the left
            res = checkStateLeft(skeleton);
            if( res == Suceed ) {
                currentPhase = 0;
                return Suceed;
            } else if( res == Fail ) {
                currentPhase = 0;
                return Fail;
            }
            return Pausing;
            break;
        }
        return Fail;
    }
};

- Implement NuiInteractiveObject extending the InteractiveObject class:

class NuiInteractiveObject: public InteractiveObject {
public:
    NuiInteractiveObject(const Rectf & rect) : InteractiveObject(rect) {
        mHilight = 0.0f;
    }
    void update(bool activated, const Vec2f & cursorPos) {
        // brighten quickly under the cursor, fade slowly otherwise
        if(activated && rect.contains(cursorPos)) {
            mHilight += 0.08f;
        } else {
            mHilight -= 0.005f;
        }
        mHilight = math<float>::clamp(mHilight);
    }
    virtual void draw() {
        gl::color(0.f, 0.f, 1.f, 0.3f+0.7f*mHilight);
        gl::drawSolidRect(rect);
    }
    float mHilight;
};

- Implement the NuiController class that manages the active objects:

class NuiController {
public:
    NuiController() {}
    void registerObject(NuiInteractiveObject *object) {
        objects.push_back( object );
    }
    void unregisterObject(NuiInteractiveObject *object) {
        vector<NuiInteractiveObject*>::iterator it = find(objects.begin(), objects.end(), object);
        // erase only if the object was actually registered
        if( it != objects.end() ) {
            objects.erase( it );
        }
    }
    void clear() {
        objects.clear();
    }
    void update(bool activated, const Vec2f & cursorPos) {
        vector<NuiInteractiveObject*>::iterator it;
        for(it = objects.begin(); it != objects.end(); ++it) {
            (*it)->update(activated, cursorPos);
        }
    }
    void draw() {
        vector<NuiInteractiveObject*>::iterator it;
        for(it = objects.begin(); it != objects.end(); ++it) {
            (*it)->draw();
        }
    }
    vector<NuiInteractiveObject*> objects;
};

- Add the members to your main application class for handling Kinect devices and data:

KinectSdk::KinectRef mKinect;
vector<KinectSdk::Skeleton> mSkeletons;
gl::Texture mVideoTexture;

- Add members to store the calculated cursor position:

Rectf mPIZ;
Vec2f mCursorPos;

- Add the members that we will use for gesture recognition and user activation:

vector<WaveHandGesture*> mGestureControllers;
bool mUserActivated;
int mActiveUser;

- Add a member to handle NuiController:

NuiController* mNuiController;

- Set window settings by implementing prepareSettings:

void MainApp::prepareSettings(Settings* settings) {
    settings->setWindowSize(800, 600);
}

- In the setup method, set the default values for members:

mPIZ = Rectf(0.f,0.f, 0.85f,0.5f);
mCursorPos = Vec2f::zero();
mUserActivated = false;
mActiveUser = 0;

- In the setup method, initialize Kinect and gesture recognition for 10 users:

mKinect = Kinect::create();
mKinect->enableDepth( false );
mKinect->enableVideo( false );
mKinect->start();
for(int i = 0; i < 10; i++) {
    mGestureControllers.push_back( new WaveHandGesture() );
}

- In the setup method, initialize the user interface consisting of objects of type NuiInteractiveObject:

mNuiController = new NuiController();
float cols = 10.f;
float rows = 10.f;
Rectf rect = Rectf(0.f,0.f, getWindowWidth()/cols - 1.f, getWindowHeight()/rows - 1.f);
for(int ir = 0; ir < rows; ir++) {
    for(int ic = 0; ic < cols; ic++) {
        Vec2f offset = (rect.getSize()+Vec2f::one()) * Vec2f(ic,ir);
        Rectf r = Rectf( offset, offset+rect.getSize() );
        mNuiController->registerObject( new NuiInteractiveObject(r) );
    }
}

- In the update method, we check whether the Kinect device is capturing, get the tracked skeletons, and iterate over them:

if ( mKinect->isCapturing() ) {
    if ( mKinect->checkNewSkeletons() ) {
        mSkeletons = mKinect->getSkeletons();
    }
    uint32_t i = 0;
    vector<Skeleton>::const_iterator skeletonIt;
    for (skeletonIt = mSkeletons.cbegin(); skeletonIt != mSkeletons.cend(); ++skeletonIt, i++ ) {

- Inside the loop, we check whether the skeleton is complete and deactivate the cursor controls if it is not:

if(mUserActivated && i == mActiveUser && skeletonIt->size() != JointName::NUI_SKELETON_POSITION_COUNT ) {
    mUserActivated = false;
}

- Inside the loop, check whether the skeleton is valid. Notice that we process only 10 skeletons. You can change this number, but remember to provide a sufficient number of gesture controllers in mGestureControllers:

if ( skeletonIt->size() == JointName::NUI_SKELETON_POSITION_COUNT && i < 10 ) {

- Inside the loop and the if statement, check for a completed activation gesture. When a skeleton becomes activated, we calculate the person interaction zone from the length of the user's right arm:

if( !mUserActivated || ( mUserActivated && i != mActiveUser ) ) {
    WaveHandGesture::GestureCheckResult res;
    res = mGestureControllers[i]->check( *skeletonIt );
    if( res == WaveHandGesture::Suceed && ( !mUserActivated || i != mActiveUser ) ) {
        mActiveUser = i;
        float armLen = 0;
        Vec3f handRight = skeletonIt->at(JointName::NUI_SKELETON_POSITION_HAND_RIGHT);
        Vec3f elbowRight = skeletonIt->at(JointName::NUI_SKELETON_POSITION_ELBOW_RIGHT);
        Vec3f shoulderRight = skeletonIt->at(JointName::NUI_SKELETON_POSITION_SHOULDER_RIGHT);
        armLen += handRight.distance( elbowRight );
        armLen += elbowRight.distance( shoulderRight );
        mPIZ.x2 = armLen;
        mPIZ.y2 = mPIZ.getWidth() / getWindowAspectRatio();
        mUserActivated = true;
    }
}

- Inside the loop and the if statement, we calculate the cursor position for the active user:

if(mUserActivated && i == mActiveUser) {
    Vec3f handPos = skeletonIt->at(JointName::NUI_SKELETON_POSITION_HAND_RIGHT);
    Rectf piz = Rectf(mPIZ);
    piz.offset( skeletonIt->at(JointName::NUI_SKELETON_POSITION_SPINE).xy() );
    mCursorPos = handPos.xy() - piz.getUpperLeft();
    mCursorPos /= piz.getSize();
    mCursorPos.y = (1.f - mCursorPos.y);
    mCursorPos *= getWindowSize();
}

- Close the opened if statements and the for loop:

        }
    }
}

- At the end of the update method, update the NuiController object:

mNuiController->update(mUserActivated, mCursorPos);

- Implement the

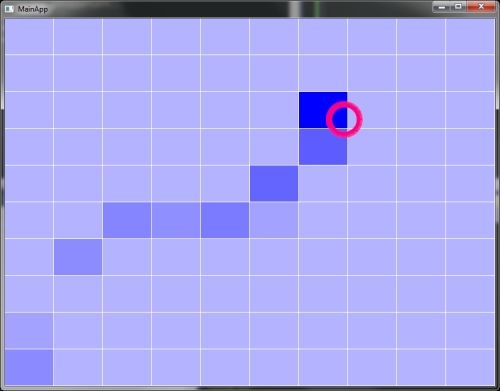

drawmethod as follows:void MainApp::draw() { // Clear window gl::setViewport( getWindowBounds() ); gl::clear( Color::white() ); gl::setMatricesWindow( getWindowSize() ); gl::enableAlphaBlending(); mNuiController->draw(); if(mUserActivated) { gl::color(1.f,0.f,0.5f, 1.f); glLineWidth(10.f); gl::drawStrokedCircle(mCursorPos, 25.f); } }
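The five-phase wave recognizer from the steps above can be tried out without a sensor. The following is a minimal sketch, not the recipe's class: ArmPose, WaveSketch, and feedAll are hypothetical names introduced here, and the pose struct stands in for the hand and elbow joints that the Kinect skeleton would supply. It follows the same phase logic: even phases expect the hand on one side of the elbow, odd phases on the other, and a hand dropping below the elbow resets the gesture.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for the two joints the recognizer reads.
struct ArmPose {
    float handX, handY;
    float elbowX, elbowY;
};

enum class GestureResult { Fail, Pausing, Succeed };

// Sensor-free sketch of the five-phase wave state machine.
class WaveSketch {
public:
    GestureResult check(const ArmPose& pose) {
        // Phases 0, 2, 4 expect hand.x > elbow.x; phases 1, 3 expect the opposite.
        const bool expectGreater = (mPhase % 2 == 0);
        const GestureResult res = checkSide(pose, expectGreater);
        if (res == GestureResult::Fail) {
            mPhase = 0;                      // hand dropped: start over
            return GestureResult::Fail;
        }
        if (res == GestureResult::Succeed) {
            if (mPhase == 4) {               // last swing completed
                mPhase = 0;
                return GestureResult::Succeed;
            }
            ++mPhase;                        // advance to the next swing
        }
        return GestureResult::Pausing;
    }
    int phase() const { return mPhase; }

private:
    static GestureResult checkSide(const ArmPose& p, bool expectGreater) {
        if (p.handY <= p.elbowY) return GestureResult::Fail;   // hand not above elbow
        const bool onSide = expectGreater ? (p.handX > p.elbowX)
                                          : (p.handX < p.elbowX);
        return onSide ? GestureResult::Succeed : GestureResult::Pausing;
    }
    int mPhase = 0;
};

// Feeds a sequence of poses and returns the result of the last check.
GestureResult feedAll(WaveSketch& gesture, const std::vector<ArmPose>& poses) {
    GestureResult last = GestureResult::Fail;
    for (const ArmPose& p : poses) last = gesture.check(p);
    return last;
}
```

Feeding five alternating poses with the hand above the elbow completes the gesture on the fifth one. Any pose with the hand at or below the elbow returns Fail and resets the phase to 0.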

The application tracks users with the Kinect SDK. The skeleton data of the active user is used to calculate the cursor position, following the guidelines that Microsoft provides in the Kinect SDK documentation. A user becomes active by performing a hand-waving gesture.
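The cursor calculation can be illustrated in isolation. The sketch below assumes plain 2D structs instead of Cinder's Vec2f and Rectf; Vec2, Zone, makeZone, and handToCursor are hypothetical names used only for this illustration. As in the recipe, the zone is anchored at the spine joint, is one arm length wide, and gets its height from the window aspect ratio. The hand position is normalized inside the zone, flipped vertically, and scaled to the window size.

```cpp
#include <cassert>

struct Vec2 { float x, y; };

// Interaction zone described by its anchor corner and its size (skeleton units).
struct Zone { Vec2 origin; Vec2 size; };

// Builds a zone anchored at the spine: arm length wide, height from the aspect ratio.
Zone makeZone(Vec2 spine, float armLength, float windowAspect) {
    assert(armLength > 0.f && windowAspect > 0.f);
    return Zone{ spine, Vec2{ armLength, armLength / windowAspect } };
}

// Maps a hand position in skeleton space to window pixel coordinates.
Vec2 handToCursor(Vec2 hand, const Zone& zone, Vec2 windowSize) {
    // Normalize the hand position to the 0..1 range inside the zone.
    Vec2 n{ (hand.x - zone.origin.x) / zone.size.x,
            (hand.y - zone.origin.y) / zone.size.y };
    n.y = 1.f - n.y;   // skeleton y grows upward, window y grows downward
    return Vec2{ n.x * windowSize.x, n.y * windowSize.y };
}
```

With an 800 x 600 window and an arm length of 0.6, a hand in the middle of the zone lands near the window center, and a hand at the spine position maps to the bottom-left corner. Positions outside the zone produce coordinates outside the window, so a real application may want to clamp them.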

This is an example of a UI that responds to a cursor controlled by the user's hand. Elements of the grid light up under the cursor and fade out once the cursor leaves them.
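The light-up and fade-out behavior comes from the asymmetric step sizes in NuiInteractiveObject::update. The sketch below reproduces that arithmetic without Cinder, as a rough illustration only; HighlightSketch and framesToReach are hypothetical names, and std::min and std::max stand in for Cinder's clamp.

```cpp
#include <algorithm>
#include <cassert>

// Highlight level in the 0..1 range, updated once per frame.
struct HighlightSketch {
    float level = 0.f;

    void update(bool hovered) {
        // Rise fast while hovered, decay slowly otherwise (same steps as the recipe).
        level += hovered ? 0.08f : -0.005f;
        level = std::min(1.f, std::max(0.f, level));
    }

    // Alpha used for drawing: never fully transparent, opaque at full highlight.
    float alpha() const { return 0.3f + 0.7f * level; }
};

// Counts the frames needed to reach a fully lit (1) or fully faded (0) state.
int framesToReach(HighlightSketch h, bool hovered, float target) {
    assert(target == 0.f || target == 1.f);
    int frames = 0;
    while (h.level != target && frames < 10000) {
        h.update(hovered);
        ++frames;
    }
    return frames;
}
```

An element reaches full highlight after 13 hovered frames and needs roughly 200 frames to fade out completely, which is what gives the grid its trailing effect behind the cursor.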