8.2 The Jordan Canonical Form

In this section, we will show that any linear operator L on an n-dimensional vector space V can be represented by a block diagonal matrix whose diagonal blocks are simple Jordan matrices. We will apply this result to solving systems of linear differential equations of the form $\mathbf{Y}' = A\mathbf{Y}$, where A is defective.

Let us begin by considering the case where L has more than one distinct eigenvalue. We wish to show that if L has distinct eigenvalues $\lambda_1, \ldots, \lambda_k$, then V can be decomposed into a direct sum of invariant subspaces $X_1 \oplus \cdots \oplus X_k$ such that $L - \lambda_i I$ is nilpotent on $X_i$ for each $i = 1, \ldots, k$. To do this, we must first prove the following lemma and theorem.

Lemma 8.2.1

If L is a linear operator mapping an n-dimensional vector space V into itself, then there exists a positive integer $k_0$ such that $\ker(L^k) = \ker(L^{k_0})$ for all $k \ge k_0$.

Proof

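If $i < j$, then clearly $\ker(L^i)$ is a subspace of $\ker(L^j)$. We claim that if $\ker(L^i) = \ker(L^{i+1})$ for some i, then $\ker(L^i) = \ker(L^{i+k})$ for all $k \ge 1$. We will prove this by induction on k. In the case $k = 1$, there is nothing to prove. Assume that for some $k > 1$ the result holds for all indices less than k. If $\mathbf{v} \in \ker(L^{i+k})$, then

$$\mathbf{0} = L^{i+k}(\mathbf{v}) = L^{i+k-1}(L(\mathbf{v}))$$

Thus, $L(\mathbf{v}) \in \ker(L^{i+k-1})$. By the induction hypothesis, $\ker(L^{i+k-1}) = \ker(L^i)$. Therefore, $L(\mathbf{v}) \in \ker(L^i)$ and hence $\mathbf{v} \in \ker(L^{i+1})$. Since $\ker(L^{i+1}) = \ker(L^i)$, it follows that $\mathbf{v} \in \ker(L^i)$ and hence $\ker(L^{i+k}) = \ker(L^i)$. Thus, if $\ker(L^{i+1}) = \ker(L^i)$ for some i, then

$$\ker(L^i) = \ker(L^{i+1}) = \ker(L^{i+2}) = \cdots$$

Since V is finite dimensional, the dimension of $\ker(L^k)$ cannot keep increasing as k increases. Thus for some $k_0$, we must have $\dim \ker(L^{k_0}) = \dim \ker(L^{k_0+1})$ and hence $\ker(L^{k_0})$ and $\ker(L^{k_0+1})$ must be equal. It follows that

$$\ker(L^{k_0}) = \ker(L^{k_0+1}) = \ker(L^{k_0+2}) = \cdots$$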

∎
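The stabilization described in Lemma 8.2.1 can be observed numerically. The following is a minimal sketch, assuming L is given by a square matrix; the matrix `A` and the helper names `mat_mul`, `rank`, and `kernel_dimensions` are hypothetical choices made for illustration. It computes $\dim \ker(A^k)$ as $n - \operatorname{rank}(A^k)$ with exact rational arithmetic.

```python
from fractions import Fraction

def mat_mul(A, B):
    """Product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def rank(A):
    """Rank by Gaussian elimination over exact fractions."""
    M = [[Fraction(x) for x in row] for row in A]
    rows, cols = len(M), len(M[0])
    r = 0
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, rows):
            factor = M[i][c] / M[r][c]
            M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
    return r

def kernel_dimensions(A, max_power):
    """Return [dim ker(A^1), ..., dim ker(A^max_power)] via rank-nullity."""
    n = len(A)
    dims, P = [], A
    for _ in range(max_power):
        dims.append(n - rank(P))
        P = mat_mul(P, A)
    return dims

# Hypothetical example: a nilpotent 3x3 block next to an invertible 1x1 block.
A = [[0, 1, 0, 0],
     [0, 0, 1, 0],
     [0, 0, 0, 0],
     [0, 0, 0, 2]]

print(kernel_dimensions(A, 6))  # [1, 2, 3, 3, 3, 3]
```

For this matrix the dimensions increase strictly until $k = 3$ and are constant afterward, so $k_0 = 3$ here.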

Theorem 8.2.2

If L is a linear transformation on an n-dimensional vector space V, then there exist invariant subspaces X and Y such that $V = X \oplus Y$, L is nilpotent on X, and $L_{[Y]}$ (the restriction of L to Y) is invertible.

Proof

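Choose $k_0$ to be the smallest positive integer such that $\ker(L^{k_0}) = \ker(L^{k_0+1})$. It follows from Lemma 8.2.1 that $\ker(L^{k_0}) = \ker(L^{k_0+j})$ for all $j \ge 1$. Let $X = \ker(L^{k_0})$. Clearly, X is invariant under L, for if $\mathbf{x} \in X$, then $L(\mathbf{x}) \in \ker(L^{k_0-1})$, which is a subspace of $\ker(L^{k_0}) = X$. Let $Y = R(L^{k_0})$, the range of $L^{k_0}$. If $\mathbf{w} \in X \cap Y$, then $\mathbf{w} = L^{k_0}(\mathbf{v})$ for some v and hence

$$\mathbf{0} = L^{k_0}(\mathbf{w}) = L^{k_0}(L^{k_0}(\mathbf{v})) = L^{2k_0}(\mathbf{v})$$

Thus, $\mathbf{v} \in \ker(L^{2k_0}) = \ker(L^{k_0})$ and hence

$$\mathbf{w} = L^{k_0}(\mathbf{v}) = \mathbf{0}$$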

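Therefore, $X \cap Y = \{\mathbf{0}\}$. We claim $V = X \oplus Y$. Let $\{\mathbf{x}_1, \ldots, \mathbf{x}_r\}$ be a basis for X and let $\{\mathbf{y}_1, \ldots, \mathbf{y}_{n-r}\}$ be a basis for Y. (Y has dimension $n - r$ by the rank-nullity theorem applied to $L^{k_0}$.) By Lemma 8.2.1, it suffices to show that $\mathbf{x}_1, \ldots, \mathbf{x}_r, \mathbf{y}_1, \ldots, \mathbf{y}_{n-r}$ are linearly independent and hence form a basis for V. If

$$\alpha_1\mathbf{x}_1 + \cdots + \alpha_r\mathbf{x}_r + \beta_1\mathbf{y}_1 + \cdots + \beta_{n-r}\mathbf{y}_{n-r} = \mathbf{0} \tag{1}$$

then applying $L^{k_0}$ to both sides gives

$$\beta_1 L^{k_0}(\mathbf{y}_1) + \cdots + \beta_{n-r} L^{k_0}(\mathbf{y}_{n-r}) = \mathbf{0}$$

or

$$L^{k_0}(\beta_1\mathbf{y}_1 + \cdots + \beta_{n-r}\mathbf{y}_{n-r}) = \mathbf{0}$$

Therefore, $\beta_1\mathbf{y}_1 + \cdots + \beta_{n-r}\mathbf{y}_{n-r} \in X \cap Y$ and hence

$$\beta_1\mathbf{y}_1 + \cdots + \beta_{n-r}\mathbf{y}_{n-r} = \mathbf{0}$$

Since the $\mathbf{y}_i$'s are linearly independent, it follows that

$$\beta_1 = \beta_2 = \cdots = \beta_{n-r} = 0$$

and hence (1) simplifies to

$$\alpha_1\mathbf{x}_1 + \cdots + \alpha_r\mathbf{x}_r = \mathbf{0}$$

Since the $\mathbf{x}_i$'s are linearly independent, it follows that

$$\alpha_1 = \alpha_2 = \cdots = \alpha_r = 0$$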

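Thus, $\mathbf{x}_1, \ldots, \mathbf{x}_r, \mathbf{y}_1, \ldots, \mathbf{y}_{n-r}$ are linearly independent and therefore $V = X \oplus Y$. The subspace X is invariant under L, and L is nilpotent on X since $L^{k_0}(\mathbf{x}) = \mathbf{0}$ for every $\mathbf{x} \in X$. We claim that Y is invariant under L and that L is invertible on Y. Let $\mathbf{y} \in Y$; then $\mathbf{y} = L^{k_0}(\mathbf{v})$ for some $\mathbf{v} \in V$. Thus,

$$L(\mathbf{y}) = L(L^{k_0}(\mathbf{v})) = L^{k_0+1}(\mathbf{v}) = L^{k_0}(L(\mathbf{v}))$$

Therefore, $L(\mathbf{y}) \in Y$ and hence Y is invariant under L. To prove $L_{[Y]}$ is invertible, it suffices to show that

$$\ker(L_{[Y]}) = \ker(L) \cap Y = \{\mathbf{0}\}$$

This, however, follows immediately since $\ker(L) \subset X$ and $X \cap Y = \{\mathbf{0}\}$.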

∎
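As a small illustration of Theorem 8.2.2, consider the following worked example. The matrix A is a hypothetical choice, not one taken from the text, and $\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3$ denote the standard basis vectors of $\mathbb{R}^3$.

```latex
\begin{align*}
A &= \begin{pmatrix} 0 & 1 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 2 \end{pmatrix}, \qquad
A^2 = \begin{pmatrix} 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 4 \end{pmatrix} \\
\ker(A) &= \operatorname{span}\{\mathbf{e}_1\} \subsetneq
\ker(A^2) = \operatorname{span}\{\mathbf{e}_1, \mathbf{e}_2\} = \ker(A^3),
\qquad \text{so } k_0 = 2 \\
X &= \ker(A^2) = \operatorname{span}\{\mathbf{e}_1, \mathbf{e}_2\}, \qquad
Y = R(A^2) = \operatorname{span}\{\mathbf{e}_3\}, \qquad
\mathbb{R}^3 = X \oplus Y
\end{align*}
```

On X the operator satisfies $A^2\mathbf{x} = \mathbf{0}$, so it is nilpotent there, while on Y it acts as multiplication by 2 and is therefore invertible.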

We are now ready to prove the main result of this section.

Theorem 8.2.3

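Let L be a linear operator mapping a finite dimensional vector space V into itself. If $\lambda_1, \ldots, \lambda_k$ are the distinct eigenvalues of L, then V can be decomposed into a direct sum

$$V = X_1 \oplus X_2 \oplus \cdots \oplus X_k$$

such that $L - \lambda_i I$ is nilpotent on $X_i$ and the dimension of $X_i$ equals the multiplicity of $\lambda_i$.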

Proof

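Let $L_1 = L - \lambda_1 I$. By Theorem 8.2.2, there exist subspaces $X_1$ and $Y_1$ that are invariant under $L_1$ such that $V = X_1 \oplus Y_1$, $L_1$ is nilpotent on $X_1$, and $L_{1[Y_1]}$ is invertible. It follows that $X_1$ and $Y_1$ are also invariant under L. By Corollary 8.1.2, $L_{[X_1]}$ can be represented by a block diagonal matrix $A_1$, where the diagonal blocks are simple Jordan matrices whose diagonal elements all equal $\lambda_1$. Thus,

$$\det(A_1 - \lambda I) = (\lambda_1 - \lambda)^{m_1}$$

where $m_1$ is the dimension of $X_1$. Let $B_1$ be a matrix representing $L_{[Y_1]}$. Since $L_1$ is invertible on $Y_1$, it follows that $\lambda_1$ is not an eigenvalue of $B_1$. Thus,

$$\det(B_1 - \lambda I) = q(\lambda)$$

where $q(\lambda_1) \ne 0$. It follows from Lemma 8.1.2 that the operator L on V can be represented by the matrix

$$A = \begin{pmatrix} A_1 & O \\ O & B_1 \end{pmatrix}$$

Thus, if each eigenvalue $\lambda_i$ of L has multiplicity $r_i$, then

$$(\lambda_1 - \lambda)^{r_1}(\lambda_2 - \lambda)^{r_2}\cdots(\lambda_k - \lambda)^{r_k} = \det(A - \lambda I) = \det(A_1 - \lambda I)\det(B_1 - \lambda I) = (\lambda_1 - \lambda)^{m_1} q(\lambda)$$

Therefore, $r_1 = m_1$ and

$$q(\lambda) = (\lambda_2 - \lambda)^{r_2}\cdots(\lambda_k - \lambda)^{r_k}$$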

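If we consider the operator $L_2 = L - \lambda_2 I$ on the vector space $Y_1$, then we can decompose $Y_1$ into a direct sum $X_2 \oplus Y_2$ such that $X_2$ and $Y_2$ are invariant under L, $L_2$ is nilpotent on $X_2$, and $L_{2[Y_2]}$ is invertible. Indeed, we can continue this process of decomposing $Y_i$ into a direct sum $X_{i+1} \oplus Y_{i+1}$ until we obtain a direct sum of the form

$$V = X_1 \oplus X_2 \oplus \cdots \oplus X_{k-1} \oplus Y_{k-1}$$

The vector space $Y_{k-1}$ will be of dimension $r_k$ with a single eigenvalue $\lambda_k$. Thus, if we set $X_k = Y_{k-1}$, then $L - \lambda_k I$ will be nilpotent on $X_k$ and we will have the desired decomposition of V.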

∎

It follows from Theorem 8.2.3 that each operator L mapping an n-dimensional vector space V into itself can be represented by a block diagonal matrix of the form

$$J = \begin{pmatrix} J_1 & & & \\ & J_2 & & \\ & & \ddots & \\ & & & J_k \end{pmatrix}$$

where each $J_i$ is an $r_i \times r_i$ block diagonal matrix whose blocks consist of simple Jordan matrices with $\lambda_i$'s along the main diagonal.

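If A is an $n \times n$ matrix, then A represents the operator $L_A$ with respect to the standard basis on $\mathbb{C}^n$, where $L_A$ is defined by

$$L_A(\mathbf{x}) = A\mathbf{x}$$

By the preceding remarks, $L_A$ can be represented by a matrix J of the form just described. It follows that A is similar to J. Thus, each $n \times n$ matrix A with distinct eigenvalues $\lambda_1, \ldots, \lambda_k$ is similar to a matrix J of the form

$$J = \begin{pmatrix} J_1 & & & \\ & J_2 & & \\ & & \ddots & \\ & & & J_k \end{pmatrix} \tag{2}$$

where $J_i$ is an $r_i \times r_i$ matrix of the form

$$J_i = \begin{pmatrix} J_1(\lambda_i) & & & \\ & J_2(\lambda_i) & & \\ & & \ddots & \\ & & & J_s(\lambda_i) \end{pmatrix} \tag{3}$$

with the $J_j(\lambda_i)$'s being simple Jordan matrices. The matrix J defined by (2) and (3) is called the Jordan canonical form of A. The Jordan canonical form of a matrix is unique except for a reordering of the simple Jordan blocks along the diagonal.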
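The block structure of the Jordan canonical form can be made concrete with a short sketch. It assumes the convention that a simple Jordan matrix has its eigenvalue on the diagonal and 1's on the superdiagonal; the function names and the sample eigenvalues and block sizes are hypothetical.

```python
def simple_jordan_block(lam, size):
    """size x size matrix with lam on the diagonal and 1's on the superdiagonal."""
    return [[lam if i == j else 1 if j == i + 1 else 0 for j in range(size)]
            for i in range(size)]

def block_diagonal(blocks):
    """Place square blocks along the diagonal of a zero matrix."""
    n = sum(len(b) for b in blocks)
    J = [[0] * n for _ in range(n)]
    offset = 0
    for b in blocks:
        for i, row in enumerate(b):
            for j, value in enumerate(row):
                J[offset + i][offset + j] = value
        offset += len(b)
    return J

def jordan_matrix(spec):
    """spec is a list of (eigenvalue, [sizes of its simple Jordan blocks])."""
    blocks = [simple_jordan_block(lam, s) for lam, sizes in spec for s in sizes]
    return block_diagonal(blocks)

# Hypothetical data: eigenvalue 2 with one 2x2 block, eigenvalue 3 with two 1x1 blocks.
J = jordan_matrix([(2, [2]), (3, [1, 1])])
for row in J:
    print(row)
# [2, 1, 0, 0]
# [0, 2, 0, 0]
# [0, 0, 3, 0]
# [0, 0, 0, 3]
```

In this sample, $J_1$ is the upper-left $2 \times 2$ block and $J_2$ is the lower-right $2 \times 2$ block, which itself consists of two $1 \times 1$ simple Jordan matrices.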

Example 1

Find the Jordan canonical form of the matrix

SOLUTION

The characteristic polynomial of A is

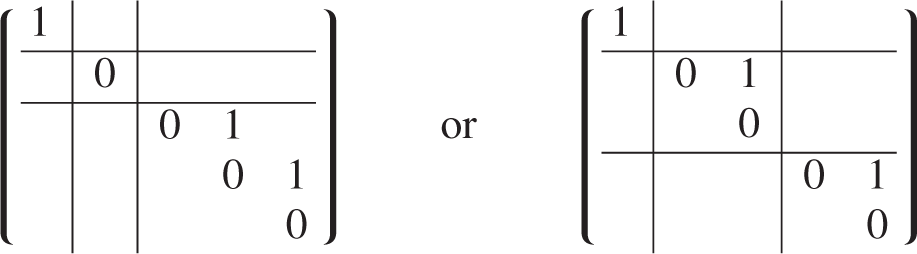

The eigenspace corresponding to is spanned by and the eigenspace corresponding to is spanned by and . Thus, the Jordan canonical form of A will consist of three simple Jordan blocks. Except for a reordering of the blocks, there are only two possibilities:

To determine which of these forms is correct, we compute .

Next we consider the systems

for . Since these systems turn out to be inconsistent, the Jordan canonical form of A cannot have any $3 \times 3$ simple Jordan blocks and, consequently, it must be of the form

To find X, we must solve

for . The system has infinitely many solutions. We need choose only one of these, say, . Similarly, has infinitely many solutions, one of which is . Let

The reader may verify that $X^{-1}AX = J$.
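A different way to distinguish between candidate Jordan forms, not the consistency test used in the example above, relies on a standard fact: for an eigenvalue $\lambda$ and $N = A - \lambda I$, the number of simple Jordan blocks of size at least j equals $\dim \ker(N^j) - \dim \ker(N^{j-1})$. The sketch below applies this to two hypothetical nilpotent matrices with block sizes (3, 1) and (2, 2); the matrices and function names are assumptions made for illustration and are not the matrix of Example 1.

```python
from fractions import Fraction

def mat_mul(A, B):
    """Product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def rank(A):
    """Rank by Gaussian elimination over exact fractions."""
    M = [[Fraction(x) for x in row] for row in A]
    rows, cols = len(M), len(M[0])
    r = 0
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, rows):
            factor = M[i][c] / M[r][c]
            M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
    return r

def blocks_of_size_at_least(N, max_size):
    """Entry j-1 counts the simple Jordan blocks of N with size >= j."""
    n = len(N)
    counts, previous, P = [], 0, N
    for _ in range(max_size):
        nullity = n - rank(P)
        counts.append(nullity - previous)
        previous = nullity
        P = mat_mul(P, N)
    return counts

# Hypothetical nilpotent matrices (eigenvalue 0 already subtracted).
N_31 = [[0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 0], [0, 0, 0, 0]]  # sizes 3 and 1
N_22 = [[0, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 1], [0, 0, 0, 0]]  # sizes 2 and 2

print(blocks_of_size_at_least(N_31, 3))  # [2, 1, 1]
print(blocks_of_size_at_least(N_22, 3))  # [2, 2, 0]
```

Both matrices have two blocks in total, but only the first has a block of size 3, so the kernel dimensions of the powers separate the two cases.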

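One of the main applications of the Jordan canonical form is in solving systems of linear differential equations that have defective coefficient matrices. Given such a system

$$\mathbf{Y}' = A\mathbf{Y}$$

we can simplify it by using the Jordan canonical form of A. Indeed, if $A = XJX^{-1}$, then

$$\mathbf{Y}' = (XJX^{-1})\mathbf{Y}$$

Thus, if we set $\mathbf{Z} = X^{-1}\mathbf{Y}$, then $\mathbf{Y}' = X\mathbf{Z}'$ and the system simplifies to

$$X\mathbf{Z}' = XJ\mathbf{Z}$$

Multiplying by $X^{-1}$, we get

$$\mathbf{Z}' = J\mathbf{Z} \tag{4}$$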

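Because of the structure of J, this new system is much easier to solve. Indeed, solving (4) will only involve solving a number of smaller systems, each of the form

$$\begin{aligned} z_1' &= \lambda z_1 + z_2 \\ z_2' &= \lambda z_2 + z_3 \\ &\;\;\vdots \\ z_{k-1}' &= \lambda z_{k-1} + z_k \\ z_k' &= \lambda z_k \end{aligned}$$

These equations can be solved one at a time starting with the last. The solution to the last equation is clearly

$$z_k = c e^{\lambda t}$$

The solution to any equation of the form

$$z'(t) - \lambda z(t) = u(t)$$

is given by

$$z(t) = e^{\lambda t}\left(\int e^{-\lambda t} u(t)\, dt + c\right)$$

Thus, we can solve

$$z_{k-1}' - \lambda z_{k-1} = c e^{\lambda t}$$

for $z_{k-1}$ and then solve

$$z_{k-2}' - \lambda z_{k-2} = z_{k-1}$$

for $z_{k-2}$, etc.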
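To see the back-substitution at work, here is the smallest nontrivial case worked out: a single $2 \times 2$ simple Jordan block with eigenvalue $\lambda$. The constants $c_1$ and $c_2$ are arbitrary, and the example is an illustration rather than one taken from the text.

```latex
\begin{align*}
z_1' &= \lambda z_1 + z_2, \qquad z_2' = \lambda z_2 \\
z_2 &= c_2 e^{\lambda t} \\
z_1' - \lambda z_1 &= c_2 e^{\lambda t} \\
z_1 &= e^{\lambda t}\left(\int e^{-\lambda t}\, c_2 e^{\lambda t}\, dt + c_1\right)
     = e^{\lambda t}\,(c_2 t + c_1)
\end{align*}
```

The factor $t$ multiplying $e^{\lambda t}$ is characteristic of a defective coefficient matrix; each further equation in a longer chain raises the power of $t$ by one.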

Example 2

Solve the initial value problem

SOLUTION

The coefficient matrix A has two distinct eigenvalues and , each of multiplicity 2. The corresponding eigenspaces both have dimension 1. Using the methods of this section, A can be factored into a product $XJX^{-1}$, where

The choice of X is not unique. The reader may verify that the one we have calculated:

does the job. If we now change variables and set $\mathbf{Z} = X^{-1}\mathbf{Y}$, then we can rewrite the system in the form

$$\mathbf{Z}' = J\mathbf{Z}$$

The block structure of J allows us to break up the system into two simpler systems:

The first system is not difficult to solve.

To solve the second system, we first solve

getting

Thus,

and hence

Finally, we have

If we set $t = 0$ and use the initial conditions to solve for the $c_i$'s, we get

Thus, the solution to the initial value problem is

The Jordan canonical form not only provides a nice representation of an operator, but it also allows us to solve systems of the form $\mathbf{Y}' = A\mathbf{Y}$ even when the coefficient matrix is defective. From a theoretical point of view, its importance cannot be questioned. As far as practical applications go, however, it is generally not very useful.

If $n \ge 5$, it is usually necessary to calculate the eigenvalues of A by some numerical method. The calculated $\lambda_i$'s are only approximations to the actual eigenvalues. Thus, we could have calculated values $\lambda_1'$ and $\lambda_2'$, which are unequal while actually $\lambda_1 = \lambda_2$. So in practice, it may be difficult to determine the correct multiplicity of the eigenvalues. Furthermore, in order to solve $\mathbf{Y}' = A\mathbf{Y}$, we need to find the similarity matrix X such that $A = XJX^{-1}$. However, when A has multiple eigenvalues, the matrix X may be very sensitive to perturbations and, in practice, one is not guaranteed that the entries of the computed similarity matrix will have any digits of accuracy whatsoever. A recommended alternative is to compute the matrix exponential $e^{tA}$ and use it to solve the system $\mathbf{Y}' = A\mathbf{Y}$.
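The matrix exponential approach can be sketched as follows. This is a minimal illustration that sums a truncated power series for $e^{tA}$; that choice is an assumption made for brevity, and numerical libraries generally use more careful algorithms. The defective matrix `A`, the initial vector `y0`, and the function names are hypothetical. For this particular A the exact solution is known in closed form, $e^{tA} = e^{-t}\begin{pmatrix} 1 & t \\ 0 & 1 \end{pmatrix}$, which gives a check on the result.

```python
import math

def mat_mul(A, B):
    """Product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def expm_taylor(A, t, terms=30):
    """Approximate e^{tA} by the partial sum of (tA)^k / k! for k < terms."""
    n = len(A)
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]  # current term, starts at the identity
    for k in range(1, terms):
        # term_k = term_{k-1} * A * t / k
        term = [[t * x / k for x in row] for row in mat_mul(term, A)]
        result = [[r + s for r, s in zip(rr, ss)] for rr, ss in zip(result, term)]
    return result

def solve_linear_ivp(A, y0, t):
    """Return Y(t) = e^{tA} Y(0) for the system Y' = AY."""
    E = expm_taylor(A, t)
    return [sum(E[i][j] * y0[j] for j in range(len(y0))) for i in range(len(y0))]

# Hypothetical defective matrix: a single 2x2 Jordan block with eigenvalue -1.
A = [[-1.0, 1.0],
     [0.0, -1.0]]
y0 = [1.0, 2.0]
t = 0.5

approx = solve_linear_ivp(A, y0, t)
exact = [math.exp(-t) * (y0[0] + t * y0[1]), math.exp(-t) * y0[1]]
print(max(abs(a - e) for a, e in zip(approx, exact)) < 1e-12)  # True
```

No eigenvalues, multiplicities, or similarity matrix are computed here, which is the point of the alternative: the solution is obtained directly from A.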