1.4 Matrix Algebra

The algebraic rules used for real numbers may or may not work when matrices are used. For example, if a and b are real numbers, then

$$a + b = b + a \quad\text{and}\quad ab = ba$$

For real numbers, the operations of addition and multiplication are both commutative. The first of these algebraic rules works when we replace a and b by square matrices A and B, that is,

$$A + B = B + A$$

However, we have already seen that matrix multiplication is not commutative. This fact deserves special emphasis.
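The contrast between addition and multiplication can be checked numerically. The following is a minimal sketch in plain Python; the matrices `A` and `B` and the helper names `matmul` and `matadd` are assumptions chosen for illustration and do not come from the text.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def matadd(X, Y):
    """Add two matrices of the same shape entry by entry."""
    return [[x + y for x, y in zip(row_x, row_y)]
            for row_x, row_y in zip(X, Y)]


# Hypothetical 2 x 2 matrices, chosen only for illustration.
A = [[1, 1], [0, 0]]
B = [[1, 1], [2, 2]]

sum_AB, sum_BA = matadd(A, B), matadd(B, A)
prod_AB, prod_BA = matmul(A, B), matmul(B, A)

print("A + B == B + A:", sum_AB == sum_BA)
print("AB =", prod_AB)
print("BA =", prod_BA)
print("AB == BA:", prod_AB == prod_BA)
```

For this particular pair the two sums agree while the two products differ, which is all that is needed to show that multiplication is not commutative in general.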

In this section, we examine which algebraic rules work for matrices and which do not.

Algebraic Rules

The following theorem provides some useful rules for doing matrix algebra.

Theorem 1.4.1

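Each of the following statements is valid for any scalars α and β and for any matrices A, B, and C for which the indicated operations are defined.

1. $A + B = B + A$
2. $(A + B) + C = A + (B + C)$
3. $(AB)C = A(BC)$
4. $A(B + C) = AB + AC$
5. $(A + B)C = AC + BC$
6. $(\alpha\beta)A = \alpha(\beta A)$
7. $\alpha(AB) = (\alpha A)B = A(\alpha B)$
8. $(\alpha + \beta)A = \alpha A + \beta A$
9. $\alpha(A + B) = \alpha A + \alpha B$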

We will prove two of the rules and leave the rest for the reader to verify.

Proof of Rule 4

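Assume that $A = (a_{ij})$ is an $m \times n$ matrix and $B = (b_{ij})$ and $C = (c_{ij})$ are both $n \times r$ matrices. Let $D = A(B + C)$ and $E = AB + AC$. It follows that

$$d_{ij} = \sum_{k=1}^{n} a_{ik}(b_{kj} + c_{kj})$$

and

$$e_{ij} = \sum_{k=1}^{n} a_{ik}b_{kj} + \sum_{k=1}^{n} a_{ik}c_{kj}$$

But

$$\sum_{k=1}^{n} a_{ik}(b_{kj} + c_{kj}) = \sum_{k=1}^{n} a_{ik}b_{kj} + \sum_{k=1}^{n} a_{ik}c_{kj}$$

so that $d_{ij} = e_{ij}$ and hence $A(B + C) = AB + AC$.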

∎

Proof of Rule 3

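Let A be an $m \times n$ matrix, B an $n \times r$ matrix, and C an $r \times s$ matrix. Let $D = AB$ and $E = BC$. We must show that $DC = AE$. By the definition of matrix multiplication,

$$d_{il} = \sum_{k=1}^{n} a_{ik}b_{kl} \quad\text{and}\quad e_{kj} = \sum_{l=1}^{r} b_{kl}c_{lj}$$

The (i, j) entry of DC is

$$\sum_{l=1}^{r} d_{il}c_{lj} = \sum_{l=1}^{r}\left(\sum_{k=1}^{n} a_{ik}b_{kl}\right)c_{lj}$$

and the (i, j) entry of AE is

$$\sum_{k=1}^{n} a_{ik}e_{kj} = \sum_{k=1}^{n} a_{ik}\left(\sum_{l=1}^{r} b_{kl}c_{lj}\right)$$

Since

$$\sum_{l=1}^{r}\left(\sum_{k=1}^{n} a_{ik}b_{kl}\right)c_{lj} = \sum_{l=1}^{r}\sum_{k=1}^{n} a_{ik}b_{kl}c_{lj} = \sum_{k=1}^{n} a_{ik}\left(\sum_{l=1}^{r} b_{kl}c_{lj}\right)$$

it follows that

$$(AB)C = DC = AE = A(BC)$$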

∎

The algebraic rules given in Theorem 1.4.1 seem quite natural, since they are similar to the rules that we use with real numbers. However, there are important differences between the rules for matrix algebra and the algebraic rules for real numbers. Some of these differences are illustrated in Exercises 1 through 5 at the end of this section.
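A numerical spot check is no substitute for the proofs above, but it can make the associative and distributive rules concrete. The sketch below assumes hypothetical matrices of compatible sizes (`A` is 2 x 3, `B` and `B2` are 3 x 2, `C` is 2 x 2); the helper names are ours and are not part of the text.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def matadd(X, Y):
    """Add two matrices of the same shape entry by entry."""
    return [[x + y for x, y in zip(row_x, row_y)]
            for row_x, row_y in zip(X, Y)]


# Hypothetical matrices, chosen only so that every product is defined.
A = [[1, 2, 0], [-1, 3, 1]]        # 2 x 3
B = [[2, 1], [0, -1], [4, 3]]      # 3 x 2
B2 = [[0, 5], [1, 1], [-2, 0]]     # 3 x 2, same shape as B
C = [[1, 0], [2, 1]]               # 2 x 2

# Associative rule: (AB)C = A(BC)
assoc_left = matmul(matmul(A, B), C)
assoc_right = matmul(A, matmul(B, C))

# Distributive rule: A(B + B2) = AB + AB2
dist_left = matmul(A, matadd(B, B2))
dist_right = matadd(matmul(A, B), matmul(A, B2))

print("(AB)C == A(BC):", assoc_left == assoc_right)
print("A(B + B2) == AB + AB2:", dist_left == dist_right)
```

Integer entries are used deliberately so that the comparisons are exact; with floating-point entries the two sides may differ by rounding error.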

Example 1

If

verify that $A(BC) = (AB)C$ and $A(B + C) = AB + AC$.

SOLUTION

Thus,

Therefore,

Notation

Since $(AB)C = A(BC)$, we may simply omit the parentheses and write ABC. The same is true for a product of four or more matrices. In the case where an $n \times n$ matrix is multiplied by itself a number of times, it is convenient to use exponential notation. Thus, if k is a positive integer, then

$$A^k = \underbrace{AA \cdots A}_{k \text{ times}}$$
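Exponential notation translates directly into repeated multiplication. The following sketch assumes a hypothetical helper `matpow` and a hypothetical 2 x 2 matrix; neither comes from the text.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def matpow(A, k):
    """Return A^k for a square matrix A and a positive integer k."""
    if k < 1:
        raise ValueError("k must be a positive integer")
    result = A
    for _ in range(k - 1):      # k - 1 further multiplications by A
        result = matmul(result, A)
    return result


# Hypothetical matrix, chosen only for illustration.
A = [[1, 1], [0, 1]]

print(matpow(A, 1))
print(matpow(A, 3))
# Because multiplication is associative, A^2 A^3 and A^5 agree.
print(matmul(matpow(A, 2), matpow(A, 3)) == matpow(A, 5))
```

Associativity is what makes the notation unambiguous: the product of k copies of A is the same however the factors are grouped.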

Example 2

If

then

and, in general,

The Identity Matrix

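Just as the number 1 acts as an identity for the multiplication of real numbers, there is a special matrix I that acts as an identity for matrix multiplication; that is,

$$IA = AI = A \tag{4}$$

for any $n \times n$ matrix A. It is easy to verify that, if we define I to be an $n \times n$ matrix with 1’s on the main diagonal and 0’s elsewhere, then I satisfies equation (4) for any $n \times n$ matrix A. More formally, we have the following definition.

Definition

The $n \times n$ identity matrix is the matrix $I = (\delta_{ij})$, where

$$\delta_{ij} = \begin{cases} 1 & \text{if } i = j \\ 0 & \text{if } i \neq j \end{cases}$$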

As an example, let us verify equation (4) in the case :

and

In general, if B is any $m \times n$ matrix and C is any $n \times r$ matrix, then

$$BI = B \quad\text{and}\quad IC = C$$

The column vectors of the $n \times n$ identity matrix I are the standard vectors used to define a coordinate system in Euclidean n-space. The standard notation for the jth column vector of I is $\mathbf{e}_j$, rather than the usual $\mathbf{i}_j$. Thus, the $n \times n$ identity matrix can be written

$$I = (\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_n)$$
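The properties of the identity matrix described above can be sketched in a few lines of Python. The helper names `identity` and `column`, and the matrices `A` and `B`, are assumptions made for illustration only.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def identity(n):
    """Return the n x n matrix with 1's on the diagonal and 0's elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def column(M, j):
    """Return column j of M as a list (j counted from 0)."""
    return [row[j] for row in M]


# Hypothetical matrices, chosen only for illustration.
A = [[2, -1, 0], [5, 3, 7], [1, 0, 4]]    # 3 x 3
B = [[1, 2, 3], [4, 5, 6]]                # 2 x 3

I3 = identity(3)
print(matmul(I3, A) == A and matmul(A, I3) == A)    # IA = AI = A
print(matmul(B, I3) == B)                           # B times the 3 x 3 identity
print(matmul(identity(2), B) == B)                  # 2 x 2 identity times B

# The columns of I are the standard vectors e_1, e_2, e_3.
e = [column(I3, j) for j in range(3)]
print(e)
```

Note that a nonsquare matrix such as `B` needs identity matrices of two different sizes, one for multiplication on each side.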

Matrix Inversion

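A real number a is said to have a multiplicative inverse if there exists a number b such that $ab = 1$. Any nonzero number a has a multiplicative inverse $b = \frac{1}{a}$. We generalize the concept of multiplicative inverses to matrices with the following definition.

Definition

An $n \times n$ matrix A is said to be nonsingular or invertible if there exists a matrix B such that $AB = BA = I$. The matrix B is then said to be a multiplicative inverse of A. An $n \times n$ matrix that has no multiplicative inverse is said to be singular.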

If B and C are both multiplicative inverses of A, then

$$B = BI = B(AC) = (BA)C = IC = C$$

Thus, a matrix can have at most one multiplicative inverse. We will refer to the multiplicative inverse of a nonsingular matrix A as simply the inverse of A and denote it by $A^{-1}$.
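To test whether a given matrix B is the inverse of A, it suffices to form both products and compare them with I. The sketch below assumes a hypothetical helper `is_inverse_pair` and hypothetical integer matrices; none of these come from the text.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def identity(n):
    """Return the n x n identity matrix."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def is_inverse_pair(A, B):
    """Return True when AB = BA = I for square matrices A and B."""
    I = identity(len(A))
    return matmul(A, B) == I and matmul(B, A) == I


# Hypothetical matrices, chosen only for illustration.
A = [[2, 1], [5, 3]]
B = [[3, -1], [-5, 2]]    # candidate inverse of A
C = [[3, -1], [-5, 3]]    # differs from B in one entry

print(is_inverse_pair(A, B))
print(is_inverse_pair(A, C))
```

Since B passes the test and a matrix has at most one inverse, any candidate that differs from B, such as `C`, must fail it.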

Example 3

The matrices

are inverses of each other, since

and

Example 4

The matrices

are inverses, since

and

Example 5

The matrix

has no inverse. Indeed, if B is any matrix, then

Thus, BA cannot equal I.
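The same style of argument can be explored numerically. The sketch below assumes a hypothetical 2 x 2 matrix `A` whose second column is zero (it is not necessarily the matrix of this example) and checks, over a small grid of integer matrices `B`, that the second column of BA is always zero, so the (2, 2) entry of BA can never equal 1.

```python
from itertools import product


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


# Hypothetical matrix with a zero second column, chosen for illustration.
A = [[2, 0], [3, 0]]

# Collect the second column of BA for every B with entries in -2..2.
second_columns = set()
for b11, b12, b21, b22 in product(range(-2, 3), repeat=4):
    BA = matmul([[b11, b12], [b21, b22]], A)
    second_columns.add((BA[0][1], BA[1][1]))

print(second_columns)
```

A finite search like this only illustrates the claim; the general statement follows because each entry in the second column of BA is a sum of products with the zero entries of A.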

Note

Only square matrices have multiplicative inverses. One should not use the terms singular and nonsingular when referring to nonsquare matrices.

Often we will be working with products of nonsingular matrices. It turns out that any product of nonsingular matrices is nonsingular. The following theorem characterizes how the inverse of the product of a pair of nonsingular matrices A and B is related to the inverses of A and B:

Theorem 1.4.2

If A and B are nonsingular $n \times n$ matrices, then AB is also nonsingular and $(AB)^{-1} = B^{-1}A^{-1}$.

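Proof

$$(B^{-1}A^{-1})(AB) = B^{-1}(A^{-1}A)B = B^{-1}B = I$$

$$(AB)(B^{-1}A^{-1}) = A(BB^{-1})A^{-1} = AA^{-1} = I$$

∎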

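It follows by induction that, if $A_1, \ldots, A_k$ are all nonsingular $n \times n$ matrices, then the product $A_1A_2 \cdots A_k$ is nonsingular and

$$(A_1A_2 \cdots A_k)^{-1} = A_k^{-1} \cdots A_2^{-1}A_1^{-1}$$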
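The reversal of order in the inverse of a product is easy to get wrong, so a small check may help. The sketch below assumes two hypothetical integer matrices together with their inverses (supplied by hand, not computed); all names are ours.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


I = [[1, 0], [0, 1]]

# Hypothetical nonsingular matrices with their inverses given by hand.
A, A_inv = [[2, 1], [5, 3]], [[3, -1], [-5, 2]]
B, B_inv = [[1, 2], [1, 3]], [[3, -2], [-1, 1]]

AB = matmul(A, B)
reversed_order = matmul(B_inv, A_inv)    # B^{-1} A^{-1}
same_order = matmul(A_inv, B_inv)        # A^{-1} B^{-1}

print(matmul(AB, reversed_order) == I and matmul(reversed_order, AB) == I)
print(matmul(AB, same_order) == I)
```

For this pair, only the product taken in reverse order acts as an inverse of AB; the product in the original order does not.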

In the next section, we will learn how to determine whether a matrix has a multiplicative inverse. We will also learn a method for computing the inverse of a nonsingular matrix.

Algebraic Rules for Transposes

There are four basic algebraic rules involving transposes.

1. $(A^T)^T = A$
2. $(\alpha A)^T = \alpha A^T$
3. $(A + B)^T = A^T + B^T$
4. $(AB)^T = B^TA^T$

The first three rules are straightforward. We leave it to the reader to verify that they are valid. To prove the fourth rule, we need only show that the (i, j) entries of $(AB)^T$ and $B^TA^T$ are equal. If A is an $m \times n$ matrix, then, for the multiplications to be possible, B must have n rows. The (i, j) entry of $(AB)^T$ is the (j, i) entry of AB. It is computed by multiplying the jth row vector of A times the ith column vector of B:

$$a_{j1}b_{1i} + a_{j2}b_{2i} + \cdots + a_{jn}b_{ni} \tag{5}$$

The (i, j) entry of $B^TA^T$ is computed by multiplying the ith row of $B^T$ times the jth column of $A^T$. Since the ith row of $B^T$ is the transpose of the ith column of B and the jth column of $A^T$ is the transpose of the jth row of A, it follows that the (i, j) entry of $B^TA^T$ is given by

$$b_{1i}a_{j1} + b_{2i}a_{j2} + \cdots + b_{ni}a_{jn} \tag{6}$$

It follows from (5) and (6) that the (i, j) entries of $(AB)^T$ and $B^TA^T$ are equal.

The next example illustrates the idea behind the last proof.

Example 6

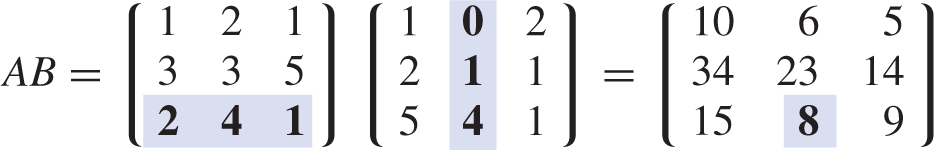

Let

Note that, on the one hand, the (3, 2) entry of AB is computed by taking the scalar product of the third row of A and the second column of B.

When the product is transposed, the (3, 2) entry of AB becomes the (2, 3) entry of $(AB)^T$.

On the other hand, the (2, 3) entry of $B^TA^T$ is computed by taking the scalar product of the second row of $B^T$ and the third column of $A^T$.

In both cases, the arithmetic for computing the (2, 3) entry is the same.
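The product rule for transposes can also be checked on matrices that are not square, where the order of the factors visibly matters. The sketch below assumes hypothetical matrices `A` (2 x 3) and `B` (3 x 2) and a hypothetical helper `transpose`.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def transpose(M):
    """Return the transpose of M: rows become columns."""
    return [list(col) for col in zip(*M)]


# Hypothetical matrices, chosen only for illustration.
A = [[1, 2, 0], [-1, 3, 1]]      # 2 x 3
B = [[2, 1], [0, -1], [4, 3]]    # 3 x 2

lhs = transpose(matmul(A, B))                 # (AB)^T, a 2 x 2 matrix
rhs = matmul(transpose(B), transpose(A))      # B^T A^T, also 2 x 2
wrong = matmul(transpose(A), transpose(B))    # A^T B^T, a 3 x 3 matrix

print(lhs == rhs)
print(len(wrong), len(wrong[0]))
print(transpose(transpose(A)) == A)
```

Here the product of the transposes taken in the original order does not even have the same size as the transpose of AB, so it cannot be equal to it.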

Symmetric Matrices and Networks

Recall that a matrix A is symmetric if $A^T = A$. One type of application that leads to symmetric matrices is problems involving networks. These problems are often solved using the techniques of an area of mathematics called graph theory.

Theorem 1.4.3

If A is an $n \times n$ adjacency matrix of a graph and $a_{ij}^{(k)}$ represents the (i, j) entry of $A^k$, then $a_{ij}^{(k)}$ is equal to the number of walks of length k from $V_i$ to $V_j$.

Proof

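The proof is by mathematical induction. In the case $k = 1$, it follows from the definition of the adjacency matrix that $a_{ij}$ represents the number of walks of length 1 from $V_i$ to $V_j$. Assume for some m that each entry of $A^m$ is equal to the number of walks of length m between the corresponding vertices. Thus, $a_{il}^{(m)}$ is the number of walks of length m from $V_i$ to $V_l$. Now on the one hand, if there is an edge $\{V_l, V_j\}$, then $a_{il}^{(m)}a_{lj} = a_{il}^{(m)}$ is the number of walks of length $m + 1$ from $V_i$ to $V_j$ of the form

$$V_i \to \cdots \to V_l \to V_j$$

On the other hand, if $\{V_l, V_j\}$ is not an edge, then there are no walks of length $m + 1$ of this form from $V_i$ to $V_j$ and

$$a_{il}^{(m)}a_{lj} = a_{il}^{(m)} \cdot 0 = 0$$

It follows that the total number of walks of length $m + 1$ from $V_i$ to $V_j$ is given by

$$a_{i1}^{(m)}a_{1j} + a_{i2}^{(m)}a_{2j} + \cdots + a_{in}^{(m)}a_{nj}$$

But this is just the (i, j) entry of $A^{m+1}$.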

∎
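The theorem can be tested against a direct count on a small graph. The sketch below assumes a hypothetical graph on four vertices with edges {V1, V2}, {V1, V3}, {V2, V3}, and {V3, V4}, and takes the adjacency matrix to have a 1 in position (i, j) exactly when the two vertices are joined by an edge. The helper `count_walks` enumerates every sequence of intermediate vertices by brute force; vertices are indexed from 0 in the code.

```python
from itertools import product


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def matpow(A, k):
    """Return A^k for a square matrix A and a positive integer k."""
    result = A
    for _ in range(k - 1):
        result = matmul(result, A)
    return result


def count_walks(A, i, j, k):
    """Count walks of length k from vertex i to vertex j by enumeration."""
    n = len(A)
    total = 0
    for middle in product(range(n), repeat=k - 1):
        walk = (i,) + middle + (j,)
        # A walk is valid when every consecutive pair of vertices is an edge.
        if all(A[walk[t]][walk[t + 1]] == 1 for t in range(k)):
            total += 1
    return total


# Adjacency matrix of the hypothetical graph described above.
A = [[0, 1, 1, 0],
     [1, 0, 1, 0],
     [1, 1, 0, 1],
     [0, 0, 1, 0]]

k = 3
A_k = matpow(A, k)
brute = [[count_walks(A, i, j, k) for j in range(4)] for i in range(4)]

print(A_k == brute)
print(A_k == [list(col) for col in zip(*A_k)])    # A^k is symmetric
```

The brute-force count grows exponentially with k, whereas the matrix power needs only k - 1 multiplications, which is the practical value of the theorem.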

Example 7

To determine the number of walks of length 3 between any two vertices of the graph in Figure 1.4.2, we need only compute $A^3$.

Thus, the number of walks of length 3 from to is . Note that the matrix $A^3$ is symmetric. This reflects the fact that there are the same number of walks of length 3 from $V_i$ to $V_j$ as there are from $V_j$ to $V_i$.