6.5 The Singular Value Decomposition

In many applications, it is necessary either to determine the rank of a matrix or to determine whether the matrix is deficient in rank. Theoretically, we can use Gaussian elimination to reduce the matrix to row echelon form and then count the number of nonzero rows. However, this approach is not practical in finite-precision arithmetic. If A is rank deficient and U is the computed echelon form, then, because of rounding errors in the elimination process, it is unlikely that U will have the proper number of nonzero rows. In practice, the coefficient matrix A usually involves some error. This may be due to errors in the data or to the finite number system. Thus, it is generally more practical to ask whether A is “close” to a rank-deficient matrix. However, it may well turn out that A is close to being rank deficient and the computed row echelon form U is not.

In this section, we assume throughout that A is an m×n

The σi

We begin by showing that such a decomposition is always possible.

Theorem 6.5.1 The SVD Theorem

If A is an m×n

Proof

ATA

Hence,

We may assume that the columns of V have been ordered so that the corresponding eigenvalues satisfy

The singular values of A are given by

Let r denote the rank of A. The matrix ATA

The same relation holds for the singular values

Now let

and

Hence, ∑1

The column vectors of V2

and, consequently, the column vectors of V2

and since V is an orthogonal matrix, it follows that

So far we have shown how to construct the matrices V and Σ of the singular value decomposition. To complete the proof, we must show how to construct an m×m

or, equivalently,

Comparing the first r columns of each side of (3), we see that

Thus, if we define

and

then it follows that

The column vectors of U1

It follows from (4) that each uj,1≤j≤r

It follows from Theorem 5.2.2 that u1,…,um

∎

Observations

Let A be an m×n

The singular values σ1,…,σn

σ1,…,σn of A are unique; however, the matrices U and V are not unique.Since V diagonalizes ATA

ATA , it follows that the vjvj ’s are eigenvectors of ATAATA .Since AAT=UΣΣTUT

AAT=UΣΣTUT , it follows that U diagonalizes AATAAT and that the ujuj ’s are eigenvectors of AATAAT .Comparing the jth columns of each side of the equation

AV=UΣAV=UΣ we get

Avj=σjujj=1,…,nAvj=σjujj=1,…,n Similarly,

ATU=VΣTATU=VΣT and hence

ATuj=σjvjfor j=1,…,nATuj=0for j=n+1,…,mATuj=σjvjATuj=0for j=1,…,nfor j=n+1,…,m The vj

vj ’s are called the right singular vectors of A, and the ujuj ’s are called the left singular vectors of A.If A has rank r, then

v1,…,vr

v1,…,vr form an orthonormal basis for R(AT)R(AT) .vr+1,…,vn

vr+1,…,vn form an orthonormal basis for N(A)N(A) .u1,…,ur

u1,…,ur form an orthonormal basis for R(A)R(A) .ur+1,…,um

ur+1,…,um form an orthonormal basis for N(AT)N(AT) .

The rank of the matrix A is equal to the number of its nonzero singular values (where singular values are counted according to multiplicity). The reader should be careful not to make a similar assumption about eigenvalues. The matrix

M=[0100001000010000]M=⎡⎣⎢⎢⎢⎢0000100001000010⎤⎦⎥⎥⎥⎥ for example, has rank 3 even though all of its eigenvalues are 0.

In the case that A has rank r<n

r<n , if we setU1=(u1,u2,…,ur)V1=(v1,v2,…,vr)U1=(u1,u2,…,ur)V1=(v1,v2,…,vr) and define Σ1

Σ1 as in equation (1), thenA=U1Σ1VT1(6)A=U1Σ1VT1 The factorization (6) is called the compact form of the singular value decomposition of A. This form is useful in many applications.

Example 1

Let

Compute the singular values and the singular value decomposition of A.

SOLUTION

The matrix

has eigenvalues λ1=4

diagonalizes ATA

The remaining column vectors of U must form an orthonormal basis for N(AT)

Since these vectors are already orthogonal, it is not necessary to use the Gram–Schmidt process to obtain an orthonormal basis. We need only set

It then follows that

∎

Visualizing the SVD

If we view an m×n

are mutually orthogonal and the corresponding unit vectors u1,u2,…,ur

Example 2

Let

To find a pair of right singular vectors of A, we must find a pair of orthonormal vectors x and y for which the image vectors Ax and Ay are orthogonal. Choosing the standard basis vectors for ℝ2

are not orthogonal. See Figure 6.5.1.

Figure 6.5.1.

One way to search for the right singular vectors is to simultaneously rotate this initial pair of vectors around the unit circle and for each rotated pair

check to see if Ax and Ay are orthogonal. For the given matrix A, this will happen when the tip of our initial x vector gets rotated to the point (0.6,0.8). It follows that the right singular vectors are

Since

it follows that the singular values are σ1=1.5

∎

Figure 6.5.2.

Numerical Rank and Lower Rank Approximations

If A is an m×n

We will not prove this result, since the proof is beyond the scope of this text. Assuming that the minimum is achieved, we will show how such a matrix X can be derived from the singular value decomposition of A. The following lemma will be useful.

Lemma 6.5.2

If A is an m×n

Proof

∎

If A has singular value decomposition UΣVT

Since

It follows that

Theorem 6.5.3

Let A=UΣVT

In particular, if A′=UΣ′VT

then

Proof

Let X be a matrix in M satisfying (7). Since A′∈?, it follows that

We will show that

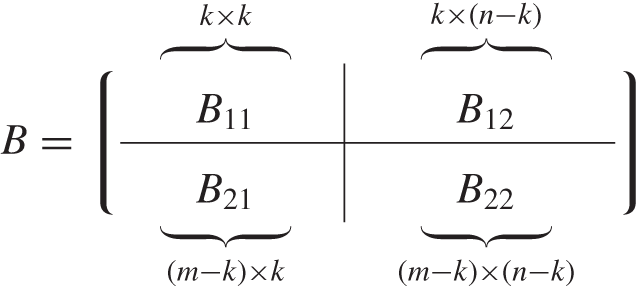

and hence that equality holds in (8). Let QΩPT be the singular value decomposition of X, where

If we set B=QTAP, then A=QBPT, and it follows that

Let us partition B in the same manner as Ω.

It follows that

We claim that B12=O. If not, then define

The matrix Y is in ? and

But this contradicts the definition of X. Therefore, B12=O. In a similar manner, it can be shown that B21 must equal O. If we set

then Z∈M and

It follows from the definition of X that B11 must equal ΩK. If B22 has singular value decomposition U1ΛVT1, then

Let

Now,

and hence it follows that the diagonal elements of Λ are singular values of A. Thus,

It then follows from (8) that

∎

If A has singular value decomposition UΣVT, then we can think of A as the product of UΣ times VT. If we partition UΣ into columns and VT into rows, then

and we can represent A by an outer product expansion

If A is of rank n, then

will be the matrix of rank n−1 that is closest to A with respect to the Frobenius norm. Similarly,

will be the nearest matrix of rank n−2, and so on. In particular, if A is a nonsingular n×n matrix, then A′ is singular and ‖A−A′‖F=σn. Thus, σn may be taken as a measure of how close a square matrix is to being singular.

The reader should be careful not to use the value of det(A) as a measure of how close A is to being singular. If, for example, A is the 100×100 diagonal matrix whose diagonal entries are all 12, then det(A)=2−100; however, σ100=12. By contrast, the matrix in the next example is very close to being singular even though its determinant is 1 and all its eigenvalues are equal to 1.

Example 3

Let A be an n×n upper triangular matrix whose diagonal elements are all 1 and whose entries above the main diagonal are all −1:

Notice that detdet(A)=det(A−1)=1 and all the eigenvalues of A are 1. However, if n is large, then A is close to being singular. To see this, let

The matrix B must be singular, since the system Bx=0 has a nontrivial solution x=(2n−2,2n−3,…,20,1)T. Since the matrices A and B differ only in the (n,1) position, we have

It follows from Theorem 6.5.3 that

Thus, if n=100, then σn≤1/298 and, consequently, A is very close to singular.

∎

Example 4

Suppose that A is a 5×5 matrix with singular values

and suppose that the machine epsilon is 5×10−15. To determine the numerical rank, we compare the singular values to

Since three of the singular values are greater than 10−13, the matrix has numerical rank 3.

∎

Application 2 Digital Image Processing

A video image or photograph can be digitized by breaking it up into a rectangular array of cells (or pixels) and measuring the gray level of each cell. This information can be stored and transmitted as an m×n matrix A. The entries of A are nonnegative numbers corresponding to the measures of the gray levels. Because the gray levels of any one cell generally turn out to be close to the gray levels of its neighboring cells, it is possible to reduce the amount of storage necessary from mn to a relatively small multiple of m+n+1. Generally, the matrix A will have many small singular values. Consequently, A can be approximated by a matrix of much lower rank.

If A has singular value decomposition UΣVT, then A can be represented by the outer product expansion

The closest matrix of rank k is obtained by truncating this sum after the first k terms:

The total storage for Ak is k(m+n+1). We can choose k to be considerably less than n and still have the digital image corresponding to Ak very close to the original. For typical choices of k, the storage required for Ak will be less than 20 percent of the amount of storage necessary for the entire matrix A.

Figure 6.5.3 shows an image corresponding to a 176×260 matrix A and three images corresponding to lower rank approximations of A. The gentlemen in the picture are (left to right) James H. Wilkinson, Wallace Givens, and George Forsythe (three pioneers in the field of numerical linear algebra).

Figure 6.5.3.

Courtesy Oakridge National Laboratory, U.S. Dept. of Energy

Application 3 Information Retrieval-Latent Semantic Indexing

We return again to the information retrieval application discussed in Sections 1.3 and 5.1. In this application, a database of documents is represented by a database matrix Q. To search the database, we form a unit search vector x and set y=QTx. The documents that best match the search criteria are those corresponding to the entries of y that are closest to 1.

Because of the problems of polysemy and synonymy, we can think of our database as an approximation. Some of the entries of the database matrix may contain extraneous components due to multiple meanings of words, and some may miss including components because of synonymy. Suppose that it were possible to correct for these problems and come up with a perfect database matrix P. If we set E=Q−P, then, since Q=P+E, we can think of E as a matrix representing the errors in our database matrix Q. Unfortunately, E is unknown, so we cannot determine P exactly. However, if we can find a simpler approximation Q1 for Q, then Q1 will also be an approximation for P. Thus, Q1=P+E1 for some error matrix E1. In the method of latent semantic indexing (LSI), the database matrix Q is approximated by a matrix Q1 with lower rank. The idea behind the method is that the lower rank matrix may still provide a good approximation to P and, because of its simpler structure, may actually involve less error; that is, ∥E1∥<∥E∥.

The lower rank approximation can be obtained by truncating the outer product expansion of the singular value decomposition of Q. This is equivalent to setting

and then setting Q1=U1Σ1VT1, the compact form of the singular value decomposition of the rank r matrix. Furthermore, if r<min(m,n)/2, then this factorization is computationally more efficient to use and the searches will be speeded up. The speed of computation is proportional to the amount of arithmetic involved. The matrix vector multiplication QTx requires a total of mn scalar multiplications (m multiplications for each of the n entries of the product). In contrast, QT1=V1Σ1UT1, and the multiplication QT1x=V1(Σ1(U1xT)) requires a total of r(m+n+1) scalar multiplications. For example, if m=n=1000 and r=200, then

The search with the lower rank matrix should be more than twice as fast.

Application 4 Psychology—Principal Component Analysis

In Section 5.1, we saw how psychologist Charles Spearman used a correlation matrix to compare scores on a series of aptitude tests. On the basis of the observed correlations, Spearman concluded that the test results provided evidence of common basic underlying functions. Further work by psychologists to identify the common factors that make up intelligence has led to development of an area of study known as factor analysis.

Predating Spearman’s work by a few years is a 1901 paper by Karl Pearson analyzing a correlation matrix derived from measuring seven physical variables for each of 3000 criminals. This study contains the roots of a method popularized by Harold Hotelling in a well-known paper published in 1933. The method is known as principal component analysis.

To see the basic idea of this method, assume that a series of n aptitude tests is administered to a group of m individuals and that the deviations from the mean for the tests form the columns of an m×n matrix X. Although, in practice, column vectors of X are positively correlated, the hypothetical factors that account for the scores should be uncorrelated. Thus, we wish to introduce mutually orthogonal vectors y1,y2,. . . ,yr corresponding to the hypothetical factors. We require that the vectors span R(X), and hence the number of vectors, r, should be equal to the rank of X. Furthermore, we wish to number these vectors in decreasing order of variance.

The first principal component vector, y1, should account for the most variance. Since y1 is in the column space of X, we can represent it as a product Xv1 for some v1∈ℝn. The covariance matrix is

and the variance of y1 is given by

The vector v1 is chosen to maximize vTSv over all unit vectors v. This can be accomplished by choosing v1 to be a unit eigenvector of XTX belonging to its maximum eigenvalue λ1. (See Exercise 28 of Section 6.4.) The eigenvectors of XTX are the right singular vectors of X. Thus, v1 is the right singular vector of X corresponding to the largest singular value σ1=√λ1. If u1 is the corresponding left singular vector, then

The second principal component vector must be of the form y2=Xv2. It can be shown that the vector which maximizes vTSv over all unit vectors that are orthogonal to v1 is just the second right singular vector v2 of X. If we choose v2 in this way and u2 is the corresponding left singular vector, then

and since

it follows that y1 and y2 are orthogonal. The remaining yi’s are determined in a similar manner.

In general, the singular value decomposition solves the principal component problem. If X has rank r and singular value decomposition X=U1Σ1VT1 (in compact form), then the principal component vectors are given by

The left singular vectors u1,. . . ,un are the normalized principal component vectors. If we set W=Σ1VT1, then

The columns of the matrix U1 correspond to the hypothetical intelligence factors. The entries in each column measure how well the individual students exhibit that particular intellectual ability. The matrix W measures to what extent each test depends on the hypothetical factors.