Introduction

History is a nightmare from which I am trying to awake.

—James Joyce

Four decades ago a program of a million lines was regarded as huge, reserved for only the most massive mainframe systems in the bowels of the Department of Defense (DoD¹). It was routinely estimated that to build such programs would require 1,000 engineers working for a decade. Today most of the applications on a PC are well over a million lines, and many are in the 10 million range. Moreover, they are expected to be built in a couple of years or less. So programs are growing ever larger while clients expect them to be developed with less effort.

In today’s high-tech society rapid obsolescence is routine, and the economic model of the firm emphasizes growth more than ever before. These forces create the need for more, different, and better products in shorter time. Since all those new products depend upon ever-increasing amounts of software to run, the pressure on software developers to do their part to decrease time-to-market is relentless.

Developers are also assaulted on another front. Four decades ago the national average was 150 defects per KLOC² for released code. That was back in the good old days when no one had a clue how to go about writing “good” software. The GOTO and global data reigned, designs were done on blackboards and cocktail napkins, and the DEL key was the programmer’s favorite. Developing software was the arcane specialty of a few mutated electrical engineers so, frankly, nobody really cared much if it was buggy.

Alas, such a utopia could not last. In the ’70s and ’80s two things happened. First, there was a quality revolution led by the Japanese that was quickly followed by the rest of Asia and, more slowly, by the industrialized West. This led consumers into a new era where they no longer had to plan on their car or TV being in the shop for one week out of six. Consumers really liked that. Second, soon after this quality revolution, software began to weasel its way into virtually everything. Suddenly software stood out as the only thing that was always breaking. Now that consumers were aware of a better way, they didn’t like that at all.

So while software developers were trying to cope with building ever-larger programs in ever-decreasing time, they suddenly found that they were also expected to get to 5-sigma defect rates.³ To make matters worse, a popular slogan of the ’80s became “Quality is Free.” No matter that every software developer was certain that this was hogwash because software quality is a direct trade-off against development time. Sadly, the market forces were then and are now beyond the influence of software developers, so no one paid any attention to their wails. Thus the ’80s brought new meaning to the term “software crisis,” mostly at the expense of developer mental health.

The second major event was an extraordinary explosion in the technology of computing. Previously the technological advances were mostly characterized by new languages and operating systems. But the huge growth of PC software, the demand for interoperability among programs, and the emergence of the World Wide Web as a major force in computing resulted in a great deal of innovation.⁴ Today developers face a mind-boggling set of alternatives for everything from planning tools through architectural strategies to testing processes. Worse, new technologies are being introduced before developers are fluent in the old ones.

Finally, developers are faced with a constant parade of requirements changes. The computing space is not the only domain that is facing increasing innovation and change. For core management information systems the Financial Accounting Standards Board (FASB), the IRS, and sundry other agencies in the United States decree changes with annoying regularity. Product competition causes marketeers to relentlessly press for new features as products are developed to keep pace with competitor announcements. Similarly, the standards, techniques, and technologies of the engineering disciplines are also changing rapidly as the academics strive to bring order to this chaos. The days of the “requirements freeze” and the project-level waterfall model are long gone.

To summarize, modern software developers are faced with an ancient problem: putting five pounds of stuff in a three-pound bag as quickly as possible without spilling any. We have better tools, methodologies, processes, and technologies today than we did a half century ago, but the problems have grown in proportion. The software crisis that Basic Assembly Language (BAL) was supposed to solve is still with us, which segues to this book’s first Ingot of Wisdom:

Software development is an impossible task. The only thing the developer can do is cope and keep smiling.

The State of the Art

A keynote speaker at a conference in 1995 asked everyone who used Microsoft Windows to raise their hand. Several hundred people did. He then asked all those whose system had crashed in the past week to keep their hands up. All but about a half dozen kept their hands up. The keynoter then asked everyone to keep their hands up who thought that Microsoft would go out of business as a result. All but a dozen hands went down. The keynoter used this to validate his assertion that good quality in software was not necessarily a requirement that was important to a software company’s survival.

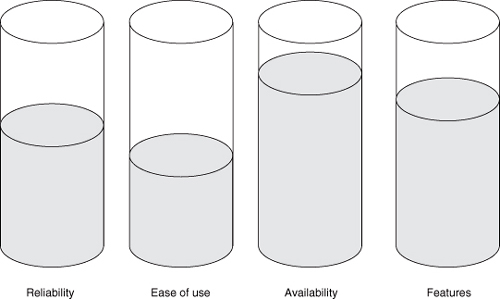

That conclusion is much less warranted today, as Microsoft discovered in its wars with Linux.5 The keynoter was correct, though, that the key determining factor in a software company’s viability in the marketplace is whether they deliver what their customers want. Broadly speaking, this can be viewed as an analogy of four buckets containing fluids representing reliability, features, ease of use, and availability (in the sense of time-to-market) where the cost of filling each of those buckets is different, as shown in Figure I-1. The end customer clearly wants all of those things but the vendor has a limited budget. So the vendor makes a decision that trades total cost against the mix of levels to which the buckets are filled. The marketplace then determines how well the vendor’s decision provides overall satisfaction to the customer.

The decision about how much to fill each bucket is fundamentally a marketing decision. The marketeers earn their big bucks by anticipating what combination of levels will be optimal for a given total price in a given market. The task of minimizing the cost of filling each bucket lies with the software developers. Whenever the developer brings about a cost reduction, that reduction is directly reflected in a competitive advantage for that aspect of the product. The value of that advantage will depend upon the particular market niche. So, to measure the State of the Art of software development the question becomes: What tools are available to reduce the costs of filling the various buckets?

Figure I-1. Trade-offs for conflicting development priorities

We have a variety of software tools to help us, such as version control systems. We also have lots of processes, ranging from XP (eXtreme Programming) to formal methods in clean room shops. And we have as many software design methodologies as there are methodologists.⁶ We also have process frameworks like the RUP⁷ and the CMM,⁸ not to mention languages, integrated development environments (IDEs), metrics systems, estimating models, and all the rest of the potpourri.

We have precious little data to sort out which combinations of the available methodologies, technologies, and practices are best.

The days are long gone when a couple of hackers could sit down in their spare bedrooms and come up with a killer application after a few months of consuming lots of Jolt Cola and cold pizza. If you tell someone at a job interview that you wrote 100 KLOC last year, you can probably kiss that job off because hiring managers have figured out that the only way you can get that kind of productivity is by writing terminally unreliable and utterly unmaintainable crap. To effectively lower the cost of filling those buckets you need to go at software development in an organized and systematic way.

Because of this there is a lot of lip service paid to software engineering in today’s industry rags. Alas, we are probably a long way from respectability as an engineering discipline. Software development is still an art, although it is more bilious green than black. The art lies in cobbling together a coherent development system that is appropriate for a given environment from a hodgepodge of disparate practices and tools. Instead of providing discipline for putting together such systems, our “software engineering” merely says, “Here’s a framework and lots of alternatives to drape on it. Selecting the right alternatives and gluing them together correctly for the particular development environment is left as an exercise for the student.”

What Works

Nonetheless, the reality is that the state of the art of software development has improved significantly over the past half century. The same number of people routinely develop much bigger programs with much better quality in shorter time, so we have to be doing something right. The tricky part is to figure out what things are more right than others.

Initially there was a collection of hard-won lessons based upon decades of rather rocky experience prior to 1970. For example, once upon a time FORTRAN’s assigned-GOTO statement and COBOL’s ALTER statement were regarded as powerful tools for elegantly handling complex programming problems. A decade later, programming managers were making their use grounds for summary dismissal because the programs using those features tended to be unmaintainable when requirements changed.⁹

All these hard-won lessons formed a notion of developer experience. The programmers who had been around long enough to make a lot of mistakes and who were bright enough to learn from those mistakes became software wizards. Unfortunately, each developer essentially had to reinvent this wheel because there was no central body of knowledge about software development.

Starting in the late ’60s a variety of methodologies for programming in 3GLs and for software design sprang up. The earliest ones simply enumerated the hard-won practices and mistakes of the past in a public fashion for everyone’s benefit. Soon, though, various software wizards began to combine these practices into cohesive design methodologies. These methodologies differed in detail but shared a number of characteristics, so they were grouped under the umbrella of Structured Development (SD). Because they were rather abstract views (the big picture of software design), the authors found it convenient to employ specialized notations. Since many of the wizards were also academicians, the notations tended to be graphical so that theoretical constraints from set and graph theory could be readily applied.

SD was the single most important advance in software development of the middle twentieth century. It quite literally brought order out of chaos. Among other things, it was the engine of growth for corporate data processing (which evolved into the less prosaic information technology in the ’80s), because acres of entry-level COBOL programmers could now churn out large numbers of reports that provided unprecedented visibility into day-to-day corporate operations. Better yet, those reports were usually pretty accurate.

Alas, SD was only the first step on a long road to more and better software development. As one might expect for something so utterly new, it wasn’t a panacea, and by the late ’70s some warts were beginning to show up. Understanding what those warts were is critical to understanding why and how object-oriented development evolved in the ’80s.