Achieving Excellence in Software Development Using Metrics ◾ 95

Product Health Report

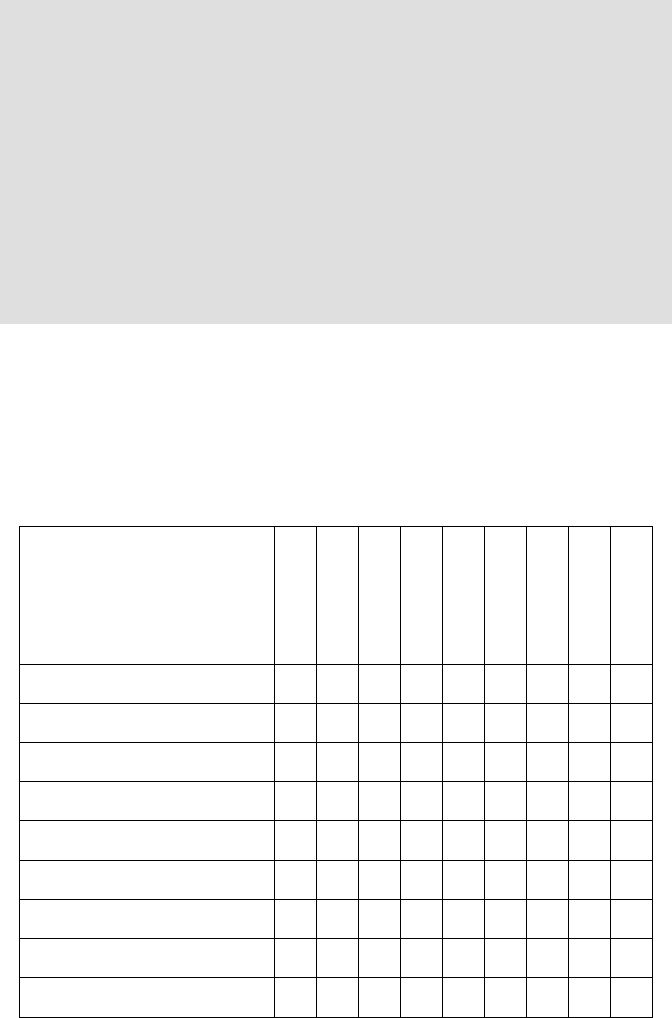

Product metric results can be organized in matrix format to present recorded information about each component. There are many ways one can organize this information. A simple form is shown as follows:

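| Metric | Component 1 | Component 2 | Component 3 | Component 4 | Component 5 | Component 6 | Component 7 | Component 8 | Component 9 |
|---|---|---|---|---|---|---|---|---|---|
| Date tested | | | | | | | | | |
| FP | | | | | | | | | |
| KLOC | | | | | | | | | |
| Design complexity | | | | | | | | | |
| Code complexity | | | | | | | | | |
| Defect count | | | | | | | | | |
| Defects/FP | | | | | | | | | |
| Reliability | | | | | | | | | |
| Residual defects (predicted) | | | | | | | | | |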

specification limits does not have meaning. Delay should be measured in real time. The cost of time depends on the context. For example, a single-day delay in software delivery to a large aerospace system may incur huge costs. Where thousands of vendors are involved, schedule delays can have catastrophic cumulative effects. Thus, the following metric must be used:

Schedule slip = (actual schedule − planned schedule) in days

The time loss must then be converted into monetary loss. Dollars lost due to schedule slip is a better metric than the percentage of process compliance. A 1-day slip might translate into millions of dollars in some large projects. Customers may levy sizable penalties on schedule slips.
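The schedule slip metric and its conversion into money can be sketched in a few lines of Python. The dates and the cost per day of delay below are hypothetical values chosen only for illustration, and the function names are not from the text; a real project would take the daily cost from its contract or business case.

```python
from datetime import date


def schedule_slip_days(planned: date, actual: date) -> int:
    """Schedule slip = (actual schedule - planned schedule) in days."""
    return (actual - planned).days


def slip_cost(slip_days: int, cost_per_day: float) -> float:
    """Monetary loss due to slip. Assumption: early delivery earns no credit."""
    return max(slip_days, 0) * cost_per_day


# Hypothetical delivery dates and daily cost of delay.
planned = date(2024, 3, 1)
actual = date(2024, 3, 4)

slip = schedule_slip_days(planned, actual)
loss = slip_cost(slip, cost_per_day=250_000.0)
print(f"Schedule slip: {slip} days, estimated loss: ${loss:,.0f}")
```

Reporting the loss in currency rather than as a compliance percentage keeps the metric in the terms the customer uses when levying penalties.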

96 ◾ Simple Statistical Methods for Software Engineering

Statistical Process Control Charts

From collected process data, we plot control charts. Simple statistical process control (SPC) techniques can be used to make process control a success. Control charts are often enough to keep project teams alert, so that they deliver quality work products while cutting costs. Each chart can be plotted with results from completed components, arranged in a time sequence, as shown in Figure 6.2. These charts are also known as process performance baselines.
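As a rough illustration of how such a chart could be computed, the sketch below derives a center line and control limits for schedule variance using an individuals (XmR) chart. The choice of the XmR chart is an assumption; the text does not say which chart type is used. The data values are hypothetical, and only the 7% goal line is taken from Figure 6.2.

```python
from statistics import mean


def xmr_limits(values):
    """Return (lcl, center, ucl) for an individuals (XmR) control chart."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    center = mean(values)
    # Moving range: absolute difference between consecutive observations.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    # 2.66 is the usual XmR constant (3 / d2, with d2 = 1.128 for ranges of 2).
    half_width = 2.66 * mean(moving_ranges)
    return center - half_width, center, center + half_width


# Hypothetical schedule variance (%) for seven components, in time order.
schedule_variance_pct = [12.0, 9.0, 15.0, 8.0, 11.0, 22.0, 7.0]
GOAL_PCT = 7.0  # goal line shown in Figure 6.2

lcl, center, ucl = xmr_limits(schedule_variance_pct)
out_of_control = [
    (i + 1, v) for i, v in enumerate(schedule_variance_pct) if v < lcl or v > ucl
]
above_goal = [(i + 1, v) for i, v in enumerate(schedule_variance_pct) if v > GOAL_PCT]

print(f"LCL={lcl:.2f}  center={center:.2f}  UCL={ucl:.2f}")
print("Out-of-control points:", out_of_control)
print("Points above goal:", above_goal)
```

The control limits describe what the process actually does, while the goal line describes what the team wants; a process can be in statistical control and still miss its goal.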

Case Study: Early Size Measurements

Measuring size early in the life cycle adds great value. During the first few weeks of any development project, the early size indicators provide clarity to the project teams when things are otherwise fuzzy.

The Netherlands Software Metrics Users Association (NESMA) has developed early function point counting. According to NESMA,

"A detailed function point count is of course more accurate than an estimated or an indicative count; but it also costs more time and needs more detailed specifications. It’s up to the project manager and the phase in the system life cycle as to which type of function point count can be used.

"In many applications an indicative function point count gives a surprisingly good estimate of the size of the application. Often it is relatively easy to carry out an indicative function point count, because a data model is available or can be made with little effort."
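To show why a data model is enough for an indicative count, here is a minimal sketch. It assumes the commonly published NESMA indicative weights of 35 function points per internal logical file and 15 per external interface file; the text above does not state these weights, so they should be checked against current NESMA guidance. The file counts in the example are hypothetical.

```python
# Assumed NESMA indicative weights (not given in the text).
FP_PER_INTERNAL_LOGICAL_FILE = 35
FP_PER_EXTERNAL_INTERFACE_FILE = 15


def indicative_function_points(internal_logical_files: int,
                               external_interface_files: int) -> int:
    """Indicative size estimated from the data model alone."""
    if internal_logical_files < 0 or external_interface_files < 0:
        raise ValueError("file counts must be non-negative")
    return (FP_PER_INTERNAL_LOGICAL_FILE * internal_logical_files
            + FP_PER_EXTERNAL_INTERFACE_FILE * external_interface_files)


# Hypothetical data model: 10 internal logical files, 4 external interface files.
size_fp = indicative_function_points(10, 4)
print(f"Indicative size: {size_fp} FP")
```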

Figure 6.2 Control chart on schedule variance. (Vertical axis: schedule variance %, scaled 0 to 25; horizontal axis: points 1 to 7; goal line at 7%.)


When use cases are known, we can calculate use case points by assigning different weight factors to different actor types. This metric can be set up early in the project and used as an estimator of cost and time. Technical and environmental factors can be incorporated to enrich the use case points for estimation.
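A use case point calculation might look like the following sketch. The actor and use case weights are the values commonly cited for the use case points method (1/2/3 for actors, 5/10/15 for use cases); the text does not list them, so treat them as assumptions. The technical and environmental factors are passed in as ready-made multipliers, and the counts in the example are hypothetical.

```python
# Commonly cited weights for the use case points method (assumed here).
ACTOR_WEIGHTS = {"simple": 1, "average": 2, "complex": 3}
USE_CASE_WEIGHTS = {"simple": 5, "average": 10, "complex": 15}


def use_case_points(actors: dict, use_cases: dict,
                    technical_factor: float = 1.0,
                    environmental_factor: float = 1.0) -> float:
    """Weighted actors plus weighted use cases, scaled by adjustment factors."""
    actor_weight = sum(ACTOR_WEIGHTS[kind] * n for kind, n in actors.items())
    use_case_weight = sum(USE_CASE_WEIGHTS[kind] * n for kind, n in use_cases.items())
    unadjusted = actor_weight + use_case_weight
    return unadjusted * technical_factor * environmental_factor


# Hypothetical counts by complexity class.
actors = {"simple": 2, "average": 1, "complex": 3}
use_cases = {"simple": 4, "average": 6, "complex": 2}

unadjusted_ucp = use_case_points(actors, use_cases)
adjusted_ucp = use_case_points(actors, use_cases,
                               technical_factor=0.9, environmental_factor=1.1)
print(f"Unadjusted: {unadjusted_ucp:.1f}, adjusted: {adjusted_ucp:.2f}")
```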

Similarly, when test cases are developed, a metric called test case point (TCP) can be developed by assigning complexity weights to the test cases. The sum of TCP can be used to estimate effort and schedule.

Alternatively, we can measure object points based on screens and reports in the

software.

Use case points, test case points, and object points are variants of functional size. They can be converted into FP by using appropriate scale factors.

Measure functional size as the project starts.

This will bring clarity into requirements and help in the estimation of cost, schedule, and quality.

Once functional size is measured, the information is used to estimate manpower and time required to execute the project. This is conveniently performed by applying any regression model that correlates size with effort. COCOMO is one such model, or one can use homegrown models for this purpose.
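As one example of such a regression model, the sketch below uses the Basic COCOMO equations with the widely published organic-mode coefficients. Two assumptions should be noted: the coefficients are not given in the text, and Basic COCOMO takes size in KLOC, so a functional size would first have to be converted to lines of code. The 32 KLOC input is hypothetical.

```python
# Basic COCOMO, organic mode (widely published coefficients, assumed here).
EFFORT_A, EFFORT_B = 2.4, 1.05      # effort = A * KLOC ** B, in person-months
SCHEDULE_C, SCHEDULE_D = 2.5, 0.38  # time = C * effort ** D, in months


def cocomo_effort_person_months(kloc: float) -> float:
    """Effort predicted from size by a power-law regression."""
    if kloc <= 0:
        raise ValueError("size must be positive")
    return EFFORT_A * kloc ** EFFORT_B


def cocomo_schedule_months(effort_person_months: float) -> float:
    """Development time predicted from effort."""
    return SCHEDULE_C * effort_person_months ** SCHEDULE_D


size_kloc = 32.0  # hypothetical project size
effort = cocomo_effort_person_months(size_kloc)
duration = cocomo_schedule_months(effort)
average_staff = effort / duration
print(f"Effort: {effort:.1f} person-months, duration: {duration:.1f} months, "
      f"average staff: {average_staff:.1f}")
```

A homegrown model would keep the same shape and replace the coefficients with values fitted to the organization's own project history.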

There is a simpler way to estimate effort. We can identify the type of software we have to develop. Yong Xia identifies six software types: end-user software (developed for the personal use of the developer), management information systems, outsourced projects, system software, commercial software, and military software. On the basis of type, we can anticipate the FP per staff month, which can vary from 1000 to 35 [9]. Using this conversion factor, we can quickly arrive at the effort estimate. Once effort is known, we can derive the time required, again by using available regression relationships.
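The conversion from size to effort is a single division, as the sketch below shows. Only the two ends of the productivity range quoted in the text (1000 and 35 FP per staff month) are used; the 700 FP project size is hypothetical, and the productivity value for any particular software type would come from the cited source.

```python
def effort_staff_months(function_points: float, fp_per_staff_month: float) -> float:
    """Effort = functional size / productivity."""
    if fp_per_staff_month <= 0:
        raise ValueError("productivity must be positive")
    return function_points / fp_per_staff_month


size_fp = 700.0  # hypothetical functional size

# The two ends of the productivity range quoted in the text.
effort_at_highest_productivity = effort_staff_months(size_fp, 1000.0)
effort_at_lowest_productivity = effort_staff_months(size_fp, 35.0)

print(f"{effort_at_highest_productivity:.1f} to "
      f"{effort_at_lowest_productivity:.1f} staff months")
```

The spread between the two results shows how strongly the estimate depends on identifying the software type correctly.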

Early metrics capture functional size and arrive at effort estimates; measurement and estimation are harmoniously blended.

Project Progress Using Earned Value Metrics

Tracking Progress

Whether one builds software or a skyscraper, earned value metrics can be used to advantage. To construct earned value metrics, we need to make two basic observations: schedule and cost are measured at every milestone.

The first achievement of EVM is in the way it distinguishes value from cost. A project earns value by doing work. Value is measured as follows:

Budgeted cost of work is its value.


In EVM terminology, this is referred to as budgeted cost of work. From a project plan, one can see how the project value increases with time. The project is said to “earn value” as work is completed. To measure progress, we define the following metrics.

Planned value = budgeted cost of work scheduled (BCWS)

Earned value = budgeted cost of work performed (BCWP)

An example of project progress tracked with these two metrics is shown in Figure 6.3.

At a glance, one can see how earned value trails behind planned value, graphically illustrating project progress. This is known as the earned value graph.

Tracking Project Cost

The actual cost expended to complete the work reported is measured as the actual cost of work performed (ACWP). Cost information can be included in the earned value graph, as shown in Figure 6.4.

In addition to the earned value graph, we can compute performance indicators such as project variances and performance indices. We can also predict the time and cost required to finish the project using linear extrapolation. These metrics are listed in Data 6.1.

An earned value report would typically include all the earned value metrics and present graphical and tabular views of project progress and project future.

Figure 6.3 Tracking project progress. (Vertical axis: budgeted cost; horizontal axis: time; curves: planned value (BCWS) and earned value (BCWP); markers: review date and release target date.)


The Project Management Body of Knowledge (PMBOK) treats earned value metrics as crucial information for the project manager. Several standards and guidelines are available to describe how EVM works. For example, the EVMS standard released by the U.S. Department of Energy (DOE) treats EVMS information as the basis of an effective project management system to ensure successful project execution.

Figure 6.4 Tracking project cost. (Vertical axis: budgeted cost; horizontal axis: time; curves: planned value (BCWS), earned value (BCWP), and actual cost (ACWP); markers: review date and release target date.)

Data 6.1 Earned Value Metrics

Core Metrics

Budgeted cost of work scheduled (BCWS) (also planned value [PV])

Budgeted cost of work performed (BCWP) (also earned value [EV])

Actual cost of work performed (ACWP) (also actual cost [AC])

Performance Metrics

Cost variance = EV − AC

Schedule variance = EV − PV

Cost performance index (CPI) = EV/AC

Schedule performance index (SPI) = EV/PV

Project performance index (PPI) = SPI × CPI

To complete schedule performance index (TCSPI)

Predictive Metrics

Budget at completion = BAC

Estimate to complete (ETC) = BAC − EV

Estimate at completion (EAC)

EAC = AC + (BAC − EV) Optimistic

EAC = AC + (BAC − EV)/CPI Most likely

EAC = BAC/CPI Most likely, simple (widely used)

EAC = BAC/PPI Pessimistic

Cost variance at completion (VAC) = BAC − EAC
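The metrics in Data 6.1 can be computed from four inputs, as in the sketch below. The class and field names and the sample figures are hypothetical. Cost variance is computed with the standard definition, EV − AC. Two assumptions are labeled in the code: VAC is based on the simple EAC (BAC/CPI), and PV, EV, and AC are taken to be positive so that the indices are defined. TCSPI is omitted because no formula is given for it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EarnedValueSnapshot:
    """Core EVM inputs at one review date, all in the same currency unit."""
    pv: float   # planned value, BCWS
    ev: float   # earned value, BCWP
    ac: float   # actual cost, ACWP
    bac: float  # budget at completion

    def metrics(self) -> dict:
        # Assumption: pv, ev, and ac are positive, so no division by zero.
        cpi = self.ev / self.ac  # cost performance index
        spi = self.ev / self.pv  # schedule performance index
        ppi = spi * cpi          # project performance index
        eac_simple = self.bac / cpi
        return {
            "cost_variance": self.ev - self.ac,
            "schedule_variance": self.ev - self.pv,
            "cpi": cpi,
            "spi": spi,
            "ppi": ppi,
            "etc": self.bac - self.ev,
            "eac_optimistic": self.ac + (self.bac - self.ev),
            "eac_most_likely": self.ac + (self.bac - self.ev) / cpi,
            "eac_simple": eac_simple,
            "eac_pessimistic": self.bac / ppi,
            # Assumption: VAC is computed from the simple EAC.
            "vac": self.bac - eac_simple,
        }


# Hypothetical review-date figures.
snapshot = EarnedValueSnapshot(pv=500.0, ev=400.0, ac=500.0, bac=1000.0)
report = snapshot.metrics()
for name, value in report.items():
    print(f"{name:>18}: {value:10.2f}")
```

Since CPI = EV/AC, the expression AC + (BAC − EV)/CPI simplifies algebraically to BAC/CPI, so the two "most likely" estimates always agree; the optimistic and pessimistic forms bracket them whenever CPI and SPI are below 1.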
