Chapter 1. Introduction

1.1 Definitions

1.1.1 Very-Large-Scale Integration (VLSI)

The amount of functionality that can be integrated on a single chip in current fabrication processes is associated with the term very-large-scale integration (VLSI). Preceding chip design generations were known as small-scale integration (SSI), medium-scale integration (MSI), and large-scale integration (LSI). Actually, VLSI has been used to refer to a succession of several manufacturing process technologies, each providing improved transistor and interconnect dimension scaling. A proposal was made to refer to current designs as ultra-large-scale integration, but the term ULSI has never really gained traction.

The complexity of a new VLSI design project is used to estimate the engineering resources required. However, it is not particularly straightforward to provide a single metric for describing the complexity of a VLSI design and its associated manufacturing technology. The previous fabrication technology generations were predominately dedicated to either digital or analog circuitry. For digital designs, the number of equivalent NAND logic gates was typically applied as the measure of design complexity, and the logic density, measured in gates/mm**2, was the corresponding technology characteristic. The succession of VLSI manufacturing processes has enabled a much richer diversity of digital, analog, and memory functions to be integrated, making a logic gate estimate less applicable to the design and making gates/mm**2 less representative of the process. The embedded memory technologies available from the fabrication process have a major impact on the physical complexity of the chip design, with options for volatile (e.g., SRAM) and non-volatile (e.g., flash) array circuits. For designs with significant on-chip memory requirements, the specific memory cell implementation is a strong factor in estimating the final chip layout area (and, thus, cost). The number of metal interconnect layers available in the VLSI process—especially the electrical resistance and capacitance of each layer—also has a tremendous influence on the engineering resources needed to achieve design targets.

In summary, the complexity of a VLSI design is not easy to define when estimating project difficulty. Comparisons to the resources needed for previous designs are often insightful if a comparable methodology was used. As will be discussed shortly, the ability to reuse existing logical and physical intellectual property is a major consideration when preparing a project plan.

1.1.2 Power, Performance, and Area (PPA)

The high-level objectives for any new design are typically the power, performance, and full-chip area, typically referred to as the “PPA targets.”

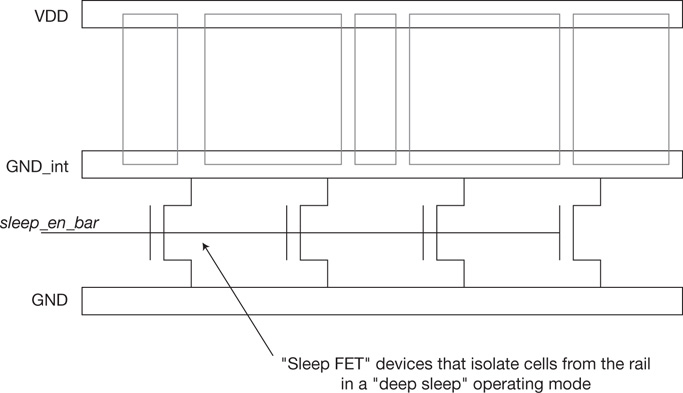

The chip power dissipation target is an integral specification for any product market, from the battery life of a mobile application to the electrical delivery (and cooling) for racks of equipment in a data center. The methodology includes a power calculation flow; actually, multiple flows are used to calculate a power dissipation value. Initially, signal switching activity from functional simulation testcases provides a (relative) measure of active power dissipation, using estimates for signal loading. Subsequently, a more detailed measure is calculated using the physical circuit implementation with resistive losses. Multiple modes of chip operation may require power dissipation data as well. For example, a standby/sleep mode requires a calculation of (inactive) transistor leakage currents rather than the active power associated with switching transients.

The performance targets of a design may involve many separate specifications, and analyzing each of them requires methodology flow steps. The most common performance target is the clock frequency (or, inversely, the clock period) applied to the functional logic timing paths between flops receiving a common clock domain signal. Logic path evaluation must complete in the allocated time period. In addition, a myriad of other (internal and external) design performance specifications are covered by the timing analysis flow, including the following:

(Maximum) clock distribution arrival skew between different flops

(Minimum) test logic clock frequency for the application of test patterns

Chip output signal timing constraints, relative to either an internally sourced clock sent with the data or an applied external clock reference

The area of the chip design is a factor in determining the final product cost. The larger the area, the fewer die sites available on the fabricated silicon wafer. A larger die likely requires a more expensive chip package, as well. The manufacturing production yield is strongly dependent upon the die area due to the probabilistic nature of an (irreparable) defect present on a die site. The accuracy of the chip area estimate prepared as part of the initial project proposal is crucial to achieving production cost targets. The methodology does not have a direct influence on this estimate, perhaps, but it may still play an important role. As mentioned earlier, a trial block design exercise may be useful to test the flow steps and provide data for representative block area estimates. It will also provide insights into the number and type of interconnect layers needed to complete block signal routes and achieve performance targets. The specific metallization stack selected for the block and global signal routes will impact fabrication costs, with each metal layer providing particular signal delay characteristics and signal route capacity (measured in wiring tracks-per-micron on the preferred route direction for the layer).

1.1.3 Application-Specific Integrated Circuits (ASICs)

Electronic products were traditionally designed with commodity part numbers, using SSI, MSI, LSI, microprocessor, memory, and analog (including radio-frequency) packages on printed circuit boards (PCBs). Unique fabrication process technologies were introduced to add commodity programmable logic and programmable (non-volatile) memory parts to provide greater product differentiation. With the transition to VLSI chip designs and processes, a number of factors led to the development of entirely new design methodologies:

The markets for electronic products grew tremendously and became much more diverse. Mobile products required intense focus on reducing power dissipation. Product enclosures pursued unique form factors, minimizing the overall volume. Both trends necessitated maximizing the integration of digital, memory, and analog functions. (Packaging technologies were also driven toward much higher pin counts and areal pin densities for PCB attach.)

Product differentiation became increasingly important to capture market share from competitors.

The advances in EDA tool algorithms were being transferred from academia and corporate research labs to the broader microelectronics industry, providing vastly improved productivity for designers to represent and validate functionality and implement and analyze the physical layout. Hardware description language semantics were introduced to allow functionality to be described at a much higher level of abstraction than the Boolean logic gate schematics used for MSI/LSI designs. For physical design, algorithms for automated circuit placement and signal routing were introduced. The circuits to be placed were selected from a previously released cell library, whose individual layouts had been verified to their logical equivalent and whose circuit delays had been characterized. The complexity of these library elements typically covered a wide range of SSI and MSI logic functions, small memory arrays and register files, input receiver and output driver pad circuits, and (potentially) simple analog blocks (e.g., phased-lock loop, ADC/DAC data converters).

These factors led to the adoption of a new set of products known as application-specific integrated circuits (ASICs).

A number of companies began to offer a full suite of ASIC services, such as developing cell libraries, releasing EDA tool software suites, assisting with PPA estimation, performing cell placement and routing, generating test patterns, releasing product data to manufacturing, executing package assembly and final test, and completing product qualification. Design teams were to follow the ASIC company’s documented methodology. The handoff from design to services consisted of the final logical description, including a netlist of interconnected cells plus performance targets. The detailed netlist was synthesized from the hardware description language functional model, either manually or with the aid of logic synthesis algorithms. In return, the ASIC company provided an upfront services and production cost quote for completing the physical implementation and release to manufacturing, simplifying the budgetary planning overall.

There were still many integrated circuits (ICs) developed using a full custom methodology, where all functional blocks consisted of unique logic circuits and manually drawn physical layouts, without utilizing automated placement and routing of library cells. This custom methodology was used only for high-volume parts, where the intensive engineering resources required could be amortized over the sales volume. (As ASIC designs typically were between commodity LSI parts and custom VLSI designs in terms of complexity, with functionality implemented using an existing cell library, these designs were also referred to as semi-custom.)

1.1.4 System-on-a-Chip (SoC)

As VLSI process technologies continued to evolve, offering increasingly scaled transistor and interconnect dimensions, there were several shifts in the ASIC market:

The complexity of physical library content required to meet application requirements grew—for example, larger and more diverse memory types (caches, tertiary arrays); programmable fuse arrays; high-speed external serial interfaces with serializer/deserializer (SerDes) physical units; and, especially, larger functional blocks (microcontrollers and processor cores for industry standard architectures).

Design teams were seeking multiple, competitive sources of these new library requirements. Rather than relying solely on the library available from the ASIC services provider, design teams sought sourcing of IP from other suppliers. This initiative was either out of financial interests (specifically, lower licensing costs) or technical necessity (e.g., if the ASIC provider did not offer a suitable PPA solution for the IP required).

Design teams sought to be more directly involved in the selection of the silicon fabrication and package assembly/test suppliers. Much as with the negotiations with IP suppliers for library features, design teams were willing to invest additional internal resources to investigate and select production sources. The goal was to more closely manage operational costs and balance supply/demand order forecasts rather than to work through the ASIC services company interface.

EDA software tools began to be marketed independently from the ASIC services company, allowing design teams to develop their own internal methodologies. The breadth of responsibilities for the internal EDA tool support team grew. The design engineers providing (part-time) EDA software support for the ASIC design methodology were consolidated into a single “CAD department.” This new and larger organization was established with the mission to provide comprehensive EDA software support for the internal methodology to all design teams. Comparable tools from different EDA vendors were subjected to benchmark evaluations to ensure suitability with the proposed methodology, confirm PPA results on representative blocks, and measure the tool runtime and IT resources required. These benchmarks also helped the CAD team assess the task of developing flow scripts and utilities around the tool to integrate into the methodology. Software licensing costs from the EDA tool vendor were also a major factor in the final competitive benchmark recommendations.

These shifts in the ASIC market resulted in the growth of several new semiconductor businesses and organizations:

Semiconductor foundries—These companies offer silicon fabrication support to customers submitting designs that have been verified using their process design kit (PDK) of manufacturing layout design rules and transistor/interconnect electrical models.

Outsourced assembly and test vendors (OSATs)—These specialty companies provide a broad set of services, including good die separation from the silicon wafer, die-to-package assembly, and final product test/qualification.

EDA tool vendors—The EDA vendors vary in terms of their software tool offerings. Some focus on point tool applications for a specific methodology flow step. The larger firms have worked to integrate multiple tools into an integrated platform, encompassing multiple flow steps.

Industry standards organizations—To facilitate the use of EDA tools from multiple suppliers in a design methodology, a set of industry-standard data formats were developed for tool input/output at various common step interfaces. For high-impact flow steps, the Institute of Electrical and Electronics Engineers (IEEE) has taken ownership of these evolving standards. For example, the syntax and semantics of the hardware description languages Verilog (and its successor, SystemVerilog) and the VHSIC Hardware Description Language (VHDL) are IEEE standards, subject to periodic updates. The format for representing the electrical characteristics of signal interconnects extracted from a physical circuit layout is the Standard Parasitic Exchange Format (SPEF), another IEEE standard. Other data format standards are maintained by industry consortia, with approval committees comprised of member company representatives. The Open Artwork System Interchange Standard (OASIS) definition for the file-based representation of physical layout data is maintained by the Semiconductor Equipment and Materials International (SEMI) organization.

De facto standards—There are some de facto data representation standards that are not formally approved and maintained. Their use became so widespread, often because of market-leading tools, that most EDA vendors support the adoption of the format to prevent their own products from being at a disadvantage to integrate into a flow step. The Simulation Program with Integrated Circuit Emphasis (SPICE) circuit simulation program reads in a netlist of interconnected transistor models and electrical primitives. After the transient circuit simulation completes, the signal waveform results are written. Both the SPICE netlist and output waveform file formats are de facto standards. The predecessor format to OASIS for layout description, the Graphic Database System (GDS)—along with its long-used successor, GDS-II—is another de facto standard. The Fast Signal Database (FSDB) format is a de facto standard for representing functional logic simulation results.

When evaluating a tool to integrate in the methodology flow, specific attention must be given to the consistency of the input and output file formats between steps. Data translation utilities added into a flow introduce the risk of data integrity loss if the utility does not recognize—or, worse, misinterprets—a data file record. This is especially important for de facto data formats. (It is also crucial to examine an EDA vendor’s tool errata documentation for any semantics included in a standard specification that are not fully supported to determine the potential impact to the design team.)

Intellectual property suppliers—The ASIC shift is perhaps best illustrated by the importance of companies that have focused on the development of complex intellectual property licensed to design companies for integration. Design teams (and/or their ASIC services provider) may not have the expertise or financial and schedule resources to develop all the diverse functionality required for an upcoming project. A separate IP vendor, often working in close collaboration with the foundries during initial development of a new process technology, could fabricate and qualify a large IP block. The emergence of standard (external) bus interface definitions (e.g., DDRx, PCIe, USB, InfiniBand) has accelerated this IP adoption method. Design teams need not be experts in the functional and electrical details of these protocols but can leverage the availability of existing IP for reuse. (Note that this discussion refers to IP functions that include a physical implementation, in a specific foundry’s process technology; there are also functional model-only IP offerings, described shortly.)

Several EDA vendors have also recently expanded their product offerings to include IP libraries. There is a natural synergy between advanced EDA tool development and IP design for a new fabrication process. The EDA team collaborates with the foundry during process development to identify new tool features that are required. The IP team also has an early collaboration with the foundry to prepare designs for fabrication and testing so that the IP is available for leading customers when the process qualification is complete. As part of the EDA vendor engineering staff, the IP team also helps test and qualify the new software (with software licenses for free, to be sure). This synergistic relationship between tool and IP development is proving to be financially successful for the EDA vendor and also to improve the quality of new EDA software version releases.

For similar reasons, the foundries are also increasingly offering IP libraries. The foundries have internal process development teams focused on specific circuit requirements (e.g., device characterization and reliability, memory bit cell technology, fuse programmability). As with the EDA vendors, the foundries are also investing in enhancing internal circuit design expertise. It is a natural extension of these process development areas for the foundry to offer customers IP libraries consisting of diverse sets of functionality, including a base cell library, I/O pad circuits, memory (and fuse) arrays, and so on. The foundry may offer the IP library at an aggressive licensing price as a means of attracting more customer “design wins” to secure the corresponding manufacturing volume and revenue.

In summary, VLSI designs have evolved in complexity from the earlier ASIC approach, both in terms of the diversity of integrated IP and the unique methodologies applied by the design team. A description that better describes the current class of VLSI designs is system-on-a-chip (SoC).

1.2 Intellectual Property (IP) Models

SoC chip designs incorporate a range of physical IP components of varying functional and PPA complexities.

1.2.1 Standard Cells

Basic logic functions are collected into a library of physical layout cells with corresponding electrical models. Design blocks are implemented by the placement and routing of these cells to match the description of a logical netlist. The layout of these standard cells adheres to a template applied throughout the cell library. Specifically, the template defines:

The position and width of (lower-level) metal layer power and ground rails

The number of lower-level metal wiring tracks available for signal connections within the cell implementation

Valid locations for pins to connect to the cells with signal routes

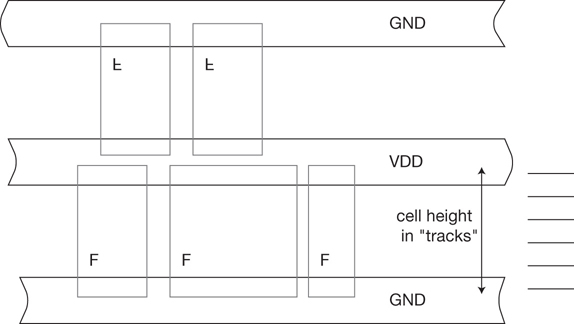

The cell layouts are thus constrained in one dimension (e.g., the vertical dimension in Figure 1.1) and typically span a variable width in the other dimension to complete the layout connections. In Figure 1.1, note that the standard cell template employs a shared power and ground rail design. The orientation of alternating rows of cells would be flipped in the vertical direction to share rail connections with the adjacent row. The width of the rails in the template would be designed to provide a low-resistive voltage drop for the current provided to both adjacent cell rows.

Figure 1.1 Standard cell layout template example, illustrating shared power and ground rails. Logic cell layouts are one (perhaps two) template rows tall and of variable width. The template cell height spans an integral number of horizontal wiring tracks.

The standard cell library content is thus limited to the logic functions that can be successfully connected within the wiring track limit. Typically, flip-flop circuits are the most difficult to complete; their layouts often define the allocation of intra-cell tracks in the template.

Given the wiring track constraint, some cell layouts may add connections on an upper metal layer, which is typically used for inter-cell routes. In these cases, the placement and routing methodology needs to accept a partial route blockage map, honoring the unavailability of these tracks for signal routes due to the cell layouts. Some libraries may also include “two-high” cells, under the assumption that the placement flow can accommodate both one-high and two-high dimensions; this enables a richer set of library logic cells to be available.

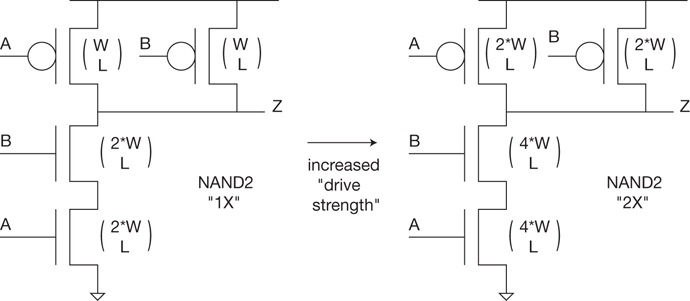

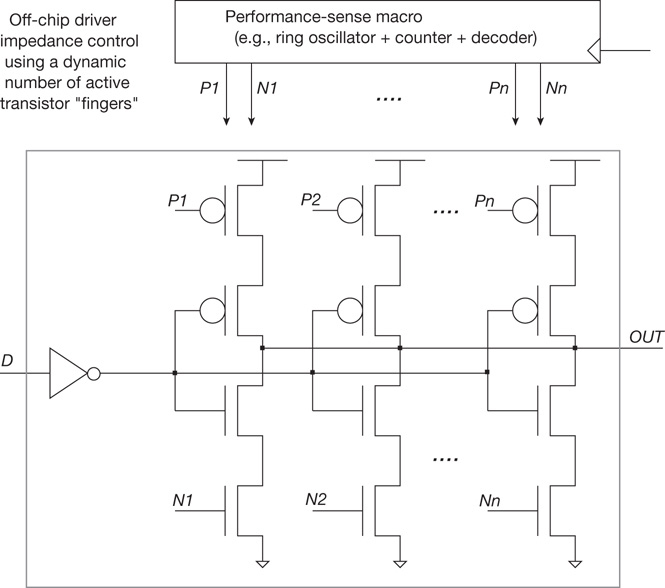

Cell Drive Strengths

To assist with PPA optimization during physical implementation, each logic function in the standard cell library is likely to be offered in multiple drive strengths. For example, a function built with minimum-sized transistors may be denoted as 1X. Increasing transistor sizes may enable 2X, 4X, and so on alternatives, as illustrated in Figure 1.2. The template height constraint limits the transistor dimensions in the physical layout; larger drive strength variants need multiple transistor fingers connected in parallel, spanning a greater cell width. (A transistor finger is an individual device with common drain, source, gate, and substrate connections to the other fingers connected in parallel. The total device width is the sum of the individual finger widths. Section 10.2 discusses layout proximity effects, where the surrounding layout topology impacts the device performance. In this case, the device current model for the individual fingers varies, necessitating expansion of the total device width into the individual fingers for detailed circuit analysis.)

Figure 1.2 Cell library functions are provided in multiple drive strengths. The wider devices used for higher drive strengths may require a layout using parallel fingers. The pin input capacitance is the sum of all device input capacitances, each of which is proportional to the product of device width and length.

The cell layouts must all observe a minimum half-rule space within the template boundary to allow adjacent cell placements to satisfy the process lithography requirements. Although the output impedance of a higher-drive-strength cell is reduced, providing improved signal transition delays, the capacitive loading presented to the circuits sourcing the input pins is increased, adversely impacting their performance and power dissipation. Performance optimization requires an incremental path-oriented algorithm for cell drive strength selection to evaluate the relative trade-offs between improved drive strength and increased loading for cells and interconnects in the path.

Cell Threshold Voltage Variants

Another cell library option is to offer the logic functions with circuit variants using different transistor threshold voltages available in the fabrication process technology. For example, a single drive strength of a cell could be available using standard Vt (SVT), high Vt (HVT), and low Vt (LVT) transistors. The lithographic layout rules from the foundry typically facilitate placing SVT, LVT, and HVT transistors in close proximity; that is, SVT, LVT, and HVT cells could be placed adjacent to each other. (In the most advanced process nodes, there are new lithographic rules impacting the adjacency of different device threshold types, necessitating additional spacing between cell threshold variants.)

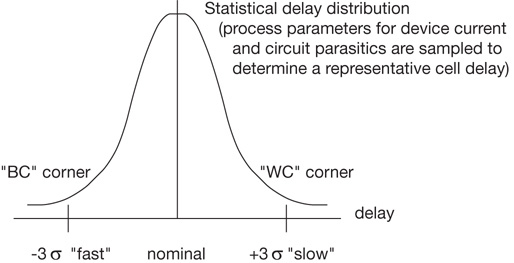

The circuit transition delay for an LVT cell is improved over its SVT equivalent, without the cell area and input capacitive loading increase associated with a higher-drive-strength version. The trade-off is that the LVT cell static leakage current power dissipation is greatly increased. Whereas a 2X drive strength transistor size effectively doubles the leakage current over 1X, the leakage current for an SVT-to-LVT cell exchange increases exponentially. (If a circuit timing delay path is far less than the allocated clock period, the positive timing slack could be applied to the substitution of HVT cells for SVT equivalents, reducing leakage power substantially.) A visual representation of this trade-off is provided by the Ion-versus-Ioff curve from the foundry shown in Figure 1.3, which illustrates the process target for transistor on-current drive strength (in saturation mode) versus the off-leakage current, for a reference transistor size.

Figure 1.3 Example of an Ion-versus-Ioff curve from the foundry, illustrating the process target for device currents with different Vt threshold voltages (in saturation mode, for a reference device size). The curve allows a (rough) comparison between processes. The “device current gain at constant leakage power” is depicted for two process targets (with scaled reference device sizes).

Note that the graph axes in Figure 1.3 are log-linear, highlighting the exponential dependence of Ioff on transistor Vt. The line connecting the LVT, SVT, and HVT points is artificial; it simply illustrates the linear Ion and exponential Ioff dependence on Vt. There are no transistor design offerings along the curve; they are strictly at the different Vt fabrication options. Nevertheless, the curve is extremely informative, in case the foundry and its customers opt to evaluate an investment in another transistor Vt offering for specific PPA requirements (e.g., extra high Vt [EHVT]). Such a curve is also often used to compare successive process generations. The horizontal distance between the curves for two processes is a benchmark for performance improvement at constant leakage power (for the reference size transistor in the two processes). The vertical distance estimates the leakage power improvement at constant performance. It should be highlighted that these are transistor-specific data points; to realize these gains between processes, there are also scaling assumptions on interconnect lengths, interconnect R*C parasitics, and power supply values that need to be evaluated. The selection of the optimum combination of drive strengths and Vt offerings for millions of cells in a netlist for PPA optimization is perhaps the most complex set of EDA tool algorithms.

1.2.2 General-Purpose I/Os (GPIOs)

The base cell library also includes input pad receivers and output pad drivers (not integrated into a specific physical IP interface block). These general-purpose input/output (GPIO) cells have numerous variants with different characteristics, such as:

Vin and Vout logic threshold levels (Vout measured with a specific DC load current)

Output driver impedance, for matching to the package impedance to minimize transmission line reflections

Bidirectional driver/receiver circuits (with high-impedance driver enable control), in addition to unidirectional drivers

Receivers with hysteresis feedback to improve noise immunity

Electrostatic discharge (ESD) protection (see Section 16.3)

The I/O cells have their own layout template, which is much larger than the standard cell template. These circuits incorporate very-high-output drive strengths with many parallel transistor fingers and much larger power/ground rails to deliver greater currents at low voltage drop. In addition, the voltage levels connected to these GPIO circuits are typically distinct from the internal IP voltages. For example, external interface voltages may be in the range of 1.2 to 1.8V, while the internal circuitry for current process nodes operates below 1V. The GPIO circuits may also use a different set of thick gate oxide dielectric field-effect transistors to support exposure to higher voltages, with additional layout constraints. Although some methodologies support the placement of GPIO cells scattered throughout the die area, the majority of methodologies allocate the GPIO templates (and related I/O power/ground rails) strictly to the die perimeter.

1.2.3 Macro-cells

The elementary logic circuits in the standard cell library are the components for the physical implementation of larger functional designs. However, additional productivity in generating the logic netlist could be achieved if more complex logic cells were available. A larger set of PPA optimizations would likely be enabled as well. For example, an n-bit register consisting of n flops sharing a common clock is a typical complex logical design element in a library. An n-bit multiplexer sharing common select signal(s) is also common. An n-bit adder is another, with a performance dependence on the specific adder implementation. In all these cases, there is a goal to minimize the delay of a critical timing path by reducing the loading on a high fan-out input connection or optimizing internal (multi-stage) logic circuit delays.

Two types of offerings have been added to cell libraries for these more complex macro-cells: a custom physical implementation and a logic-only representation. As mentioned earlier, physical design methodologies are often required to support larger cell dimensions than a one-high template row standard cell layout. Macro layouts can be added to the library if the methodology supports more varied physical cell dimensions. Within the layout, special focus would be given to ensuring low skew of high fan-out inputs and short delays for critical paths within the macro. (There is also the decision of whether the macro layout would be given the flexibility to discard the template power/ground rail definitions from the standard cell library and employ a unique rail design, with continuity only present at the macro edges for abutted cells.) However, offering these additional custom physical layout components would add to the task of library timing model characterization and electrical analysis.

An alternative would be to add a level of hierarchy to the cell library; in this case, a logical macro would be defined, and it would consist of a specific netlist of base standard cells to implement the function. Figure 1.4 depicts an example of an n-bit multiplexor, consisting of a hierarchy of n single-bit mux cells. Buffer cells could also be judiciously added to the netlist for high fan-out internal signals and, potentially, macro outputs.

Figure 1.4 A logical macro defines a complex function composed of existing standard cells. An n-bit 2:1 multiplexor is depicted. Critical signals within the macro definition would receive specific buffering. Relative cell placements optimize the routing track demand and interconnect loading within the macro.

The unique feature of the logical macro would be the inclusion of relative placement directives with the library model. The placement flow would encounter the logical macro in the netlist, expand to its primitive cell netlist, and apply the relative placement description to treat the constituent cells as a single component that would be moved in unison during placement optimization.

There are several advantages of using logical macros, including the following:

No additional characterization and electrical analysis of the power and timing delays of a complex function are required. The final netlist of expanded (characterized) cell primitives is used.

Subsequent cell-based optimizations are available. Once the logical macro is part of the physical implementation, cell-based power and performance optimizations are applied, such as the substitution of HVT cells to save leakage power. The drive strength of macro output cells could be optimized for the actual loading in the specific context of the larger physical block layout. (The cell dimensions could vary during optimization; the placement algorithm would resolve any cell overlaps while maintaining the relative positioning.)

The relative cell placement should (ideally) guide the router to short connections for critical signals. With alignment of cell pin locations resulting from the relative placement, simple and direct route segments are likely. The risk of a circuitous connection on a critical macro signal can be further reduced by utilizing router features that accept a signal priority and/or preferred route layer setting for specific signals; these router directives could also be added to the logical macro library model.

Layout engineering resources required for library development are reduced. It is typically much easier to develop and verify the logical macro (and relative placement and router directives) than to prepare a custom circuit schematic and layout for the function.

The primary disadvantage of the logical macro approach is that the resulting implementation typically has less optimal PPA characteristics than a custom design. Whereas there is a clear granularity in logic cell drive strengths in the library (e.g., 1X, 2X, 4X), a custom schematic design could utilize greater variability, tuning the drive strength optimally for the loading within the custom macro layout. Also, due to the discrete size of the template in terms of wiring tracks, individual cell layouts may not fully utilize the area of a single-row 1xN (or double-row 2xN) template. A custom layout would likely be more area efficient.

These are complex trade-offs that need to be made by the library development team. The decisions on these trade-offs also have a strong interdependence with the features of the physical flows in the design methodology.

1.2.4 Hard, Soft, and Firm IP Cores

The evolution of SoC design integration has been enabled by the availability of complex IP functions that design teams can quickly integrate. The most prevalent examples are large memory arrays; analog blocks for high-speed external signal interfaces; and (especially) microprocessor, microcontroller, and digital signal processing cores. More recent complex IP examples include cryptographic units, graphics/image processing units (GPUs), and on-chip bus arbitration logic for managing communication between cores, potentially using advanced network communication protocols. (The IP core is also commonly referred to as a specific application “unit,” as in GPU or CPU.)

These IP designs are typically not developed by the design team but are licensed from external suppliers, each of which offers expertise in a specific microprocessor architecture or high-speed interface design standard.

Complex IP may be provided in different formats that have been given a unique terminology. The following sections discuss the various terms.

Hard IP

A hard IP offering is a large, custom physical layout implemented and qualified in a specific foundry technology. The IP vendor has developed the implementation to its own PPA targets, which hopefully align with customer requirements. The IP layout typically fully utilizes internal signal interconnect routes on several metal layers—more than for the standard cell library and macros. The IP vendor has also made an assumption on the appropriate metallization stack choices for the entire SoC, up to the top metal layer used in the hard IP layout. The physical integration of this layout in an SoC design involves providing global power distribution and signal routing to the layout pins (on the corresponding pin metal layers and route wire widths) that satisfy the vendor’s specifications.

The vendor provides a functional model(s) for the IP to use in SoC validation. For microprocessor or microcontroller core IP, a software development kit (SDK) is included for the generation of system firmware. Functional simulation testbench suites may also be provided to the SoC team to assist with validation of the processor bus arbitration logic with other units. The IP vendor will also have implemented a specific manufacturing test strategy for the core. A special test mode may be defined to allow the core to be functionally isolated from the remainder of the SoC, to enable the vendor’s test patterns to be applied and responses observed. Indeed, an industry-standard core wrap architecture has been developed to support isolating a core in an SoC for production testing.[1,2]

The hard IP release provides the vendor with the greatest degree of protection of proprietary information. (Although license agreements include strict policies on unauthorized disclosure of the vendor’s model data, IP leaks are nevertheless a major concern for the vendor.) A special feature that has been incorporated in EDA vendor functional simulation tools is the capability to link a binary executable when building the full SoC model. The IP vendor may thus provide the hard IP simulation model as a compiled executable rather than as a source logical description. A unique programming interface standard has been defined by EDA tool and IP vendors. This code is added to the model to allow the IP vendor to limit access within the simulation environment to selected micro-architectural signal values for query and debug.[3,4]

The main disadvantages of the hard IP release are the direct ties to a specific foundry process and metallization stack (which must be consistent with all the IP on the SoC, of course) and the lack of configurability of micro-architectural features. The other forms of IP release offer greater flexibility in IP core features and production sourcing, with a corresponding reduction in IP vendor information protection.

Memory arrays and register files for SoC integration are a special case of hard IP. The diversity in required array sizes is great, as are specific features that may be desired, such as partial write (read/modify/write). Register files vary widely in size, as do the number of uniquely addressable (and simultaneously accessible) read/write ports. It would not be feasible to release a specific hard IP design for each unique application, nor would it be attractive to SoC design teams to be required to adapt their architectures to a very limited set of array and register file offerings. However, these functions require an aggressive layout style, as their PPA is typically critical to the overall SoC design goals.

IP vendors have addressed these customer requirements by offering array generators. Customers provide array configuration parameters as inputs to the generator. The output is equivalent to a hard IP release—that is, a physical layout (ideally, close to the density of a full custom layout); a functional simulation model; timing models for clock/address/data read-write access operations; and a production test model. The generator infrastructure allows the IP vendor to more easily support multiple foundries for customers. The foundry may offer different, proprietary high-performance or high-density array bit cell designs for use in the generators, enabling a wider range of PPA support.

There are some minor considerations when incorporating an array or a register file generator into the design methodology:

The range of sizes supported by the generator must fit the SoC architecture.

The timing model calculator within the generator must fit the operating temperature and supply voltage ranges of the target SoC application.

The test model from the array generator must fit the overall SoC test architecture for applied patterns and response observability. Specifically, large register files present a trade-off in terms of whether the test model represents an addressable array or expands to individual register bits, with the corresponding test architecture for each bit as a flip-flop.

Soft IP

A soft IP release is strictly a functional model, without a corresponding physical implementation. The model is typically provided using the semantics of a hardware description language (HDL). The common HDLs, SystemVerilog and VHDL, both readily support passing configuration parameters down through the logical model hierarchy, offering considerable flexibility in micro-architecture exploration before finalizing the functional design.

The physical implementation is realized through the synthesis of the HDL model to a target standard cell plus macro library (and the mapping of arrays to a generator). EDA tool vendors provide logic synthesis tools to compile the HDL model and subsequently implement the function as a netlist of cell library components, optimizing to PPA constraints.

The use of soft IP provides an easy path to evaluating multiple foundries, using different libraries when re-exercising logic synthesis and physical design flows. Similarly, it allows for rapid experimentation with different PPA targets. The primary design disadvantage is the larger area and reduced performance compared to a hard IP implementation.

Firm IP

A less common format is for the IP vendor to provide a cell-level netlist rather than an HDL model. The netlist may be in terms of instances of a cell library targeting a specific foundry or consisting of generic Boolean logic primitives. The latter would allow for multiple foundry evaluation by a straightforward, but perhaps suboptimal, synthesis mapping of Boolean primitives to different cell libraries.

This firm IP release resembles the logical macro methodology. The cell-based implementation would enable drive strength and power optimizations during physical design, from the “manually synthesized” firm IP netlist. Some limited configurability may be offered by the vendor providing the firm IP, adapting the output cell netlist to customer input parameters. With the ongoing improvements in logic synthesis algorithms and the increased experience with synthesis in SoC design teams, the release of firm IP formats by vendors has declined in favor of releasing soft IP HDL models.

The soft IP and firm IP approaches provide greater design flexibility in micro-architecture and foundry sourcing. In return, the IP vendor relinquishes some degree of information protection.

1.2.5 “Backfill Cells”

A special class of cells are included in the library and are crucial to all block implementations. These cells are added to complete the physical layout without a corresponding instance in the netlist.

The cell template dimension is typically chosen to enable intra-cell signal connections to be completed on the most complex circuits, on the allocated metal layers for cell layouts. The access points to the cells for inter-cell routing are associated with pin shapes in the cell layout, placed to align with wiring tracks on upper-level metals. The metallization stack utilizes thicker metals for improved electrical characteristics on upper layers, with corresponding increases in the (minimum) wire width and space; thus, the wiring track density is reduced for these layers. In addition, the cell library may include high fan-in logic primitives for efficiency in the total logic gate path length to realize a timing-critical function. For example, wide AND*OR cells are useful in many datapath-centric designs (e.g., a 2-2-2-2 AND*OR with each AND gate receiving a data and select input). Placement of these cell layouts with high fan-in is likely to introduce a high local pin density. The reduced number of available tracks on the inter-cell routing layers combined with a high local pin density may result in routing congestion when attempting to complete the block physical design. The routing tool may be unable to find suitable (horizontal and vertical) segments for all the netlist connections, resulting in incomplete signals (known as overflows).

The physical block layout area allocated to route the complete netlist may thus need to be enlarged. Cell area utilization substantially less than 100% is used to gain confidence in achieving a fully routed solution. The library vendor provides utilization guidelines, based on previous experimentation; for example, a recommendation of ~80% utilization is common. (Placement algorithms evaluate local pin density during cell positioning and space cells apart to reduce route congestion.) The net result is a significant vacant area within the block layout, both within and around the perimeter of the block boundary.

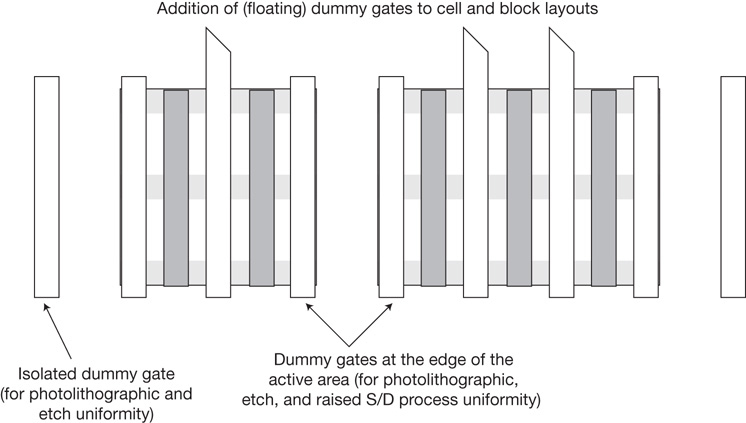

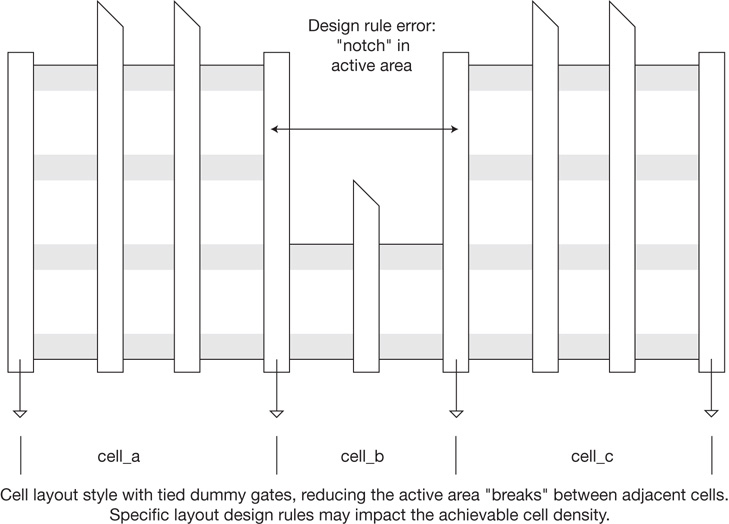

The library contains backfill cells (of varying widths) to insert into vacant locations in the block. These completion cells can be quite varied in content and purpose:

Dummy cells for photolithographic uniformity—The backfill cell may add dummy transistors to maintain a more uniform grid of transistors between functional cells to reduce the variability in lithographic patterning of the critical dimension transistor gate length.

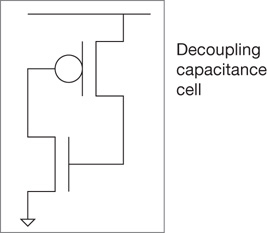

Local decoupling capacitance—Expanding upon the addition of non-functional transistors in vacant cells, a relatively simple connection of devices provides a capacitance between the VDD supply and GND rails (see Figure 1.5). These decap cells can be easily inserted in the unoccupied cell locations after routing and prior to electrical analysis.[5]

Figure 1.5 Schematic for the “cross-coupled devices” decoupling capacitance cell, inserted in vacant cell locations. Both devices are operating in linear mode, with maximum Cgate-channel.

The switching activity of logic cells draws supply current. Local decoupling can efficiently provide charge to this transient, minimizing the resistive I*R voltage drop along the rails and without the delayed inductive response through package pins. Indeed, a key facet of the power distribution network (PDN) analysis flow step is to calculate the total dynamic switching transients, the local (inherent circuit and explicit decoupling) capacitance, and the PDN electrical response characteristics to ensure rail voltage transients are within design margins (see Section 14.3).

Gate Array Logic ECO Cells

After an SoC design is submitted for initial fabrication, it may become evident that a logic design modification is required. A change in product requirements may arise while awaiting the first-pass prototypes from the foundry and OSAT. Or, more likely, a bug in the functional design may have been discovered during the ongoing system model validation, after release of the design to the foundry. In either case, there is a requirement to apply an engineering change order (ECO) to the (first-pass) netlist.

The simplest approach would be to simply remove any erroneous standard cells (and their routes) from the physical layout, insert the standard cells to fix the bug, and reroute the updated netlist connections. Indeed, physical design tools commonly have an incremental mode, which accepts a unique netlist syntax for cell instance adds/deletes. The goal of this ECO methodology is to leave the existing physical design as unperturbed as possible to minimize new issues arising during electrical analysis. However, adding and deleting standard cells for a second-pass design implies that all lithography layers for the SoC will likely have been edited, starting with transistor fabrication. The cost of a full set of new lithographic masks will be considerable, and the schedule impact of the full fabrication cycle time will be maximum before updated packaged parts are available.

An alternative approach is to backfill some percentage of the vacant block area with uncommitted transistors. A specific ECO logic function would be realized by adding local metal segments between transistors, vias, and pin locations. An array of transistors could be personalized to a logic function, using only metal and via physical layout edits.

The collection of individual logic functions implemented by the metal personalization of uncommitted transistors is known as the gate array library. An IP library provider may include gate array cells with the standard cell release. Typically, the gate array offering will be a small subset of the standard library, with limited drive strengths and transistor Vt variants available. The ECO design with gate array logic inserted (and, likely, signal buffers added) will not be optimized to PPA design targets. There is certainly a risk that the gate array backfill cells will not be able to implement a set of logic ECO changes, due to limited availability, location, or the impact on overall performance that no longer meets design specifications.

If a gate array library is available, the ECO methodology needs to ensure that all flow steps are consistent with incremental design at the cell netlist level (see Chapter 17, “ECOs”):

Placement and routing tools must accept the incremental netlist syntax and restrict additions to unpersonalized gate array locations.

The full-chip SoC data model used for test pattern generation must reflect the incremental updates.

The extraction of signal interconnect parasitics for electrical analysis must (ideally) also be able to recognize the incremental inter-cell route segments and efficiently update the parasitic network.

A complete logical-to-physical equivalence ECO flow step is required; a netlist update applied directly to the physical layout also needs to be reflected in the HDL source model for functional validation. Designers make functional changes to the HDL model related to the ECO netlist adds and deletes and recompile the HDL for functional simulation. The ECO methodology flow needs to verify the logical equivalence of the updated cell netlist to the revised HDL.

The data version management policies adopted by the methodology need to apply a nomenclature that maintains the evolution of the first-pass design and any succession of ECO netlists. When first-pass prototypes are received from the foundry, hardware system bring-up and debug will be referenced to the original release data while ongoing updates for a subsequent fabrication pass are being developed.

Standard Cell Logic ECO Backfill

A couple of methodology considerations regarding backfill in preparation for subsequent ECOs are worth specific mention. If a gate array cell library is not available, an alternative methodology would be to add a small quantity of standard cells throughout the vacant block area. The inputs to the standard cell would initially be routed to a power or ground rail to tie all inputs to a fixed logic value. Unlike the flexibility of the gate array logic function provided by the personalization of uncommitted transistors, a spare standard cell implies a specific logic function available at that location. The number and diversity of spare standard cells added to the block is a judgment choice. There is a risk that an ECO netlist change may not be efficiently realizable with metal-only lithography updates if the spare logic standard cells are limited in location and function.

Another methodology decision pertains to whether spare flip-flop cells would be inserted to support an ECO that adds a sequential state signal. The gate array backfill library offering may not include flip-flop cells; the connections between transistors to implement a flip-flop are the most difficult within the standard cell template and may not be viable for the uncommitted transistor array. Alternatively, flip-flops from the standard cell library could be selected for insertion and judiciously placed in vacant areas.

The more difficult decision pertains to what consideration should be given to the clock input(s) to the spare flops. During physical design, an effort is made to provide clock signal buffering and load balancing to minimize the arrival skew at the clock signal fan-out endpoints—namely flip-flop inputs and clock gating cells. If spare flops are provided, the ECO routing updates to add the clock connection to the flop will perturb the existing balanced skew; previously valid timing paths (unrelated to the logic ECO modification) may now fail due to increased clock arrival skew. To avoid the issue, the spare flop clock inputs could be part of the original physical optimization, so their subsequent use would not adversely impact the clock timing. However, in this case, spare flops that remain unused result in wasted power dissipation due to the additional clock loading. And, for logical-to-physical equivalence verification, these visible spare flop cells would need to be added to the logical model for the block despite having no functional contribution. A potential requirement to include spare flops in the test model for the original design also needs to be assessed. If the test architecture includes unique test mode clocking, the spare flop may need to be included in the functional description.

The methodology approach selected to optimize the cost/schedule impact of ECO updates requires several trade-off assessments with regard to the specific spare cell library chosen, the number and placement of spare cell logic functions, the number of mask layout layers impacted to implement the ECO, and, for the insertion of spare flops, the impact on the (functional and test) clock distribution.

1.2.6 Cell Views and Abstracts

The cell library release includes numerous views for each element for different methodology flow steps:

Functional model for netlist-level validation—Example flows are logic simulation and logical-to-physical equivalency.

Test model—A description of the prevalent circuit-level manufacturing faults is needed to investigate with manufacturing test patterns.

Timing model—The timing model includes cell pin-to-pin delay arcs, with delays represented as a function of supply voltage, temperature, input pin signal slew, output pin loading, and (potentially) conditional arcs based upon the logic values at other input pins; sequential functions have additional timing data, such as clock-to-data setup and hold constraints. Recent timing models also include detailed data on the output pin transient current waveforms for more accurate signal interconnect propagation.

Physical model—A representation of the physical cell layout, used for placement and routing, includes cell size, route blockages, and pin shapes (including either “connect to” or “full cover” route constraints for the large area pins).

Power model—The power model includes the static leakage power and internal power dissipation for arc transitions, with comparable coverage of voltage, temperature, and signal transitions as the timing model.

Electrical and thermal models—A collection of data derived from the physical layout is used for other flows (e.g., pin capacitance, input pin noise pulse rejection, output pin impedance, power and ground rail currents for signal transitions, self-heating thermal energy).

These views are developed by library generation methodologies to provide model abstracts that are most efficient for the cell-based flows. Library model generation is also commonly referred to as characterization (see Section 10.2). EDA vendors have utilized unique abstract data best suited to their tools. The vendors have collaborated with IP providers to prepare these specific formats as part of library generation methods. Due to the critical importance of these models for all tools and SoC methodologies and the costs associated with library generation, the IC industry is increasingly pushing for (de facto) standards for model view abstract data. Several EDA vendors have responded to this trend by releasing their formats into the open source community.[6] Nevertheless, methodology development requires coordination between specific tool requirements and the cell abstracts released by the IP library supplier.

The automated logic synthesis of a cell-based netlist from an HDL functional description employs optimization algorithms that use data from multiple views for initial logic mapping and subsequent PPA optimizations. There are assumptions inherent to these tool optimizations about the relative ordering of the drive strength and Vt cell variants of each logic function in the library release. For example, when seeking to reduce path delay during timing optimization, the algorithms seek to deploy the “next higher” performance entry for the mapped logic function. The methodology team and IP library supplier should review the criteria used to determine the ordering for cell variants of a logic function to ensure that these criteria are consistent with the SoC design goals. If a design is extremely constrained by static leakage power, the relative choice of LVT cell variants could be reduced (or excluded altogether, with a separate “don’t use” directive to the synthesis flow).

1.2.7 Model Constraints and Properties

In addition to the abstract data that describe the cell to the EDA tools, there is a view for some cells that reflect any restrictions on the cell usage in the context of the full netlist. The cell design may have incorporated specific assumptions about its usage, which need to be provided as constraints for subsequent validation. For example, a flip-flop cell with separate clock inputs for functional data and test data capture requires the clocks to be mutually exclusive as the circuit behavior may be indeterminate (“undefined” to a validation tool) if both clocks are active simultaneously. The model constraints view would include this restriction, to be validated against the netlist model.

The number and complexity of IP usage constraints also grows with the size of the IP design. An IP core or memory array release will likely include a set of functional properties, which describe the interface requirements. EDA vendors have developed unique property specification language semantics to efficiently describe the required behavior. The property would commonly be compiled with the functional simulation model. If the property is violated during functional simulation, the simulator tool will be directed to report an error. EDA vendors have also released (static) property prover tools to use in lieu of functional simulation flows. If the prover determines that the logic connected to the IP core or array could potentially violate the interface behavior described in the property in any possible scenario, the tool will flag an error (and derive a functional counter-example for further debug).

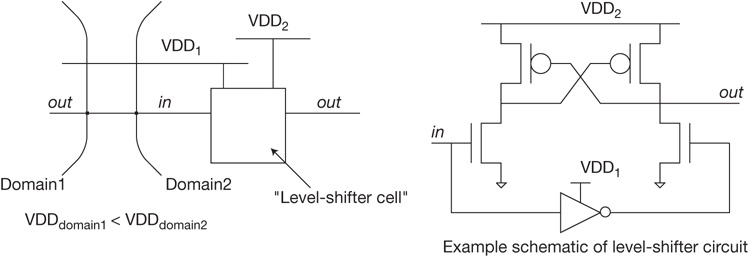

The increasing number of discrete power supply domains on current SoC designs has resulted in a significant number of additional cell constraints. An example would be a “level shifter” cell, intended as the logic interface between two different voltage domains on the SoC. The level shifter cell usage is restricted to receiving a lower-voltage signal logic 1 level at the input to a higher-supply-voltage domain.

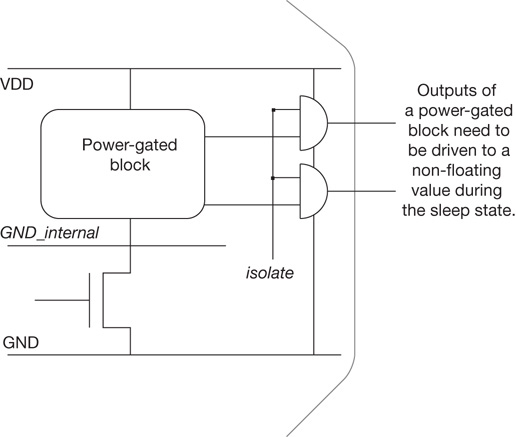

The addition of power-gated “sleep” functionality to IP cores adds to the usage constraints. As mentioned in the introduction to this chapter, the complexity of power domain management on-chip has motivated the EDA industry to define a unique power format description language to capture the voltage/power states of cores and their interface signals.[7] Tools specifically developed to confirm the power format constraints against the SoC model need to be incorporated into the validation methodology.

1.2.8 Process Design Kit (PDK)

A foundry releases a process design kit (PDK) to IP developers and SoC customers that provides documentation, models, techfiles, and runsets to enable designs to be released for fabrication. Transistor models are used for detailed circuit simulation. The process definition for physical design is provided in techfiles, for use with physical layout, placement/routing, and electrical analysis flows (e.g., lithography mask layer nomenclature, electrical characteristics of interconnect and dielectric layers for parasitic extraction from a layout). A runset refers to a sequence of operations exercised against the physical design. For example, runsets for physical design checking include mask layout geometry operations to identify devices and signal continuity between layers, perform measures on devices, and execute checks to ensure that the layout satisfies photolithography rules and manufacturing yield guidelines. In addition, the foundry may provide software scripts and utilities to enhance layout productivity (e.g., device layout generation from a schematic instance, the insertion of dummy fill shapes for improved lithography uniformity).

The formats for the physical and electrical techfiles are specific to the EDA vendor tool. Multiple formats are actively used to represent the process cross-section and resistance/capacitance layer data for parasitic extraction. (There is an effort under way to promote a de facto standard interoperable PDK format [iPDK] and adopt a common representation for this process data.[8]) Similarly, there are different runset formats for layout operations, measures, and checks. EDA vendors regard their runset language semantics and geometric algorithm optimizations as highly proprietary and as being product differentiators. As a result, a foundry’s PDK and design enablement teams release design kits that are qualified for multiple reference EDA tools. The SoC methodology team needs to work closely with the foundry to ensure that flows utilize the EDA vendor tools for which foundry support is provided.

The foundry may engage early SoC customers for a new process still in development and provide preproduction PDK data prior to the version 1.0 process qualification release; for example, PDK v0.1, v0.5, v0.9, and so on could be made available to key customers. Certainly, IP providers may also want to engage with the foundry using these early process descriptions to have silicon test chip hardware measured and qualified in time for SoC designs using the v1.0 PDK. For these early adopter customers, in addition to addressing the risks associated with designing to preliminary process models, the schedule availability of preproduction PDK techfiles and runsets for a specific EDA tool needs to be reviewed by the methodology team.

As mentioned earlier, a foundry is typically also a provider of cell libraries to IP vendors and SoC customers (e.g., memory array bit cells, ESD structures to integrate with I/O circuits, process control monitoring and metrology structures to add within the die area [used during fabrication], perhaps even a base standard cell logic IP library). These foundry libraries are distinct from the PDK releases and are covered by separate license agreements.

The v1.0 production PDK is also likely to be superseded by subsequent releases (e.g., v1.1, v2.0). After achieving production status with v1.0, the foundry will pursue continuing process improvement (CPI) experiments to optimize performance, power, or, especially, manufacturing yields. New materials and/or process equipment may be introduced to enhance electrical characteristics and/or reduce statistical variations in the fabricated devices, interconnects, and dielectrics. The transition of these ongoing process improvements to the production fabrication lines will result in subsequent PDK releases.

An SoC design underway using a specific PDK release may need to assess the impact of moving to a new PDK version in terms of project schedule, resource, and anticipated manufacturing costs. Discussions with the foundry will indicate whether an existing PDK release will continue to be supported (and for how long) or whether the transition to a new PDK is required. The methodology team is an integral part of this review, to evaluate the impact of a new PDK on existing tools/flows—specifically whether any new EDA tool features are also required to be supported. If the new PDK is accepted, the methodology and CAD teams will coordinate the transition to this PDK release. The release update involves qualifying EDA tools (especially any new features), releasing the new PDK, and recording PDK version information as part of the flow step output to verify consistency across the design project.

1.3 Tapeout and NRE Fabrication Cost

The culmination of an SoC design project is the release of the physical design and test pattern data to the foundry for fabrication, a project milestone known as tapeout (see Chapter 20, “Preparation for Tapeout”). Fabrication data used to be written on magnetic tape and sent to the foundry. Today, although encrypted data is sent electronically to the customer dropbox maintained by the foundry, the tapeout name continues to be used.

Tapeout is a major milestone for any project. SoC design data has been previously frozen. A snapshot configuration of design data, flows, and PDK releases is recorded so that all full-chip electrical and physical verification steps can be performed on the database targeted for tapeout release. Often, special IT compute resources are allocated prior to tapeout to ensure that the requisite servers with suitable memory capacity are readily available for these steps. Full-chip flows may utilize parallel and/or distributed algorithms for improved throughput; appropriate servers from the data center may need to be reserved.

A number of tapeout-specific methodology checks are performed to collect the results of the full-chip flows exercised on the tapeout database. Any error/warning messages from electrical analysis and physical verification flows that are highlighted by the tapeout checks need to be reviewed by key members of the design engineering and methodology teams to assess whether the PPA impact can be waived. These final full-chip checks (with the documented review team’s recommendations) are collectively denoted as the “signoff flow.”

Any errors/warnings from physical verification flows using PDK runset data also need to be reviewed with the foundry customer support team to determine if they can be waived (e.g., a minor yield impact) or whether a design modification prior to tapeout is indeed mandatory.

Tapeout is also a key project milestone for financial considerations. The SoC project manager communicates the tapeout date to the foundry, with sufficient advance notification to reserve a slot in the mask generation and wafer fabrication pipeline. This tapeout slot is associated with payment to the foundry for lithographic masks and a quantity of wafers suitable to provide an adequate number of parts for product bring-up and stress-testing qualification. This payment to the foundry is often denoted as a non-recurring expense (NRE) when preparing the initial project cost estimates. The NRE amount is dominated by the foundry quote for a set of masks rather than the subsequent wafer fabrication costs. The OSAT is also notified of the “expected wafers out” date, based on the target tapeout date plus wafer fabrication turnaround time. The NRE payment to the OSAT is another project cost estimate line item.

A yield estimate provided by the foundry based on manufacturing defect densities (not a circuit design yield detractor based on power/performance targets) is used to determine the number of wafers in the prototype tapeout fabrication lots. Multiple lots may be started in succession to provide enough parts and/or to provide a reserve of wafers for subsequent ECO design submission. Assuming that a second-pass ECO design submission could indeed be implemented with physical layout changes limited to only metal and via layers using a cell backfill approach, a number of first-pass wafers could be held after initial processing prior to metallization. The second NRE would be limited to only the new masks, and the reduced wafer cost to re-commence fabrication of the wafers that have been held in reserve during first-pass fabrication.

The foundry is likely to offer engineering prototype lot fabrication on a unique manufacturing line, different from the volume production facility. Foundries promote the feature that multiple lines for the same process use a “copy exact” approach to allow the customer to confidently assume that prototype evaluations are directly applicable to production parts.

The prototype-focused line allows for some experimentation that would not easily be supported in high-volume production. Specifically, the design team may request split lot processing, in which a set of intentional variations are introduced. A relatively small number of prototype wafers would normally not provide a statistically significant sample that would be representative of volume production parts. The foundry may offer a split lot option with intentional process variations to provide a wider range of slow-to-fast parts for the design team to better assess any circuit sensitivities.

The prototype line may also offer expedited processing, which would not typically be available in production. The fabrication line may support a limited number of customer designs that receive priority scheduling at each process module station. There is a considerable NRE surcharge to the customer for a “hot lot” turnaround schedule.

The IP vendor has a different set of tapeout criteria. The IP will be much smaller than for a full SoC and may only need wafer probe-level testing for characterization rather than requiring the full package assembly and final test services from an OSAT. The foundry may offer an option for a multi-project wafer (MPW) fabrication slot. A number of different IP-level designs could be merged together into a single set of mask data. Each IP submission would be allocated an area on the MPW die site and would use an array of pads added to the IP layout matching the test probe fixture(s) available at the foundry. The MPW provides a cost-effective option for the IP vendor, as the NRE costs are apportioned among the IP contributors. The disadvantage is that the foundry is likely to offer only limited MPW slots (also known as “shuttles”), scheduled infrequently. In addition, the foundry may have limited test engineering resources available to exercise the IP test specification and collect characterization data. As a result, the IP vendor may need to seek additional external test engineering services. The IP vendor needs to collaborate closely with the foundry support team on the MPW area allocation and test strategy and then work aggressively to meet the committed MPW shuttle date.

1.4 Fabrication Technology

1.4.1 Definitions

VLSI Process Nodes and Scaling

Although each silicon foundry offers a unique set of fabrication processes and features, a foundry generally follows a lithography roadmap, as outlined by the (evolving) International Technology Roadmap for Semiconductors (ITRS). As a result, VLSI technologies are commonly associated with a process node from the roadmap. The succession of nodes in recent history has been 0.5um, 0.35um, 0.25um, 180nm, 130nm, 90nm, 65nm, 45/40nm, 32/28nm, 22/20nm, 16/14nm, 10nm, 7nm, and 5nm. The duration between the availability of these processes (in high-volume production) has typically been on the order of two years, following a trend forecasted by Gordon Moore over 50 years ago, in what has become known as Moore’s law.[9]

Note that there is a consistent scaling factor of 0.7X between successive process nodes. The goal of each new technology offering has been to provide a physical layout dimensional scaling of 0.7X linear, or equivalently 0.5X areal, effectively doubling the transistor density (per mm**2) with each new node. The PPA benefits of scaling were captured in a landmark technical paper by Robert Dennard; project teams have applied “Dennard’s rules” when planning product specifications for the next process node.[10,11]

Indeed, for older process nodes, where the light wavelength used for photolithographic exposure was significantly smaller than the minimum dimension on the mask plate, scaling of (essentially) all layout design rules was the fabrication goal. The focus for process scaling was to improve material deposition and etch process steps, and to reduce defect densities; photolithographic scaling was readily achievable. IP development in these older process nodes was rather straightforward. Existing IP physical layout data were easily scaled by 0.7X, and electrical analysis was performed using the new process node techfiles. The Dennard scaling of interconnects resulted in an increase in wire current density, necessitating a materials transition in the late 1990s from aluminum to copper as the principal metallurgy due to electromigration issues (see Chapter 15, “Electromigration Reliability Analysis”).

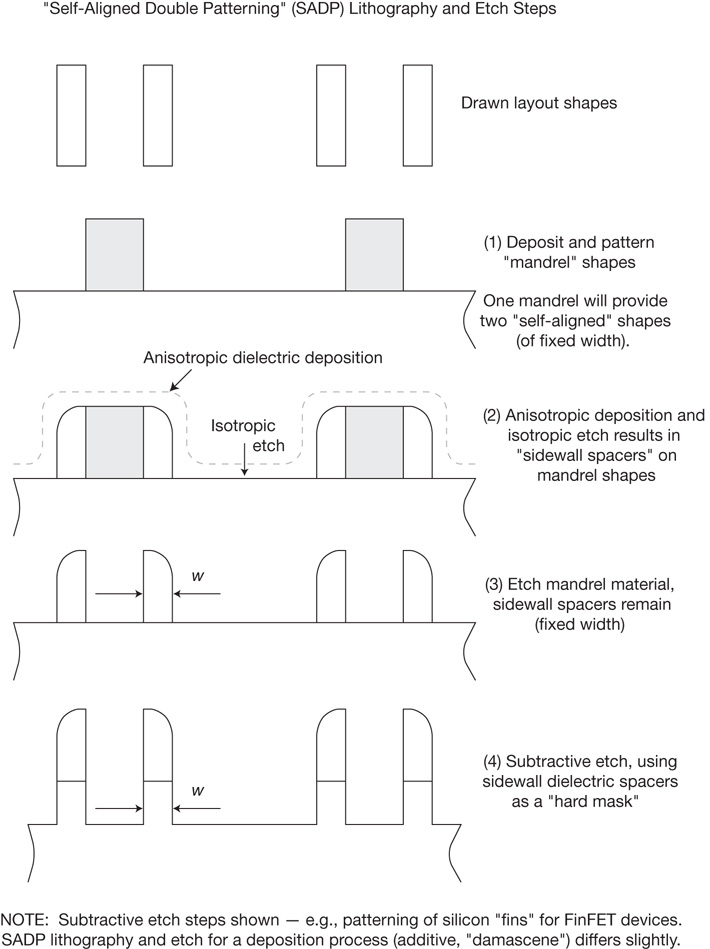

More recent process nodes utilize wavelengths longer than the mask plate dimensions. The drawn layout must undergo sophisticated algorithmic modifications prior to mask manufacture, applying optical diffraction and interference principles to the light transmission path from mask to wafer. As illustrated in Figure I.1, the most recent process nodes and exposure wavelengths necessitate decomposition of the layout data for a dense design layer into multiple masks prior to optical optimizations. In addition, the scaling of interconnect wires and via openings in dielectrics has required greater diversity in metals (and the use of multiple metals in the wire cross-sections) for both electromigration and resistivity control. As a result, although the ITRS node designation continues to use a 0.7X factor, the actual scaling multiplier between process nodes for individual layer layout design width, spacing, and overlap rules varies significantly, from 0.7X to 1.0X (i.e., no scaling). Some layers will continue to use fabrication process modules and materials unchanged from the previous node, with no dimensional scaling; this is especially true for the upper metal interconnect layers.

The engineering effort to prepare an IP design in a new node has thus increased, as the physical layout needs to be re-implemented. The quantization of allowed transistor dimensions for new VLSI technologies also necessitates a design re-optimization. (FinFET devices are discussed later in this section.)

Shrink Nodes and Half Nodes

In addition to the ITRS scaling roadmap on the Moore’s law cadence, some foundries offered an intermediate offering, a layout “shrink” of 0.9X applied to design data. The production process steps for the base process node needed to have sufficient latitude and variation margins to use the same materials and fabrication equipment for lateral dimensions scaled by a 0.9X factor.

This “half-node” offering was achieved by adjusting the optical lens reduction in the photolithography exposure equipment (nominally, a 5X reduction). As a result, new mask plates were not required. This option was extremely attractive to design teams. An improvement to the PPA specifications was provided at minimum NRE expense. Typically, the cost of transitioning an existing design to a half-node consisted of fabrication of a small quantity of wafers to complete an updated qualification of the new silicon before ramping production. This allowed an existing part in volume production to be offered with a “mid-life kicker” in performance and/or reduced customer cost (or improved profit margins) from the reduced die size. The half-node process introduction occurred on a production schedule between the major node transitions.

More recently, half-node introduction has not been viable. There is insufficient process latitude at current nodes to apply a 0.9X scale across all existing fabrication modules without new optical mask data generation and additional process engineering. There has been some confusion introduced in the nomenclature used by foundries when describing their fabrication process capabilities, especially since lithographic scaling is much more constrained. Some foundries chose to focus solely on half-node process transitions without offering an ITRS base node (e.g., 40nm, 28nm, 20nm, 14nm, 10nm). Some foundries describe their process using a general ITRS process node designation but diverge from detailed lithography dimension targets in the ITRS specification. Process comparisons currently require much more detailed technical evaluations, using transistor and wiring density estimates, materials selection and dimensional cross-section data, and Ion-versus-Ioff device measures (refer to Figure 1.3).

“Second Sourcing”

At older VLSI nodes, it was not uncommon for customers to expect a foundry to accept tapeout data developed for a different manufacturer. Customers were seeking a “second source” of silicon wafers, both for competitive cost comparison and as a continuous supply chain if a specific foundry experienced an unforeseen interruption in production. Foundries were expected to have sufficient process latitude to accommodate a design completed to layout rules from another manufacturer. At current design nodes, layout design rule compatibility between foundries is no longer the norm. Although multiple foundries offer a 7nm process, for example, there is an increasing diversity on layout design rules and power/performance targets. The SoC design project decision to select a specific foundry involves an early and thorough assessment of the PPA, available IP, and production cost; it is no longer straightforward to redirect a project to a different foundry at the same node. Concerns about continuity of production supply are typically covered by the multiple production lines available for each process at a single foundry, ideally manufacturing at geographically separate fabrication facilities, or “fabs.”

Process Variants at a Single Node

The application markets for current SoCs are increasingly diverse. Consumer, mobile, automotive, military/aerospace, and medical equipment products span the gamut of performance, power, and reliability specifications. Foundries may offer several process variants at the same lithographic node, specifically addressing these different markets. For example, a low-leakage mobile process would set transistor Ion-versus-Ioff targets to minimize leakage for SVT and HVT devices, whereas a process variant targeting high-performance computing customers may establish different targets for SVT and LVT devices.

In addition, there may be different IP library offerings for these market segments. For example, a standard cell library for aggressive cost, lower-performance applications may use a smaller number of wiring tracks in the template definition. A high-performance library would benefit from larger devices in the circuit design (e.g., for 1X and 2X drive strengths) and thus might incorporate a taller standard cell template definition.

The diversity of the product application requirements across these markets is driving the need for additional process and library options at each advanced process node. The engineering resource investment required from the foundry and IP providers is certainly greater, to capture a broader set of customer design wins.

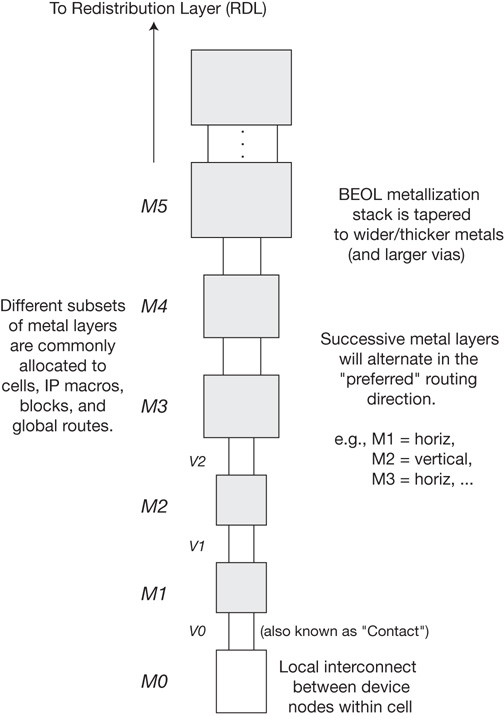

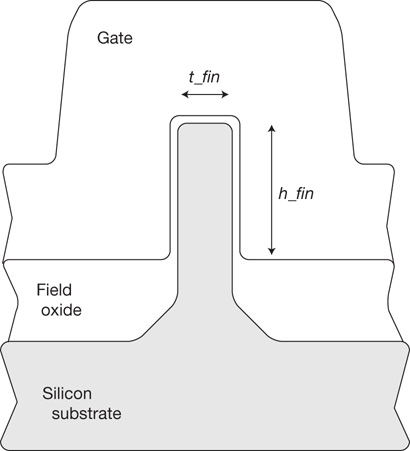

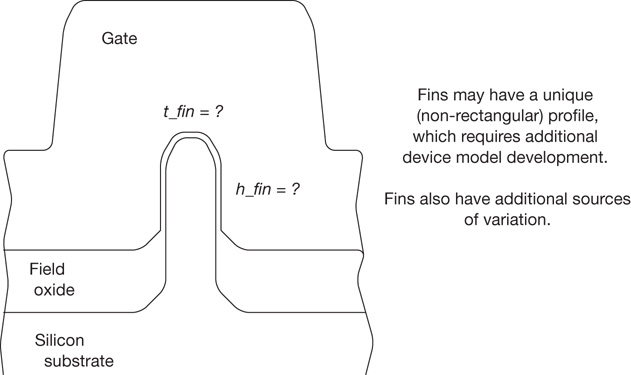

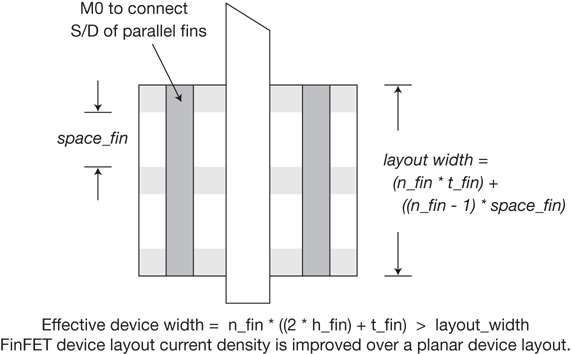

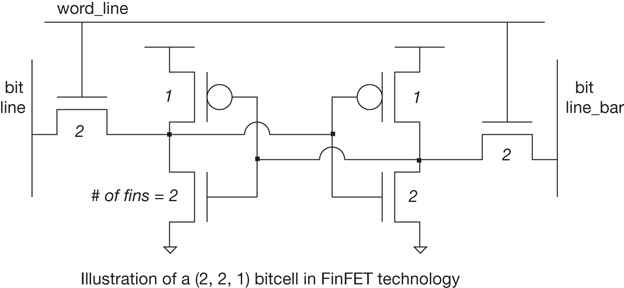

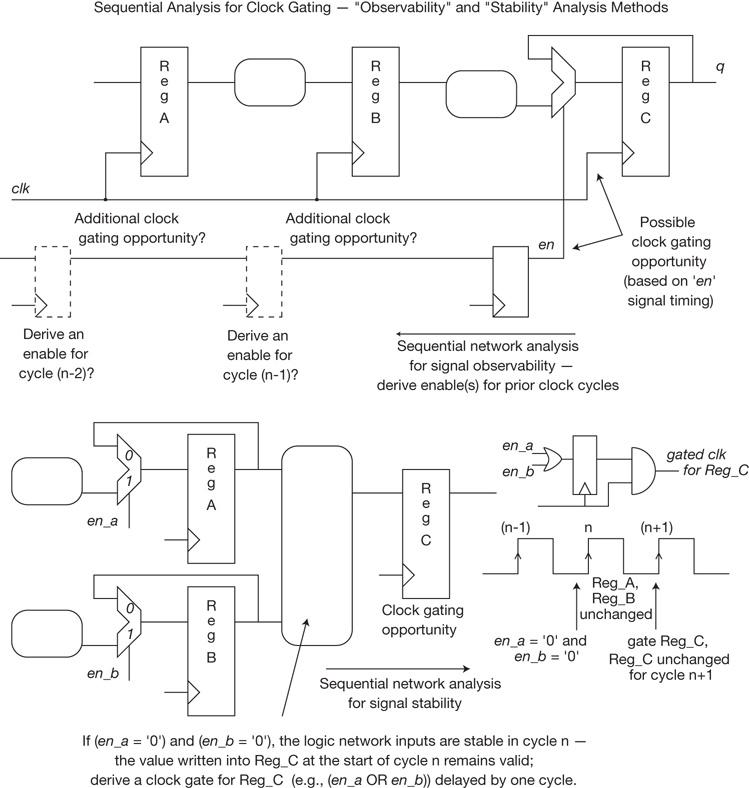

1.4.2 Front-End-of-Line (FEOL) and Back-End-of-Line (BEOL) Process Options