This chapter is dedicated to giving a full introduction to polynomial fraction description, which is important to the main theme in the sequel. The complete description of polynomial fraction description can be found in [32, 55]. For polynomial fraction descriptions of time-varying linear systems, one is referred to [56]. For an introduction to coprime factorization, one finds details in [40]. For the expositions of the Smith form for a polynomial matrix and Smith-McMillan form for a rational matrix, one finds the discussions, for example, in [57]. For the polynomial matrix description and structural controllability of the composite system connected by some structural controllable and observable linear time-invariant subsystems in the representations of the irreducible matrix fraction description, recent developments can be found in [58]. For expositions on system poles and zeros, see [59–65]. A recent paper [66] introduces a block decoupling control technique, which is based on the spectral factors of the denominator of the right matrix fraction description in control design and low order controller. Applications of polynomial matrix factorization into resource allocation of optical communication systems are seen from [67]. The paper [68] proposes an alternative option to model a dynamical power system process, which is based on a matrix polynomial form. The model can be obtained naturally writing the high order differential equations of some components (e.g., transmission line models modeled by sequences of RLC PI circuits in power systems) or some of the subsystems that compose the whole system. For other relevant discussions, see also [13, 66, 69–75].

4.2 Right polynomial fractions

Matrices of real-coefficient polynomials in s, equivalently polynomials in s with coefficients that are real matrices, provide the mathematical foundation for the new transfer function representation.

Definition 4.2.1

A p × r polynomial matrix P(s) is a matrix with entries that are real-coefficient polynomials in s. A square (p = r) polynomial matrix P(s) is called nonsingular if detP(s) is a nonzero polynomial, and unimodular if detP(s)

is a nonzero polynomial, and unimodular if detP(s) is a nonzero real number.

is a nonzero real number.

The determinant of a square polynomial is a polynomial (a sum of products of the polynomial entries). Thus an alternative characterization is that a square polynomial matrix P(s) is nonsingular if and only if detP(s0)≠0 for all but a finite number of complex numbers s0. And P(s) is unimodular if and only if detP(s0)≠0

for all but a finite number of complex numbers s0. And P(s) is unimodular if and only if detP(s0)≠0 for all complex numbers s0.

for all complex numbers s0.

The adjugate-overdeterminant formula shows that if P(s) is square and nonsingular, then P−1(s) exits and (each entry) is a rational function of s. Also P−1(s) is a polynomial matrix if P(s) is unimodular. Sometimes a polynomial is viewed as a rational function with unity denominator. From the reciprocal-determinant relationship between a matrix and its inverse, P−1(s) is unimodular if P(s) is unimodular. Conversely if P(s) and P−1(s) both are polynomial matrices, then both are unimodular.

Definition 4.2.2

A right polynomial fraction description for the p × m strictly proper rational transfer function G(s) is an expression of the form

G(s)=N(s)D−1(s),

(4.1)

(4.1)

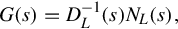

where N(s) is a p × m polynomial matrix and D(s) is an m × m nonsingular polynomial matrix. A left polynomial fraction description for G(s) is an expression

G(s)=D−1L(s)NL(s),

(4.2)

(4.2)

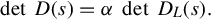

where NL(s) is a p × m polynomial matrix and DL(s) is a p × p nonsingular polynomial matrix. The degree of a right polynomial fraction description is the degree of the polynomial detD(s). Similarly the degree of a left polynomial fraction is the degree of detDL(s).

Similarly the degree of a left polynomial fraction is the degree of detDL(s).

Of course this definition is familiar if m = p = 1. In the multiinput, multioutput case, a simple device can be used to exhibit so-called elementary polynomial fractions for G(s). Suppose d(s) is a least common multiple of the denominator polynomials of entries of G(s). (In fact, any common multiple of the denominators can be used.) Then

Nd(s)=d(s)G(s)

is a p × m polynomial matrix, and we can write either a right or left polynomial fraction description

G(s)=Nd(s)[d(s)Im]−1=[d(s)Ip]−1Nd(s).

(4.3)

(4.3)

The degrees of the two descriptions are different in general, and it should not be surprising that lower-degree polynomial fraction descriptions typically can be found if some effort is invested.

In the single-input, single-output case, the issue of common factors in the scalar numerator and denominator polynomials of G(s) arises at this point. The utility of the polynomial fraction representation begins to emerge from the corresponding concept in the matrix case.

Definition 4.2.3

An r × r polynomial matrix R(s) is called a right divisor of the p × r polynomial matrix P(s) if there exists a p × r polynomial matrix P˜(s) such that

such that

P(s)=P˜(s)R(s).

If a right divisor R(s) is nonsingular, then P(s)R−1(s) is a p × r polynomial matrix. Also if P(s) is square and nonsingular, then every right divisor of P(s) is nonsingular.

To become accustomed to these notions, it helps to reflect on the case of scalar polynomials. There a right divisor is simply a factor of the polynomial. For polynomial matrices the situation is roughly similar.

Next we consider a matrix-polynomial extension of the concept of a common factor of two scalar polynomials. Since one of the polynomial matrices is always square in our application to transfer function representation, attention is restricted to that situation.

Definition 4.2.4

Suppose P(s) is a p × r polynomial matrix and Q(s) is an r × r polynomial matrix. If the r × r polynomial matrix R(s) is a right divisor of both, then R(s) is called a common right divisor of P(s) and Q(s). We call R(s) a greatest common right divisor of P(s) and Q(s) if it is a common right divisor, and if any other common right divisor of P(s) and Q(s) is a right divisor of R(s). If all common right divisors of P(s) and Q(s) are unimodular, then P(s) and Q(s) are called right coprime.

For polynomial fraction descriptions of a transfer function, one of the polynomial matrices is always nonsingular, so only nonsingular common right divisors occur. Suppose G(s) is given by the right polynomial fraction description

G(s)=N(s)D−1(s)

and that R(s) is a common right divisor of N(s) and D(s). Then

N˜(s)=N(s)R−1(s),D˜(s)=D(s)R−1(s)

(4.4)

(4.4)

are polynomial matrices, and they provide another right polynomial fraction description for G(s) since

N˜(s)D˜−1(s)=N(s)R−1(s)R(s)D−1(s)=G(s).

The degree of this new polynomial fraction description is no greater than the degree of the original since

deg[detD(s)]=deg[detD˜(s)]+deg[detR(s)].

Of course the largest degree reduction occurs if R(s) is a greatest common right divisor, and no reduction occurs if N(s) and D(s) are right coprime. This discussion indicates that exacting common right divisors of a right polynomial fraction is a generalization of the process of canceling common factors in a scalar rational function.

Computation of the greatest common right divisors can be based on the capabilities of elementary row operations on a polynomial matrix-operation similar to the elementary row operations on a matrix of real numbers. To set up this approach we present a preliminary result.

Theorem 4.2.1

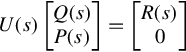

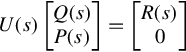

Suppose P(s) is a p × r polynomial matrix and Q(s) is an r × r polynomial matrix. If a unimodular (p + r) × (p + r) polynomial matrix U(s) and an r × r polynomial matrix R(s) are such that

U(s)[Q(s)P(s)]=[R(s)0]

(4.5)

(4.5)

then R(s) is a greatest common right divisor of P(s) and Q(s).

Proof

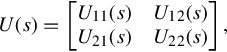

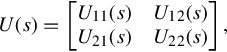

Partition U(s) in the form

U(s)=[U11(s)U21(s)U12(s)U22(s)],

(4.6)

(4.6)

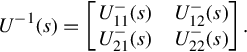

where U11(s) is r × r, and U22(s) is p × p. Then the polynomial matrix U−1(s) can be partitioned similarly as

U−1(s)=[U−11(s)U−21(s)U−12(s)U−22(s)].

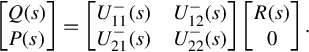

Using this notation to rewrite Eq. (4.5) gives

[Q(s)P(s)]=[U−11(s)U−21(s)U−12(s)U−22(s)][R(s)0].

That is,

Q(s)=U−11(s)R(s),P(s)=U−21(s)R(s).

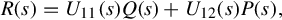

Therefore R(s) is a common right divisor of P(s) and Q(s). But, from Eqs. (4.5), (4.6),

R(s)=U11(s)Q(s)+U12(s)P(s),

(4.7)

(4.7)

so that if Ra(s) is another common right divisor of P(s) and Q(s), say

Q(s)=Q˜a(s)Ra(s),P(s)=P˜a(s)Ra(s),

then Eq. (4.7) gives

R(s)=[U11(s)Q˜a(s)+U12(s)P˜a(s)]Ra(s).

This shows Ra(s) also is a right divisor of R(s), and thus R(s) is a greatest common right divisor of P(s) and Q(s).

To calculate the greatest common right divisors using Theorem 4.2.1, we consider three types of elementary row operations on a polynomial matrix. First is the interchange of two rows, and second is the multiplication of a row by a nonzero real number. The third is to add to any row a polynomial multiple of another row. Each of these elementary row operations can be represented by premultiplication by a unimodular matrix, as is easily seen by filling in the following argument.

Interchange of rows i and j≠i correspond to premultiplying by a matrix Ea that has a very simple form. The diagonal entries are unity, except that [Ea]ii = [Ea]jj = 0, and the off-diagonal entries are zero, except that [Ea]ij = [Ea]ji = 1. Multiplication of the ith-row by a real number α≠0 corresponds to premultiplication by a matrix Eb that is diagonal with all diagonal entries unity, except [Eb]ii = α. Finally, adding to row i a polynomial p(s) times row j, j≠i, corresponds to premultiplication by a matrix Ec(s) that has unity diagonal entries, with off-diagonal entries zero, except [Ec(s)]ij = p(s).

It is straightforward to show that the determinants of matrices of the form Ea, Eb, and Ec(s) described above are nonzero real numbers. That is, these matrices are unimodular. Also it is easy to show that the inverse of any of these matrices corresponds to another elementary row operation. The diligent might prove that multiplication of a row by a polynomial is not an elementary row operation in the sense of multiplication by a unimodular matrix, thereby burying a frequent misconception.

It should be clear that a sequence of elementary row operations can be represented as premultiplication by a sequence of these elementary unimodular matrices, and thus as a single unimodular premultiplication. We also want to show the converse—that premultiplication by any unimodular matrix can be represented by a sequence of elementary row operations. Then Theorem 4.2.1 provides a method based on elementary row operations for computing a greatest common right divisor R(s) via Eq. (4.5).

That any unimodular matrix can be written as a product of matrices of the form Ea, Eb, and Ec(s) derives easily from a special form for polynomial matrices. We present this special form for the particular case where the polynomial matrix contains a full-dimension nonsingular partition. This suffices for our application to polynomial fraction descriptions, and also avoids some fussy but trivial issues, such as how to handle identical columns, or all-zero columns. Recall the terminology; that a scalar polynomial is called monic if the coefficient of the highest power of s is unity, that the degree of a polynomial is the highest power of s with nonzero coefficient, and that the degree of the zero polynomial is, by convention, −∞.

Theorem 4.2.2

Suppose P(s) is a p × r polynomial matrix and Q(s) is an r × r, nonsingular polynomial matrix. Then elementary row operations can be used to transform

M(s)=[Q(s)P(s)]

(4.8)

(4.8)

into row Hermite form described as follows. For k = 1, …, r, all entries of the kth-column below the k, k-entry are zero, and the k, k-entry is nonzero and monic with higher degree than every entry above it in column k. (If the k, k-entry is unity, then all entries above it are zero.)

Proof

Row Hermite form can be computed by an algorithm that is similar to the row reduction process for constant matrices.

Step (i): In the first column of M(s) use row interchange to bring to the first row a lowest-degree entry among nonzero first-column entries. (By nonsingularity of Q(s), there is a nonzero first-column entry.)

Step (ii): Multiply the first row by a real number so that the first column entry is monic.

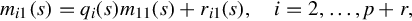

Step (iii): For each entry mi1(s) below the first row in the first column, use polynomial division to write

mi1(s)=qi(s)m11(s)+ri1(s),i=2,…,p+r,

(4.9)

(4.9)

where each remainder is such that deg ri1(s) < deg m11(s). (If mi1(s) = 0, that is degmi1(s)=−∞, we set qi(s) = ri1(s) = 0. If deg mi1(s) = 0, then by Step (i) deg m11(s) = 0. Therefore deg qi(s) = 0 and degri1=−∞,

we set qi(s) = ri1(s) = 0. If deg mi1(s) = 0, then by Step (i) deg m11(s) = 0. Therefore deg qi(s) = 0 and degri1=−∞, that is, ri1(s) = 0.)

that is, ri1(s) = 0.)

Step (iv): For i = 2, …, p + r, add to the ith-row the product of − qi(s) and the first row. The resulting entries in the first column, below the first row, are r21(s), …, rp+r, 1(s), all of which have degrees less than deg m11(s).

Step (v): Repeat Steps (i) through (iv) until all entries of the first column are zero except the first entry. Since the degrees of the entries below the first entry are lowered by at least one in each iteration, a finite number of operations is required.

Proceed to the second column of M(s) and repeat the above steps while ignoring the first row. This results in a monic, nonzero entry m22(s), with all entries below it zero. If m12(s) does not have lower degree than m22(s), then polynomial division of m12(s) by m22(s) as in Step (iii) and an elementary row operation as in Step (iv) replaces m12(s) by a polynomial of degree less than degm22(s). Next repeat the process for the third column of M(s), while ignoring the first two rows. Continuing yields the claimed form on exhausting the columns of M(s).

To complete the connection between unimodular matrices and elementary row operations, suppose in Theorem 4.2.2 that p = 0, and Q(s) is unimodular. Of course the resulting row Hermite form is upper triangular. The diagonal entries must be unity, for a diagonal entry of positive degree would yield a determinant of positive degree, contradicting unimodularity. But then entries above the diagonal must have degree −∞. Thus row Hermite form for a unimodular matrix is the identity matrix. In other words for a unimodular polynomial matrix U(s) there is a sequence of elementary row operations, say Ea, Eb, Ec(s), …, Eb, such that

Thus row Hermite form for a unimodular matrix is the identity matrix. In other words for a unimodular polynomial matrix U(s) there is a sequence of elementary row operations, say Ea, Eb, Ec(s), …, Eb, such that

[EaEbEc(s)⋯Eb]U(s)=I.

(4.10)

(4.10)

This obviously gives U(s) as the sequence of elementary row operations on the identity specified by

U(s)=[E−1b⋯E−1c(s)E−1bE−1a]I

and premultiplication of a matrix by U(s) thus corresponds to application of a sequence of elementary row operations. Therefore Theorem 4.2.1 can be restated, for the case of nonsingular Q(s), in terms of elementary row operations rather than premultiplication by a unimodular U(s). If reduction to row Hermite form is used in implementing Eq. (4.5), then the greatest common right divisor R(s) will be an upper-triangular polynomial matrix. Furthermore, if P(s) and Q(s) are right coprime, then Theorem 4.2.2 shows that there is a unimodular U(s) such that Eq. (4.5) is satisfied for R(s) = Ir.

Two different characterizations of right coprimeness are used in the sequel. One is in the form of a polynomial matrix equation, while the other involves rank properties of a complex matrix obtained by evaluation of a polynomial matrix at complex values of s.

Theorem 4.2.3

For a p × r polynomial matrix P(s) and a nonsingular r × r polynomial matrix Q(s), the following statements are equivalent:

(i) The polynomial matrices P(s) and Q(s) are right coprime.

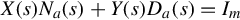

(ii) There exist an r × p polynomial matrix X(s) and an r × r polynomial matrix Y (s) satisfying the so-called Bezout identity

X(s)P(s)+Y(s)Q(s)=Ir.

(4.11)

(4.11)

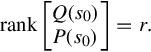

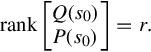

(iii) For every complex number s0,

rank[Q(s0)P(s0)]=r.

(4.12)

(4.12)

Proof

Beginning a demonstration that each claim implies the next, first we show that (i) implies (ii). If P(s) and Q(s) are right coprime, then reduction to row Hermite form as in Eq. (4.5) yields polynomial matrices U11(s) and U12(s) such that

U11(s)Q(s)+U12(s)P(s)=Ir

and this has the form of Eq. (4.11).

To prove that (ii) implies (iii), write the condition (4.11) in the matrix form

[Y(s)X(s)][Q(s)P(s)]=Ir.

If s0 is a complex number for which

rank[Q(s0)P(s0)]<r,

then we have a rank contradiction.

To show (iii) implies (i), suppose that Eq. (4.12) holds and R(s) is a common right divisor of P(s) and Q(s). Then for some p × r polynomial matrix P˜(s) and some r × r polynomial matrix Q˜(s)

and some r × r polynomial matrix Q˜(s)

[Q(s)P(s)]=[Q˜(s)P˜(s)]R(s).

(4.13)

(4.13)

If detR(s) is a polynomial of degree at least one and s0 is a root of this polynomial, then R(s0) is a complex matrix of less than full rank. Thus we obtain the contradiction

is a polynomial of degree at least one and s0 is a root of this polynomial, then R(s0) is a complex matrix of less than full rank. Thus we obtain the contradiction

rank[Q(s0)P(s0)]≤rankR(s0)<r.

Therefore detR(s) is a nonzero constant, that is, R(s) is unimodular. This proves that P(s) and Q(s) are right coprime.

is a nonzero constant, that is, R(s) is unimodular. This proves that P(s) and Q(s) are right coprime.

A right polynomial fraction description with N(s) and D(s) right coprime is called simply a coprime right polynomial fraction description. The next result shows that in an important sense all coprime right polynomial fraction descriptions of a given transfer function are equivalent. In particular they all have the same degree.

Theorem 4.2.4

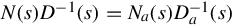

For any two coprime right polynomial fraction descriptions of a strictly proper rational transfer function

G(s)=N(s)D−1(s)=Na(s)D−1a(s),

there exists a unimodular polynomial matrix U(s) such that

N(s)=Na(s)U(s),D(s)=Da(s)U(s).

Proof

By Theorem 4.2.3 there exist polynomial matrices X(s), Y (s), A(s), and B(s) such that

X(s)Na(s)+Y(s)Da(s)=Im

(4.14)

(4.14)

and

A(s)N(s)+B(s)D(s)=Im.

(4.15)

(4.15)

Since N(s)D−1(s)=Na(s)D−1a(s) , we have Na(s) = N(s)D−1(s)Da(s). Substituting this into Eq. (4.14) gives

, we have Na(s) = N(s)D−1(s)Da(s). Substituting this into Eq. (4.14) gives

X(s)N(s)D−1(s)Da(s)+Y(s)Da(s)=Im

or

X(s)N(s)+Y(s)D(s)=D−1a(s)D(s).

A similar calculation using N(s)=Na(s)D−1a(s)D(s) in Eq. (4.15) gives

in Eq. (4.15) gives

A(s)Na(s)+B(s)Da(s)=D−1(s)Da(s).

Therefore both D−1a(s)D(s) and D−1(s)Da(s) are polynomial matrices, and since they are inverses of each other both must be unimodular. Let

and D−1(s)Da(s) are polynomial matrices, and since they are inverses of each other both must be unimodular. Let

U(s)=D−1a(s)D(s).

Then

N(s)=Na(s)U(s),D(s)=Da(s)D−1a(s)D(s)=Da(s)U(s)

and the proof is complete.

4.3 Left polynomial fraction

Before going further we pause to consider left polynomial fraction descriptions and their relation to right polynomial fraction descriptions of the same transfer function. This means repeating much of the right-handed development, and proofs of the results are left as unlisted exercises.

Definition 4.3.1

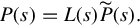

A q × q polynomial matrix L(s) is called a left divisor of the q × p polynomial matrix P(s) if there exists a q × p polynomial matrix P˜(s) such that

such that

P(s)=L(s)P˜(s).

(4.16)

(4.16)

Definition 4.3.2

If P(s) is a q × p polynomial matrix and Q(s) is a q × q polynomial matrix, then a q × q polynomial matrix L(s) is called a common left divisor of P(s) and Q(s) if L(s) is a left divisor of both P(s) and Q(s). We call L(s) a greatest common left divisor of P(s) and Q(s) if it is a common left divisor, and if any other common left divisor of P(s) and Q(s) is a left divisor of L(s). If all common left divisors of P(s) and Q(s) are unimodular, then P(s) and Q(s) are called left coprime.

Theorem 4.3.1

Suppose P(s) is a q × p polynomial matrix and Q(s) is a q × q polynomial matrix. If a (q + p) × (q + p) unimodular polynomial matrix U(s) and a q × q polynomial matrix L(s) are such that

[Q(s)P(s)]U(s)=[L(s)0],

(4.17)

(4.17)

then L(s) is a greatest common left divisor of P(s) and Q(s).

Three types of elementary column operations can be represented by postmultiplication by a unimodular matrix. The first is interchange of two columns, and the second is multiplication of any column by a nonzero real number. The third elementary column operation is addition to any column of a polynomial multiple of another column. It is easy to check that a sequence of these elementary column operations can be represented by postmultiplication by a unimodular matrix. That postmultiplication by any unimodular matrix can be represented by an appropriate sequence of elementary column operations is a consequence of another special form, introduced below for the class of polynomial matrices of interest.

Theorem 4.3.2

Suppose P(s) is a q × p polynomial matrix and Q(s) is a q × q nonsingular polynomial matrix. Then elementary column operations can be used to transform

M(s)=[Q(s)P(s)]

into a column Hermite form described as follows. For k = 1, …, q, all entries of the kth-row to the right of the k, k-entry are zero, and the k, k-entry is monic with higher degree than any entry to its left. (If the k, k-entry is unity, all entries to its left are zero.)

Theorems 4.3.1 and 4.3.2 together provide a method for computing greatest common left divisors using elementary column operations to obtain column Hermite form. The polynomial matrix L(s) in Eq. (4.17) will be lower-triangular.

Theorem 4.3.3

For a q × p polynomial matrix P(s) and a nonsingular q × q polynomial matrix Q(s), the following statements are equivalent:

(i) The polynomial matrices P(s) and Q(s) are left coprime.

(ii) There exist a p × q polynomial matrix X(s) and a q × q polynomial matrix Y (s) such that

P(s)X(s)+Q(s)Y(s)=Iq.

(4.18)

(4.18)

(iii) For every complex number s0,

rank[Q(s0)P(s0)]=q.

(4.19)

(4.19)

Naturally a left polynomial fraction description composed of left coprime polynomial matrices is called a coprime left polynomial fraction description.

Theorem 4.3.4

For any two coprime left polynomial fraction descriptions of a strictly proper rational transfer function

G(s)=D−1(s)N(s)=D−1a(s)Na(s),

there exists a unimodular polynomial matrix U(s) such that

N(s)=U(s)Na(s),D(s)=U(s)Da(s).

Suppose that we begin with the elementary right polynomial fraction description and the elementary left polynomial fraction description in Eq. (4.3) for a given strictly proper rational transfer function G(s). Then appropriate greatest common divisors can be extracted to obtain a coprime right polynomial fraction description, and a coprime left polynomial fraction description for G(s). We now show that these two coprime polynomial fraction descriptions have the same degree. An economical demonstration relies on a particular polynomial-matrix inversion formula.

Lemma 4.3.1

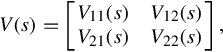

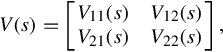

Suppose that V11(s) is a m × m nonsingular polynomial matrix and

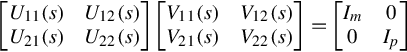

V(s)=[V11(s)V21(s)V12(s)V22(s)],

(4.20)

(4.20)

is an (m + p) × (m + p) nonsingular polynomial matrix. Then defining the matrix of rational functions Va(s)=V22(s)−V21(s)V−111(s)V12(s)

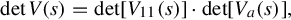

(i) detV(s)=det[V11(s)]⋅det[Va(s)],

(ii) detVa(s) is a nonzero rational function,

is a nonzero rational function,

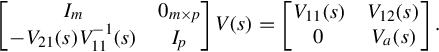

(iii) the inverse of V (s) is

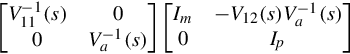

V−1(s)=[V−111(s)+V−111(s)V12(s)V−1a(s)V21(s)V−111(s)−V−1a(s)V21(s)V−111(s)−V−111(s)V12(s)V−1a(s)V−1a(s).]

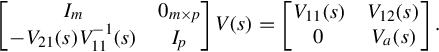

Proof

A partitioned calculation verifies

[Im−V21(s)V−111(s)0m×pIp]V(s)=[V11(s)0V12(s)Va(s)].

(4.21)

(4.21)

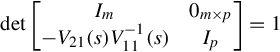

Using the obvious determinant identity for block-triangular matrices, in particular

det[Im−V21(s)V−111(s)0m×pIp]=1

gives

detV(s)=det[V11(s)]⋅det[Va(s)].

Since V (s) and V11(s) are nonsingular polynomial matrices, this proves that detVa(s) is a nonzero rational function, that is, V−1a(s)

is a nonzero rational function, that is, V−1a(s) exists. To establish (iii), multiply Eq. (4.21) on the left by

exists. To establish (iii), multiply Eq. (4.21) on the left by

[V−111(s)00V−1a(s)][Im0−V12(s)V−1a(s)Ip]

to obtain

[V−111(s)+V−111(s)V12(s)V−1a(s)V21(s)V−111(s)−V−1a(s)V21(s)V−111(s)−V−111(s)V12(s)V−1a(s)V−1a(s)]V(s)=[Im00Ip]

and the proof is complete.

Theorem 4.3.5

Suppose that a strictly proper rational transfer function is represented by a coprime right polynomial fraction and a coprime left polynomial fraction

G(s)=N(s)D−1(s)=D−1L(s)NL(s).

(4.22)

(4.22)

Then there exists a nonzero constant α such that detD(s)=αdetDL(s).

Proof

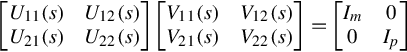

By right-coprimeness of N(s) and D(s) there exists an (m + p) × (m + p) unimodular polynomial matrix

U(s)=[U11(s)U21(s)U12(s)U22(s)]

such that

[U11(s)U21(s)U12(s)U22(s)][D(s)N(s)]=[Im0].

(4.23)

(4.23)

For notational convenience let

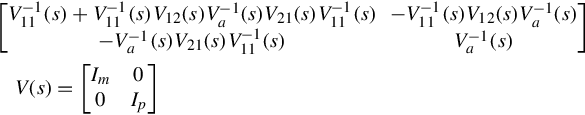

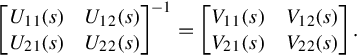

[U11(s)U21(s)U12(s)U22(s)]−1=[V11(s)V21(s)V12(s)V22(s)].

Each Vij(s) is a polynomial matrix, and in particular Eq. (4.23) gives

V11(s)=D(s),V21(s)=N(s).

Therefore V11(s) is nonsingular, and calling on Lemma 4.3.1 we have that

U22(s)=[V22(s)−V21(s)V−111(s)V12(s)]−1,

which of course is a polynomial matrix, is nonsingular. Furthermore writing

[U11(s)U21(s)U12(s)U22(s)][V11(s)V21(s)V12(s)V22(s)]=[Im00Ip]

(4.24)

(4.24)

gives, in the 2,2-block,

U21(s)V12(s)+U22(s)V22(s)=Ip.

By Theorem 4.3.3 this implies that U21(s) and U22(s) are left coprime. Also, from the 2,1-block,

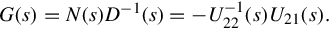

U21(s)V11(s)+U22(s)V21(s)=U21(s)D(s)+U22(s)N(s)=0.

(4.25)

(4.25)

Thus we can write, from Eq. (4.25)

G(s)=N(s)D−1(s)=−U−122(s)U21(s).

(4.26)

(4.26)

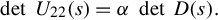

This is a coprime left polynomial fraction description for G(s). Again using Lemma 4.3.1, and the unimodularity of V (s), there exists a nonzero constant α such that

det[V11(s)V21(s)V12(s)V22(s)]=det[V11(s)]⋅det[V22(s)−V21(s)V−111(s)V12(s)]=det[D(s)]⋅det[U−122(s)]=detD(s)detU22(s)=1α.

(4.27)

(4.27)

Therefore, for the coprime left polynomial fraction description in Eq. (4.26), we have detU22(s)=αdetD(s). Finally, using the unimodular relation between coprime left polynomial fractions in Theorem 4.3.4, such a determinant formula, with possibly a different nonzero constant, must hold for any coprime left polynomial fraction description for G(s).

Finally, using the unimodular relation between coprime left polynomial fractions in Theorem 4.3.4, such a determinant formula, with possibly a different nonzero constant, must hold for any coprime left polynomial fraction description for G(s).

4.4 Column and row degrees

There is an additional technical consideration that complicates the representation of a strictly proper rational transfer function by polynomial fraction descriptions. First, we introduce terminology for matrix polynomials that is related to the notion of the degree of a scalar polynomial. Recall again conventions that the degree of a nonzero constant is zero, and the degree of the polynomial 0 is −∞.

Definition 4.4.1

For a p × r polynomial matrix P(s), the degree of the highest-degree polynomial in the jth-column of P(s), written cj[P], is called the jth-column degree of P(s). The column degree coefficient matrix for P(s), written Phc, is the real p × r matrix with i, j-entry given by the coefficient of scj[P] in the i, j-entry of P(s). If P(s) is square and nonsingular, then it is called column reduced if

in the i, j-entry of P(s). If P(s) is square and nonsingular, then it is called column reduced if

deg[detP(s)]=c1[P]+⋯+cp[P].

(4.28)

(4.28)

If P(s) is square, then the Laplace expansion of the determinant about columns shows that the degree of detP(s) cannot be greater than c1[P] + ⋯ + cp[P]. But it can be less.

cannot be greater than c1[P] + ⋯ + cp[P]. But it can be less.

The issue that requires attention involves the column degrees of D(s) in a right polynomial fraction description for a strictly proper rational transfer function. It is clear in the m = p = 1 case that this column degree plays an important role in realization considerations, for example. The same is true in the multiinput, multioutput case, and the complication is that column degrees of D(s) can be artificially high, and they can change in the process of postmultiplication by a unimodular matrix. Therefore two coprime right polynomial fraction descriptions for G(s), as in Theorem 4.2.4, can be such that D(s) and Da(s) have different column degrees, even though the degrees of the polynomials detD(s) and detDa(s)

and detDa(s) are the same.

are the same.

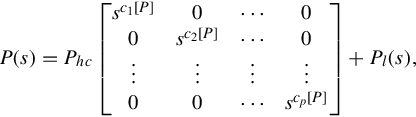

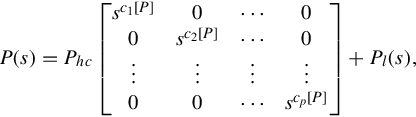

The first step in investigating this situation is to characterize column-reduced polynomial matrices in a way that does not involve computing a determinant. Using Definition 4.4.1 it is convenient to write a p × p polynomial matrix P(s) in the form

P(s)=Phc⎡⎣⎢⎢⎢⎢⎢sc1[P]0⋮00sc2[P]⋮0⋯⋯⋮⋯00⋮scp[P]⎤⎦⎥⎥⎥⎥⎥+Pl(s),

(4.29)

(4.29)

where Pl(s) is a p × p polynomial matrix in which each entry of the jth-column has degree strictly less than cj[P]. (We use this notation only when P(s) is nonsingular, so that c1[P], …, cp[P] ≥ 0.)

Theorem 4.4.1

If P(s) is a p × p nonsingular polynomial matrix, then P(s) is column reduced if and only if Phc is invertible.

Proof

We can write, using the representation (4.29)

s−c1[P]−⋯−cp[P]⋅detP(s)=det[P(s)⋅diagonal{s−c1[P],…,s−cp[P]}]=det[Phc+Pl(s)⋅diagonal{s−c1[P],…,s−cp[P]}]=det[Phc+P˜(s−1)],

(4.30)

(4.30)

where P˜(s−1) is a matrix with entries that are polynomials in s−1 that have no constant terms, that is, no s0 terms. A key fact in the remaining argument is that, viewing s as real and positive, letting s→∞

is a matrix with entries that are polynomials in s−1 that have no constant terms, that is, no s0 terms. A key fact in the remaining argument is that, viewing s as real and positive, letting s→∞ yields P˜(s−1)→0.

yields P˜(s−1)→0. Also the determinant of a matrix is a continuous function of the matrix entries, so limit and determinant can be interchanged. In particular we can write

Also the determinant of a matrix is a continuous function of the matrix entries, so limit and determinant can be interchanged. In particular we can write

lims→∞[s−c1[P]−⋯−cp[P]⋅detP(s)]=lims→∞det[Phc+P˜(s−1)]=det{lims→∞[Phc+P˜(s−1)]}=detPhc.

(4.31)

(4.31)

Using Eq. (4.28) the left side of Eq. (4.31) is a nonzero constant if and only if P(s) is column reduced, and thus the proof is complete.

Consider a coprime right polynomial fraction description N(s)D−1(s), where D(s) is not column reduced. We next show that elementary column operations on D(s) (postmultiplication by a unimodular matrix U(s)) can be used to reduce individual column degrees, and thus compute a new coprime right polynomial fraction description

N˜(s)=N(s)U(s),D˜(s)=D(s)U(s),

(4.32)

(4.32)

where D˜(s) is column reduced. Of course U(s) need not be constructed, explicitly simply perform the same sequence of elementary column operations on N(s) as on D(s) to obtain N˜(s)

is column reduced. Of course U(s) need not be constructed, explicitly simply perform the same sequence of elementary column operations on N(s) as on D(s) to obtain N˜(s) along with D˜(s).

along with D˜(s).

To describe the required calculations, suppose the column degrees of the m × m polynomial matrix D(s) satisfy c1[D] ≥ c2[D], …, cm[D], as can achieved by column interchanges. Using the notation

D(s)=DhcΔ(s)+Dl(s),

(4.33)

(4.33)

there exists a nonzero m × 1 vector z such that Dhcz = 0, since D(s) is not column reduced. Suppose that the first nonzero entry in z is zk, and define a corresponding polynomial vector by

z=⎡⎣⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢0⋮0zkzk+1⋮zm⎤⎦⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥→z(s)=⎡⎣⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢0⋮0zkzk+1sck[D]−ck+1[D]⋮zmsck[D]−cm[D]⎤⎦⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥.

(4.34)

(4.34)

Then

D(s)z(s)=DhcΔ(s)z(s)+Dl(s)z(s)=Dhczsck[D]+Dl(s)z(s)=Dl(s)z(s)

(4.35)

(4.35)

and all entries of Dl(s)z(s) have degree no greater than ck[D] − 1. Choosing the unimodular matrix

U(s)=[e1⋯ek−1z(s)ek+1⋯em],

where ei denotes the ith-column of Im, it follows that D˜(s)=D(s)U(s) has column degrees satisfying

has column degrees satisfying

ck[D˜]<ck[D];cj[D˜]=cj[D],j=1,…,k−1,k+1,…,m.

If D˜(s) is not column reduced, then the process is repeated, beginning with the reordering of columns to obtain nonincreasing column degrees. A finite number of such repetitions builds a unimodular U(s) such that D˜(s)

is not column reduced, then the process is repeated, beginning with the reordering of columns to obtain nonincreasing column degrees. A finite number of such repetitions builds a unimodular U(s) such that D˜(s) in Eq. (4.32) is column reduced.

in Eq. (4.32) is column reduced.

Another aspect of the column degree issue involves determining when a given N(s) and D(s) are such that N(s)D−1(s) is a strictly proper rational transfer function. The relative column degrees of N(s) and D(s) play important roles, but not as simply as the single-input, single-output case suggests.

Theorem 4.4.2

If the polynomial fraction description N(s)D−1(s) is a strictly proper rational function, then cj[N] < cj[D], j = 1, …, m. If D(s) is column reduced and cj[N] < cj[D], j = 1, …, m, then N(s)D−1(s) is a strictly proper rational function.

Proof

Suppose G(s) = N(s)D−1(s) is strictly proper. Then N(s) = G(s)D(s), and in particular

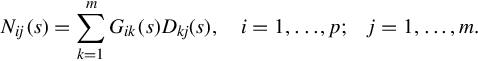

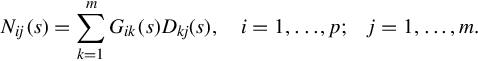

Nij(s)=∑mk=1Gik(s)Dkj(s),i=1,…,p;j=1,…,m.

(4.36)

(4.36)

Then for any fixed value of j,

Nij(s)s−cj[D]=∑mk=1Gik(s)Dkj(s)s−cj[D],i=1,…,p.

(4.37)

(4.37)

As we let (real) s→∞ each strictly proper rational function Gik(s) approaches 0, and each Dkj(s)s−cj[D]

each strictly proper rational function Gik(s) approaches 0, and each Dkj(s)s−cj[D] approaches a finite constant, possibly zero. In any case this gives

approaches a finite constant, possibly zero. In any case this gives

lims→∞Nij(s)s−cj[D]=0,i=1,…,p.

Therefore deg Nij(s) < cj[D], i = 1, …, p, which implies cj[N] < cj[D].

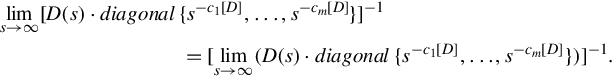

Now suppose that D(s) is column reduced, and cj[N] < cj[D], j = 1, …, m. We can write

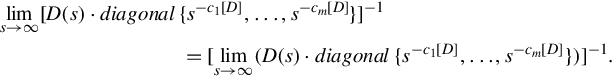

N(s)D−1(s)=[N(s)⋅diagonal{s−c1[D],…,s−cm[D]}]⋅[D(s)⋅diagonal{s−c1[D],…,s−cm[D]}]−1

(4.38)

(4.38)

and since cj[N] < cj[D], j = 1, …, m,

lims→∞[N(s)⋅diagonal{s−c1[D],…,s−cm[D]}]=0.

The adjugate-overdeterminant formula implies that each entry in the inverse of a matrix is a continuous function of the entries of the matrix. Thus limit can be interchanged with matrix inversion,

lims→∞[D(s)⋅diagonal{s−c1[D],…,s−cm[D]}]−1=[lims→∞(D(s)⋅diagonal{s−c1[D],…,s−cm[D]})]−1.

(4.39)

(4.39)

Writing D(s) in the form (4.29), the limit yields D−1hc. Then, from Eq. (4.38),

Then, from Eq. (4.38),

lims→∞N(s)D−1(s)=0⋅D−1hc=0,

which implies strict properness.

It remains to give the corresponding development for left polynomial fraction descriptions, though details are omitted.

Definition 4.4.2

For a q × p polynomial matrix P(s), the degree of the highest-degree polynomial in the ith-row of P(s), written ri[P], is called the ith-row degree of P(s). The row degree coefficient matrix of P(s), written Phr, is the real q × p matrix with i, j-entry given by the coefficient of sri[P] in Pij(s). If P(s) is square and nonsingular, then it is called row reduced if

in Pij(s). If P(s) is square and nonsingular, then it is called row reduced if

deg[detP(s)]=r1[P]+⋯+rq[P].

(4.40)

(4.40)

Theorem 4.4.3

If p(s) is a p × p nonsingular polynomial matrix, then P(s) is row reduced if and only if Phr is invertible.

Theorem 4.4.4

If the polynomial fraction description D−1(s)N(s) is a strictly proper rational function, then ri[N] < ri[D], i = 1, …, p. If D(s) is row reduced and ri[N] < ri[D], i = 1, …, p, then D−1(s)N(s) is a strictly proper rational function.

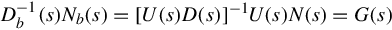

Finally, if G(s) = D−1(s)N(s) is a polynomial fraction description and D(s) is not row reduced, then a unimodular matrix U(s) can be computed such that Db(s) = U(s)D(s) is row reduced. Letting Nb(s) = U(s)N(s), the left polynomial fraction description

D−1b(s)Nb(s)=[U(s)D(s)]−1U(s)N(s)=G(s)

(4.41)

(4.41)

has the same degree as the original.

Because of machinery developed in this chapter, a polynomial fraction description for a strictly proper rational transfer function G(s) can be assumed as either a coprime right polynomial fraction description with column-reduced D(s), or a coprime left polynomial fraction with row-reduced DL(s). In either case the degree of the polynomial fraction description is the same, and is given by the sum of the column degrees or, respectively, the sum of the row degrees.

4.5 Minimal realization

We assume that a p × m strictly proper rational transfer function is specified by a coprime right polynomial fraction description

G(s)=N(s)D−1(s)

(4.42)

(4.42)

with D(s) column reduced. Then the column degrees of N(s) and D(s) satisfy cj[N] < cj[D], j = 1, …, m. Some simplification occurs if one uninteresting case is ruled out. If cj[D] = 0 for some j, then by Theorem 4.4.2G(s) is strictly proper if and only if all entries of the jth-column of N(s) are zero, that is, cj[N]=−∞. Therefore a standing assumption in this chapter is that c1[D], …, cm[D] ≥ 1, which turns out to be compatible with assuming rank B = m for a linear state equation. Recall that the degree of the polynomial fraction description (4.42) is c1[D] + ⋯ + cm[D], since D(s) is column reduced.

Therefore a standing assumption in this chapter is that c1[D], …, cm[D] ≥ 1, which turns out to be compatible with assuming rank B = m for a linear state equation. Recall that the degree of the polynomial fraction description (4.42) is c1[D] + ⋯ + cm[D], since D(s) is column reduced.

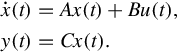

We know there exists a minimal realization for G(s),

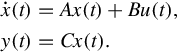

x.(t)=Ax(t)+Bu(t),y(t)=Cx(t).

(4.43)

(4.43)

In exploring the connection between a transfer function and its minimal realizations, an additional bit of terminology is convenient.

Definition 4.5.1

Suppose N(s)D−1(s) is a coprime right polynomial fraction description for the p × m, strictly proper, rational transfer function G(s). Then the degree of this polynomial fraction description is called the McMillan degree of G(s).

The first objective is to show that the McMillan degree of G(s) is precisely the dimension of minimal realizations of G(s). Our roundabout strategy is to prove that minimal realizations cannot have dimension less than the McMillan degree, and then compute a realization of dimension equal to the McMillan degree. This forces the conclusion that the computed realization is a minimal realization.

Lemma 4.5.1

The dimension of any realization of a strictly proper rational transfer function G(s) is at least the McMillan degree of G(s).

Proof

Suppose that the linear state equation (4.43) is a dimension-n minimal realization for the p × m transfer function G(s). Then Eq. (4.43) is both controllable and observable, and

G(s)=C(sI−A)−1B.

Define a n × m strictly proper transfer function H(s) by the left polynomial fraction description

H(s)=D−1L(s)NL(s)=(sI−A)−1B.

(4.44)

(4.44)

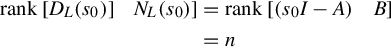

Clearly this left polynomial fraction description has degree n. Since the state equation (4.43) is controllable, then

rank[DL(s0)]NL(s0)]=rank[(s0I−A)B]=n

(4.45)

(4.45)

for every complex s0. Thus by Theorem 4.3.3 the left polynomial fraction description (4.44) is coprime. Now suppose Na(s)D−1a(s) is a coprime right polynomial fraction description for H(s). Then this right polynomial fraction description also has degree n, and

is a coprime right polynomial fraction description for H(s). Then this right polynomial fraction description also has degree n, and

G(s)=[CNa(s)]D−1a(s)

(4.46)

(4.46)

is a degree-n right polynomial fraction description for G(s), though not necessarily coprime. Therefore the McMillan degree of G(s) is no greater than n, the dimension of a minimal realization of G(s).

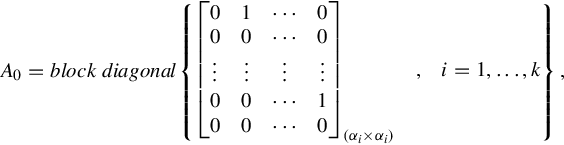

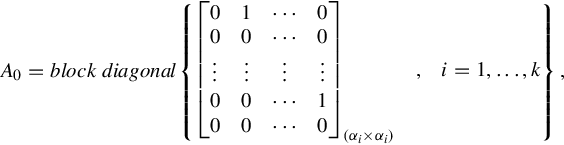

For notational assistance in the construction of a minimal realization, recall the integrator coefficient matrices corresponding to a set of k positive integers, α1, …, αk, with α1 + ⋯ + αk = n. These matrices are

A0=blockdiagonal⎧⎩⎨⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎡⎣⎢⎢⎢⎢⎢⎢⎢00⋮0010⋮00⋯⋯⋮⋯⋯00⋮10⎤⎦⎥⎥⎥⎥⎥⎥⎥(αi×αi),i=1,…,k⎫⎭⎬⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪,

(4.47)

(4.47)

B0=blockdiagonal⎧⎩⎨⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎡⎣⎢⎢⎢⎢0⋮01⎤⎦⎥⎥⎥⎥(αi×1),i=1,…,k⎫⎭⎬⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪.

(4.48)

(4.48)

Define the corresponding integrator polynomial matrices by

ψ(s)=blockdiagonal⎧⎩⎨⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪⎡⎣⎢⎢⎢⎢⎢1s⋮sαi−1⎤⎦⎥⎥⎥⎥⎥,i=1,…,k⎫⎭⎬⎪⎪⎪⎪⎪⎪⎪⎪⎪⎪,

(4.49)

(4.49)

Δ(s)=diagonal{sα1,…,sαk}.

(4.50)

(4.50)

The terminology couldn’t be more appropriate, as we now demonstrate.

Lemma 4.5.2

The integrator polynomial matrices provide a right polynomial fraction description for the corresponding integrator state equation. That is,

(sI−A0)−1B0=ψ(s)Δ−1(s).

(4.51)

(4.51)

Proof

To verify Eq. (4.51), first multiply on the left by (sI − A0) and on the right by Δ(s) to obtain

B0Δ(s)=sψ(s)−A0ψ(s).

(4.52)

(4.52)

This expression is easy to check in a column-by-column fashion using the structure of the various matrices. For example the first column of Eq. (4.52) is the obvious

⎡⎣⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢0⋮0sα10⋮0⎤⎦⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥=⎡⎣⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢s⋮sα1−1sα10⋮0⎤⎦⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥−⎡⎣⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢⎢s⋮sα1−100⋮0⎤⎦⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥⎥.

(4.53)

(4.53)

Proceeding similarly through the remaining columns in Eq. (4.52) yields the proof.

Completing our minimal realization strategy now reduces to comparing a special representation for the polynomial fraction description and a special structure for a dimension-n state equation.

Theorem 4.5.1

Suppose that a strictly proper rational transfer function is described by a coprime right polynomial fraction description (4.42), where D(s) is column reduced with column degrees c1[D], …, cm[D] ≥ 1. Then the McMillan degree of G(s) is given by n = c1[D] + ⋯ + cm[D], and minimal realizations of G(s) have dimension n. Furthermore, writing

N(s)=Nlψ(s)D(s)=DhcΔ(s)+Dlψ(s),

(4.54)

(4.54)

where ψ(s) and Δ(s) are the integrator polynomial matrices corresponding to c1[D], …, cm[D], a minimal realization for G(s) is

x.(t)=(A0−B0D−1hcDl)x(t)+B0D−1hcu(t)y(t)=Nlx(t),

(4.55)

(4.55)

where A0 and B0 are the integrator coefficient matrices corresponding to c1[D], …, cm[D].

Proof

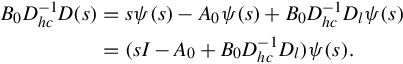

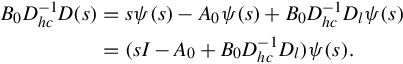

First we verify that Eq. (4.55) is a realization for G(s). It is straightforward to write down the representation in Eq. (4.54), where Nl and Dl are constant matrices that select for appropriate polynomial entries of N(s), and Dl(s). Then solving for Δ(s) in Eq. (4.54) and substituting into Eq. (4.52) gives

B0D−1hcD(s)=sψ(s)−A0ψ(s)+B0D−1hcDlψ(s)=(sI−A0+B0D−1hcDl)ψ(s).

(4.56)

(4.56)

This implies

(sI−A0+B0D−1hcDl)−1B0D−1hc=ψ(s)D−1(s)

(4.57)

(4.57)

from which the transfer function for Eq. (4.55) is

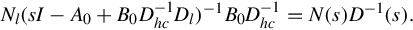

Nl(sI−A0+B0D−1hcDl)−1B0D−1hc=N(s)D−1(s).

(4.58)

(4.58)

Thus Eq. (4.55) is a realization of G(s) with dimension c1[D] + ⋯ + cm[D], which is the McMillan degree of G(s). Then by invoking Lemma 4.5.1 we conclude that the McMillan degree of G(s) is the dimension of minimal realizations of G(s).

In the minimal realization (4.55), note that if Dhc is upper triangular with unity diagonal entries, then the realization is in the controller form. (Upper triangular structure for Dhc can be obtained by elementary column operations on the original polynomial fraction description.) If Eq. (4.55) is in controller form, then the controllability indices are precisely ρ1 = c1[D], …, ρm = cm[D]. We see that all minimal realizations of N(s)D−1(s) have the same controllability indices up to reordering. All minimal realizations of a strictly proper rational transfer function G(s) have the same controllability indices up to reordering.

Calculations similar to those in the proof of Theorem 4.5.1 can be used to display a right polynomial fraction description for a given linear state equation.

Theorem 4.5.2

Suppose the linear state equation (4.43) is controllable with controllability indices ρ1, …, ρm ≥ 1. Then the transfer function for Eq. (4.43) is given by the right polynomial fraction description

C(sI−A)−1B=N(s)D−1(s),

(4.59)

(4.59)

where

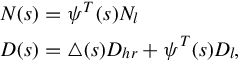

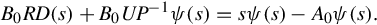

N(s)=CP−1ψ(s)D(s)=R−1Δ(s)−R−1UP−1ψ(s)

(4.60)

(4.60)

and D(s) is column reduced. Here ψ(s) and Δ(s) are the integrator polynomial matrices corresponding to ρ1, …, ρm, P is the controller-form variable change, and U and R are the coefficient matrices. If the state equation (4.43) also is observable, then N(s)D−1(s) is coprime with degree n.

Proof

We can write

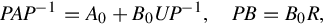

PAP−1=A0+B0UP−1,PB=B0R,

(4.61)

(4.61)

where A0 and B0 are the integrator coefficient matrices corresponding to ρ1, …, ρm. Let Δ(s) and ψ(s) be the corresponding integrator polynomial matrices. Using Eq. (4.60) to substitute for Δ(s) in Eq. (4.52) gives

B0RD(s)+B0UP−1ψ(s)=sψ(s)−A0ψ(s).

(4.62)

(4.62)

Rearranging this expression yields

ψ(s)D−1(s)=(sI−A0−B0UP−1)−1B0R

(4.63)

(4.63)

and therefore

N(s)D−1(s)=CP−1(sI−A0−B0UP−1)−1B0R=CP−1(sI−PAP−1)−1PB=C(sI−A)−1B.

(4.64)

(4.64)

This calculation verifies that the polynomial fraction description defined by Eq. (4.60) represents the transfer function of the linear state equation (4.43). Also, D(s) in Eq. (4.60) is column reduced because Dhc = R−1. Since the degree of the polynomial fraction description is n, if the state equation also is observable, hence a minimal realization of its transfer function, then n is the McMillan degree of the polynomial fraction description (4.60).

For left polynomial fraction descriptions, the strategy for right fraction descriptions applies since the McMillan degree of G(s) also is the degree of any coprime left polynomial fraction description for G(s). The only details that remain in proving a left-handed version of Theorem 4.5.1 involve construction of a minimal realization. But this construction is not difficult to deduce from a summary statement.

Theorem 4.5.3

Suppose that a strictly proper rational transfer function is described by a coprime left polynomial fraction description D−1(s)N(s), where D(s) is row reduced with row degrees r1[D], …, rp[D] ≥ 1. Then the McMillan degree of G(s) is given by n = r1[D] + ⋯ + rp[D], and minimal realizations of G(s) have dimension n. Furthermore, writing

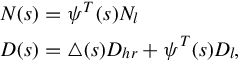

N(s)=ψT(s)NlD(s)=Δ(s)Dhr+ψT(s)Dl,

(4.65)

(4.65)

where ψ(s) and Δ(s) are the integrator polynomial matrices corresponding to r1[D], …, rp[D], a minimal realization for G(s) is

x.(t)=(AT0−DlD−1hrBT0)x(t)+Nlu(t)y(t)=D−1hrBT0x(t),

(4.66)

(4.66)

where A0 and B0 are the integrator coefficient matrices corresponding to r1[D], …, rp[D].

Analogous to the discussion following Theorem 4.5.3, in the setting of Theorem 4.5.3 the observability indices of minimal realizations of D−1(s)N(s) are the same, up to reordering, as the row degrees of D(s).

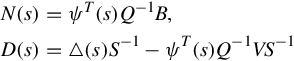

Theorem 4.5.4

Suppose the linear state equation (4.43) is observable with observability indices η1, …, ηp ≥ 1. Then the transfer function for Eq. (4.43) is given by the left polynomial fraction description

C(sI−A)−1B=D−1(s)N(s),

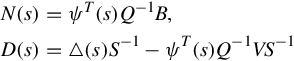

where

N(s)=ψT(s)Q−1B,D(s)=Δ(s)S−1−ψT(s)Q−1VS−1

(4.67)

(4.67)

and D(s) is row reduced. Here ψ(s) and Δ(s) are the integrator polynomial matrices corresponding to η1, …, ηp, Q is the observer-form variable change, and V and S are the coefficient matrices. If the state equation (4.43) also is controllable, then D−1(s)N(s) is coprime with degree n.

![]() for all but a finite number of complex numbers s0. And P(s) is unimodular if and only if

for all but a finite number of complex numbers s0. And P(s) is unimodular if and only if ![]() for all complex numbers s0.

for all complex numbers s0.![]()

![]() Thus row Hermite form for a unimodular matrix is the identity matrix. In other words for a unimodular polynomial matrix U(s) there is a sequence of elementary row operations, say Ea, Eb, Ec(s), …, Eb, such that

Thus row Hermite form for a unimodular matrix is the identity matrix. In other words for a unimodular polynomial matrix U(s) there is a sequence of elementary row operations, say Ea, Eb, Ec(s), …, Eb, such that ![]() and some r × r polynomial matrix

and some r × r polynomial matrix ![]()

![]() is a polynomial of degree at least one and s0 is a root of this polynomial, then R(s0) is a complex matrix of less than full rank. Thus we obtain the contradiction

is a polynomial of degree at least one and s0 is a root of this polynomial, then R(s0) is a complex matrix of less than full rank. Thus we obtain the contradiction ![]() is a nonzero constant, that is, R(s) is unimodular. This proves that P(s) and Q(s) are right coprime.

is a nonzero constant, that is, R(s) is unimodular. This proves that P(s) and Q(s) are right coprime.![]() Finally, using the unimodular relation between coprime left polynomial fractions in Theorem 4.3.4, such a determinant formula, with possibly a different nonzero constant, must hold for any coprime left polynomial fraction description for G(s).

Finally, using the unimodular relation between coprime left polynomial fractions in Theorem 4.3.4, such a determinant formula, with possibly a different nonzero constant, must hold for any coprime left polynomial fraction description for G(s).![]()

![]() cannot be greater than c1[P] + ⋯ + cp[P]. But it can be less.

cannot be greater than c1[P] + ⋯ + cp[P]. But it can be less.![]() and

and ![]() are the same.

are the same.![]() is column reduced. Of course U(s) need not be constructed, explicitly simply perform the same sequence of elementary column operations on N(s) as on D(s) to obtain

is column reduced. Of course U(s) need not be constructed, explicitly simply perform the same sequence of elementary column operations on N(s) as on D(s) to obtain ![]() along with

along with ![]()

![]() has column degrees satisfying

has column degrees satisfying ![]() is not column reduced, then the process is repeated, beginning with the reordering of columns to obtain nonincreasing column degrees. A finite number of such repetitions builds a unimodular U(s) such that

is not column reduced, then the process is repeated, beginning with the reordering of columns to obtain nonincreasing column degrees. A finite number of such repetitions builds a unimodular U(s) such that ![]() in Eq. (4.32) is column reduced.

in Eq. (4.32) is column reduced.![]() Therefore a standing assumption in this chapter is that c1[D], …, cm[D] ≥ 1, which turns out to be compatible with assuming rank B = m for a linear state equation. Recall that the degree of the polynomial fraction description (4.42) is c1[D] + ⋯ + cm[D], since D(s) is column reduced.

Therefore a standing assumption in this chapter is that c1[D], …, cm[D] ≥ 1, which turns out to be compatible with assuming rank B = m for a linear state equation. Recall that the degree of the polynomial fraction description (4.42) is c1[D] + ⋯ + cm[D], since D(s) is column reduced.