This section walks you through an interactive interpreter session that performs NLP with NLTK. The NLP pipeline we’ll follow is typical and resembles the following high-level flow:

End of Sentence (EOS) Detection → Tokenization → Part-of-Speech Tagging → Chunking → Extraction

We’ll use the following sample text for purposes of illustration: “Mr. Green killed Colonel Mustard in the study with the candlestick. Mr. Green is not a very nice fellow.” Remember that even though you have already read the text and understand that it’s composed of two sentences and all sorts of other things, it’s merely an opaque string value to a machine at this point. Let’s look at the steps we need to work through in more detail:

- EOS detection

This step breaks a text into a collection of meaningful sentences. Since sentences generally represent logical units of thought, they tend to have a predictable syntax that lends itself well to further analysis. Most NLP pipelines you’ll see begin with this step because tokenization (the next step) operates on individual sentences. Breaking the text into paragraphs or sections might add value for certain types of analysis, but it is unlikely to aid in the overall task of EOS detection. In the interpreter, you’d split the text into sentences with NLTK like so:

```python
>>> import nltk
>>> txt = "Mr. Green killed Colonel Mustard in the study with the candlestick. Mr. Green is not a very nice fellow."
>>> sentences = nltk.tokenize.sent_tokenize(txt)
>>> sentences
['Mr. Green killed Colonel Mustard in the study with the candlestick.', 'Mr. Green is not a very nice fellow.']
```

We’ll talk a little more about what is happening under the hood with `sent_tokenize` in the next section. For now, we’ll accept at face value that proper sentence detection has occurred for arbitrary text, a clear improvement over breaking on characters that are likely to be punctuation marks.

- Tokenization

This step operates on individual sentences, splitting them into tokens. Following along in the example interpreter session, you’d do the following:

```python
>>> tokens = [nltk.tokenize.word_tokenize(s) for s in sentences]
>>> tokens
[['Mr.', 'Green', 'killed', 'Colonel', 'Mustard', 'in', 'the', 'study', 'with', 'the', 'candlestick', '.'], ['Mr.', 'Green', 'is', 'not', 'a', 'very', 'nice', 'fellow', '.']]
```

Note that for this simple example, tokenization appeared to do the same thing as splitting on whitespace, except that it correctly split off the end-of-sentence markers (the periods) as separate tokens. As we’ll see in a later section, though, it can do a bit more if we give it the opportunity, and we already know that deciding whether a period is an end-of-sentence marker or part of an abbreviation isn’t always trivial.
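The abbreviation problem is easy to demonstrate. The following is a minimal pure-Python sketch (no NLTK required) that contrasts a naive split on every period with a splitter that consults a hand-written abbreviation list. The `ABBREVIATIONS` set and both helper functions are hypothetical illustrations, not how NLTK works internally; NLTK’s `sent_tokenize` relies on a trained Punkt model rather than a fixed list.

```python
# Hypothetical abbreviation list for this sketch; real systems learn or curate much larger sets.
ABBREVIATIONS = {"mr.", "mrs.", "dr.", "col."}

TXT = ("Mr. Green killed Colonel Mustard in the study with the candlestick. "
       "Mr. Green is not a very nice fellow.")


def naive_split(text):
    """Treat every period as a sentence boundary."""
    return [s.strip() + "." for s in text.split(".") if s.strip()]


def abbreviation_aware_split(text):
    """End a sentence at a word ending in '.', '!' or '?' unless that word is a known abbreviation."""
    sentences, current = [], []
    for word in text.split():
        current.append(word)
        if word[-1] in ".!?" and word.lower() not in ABBREVIATIONS:
            sentences.append(" ".join(current))
            current = []
    if current:  # keep any trailing text that lacks final punctuation
        sentences.append(" ".join(current))
    return sentences


print(len(naive_split(TXT)))  # 4: "Mr." is wrongly treated as a sentence twice
for sentence in abbreviation_aware_split(TXT):
    print(sentence)
```

The hand-written list is the weak point of this approach: any abbreviation missing from it (say, “Prof.”) brings back the naive behavior, which is one reason trained models are generally preferred.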

- POS tagging

This step assigns part-of-speech information to each token. In the example interpreter session, you’d run the tokens through one more step to have them decorated with tags:

```python
>>> pos_tagged_tokens = [nltk.pos_tag(t) for t in tokens]
>>> pos_tagged_tokens
[[('Mr.', 'NNP'), ('Green', 'NNP'), ('killed', 'VBD'), ('Colonel', 'NNP'), ('Mustard', 'NNP'), ('in', 'IN'), ('the', 'DT'), ('study', 'NN'), ('with', 'IN'), ('the', 'DT'), ('candlestick', 'NN'), ('.', '.')], [('Mr.', 'NNP'), ('Green', 'NNP'), ('is', 'VBZ'), ('not', 'RB'), ('a', 'DT'), ('very', 'RB'), ('nice', 'JJ'), ('fellow', 'JJ'), ('.', '.')]]
```

You may not intuitively understand all of these tags, but they do represent part-of-speech information. For example, ‘NNP’ indicates a singular proper noun, ‘VBD’ indicates a verb in the simple past tense, and ‘JJ’ indicates an adjective. (Taggers make mistakes, too: ‘fellow’ is really a noun here, not an adjective.) The Penn Treebank Project provides a full summary of the part-of-speech tags that could be returned. With POS tagging completed, it should be apparent how much more powerful analysis can become. For example, by using the POS tags, we’ll be able to chunk together nouns as part of noun phrases and then try to reason about what types of entities they might be (e.g., people, places, organizations).
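Because the tags are plain strings attached to each token, ordinary Python is enough to start exploiting them. The following sketch works on the first sentence’s tagged output, copied from the session above. It looks up a few Penn Treebank tag descriptions and groups runs of consecutive NNP tokens into proper-noun phrases. The partial `PENN_TAGS` table and the `proper_noun_phrases` helper are hypothetical illustrations. This is a crude stand-in for real chunking, roughly what a one-rule `chunk.RegexpParser` grammar such as `NP: {<NNP>+}` would capture, and it is not NLTK’s own approach.

```python
from itertools import groupby

# Tagged output of the first sentence, copied from the interpreter session.
tagged = [('Mr.', 'NNP'), ('Green', 'NNP'), ('killed', 'VBD'), ('Colonel', 'NNP'),
          ('Mustard', 'NNP'), ('in', 'IN'), ('the', 'DT'), ('study', 'NN'),
          ('with', 'IN'), ('the', 'DT'), ('candlestick', 'NN'), ('.', '.')]

# A partial excerpt of Penn Treebank tag descriptions, enough for this example.
PENN_TAGS = {
    'NNP': 'proper noun, singular',
    'NN': 'noun, singular or mass',
    'VBD': 'verb, past tense',
    'VBZ': 'verb, 3rd person singular present',
    'IN': 'preposition or subordinating conjunction',
    'DT': 'determiner',
    'JJ': 'adjective',
    'RB': 'adverb',
}


def proper_noun_phrases(tagged_tokens):
    """Group runs of consecutive NNP-tagged tokens into single phrases."""
    phrases = []
    for is_nnp, run in groupby(tagged_tokens, key=lambda pair: pair[1] == 'NNP'):
        if is_nnp:
            phrases.append(' '.join(word for word, _ in run))
    return phrases


for word, tag in tagged[:3]:
    print(word, tag, PENN_TAGS.get(tag, 'unknown tag'))

print(proper_noun_phrases(tagged))  # ['Mr. Green', 'Colonel Mustard']
```

Grouping on tag runs is enough to reunite “Mr. Green” and “Colonel Mustard,” but it says nothing about what kind of entity each phrase is. That is the job of the extraction step.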

- Chunking

This step involves analyzing each tagged token within a sentence and assembling compound tokens that express logical concepts, quite a different approach from statistically analyzing collocations. It is possible to define a custom grammar through NLTK’s `chunk.RegexpParser`, but that’s beyond the scope of this chapter; see Chapter 9 (“Building Feature Based Grammars”) of Natural Language Processing with Python (O’Reilly), available online at http://nltk.googlecode.com/svn/trunk/doc/book/ch09.html, for full details. Besides, NLTK exposes a function that combines chunking with named entity extraction, which is the next step.

- Extraction

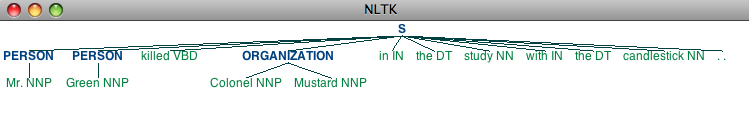

This step involves analyzing each chunk and further tagging the chunks as named entities, such as people, organizations, locations, etc. The continuing saga of NLP in the interpreter demonstrates:

```python
>>> ne_chunks = nltk.batch_ne_chunk(pos_tagged_tokens)
>>> ne_chunks
[Tree('S', [Tree('PERSON', [('Mr.', 'NNP')]), Tree('PERSON', [('Green', 'NNP')]), ('killed', 'VBD'), Tree('ORGANIZATION', [('Colonel', 'NNP'), ('Mustard', 'NNP')]), ('in', 'IN'), ('the', 'DT'), ('study', 'NN'), ('with', 'IN'), ('the', 'DT'), ('candlestick', 'NN'), ('.', '.')]), Tree('S', [Tree('PERSON', [('Mr.', 'NNP')]), Tree('ORGANIZATION', [('Green', 'NNP')]), ('is', 'VBZ'), ('not', 'RB'), ('a', 'DT'), ('very', 'RB'), ('nice', 'JJ'), ('fellow', 'JJ'), ('.', '.')])]
>>> ne_chunks[0].draw()  # Opens a window that renders the tree for the first sentence
```

Don’t get too wrapped up in trying to decipher exactly what the tree output means just yet. In short, it has chunked together some tokens and attempted to classify them as certain types of entities. (You may be able to discern that it has identified “Mr. Green” as a person in the first sentence, albeit as two separate PERSON chunks, but it has unfortunately categorized “Colonel Mustard” as an organization and, in the second sentence, “Green” as one too.) Figure 8-1 illustrates the effect of calling `draw()` on the results from `nltk.batch_ne_chunk`.
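Once entity chunks exist, pulling them out is a simple traversal. The sketch below does not call NLTK. Instead, it mirrors the first sentence’s tree as plain tuples, a hypothetical representation chosen so the example runs without NLTK’s models. It merges adjacent chunks that share a label, which repairs the split “Mr.” / “Green” PERSON chunks shown above. It cannot fix a wrong label, so “Colonel Mustard” still comes out as an ORGANIZATION. With real NLTK you would walk `Tree` objects instead; note that `batch_ne_chunk` comes from older NLTK releases, and in NLTK 3.x the corresponding batch function is `nltk.ne_chunk_sents`.

```python
# Hypothetical plain-Python mirror of the first Tree shown above:
# entity chunks are (label, [(word, tag), ...]); ordinary tokens are (word, tag).
chunked = [
    ('PERSON', [('Mr.', 'NNP')]),
    ('PERSON', [('Green', 'NNP')]),
    ('killed', 'VBD'),
    ('ORGANIZATION', [('Colonel', 'NNP'), ('Mustard', 'NNP')]),
    ('in', 'IN'), ('the', 'DT'), ('study', 'NN'),
    ('with', 'IN'), ('the', 'DT'), ('candlestick', 'NN'), ('.', '.'),
]


def extract_entities(chunked_sentence):
    """Return (label, text) pairs, merging adjacent entity chunks that share a label."""
    entities = []
    previous_was_entity = False
    for first, second in chunked_sentence:
        if isinstance(second, list):  # an entity chunk: (label, tokens)
            text = ' '.join(word for word, _ in second)
            if previous_was_entity and entities[-1][0] == first:
                entities[-1] = (first, entities[-1][1] + ' ' + text)
            else:
                entities.append((first, text))
            previous_was_entity = True
        else:  # an ordinary (word, tag) token breaks any run of entity chunks
            previous_was_entity = False
    return entities


print(extract_entities(chunked))
# [('PERSON', 'Mr. Green'), ('ORGANIZATION', 'Colonel Mustard')]
```

Merging adjacent same-label chunks is a heuristic: it helps here, but it would wrongly fuse two distinct people whose names happen to appear back to back.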

As interesting as it would be to continue talking about the intricacies of NLP, building a state-of-the-art NLP stack, or even taking a much deeper dive into NLTK, isn’t our goal here. This section is meant to convey how difficult the task is and to encourage you to review the aforementioned NLTK book or one of the many other resources available online if you’d like to pursue the topic further. As a bit of passing advice, if your business (or idea) depends on a truly state-of-the-art NLP stack, strongly consider licensing a turn-key product from a commercial or academic institution instead of trying to home-brew your own. There’s a lot you can do on your own with open source software, and that’s a great place to start, but as with anything else, the investment can be significant if you have to make numerous improvements that require specialized consulting engagements. NLP is still very much an active field of research, and the cutting edge is nowhere near being a commodity just yet.

Unless otherwise noted, the remainder of this chapter assumes you’ll be using NLTK “as-is” as well. (If you had a PhD in computational linguistics or something along those lines, you’d be more than capable of modifying NLTK for your own needs and would probably be reading much more scholarly material than this chapter.)

With that brief introduction to NLP concluded, let’s get to work mining some blog data.