7

Mixing domains and objectives

Mixing can be seen as involving two main concerns: macromixing and micromixing. Macromixing is concerned with the overall mix; for example, its frequency balance. Micromixing is concerned with the individual treatment of each instrument; for instance, how natural the vocals sound. When we judge a mix, we evaluate both macromixing and micromixing. When macromixing, there is generally a set of areas and objectives that should be considered. These will also affect micromixing.

The process of mixing can be divided into five main domains or core aspects: time, frequency, level, stereo, and depth. Two of these aspects—stereo and depth—together form a higher domain: space. We often talk about the mix as if it exists on an imaginary sound stage, where instruments can be positioned left and right (stereo) or front and back (depth).

The term stereo does not necessarily denote a two-channel system. Any system that is not monophonic can be regarded as stereophonic; for example, Dolby’s 4.1 stereo. For convenience, however, throughout this book, the term “stereo” means a two-channel system.

In many cases, instruments in the mix are fighting for space; for example, guitars and vocals that mask one another. Each instrument has properties in each domain. We can establish separation between competing mix elements by utilizing various mixing tools to manipulate the sonic aspects of instruments, and their presentation in each domain.

Mixing objectives

The key objective with mixing is to best convey the performance and emotional features of a musical piece so that it moves the listener. As the saying goes: there are no rules. If a mix is exciting, who cares how it was done? But there is a wealth of experience available and strategies that have been devised to help those who are yet to develop that guiding inner spark or mixing engineer’s instinct, or to help grasp the technical aspects of mixing.

We can define four principal objectives that apply in most mixes: mood, balance, definition, and interest. When evaluating the quality of a mix, we can start by considering how coherent and appealing each domain is and then assess how well each objective has been satisfied within that domain. While such an approach is rather technical, it provides a system that can be followed and that is already part of the mixing process anyway, even for the most creative engineers. Also, such an approach will not detract from the artistic side of mixing, which is down to the individual. First, we will discuss each of the objectives and then we will see how they can be achieved. We will also discuss the key issues and common problems that affect each domain.

Mood

The mood objective is concerned with reflecting the emotional context of the music in the mix. Of all the objectives, this one involves the most creativity and is central to the entire project. Heavy compression, aggressive equalization, dirty distortion, and a loud punchy snare will sound incongruent in a mellow jazz number, and could destroy the song’s emotional qualities. Likewise, sweet reverberant vocals, a sympathetic drum mix, and quiet guitars will only detract from the raw emotional energy of an angsty heavy metal song. Mixing engineers that specialize in one genre can find mixing a different genre like eating soup with a fork—they might try to apply their usual techniques and familiar sonic vision to a mix that has very different needs.

Balance

We are normally after balance in three domains:

- frequency balance;

- stereo image balance; and

- level balance (both relative and absolute, as explained shortly).

An example of frequency imbalance is a shortfall of high-mids that can make a mix sound muddy, blurry, and distant. However, when it comes to the depth domain, we usually seek coherency, not balance. An in-your-face kick with an in-your-neighbor’s-house snare would create a very distorted depth image for drums.

There are generally two things that we will consider trading balance for—a creative effect and interest. For example, in a few sections of The Delgados’ “The Past That Suits You Best,” Dave Fridmann chose to pan the drums and vocal to one channel only, creating an imbalanced stereo image but an engaging effect. This interest/imbalance trade-off usually lasts for a very brief period—rolling off some low frequencies during the break section of a dance tune is one of many examples.

Definition

Primarily, definition stands for how distinct and recognizable sounds are. Mostly, we associate definition with instruments, but we can also talk about the definition of a reverb. Not every mix element requires a high degree of definition. In fact, some mix elements are intentionally hidden; for example, a low-frequency pad that fills a missing frequency range but does not play any important musical role. A subset of definition also deals with how well each instrument is presented in relation to its timbre—can you hear the plucking on the double bass, or is it pure, low energy?

Interest

On an exceptionally cold evening late in 1806, a certain young man sat at the Theatre an der Wien in Vienna, awaiting the premiere of Beethoven’s Violin Concerto in D Major. Shortly after the concert began, a set of four dissonant D-sharps was played by the first violin section and the orchestra responded with a phrase. Shortly after, the same thing happened again, only this time it was the second violin section, accompanied by the violas, playing the same D-sharps. On the repetition, the sound appeared to shift to the center of the orchestra and had a slightly different texture. “Interesting!” thought the young man.

We have already established that our brain very quickly gets used to sounds, and without changes we can lose interest. The verse-chorus-verse structure, double-tracking, arrangement changes, and many other production techniques all result in variations that grab the attention of the listener. It is important to know that even subtle changes can give a sense of development, of something happening, even though most listeners are unconscious of it. Even when played in the background, some types of music can distract our attention—most people would find it easier studying for an exam with classical music playing in the background rather than death metal. Part of mixing is concerned with accommodating inherent interest in productions. For example, we might apply automation to adapt to a new instrument introduced during the chorus.

A mix must retain and increase the intrinsic interest in a production.

The 45 minutes of Beethoven’s violin concerto mentioned above probably contain as many musical ideas as all the songs that a commercial radio station plays in an entire day, but it requires active listening and develops very slowly compared with a pop track. Radio stations usually restrict a track’s playing time to around 3 minutes so that more songs (and advertisements) can be squeezed in. This way, the listener’s attention is held and they are less likely to switch to another station.

Many demo recordings that have not been properly produced include limited ideas and variations, and result in a mundane listening experience. While mixing, we have to listen to these productions again and again, and so our boredom and frustration are tenfold. Luckily, we can introduce some interest into boring songs through mixing—we can create a dynamic mix of a static production. Even when a track has been well produced, a dynamic mix is often beneficial. Here are just a few examples of how we can increase dynamism in a recording:

- Automate levels.

- Have a certain instrument playing in particular sections only—for instance, introducing the trumpets from the second verse.

- Use different EQ settings for the same instrument and toggle them between sections.

- Apply more compression on the drum mix during the choruses.

- Distort a bass guitar during the choruses.

- Set different snare reverbs in different sections.

A mix can add or create interest.

With all of this in mind, it is worth remembering that not all music is meant to be attention-grabbing. Brian Eno once said about ambient music that it should be ignorable and interesting at the same time. Some genres, such as trance, are based on highly repetitive patterns that might call for more subtle changes.

Frequency domain

The frequency domain is probably the hardest aspect of mixing to master. Some estimate that half of the work that goes into a mix involves frequency treatment.

The frequency spectrum

Most people are familiar with the bass and treble controls found on many hi-fi systems. Most people also know that the bass control adjusts the low frequencies (lows) and the treble the high frequencies (highs). Some people are also aware that in between the lows and highs are the mid frequencies (mids).

Our audible frequency range is 20 Hz to 20 kHz (20,000 Hz). It is often subdivided into four basic bands: lows, low-mids, high-mids, and highs. This division originates from the common four-band equalizers found on many analog desks. There is no standard as to the number of bands or where exactly each band begins and ends, and on most equalizers the different bands overlap. Roughly speaking, the crossover points are at 250 Hz, 2 kHz, and 6 kHz, as illustrated on the frequency-response graph in Figure 7.1. Of the four bands, the extreme bands are easiest to recognize since they either open or seal the frequency spectrum. Having the ability to identify the lower or higher midrange can take a bit of practice.

Figure 7.1 The four basic bands of the frequency spectrum.
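The rough four-band division can be expressed as a small lookup. This is only a sketch: it uses the approximate crossover points quoted above as hard boundaries, whereas the bands on real equalizers overlap, and the function name `band_for` is hypothetical.

```python
import bisect

# Approximate crossover points from the text (Hz). Treated here as hard
# boundaries for illustration only; real equalizer bands overlap.
CROSSOVERS_HZ = [250.0, 2000.0, 6000.0]
BAND_NAMES = ["lows", "low-mids", "high-mids", "highs"]


def band_for(frequency_hz):
    """Return the name of the basic band a frequency falls into."""
    if not 20.0 <= frequency_hz <= 20000.0:
        raise ValueError("outside the nominal 20 Hz to 20 kHz audible range")
    # bisect_right counts how many crossover points lie at or below the frequency.
    return BAND_NAMES[bisect.bisect_right(CROSSOVERS_HZ, frequency_hz)]


if __name__ == "__main__":
    for hz in (60, 440, 3000, 12000):
        print(hz, "Hz ->", band_for(hz))
```

A frequency that sits exactly on a crossover point is assigned to the upper band here, which is an arbitrary choice.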

Frequency balance and common problems

Achieving frequency balance (also referred to as tonal balance) is a prime challenge in most mixes. Here again, it is hard to define what a good tonal balance is, and our ears very quickly get used to different mix tonalities. In his book Mastering Audio, Bob Katz suggests that the tonal balance of a symphony orchestra can be used as a reference for many genres. Although there might not be an absolute reference, my experience shows that seasoned engineers have an unhesitating conception of frequency balance. Moreover, having a few engineers listening to an unfamiliar mix in the same room would result in remarkably similar opinions. Although different tonal balances might all be approved, the issues presented below are rarely disputed on a technical basis (although they might be argued on an artistic basis: “I agree it’s a bit dull, but I think this is how this type of music should sound”).

The following tracks demonstrate the isolation of each of the four basic frequency bands. All tracks were produced using an HPF, LPF, or a combination of both. The crossover frequencies between the bands were set to 250 Hz, 2 kHz, and 6 kHz. The filter slope was set to 24 dB/oct.

Track 7.1: Drums Source

The source, unprocessed track used in the following samples.

Track 7.2: Drums LF Only

Notice the trace of crashes in this track.

Track 7.3: Drums LMF Only

Track 7.4: Drums HMF Only

Track 7.5: Drums HF Only

And the same set of samples with vocals:

Track 7.6: Vocal Source

Track 7.7: Vocal LF Only

Track 7.8: Vocal LMF Only

Track 7.9: Vocal HMF Only

Track 7.10: Vocal HF Only

Plugin: Digidesign DigiRack EQ 3
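To get a feel for what a 24 dB/oct slope means for the band-isolated tracks above, the sketch below computes how much an idealized filter attenuates a given frequency. It assumes a fourth-order Butterworth response, which gives 24 dB/oct well beyond the cutoff; the actual filter type used by the plugin is not stated here, so the numbers are illustrative only. All function names are hypothetical.

```python
import math


def lowpass_attenuation_db(frequency_hz, cutoff_hz, order=4):
    """Gain in dB (zero or negative) of an ideal Butterworth low-pass filter."""
    ratio = frequency_hz / cutoff_hz
    return -10.0 * math.log10(1.0 + ratio ** (2 * order))


def highpass_attenuation_db(frequency_hz, cutoff_hz, order=4):
    """Gain in dB (zero or negative) of an ideal Butterworth high-pass filter."""
    ratio = cutoff_hz / frequency_hz
    return -10.0 * math.log10(1.0 + ratio ** (2 * order))


def bandpass_attenuation_db(frequency_hz, low_cutoff_hz, high_cutoff_hz, order=4):
    """Combined gain of an HPF and an LPF in series (their dB values add)."""
    return (highpass_attenuation_db(frequency_hz, low_cutoff_hz, order)
            + lowpass_attenuation_db(frequency_hz, high_cutoff_hz, order))


if __name__ == "__main__":
    # LF-only band: low-pass at 250 Hz, checked at the cutoff and octaves above it.
    for hz in (250, 500, 1000):
        print(hz, "Hz:", round(lowpass_attenuation_db(hz, 250.0), 1), "dB")
```

Under this assumption the filter is about 3 dB down at the cutoff itself, and each further octave beyond it adds roughly another 24 dB of attenuation.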

The most common problems with frequency balance involve the extremes. A mix is boomy if there is an excess of low-frequency content, and thin if there is a deficiency. A mix is dull if there are not enough highs, and brittle if there are too many. Many novice engineers come up with mixes that present these problems, mainly due to their colored monitors and lack of experience in evaluating these ranges. Generally, the brighter the instruments are, the more defined they become and the more appealing they can sound. There is a dangerous tendency to brighten up everything and end up with very dominant highs. This is not always a product of equalization; enhancers and distortions also add high-frequency content. Either a good night’s sleep or a comparison to a reference track will normally help pinpoint these problems. Overemphasized highs are also a problem during mastering, since mastering engineers can use some high-frequency headroom for enhancement purposes. A slightly dull mix can be made brighter with relative ease. A brittle mix can be softened, but this might limit the mastering engineer when applying sonic enhancements.

Low-frequency issues are also prevalent due to the great variety of playback systems and their limited accuracy in reproducing low frequencies (such as in most bedroom studios). For this very reason, low frequencies are usually the hardest to stabilize, and it is always worth paying attention to this range when comparing a mix on different playback systems.

Track 7.11: Hero Balanced

Subject to taste, the monitors used, and the listening environment, this track presents a relatively balanced frequency spectrum. The following tracks are exaggerated examples of mixes with extreme excesses or deficiencies.

Track 7.12: Hero Lows Excess

Track 7.13: Hero Lows Deficiency

Track 7.14: Hero Highs Excess

Track 7.15: Hero Highs Deficiency

In general, it is more common for a mix to have an excess of uncontrolled low-end than to be deficient in it. The next most problematic frequency band is the low-mids, where most instruments have their fundamentals. Separation and definition in the mix are largely dependent on the work done in this busy area, which can very often be cluttered with nonessential content.

One question always worth asking is: Which instrument contributes to which part of the frequency spectrum? Muting the kick and the bass, which provide most of the low-frequency content, will cause most mixes to sound powerless and thin. Based on the “percussives weigh less” axiom, muting the bass is likely to cause more low-end deficiency than muting the kick. Yet, our ears might not be very discriminating when it comes to short absences of some frequency ranges: a hi-hat can appear to fill the high-frequency range, even when played slowly.

One key aspect of the frequency domain is separation. In our perception, we want each instrument to have a defined position and size on the frequency spectrum. It is always a good sign if we can say that, for instance, the bass is the lowest, followed by the kick, then the piano, snare, vocals, guitar, and cymbals. The order matters less—the important thing is that we can separate one instrument from the others. Then we can try to see whether there are empty areas: Is there a smooth frequency transition between the vocals and the hi-hats, or are they spaced apart with nothing between them? This type of evaluation, as demonstrated in Figure 7.2, is rather abstract. In practice, instruments can span the majority of the frequency spectrum. But we can still have a good sense of whether instruments overlap, and whether there are empty frequency areas.

The frequency domain and other objectives

Since masking is a frequency affair, definition is bound to the way various instruments are crafted into the frequency spectrum. Fighting elements mask one another and have competing content on specific frequency ranges. For example, the bass might mask the kick since both have healthy low-frequency content; the kick’s attack might be masked by the snare, as both appear in the high-mids. We equalize various instruments to increase their definition, a practice often done in relation to other masking instruments. Occasionally, we might also want to decrease the definition of instruments that stand out too much.

Frequency content relates to mood. Generally, low-frequency emphasis relates to a darker, more mysterious mood, while high-frequency content relates to happiness and liveliness. Although power is usually linked to low frequencies, it can be achieved through all the areas of the frequency spectrum. There are many examples of how the equalization of individual instruments can help in conveying one mood or another; these are discussed later, in Chapter 15.

Figure 7.2 Instrument distribution on the frequency spectrum. (a) An imbalanced mix with some instruments covering others (no separation) and a few empty regions. (b) A balanced mix where each instrument has a well-defined range that does not overlap with other instruments. Altogether, the various instruments constitute a full, continuous frequency response. (c) The actual frequency ranges of the different instruments.

Many DJs are very good at balancing the frequency spectrum. An integral part of their job is making each track in the set similar in tonality to its preceding track. One very common DJ move involves sweeping up a resonant high-pass filter for a few bars and then switching it off. The momentary loss of low frequencies and the resonant high frequencies creates some tension and makes the reintroduction of the kick and bass very exciting for some clubbers, with those already excited sometimes turning overexcited. Mixing engineers do not go to the same extremes as DJs, but momentarily attenuating low frequencies during a drop or a transition section can achieve a similar effect. Some frequency interest occurs naturally with arrangement changes; choruses might be brighter than verses due to the addition of some instruments. Low frequencies usually remain intact despite these changes, but, whether mixing a recorded or an electronic track, it is possible to automate a shelving EQ to add more lows during the exciting sections.

Level domain

High-level signals can cause unwanted distortion—once we have cleared up this technical issue, our mix evaluation process is much more concerned with the relative levels between instruments rather than their absolute level (which varies in relation to the monitoring levels). Put another way, the question is not “How loud?” but “How loud compared with other instruments?” The latter question is the basis for most of our level decisions—we usually set the level of an instrument while comparing it to the level of another. There are huge margins for personal taste when it comes to relative levels (as your excerpt set from Chapter 1 should prove), and the only people who are likely to get it truly wrong are the fledglings—achieving a good relative level balance is a task that for most of us will come naturally.

However, as time goes by, we learn to appreciate how subtle adjustments can have a dramatic effect on the mix. For instance, even a boost of 2 dB on pads can make a mix much more appealing. Contrary to widespread belief, setting relative levels involves much more than just moving faders; equalizers and compressors are employed to adjust the perceived loudness of instruments in more sophisticated ways. Getting an exceptional relative level balance is an art and requires experience.
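For a sense of scale, the standard decibel relationship for amplitude shows how small a 2 dB boost is in linear terms. The helper names below are hypothetical; the formula itself is the usual 20 log10 convention for signal amplitude.

```python
import math


def db_to_gain(level_db):
    """Convert a level change in dB to a linear amplitude multiplier."""
    return 10.0 ** (level_db / 20.0)


def gain_to_db(gain):
    """Convert a linear amplitude multiplier back to dB."""
    return 20.0 * math.log10(gain)


if __name__ == "__main__":
    for change_db in (-6.0, -2.0, 2.0, 6.0):
        print(f"{change_db:+.0f} dB -> x{db_to_gain(change_db):.3f}")
```

A 2 dB boost multiplies the signal amplitude by about 1.26, which is a modest change on paper compared with the audible difference it can make.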

Levels and balance

It is worth discussing what a good relative level balance is. Some mixing engineers are experienced enough to create a mix where all the instruments sound equally loud. But only a few, mostly sparse mixes might benefit from such an approach. All other mixes usually call for some variety. In fact, trying to make everything equally loud is often a self-defeating habit that novice engineers adopt; it can be both impractical and totally unsuitable. It is worth remembering that the relative level balance of a mastered mix is likely to be tighter; raw mixes usually have a greater variety of relative levels.

Track 7.16: Level Balance Original

This track presents a sensible relative level balance between the different instruments. The following tracks demonstrate variations of that balance, arguably for the worse.

Track 7.17: Level Balance 1 (Guitars Up)

Track 7.18: Level Balance 2 (Kick Snare Up)

Track 7.19: Level Balance 3 (Vocal Up)

Track 7.20: Level Balance 4 (Bass Up)

Setting relative balance between the various instruments is usually determined by their importance. For example, the kick in a dance track is more important than any pads. Vocals are usually the most important in rock and pop music. (A very common question is: “Is anything louder than the vocals?”) Maintaining sensible relative levels usually involves gain-rides. As our song progresses, the importance of various instruments might change, as in the case of a lead guitar that is made louder during its solo section.

Steep level variations of the overall mix might also be a problem. These usually occur as a result of major arrangement changes that are very common in sections such as intros, breaks, and outros. If we do not automate levels, the overall mix level can dive or rise in a disturbing way. The level of the crunchy guitar on the first few seconds of Nirvana’s “Smells Like Teen Spirit” was gain-ridden exactly for this purpose: keeping the overall mix level balanced. A commercial example of how disturbing the absence of such balance can be is “Everything for Free” by K’s Choice. The mix just explodes after the intro and the level burst can easily make you jump off your seat. This track also provides, in my opinion, an example of vocals not being loud enough.

Levels and interest

Although notable level changes of the overall mix are undesirable, we want some degree of level variations in order to promote interest and reflect the intensity of the song. Even if we do not automate any levels, the arrangement of many productions will mean a quieter mix during the verse and a louder one during the chorus. Figure 7.3 shows the waveform of “Witness” by The Delgados, and we can clearly see the level variations between the different sections.

Figure 7.3 The level changes in this waveform clearly reveal the verses, choruses, and various breaks.

Very often, however, we spice up these inherent level changes with additional automation. While mastering engineers achieve this by automating the overall mix level, during mixdown we mostly automate individual instruments (or instrument groups). The options are endless: the kick, snare, or vocals might be brought up during the chorus; the overheads might be brought up on downbeats or whenever a crash hits (crashes often represent a quick intensity burst); we might bring down the level of reverbs to create a tighter, more focused ambiance; we can also automate the drum mix in a sinusoidal fashion with respect to the rhythm—it has been done before.

Level automation is used to preserve overall level balance, but also to break it.
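The idea of automating a level in a sinusoidal fashion with respect to the rhythm can be sketched as a gain curve locked to the tempo. Everything specific here is an assumption made for illustration: the depth of 1 dB, the choice of one cycle per beat, and the alignment of the peak with the start of each beat.

```python
import math


def sinusoidal_gain_db(time_s, bpm, depth_db=1.0, beats_per_cycle=1.0):
    """Gain offset in dB at a given time for a tempo-locked sinusoidal curve."""
    beat_length_s = 60.0 / bpm
    cycle_length_s = beat_length_s * beats_per_cycle
    phase = 2.0 * math.pi * time_s / cycle_length_s
    # A cosine peaks at the start of every cycle, so the boost lands on the beat.
    return depth_db * math.cos(phase)


if __name__ == "__main__":
    # At 120 bpm a beat lasts 0.5 s; sample the curve across one beat.
    for t in (0.0, 0.125, 0.25, 0.375, 0.5):
        print(f"{t:.3f} s: {sinusoidal_gain_db(t, bpm=120):+.2f} dB")
```

In practice such a curve would be drawn or generated as fader automation in the workstation; the point of the sketch is only that the automation period follows from the tempo.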

Levels, mood, and definition

If we consider a dance track, the level of the kick is tightly related to how well the music will affect us—a soft kick will fail to move people in a club. If a loud piano competes with the vocals in a jazz song, we might miss the emotional message of the lyrics or the beauty of the melody. We should always ask ourselves what the emotional function of each instrument is and how it can enhance or damage the mood of a song, then set the levels accordingly. In mixing, there are always alternatives—it is not always the first thing that comes to mind that works best. For example, the novice’s way to create power and aggression in a mix is to have very loud, distorted guitars. But the same degree of power and aggression can be achieved using other strategies, which will enable us to bring down the level of the masking guitars and create a better mix altogether.

Definition and levels are linked: the louder an instrument is, the more defined it is in the mix. However, an instrument with frequency deficiencies might not benefit from a level boost; it might still be undefined, just louder. Remember that bringing up the level of a specific instrument can cause a loss of definition in another.

Dynamic processing

So far, we have discussed the overall level of the mix, and the relative levels of the individual instruments it consists of. Another very important aspect of a mix relates to noticeable level changes in the actual performance of each instrument (micromixing). Inexperienced musicians can often produce a single note, or a drum hit, that is either very loud or quiet compared with the rest of the performance. Rarely do vocalists produce a level-even performance. These level variations, whether sudden or gradual, break the relative balance of a mix, and therefore we need to contain them using gain-riding, compression, or both. We can also encounter the opposite, mostly caused by over-compression, where the perceived level of a performance is too flat, making instruments sound lifeless and unnatural.

Track 7.21: Hero No Vocal Compression

In this track, the vocal compressor was bypassed. Not only is the level fluctuation of the vocal disturbing, but the relative level between the voice and other instruments alters.
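How compression contains level variations can be sketched with the static input/output curve of a simple hard-knee compressor. This is a minimal illustration, not the processing used on the track: the threshold and ratio are hypothetical values, and attack, release, and make-up gain are ignored.

```python
def compressed_level_db(input_db, threshold_db=-18.0, ratio=4.0):
    """Output level in dB for a hard-knee compressor's static curve."""
    if input_db <= threshold_db:
        # Below the threshold the signal passes unchanged.
        return input_db
    # Above the threshold, every `ratio` dB of input yields 1 dB of output.
    return threshold_db + (input_db - threshold_db) / ratio


def gain_reduction_db(input_db, threshold_db=-18.0, ratio=4.0):
    """Gain change applied by the compressor (zero or negative, in dB)."""
    return compressed_level_db(input_db, threshold_db, ratio) - input_db


if __name__ == "__main__":
    # A performance whose phrases range from -24 dB to -6 dB.
    for level in (-24.0, -18.0, -12.0, -6.0):
        print(level, "->", compressed_level_db(level))
```

With these example settings, an 18 dB spread between the quietest and loudest phrases shrinks to 9 dB. Pushing the ratio much higher or the threshold much lower flattens the performance further, which is the over-compression risk mentioned above.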

Stereo domain

Stereo image criteria

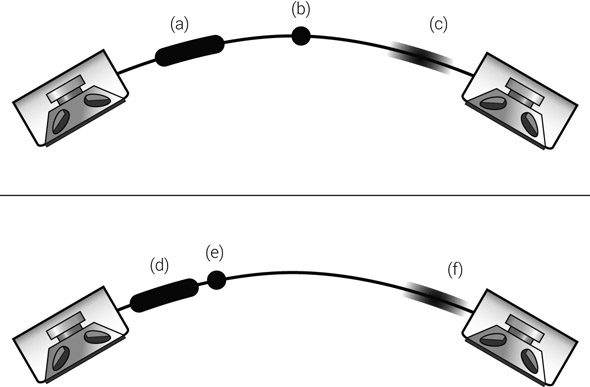

The stereo panorama is the imaginary space between the left and right speakers. When we talk about stereo image, we either talk about the stereo image of the whole mix or the stereo image of individual instruments within it—a drum kit, for example. One incorrect assumption is that stereo image is only concerned with how far to the left or right instruments are panned. But stereo image involves slightly more advanced concepts, classified like so (also illustrated in Figure 7.4):

- Localization—concerned with where the sound appears to come from on the left–right axis.

- Stereo width—how much of the stereo image the sound occupies. A drum kit can appear narrow or wide, as can a snare reverb.

- Stereo focus—how focused the sounds are. A snare can appear to be emanating from a very distinct point in the stereo image, or can be unfocused, smeared, and appear to be coming from “somewhere over there.”

- Stereo spread—how exactly the various elements are spread across the stereo image. For example, the individual drums on an overhead recording can appear to be coming mostly from the left and right, and less from the center.

To control these aspects of the stereo panorama, we use various processors and stereo effects, mainly pan pots and reverbs. As we will learn in Part II, stereo effects such as reverbs can fill gaps in the stereo panorama, but can also damage focus and localization.

Figure 7.4 Stereo criteria. Localization is concerned with our ability to discern the exact position of an instrument. For example, the position of instrument (b)/(e). Stereo width is concerned with the length of stereo space the instrument occupies. For example, (a) might be a piano wider in image than a vocalist (b). If we can’t localize precisely the position of an instrument, its image is said to be smeared (or diffused), like that of (c). The difference between (a, b, c) and (d, e, f) is the way they are spread across the stereo panorama.

Stereo balance

Balance is the main objective in the stereo domain. Above all, we are interested in the balance between the left and right areas of the mix. Having a stereo image that shifts to one side is unpleasant and can even cause problems if the mix is later cut to vinyl. Mostly, we consider the level balance between left and right—if one channel has more instruments panned to it, and if these instruments are louder than the ones panned to the other channel, the mix image will converge on one speaker. It is like having a play with most of the action happening stage left. Level imbalance between the left and right speakers is not very common in mixes, since we have a natural tendency to balance this aspect of the mix. It is worth remembering that image shifting can also happen at specific points throughout the song, for example when a new instrument is introduced. One tool that can help us in identifying image shifting is the L/R swap switch.
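A rough numerical counterpart to listening for left/right level balance is to compare the RMS level of the two channels. The sketch below assumes each channel is available as a non-empty list of samples and that neither channel is silent; the function names are hypothetical, and RMS is only a crude stand-in for perceived loudness.

```python
import math


def rms(samples):
    """Root-mean-square level of a non-empty sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def lr_balance_db(left, right):
    """Level difference in dB; positive means the left channel is louder.

    Assumes neither channel is completely silent.
    """
    return 20.0 * math.log10(rms(left) / rms(right))


def swap_channels(left, right):
    """Mimic an L/R swap switch by exchanging the two channels."""
    return right, left


if __name__ == "__main__":
    left = [0.5, -0.5, 0.5, -0.5]
    right = [0.25, -0.25, 0.25, -0.25]
    print(round(lr_balance_db(left, right), 2))
    print(round(lr_balance_db(*swap_channels(left, right)), 2))
```

Swapping the channels flips the sign of the reading, just as the image leans to the opposite speaker when the L/R swap switch is engaged on an imbalanced mix.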

There are more than a few commercial mixes that involve stereo imbalance. One example is “Give It Away” by Red Hot Chili Peppers, where John Frusciante’s guitar appears nearly fully to the right, with nothing to balance the left side of the mix. The cymbals panned to the left on Kruder & Dorfmeister’s remix for “Useless” are another example.

A slightly more common type of problem is stereo frequency imbalance. While the frequency balance between the two speakers is rarely identical, too much variation can result in image shifting. Even two viola parts played an octave apart can cause image shifting if panned symmetrically left and right (the higher-octave part will draw more attention). Stereo frequency balance has much to do with arrangement: if there is only one track of an instrument with very distinctive frequency content, it can cause imbalance when panned. We either pan such an instrument more toward the center (to minimize the resultant image shifting) or we employ a stereo effect to fill the other side of the panorama. Hi-hats are known for causing such problems, especially in recorded productions, where it makes less sense to add delay to them.

While left/right imbalance is the most noticeable of all stereo problems, stereo spread imbalance is the most common. In most mixes, we expect to have the elements spread across the stereo panorama so there are no lacking areas, much as a choir is arranged onstage. The most obvious type of stereo spread imbalance is a nearly monophonic mix, one that makes very little use of the sides, also known as an I-mix (Figure 7.5). While such a mix can be disturbing, it can sometimes be appropriate. Nearly monophonic mixes are most prevalent in hip-hop: the beat, bass, and vocals are gathered around the center, and only a few sounds, such as reverbs or backing pads, are sent to the sides. One example of such a mix is “Apocalypse” by Wyclef Jean, although not all hip-hop tracks are mixed that way.

Figure 7.5 An I-mix. The dark area between the two speakers indicates the intensity area. The white area indicates the empty area. An I-mix has most of its intensity around the center of the stereo panorama, with nothing or very little toward the extremes.

Another possible issue with stereo spread involves a mix that has a weak center, and most of its intensity is panned to the extremes (it is therefore called a V-mix) (Figure 7.6). V-mixes are usually the outcome of a creative stereo stratagem (or the result of three-state pan switches such as those found on very old consoles); otherwise, they are very rare.

Figure 7.6 A V-mix. The term describes a mix that has very little around the center, and most of the intensity is spread to the extremes.

The next type of stereo spread imbalance, and a very common one, is a combination of the previous two, known as the W-mix: a mix that has most of its elements panned hard-left, center, and hard-right (Figure 7.7). Many novices produce such mixes because they tend to pan every stereo signal to the extremes. A W-mix is not only unpleasant, but in a dense arrangement can be considered a waste of stereo space. Here again, the arrangement plays a role: if there are few instruments, we need to widen them to fill spaces in the mix, but this is not always appropriate. An example of a W-mix is the verse sections of “Hey Ya!” by OutKast; during the chorus, the stereo panorama becomes more balanced, and the change between the two is an example of how interest can originate from stereo balance variations.

Figure 7.7 A W-mix involves intensity around the extremes and center, but not much in between.

The last type of stereo spread imbalance involves a stereo panorama that is lacking in a specific area, say between 13:00 and 14:00. It is like having three adjacent players missing from a row of trumpet players. Figure 7.8 illustrates this. Identifying such a problem is easier with a wide and accurate stereo setup. This type of problem is usually solved with panning adjustments.

Figure 7.8 A mix lacking a specific area on the stereo panorama. The empty area is seen next to the right speaker.

I- and W-mixes are the most common types of stereo spread imbalance.


Track 7.22: Full Stereo Spread

The full span of the stereo panorama has been utilized in this track. The following tracks demonstrate the stereo spread issues discussed above.

Track 7.23: W-Mix

Track 7.24: I-Mix

Track 7.25: V-Mix

Track 7.26: Right Hole

In this track, there is an empty area on the right-hand side of the mix.

Stereo image and other objectives

Although it might seem unlikely, stereo image can also promote various moods. Soundscape music, chill-out, ambient, and the like tend to have a wider and less-focused stereo image compared with less relaxed genres. The same old rule applies—the less natural, the more powerful. A drum kit as wide as the stereo panorama may sound more natural than a drum kit panned around the center. In “Witness” by The Delgados, Dave Fridmann chose to have a wide drum image for the verse, then a narrow image during the more powerful chorus.

We have seen a few examples of how the stereo image can be manipulated in order to achieve interest. Beethoven achieved this by shifting identical phrases across the orchestra, while in “Hey Ya!” the stereo spread changes between the verse and the chorus. It is worth listening to Nirvana’s “Smells Like Teen Spirit” and noting the panning strategy and the change in stereo image between the interlude and the chorus. Other techniques include panning automation, auto-pans, stereo delays, and so forth. We will see in Chapter 14 how panning can also improve definition.

Depth

For a few people, the fact that a mix involves a front/back perspective comes as a surprise. Various mixing tools, notably reverbs, enable us to create a sense of depth in our mix, a vital extension to our sound stage and to our ability to position instruments within it.

All depth considerations are relative. We never talk about how many meters away an instrument is; we talk about it being in front of or behind another instrument. The depth axis of our mix starts with the closest sound and finishes with the farthest away. A mix where instruments are very close can be considered tight, and a mix that has an extended depth can be considered spacious. This choice can be used to reflect the mood of the musical piece.

The depth field is an outsider when it comes to our standard mixing objectives. We rarely talk about a balanced depth field since we do not aim to have our instruments equally spaced in this domain. A coherent depth field is a much more likely objective. Also, in most cases, the farther away an instrument is, the less defined it becomes; crafting the depth field while retaining the definition of the individual instruments can be a challenge. A classical concert with the musicians walking back and forth around the stage would be chaotic. Likewise, depth variations within a mix are uncommon; when instruments do move back and forth, it is usually as a creative effect or in order to promote importance (just as a trumpet player would walk to the front of the stage during a solo).


Track 7.27: Depth Demo

This track involves three instruments in a spatial arrangement. The lead is dry and foremost, the congas are positioned behind it, and the flute-like synth is placed way at the back. The depth perception in this track should be maintained whether listening on the central plane between the speakers, at random points in the room, or even outside the door.

Track 7.28: No Depth Demo

This track is the same as the previous arrangement, but excludes the mix elements that contributed to depth perception.

Plugin: Audio Ease Altiverb

Percussion: Toontrack EZdrummer

We are used to sonic depth in nature and we want our mixes to recreate this sense. The main objective is to create something natural or otherwise artificial but appealing. Our decisions with regard to instrument placement are largely determined by importance and what we are familiar with in nature. We expect the vocals in most rock productions to be the closest, just as a singer is frontmost on stage. But in many electronic dance tracks, the kick will be in front and the vocals will appear behind it, sometimes even way behind it. Likewise, we might want all drum kit components to be relatively close to one another on the depth field because this is how they are organized in real life.

Every two-speaker system has an integral depth: sounds coming from the sides appear closer than sounds coming from the center. As demonstrated in Figure 7.9, the bending of the front image changes between speaker models, and when we toggle between two sets of speakers our whole mix might appear to shift forward or backward. The sound stage created by a stereo setup has the shape of a skewed rectangle, and its limits are set by the angle between our head and the speakers (although we can create an out-of-speaker effect using phase tricks). As illustrated in Figure 7.10, the wider the angle between our head and the speakers, the wider the sound stage will become, especially for distant instruments.

Figure 7.9 The perceived frontline of the mix appears in the stereo image as an arch, the shape of which varies between speaker models.

Figure 7.10 The width of the sound stage will change as our head moves forward or backward.
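
The relationship between head position and the angle to the speakers is plain geometry and can be checked with a few lines of Python. This is only a sketch under stated assumptions: the listener is assumed to sit on the central plane between the speakers, and the function name `subtended_angle_deg` and its parameters are hypothetical, introduced here for illustration.

```python
import math


def subtended_angle_deg(speaker_spacing_m: float, listener_distance_m: float) -> float:
    """Angle at the listener's head between the two speakers, in degrees.

    speaker_spacing_m   : distance between the two speakers
    listener_distance_m : distance from the head to the midpoint of the line
                          joining the speakers (listener assumed to be centered)
    """
    if speaker_spacing_m <= 0 or listener_distance_m <= 0:
        raise ValueError("spacing and distance must be positive")
    half_angle = math.atan((speaker_spacing_m / 2.0) / listener_distance_m)
    return math.degrees(2.0 * half_angle)


# Moving the head forward (a smaller distance) widens the angle,
# and moving it backward narrows the angle.
for distance in (1.0, 1.5, 2.0, 3.0):
    print(f"{distance:.1f} m -> {subtended_angle_deg(2.0, distance):.1f} degrees")
```

With speakers 2 m apart, the angle is 60 degrees when the head completes an equilateral triangle with them, and it grows as the head moves closer, which corresponds to the wider sound stage shown in Figure 7.10.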


These two tracks demonstrate the integral depth of a two-speaker system. Pink noise and a synth are swept in a cyclic fashion between left and right. When listening on the central plane between properly positioned monitors, sounds should appear to be closest when they reach the extremes, and farther away as they approach the center. It should also be possible to imagine the actual depth curve. Listening to these tracks with eyes shut might make the apparent locations easier to discern.

Track 7.29: Swept Pink Noise

Track 7.30: Swept Synth

These tracks were produced using Logic, with a –3 dB pan law (which will be explained later).
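
As a rough preview, one common way to obtain a 3 dB drop at the center is a sine/cosine (constant-power) pan law. The Python sketch below shows that general technique only; it is an assumption for illustration and does not claim to reproduce how Logic implements its pan law. The function name `constant_power_pan` is hypothetical.

```python
import math
from typing import Tuple


def constant_power_pan(pan: float) -> Tuple[float, float]:
    """Return (left_gain, right_gain) for a pan position.

    pan runs from -1.0 (hard left) through 0.0 (center) to +1.0 (hard right).
    The gains follow a sine/cosine curve, so left**2 + right**2 stays at 1.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and +1.0")
    angle = (pan + 1.0) * math.pi / 4.0   # maps pan to 0 .. pi/2
    return math.cos(angle), math.sin(angle)


def to_db(gain: float) -> float:
    """Convert a positive linear gain to decibels."""
    return 20.0 * math.log10(gain)


left, right = constant_power_pan(0.0)
print(f"center: L = {to_db(left):.2f} dB, R = {to_db(right):.2f} dB")
```

At the center position each channel sits roughly 3 dB below its hard-panned level, which is where the name of the pan law comes from.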