2.7. Vector Spaces and Modules

Vector spaces and linear transformations between them are the central objects of study in linear algebra. In this section, we investigate the basic properties of vector spaces. We also generalize the concept of vector spaces to get another useful class of objects called modules. A module that also carries a (compatible) ring structure is referred to as an algebra. The study of algebras over fields (or, more generally, over rings) is important in commutative algebra, algebraic geometry and algebraic number theory.

2.7.1. Vector Spaces

Unless otherwise specified, K denotes a field in this section.

Definition 2.42.

Example 2.12.


Corollary 2.8.

Let V be a K-vector space. For every

Proof Easy verification.

Definition 2.43.

Let V be a vector space over K and S a subset of V. We say that S is a generating set or a set of generators of V (over K), or that S generates V (over K), if every element

Example 2.13.


Definition 2.44.

If $0 \in S$, then S is linearly dependent, since $a \cdot 0 = 0$ for any $a \in K$, in particular for $a = 1$. One can easily check that all the generating sets of Example 2.13 are linearly independent too. This is not a mere coincidence, as the following result demonstrates.

Theorem 2.25.

A subset S of a K-vector space V is a minimal generating set for V if and only if S is a maximal linearly independent subset of V.

Proof [if] Given a maximal linearly independent subset S of V, we first show that S is a generating set for V. Take any non-zero

[only if] Given a minimal generating set S of V, we first show that S is linearly independent. Assume not, that is, $a_1x_1 + \cdots + a_nx_n = 0$ for some

Definition 2.45.

Let V be a K-vector space. A minimal generating set S of V is called a basis of V over K (or a K-basis of V). By Theorem 2.25, S is a basis of V if and only if S is a maximal linearly independent subset of V. Equivalently, S is a basis of V if and only if S is a generating set of V and is linearly independent.

Any element of a vector space can be written uniquely as a finite linear combination of elements of a basis: if an element had two different such representations, their difference would be a non-trivial linear relation among finitely many basis elements, contradicting linear independence.

A K-vector space V may have many K-bases. For example, the elements $1, aX + b, (aX + b)^2, \ldots$ form a K-basis of K[X] for any $a, b \in K$ with $a \neq 0$. What all K-bases of a given K-vector space V have in common is their cardinality[8], as shown in Theorem 2.26.
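As a small illustration (a sketch of our own, not part of the text), the coordinates of a polynomial with respect to the basis $1, aX + b, (aX + b)^2, \ldots$ can be computed by substituting $X = (Y - b)/a$ and reading off the coefficients in Y. The Python code below assumes $K = \mathbb{Q}$, with exact arithmetic via `fractions.Fraction`; the helper names are hypothetical.

```python
from fractions import Fraction

# Polynomials are lists of coefficients, lowest degree first: [c0, c1, c2] = c0 + c1*X + c2*X^2.

def poly_mul(p, q):
    """Product of two polynomials."""
    if not p or not q:
        return []
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out

def poly_add(p, q):
    """Sum of two polynomials."""
    n = max(len(p), len(q))
    return [(p[i] if i < len(p) else 0) + (q[i] if i < len(q) else 0) for i in range(n)]

def compose(p, g):
    """Return p(g(X)) using Horner's rule."""
    result = []
    for c in reversed(p):
        result = poly_add(poly_mul(result, g), [Fraction(c)])
    return result

def coords_in_shifted_basis(p, a, b):
    """Coefficients d_i with p(X) = sum_i d_i * (aX + b)^i, for a != 0."""
    a, b = Fraction(a), Fraction(b)
    if a == 0:
        raise ValueError("a must be non-zero")
    # With Y = aX + b, i.e. X = (Y - b)/a, the coefficients of p((Y - b)/a) in Y are the d_i.
    return compose(p, [-b / a, 1 / a])

d = coords_in_shifted_basis([1, 2, 3], a=2, b=1)  # p(X) = 1 + 2X + 3X^2
print(d)  # [Fraction(3, 4), Fraction(-1, 2), Fraction(3, 4)]
# Expanding sum_i d_i * (2X + 1)^i gives back p.
assert compose(d, [1, 2]) == [1, 2, 3]
```

Since the family is a basis, these coordinates are unique; the final assertion only confirms that they reproduce p.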

[8] Two sets $S_1$ and $S_2$ (finite or not) are said to have the same cardinality if there exists a bijective map $S_1 \to S_2$.

For the sake of simplicity, we sometimes assume that V is a finitely generated K-vector space. This assumption greatly simplifies certain proofs, but it is important to stress that, unless otherwise stated, all the results remain valid without it. For example, every vector space has a basis. For finitely generated vector spaces this is easy to prove, whereas the general case requires a less elementary argument (typically based on Zorn's lemma). (A possible proof follows from Exercise 2.63 with U = {0}.)

Theorem 2.26.

Let V be a K-vector space. Then any two K-bases of V have the same cardinality.

Proof We assume that V is finitely generated. Let $S = \{x_1, \ldots, x_n\}$ be a minimal finite generating set, that is, a basis, of V. Let T be another basis of V. Assume that $m := \#T > n$. (We might even have $m = \infty$.) We can choose distinct elements

Theorem 2.26 holds even when V is not finitely generated. We omit the proof for this case here.

Definition 2.46.

For example, $\dim_K K^n = n$ and $\dim_K K[X] = \infty$.

Definition 2.47.

Let V be a K-vector space. A subgroup U of V that is closed under the scalar multiplication of V is again a K-vector space and is called a (vector) subspace of V. In this case, we have $\dim_K U \leq \dim_K V$ (Exercise 2.63).

Example 2.14.

Let V be a vector space over K.

Definition 2.48.

Let V and W be K-vector spaces. A map $f : V \to W$ is called a homomorphism (of vector spaces) or a linear transformation or a linear map over K, if $f(ax + by) = af(x) + bf(y)$ for all $a, b \in K$ and $x, y \in V$.

Theorem 2.27.

Let V and W be K-vector spaces. Then V and W are isomorphic if and only if $\dim_K V = \dim_K W$.

Proof If $\dim_K V = \dim_K W$ and S and T are bases of V and W respectively, then there exists a bijection $f : S \to T$. One can extend f to a linear map

Corollary 2.9.

A K-vector space V with $n := \dim_K V < \infty$ is isomorphic to $K^n$.

Let V be a K-vector space and U a subspace. As in Section 2.3, we construct the quotient group V/U. This group can be given a K-vector space structure under the scalar multiplication map $a(x + U) := ax + U$, $a \in K$, $x \in V$. If $T \subseteq V$ is such that the residue classes of the elements of T form a K-basis of V/U and if S is a K-basis of U, then it is easy to see that $S \cup T$ is a K-basis of V; moreover, $S \cap T = \emptyset$, since no element of T lies in U. In particular,

Equation 2.2

$$\dim_K V = \dim_K U + \dim_K (V/U)$$

For a K-linear map $f : V \to W$, the set $\{x \in V \mid f(x) = 0\}$ is called the kernel Ker f of f, and the set $\{f(x) \mid x \in V\}$ is called the image Im f of f. We have the isomorphism theorem for vector spaces:

Theorem 2.28. Isomorphism theorem

Ker f is a subspace of V, Im f is a subspace of W, and $V/\operatorname{Ker} f \cong \operatorname{Im} f$.

Proof Similar to Theorem 2.3 and Theorem 2.9.

Definition 2.49.

For

Theorem 2.29.

$\operatorname{Rank} f + \operatorname{Null} f = \dim_K V$ for any
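Assuming, as is standard, that Rank f and Null f denote $\dim_K \operatorname{Im} f$ and $\dim_K \operatorname{Ker} f$, the following Python sketch (our own illustration, with $K = \mathbb{Q}$) checks the theorem for a map $f : \mathbb{Q}^n \to \mathbb{Q}^m$, $x \mapsto Ax$. Gauss-Jordan elimination over exact fractions gives the rank as the number of pivot columns and a kernel basis with one vector per free column; the function names are hypothetical.

```python
from fractions import Fraction

def rref(rows):
    """Gauss-Jordan elimination over Q. Returns (reduced matrix, list of pivot columns)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        # Look for a row at or below r with a non-zero entry in column c.
        piv = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if piv is None:
            continue  # column c is free
        m[r], m[piv] = m[piv], m[r]
        pivot_val = m[r][c]
        m[r] = [x / pivot_val for x in m[r]]
        # Clear column c in every other row.
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                factor = m[i][c]
                m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots

def kernel_basis(rows):
    """Basis of Ker f for f(x) = A x: one basis vector per free (non-pivot) column."""
    m, pivots = rref(rows)
    n = len(rows[0])
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        x = [Fraction(0)] * n
        x[free] = Fraction(1)
        for i, p in enumerate(pivots):
            x[p] = -m[i][free]
        basis.append(x)
    return basis

A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]  # f : Q^3 -> Q^3, x |-> A x
rank = len(rref(A)[1])   # dim Im f = number of pivot columns
ker = kernel_basis(A)
nullity = len(ker)       # dim Ker f
assert all(sum(a * x for a, x in zip(row, v)) == 0 for row in A for v in ker)
print(rank, nullity)  # 2 1
assert rank + nullity == len(A[0])  # Rank f + Null f = dim V
```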

*2.7.2. Modules

If we drop the requirement that K be a field and allow K to be an arbitrary ring, then the analogue of a vector space over K is called a K-module. More specifically, we have:

Definition 2.50.

Let R be a ring. A module over R (or an R-module) is an (additively written) Abelian group M together with a multiplication map $\cdot : R \times M \to M$ called the scalar multiplication map, such that for every a,

Example 2.15.

Modules are a powerful generalization of vector spaces. Any result we prove for modules is equally valid for vector spaces, ideals and Abelian groups, since these are special kinds of modules. On the other hand, since we do not require the ring R to be a field, certain results for vector spaces do not carry over to all modules.

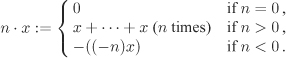

It is easy to see that Corollary 2.8 continues to hold for modules. An R-submodule of an R-module M is a subgroup of M that is closed under the scalar multiplication of M. For a subset $S \subseteq M$, the set of all finite linear combinations of the form $a_1x_1 + \cdots + a_nx_n$ with $a_i \in R$ and $x_i \in S$ is an R-submodule N of M, denoted by RS. We say that N is generated by S (or by the elements of S). If S is finite, then N is said to be finitely generated. A (sub)module generated by a single element is called cyclic. It is important to note that, unlike for vector spaces, the cardinality of a minimal generating set of a module is not necessarily unique. (See Exercise 2.68 for an example.) Moreover, given a minimal generating set S of M, there may be more than one way of writing an element of M as a finite linear combination of elements of S. For example, if $R = M = \mathbb{Z}$ and $S = \{2, 3\}$, then $1 = (-1) \cdot 2 + 1 \cdot 3 = 2 \cdot 2 + (-1) \cdot 3$. The nice theory of dimensions developed in connection with vector spaces does not apply to modules.
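Both phenomena can be checked for the $\mathbb{Z}$-module $\mathbb{Z}$ with a short Python sketch (our own illustration). It relies on the standard fact that a finite set of integers generates $\mathbb{Z}$ exactly when its gcd is 1, and it tests minimality by dropping one element at a time, which suffices because a subset of a non-generating set cannot generate. The helper names are hypothetical.

```python
from functools import reduce
from itertools import product
from math import gcd

def generates_Z(S):
    """A finite set S of integers generates Z as a Z-module iff gcd(S) = 1 (Bezout)."""
    return reduce(gcd, S, 0) == 1

def is_minimal_generating_set(S):
    """S generates Z, but S with any one element removed does not."""
    return generates_Z(S) and all(not generates_Z(S - {s}) for s in S)

# Minimal generating sets of Z of different cardinalities.
for S in ({1}, {2, 3}, {6, 10, 15}):
    print(sorted(S), is_minimal_generating_set(S))  # each line ends with True

# Two different ways of writing 1 as a*2 + b*3 (coefficients searched in -3..3).
reps = [(a, b) for a, b in product(range(-3, 4), repeat=2) if 2 * a + 3 * b == 1]
print(reps)  # [(-1, 1), (2, -1)]
```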

For an R-submodule N of M, the Abelian group M/N is given an R-module structure by the scalar multiplication map a(x + N) := ax + N. This module is called the quotient module of M by N.

For R-modules M and N, an R-linear map or an R-module homomorphism (from M to N) is defined as a map $f : M \to N$ with $f(ax + by) = af(x) + bf(y)$ for all $a, b \in R$ and $x, y \in M$ (or equivalently with $f(x + y) = f(x) + f(y)$ and $f(ax) = af(x)$ for all $a \in R$ and $x, y \in M$). An isomorphism, an endomorphism and an automorphism are defined analogously to the case of vector spaces. The set of all (R-module) homomorphisms $M \to N$ is denoted by $\operatorname{Hom}_R(M, N)$ and the set of all (R-module) endomorphisms of M is denoted by $\operatorname{End}_R M$. These sets are again R-modules under the definitions $(f + g)(x) := f(x) + g(x)$ and $(af)(x) := af(x)$ for all $a \in R$ and $x \in M$ (and f, g in $\operatorname{Hom}_R(M, N)$ or $\operatorname{End}_R M$).

The kernel and image of an R-linear map $f : M \to N$ are defined as the sets $\operatorname{Ker} f := \{x \in M \mid f(x) = 0\}$ and $\operatorname{Im} f := \{f(x) \mid x \in M\}$. With these notations we have the isomorphism theorem for modules:

Theorem 2.30. Isomorphism theorem

Ker f and Im f are submodules of M and N respectively, and $M/\operatorname{Ker} f \cong \operatorname{Im} f$.

For an R-module M and an ideal $\mathfrak{a}$ of R, the set $\mathfrak{a}M$ consisting of all finite linear combinations $a_1x_1 + \cdots + a_nx_n$ with $a_i \in \mathfrak{a}$ and $x_i \in M$ is a submodule of M. On the other hand, for a submodule N of M the set $(M : N) := \{a \in R \mid aM \subseteq N\}$ is an ideal of R. In particular, the ideal (M : 0) is called the annihilator of M and is denoted as $\operatorname{Ann}_R M$ (or as Ann M). For any ideal $\mathfrak{a} \subseteq \operatorname{Ann}_R M$, one can view M as an $R/\mathfrak{a}$-module under the map $(a + \mathfrak{a})x := ax$. One can easily check that this map is well-defined, that is, the product $(a + \mathfrak{a})x$ is independent of the choice of the representative a of the equivalence class $a + \mathfrak{a}$: if $a - a' \in \mathfrak{a} \subseteq \operatorname{Ann}_R M$, then $ax - a'x = (a - a')x = 0$.
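To make the annihilator concrete, here is a small Python sketch (our own illustration, not from the text) for the $\mathbb{Z}$-module $M = \mathbb{Z}/4\mathbb{Z} \times \mathbb{Z}/6\mathbb{Z}$. It finds the positive generator of $\operatorname{Ann}_{\mathbb{Z}} M$ by brute force and checks that the induced action of $\mathbb{Z}/12\mathbb{Z}$ on M does not depend on representatives. The constants `MODULI` and `ELEMENTS` and the helpers are hypothetical choices for this example.

```python
from itertools import product

MODULI = (4, 6)  # M = Z/4Z x Z/6Z, viewed as a Z-module (illustrative choice)
ELEMENTS = list(product(*(range(m) for m in MODULI)))  # all elements of M

def act(a, x):
    """Scalar multiplication a*x for an integer a and x in M."""
    return tuple((a * xi) % m for xi, m in zip(x, MODULI))

def annihilates(a):
    """True iff a*x = 0 for every x in M, i.e. a lies in Ann_Z M."""
    zero = tuple(0 for _ in MODULI)
    return all(act(a, x) == zero for x in ELEMENTS)

# Ann_Z M is an ideal of Z, hence of the form gZ; find its positive generator g.
g = next(a for a in range(1, 10**6) if annihilates(a))
print(g)  # 12, which is lcm(4, 6)

# M is a Z/gZ-module via (a + gZ)x := ax; the result does not depend on the representative.
assert all(act(a, x) == act(a + g, x) == act(a - 5 * g, x) for a in range(g) for x in ELEMENTS)
```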

Definition 2.51.

A free module M over a ring R is defined to be a direct sum

Any vector space is a free module (Theorem 2.27 and Corollary 2.9). The Abelian groups $\mathbb{Z}/n\mathbb{Z}$, $n \geq 2$, are not free (as $\mathbb{Z}$-modules), since every non-zero free $\mathbb{Z}$-module is infinite.

Theorem 2.31. Structure theorem for finitely generated modules

M is a finitely generated R-module if and only if M is a quotient of a free module $R^n$ for some $n \in \mathbb{N}$.

Proof [if] The free module $R^n$ has a canonical generating set $e_1, \ldots, e_n$, where $e_i = (0, \ldots, 0, 1, 0, \ldots, 0)$ (1 in the i-th position). If $M = R^n/N$, then the equivalence classes $e_i + N$, $i = 1, \ldots, n$, constitute a finite set of generators of M.

[only if] If $x_1, \ldots, x_n$ generate M, then the R-linear map $f : R^n \to M$ defined by $(a_1, \ldots, a_n) \mapsto a_1x_1 + \cdots + a_nx_n$ is surjective. Hence, by the isomorphism theorem, $M \cong R^n/\operatorname{Ker} f$.
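For a concrete instance of the [only if] direction, the Python sketch below (our own example) takes the $\mathbb{Z}$-module $M = \mathbb{Z}/6\mathbb{Z}$ with generators $x_1 = 2$, $x_2 = 3$, builds $f : \mathbb{Z}^2 \to M$, and checks on a finite window of $\mathbb{Z}^2$ that f is surjective, that $\operatorname{Ker} f = 3\mathbb{Z} \times 2\mathbb{Z}$, and that $f(a) = f(b)$ exactly when $a - b \in \operatorname{Ker} f$. A finite window is only a sanity check, not a proof; `N` and `GENS` are illustrative constants.

```python
from itertools import product

N = 6          # M = Z/6Z, a Z-module (illustrative choice)
GENS = (2, 3)  # generators x1 = 2, x2 = 3 of M, since 1 = (-1)*2 + 1*3

def f(a):
    """The Z-linear map Z^2 -> M, (a1, a2) |-> a1*x1 + a2*x2."""
    return sum(ai * xi for ai, xi in zip(a, GENS)) % N

def in_kernel(a):
    return f(a) == 0

box = list(product(range(-6, 7), repeat=2))  # a finite window of Z^2
image = {f(a) for a in box}
assert image == set(range(N))  # f is surjective
# On this window, Ker f is exactly {(a1, a2) : 3 | a1 and 2 | a2} = 3Z x 2Z.
assert all(in_kernel(a) == (a[0] % 3 == 0 and a[1] % 2 == 0) for a in box)
# f(a) = f(b) iff a - b lies in Ker f, so a + Ker f |-> f(a) is a bijection Z^2/Ker f -> M.
assert all((f(a) == f(b)) == in_kernel((a[0] - b[0], a[1] - b[1])) for a in box for b in box)
print(sorted(image))  # [0, 1, 2, 3, 4, 5]
```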

**2.7.3. Algebras

Let $\varphi : R \to A$ be a homomorphism of rings. The ring A can be given an R-module structure with the multiplication map $R \times A \to A$, $(a, x) \mapsto \varphi(a)x$ for $a \in R$ and $x \in A$. This R-module structure of A is compatible with the ring structure of A in the sense that for every $a, b \in R$ and $x, y \in A$ one has $(ax)(by) = (ab)(xy)$.

Conversely, if a ring A has an R-module structure with $(ax)(by) = (ab)(xy)$ for every $a, b \in R$ and $x, y \in A$, then there is a unique ring homomorphism $R \to A$ taking $a \mapsto a \cdot 1$ (where 1 denotes the identity of A). This motivates the following definition.
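As an illustration (our choice of example, not from the text), take $R = \mathbb{Q}$ and $A = \mathbb{Q}[\sqrt{2}]$, storing $u + v\sqrt{2}$ as a pair (u, v) of fractions, with $\varphi$ the inclusion. The Python sketch defines the induced scalar multiplication $ax := \varphi(a)x$, checks the compatibility $(ax)(by) = (ab)(xy)$ on a small sample, and confirms that $a \mapsto a \cdot 1$ gives back $\varphi$. A finite sample is a sanity check only; the helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product

def mul(x, y):
    """Product in A = Q[sqrt 2]; the pair (u, v) stands for u + v*sqrt(2)."""
    (u1, v1), (u2, v2) = x, y
    return (u1 * u2 + 2 * v1 * v2, u1 * v2 + u2 * v1)

def phi(a):
    """Structure homomorphism Q -> A, a |-> a + 0*sqrt(2)."""
    return (Fraction(a), Fraction(0))

def smul(a, x):
    """Scalar multiplication a.x := phi(a) x induced by phi."""
    return mul(phi(a), x)

ONE = (Fraction(1), Fraction(0))
scalars = [Fraction(-3), Fraction(1, 2), Fraction(0), Fraction(7, 3)]
elems = list(product(scalars, repeat=2))  # 16 sample elements of A

# Compatibility of the module structure with the ring structure.
for a, b in product(scalars, repeat=2):
    for x, y in product(elems, repeat=2):
        assert mul(smul(a, x), smul(b, y)) == smul(a * b, mul(x, y))

# Conversely, a |-> a.1 recovers phi.
assert all(smul(a, ONE) == phi(a) for a in scalars)
```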

Definition 2.52.

Let R be a ring. An algebra over R or an R-algebra is a ring A together with a ring homomorphism

Example 2.16.

Let R be a ring.

An R-algebra A is an R-module in which elements can also be multiplied with one another. Exploiting this additional structure leads to the following concept of algebra generators.

Definition 2.53.

Example 2.17.

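One may proceed to define kernels and images of R-algebra homomorphisms and to formulate and prove the isomorphism theorem for R-algebras. We leave the details to the reader. We only note that algebra homomorphisms are essentially ring homomorphisms that commute with the structure homomorphisms, that is, ring homomorphisms $g : A \to B$ with $g \circ \varphi_A = \varphi_B$, where $\varphi_A : R \to A$ and $\varphi_B : R \to B$ denote the structure homomorphisms of A and B.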

Theorem 2.32.

A ring A is a finitely generated R-algebra if and only if A is a quotient of a polynomial algebra $R[X_1, \ldots, X_n]$ for some $n \in \mathbb{N}$.

Proof [if] Immediate from Example 2.17.

[only if] Let $A = R[x_1, \ldots, x_n]$. The map $\eta : R[X_1, \ldots, X_n] \to A$ that takes $f(X_1, \ldots, X_n) \mapsto f(x_1, \ldots, x_n)$ is a surjective R-algebra homomorphism. By the isomorphism theorem, one has the isomorphism $A \cong R[X_1, \ldots, X_n]/\operatorname{Ker} \eta$ of R-algebras.
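For instance (our own example), the Gaussian integers $\mathbb{Z}[i]$ form a $\mathbb{Z}$-algebra generated by the single element i. The Python sketch below implements the evaluation map $\eta : \mathbb{Z}[X] \to \mathbb{Z}[i]$, $f(X) \mapsto f(i)$, with Gaussian integers stored as integer pairs, checks on sample polynomials that $\eta$ respects sums and products, and shows that $X^2 + 1 \in \operatorname{Ker} \eta$. (In fact $\operatorname{Ker} \eta = \langle X^2 + 1 \rangle$, so $\mathbb{Z}[i] \cong \mathbb{Z}[X]/\langle X^2 + 1 \rangle$; the code only exhibits one kernel element.) All helper names are hypothetical.

```python
from itertools import product

# Gaussian integers u + v*i are stored as integer pairs (u, v).
def gadd(x, y):
    return (x[0] + y[0], x[1] + y[1])

def gmul(x, y):
    return (x[0] * y[0] - x[1] * y[1], x[0] * y[1] + x[1] * y[0])

# Integer polynomials are coefficient lists, lowest degree first.
def padd(p, q):
    n = max(len(p), len(q))
    return [(p[k] if k < len(p) else 0) + (q[k] if k < len(q) else 0) for k in range(n)]

def pmul(p, q):
    out = [0] * (len(p) + len(q) - 1)
    for j, a in enumerate(p):
        for k, b in enumerate(q):
            out[j + k] += a * b
    return out

def eta(p):
    """Evaluation map Z[X] -> Z[i], f(X) |-> f(i), computed by Horner's rule."""
    u, v = 0, 0
    for c in reversed(p):
        u, v = c - v, u  # (u + v*i) * i + c = (c - v) + u*i
    return (u, v)

polys = [list(c) for c in product(range(-2, 3), repeat=3)]  # degree <= 2, small coefficients
assert all(eta(padd(p, q)) == gadd(eta(p), eta(q)) for p in polys for q in polys)
assert all(eta(pmul(p, q)) == gmul(eta(p), eta(q)) for p in polys for q in polys)
print(eta([1, 0, 1]))  # (0, 0): X^2 + 1 lies in Ker(eta)
print(eta([3, -4]))    # (3, -4): eta is onto, since u + v*i = eta(u + vX)
```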

This theorem suggests that for the study of finitely generated algebras it suffices to investigate only the polynomial algebras and their quotients.

Exercise Set 2.7

| 2.63 | Let V be a K-vector space, U a subspace of V, and T an arbitrary K-basis of U. Show that there is a K-basis of V that contains T. [H] |

| 2.64 |

|

| 2.65 | Let V and W be K-vector spaces and f : V → W a K-linear map. Show that f is uniquely determined by the images f(x), |

| 2.66 | Let V and W be K-vector spaces. Check that $\operatorname{Hom}_K(V, W)$ is a vector space over K. If V and W are finite-dimensional, show that $\dim_K(\operatorname{Hom}_K(V, W)) = (\dim_K V)(\dim_K W)$; in particular, for W = K, the space $\operatorname{Hom}_K(V, K)$ is then isomorphic to V. The space $\operatorname{Hom}_K(V, K)$ is called the dual space of V. |

| 2.67 | Let V and W be m- and n-dimensional K-vector spaces, $S = \{x_1, \ldots, x_m\}$ a K-basis of V, $T = \{y_1, \ldots, y_n\}$ a K-basis of W, and $f : V \to W$ a K-linear map. For each $i = 1, \ldots, m$, write $f(x_i) = a_{i1}y_1 + \cdots + a_{in}y_n$,

Let V1, V2, V3 be K-vector spaces, f, f1,

(Remark: This exercise shows that linear transformations between finite-dimensional vector spaces can be described in terms of matrices.) |

| 2.68 | Show that for every |

| 2.69 | Let M be an R-module. A subset S of M is called a basis of M, if S generates M and is linearly independent over R in the sense that |

| 2.70 | We define the rank of a finitely generated R-module M as

RankR M := min{#S | M is generated by S}. If N is a submodule of M, show that RankR M ≤ RankR N + RankR(M/N). Give an example where the strict inequality holds. |

| 2.71 | Let M be an R-module. An element |

| 2.72 | Show that:

This shows that the converse of Exercise 2.71(c) is not true in general. |