The Taylor series formula for the cosine(x) function is as follows:

Write, compile, and run this serial cosine(x) code (see the following code, which shows serial cosine(x) code) to get a feel of the program:

/***********************************

* Serial cosine(x)code. *

* *

* Taylor series representation *

* of the trigonometric cosine(x).*

* *

* Author: Carlos R. Morrison *

* *

* Date: 1/10/2017 *

**********************************/

#include <math.h>

#include <stdio.h>

int main(void)

{

unsigned int j;

unsigned long int k;

long long int B,D;

int num_loops = 17;

float y;

double x;

double sum0=0,A,C,E;

/******************************************************/

printf("

");

printf("Enter angle(deg.):

");

printf("

");

scanf("%f",&y);

if(y <= 180.0)

{

x = y*(M_PI/180.0);

}

else

x = -(360.0-y)*(M_PI/180.0);

/******************************************************/

sum0 = 0;

for(k = 0; k < num_loops; k++)

{

A = (double)pow(-1,k);// (-1^k)

B = 2*k;

C = (double)pow(x,B);// x^2k

D = 1;

for(j=1; j <= B; j++)// (2k!)

{

D *= j;

}

E = (A*C)/(double)D;

sum0 += E;

}

printf("

");

printf(" %.1f deg. = %.3f rads

", y, x);

printf("Cosine(%.1f) = %.4f

", y, sum0);

return 0;

}

Next, write, compile, and run the MPI version of the preceding serial cosine(x) code (see MPI cosine(x) code below), using one processor from each of the 16 nodes.

/**********************************

* MPI cosine(x) code. *

* *

* Taylor series representation *

* of the trigonometric cosine(x).*

* *

* Author: Carlos R. Morrison *

* *

* Date: 1/10/2017 *

**********************************/

#include<mpi.h>// (Open)MPI library

#include<math.h>// math library

#include<stdio.h>// Standard Input/Output library

int main(int argc, char*argv[])

{

long long int total_iter,B,D;

int n = 17,rank,length,numprocs,i,j;

unsigned long int k;

double sum,sum0,rank_integral,A,C,E;

float y,x;

char hostname[MPI_MAX_PROCESSOR_NAME];

MPI_Init(&argc, &argv); // initiates MPI

MPI_Comm_size(MPI_COMM_WORLD, &numprocs); // acquire number of processes

MPI_Comm_rank(MPI_COMM_WORLD, &rank); // acquire current process id

MPI_Get_processor_name(hostname, &length); // acquire hostname

if (rank == 0)

{

printf("

");

printf("#######################################################");

printf("

");

printf("*** Number of processes: %d

",numprocs);

printf("*** processing capacity: %.1f GHz.

",numprocs*1.2);

printf("

");

printf("Master node name: %s

", hostname);

printf("

");

printf("Enter angle(deg.):

");

printf("

");

scanf("%f",&y);

if(y <= 180.0)

{

x = y*(M_PI/180.0);

}

else

x = -(360.0-y)*(M_PI/180.0);

}

// broadcast to all processes, the number of segments you want

MPI_Bcast(&n, 1, MPI_INT, 0, MPI_COMM_WORLD);

MPI_Bcast(&x, 1, MPI_INT, 0, MPI_COMM_WORLD);

// this loop increments the maximum number of iterations, thus providing

// additional work for testing computational speed of the processors

//for(total_iter = 1; total_iter < n; total_iter++)

{

sum0 = 0.0;

// for(i = rank + 1; i <= total_iter; i += numprocs)

for(i = rank + 1; i <= n; i += numprocs)

{

k = (i-1);

A = (double)pow(-1,k);// (-1^k)

B = 2*k;

C = (double)pow(x,B);// x^2k

D = 1;

for(j=1; j <= B; j++)// (2k!)

{

D *= j;

}

E = (A*C)/(double)D;

sum0 += E;

}

rank_integral = sum0;// Partial sum for a given rank

// collect and add the partial sum0 values from all processes

MPI_Reduce(&rank_integral, &sum, 1, MPI_DOUBLE,MPI_SUM, 0,

MPI_COMM_WORLD);

}// End of for(total_iter = 1; total_iter < n; total_iter++)

if(rank == 0)

{

printf("

");

printf(" %.1f deg. = %.3f rads

", y, x);

printf("Cosine(%.3f) = %.3f

", x, sum);

}

// clean up, done with MPI

MPI_Finalize();

return 0;

}// End of int main(int argc, char*argv[])

The following is the MPI cosine(x) run:

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

0

0.0 deg. = 0.000 rads

Cosine(0.000) = 1.000

real 0m5.309s

user 0m1.280s

sys 0m0.280s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

30

30.0 deg. = 0.524 rads

Cosine(0.524) = 0.866

real 0m13.045s

user 0m1.270s

sys 0m0.400s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

60

60.0 deg. = 1.047 rads

Cosine(1.047) = 0.500

real 0m18.477s

user 0m1.150s

sys 0m0.450s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

90

90.0 deg. = 1.571 rads

Cosine(1.571) = -0.000

real 0m10.567s

user 0m1.240s

sys 0m0.360s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

120

120.0 deg. = 2.094 rads

Cosine(2.094) = -0.500

real 0m7.056s

user 0m1.310s

sys 0m0.330s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

150

150.0 deg. = 2.618 rads

Cosine(2.618) = -0.866

real 0m8.202s

user 0m1.200s

sys 0m0.390s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

180

180.0 deg. = 3.142 rads

Cosine(3.142) = -1.001

real 0m11.139s

user 0m1.250s

sys 0m0.350s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

210

210.0 deg. = -2.618 rads

Cosine(-2.618) = -0.866

real 0m6.400s

user 0m1.290s

sys 0m0.330s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

240

240.0 deg. = -2.094 rads

Cosine(-2.094) = -0.500

real 0m11.837s

user 0m1.320s

sys 0m0.340s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

270

270.0 deg. = -1.571 rads

Cosine(-1.571) = -0.000

real 0m8.228s

user 0m1.200s

sys 0m0.360s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

300

300.0 deg. = -1.047 rads

Cosine(-1.047) = 0.500

real 0m7.509s

user 0m1.270s

sys 0m0.350s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

330

330.0 deg. = -0.524 rads

Cosine(-0.524) = 0.866

real 0m7.167s

user 0m1.180s

sys 0m0.350s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,

Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_cosine

#######################################################

*** Number of processes: 16

*** processing capacity: 19.2 GHz.

Master node name: Mst0

Enter angle(deg.):

360

360.0 deg. = -0.000 rads

Cosine(-0.000) = 1.000

real 0m10.469s

user 0m1.250s

sys 0m0.300s

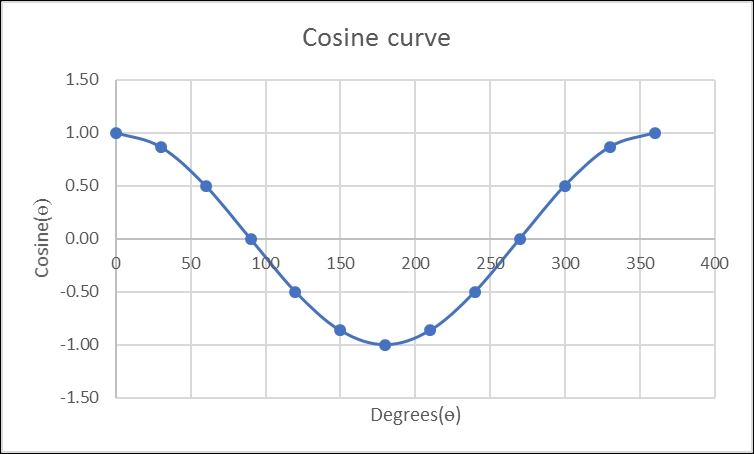

The following figure is a plot of the MPI cosine(x) run:

..................Content has been hidden....................

You can't read the all page of ebook, please click here login for view all page.