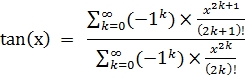

Since tan(x) = sin(x)/cos(x), we can combine the Taylor series of sin(x) and cos(x) to construct the tangent function.
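Written out in standard form, with each series truncated after N terms (both programs in this section use N = 17), the construction is:

$$
\sin x = \sum_{n=0}^{\infty} \frac{(-1)^n\, x^{2n+1}}{(2n+1)!}, \qquad
\cos x = \sum_{n=0}^{\infty} \frac{(-1)^n\, x^{2n}}{(2n)!},
$$

$$
\tan x = \frac{\sin x}{\cos x} \approx
\frac{\sum_{n=0}^{N-1} (-1)^n\, x^{2n+1}/(2n+1)!}{\sum_{n=0}^{N-1} (-1)^n\, x^{2n}/(2n)!},
\qquad N = 17 .
$$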

Write, compile, and run the following serial tan(x) code to get a feel for the program:

```c
/**********************************
 * Serial tangent(x) code.        *
 *                                *
 * Taylor series representation   *
 * of the trigonometric tan(x).   *
 *                                *
 * Author: Carlos R. Morrison     *
 *                                *
 * Date: 1/10/2017                *
 **********************************/

#include <math.h>
#include <stdio.h>

#ifndef M_PI                        /* M_PI is not guaranteed by ISO C */
#define M_PI 3.14159265358979323846
#endif

int main(void)
{
  /************************************************/
  unsigned int i,j,k,l;
  unsigned int B,G;                   /* exponents 2i+1 and 2k          */
  const unsigned int num_loops = 17;  /* number of Taylor terms         */
  float y;                            /* angle entered in degrees       */
  double x;                           /* reduced angle in radians       */
  double sum=0,sum01=0,sum02=0;
  double A,C,D,E,F,H,I,J;             /* D and I hold the factorials as */
                                      /* doubles: 33! overflows a       */
                                      /* 64-bit long long int           */
  /************************************************/

  printf("\n");
Z:printf("Enter angle(deg.):\n");
  printf("\n");
  if(scanf("%f",&y) != 1)             /* give up on non-numeric input   */
  {
    printf("Invalid input\n");
    return 1;
  }

  /* Reduce the angle to (-90, 90) deg. using tan(y) = tan(y - 180);   */
  /* 90 and 270 deg. (tan undefined) and out-of-range values rejected  */
  if(y >= 0 && y < 90.0)
  {
    x = y*(M_PI/180.0);
  }
  else if(y > 90.0 && y <= 180.0)
  {
    x = -(180.0-y)*(M_PI/180.0);
  }
  else if(y >= 180.0 && y < 270.0)
  {
    x = -(180.0-y)*(M_PI/180.0);
  }
  else if(y > 270.0 && y <= 360.0)
  {
    x = -(360.0-y)*(M_PI/180.0);
  }
  else
  {
    printf("\n");
    printf("Bad input!! Please try another angle\n");
    printf("\n");
    goto Z;
  }

  /*************************************************
   * ***** SINE BLOCK *****                         *
   *************************************************/
  sum01 = 0;
  for(i = 0; i < num_loops; i++)
  {
    A = pow(-1,i);                    /* sign (-1)^i                    */
    B = 2*i+1;
    C = pow(x,B);                     /* x^(2i+1)                       */
    D = 1;
    for(j = 1; j <= B; j++)           /* D = (2i+1)!                    */
    {
      D *= j;
    }
    E = (A*C)/D;
    sum01 += E;
  }// End of for(i = 0; i < num_loops; i++)

  /*****************************************************
   * ***** COSINE BLOCK *****                           *
   *****************************************************/
  sum02 = 0;
  for(k = 0; k < num_loops; k++)
  {
    F = pow(-1,k);                    /* sign (-1)^k                    */
    G = 2*k;
    H = pow(x,G);                     /* x^(2k)                         */
    I = 1;
    for(l = 1; l <= G; l++)           /* I = (2k)!                      */
    {
      I *= l;
    }
    J = (F*H)/I;
    sum02 += J;
  }// End of for(k = 0; k < num_loops; k++)

  /*****************************************************
   * ***** TANGENT BLOCK *****                          *
   *****************************************************/
  sum = sum01/sum02; // Tan(x) ==> Sine(x)/Cos(x)

  printf("\n");
  printf("%.1f deg. = %.3f rads\n", y, x);
  printf("Tan(%.1f) = %.3f\n", y, sum);

  return 0;
}
```

Next, write, compile, and run the following MPI version of the serial tan(x) code, using one processor from each of the 16 nodes.

```c
/**********************************
 * MPI tangent(x) code.           *
 *                                *
 * Taylor series representation   *
 * of the trigonometric tan(x).   *
 *                                *
 * Author: Carlos R. Morrison     *
 *                                *
 * Date: 1/10/2017                *
 **********************************/

#include <mpi.h>    // (Open)MPI library
#include <math.h>   // math library
#include <stdio.h>  // Standard Input/Output library

#ifndef M_PI        // M_PI is not guaranteed by ISO C
#define M_PI 3.14159265358979323846
#endif

int main(int argc, char *argv[])
{
  /******************************************************************************/
  unsigned int i,j,l,m,B,G;
  int Q = 17,rank,length,numprocs;   // Q = number of Taylor terms
  float y = 0;                       // angle in degrees (read by rank 0)
  double x = 0;                      // reduced angle in radians
  double sum,sum1 = 0,sum2 = 0,sum01,sum02;
  double A,C,D,E,F,H,I,J;            // D and I hold factorials as doubles,
                                     // since 33! overflows long long int
  char hostname[MPI_MAX_PROCESSOR_NAME];

  MPI_Init(&argc, &argv);                     // initiates MPI
  MPI_Comm_size(MPI_COMM_WORLD, &numprocs);   // acquire number of processes
  MPI_Comm_rank(MPI_COMM_WORLD, &rank);       // acquire current process id
  MPI_Get_processor_name(hostname, &length);  // acquire hostname
  /******************************************************************************/

  if(rank == 0)
  {
    printf("\n");
    printf("#######################################################");
    printf("\n");
    printf("*** Number of processes: %d\n",numprocs);
    printf("*** processing capacity: %.1f GHz.\n",numprocs*1.2); // 1.2 GHz per processor
    printf("\n");
    printf("Master node name: %s\n", hostname);
    printf("\n");
    printf("\n");
Z:  printf("Enter angle(deg.):\n");
    printf("\n");
    if(scanf("%f",&y) != 1)          // give up on non-numeric input
    {
      printf("Invalid input\n");
      MPI_Abort(MPI_COMM_WORLD, 1);
    }

    // Reduce the angle to (-90, 90) deg. using tan(y) = tan(y - 180)
    if(y >= 0 && y < 90.0)
    {
      x = y*(M_PI/180.0);
    }
    else if(y > 90.0 && y <= 180.0)
    {
      x = -(180.0-y)*(M_PI/180.0);
    }
    else if(y >= 180.0 && y < 270.0)
    {
      x = -(180.0-y)*(M_PI/180.0);
    }
    else if(y > 270.0 && y <= 360.0)
    {
      x = -(360.0-y)*(M_PI/180.0);
    }
    else
    {
      printf("\n");
      printf("Bad input!! Please try another angle\n");
      printf("\n");
      goto Z;
    }
  }// End of if(rank == 0)

  // broadcast the number of terms and the reduced angle to all processes
  MPI_Bcast(&Q, 1, MPI_INT, 0, MPI_COMM_WORLD);
  MPI_Bcast(&x, 1, MPI_DOUBLE, 0, MPI_COMM_WORLD); // datatype must match x

  sum01 = 0;   // this rank's partial sine sum
  sum02 = 0;   // this rank's partial cosine sum

  // cyclic split: rank r evaluates terms m = r, r + numprocs, ... (m < Q)
  for(i = rank + 1; i <= Q; i += numprocs)
  {
    m = (i-1);

    /*************************************************
     * ***** SINE BLOCK *****                         *
     *************************************************/
    A = pow(-1,m);                   // sign (-1)^m
    B = 2*m+1;
    C = pow(x,B);                    // x^(2m+1)
    D = 1;
    for(j = 1; j <= B; j++)          // D = (2m+1)!
    {
      D *= j;
    }
    E = (A*C)/D;
    sum01 += E;

    /*****************************************************
     * ***** COSINE BLOCK *****                           *
     *****************************************************/
    F = pow(-1,m);                   // sign (-1)^m
    G = 2*m;
    H = pow(x,G);                    // x^(2m)
    I = 1;
    for(l = 1; l <= G; l++)          // I = (2m)!
    {
      I *= l;
    }
    J = (F*H)/I;
    sum02 += J;
  }// End of for(i = rank + 1; i <= Q; i += numprocs)

  // collect and add the partial sine and cosine sums from all processes
  MPI_Reduce(&sum01, &sum1, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);
  MPI_Reduce(&sum02, &sum2, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);

  if(rank == 0)
  {
    sum = sum1/sum2; // Tan(x) ==> Sine(x)/Cos(x)
    printf("\n");
    printf("%.1f deg. = %.3f rads\n", y, x);
    printf("Tan(%.1f) = %.3f\n", y, sum);
  }

  // clean up, done with MPI
  MPI_Finalize();

  return 0;
}// End of int main(int argc, char*argv[])
```

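Now, let us check the MPI tan(x) run. Note that the `real` time reported by `time` includes the time the program spends waiting at the `Enter angle(deg.):` prompt, so its large run-to-run variation (from 5.871 s to 1 min 8.558 s) likely reflects input delay more than computation; the `user` time stays between 1.18 s and 1.41 s: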

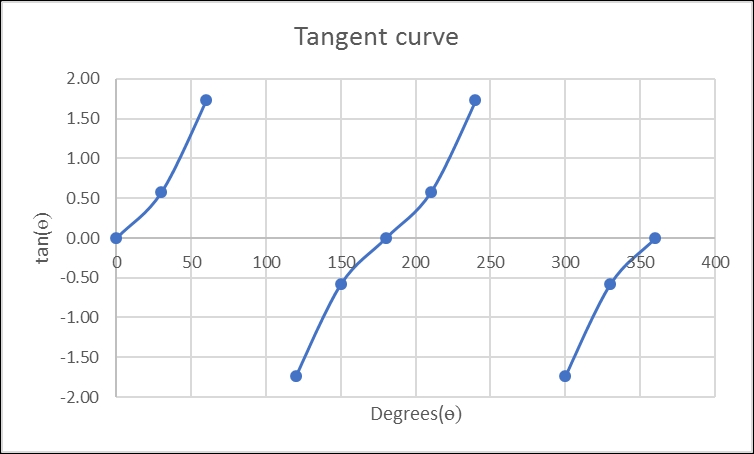

```
alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_tan
#######################################################
*** Number of processes: 16
*** processing capacity: 19.2 GHz.
Master node name: Mst0
Enter angle(deg.):
0
0.0 deg. = 0.000 rads
Tan(0.0) = 0.000
real 0m19.636s
user 0m1.180s
sys 0m0.430s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_tan
#######################################################
*** Number of processes: 16
*** processing capacity: 19.2 GHz.
Master node name: Mst0
Enter angle(deg.):
30
30.0 deg. = 0.524 rads
Tan(30.0) = 0.577
real 0m13.139s
user 0m1.360s
sys 0m0.260s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_tan
#######################################################
*** Number of processes: 16
*** processing capacity: 19.2 GHz.
Master node name: Mst0
Enter angle(deg.):
60
60.0 deg. = 1.047 rads
Tan(60.0) = 1.732
real 0m7.451s
user 0m1.250s
sys 0m0.330s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_tan
#######################################################
*** Number of processes: 16
*** processing capacity: 19.2 GHz.
Master node name: Mst0
Enter angle(deg.):
90
Bad input!! Please try another angle
Enter angle(deg.):
120
120.0 deg. = -1.047 rads
Tan(120.0) = -1.732
real 0m17.420s
user 0m1.210s
sys 0m0.360s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_tan
#######################################################
*** Number of processes: 16
*** processing capacity: 19.2 GHz.
Master node name: Mst0
Enter angle(deg.):
150
150.0 deg. = -0.524 rads
Tan(150.0) = -0.577
real 0m11.704s
user 0m1.410s
sys 0m0.370s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_tan
#######################################################
*** Number of processes: 16
*** processing capacity: 19.2 GHz.
Master node name: Mst0
Enter angle(deg.):
180
180.0 deg. = -0.000 rads
Tan(180.0) = 0.000
real 0m5.871s
user 0m1.190s
sys 0m0.370s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_tan
#######################################################
*** Number of processes: 16
*** processing capacity: 19.2 GHz.
Master node name: Mst0
Enter angle(deg.):
210
210.0 deg. = 0.524 rads
Tan(210.0) = 0.577
real 1m8.558s
user 0m1.190s
sys 0m0.550s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_tan
#######################################################
*** Number of processes: 16
*** processing capacity: 19.2 GHz.
Master node name: Mst0
Enter angle(deg.):
240
240.0 deg. = 1.047 rads
Tan(240.0) = 1.732
real 0m22.489s
user 0m1.310s
sys 0m0.270s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_tan
#######################################################
*** Number of processes: 16
*** processing capacity: 19.2 GHz.
Master node name: Mst0
Enter angle(deg.):
270
Bad input!! Please try another angle
Enter angle(deg.):
300
300.0 deg. = -1.047 rads
Tan(300.0) = -1.732
real 0m15.178s
user 0m1.180s
sys 0m0.410s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_tan
#######################################################
*** Number of processes: 16
*** processing capacity: 19.2 GHz.
Master node name: Mst0
Enter angle(deg.):
330
330.0 deg. = -0.524 rads
Tan(330.0) = -0.577
real 0m7.067s
user 0m1.200s
sys 0m0.370s

alpha@Mst0:/beta/gamma $ time mpiexec -H Mst0,Slv1,Slv2,Slv3,Slv4,Slv5,Slv6,Slv7,Slv8,Slv9,Slv10,Slv11,Slv12,Slv13,Slv14,Slv15 MPI_tan
#######################################################
*** Number of processes: 16
*** processing capacity: 19.2 GHz.
Master node name: Mst0
Enter angle(deg.):
360
360.0 deg. = -0.000 rads
Tan(360.0) = 0.000
real 0m6.489s
user 0m1.200s
sys 0m0.340s
```

The following figure is a plot of the MPI tan(x) run:
