CHAPTER 1

The Importance of Threat Data and Intelligence

In this chapter you will learn:

• The foundations of threat intelligence

• Common intelligence sources and the intelligence cycle

• Effective use of indicators of compromise

• Information sharing best practices

Every battle is won before it is ever fought.

—Sun Tzu

Modern networks are incredibly complex entities whose successful and ongoing defense requires a deep understanding of what is present on the network, what weaknesses exist, and who might be targeting them. Getting insight into network activity allows for greater agility in order to outmaneuver increasingly sophisticated threat actors, but not every organization can afford to invest in the next-generation detection and prevention technology year after year. Furthermore, doing so is often not as effective as investing in quality analysts who can collect and quickly understand data about threats facing the organization.

Threat data, when given the appropriate context, results in the creation of threat intelligence, or the knowledge of malicious actors and their behaviors; this knowledge enables defenders to gain a better understanding of their operational environments. Several products can be used to provide decision-makers with a clear picture of what’s actually happening on the network, which makes for more confident decision-making, increases the cost to the adversary, improves operator response time, and in the worst case, reduces recovery time for the organization in the event of an incident. Sergio Caltagirone, coauthor of The Diamond Model of Intrusion Analysis (2013), defines cyber threat intelligence as “actionable knowledge and insight on adversaries and their malicious activities enabling defenders and their organizations to reduce harm through better security decision-making.” Without a doubt, a good threat intelligence program is a necessary component of any modern information security program. Formulating a brief definition of so broad a term as “intelligence” is a massive challenge, but fortunately there are decades of studies on the primitives of intelligence analysis that we’ll borrow from for the purposes of defining threat intelligence.

Foundations of Intelligence

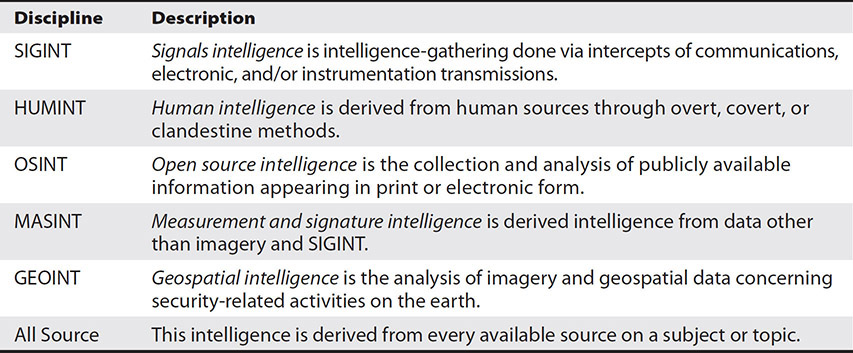

Traditional intelligence involves the collecting and processing of information about foreign countries and their agents. Usually conducted on behalf of a government, intelligence activities are carried out using nonattributable methods in foreign areas to further foreign policy goals and in support of national security. Another key aspect is the protection of the intelligence actions, the people and organizations involved, and the resulting intelligence products against unauthorized disclosure. Classically, intelligence is divided into the areas from which it is collected, as shown in Table 1-1. Functionally, a government may align one or several agencies with an intelligence discipline.

Table 1-1 A Sample of Intelligence Disciplines

Intelligence Sources

Threat intelligence teams outside of the government do not often have the luxury of on-call intelligence assets available to those within government. Energy companies and Internet service providers (ISPs) do not deploy HUMINT agents or have SIGINT operations, but they will focus on using whatever public, commercial, or in-house resources are available. Fortunately, there are a number of free and paid sources to help teams meet their intelligence requirements, including commercial threat intelligence providers, industry partners, and government organizations. And, of course, there’s also broad monitoring of social media and news for relevant data.

Open Source Intelligence

There are many ways to acquire free data associated with actor activity, be it malicious or benign. A common way to do this without interacting with those parties is by gathering open source intelligence (OSINT), free information that’s collected in legitimate ways from public sources such as news outlets, libraries, and search engines. Using OSINT, practitioners can begin to answer questions critical to the intelligence process. How can I create actionable threat intelligence from a variety of data sources? How can I share this threat information with the broader community, and what mechanism should I use? How can I use public information to limit the organization’s exposure, while enabling my understanding of what adversaries know about us?

There are several additional benefits of developing OSINT skills. Threat analysts often use OSINT sources to help them keep pace with security industry trends and discussions in near real-time. This is a useful way for practitioners to understand what may be coming around the corner, even if the content comes in an irregular or inconsistent manner. Additionally, many security analysts rely on publicly available data sets to perform research on common threat indicators and mitigating controls. The information gleaned may also help in post-incident forensics efforts or in support of penetration testing activities.

From an adversary point of view, it is almost always preferable to get information about a target without directly touching it. Why? Because the less it is touched, the fewer fingerprints (or log entries) are left behind for the defenders and investigators to find. In an ideal case, adversaries gain all the information they need to compromise a target successfully without once visiting it, using OSINT techniques. Passive reconnaissance, for example, is the process by which an adversary acquires information about a target network without directly interacting with it. These techniques can be focused on individuals as well as companies. Just like individuals, many companies maintain a public face that can give outsiders a glimpse into their internal operations. In the sections that follow, we describe some of the most useful sources of OSINT with which you should be familiar.

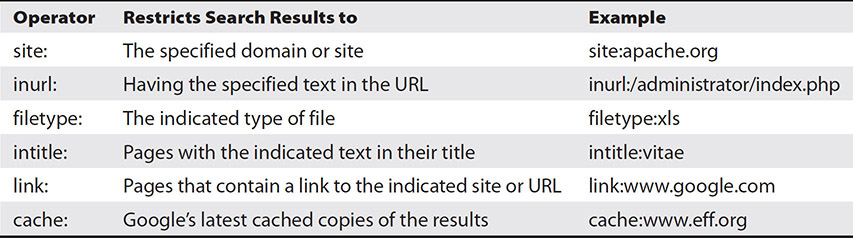

Google’s vision is to organize all of the data in the world and make it accessible for everyone in a useful way. It should therefore not be surprising that Google can help an attacker gather a remarkable amount of information about any individual, organization, or network. The use of this search engine for target reconnaissance purposes drew much attention in the early 2000s, when security researcher Johnny Long started collecting and sharing examples of search queries that revealed vulnerable systems. These queries made use of advanced operators that are meant to allow Google users to refine their searches. Though the list of operators is too long to include in this book, Table 1-2 lists some of the ones we’ve found most useful over the years. Note that many others are available from a variety of online sources, and some of these operators can be combined in a search.

Table 1-2 Useful Google Search Operators

Suppose your organization has a number of web servers. A potentially dangerous misconfiguration would be to allow a server to display directory listings to clients. This means that instead of seeing a rendered web page, the visitor could see a list of all the files (HTML, PHP, CSS, and so on) in that directory within the server. Sometimes, for a variety of reasons, it is necessary to enable such listings. More often, however, they are the result of a misconfigured and potentially vulnerable web server. If you wanted to search an organization for such vulnerable server directories, you would type the following into your Google search box, substituting the actual domain or URL in the space delineated by angle brackets:

site:<targetdomain or URL> intitle:"index of" "parent directory"

This would return all the pages in your target domain that Google has indexed as having directory listings.
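If you need to run this check against every domain your organization owns, you can assemble the query string in code. The following is a minimal sketch; the function name `directory_listing_query` and the domain `example.com` are our own illustrative assumptions, and the code only builds the search string without contacting Google.

```python
def directory_listing_query(domain: str) -> str:
    """Build the directory-listing search string for one domain."""
    domain = domain.strip()
    if not domain:
        raise ValueError("a target domain is required")
    # site: limits results to the domain; intitle: matches the page title
    return f'site:{domain} intitle:"index of" "parent directory"'


query = directory_listing_query("example.com")
print(query)
```

The resulting string can be pasted into the search box exactly as you would type it by hand.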

You might then be tempted to click one of the links returned by Google, but this would directly connect you to the target domain and leave evidence there of your activities. Instead, you can use a page cached by Google as part of its indexing process. To see this page instead of the actual target, look for the downward arrow immediately to the right of the page link. Clicking it will give you the option to select Cached rather than connecting to the target (see Figure 1-1).

Figure 1-1 Using Google cached pages

Internet Registries

Another useful source of information about networks is the multiple registries necessary to keep the Internet working. Routable Internet Protocol (IP) addresses as well as domain names need to be globally unique, which means that there must be some mechanism for ensuring that no two entities use the same IP address or domain. The way we, as a global community, manage this deconfliction is through the nonprofit corporations described next. They offer some useful details about the footprint of an organization in cyberspace.

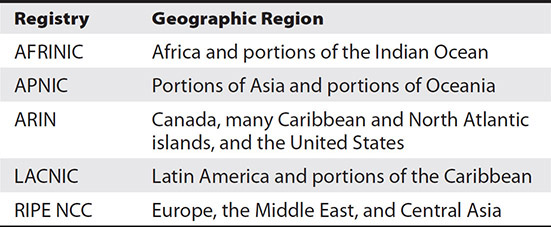

Regional Internet Registries

As Table 1-3 shows, five separate corporations control the assignment of IP addresses throughout the world. They are known as the regional Internet registries (RIRs), and each has an assigned geographical area of responsibility. Thus, entities wishing to acquire an IP address in Canada, the United States, or most of the Caribbean would deal (directly or through intermediaries) with the American Registry for Internet Numbers (ARIN). The activities of the five registries are coordinated through the Number Resource Organization (NRO), which also provides a detailed listing of each country’s assigned RIR.

Table 1-3 The Regional Internet Registries

Domain Name System

The Internet could not function the way it does today without the Domain Name System (DNS). Although DNS is a vital component of modern networks, many users are unaware of its existence and importance to the proper functionality of the Web. DNS is the mechanism responsible for associating domain names, such as www.google.com, with their server’s IP address(es), and vice versa. Without DNS, you’d be required to memorize and input the full IP address for any website you wanted to visit instead of the easy-to-remember uniform resource locator (URL). Using tools such as nslookup, host, and dig in the command line, administrators troubleshoot DNS and network problems. Using the same tools, an attacker can interrogate the DNS server to derive information about the network. In some cases, attackers can automate this process to reach across many DNS servers in a practice called DNS harvesting.
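The name-to-address lookup that nslookup, host, and dig perform can also be scripted, which is how both administrators and attackers check many names at once. The sketch below uses Python's standard `socket.gethostbyname` call; the helper `resolve_all`, the stand-in `fake_resolver`, and the hostnames and addresses (taken from ranges reserved for documentation) are assumptions made so that the example runs without network access.

```python
import socket
from typing import Callable, Dict, List


def resolve_all(names: List[str],
                resolver: Callable[[str], str] = socket.gethostbyname) -> Dict[str, str]:
    """Map each hostname to an IPv4 address, or to 'unresolved' on failure."""
    results = {}
    for name in names:
        try:
            results[name] = resolver(name)
        except OSError:  # socket.gaierror is a subclass of OSError
            results[name] = "unresolved"
    return results


# Hypothetical stand-in resolver so the example runs offline.
def fake_resolver(name: str) -> str:
    table = {"www.example.com": "192.0.2.10"}
    if name not in table:
        raise socket.gaierror("name not found")
    return table[name]


lookups = resolve_all(["www.example.com", "vpn.example.com"], fake_resolver)
print(lookups)
```

Calling `resolve_all` without the second argument uses the system resolver, so the same loop works against live DNS.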

In some cases, it may be necessary to replicate a DNS server’s contents across multiple DNS servers through an action called a zone transfer. With a zone transfer, it is possible to capture a full snapshot of what the DNS server’s records hold about the domain; this includes name servers, mail exchange records, and hostnames. Zone transfers are a potentially vulnerable point in a network because a server that has not been configured otherwise may accept a request for a full transfer from any host on the network. Because DNS is like a map of the entire network, it’s critical to restrict this leakage, which can otherwise help an attacker plan attacks such as DNS poisoning or spoofing.
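The usual remedy is to allow zone transfers only to known secondary name servers. Real DNS servers enforce this in their own configuration; the sketch below shows only the decision logic, using Python's standard `ipaddress` module. The list `ALLOWED_SECONDARIES`, the function `transfer_permitted`, and all addresses are hypothetical.

```python
import ipaddress

# Hypothetical secondary name servers permitted to request a zone transfer.
ALLOWED_SECONDARIES = [
    ipaddress.ip_network("192.0.2.53/32"),
    ipaddress.ip_network("198.51.100.0/28"),
]


def transfer_permitted(requester: str) -> bool:
    """Return True only if the requesting address is a known secondary."""
    address = ipaddress.ip_address(requester)
    return any(address in network for network in ALLOWED_SECONDARIES)


print(transfer_permitted("192.0.2.53"))   # known secondary
print(transfer_permitted("203.0.113.7"))  # unknown host
```

Any request from a host outside the list is refused, so an attacker on an arbitrary host cannot pull the full map of the domain.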

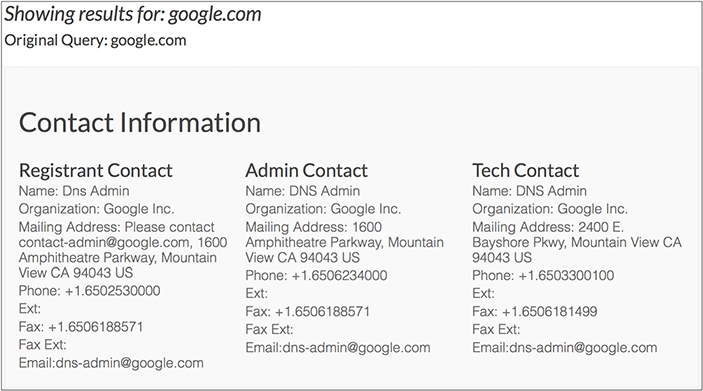

Whenever a domain is registered, the registrant provides details about the organization for public display. This may include name, telephone, and e-mail contact information; domain name system details; and mailing address. This information can be queried using a tool called WHOIS (pronounced who is). Available in both command-line and web-based versions, WHOIS can be an effective tool for incident responders and network engineers, but it’s also a useful information-gathering tool for spammers, identity thieves, and any other attacker seeking to get personal and technical information about a target. For example, Figure 1-2 shows a report returned from ICANN’s WHOIS web-based service. You should be aware that some registrars (the service that you go through to register a website) provide private registration services, in which case the registrar’s information is returned during a query instead of the registrant’s. Although this may seem useful to limit an organization’s exposure, the tradeoff is that in the case of an emergency, it may be difficult to reach that organization.

Figure 1-2 A report returned from ICANN’s WHOIS web-based service
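WHOIS responses are plain text, mostly in the form of "Key: value" lines, which makes them easy to parse when you want to review many registrations. The following is a rough sketch under that assumption; the sample record is invented for illustration, field names vary between registries, and `parse_whois` is our own helper rather than part of any WHOIS tool.

```python
SAMPLE_WHOIS = """\
Domain Name: EXAMPLE.COM
Registrar: Example Registrar, Inc.
Name Server: NS1.EXAMPLE.COM
Name Server: NS2.EXAMPLE.COM
Registrant Organization: REDACTED FOR PRIVACY
"""


def parse_whois(text: str) -> dict:
    """Collect 'Key: value' lines; repeated keys accumulate into a list."""
    record = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep or not value.strip():
            continue  # skip lines that are not 'Key: value' pairs
        record.setdefault(key.strip(), []).append(value.strip())
    return record


record = parse_whois(SAMPLE_WHOIS)
print(record["Name Server"])
```

A redacted registrant field, as in the sample, is what you would expect to see when a private registration service is in use.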

Job Sites

Sites offering employment services are a boon for information gatherers. Think about it: the user voluntarily submits all kinds of personal data, a complete professional history, and even some individual preferences. In addition to providing personally identifiable characteristics, these sites often include indications of a member’s role in a larger network. Because so many of these accounts are identified by e-mail address, that address can often be the common link in the course of investigating a suspicious entity.

In understanding what your public exposure is, you must realize that it’s trivial for attackers to automate the collection of artifacts about a target. They may perform activities to broadly collect e-mail addresses, for example, in a practice called e-mail harvesting. An attacker can use this to his benefit by taking advantage of business contacts to craft a more convincing phishing e-mail.
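To appreciate how little effort harvesting takes, consider auditing your own public pages for exposed addresses. The sketch below is one simple way to do so; the regular expression is deliberately loose and will not match every valid address, and the function name, sample text, and domains are illustrative assumptions.

```python
import re

# Deliberately simple pattern; real address syntax is more permissive.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def exposed_addresses(page_text: str, domain: str) -> list:
    """Return the sorted, de-duplicated addresses on a page that belong to domain."""
    found = {match.lower() for match in EMAIL_PATTERN.findall(page_text)}
    return sorted(a for a in found if a.endswith("@" + domain.lower()))


sample = "Contact Jane.Doe@example.com or jobs@example.com; vendor: sales@example.net."
exposed = exposed_addresses(sample, "example.com")
print(exposed)
```

Running a check like this over job postings and press releases gives a defender the same list an attacker would start from.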

Beyond the social engineering implications for the users of these sites, companies themselves can be targets. If a company indicates that it’s in the market for an administrator of a particular brand of firewall, then it’s likely that the company is using that brand of firewall. This can be a powerful piece of information, because it provides clues about the makeup of the company’s network and potential weak points.

Social Media

Social media sites can be rich sources of threat data. Twitter and Reddit, for example, are two platforms that often provide useful artifacts during high-impact events. As a vehicle to quickly spread news of major emergencies, these platforms can also be leveraged as a source for indicators about cyberattacks. They can also be highly targeted sources for personal information. As with employment sites, defenders can learn quite a bit about an attacker’s social network and tendencies, should the attacker have a public persona. Conversely, an attacker can gain awareness about an individual or company using publicly available information. The online clues captured from personal pages enable an attacker to conduct social media profiling, which uses a target’s preferences and patterns to determine their likely actions. Profiling is a critical tool for online advertisers hoping to capitalize on highly targeted ads. This information is also useful for an attacker in identifying which users in an organization may be more likely to fall victim to a social engineering attack, in which the perpetrator tricks the victim into revealing sensitive information or otherwise compromising the security of a system.

Many attackers know that the best route into a network is through a careless or untrained employee. In a social engineering campaign, an attacker uses deception, often influenced by the profile they’ve built about the target, to manipulate the target into performing an act that might not be in their best interest. These attacks come in many forms—from advanced phishing e-mails that seem to originate from a legitimate source, to phone calls requesting additional personal information. Phishing attacks continue to be a challenge for network defenders because they are becoming increasingly convincing, fooling recipients into divulging sensitive information with regularity. Despite the most advanced technical countermeasures, the human element remains the most vulnerable part of the network.

Proprietary/Closed Source Intelligence

One of the key tenets of intelligence analysis is never relying on a single source of data when attempting to confirm a hypothesis. Ideally, analysts should look at multiple artifacts from multiple sources that support a hypothesis. Similarly, open source data is best used with corroborating data acquired from closed sources. Closed source data is any data collected covertly or as a result of privileged access. Common types of closed source data include internal network artifacts, dark web communications, details from intelligence-sharing communities, and private banking and medical records. Since closed source data tends to be higher quality, an analyst can confidently assess and verify findings using any number of intelligence analysis methods and tools. An added benefit of using multiple sources is that the practice reduces the effect of confirmation bias, or the tendency for an analyst to interpret information in a way that supports a prior strongly held belief.

Internal Network

Your organization’s network will inherently have the most relevant threat data available of all the sources we’ll discuss. Although this data is germane to every security function, many organizations eschew internal threat data in favor of external data feeds. As an analyst, you must remember that by leveraging threat data from your own network, you can identify potential malicious activity with far greater speed and confidence than you can with generic threat data. The most common sources of raw threat-related data include event, DNS, virtual private network (VPN), firewall, and authentication system logs. By establishing a baseline of normal activity, analysts can use historic knowledge of past incident responses to improve awareness of emerging threats or ongoing malicious activity.
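One simple way to put a baseline to work is to compare current counts against the mean and standard deviation of a known quiet period. The sketch below assumes hourly failed-login counts pulled from authentication logs; the numbers, the three-standard-deviation threshold, and the function `unusual_hours` are illustrative choices, not a recommended detection rule.

```python
from statistics import mean, stdev


def unusual_hours(baseline: list, observed: dict, threshold: float = 3.0) -> list:
    """Flag hours whose count exceeds the baseline mean by more than
    `threshold` standard deviations."""
    limit = mean(baseline) + threshold * stdev(baseline)
    return [hour for hour, count in observed.items() if count > limit]


# Hypothetical failed-login counts per hour from a quiet week.
baseline_counts = [4, 6, 5, 7, 5, 6, 4, 5]
today = {"09:00": 6, "10:00": 5, "11:00": 42}
flagged = unusual_hours(baseline_counts, today)
print(flagged)
```

Generic external feeds cannot supply this kind of signal, because only your own logs describe what normal looks like in your environment.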

Classified Data

When handling closed source data, you must consider several important factors. In some cases, the mere disclosure of the data may jeopardize access to the source information, or worse, the individuals involved in the collection and analysis process. Since closed source data is sometimes not meant to be openly available to the public, there may be some legal stipulations regarding its handling. Classified data, or data whose unauthorized disclosure may cause harm to national security interests, is protected by several statutes that restrict its handling and sharing to trusted individuals. Those individuals undergo formal security screening to achieve clearance or the minimum eligibility required for them to handle or access classified data. Accordingly, leaks of classified data may result in steep administrative or criminal penalties. It will be your responsibility, should you encounter classified data, to handle it appropriately and to safeguard against unauthorized disclosure.

Traffic Light Protocol

The Traffic Light Protocol (TLP) was created by the UK government’s National Infrastructure Security Coordination Centre (NISCC) to enable greater threat information sharing between organizations. It includes a set of color-coded designations that are used to guide responsible sharing of sensitive information to the appropriate audience, while also protecting the information’s sources. As shown in Table 1-4, the four color designations are meant to be easily understood among participants, since their usage closely mirrors the colors of traffic lights around the world. Despite its usage as a data-sharing guideline, TLP is not a classification or control scheme, nor is it designed to be used as a mechanism to enforce intellectual property terms or to dictate how the data is to be used by the recipient.

Table 1-4 Traffic Light Protocol Designations (source: US Department of Homeland Security CISA website, https://www.us-cert.gov/tlp)
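Because each designation widens the permitted audience in turn, the scheme can be pictured as an ordered scale. The sketch below encodes that ordering for the four designations (RED, AMBER, GREEN, and WHITE); the `Audience` names and the `may_share` helper are simplifying assumptions of ours, and because TLP is guidance for people rather than an enforcement mechanism, a check like this is only a reminder.

```python
from enum import IntEnum


class Audience(IntEnum):
    """Increasingly broad audiences for a piece of shared information."""
    PARTICIPANTS = 1   # people in the original exchange
    ORGANIZATION = 2   # the participants' own organizations
    COMMUNITY = 3      # peer and partner organizations
    PUBLIC = 4         # anyone


# Broadest audience each designation allows.
TLP_LIMIT = {
    "RED": Audience.PARTICIPANTS,
    "AMBER": Audience.ORGANIZATION,
    "GREEN": Audience.COMMUNITY,
    "WHITE": Audience.PUBLIC,
}


def may_share(designation: str, audience: Audience) -> bool:
    """True if the designation permits sharing with the given audience."""
    return audience <= TLP_LIMIT[designation.upper()]


print(may_share("amber", Audience.ORGANIZATION))
print(may_share("amber", Audience.COMMUNITY))
```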

Characteristics of Intelligence Source Data

Despite the many claims from vendors, there is no one solution for every organization when it comes to threat data and intelligence. Organizations must be able to map the threat intelligence products they acquire or produce to some distinct aspect of their threat profile. In short, analysts must prioritize data that is most relevant to their specific environment to ensure that they are not bogged down in unmanageable noise and that they can produce actionable, timely, and consistent results. Generic threat intelligence developed for environments that are too dissimilar from those in which the team is operating will not satisfy the organization’s unique requirements. Furthermore, developing intelligence for environments that are not specific enough may result in a waste of resources. Security analysts working in a manufacturing environment, for example, must understand their environment and seek out and obtain threat intelligence products specific to manufacturing networks, in addition to general threat information. Intelligence must include context and provide recommendations if it is to have maximum value for decision-makers.

Good threat intelligence provides three critical elements to analysts so that they can appropriately provide answers to decision-makers. The intelligence must describe the threat using consistent and clear language, illustrate the impact to the business in terms that are relevant to the business, and provide a clear set of recommended actions. In addition to being complete, good threat intelligence often has three characteristics: timeliness, relevancy, and accuracy. Teams that can effectively use quality threat intelligence are able to address known gaps in their security posture and apply this data and context to nearly every aspect of their security operations; this will improve detection, enhance response, and strengthen prevention. Furthermore, the rate of noise generation and intelligence failure is inversely proportional to the timeliness, relevancy, and accuracy of the threat intelligence information.

Timeliness

All intelligence, whether in a traditional military operation or as it applies to information security, has a temporal dimension. After all, intelligence considers environmental conditions as a part of context, so it makes sense that it is most useful given the time-related stipulations within the intelligence requirements. You may find that intelligence may be extremely useful at one time and completely useless at another. Accordingly, intelligence that is not delivered in a timely manner is not as useful to decision-makers.

Relevancy

As discussed earlier, internal network data invariably yields the most useful threat intelligence, because it reflects the nuances of an organization. Additionally, relevancy varies based on the levels of operation, even within the same organization. It is therefore important to prepare threat intelligence products for the correct audience. Details about the exact nature of an adversary’s technical capabilities, for example, may not be as useful for a strategic audience as they may be for a detection team analyst. This is an easy point to overlook, but intelligence requires human attention to consume and process, so irrelevant information is costly in terms of time and resources. Providing inconsequential intelligence is not only distracting; it may be counterproductive as well.

Accuracy

Although it may seem self-evident in a field such as information security, accuracy is critical to enable a decision-maker to draw reliable conclusions and pursue a recommended course of action. The information must be factually correct. Acknowledging that it may be unrealistic to have an exact understanding of the operational environment at any given time, an analyst must at minimum convey facts as they exist.

Confidence Levels

In a continuous effort to apply more rigorous standards to analytical assessment, intelligence providers often use three levels of analytic confidence expressed in estimative language. Estimative language aims to communicate intelligence assessments while acknowledging the existence of incomplete or fragmented information. The statements should not be seen as fact or proof, but as judgments based on analysis of collected information. As shown in Table 1-5, confidence levels reflect the scope and quality of the information supporting the judgments.

Table 1-5 Confidence Levels and Their Descriptions

Indicator Management

In discussing threat data, the term indicator will come up often to describe some observable artifact encountered on the network. Note that an indicator is not just data on its own. Indicators must include some context describing an aspect of an event, indicating something related to the intrusion of concern. Think about a time that someone has asked for your help in answering a question related to something you knew a lot about. If the question has ever begun with “what can you tell me about...,” you’re almost certainly going to ask follow-up questions to try to get as much information and context as possible before you provide an answer. Analyzing threat data is similar, in that context matters: a domain name is not an indicator on its own, but a domain name with the added context that it is used for phishing is an indicator.
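The distinction between raw data and an indicator can be made concrete with a small data structure. In the sketch below, an observable counts as an indicator only once context is attached; the class `Observable`, its field names, and the sample domain are hypothetical and not drawn from any particular tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observable:
    kind: str                      # e.g. "domain-name"
    value: str                     # e.g. "login.example.net"
    context: Optional[str] = None  # what the artifact is known to be used for
    source: Optional[str] = None   # where the context came from

    def is_indicator(self) -> bool:
        """Raw data becomes an indicator only once context is attached."""
        return bool(self.context and self.context.strip())


bare = Observable("domain-name", "login.example.net")
enriched = Observable("domain-name", "login.example.net",
                      context="hosts a credential phishing page",
                      source="internal mail gateway")
print(bare.is_indicator(), enriched.is_indicator())
```

Recording the source alongside the context also gives you something to evaluate later, when you decide how much to trust the indicator.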

Indicator Lifecycle

In working toward completing the picture of what’s happened during an incident, an analyst will want to turn a newly discovered indicator into something actionable for remediation or detection at a later point. Your first step is to vet the indicator, a process of deciding whether the indicator is valid, researching the originating signal, and determining its usefulness in actually detecting the malicious activity you expect to find in your environment. Characteristics you may want to consider during vetting include the reliability of the indicator source and additional details about the artifact that you’re able to uncover with follow-up research.

Indicators often have value beyond just your organization, even when they are derived from internal network data. Many financial service organizations use the same supporting technologies, have similar internal operations processes, and face the same kind of threat actors on a regular basis. It follows, therefore, that these organizations would be keenly interested in how others in the same space are detecting and responding to malicious activity. Sharing threat data is a key component of the success of any security operations effort. Threat intelligence shared among partners, peers, and other trusted groups often helps focus detection efforts and prioritize the use of limited resources.

Structured Threat Information Expression

The Structured Threat Information Expression, or STIX, is a collaborative effort led by the MITRE Corporation to communicate threat data using a standardized lexicon. To represent threat information, the STIX 2.0 framework uses a structure consisting of twelve key STIX Domain Objects (SDOs) and two STIX Relationship Objects (SROs). In describing an event, an analyst may show the relationship between one SDO and another using an SRO. The goal is to allow for flexible, extensible exchanges while providing both human- and machine-readable data. STIX information can be represented visually for analysts or stored as JSON (JavaScript Object Notation) for use in automation.
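To give a sense of what this looks like as JSON, the sketch below builds two SDOs (an indicator and a malware object) and one SRO that links them, then wraps them in a bundle. The property names follow our reading of the STIX 2.0 specification and should be validated against it before use; the domain, object names, and the `stix_id` helper are invented for illustration.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_id(object_type: str) -> str:
    """STIX identifiers take the form '<type>--<UUID>'."""
    return f"{object_type}--{uuid.uuid4()}"


now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.000Z")

indicator = {
    "type": "indicator",
    "id": stix_id("indicator"),
    "created": now,
    "modified": now,
    "labels": ["malicious-activity"],
    "name": "Phishing domain",
    "pattern": "[domain-name:value = 'login.example.net']",
    "valid_from": now,
}

malware = {
    "type": "malware",
    "id": stix_id("malware"),
    "created": now,
    "modified": now,
    "labels": ["trojan"],
    "name": "Example dropper",
}

# The SRO links the two SDOs: this indicator "indicates" this malware.
relationship = {
    "type": "relationship",
    "id": stix_id("relationship"),
    "created": now,
    "modified": now,
    "relationship_type": "indicates",
    "source_ref": indicator["id"],
    "target_ref": malware["id"],
}

bundle = {
    "type": "bundle",
    "id": stix_id("bundle"),
    "spec_version": "2.0",
    "objects": [indicator, malware, relationship],
}
print(json.dumps(bundle, indent=2))
```

The same pattern of two objects joined by a relationship applies to any pair of the SDOs described in the sections that follow.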

Attack Pattern

Attack patterns are a class of tactics, techniques, and procedures (TTPs) that describe attacker tendencies in how they employ capabilities against their victims. This SDO is helpful in generally categorizing types of attacks, such as spear phishing. Additionally, these objects may be used to add insight into exactly how the attacks are executed. Using the spear phishing example, an attacker would identify a high-value target, craft a relevant phishing message, attach a malicious document, and send the message with the hope that the target downloads and opens the attachment. Viewed together, these individual actions make up an attack pattern.

Campaign

A campaign is a collection of malicious actor behaviors against a common target over a finite timeframe. Campaign SDOs are often identified through various attribution methods to tie them to specific threat actors, but more important than the who behind the campaign is the identification of the unique use of tools, infrastructure, techniques, and targeting used by the actor. Tying this in with our phishing example, a campaign could be used to describe a threat actor group’s attack that used a specially tailored phish kit to target specific executives of a multinational energy company over the course of eight weeks in the past winter.

Course of Action

A course of action is a preventative or response action taken to address an attack. This SDO describes any technical changes, such as a modification of a firewall rule, or policy changes, such as mandatory annual security training. To address the effectiveness of spear phishing, a security team may choose a course of action that includes enhanced phishing detection, automated attachment scanning, and link sanitization.

Identity

An identity is an SDO that represents individuals, organizations, or groups. They can be specific and named, such as John Doe or Acme Corporation, or broader, to refer to an entire sector, for example. In the act of collecting identifying information about the individuals and organizations involved in an incident, we may derive previously unseen patterns. The individuals that were the target of the specially crafted phishing message would be represented as identity objects using this framework.

Indicator

Similar to the previously defined indicator, the indicator SDO describes an observable that can be used to detect suspicious activity on a network or an endpoint. Again, this specific observable or pattern of observables must be accompanied with contextual data for it to be truly useful in communicating interesting aspects of a security event. Indicators found in spear phishing messages often include links with phishing domains, generic form language, or the use of ASCII homographs.

Intrusion Set

An intrusion set is a compilation of behaviors, TTPs, or other properties shared by a single entity. As with campaigns, the focus of an intrusion set is on identifying common resources and behaviors rather than just trying to figure out who’s behind the activity. Intrusion set SDOs differ from campaigns in that they aren’t necessarily restricted to just one timeframe. An intrusion set may include multiple campaigns, creating an entire attack history over a long period of time. If a company has identified that it was the target of multiple phishing campaigns over the past few years from the same threat actor, that activity may be considered an intrusion set.

Malware

Malware is any malicious code or malicious software used to affect the integrity or availability of a system or the data contained therein. Malware may also be used against a system to compromise its confidentiality, enabling access to information not otherwise available to unauthorized parties. In the context of this framework, malware is considered a TTP, most often introduced into a system in a manner that avoids detection by the user. As a STIX object, the malware SDO identifies samples and families using plain language to describe the software’s capabilities and how it may affect an infected system. Examples of how these objects may be linked include connections to other malware objects to demonstrate similarities in its operations, or connections to identities to communicate the targets involved in an incident. The specific malicious code delivered in a phishing attempt can be described using the malware object.

Observed Data

The observed data SDO is used to describe any observable collected from a system or network device. This object may be used to communicate a single observation of an entity or the aggregate of observations. Importantly, observed data is not intelligence or even information, but is raw data, such as the number of times a connection is made or the summation of occurrences over a specified timeframe. The observed data object could be used in a phishing event to highlight the number of requests made to a particular phishing domain over the course of an hour.
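The aggregation this object describes amounts to counting sightings and noting when they started and stopped. The sketch below does so for a handful of hypothetical proxy log entries; the result keys `number_observed`, `first_observed`, and `last_observed` are borrowed from the observed data object as we understand it, while the log entries and the `summarize` function are invented.

```python
# Hypothetical proxy log entries: (timestamp, requested domain).
requests = [
    ("2024-03-08T14:02:11Z", "login.example.net"),
    ("2024-03-08T14:17:45Z", "login.example.net"),
    ("2024-03-08T14:20:03Z", "www.example.org"),
    ("2024-03-08T14:51:30Z", "login.example.net"),
]


def summarize(entries, domain):
    """Aggregate raw sightings of one domain into a count and a time window."""
    # Timestamps in this fixed format sort correctly as plain strings.
    times = sorted(ts for ts, name in entries if name == domain)
    if not times:
        return None
    return {
        "domain": domain,
        "number_observed": len(times),
        "first_observed": times[0],
        "last_observed": times[-1],
    }


summary = summarize(requests, "login.example.net")
print(summary)
```

Note that the summary says nothing about whether the domain is malicious; that judgment belongs in an indicator, not in observed data.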

Report

Reports are finished intelligence products that cover some specific detail of a security event. Reports can give relevant details about threat actors believed to be connected to an incident, the malware they may have used, or the methodologies used during a campaign. For example, a narrative that describes the details of the phish kits used in targeting energy company executives may be included in a report SDO. The report could include references to any of the other objects previously described.

Threat Actor

The threat actor SDO defines individuals or groups believed to be behind malicious activity. They conduct the activities described in campaign and intrusion set objects using the TTPs described in malware and attack pattern objects against the targets identified using identity objects. Their level of technical sophistication, personally identifiable information (PII), and assertions about motives can all be used in this object. If a determination can be made about the goals of the actors behind our fictional series of phishing messages, it could be included in the threat actor object along with any information about the individuals or groups involved.

Tool

The tool SDO describes software used by a threat actor in conducting a campaign. Unlike software described using the malware object, tools are legitimate utilities such as PowerShell or the terminal emulator. Tool objects may be connected to other objects describing TTPs to provide insight into levels of sophistication and tendencies. Understanding how and when actors use these tools can provide defenders with the knowledge necessary for developing countermeasures. Since the software described in tool objects is also used by power users, system administrators, and sometimes regular users, the challenge moves from simply detecting the presence of the software to detecting unusual usage and determining malicious intent. A caveat in using the tool object, in addition to avoiding using it to describe malware, is that this object is not meant to provide details about any software used by defenders in detecting or responding to a security event.

Vulnerability

A vulnerability SDO is used to communicate any mistake in software that may be exploited by an actor to gain unauthorized access to a system, software, or data. Although malware objects provide key characteristics about malicious software and when they are used in the course of an attack, vulnerability objects describe the exact flaw being leveraged by the malicious software. The two may be connected to show how a particular malware object targets a specific vulnerability object.

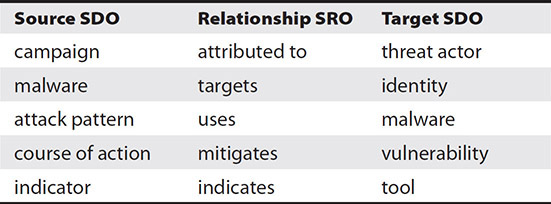

Relationship

The relationship SRO can be thought of as the connective tissue between SDOs, linking them together and showing how they work with one another. In the previous description of vulnerability, we highlighted that there may be a connection made to a malware object to show how the malicious software may take advantage of a particular flaw. Using the relationship SRO with the relationship type targets, we can show how a source and target are related using this framework. Table 1-6 highlights some common associations between a source SDO and target SDO using a relationship SRO.

Table 1-6 A Sample of Commonly Used Relationships
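
To make the malware-to-vulnerability link concrete, the following sketch builds the three objects as plain Python dictionaries. It assumes the STIX 2.0 property names `relationship_type`, `source_ref`, and `target_ref`; the object names are hypothetical, and required bookkeeping properties such as creation timestamps are omitted for brevity.

```python
import json
import uuid


def new_id(object_type):
    """Build an identifier in the 'type--UUID' form used by STIX objects."""
    return f"{object_type}--{uuid.uuid4()}"


# Hypothetical SDOs, reduced to the fields needed for the illustration.
malware = {"type": "malware", "id": new_id("malware"), "name": "ExampleLoader"}
vulnerability = {
    "type": "vulnerability",
    "id": new_id("vulnerability"),
    "name": "Hypothetical parser flaw",
}


def relate(source, relationship_type, target):
    """Create a relationship SRO pointing from source to target."""
    return {
        "type": "relationship",
        "id": new_id("relationship"),
        "relationship_type": relationship_type,
        "source_ref": source["id"],
        "target_ref": target["id"],
    }


link = relate(malware, "targets", vulnerability)
print(json.dumps(link, indent=2))
```

The relationship carries no description of either object; it only records the direction and type of the connection, which is why the SDOs themselves must travel with it to be useful.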

Sighting

A sighting SRO provides information about the occurrence of an SDO such as indicator or malware. It’s effectively used to convey useful information about trends and can be instrumental in developing intelligence about how an attacker’s behavior may evolve or respond to mitigating controls. The sighting SRO differs primarily from the relationship SRO in that it can provide additional properties about when an object was first or last seen, how many times it was seen, and where it was observed. The sighting SRO is similar to the observed data SDO in that they both can be used to provide details about observations on the network. However, you may recall that an observed data SDO is not intelligence and provides only the raw data associated with the observation. While you would use the observed data SDO to communicate that you observed the presence of a particular piece of malware on a system, you’d use the sighting SRO to describe that a threat actor is likely to be behind the use of this malware given additional context.
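
The additional properties a sighting carries can be illustrated with a short sketch. It assumes the STIX property names `sighting_of_ref`, `first_seen`, `last_seen`, `count`, and `where_sighted_refs`; the identifiers and timestamps are placeholders, and the code relies on all timestamps sharing one UTC format so that they sort correctly as strings.

```python
def build_sighting(sighted_object_id, observations):
    """Summarize observations as a sighting.

    observations: list of (utc_timestamp, observer_identity_id) tuples.
    """
    if not observations:
        raise ValueError("a sighting needs at least one observation")
    times = sorted(ts for ts, _ in observations)
    observers = sorted({observer for _, observer in observations})
    return {
        "type": "sighting",
        "sighting_of_ref": sighted_object_id,  # what was seen
        "first_seen": times[0],                # when it was first seen
        "last_seen": times[-1],                # when it was last seen
        "count": len(observations),            # how many times
        "where_sighted_refs": observers,       # who or where it was seen
    }


# Placeholder identifiers, not real objects.
INDICATOR = "indicator--00000000-0000-4000-8000-000000000001"
SOC_EAST = "identity--00000000-0000-4000-8000-000000000002"
SOC_WEST = "identity--00000000-0000-4000-8000-000000000003"

sighting = build_sighting(INDICATOR, [
    ("2024-03-02T14:00:00Z", SOC_WEST),
    ("2024-03-01T09:30:00Z", SOC_EAST),
    ("2024-03-03T08:45:00Z", SOC_EAST),
])
```

Tracking how `count` and `last_seen` change across successive sightings is one simple way to notice whether an indicator is fading out or picking up after a mitigating control is put in place.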

Trusted Automated Exchange of Intelligence Information

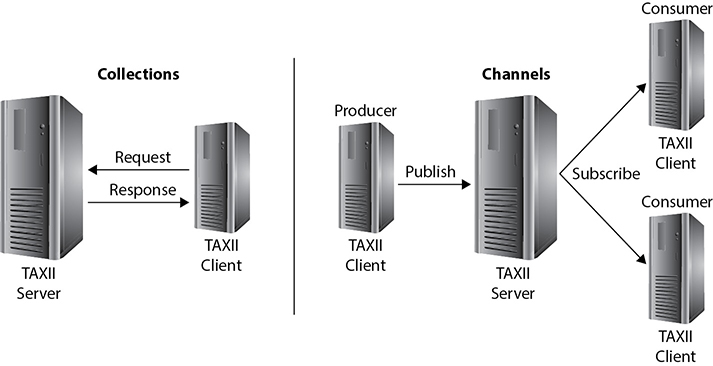

Trusted Automated Exchange of Intelligence Information (TAXII) defines how threat data may be shared among participating partners. It specifies the structure for how this information and accompanying messages are exchanged. Developed in conjunction with STIX, TAXII is designed to support STIX data exchange by providing the technical specifications for the exchange API.

TAXII 1.0 was designed to integrate with existing sharing agreements, including access control limitations, using three primary models: hub and spoke, source/subscriber, and peer-to-peer, as shown in Figure 1-3.

Figure 1-3 Primary models for TAXII 1.0

TAXII 2.0 defines two primary services, collections and channels, to facilitate exchange models. Collections are an interface to a logical store of threat data objects hosted by a TAXII server. Channels, maintained by the TAXII server, provide the pathway for TAXII clients to subscribe to the published data. Figure 1-4 illustrates the relationship between the server and clients for the collection and channel services.

Figure 1-4 Collections and channel service architecture for TAXII 2.0
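
The difference between the two services can be pictured with a small in-memory model. This is a conceptual sketch only and does not implement the TAXII API: the `Collection` and `Channel` classes and their method names are hypothetical. The point is the interaction pattern, where a client asks a collection for stored objects on request, while a channel pushes each newly published object to every subscriber.

```python
class Collection:
    """A logical store of threat data objects that clients query on request."""

    def __init__(self):
        self._objects = []

    def add(self, obj):
        self._objects.append(obj)

    def get_objects(self, object_type=None):
        """Return stored objects, optionally filtered by their type."""
        return [
            o for o in self._objects
            if object_type is None or o["type"] == object_type
        ]


class Channel:
    """A pathway that delivers each published object to all subscribers."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, obj):
        for callback in self._subscribers:
            callback(obj)


# Request-response: the client pulls what it needs, when it needs it.
collection = Collection()
collection.add({"type": "indicator", "id": "indicator--a"})
collection.add({"type": "malware", "id": "malware--b"})
indicators = collection.get_objects("indicator")

# Publish-subscribe: the client registers once and receives new data as it arrives.
received = []
channel = Channel()
channel.subscribe(received.append)
channel.publish({"type": "indicator", "id": "indicator--c"})
```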

OpenIOC

OpenIOC is a framework designed by Mandiant, an American cybersecurity firm that is now part of FireEye. The goal of the framework is to organize information about an attacker’s TTPs and other indicators of compromise in a machine-readable format for easy sharing and automated follow-up. The OpenIOC structure is straightforward, consisting of three main components: IOC metadata, references, and the definition. The metadata component provides useful indexing and reference information about the IOC, including the author name, the IOC name, and a description of the IOC. The reference component is primarily meant to enable analysts to describe how the IOC fits in operationally with their specific environments. As a result, some of the information may not be appropriate to share, because it may refer to internal systems or sensitive ongoing cases. Analysts should be particularly careful to verify that reference information is suitable for sharing before releasing it externally. Finally, the definition component provides the indicator content most useful for investigators and analysts. The definition often contains Boolean logic to communicate the conditions under which the IOC is valid. For example, the requirement for an MD5 hash AND a file size attribute above a certain threshold would have to be fulfilled for the indicator to match.
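
The Boolean logic in a definition can be sketched as a small expression tree. The dictionary layout, field names, and operator names below are assumptions made for illustration and are not the OpenIOC XML schema; the hash shown is simply the MD5 of empty input, used as a placeholder.

```python
def matches(node, artifact):
    """Evaluate a definition tree against one artifact's attributes."""
    op = node["op"]
    if op == "AND":
        return all(matches(child, artifact) for child in node["children"])
    if op == "OR":
        return any(matches(child, artifact) for child in node["children"])
    value = artifact.get(node["field"])
    if value is None:
        return False  # a missing attribute can never satisfy a condition
    if op == "is":
        return value == node["value"]
    if op == "greater-than":
        return value > node["value"]
    raise ValueError(f"unsupported operator: {op}")


# MD5 hash AND file size above a threshold, as in the example above.
DEFINITION = {
    "op": "AND",
    "children": [
        {"op": "is", "field": "md5",
         "value": "d41d8cd98f00b204e9800998ecf8427e"},
        {"op": "greater-than", "field": "size_bytes", "value": 4096},
    ],
}

large_file = {"md5": "d41d8cd98f00b204e9800998ecf8427e", "size_bytes": 8192}
small_file = {"md5": "d41d8cd98f00b204e9800998ecf8427e", "size_bytes": 1024}
```

Here `matches(DEFINITION, large_file)` is true because both conditions hold, while `small_file` shares the hash but fails the size condition, so the indicator does not match.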

Threat Classification

Before we go too deeply into the technical details regarding threats you may encounter while preparing for or responding to an incident, you need to understand the term incident. We use the term to describe any action that results in direct harm to your system or increases the likelihood of unauthorized exposure of your sensitive data. Your first step in knowing that something is harmful and out of place is to understand what normal looks like. In other words, establishing a baseline of your systems is the first step in preparing for an incident. Without your knowing what normal is, it becomes incredibly difficult for you to see the warning signs of an attack. Without a baseline for comparison, you will likely know that you’ve been breached only when your systems go offline. Making a plan for incident response isn’t just a good idea—it may be compulsory, depending on your operating environment and line of business. As your organization’s security expert, you will be entrusted to implement technical measures and recommend policy that keeps personal data safe while keeping your organization out of court.
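
One simple way to picture a baseline is as a set of historical measurements against which new observations are compared. The sketch below is an assumption-laden illustration rather than a recommended detection method: the login counts are invented, and the three-standard-deviation threshold is an arbitrary starting point that a real team would tune.

```python
from statistics import mean, stdev


def is_anomalous(baseline, observed, threshold=3.0):
    """Flag a value that sits far outside the baseline's normal spread."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical daily counts of successful logins during a normal week.
normal_week = [120, 131, 118, 125, 129, 122, 127]

typical_day = is_anomalous(normal_week, 126)
unusual_day = is_anomalous(normal_week, 480)
```

With these numbers, a day with 126 logins falls within the normal range, while a day with 480 is flagged. Without the recorded week there would be nothing to compare 480 against.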

Known Threats vs. Unknown Threats

In attempting to find malicious activity, antivirus software and more sophisticated security devices work by using signature-based and anomaly-based methods of detection. Signature-based systems rely on prior knowledge of a threat, which means that these systems are only as good as the historical data companies have collected. Although these systems are useful for identifying threats that already exist, they don’t help much with regard to threats that constantly change form or have not been previously observed; these will slip by, undetected.

The alternative is to use a solution that looks at what the executable is doing, rather than what it looks like. This kind of system relies on heuristic analysis to observe the commands the executable invokes, the files it writes, and any attempts to conceal itself. Often, these heuristic systems will sandbox a file in a virtual operating system and allow it to perform what it was designed to do in that separate environment.
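
The contrast between the two approaches can be shown with a toy example. Everything here is hypothetical: the payload bytes stand in for a real file, and the behavior names and weights are invented for illustration, not taken from any product.

```python
import hashlib

# Signature-based: a set of hashes of previously observed malicious files.
KNOWN_BAD_HASHES = {hashlib.sha256(b"malicious payload v1").hexdigest()}


def signature_match(data):
    """Return True only if this exact content has been seen before."""
    return hashlib.sha256(data).hexdigest() in KNOWN_BAD_HASHES


# Heuristic: score what the executable does while running in a sandbox.
BEHAVIOR_WEIGHTS = {
    "spawns_shell": 2,
    "writes_to_startup_folder": 3,
    "disables_logging": 4,
}


def heuristic_score(behaviors):
    """Sum the weights of the observed behaviors; unknown ones score zero."""
    return sum(BEHAVIOR_WEIGHTS.get(b, 0) for b in behaviors)


original = b"malicious payload v1"
variant = b"malicious payload v2"  # a one-character change to the same code

seen_before = signature_match(original)
variant_caught = signature_match(variant)
variant_score = heuristic_score(["spawns_shell", "disables_logging"])
```

The variant differs by a single character, so its hash no longer matches and the signature check misses it. The behavior score is unaffected by that change, because it depends on what the sample does rather than what it looks like.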

As malware is evolving, security practices are shifting to reduce the number of assumptions made when developing security policy. A report that indicates that no threat is present just means that the scanning engine couldn’t find a match, and a clean report isn’t worth much if the methods of detection aren’t able to detect the newest types of threats. In other words, the absence of evidence is not evidence of absence. Vulnerabilities and threats are being discovered at a rate that outpaces what traditional detection technologies can spot. Because threats still exist, even if we cannot detect them, we must either evolve our detection techniques or treat the entire network as an untrusted environment. There is nothing inherently wrong about the latter; it just requires a major shift in thinking about how we design our networks.

Zero Day

The term zero day, once used exclusively among security professionals, is quickly becoming part of the public dialect. It refers to either a vulnerability or exploit never before seen in public. A zero-day vulnerability is a flaw in a piece of software that the vendor is unaware of and thus has not issued a patch or advisory for. The code written to take advantage of this flaw is called the zero-day exploit. When writing software, vendors often focus on providing usability and getting the most functional product out to market as quickly as possible. This often results in products that require numerous updates as more users interact with the software. Ideally, the number of vulnerabilities decreases as time progresses, as software adoption increases, and as patches are issued. However, this doesn’t mean that you should let your guard down because of some sense of increased security. Rather, you should be more vigilant; even if an environment has protective software in place, it’s defenseless should a zero-day exploit be used against it.

Preparation

Preparing to face unknown and advanced threats like zero-day exploits requires a sound methodology that includes technical and operational best practices. The protection of critical business assets and sensitive data should never be trusted to a single solution. You should be wary of solutions that suggest they are one-stop shops for dealing with these threats, because you are essentially placing the entire organization’s fate in a single point of failure. Although the word “response” is part of your incident response plan, your team should develop a methodology that includes proactive efforts as well. This approach should involve active efforts to discover new threats that have not yet impacted the organization. Sources for this information include research organizations and threat intelligence providers. The SANS Internet Storm Center and the CERT Coordination Center at Carnegie Mellon University are two great resources for discovering the latest software bugs. Armed with new knowledge about attacker trends and techniques, you may be able to detect malicious traffic before it has a chance to do any harm. Additionally, you will give your security team time to develop controls to mitigate security incidents, should a countermeasure or patch not be available.

Advanced Persistent Threat

In 2003, analysts discovered a series of coordinated attacks against the Department of Defense, Department of Energy, NASA, and the Department of Justice. Discovered to have been in progress for at least three years by that point, the actors appeared to be on a mission and took extraordinary steps to hide evidence of their existence. These events, known later as “Titan Rain,” would be classified as the work of an advanced persistent threat (APT), which refers to any number of stealthy and continuous computer hacking efforts, often coordinated and executed by an organization or government with significant resources. The goal for an APT is to gain and maintain persistent access to target systems while remaining undetected. Attack vectors often include spam messages, infected media, social engineering, and supply-chain compromise. The support infrastructure behind their operations, their TTPs during operations, and the types of targets they choose are all part of what makes APTs stand out. It’s useful to analyze each word in the APT acronym to identify the key discriminators between APT and other actors.

Advanced

The operators behind these campaigns are often well equipped and use techniques that indicate formal training and significant funding. Their attacks indicate a high degree of coordination between technical and nontechnical information sources. These threats are often backed with a full spectrum of intelligence support, from digital surveillance methods to traditional techniques focused on human targets.

Persistent

Because these campaigns are often coordinated by government and military organizations, it shouldn’t be surprising that each operator is focused on a specific task rather than rooting around without direction. Operators will often ignore opportunistic targets and remain focused on their piece of the campaign. This behavior implies strict rules of engagement and an emphasis on consistency and persistence above all else.

Threat

APTs do not exist in a bubble. Their campaigns show capability and intent, aspects that highlight their use as the technical implementation of a political plan. Like a military operation, APT campaigns often serve as an extension of political will. Although their code might be executed by machines, the APT framework is designed and coordinated by humans with a specific goal in mind. Because of the complex nature of APTs, it may be difficult to handle them alone. The concept of automatic threat intelligence sharing is a recent development in the security community. Because speed is often the discriminator between a successful and an unsuccessful campaign, many vendors provide solutions that automatically share threat data and orchestrate technical countermeasures for them.

Threat Actors

Threat actors are not equal in terms of motivation and capability; neither are they all necessarily overtly malicious. You will learn that the term “threat actor” is wide-ranging and can be categorized by sophistication as well as intent. We’ll describe threat actors using several groups, but it’s important for you to understand that these classifications are not mutually exclusive. Threat actors and threat actor groups may span across multiple classifications, usually depending on the targets and timeframe of the activity we’re considering.

Nation-State Threat Actors

Nation-state threat actors are frequently among the most sophisticated adversaries, with dedicated infrastructure, training resources, and operational support behind their activities. Their activities are characterized by extensive planning and coordination and often reflect the strong government or military influence behind them. Like many government-supported operations, nation-state threat actor activities are often conducted to achieve political, economic, or strategic military goals. Identifying and tracking these actors can be difficult, since many of the individuals involved use common techniques across teams, operate behind robust infrastructure, and use methods to actively obfuscate their behavior. Alternatively, they may use toolsets that are rarely seen or are impossible to detect at the time of the security event, such as a zero-day exploit.

There are a few interesting notes about nation-state operations that make them unique. The first is that, depending on the countries involved, businesses can quickly become a part of the activity in either a direct or supporting capacity. Second, more sophisticated threat actors may incorporate false flag techniques, performing activities that lead defenders to falsely attribute their activity to another. Given the high degree of coordination that some nation-state actor activities require, this is becoming a frequent challenge for defenders to address. Finally, there’s an aspect of perspective worth noting here: one nation’s intelligence apparatus is another nation’s malicious actor.

Hacktivists

Hacktivists are threat actors that typically operate with less resourcing than their nation-state counterparts, but nonetheless work to coordinate efforts to bring light to an issue or promote a cause. They often rely on readily available tools and mass participation to achieve their desired effects against a target. Though not always the case, their actions often cause little lasting damage to their targets. Hacktivists are also known to use social media and defacement tactics to affect the reputation of their targets, hoping to erode public trust and confidence in them. Unlike other threat actors, hacktivists rarely seek to operate with stealth and look to bring attention to their cause along with notoriety for their own organization. As defenders, knowing that hacktivists frequently employ techniques to affect the availability of a system, we can use defensive techniques to mitigate denial-of-service (DoS) attacks. Furthermore, we can reduce the likelihood of successful social engineering efforts or unauthorized access to services and applications by enforcing multifactor authentication on system and social media accounts.

Organized Crime

Threat actors operating on behalf of organized crime groups are becoming an increasingly visible challenge for enterprise defenders to confront. Whether targeting theft of intellectual property or personal user data, these criminals’ primary objective is to make money by selling stolen data. When compared to nation-state actors, organized crime may have a more moderate sophistication level, but as financial gain is often the goal, attacks often include the use of cryptojacking, ransomware, and bulk data exfiltration techniques. Despite having a well-understood operational model, organized crime threat actors still contribute to a significant percentage of security incidents. This is due in part to the comparatively low-risk, high-reward nature of their activities and the ease with which they can hide their activities online. Moreover, the rise in usage of digital currencies worldwide has allowed these criminals to launder vast sums of money more easily.

Insider Threat Actors

Insider threat actors work within an organization and represent a particularly high risk of causing catastrophic damage due to their privileged access to internal resources. Because access is often an early goal for threat actors, having access as a result of role or position in a company often means that traditional perimeter-focused security mechanisms are not effective in detecting and stopping their destructive activity. To address internal threats, it’s critical that the security program is designed in a way that adheres to the principle of least privilege as it relates to access. Furthermore, network security devices should be configured to allow or deny access based on robust access control rules and not simply as a result of a device’s location within the network. Insider threats are unique in that they may be influenced by a combination of factors, from personal, to organizational, to technical. Accordingly, the solution to address them cannot be one-dimensional and must include policies, procedures, and technologies to mitigate the overall threat. For example, mandating annual training on cybersecurity awareness along with implementing technical controls to prevent unauthorized file access have been shown to reduce the occurrence and impact of insider threat events.
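
The idea of deciding access by explicit rule rather than by network location can be sketched as follows. The roles, resources, and grant table are hypothetical, and a real deployment would draw them from an identity and access management system rather than a hard-coded dictionary.

```python
# Hypothetical grants: each role gets only the actions it needs (least privilege).
GRANTS = {
    ("analyst", "case-files"): {"read"},
    ("case-manager", "case-files"): {"read", "write"},
}


def is_allowed(role, resource, action, request_is_internal):
    """Decide access from explicit grants only.

    request_is_internal is accepted but deliberately ignored: being inside
    the network perimeter confers no access by itself.
    """
    return action in GRANTS.get((role, resource), set())


analyst_read = is_allowed("analyst", "case-files", "read", request_is_internal=False)
analyst_write = is_allowed("analyst", "case-files", "write", request_is_internal=True)
```

The analyst can read from anywhere the rule permits, but cannot write even from an internal address, because no grant exists for that action.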

Intentional

Intentional insider threat actors may be employees, contractors, or any other business partners with established access to internal services, or any of these who have severed ties with the organization but have not lost access. Intentional actors behave in a manner that may be damaging to the organization, through data theft, data deletion, or vandalism. In many cases, these malicious insiders look to act against the organization with the goal of personal financial gain, revenge, or both. Malicious insiders looking to steal intellectual property in order to facilitate a secondary income source will typically remove data slowly to avoid detection. A disgruntled employee, on the other hand, may work deliberately to sabotage an organization’s critical systems. As defenders, we should be aware of anomalous activity such as high-volume network activity or indiscriminate file access attempts, especially following an employee’s resignation or firing.

Unintentional

It’s easy to think of insider threats as actors with malicious intent, but other factors may lead to an increased insider threat risk. Lack of security education, negligence, and human error are among the top contributors to unintentional insider security events. Such actions, though unwitting, may cause as much harm as those done intentionally by other threat actors. Hanlon’s razor famously expresses that one shouldn’t attribute to malice that which can be adequately explained by ignorance (or stupidity, per the actual adage). Mistakes happen, and it may be counterproductive for a security team to treat every user who is responsible for a security incident as a willful malicious actor. Not only would this ensure that the user would have a difficult learning experience, but it may lull the security team into the perception that the root issue is sufficiently addressed. If, for example, there are ten occurrences of data spillage that all point to a similar type of user error, perhaps the problem is less about the user and more about the usability of the system.

Intelligence Cycle

The intelligence cycle is a core process used by most government and business intelligence and security teams to process raw signals into finished intelligence for use in decision-making. Depending on the environment, the process is a five- or six-step method of adding clarity to a dynamic and ambiguous environment. Figure 1-5 shows the five-step cycle. Among the many benefits of its application are increased situational awareness about the environment and the delineation of easily understood work efforts. Importantly, the intelligence cycle is also continuous and does not require perfect knowledge about one phase to begin the next. In fact, the cycle is best used when output from one phase is used to feed the next while also refining the previous. For example, you may discover a new bit of information in a later stage that can be used to improve the inputs into the overall cycle.

Figure 1-5 The five-step intelligence cycle

Requirements

The requirements phase involves the identification, prioritization, and refinement of uncertainties about the operational environment that the security team must resolve to accomplish its mission. It includes key tasks related to the planning and direction of the overall intelligence effort. In simple terms, requirements are steps that are needed. The results of this phase are not always derived from authority, but are determined by aspects of the customer’s operations as well as the capabilities of the intelligence team. As gaps in understanding are identified and prioritized, analysts will move on to figuring out ways to close these gaps, and a plan is set forth as to how they will get the data they need. This in turn will drive the collection phase.

Collection

At this phase, the plan that was previously defined is executed, and data is collected to fill the intelligence gap. Unlike a collection effort in a traditional intelligence setting, this effort at a business will likely not involve dispatching of HUMINT or SIGINT assets, but will instead mean the instrumentation of technical collection methods, such as setting up a network tap or enabling enhanced logging on certain devices. Raw data may also arrive from sources outside of the network, such as news reports, social media, and public documents, or from closed and proprietary sources.

Analysis

Analysis is the act of making sense of what you observe. With the use of automation, highly trained analysts will try to give meaning to the normalized, decrypted, or otherwise processed information by adding context about the operational environment. They then prioritize it against known requirements, improve those requirements, and potentially identify new collection sources. The product of this phase is finished, actionable intelligence that is useful to the customer. Analysis can be a difficult process, but there are many structured analytical techniques that we may use to mitigate the effects of biases, to challenge judgments, and to manage uncertainty.

Dissemination

Distributing the requested intelligence to the customer occurs at the dissemination phase. Intelligence is communicated in whichever manner was previously identified in the requirements phase and must provide a clear way forward for the customer. The customer, who may be the security team itself, can then use these analytical products and recommendations to improve defense, gain a greater understanding of an adversary’s social or computer network for counterintelligence, or even move toward legal action. This also highlights an important concept in intelligence: the product provided must be useful. The words “actionable intelligence” are often misused, because intelligence is always meant to be actionable.

Feedback

Once intelligence is disseminated, more questions may be raised, which leads to additional planning and direction of future collection efforts. At each phase of the cycle, analysts are evaluating the quality of their input and outputs, but explicitly requesting feedback from consumers is extremely important to enable the security team to improve its activities and better align products to meet consumers’ evolving intelligence needs. This phase also enables analysts to review their own analytical performance and to think about how to improve their methods for soliciting information, interacting with internal and external partners, and communicating their findings to the decision-makers.

Commodity Malware

Commodity malware includes any pervasive malicious software that’s made available to threat actors via sale. Often made available in underground communities, this type of malware enables criminals to focus less on improving their technical sophistication and more on optimizing their illegal operations. There’s a well-known military axiom that states that great organizations do routine things routinely well. Correspondingly, while commodity malware may not always be the most advanced or stealthy software, good security teams will know how to handle this malware quickly and effectively.

Information Sharing and Analysis Communities

Information sharing communities were created to make threat data and best practices more accessible by lowering the barrier to entry and standardizing how threat information is shared and stored between organizations. While information sharing occurs frequently between industry peers, it’s usually in an informal and ad hoc fashion. One of the most effective formal methods of information sharing comes through information sharing and analysis centers (ISACs). ISACs, as highlighted in Table 1-7, are industry-specific bodies that facilitate sharing of threat information and best practices relevant to the specific and common infrastructure of the industry.

Table 1-7 A List and Description of Several ISACs

Another mechanism to achieve similar goals has been made possible via a 2015 executive order by then US President Barack Obama in the creation of Information Sharing and Analysis Organizations (ISAOs). These public information sharing organizations are similar to those of ISACs but without the alignment to a specific industry. ISAOs, by definition, are designed to be voluntary, transparent, inclusive, and flexible. Additionally, they are strongly encouraged to provide actionable products.

Chapter Review

Threat intelligence is not a “one-size-fits-all” solution, and it is not meant to be a perfect guide as to what to do next. If it were, we’d all be out of jobs, since security would be solved in short order. The usefulness of threat intelligence to your business depends on how well integrated business requirements are with intelligence requirements and the security operations effort. Threat actors of all kinds are constantly looking to gain advantages wherever they can find them. As security professionals, we’re looking to set the conditions to disrupt their decision cycles with efficient and well-informed choices about how we prepare and defend our organizations. Developing an intelligence program, sharing indicators with peer organizations, and placing effective controls in defense of a complex network environment are all things we can do to help us achieve these goals.

Questions

1. Which of the following is not considered a form of passive or open source intelligence reconnaissance?

A. Google hacking

B. nmap

C. ARIN queries

D. nslookup

2. Which of the following is the term for collection and analysis of publicly available information appearing in print or electronic form?

A. Signals intelligence

B. Covert intelligence

C. Open source intelligence

D. Human intelligence

3. Information that may not be shared with parties outside of the specific exchange, meeting, or conversation in which it was originally disclosed is designated by which of the following?

A. TLP:RED

B. TLP:AMBER

C. TLP:GREEN

D. TLP:WHITE

4. Which of the following sources will most often produce intelligence that is most relevant to an organization?

A. Open source intelligence

B. Deep and dark web forums and communications platforms

C. The organization’s network

D. Closed source vendor data

5. Which of the following is not a characteristic of high-quality threat intelligence source data?

A. Timeliness

B. Transparency

C. Relevancy

D. Accuracy

6. In the STIX 2.0 framework, which object may be used to represent individuals, organizations, or groups?

A. Campaign

B. Persona

C. Intrusion set

D. Identity

7. In which phase of the five-step intelligence cycle would an analyst communicate his or her findings to the customer?

A. Communication

B. Dissemination

C. Collection

D. Feedback

8. Threat actors whose activities lead to increased risk as a result of their privileged access or employment are best described by what term?

A. Unwilling participant

B. Nation-state actor

C. Hacktivist

D. Insider threat

Answers

1. B. nmap is a scanning tool that requires direct interaction with the system under test. All the other responses allow a degree of anonymity by interrogating intermediary information sources.

2. C. Open source intelligence, or OSINT, is free information that’s collected in legitimate ways from public sources such as news outlets, libraries, and search engines. It should be used alongside intelligence gathered from closed sources to answer key intelligence questions.

3. A. TLP:RED information is limited to those present at a particular engagement, meeting, or joint effort. In most circumstances, TLP:RED should be exchanged verbally or in person. The use of TLP:RED outside of approved parties could lead to impacts on a party’s privacy, reputation, or operations if misused.

4. C. An organization’s network will inherently have the most relevant threat data available of all the sources listed.

5. B. Transparency is not a characteristic of high-quality threat intelligence source data. In addition to being complete, good threat intelligence often has three characteristics: timeliness, relevancy, and accuracy.

6. D. An identity is a STIX domain object that represents individuals, organizations, or groups. The object may be specific and include the names of the person or organization referenced, or it may be used to identify an entire industry sector, such as transportation.

7. B. Distributing the requested intelligence to the customer occurs at the dissemination phase. The product may be used to gain a greater understanding of an adversary’s motivation or strengthen internal defenses, or to support legal action.

8. D. Insider threat actors are those who work within an organization and represent a particularly high risk of causing catastrophic damage due to their privileged access to internal resources.