CHAPTER 2

Threat Intelligence in Support of Organizational Security

In this chapter you will learn:

• Types of threat intelligence

• Attack frameworks and their use in leveraging threat intelligence

• Threat modeling methodologies

• How threat intelligence is best used in other security functions

By “intelligence” we mean every sort of information about the enemy and his country—the basis, in short, of our own plans and operations.

—Carl von Clausewitz

Depending on the year and locale, fire departments may experience around a 10 percent false alarm rate resulting from accidental alarm tripping, hardware malfunction, and nuisance behavior. Given the massive amount of resources required for a response and the scarce and specialized nature of the responders, this presents a significant issue for departments to manage. After all, they cannot respond to a perceived emergency with anything less than their full attention. As with fire departments, false alarms are more than just an annoyance for security operations teams. As the volume of data that traverses the network increases, so do the alerts and logs that have to be triaged, interpreted, and actioned, and the chance of any of those alerts being a false alarm also rises. Unfortunately, the specialized security teams that work endlessly to protect an enterprise from threats aren’t growing at the same pace.

Although organizations invest in new types of threat detection technologies, they may only add to the already overwhelming amount of noise that exists, resulting in alert fatigue. Not only are analysts simply unable to assess, prioritize, and act upon every alert that comes in, but they may often ignore some of them because of the high rate of false positives. Much like the townspeople who responded to the boy crying “wolf!” only to learn that there was no wolf, security responders may learn to ignore alarm bells over time if no malicious activity exists.

One effective way to mitigate the dangers of overwhelming alerts and the often-associated alert fatigue is to integrate a threat intelligence program into all aspects of security operations. As covered in Chapter 1, threat intelligence is about adding context to internal signals to make risk-based decisions. Whether these choices occur at the tactical level in the security operations center or at the strategic level in the boardroom, good threat intelligence makes for a far better-informed security professional. In this chapter we’ll explore frameworks and best practices for integrating threat intelligence into your security program to improve operations at every level.

Levels of Intelligence

In his doctrine on characterizing the adversary, famed Prussian general Carl von Clausewitz states that the adversary is a thinking, animate entity that reacts to the decisions of his enemy. The essence of developing a strong operational plan is to discover the enemy’s strategy, develop your own plan to confront the enemy, and execute it with precision. The delineation of levels of war has become a keystone in the military decision-making process for armies throughout the world. The key concepts of providing intelligence at these levels can also be applied to cybersecurity.

At the highest level, organizations think about their conduct in a strategic manner, the results of which should impair adversaries’ abilities to carry out what they’re trying to do. Strategic effects should aim to disrupt the enemy’s ability to operate by neutralizing its centers of gravity or key resource providers. Strategic threat intelligence therefore should support decisions at this level by delivering products that are anticipatory in nature. These products will provide a comprehensive view of the environment, identify the key actors, and offer a glimpse into the future based on recommended courses of action or inaction. This type of intelligence is often designed to inform the decisions of senior leaders in an organization and is accordingly not overtly technical. It’s aimed at addressing the concerns of that particular audience, covering topics such as regulatory and financial impacts to the organization.

The application of a company’s cybersecurity strategy occurs at the operational level, and planning will address concepts such as what the organization is trying to defend, from whom, for what duration, and with what capabilities. Before defining exactly how all this is to happen, defenders must determine what major efforts need to be in place to accomplish strategic goals, what resources might be needed to accomplish them, and what defines the nature of the problem. Without this direction, organizations will fail to adequately confront the challenges posed by the adversary, possibly squandering resources and frustrating security analysts. Operational threat intelligence products will provide insight into conditions particular to the environment that the organization is looking to defend. Products of this type will inform decision-makers about how best to allocate resources to defend against specific threats.

Finally, at the lowest level of war are the engagements between attacker and defender. Decisions at the tactical level are focused on how, exactly, a defender will engage with the adversary, to include any technical countermeasures. The results of these activities may sometimes ripple out broadly to affect operational and strategic decision-making. For example, a decision to block a service on a network may be required in response to a particularly damaging ongoing attack. If that decision then affects legitimate operations, or how the organization shares data with a strategic partner, it clearly extends beyond the immediate engagement at hand, and its second- and third-order effects will need to be considered moving forward. Tactical threat intelligence focuses on attacker tactics, techniques, and procedures (TTPs) and relates to the specific activity observed. These products are highly actionable and the results of the follow-up decisions they inform will be used by operational staff to ensure that technical controls and processes are in place to protect the organization moving forward.

Attack Frameworks

Security teams use frameworks as analytical tools to add structure when thinking about the lifecycle of a security incident and the actors involved. Frameworks allow for broad understanding of the key concepts, timelines, and motivations of attackers by providing consistent language and syntax to communicate most aspects of an attack. With knowledge of how attackers think, the tools they use, and where they might employ a particular technique, defenders are in a better position to make a decision earlier and can reduce the opportunity for an attacker to cause disruption.

MITRE ATT&CK

The MITRE Corporation manages a significant number of federally funded research groups throughout the United States. It has developed a number of systems and frameworks that are very important for the security industry, to include the Cyber Observable eXpression (CybOX) framework, the Common Vulnerabilities and Exposures (CVE) system, the Trusted Automated Exchange of Intelligence Information (TAXII), and Structured Threat Information Expression (STIX), some of which were covered in Chapter 1.

In 2013, MITRE began development on a model that would allow US government agencies and industry security teams to share information about attacker TTPs with one another in an effective manner. The model would come to be known as the Adversarial Tactics, Techniques, and Common Knowledge (ATT&CK) framework. It’s currently one of the most effective methods of tracking adversarial behavior over time, based on observed activity shared from the security community. Within ATT&CK are three flavors: Enterprise ATT&CK, PRE-ATT&CK, and Mobile ATT&CK. As you may be able to guess from the names, PRE-ATT&CK models the TTPs that an attacker may use before conducting an attack, while Mobile ATT&CK models the TTPs an attacker may use to gain access to mobile platforms. We’ll take some time to explore Enterprise ATT&CK, the most widely used of the three and the most relevant to enterprise cybersecurity analysts.

The framework serves as an encyclopedia of previously observed tactics from bad actors. These behaviors are divided into 12 categories based on real-world observations:

• Initial Access The steps the adversary takes to get into your network

• Execution The methods the adversary uses to run malicious code on a local or remote system

• Persistence The means by which the adversary maintains a presence on your systems

• Privilege Escalation The techniques the adversary uses to gain positions of higher privilege

• Defense Evasion The maneuvers the adversary uses to avoid detection

• Credential Access The way the adversary gathers credentials such as account names, passwords and tokens

• Discovery The way in which the adversary gains an understanding of your network layout and its technologies

• Lateral Movement The techniques the adversary uses to pivot and gain access to other systems on the network

• Collection The methods the adversary uses to capture artifacts on hosts and servers

• Command and Control The techniques the adversary uses to communicate with systems on a victim network

• Exfiltration The methods the adversary employs to move data out of the network

• Impact The steps an adversary takes to prevent access to, damage or destroy data on your network

These activities are communicated using a common language so that different teams and organizations worldwide can use the same reference to describe activities that might otherwise have other names. This aspect of the framework makes it particularly useful for prioritizing behaviors to focus on. What makes this framework so useful for defenders is that the behaviors are not limited to red-teaming or theoretical attacks, but rather are those that adversaries have conducted and are likely to conduct again, based on observations in the wild. Furthermore, the model itself is free and built for global accessibility, reducing barriers to participation and usage, such as team maturity or organizational resources.

Although there are only 12 tactics in the Enterprise ATT&CK framework, there are hundreds of techniques that offer details about activity related to specific operating systems including Windows, macOS, and Linux, and several cloud platforms. Many of the techniques listed under the tactic categories can be used in other categories. A useful way to determine when and how a particular technique can be used is by browsing MITRE’s open source web app, MITRE ATT&CK Navigator (https://mitre.github.io/attack-navigator/enterprise/). It allows for basic navigation of all of the framework’s components from one pane. A user can select a particular technique and see it highlighted across multiple categories, as shown in Figure 2-1.

Figure 2-1 MITRE ATT&CK Navigator with technique highlights

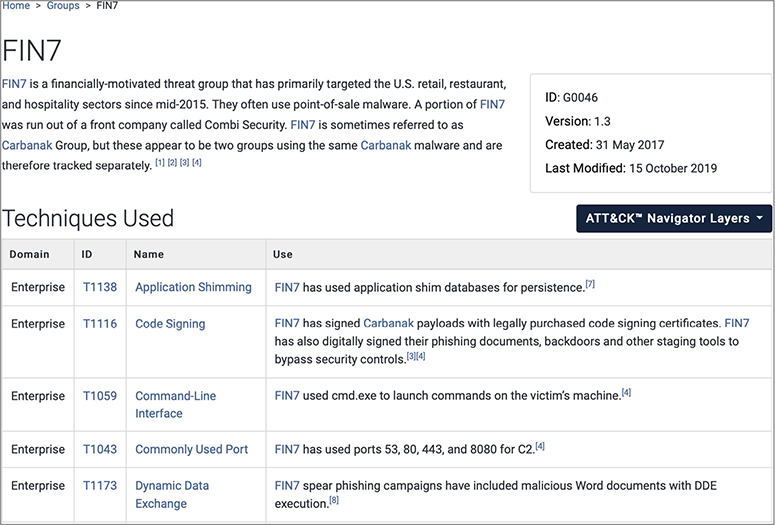

Accompanying each of the techniques are details that will be very useful for defenders. Using the group FIN7 as an example, shown in Figure 2-2, you can see that the information provided includes a unique identification number, dates the group was first observed, and a brief description that often includes alternate group names. The information page goes on to list all of the techniques associated with that group. An analyst can then select a technique to find examples of the technique as seen in the wild, detection methods, and mitigations.

Figure 2-2 Snapshot of FIN7 threat actor group

Using the framework has quantitative benefits for the security team as well. Beyond simply being aware of the most commonly used attack techniques, a security team can highlight the TTPs associated with the groups it considers high priority, identify overlaps, and produce an effective visual reference. For example, security analysts at a bank may use the framework to highlight the TTPs used by FIN7 and Cobalt Group, two threat actor groups known for targeting financial institutions. Using separate colors to identify the groups, let’s say red for FIN7 and blue for Cobalt Group, analysts can overlay the color-coded TTPs on a single matrix to determine which techniques to prioritize defenses against, in this case identified in purple. Additionally, structuring TTPs in this way enables defenders to measure both the adversaries they’re tracking and the countermeasures they’ve put in place. Presenting hard figures alongside trends observed over time to show progress in addressing specific TTPs often resonates well with senior leadership.
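The red, blue, and purple overlay just described amounts to simple set arithmetic, and a short Python sketch may help make that concrete. The technique IDs below follow the ATT&CK naming pattern, but the assignment of techniques to each group is a placeholder we made up for illustration; it is not an extract of ATT&CK data, and the function name is hypothetical.

```python
# Illustrative only: these group-to-technique assignments are placeholders,
# not an authoritative extract of ATT&CK data.
fin7_ttps = {"T1566", "T1059", "T1053", "T1105"}
cobalt_group_ttps = {"T1566", "T1059", "T1021", "T1547"}


def overlay(red, blue):
    """Color each technique the way a layered matrix would."""
    return {
        "red": sorted(red - blue),     # seen only for the first group
        "blue": sorted(blue - red),    # seen only for the second group
        "purple": sorted(red & blue),  # shared by both, so prioritize these
    }


layers = overlay(fin7_ttps, cobalt_group_ttps)

# A simple "hard figure" for reporting: the share of all tracked
# techniques that both groups have in common.
shared_ratio = len(layers["purple"]) / len(fin7_ttps | cobalt_group_ttps)

print("Prioritize:", layers["purple"])
print(f"{shared_ratio:.0%} of tracked techniques are shared by both groups")
```

In practice the sets would be populated from the group pages in ATT&CK rather than typed by hand, and the same ratio tracked over time gives the kind of trend figure that tends to land well with leadership.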

The Diamond Model of Intrusion Analysis

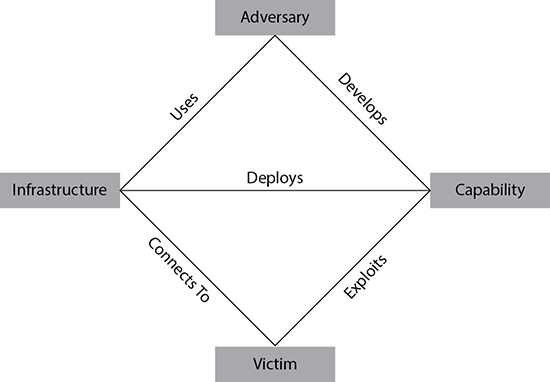

While looking for an effective way to analyze and track the characteristics of cyberintrusions by advanced threat actors, security professionals Sergio Caltagirone, Andrew Pendergast, and Christopher Betz developed the Diamond Model of Intrusion Analysis, shown in Figure 2-3, which emphasizes the relationships and characteristics of four basic components: Adversary, Capability, Victim, and Infrastructure. The components are represented as vertices on a diamond, with connections between them. Using the connections between these entities, you can use the model to describe how an adversary uses a capability in an infrastructure against a victim. A key feature of the model is that it enables defenders to pivot easily from one node to the next in describing a security event. As entities are populated in their respective vertices, an analyst will be able to read across the model to describe the specific activity (for example, FIN7 [the Adversary] uses phish kits [the Capability] and actor-registered domains [the Infrastructure] to target bank executives [the Victim]).

Figure 2-3 The Diamond Model

It’s important to note that the model is not static, but rather adjusts as the adversary changes TTPs, infrastructure, and targeting. Because of this, this model requires a great deal of attention to ensure that the details of each component are updated as new information is uncovered. If the model is used correctly, analysts can easily pivot across the edges that join vertices in order to learn more about the intrusion and explore new hypotheses.
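To make the idea of reading across the vertices and pivoting between them more tangible, here is a minimal Python sketch. The class, the `pivot` helper, and the second and third events are our own hypothetical constructs for illustration; they are not part of the Diamond Model paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiamondEvent:
    """One intrusion event, with a value at each of the four vertices."""
    adversary: str
    capability: str
    infrastructure: str
    victim: str

    def describe(self):
        # Read across the model as a single sentence.
        return (f"{self.adversary} uses {self.capability} and "
                f"{self.infrastructure} to target {self.victim}")


def pivot(events, vertex, value):
    """Return every event that shares `value` at the named vertex."""
    return [e for e in events if getattr(e, vertex) == value]


events = [
    DiamondEvent("FIN7", "phish kits", "actor-registered domains",
                 "bank executives"),
    # The next two events are hypothetical, added to show a pivot.
    DiamondEvent("unknown", "phish kits", "actor-registered domains",
                 "payroll staff"),
    DiamondEvent("unknown", "web shell", "compromised web server",
                 "retail site"),
]

print(events[0].describe())

# Pivot on infrastructure: which other events used the same kind of domains?
related = pivot(events, "infrastructure", "actor-registered domains")
print(len(related), "events share that infrastructure")
```

Pivoting on a shared vertex is how an analyst might form a new hypothesis, for example that the unattributed payroll phishing event belongs to the same adversary.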

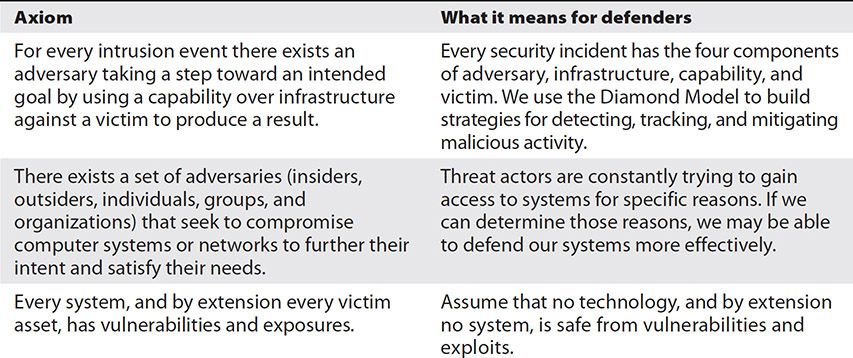

As we use this model to capture and communicate details about malicious activity we may encounter, we should also consider the seven axioms that Caltagirone describes as capturing the nature of all threats. Table 2-1 lists the axioms and what they mean for defenders.

Table 2-1 Diamond Model Axioms

Kill Chain

The kill chain is a phase-based model that categorizes the activities that an enemy may conduct in a kinetic military operation. One of the first and most commonly used kill chain models in the military is the 2008 F2T2EA, or Find, Fix, Track, Target, Engage, and Assess. Driven by the need to improve response times for air strikes, then Air Force Chief of Staff General John Jumper pushed for the development of an agile and responsive framework to achieve his goals, and thus F2T2EA was born. Similar to the military kill chain concept, the cyber kill chain defines the steps used by cyberattackers in conducting their malicious activities. The idea is that by providing a structure that breaks an attack into stages, defenders can pinpoint where along the lifecycle of an attack an activity is and deploy appropriate countermeasures. The kill chain is meant to represent the deterministic phases adversaries need to plan and execute in order to gain access to a system successfully.

The Lockheed Martin Cyber Kill Chain is perhaps the most well-known version of the kill chain as applied to cybersecurity operations. It was introduced in a 2011 whitepaper authored by security team members Michael Cloppert, Eric Hutchins, and Rohan Amin. Using their experience from many years on intelligence and security teams, they describe a structure consisting of seven stages of an attack. Figure 2-4 shows the progression from stage to stage in the Cyber Kill Chain. Since the model is meant to approach attacks in a linear manner, if defenders can stop an attack early on at the exploitation stage, they can have confidence that the attacker is far less likely to have progressed to further stages. Defenders can therefore avoid executing a full incident response plan. Furthermore, understanding the phase progression, typical behavior expected at each phase, and inherent dependencies in the overall process allows defenders to take appropriate measures to disrupt the kill chain.

Figure 2-4 The Lockheed Martin Cyber Kill Chain

The kill chain begins with Reconnaissance, the activities associated with getting as much information as possible about a target. Reconnaissance will often show the attacker focusing on gaining understanding about the topology of the network and key individuals with system or specific data access. As described in Chapter 1, reconnaissance actions (sometimes referred to as recon) can be passive or active in nature. Often an adversary will perform passive recon to acquire information about a target network or individual without direct interaction. For example, an actor may monitor for new domain registration information about a target company to get technical points of contact information. Active reconnaissance, on the other hand, involves more direct methods of interacting with the organization to get a lay of the land. An actor may scan and probe a network to determine technologies used, open ports, and other technical details about the organization’s infrastructure. The downside (for the actor) is that this type of activity may trigger detection rules designed to alert defenders on probing behaviors. It’s important for the defender to understand what types of recon activities are likely to be leveraged against the organization and develop technical or policy countermeasures to mitigate those threats. Furthermore, detecting reconnaissance activity can be very useful in revealing the intent of an adversary.

With the knowledge of what kind of attack may be most appropriate to use against a company, an attacker would move to prepare attacks tailored to the target in the Weaponization phase. This may mean developing documents with naming schemes similar to those used by the company, which may be used in a social engineering effort at a later point. Alternatively, an attacker may work to create specific malware to affect a device identified during the recon. This phase is particularly challenging for defenders to develop mitigations for, because weaponization activity often occurs on the adversary side, away from defender-controlled network sensors. It’s nonetheless an essential phase for defenders to understand, because it occurs so early in the process. Using artifacts discovered during the Reconnaissance phase, defenders may be able to infer what kind of weaponization may be occurring and prepare defenses for those possibilities. Even after discovery, it may be useful for defenders to reverse engineer the malware to determine how it was made. This can inform detection efforts moving forward.

The point at which the adversary goes fully offensive is often at the Delivery phase. This is the stage when the adversary transmits the attack. This can happen via a phishing e-mail or Short Message Service (SMS), by delivery of a tainted USB device, or by convincing a target to switch to an attacker-controlled infrastructure, in the case of a rogue access point or physical man-in-the-middle attack. For defenders, this can be a pivotal stage to defend. It’s often measurable since the rules developed by defenders in the previous stages can be put into use. The number of blocked intrusion attempts, for example, can be a quick way to determine whether previous hypotheses are likely to be true. It’s important to note that technical measures combined with good employee security awareness training continually prove to be the most effective way to stop attacks at this stage.

In the unfortunate event that the adversary achieves successful transmission to his victim, he must hope to somehow take advantage of a vulnerability on the network to proceed. The Exploitation phase includes the actual execution of the exploit against a flaw in the system. This is the point where the adversary triggers the exploit against a server vulnerability, or when the user clicks a malicious link or executes a tainted attachment, in case of a user-initiated attack. At this point, an attack can take one of two courses of action. The attacker can install a dropper to enable him to execute commands, or he can install a downloader to enable additional software to be installed at a later point. The end goal here is often to get as much access as possible to begin establishing some permanence on the system. Hardening measures are extremely important at this stage. Knowing what assets are present on the network and patching any identified vulnerabilities improves resiliency against such attacks. This, combined with more advanced methods of determining previously unseen exploits, puts defenders in a better position to prevent escalation of the attack.

For the majority of attacks, the adversary aims to achieve persistence, or extended access to the target system for future activities. The attacker has taken a lot of steps to get to this point, and would likely want to avoid going through them every time he wants access to the target. Installation is the point where the threat actor attempts to emplace a backdoor or implant. This is frequently seen during insertion of a web shell on a compromised web server, or a remote access Trojan (RAT) on a compromised machine. Endpoint detection is frequently effective against activities in this stage; however, security analysts may sometimes need to use more advanced log interpretation techniques to identify clever or obfuscated installation techniques.

In the Command and Control (C2) phase, the attacker creates a channel in order to facilitate continued access to internal systems remotely. C2 is often accomplished through periodic beaconing via a previously identified path outside of the network. Correspondingly, defenders can monitor for this kind of communication to detect potential C2 activity. Keep in mind that many legitimate software packages perform similar activity for licensing and update functionality. The most common malicious C2 channels are over the Web, Domain Name System (DNS), and e-mail, sometimes with falsified headers. For encrypted communications, beacons tend to use self-signed certificates or custom encryption to avoid traffic inspection. When the network is monitored correctly, it can reveal all kinds of beaconing activity to defenders hunting for this behavior. When looking for abnormal outbound activities such as this, we must think like our adversary, who will try to blend in with the scene and use techniques to cloak his beaconing. To complicate things more, beaconing can occur at any time or frequency, from a few times a minute to once or twice weekly.
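One simple way to hunt for the periodic beaconing described above is to measure how regular the gaps between outbound connections are. The sketch below uses the coefficient of variation (standard deviation divided by mean) of those gaps. The function names, the minimum of four connections, and the 0.1 threshold are all assumptions chosen for illustration rather than recommended values, and the timestamps are invented.

```python
from statistics import mean, pstdev


def beacon_score(timestamps):
    """Coefficient of variation of the gaps between connections.

    Values near 0 mean very regular intervals. Returns None when
    there are too few connections to judge.
    """
    if len(timestamps) < 4:
        return None
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    average_gap = mean(gaps)
    if average_gap == 0:
        return None
    return pstdev(gaps) / average_gap


def looks_like_beacon(timestamps, max_cv=0.1):
    """Flag connection series whose timing is suspiciously regular."""
    score = beacon_score(timestamps)
    return score is not None and score <= max_cv


# Connection times in seconds for one internal host and one destination.
regular = [0, 300, 601, 899, 1200, 1500]  # roughly every five minutes
bursty = [0, 5, 7, 400, 410, 1900]        # irregular, human-driven traffic

print(looks_like_beacon(regular))
print(looks_like_beacon(bursty))
```

Because legitimate licensing and update clients also check in on a schedule, a hit from a check like this is a lead to investigate, not a verdict. An adversary who adds random jitter to the beacon interval would also raise the score, so a real hunt would likely combine timing with other features such as destination reputation.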

The final stage of the kill chain is the Actions on Objectives, or the whole reason the attacker wanted to be there in the first place. It could be to exfiltrate sensitive intellectual property, encrypt critical files for extortion, or even sabotage via data destruction or modification. Defenders can use several tools at this stage to prevent or at least detect these actions. Data loss prevention software, for example, can be useful in preventing data exfiltration. In any case, it’s critical that defenders have a reliable backup solution that they can restore from in the worst-case scenario. Much like the Reconnaissance stage, detecting activity during this phase can give insight into attack motivations, albeit much later than is desirable.
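Because the seven stages are ordered, they map naturally onto an ordered enumeration. The sketch below shows how an analyst might place an observed alert on the chain and reason about what has probably already happened and what the attacker still needs to do. The alert names and their stage assignments are hypothetical examples of our own, not part of the Lockheed Martin model.

```python
from enum import IntEnum


class KillChain(IntEnum):
    """The seven stages, numbered in the order an attack progresses."""
    RECONNAISSANCE = 1
    WEAPONIZATION = 2
    DELIVERY = 3
    EXPLOITATION = 4
    INSTALLATION = 5
    COMMAND_AND_CONTROL = 6
    ACTIONS_ON_OBJECTIVES = 7


# Hypothetical mapping from alert types to the stage they indicate.
ALERT_STAGE = {
    "port_scan": KillChain.RECONNAISSANCE,
    "phishing_email_blocked": KillChain.DELIVERY,
    "web_shell_written": KillChain.INSTALLATION,
    "periodic_beacon": KillChain.COMMAND_AND_CONTROL,
}


def stages_presumed_complete(alert_type):
    """Earlier stages the attacker has likely finished already."""
    stage = ALERT_STAGE[alert_type]
    return [s for s in KillChain if s < stage]


def stages_still_ahead(alert_type):
    """Later stages where defenders can still break the chain."""
    stage = ALERT_STAGE[alert_type]
    return [s for s in KillChain if s > stage]


print([s.name for s in stages_presumed_complete("web_shell_written")])
print([s.name for s in stages_still_ahead("web_shell_written")])
```

The strict ordering is a property of the model, not of every real attack, so the "presumed complete" list is a starting hypothesis for the investigation rather than a finding.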

While the Cyber Kill Chain enables organizations to build defense-in-depth strategies that target specific parts of the kill chain, it may fail to capture attacks that aren’t as dependent on all of the phases to achieve end goals. One such example is modern phishing attacks in which attackers rely on victims to execute an attached script. Additionally, the kill chain is very malware-focused and doesn’t capture the full scope of other common threat vectors such as insider threats, social engineering, or any intrusion in which malware wasn’t the primary vehicle for access.

Threat Research

Thanks to the increasing acceptance of intelligence concepts across security teams and the use of attack frameworks, threat research is gaining the analytic rigor and completeness required to be a repeatable and scalable practice. Though threat research can be used to enrich alerts raised with existing detection technologies, it can also be used to uncover novel attacker TTPs within an environment that detection rules have not already discovered. As an analyst coming across an artifact, you should work to answer a few initial questions about it: Is this benign? Has anyone seen this before, and if so, what do they have to say about it? Why is this present in my system? There are many different approaches we can use to get answers to these questions, but often we must conduct some sort of initial enrichment to determine the next steps. Conducting threat research is a critical part of the threat intelligence process, and we’ll step through a few effective methods for getting answers to those questions.

Reputational

Security team members need valid malware signatures and reputation data about IPs and domains to help filter the vast amounts of data that flow through the network. They use this information to enable firewalls, gateways, and other security devices to make decisions that prevent attacks while maintaining access for legitimate traffic. There are many free and commercial services that assign reputation scores to URLs, domains, and IP addresses across the Internet. Scores that correlate to the highest risk are associated with malware, spyware, spam, phishing, C2, and data exfiltration servers. Reputation scores can also be assigned to computers and websites that have already been identified as compromised. Google’s Safe Browsing is a useful service that enables users to check the status of a website manually. The service also allows for automatic URL blacklisting for users of Chrome, Safari, and Firefox web browsers, helping users identify whether they are attempting to access web resources that contain malware or phishing content.

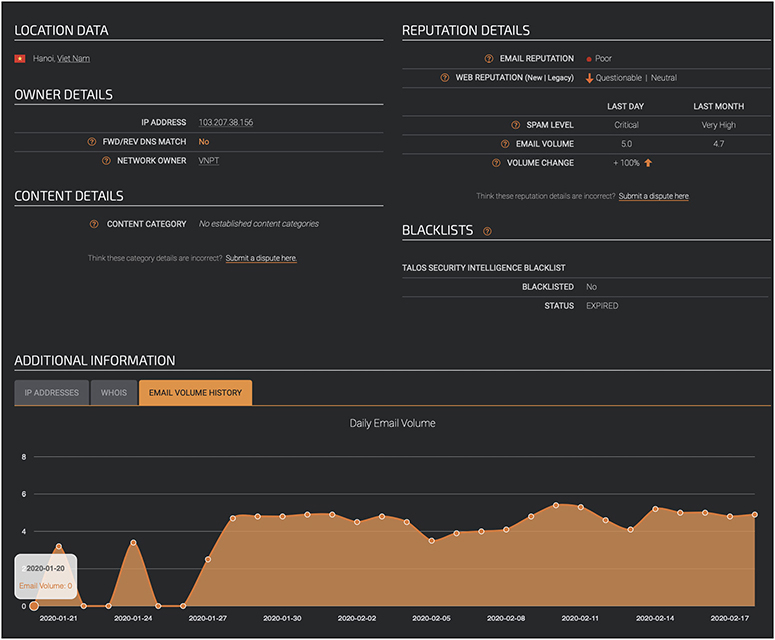

Cisco’s threat intelligence team, Talos, provides excellent reputational lookup features in a single dashboard in its Reputation Center service. Figure 2-5 is a snapshot of the report page generated for a suspicious IP address. Included in the report are details about location, blacklist status, and IP address owner information. For the Reputation Details section, Talos breaks the reputational assessments out by e-mail, malware, and spam, with historical information to give a sense of trends associated with the IP address. Finally, at the bottom of the report is a section for additional information. In this case, the IP address carried “critical” and “very high” spam levels from the previous day and month, respectively. The Additional Information section in this case provides daily e-mail volume information to give some historical context to the rating.

Figure 2-5 Talos Reputation Center report

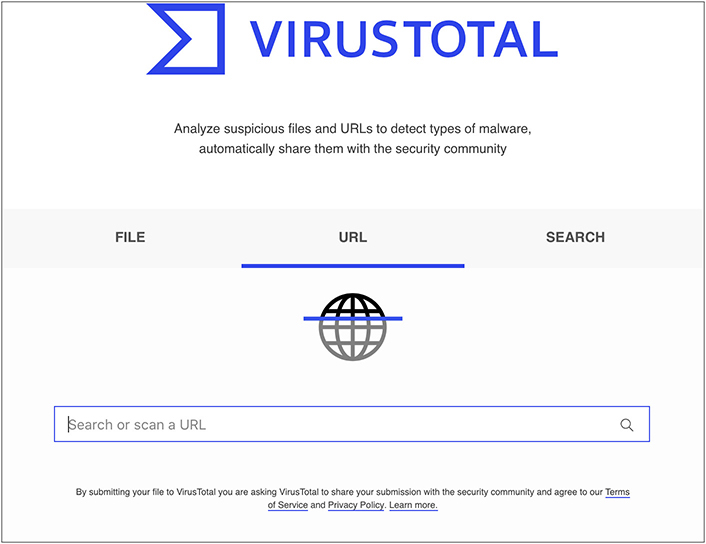

For high-volume reputational information, VirusTotal, a security-focused subsidiary of Google, is one of the most reliable services. VirusTotal aggregates the results of submitted URLs and samples from more than 70 antivirus scanners and URL/domain blacklisting services to return a verdict about the likelihood of content being malicious. In addition to the web-based submission method shown in Figure 2-6, users may submit samples programmatically using any number of scripting options via VirusTotal’s application programming interface (API).
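As a rough illustration of programmatic access, the sketch below looks up the existing report for a file hash rather than uploading a new sample. It assumes version 3 of the VirusTotal API as we understand it: a `GET` to `/api/v3/files/{hash}` authenticated with an `x-apikey` header, and a `last_analysis_stats` object in the response. Check these details against the current API documentation before relying on them. The helper names are our own.

```python
import json
import urllib.request

# Assumed v3 endpoint for retrieving an existing file report by hash.
VT_FILE_URL = "https://www.virustotal.com/api/v3/files/{}"


def build_request(file_hash, api_key):
    """Prepare the lookup request without sending it."""
    return urllib.request.Request(
        VT_FILE_URL.format(file_hash),
        headers={"x-apikey": api_key},
    )


def summarize(report):
    """Return (engines that flagged the file, engine results counted)."""
    # Assumed response layout: data -> attributes -> last_analysis_stats.
    stats = report["data"]["attributes"]["last_analysis_stats"]
    flagged = stats.get("malicious", 0) + stats.get("suspicious", 0)
    return flagged, sum(stats.values())


def lookup(file_hash, api_key):
    """Fetch the report over the network and summarize the verdicts."""
    request = build_request(file_hash, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return summarize(json.load(response))
```

A call such as `lookup(sha256_of_sample, your_api_key)` would return a pair like "flagged by N of M results," which is usually enough for a first triage decision. Keeping `summarize` separate from the network call makes it easy to test against a saved response.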

Figure 2-6 VirusTotal homepage

Behavioral

Sometimes we are unable or unwilling to invest the effort into reverse engineering a binary executable, but we still want to find out what it does. This is where an isolation environment, or sandbox, comes in handy. Unlike endpoint protection sandboxes, this kind of sandbox is usually instrumented to help the security analyst understand what a running executable is doing, so that malware samples can be executed to determine their behaviors.

Cuckoo Sandbox is a popular open source isolation environment for malware analysis. It uses either VirtualBox or VMware Workstation to create a virtual computer on which to run the suspicious binary safely. Unlike other environments, Cuckoo is just as capable in Windows, Linux, macOS, or Android virtual devices. Another tool with which you may want to experiment is REMnux, which is a Linux distribution loaded with malware reverse engineering tools.

Using these tools, we may get insight into how a particular piece of software behaves when executed in the target environment. Be aware that malware writers are increasingly leveraging techniques to detect sandboxes and control various malware activities in the presence of a virtualized environment.

Indicator of Compromise

An indicator of compromise (IOC) is an artifact that indicates the possibility of an attack or compromise. As covered in Chapter 1, IOCs need two primary components: data and context. Of the countless commercial feeds available to security teams, the most appropriate one to use depends on the industry and specific organizational requirements. As for free sources of IOCs, there are a few high-quality ones that we’ll highlight. In addition to the ISACs covered earlier, the Computer Incident Response Center Luxembourg (CIRCL) operates several open source malware information sharing platforms, or MISPs, to facilitate automated sharing of IOCs across private and public sectors. Domestically, the FBI’s InfraGard Portal provides historical and ongoing threat data relevant to 16 sectors of critical infrastructure.

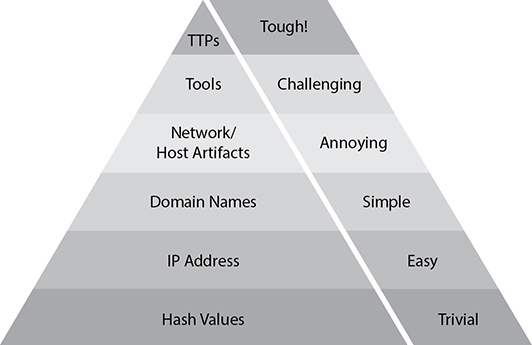

Security architect David Bianco developed a great model to categorize IOCs. His model, the Pyramid of Pain, shown in Figure 2-7, is used to show how much cost we can impose on the adversary when security teams address indicators at different levels. Hashes are easy to alert upon with high confidence; however, they are also easy to change and therefore cause only a trivial amount of pain for the adversary if detected and actioned. Changing IP addresses is more difficult than changing hashes, but most adversaries have disposable infrastructure and can also change the IP addresses of their hop points and command and control nodes once they are compromised. The bottom half of the pyramid contains the indicators that are most likely to be uncovered using highly automated solutions, while the top half includes more behavior-based indicators.

Figure 2-7 Bianco’s Pyramid of Pain

As security professionals, we want to operate right at the top whenever possible, where the TTPs, if identified, require that attackers change nearly every aspect of how they operate. As you can probably guess, it is also more difficult to alert on network and host artifacts and TTPs, but if we can address these higher-level indicators, it will have a lasting impact on the security of our networks.
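The pyramid can be used as a simple ranking when deciding which indicators deserve detection engineering effort first. The sketch below encodes the six levels from Bianco's published model, including the domain name and tool levels that the discussion above does not mention by name. The indicator values are invented placeholders, and the `prioritize` helper is a hypothetical name of our own.

```python
from enum import IntEnum


class Pain(IntEnum):
    """Pyramid of Pain levels, lowest cost to the adversary first."""
    HASH_VALUE = 1             # trivial to change
    IP_ADDRESS = 2             # easy
    DOMAIN_NAME = 3            # simple
    NETWORK_HOST_ARTIFACT = 4  # annoying
    TOOL = 5                   # challenging
    TTP = 6                    # tough


def prioritize(indicators):
    """Sort (description, Pain) pairs, most costly for the adversary first."""
    return sorted(indicators, key=lambda pair: pair[1], reverse=True)


# Placeholder indicators for illustration only.
indicators = [
    ("file hash of a dropper sample", Pain.HASH_VALUE),
    ("203.0.113.10", Pain.IP_ADDRESS),
    ("distinctive User-Agent string", Pain.NETWORK_HOST_ARTIFACT),
    ("spearphishing with macro-enabled attachments", Pain.TTP),
]

for description, level in prioritize(indicators):
    print(f"{level.name:<22} {description}")
```

Ranking this way does not mean ignoring the bottom of the pyramid. Hash and IP blocking is cheap to automate, so it still belongs in the program even though it costs the adversary little.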

Common Vulnerability Scoring System

A well-known standard for quantifying severity is the Common Vulnerability Scoring System (CVSS). As a framework designed to standardize the severity ratings for vulnerabilities, this system provides a consistent quantitative measurement so that users can better understand the impact of these weaknesses. With the CVSS scoring standard, members of industries, academia, and governments can communicate clearly across their communities.
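Part of what makes CVSS useful for communication is that numeric scores map onto a small set of severity labels. The sketch below assumes the qualitative severity rating scale from CVSS version 3.x (None, Low, Medium, High, Critical); earlier versions use different bands, and the function name is our own.

```python
def cvss_severity(score):
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1 to 3.9
    if score < 7.0:
        return "Medium"    # 4.0 to 6.9
    if score < 9.0:
        return "High"      # 7.0 to 8.9
    return "Critical"      # 9.0 to 10.0


for score in (0.0, 3.9, 5.3, 7.5, 9.8):
    print(score, cvss_severity(score))
```

A label like this is a starting point for triage. Combining it with threat intelligence, such as whether a vulnerability is being actively exploited, gives a better picture of actual risk than the base score alone.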

Threat Modeling Methodologies

Threat modeling promotes better security practices by taking a procedural approach to thinking like the adversary. At their core, threat modeling techniques are used to create an abstraction of the system, develop profiles of potential attackers, and bring awareness to potential weaknesses that may be exploited. Exactly how the threat modeling activity is conducted depends on the goals of the model. Some threat models may be used to gain a general understanding about all aspects of security, while others may be focused on related aspects such as user privacy.

To gain the greatest benefit from threat modeling, it should be performed early and continuously as an input directly into the software development lifecycle (SDLC). Not only might this prevent a catastrophic security issue in the future, but it may lead to architectural decisions that help reduce vulnerabilities without sacrificing performance. For some systems, such as industrial control systems and cyber-physical systems, threat modeling may be particularly effective, because the costs of failure are not just monetary. By promoting development with security considerations, rather than security as an afterthought, defenders should have an easier time with incident response because of their increased awareness of the software architecture.

Adversary Capability

Understanding what a potential attacker is capable of can be a daunting task, but it is often a key competence of any good threat intelligence team. The first step in understanding adversary capability is to document the types of threat actors that would likely be threats, what their intent might be, and what capabilities they might bring to bear in the event of a security incident. As previously described, we can develop an understanding of adversary TTPs with the help of various attack frameworks and resources such as MITRE ATT&CK.

Total Attack Surface

The attack surface is the logical and physical space that can be targeted by an attacker. Logical areas include infrastructure and services, while physical areas include server rooms and workstations. Mapping out what parts of a system need to be reviewed and tested for security vulnerabilities is a key part of understanding the total attack surface. As each component is addressed, defenders need to keep track of how the overall attack surface might change as compensating controls are put into place. Analysis of the attack surface is usually conducted by penetration testers, software developers, and system architects, but as a security analyst, you will have significant influence in the architecture decisions as the local expert on security operations in the organization.

Attack Vector

With a potential adversary in mind and critical assets identified, the natural next step is to determine the most likely path for the adversary to get their hands on the goods. This can be done using visual tools, as part of a red-teaming exercise, or even as a tabletop exercise. The goals of mapping out attack vectors consist of identifying realistic or likely paths to critical assets and identifying which security controls are in place to mitigate specific TTPs. If no mitigation exists, security teams can put compensating controls in place while they work out a long-term remediation plan.
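One way to picture attack vector mapping is as a path search over a graph of reachable systems. The sketch below is a toy model under stated assumptions: the `NETWORK` topology, the `CONTROLS` table, and both helper functions are hypothetical and do not come from any particular tool.

```python
def attack_paths(graph, start, target, path=None):
    """Yield every cycle-free path from start to target (depth-first search)."""
    path = (path or []) + [start]
    if start == target:
        yield path
        return
    for nxt in graph.get(start, []):
        if nxt not in path:  # never revisit a node already on this path
            yield from attack_paths(graph, nxt, target, path)


def unmitigated_hops(path, controls):
    """Return the hops along a path that have no security control recorded."""
    return [hop for hop in zip(path, path[1:]) if hop not in controls]


# Hypothetical environment: which system can reach which.
NETWORK = {
    "internet": ["web_server", "vpn_gateway"],
    "web_server": ["app_server"],
    "vpn_gateway": ["workstation"],
    "workstation": ["app_server", "file_share"],
    "app_server": ["database"],
}

# Hypothetical controls already in place on specific hops.
CONTROLS = {
    ("internet", "web_server"): "web application firewall",
    ("internet", "vpn_gateway"): "multifactor authentication",
    ("app_server", "database"): "network segmentation",
}

paths = list(attack_paths(NETWORK, "internet", "database"))
gaps = {tuple(p): unmitigated_hops(p, CONTROLS) for p in paths}
```

Each entry in `gaps` lists the hops on a path to the critical asset that lack a recorded control, which is where a compensating control would be considered first.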

Impact

Impact is simply the potential damage to an organization in the case of a security incident. Impact types can include but aren’t limited to physical, logical, monetary, and reputational. Impact is used across the security field to communicate risk to an organization. We’ll cover various aspects of impact later in the book when discussing risk analysis.

Likelihood

The possibility of a threat actor successfully exploiting a vulnerability that results in a security incident is referred to as likelihood. The National Institute of Standards and Technology, or NIST, provides a formal definition of likelihood as it relates to security operations as “a weighted factor based on a subjective analysis of the probability that a given threat is capable of exploiting a given vulnerability or a set of vulnerabilities.”
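Impact and likelihood are often combined into a single qualitative rating. The sketch below shows one common convention, a three-by-three matrix; the level names, numeric weights, and thresholds are assumptions chosen for illustration and are not prescribed by NIST or by this chapter.

```python
# Ordinal weights for each qualitative level (illustrative values).
LEVELS = {"low": 1, "medium": 2, "high": 3}


def risk_rating(likelihood: str, impact: str) -> str:
    """Combine qualitative likelihood and impact into a qualitative risk rating."""
    score = LEVELS[likelihood] * LEVELS[impact]  # possible values: 1, 2, 3, 4, 6, 9
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"
```

Under these assumed thresholds, a low-likelihood but high-impact event still rates as medium, which reflects why rare but damaging scenarios stay on the agenda.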

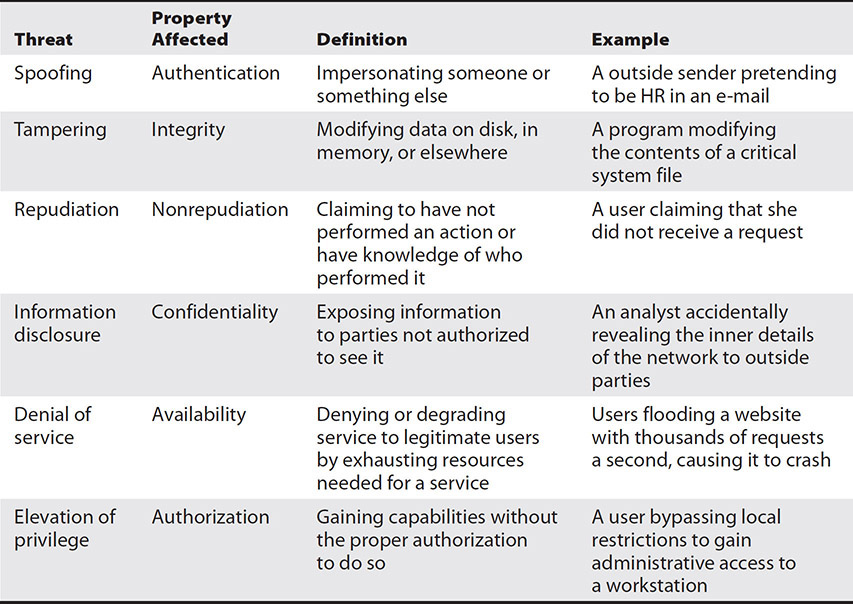

STRIDE

STRIDE is a threat modeling framework that evaluates a system’s design using flow diagrams, system entities, and events related to a system. The framework name is a mnemonic, referring to the security threats in six categories shown in Table 2-2. Invented in 1999 and developed over the last 20 years by Microsoft, STRIDE is among the most used threat modeling methods, suitable for application to logical and physical systems alike.

Table 2-2 STRIDE Threat Categories
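The six categories behind the mnemonic can also be expressed as a small lookup that pairs each threat with the security property it violates. The pairing below follows the commonly published STRIDE mapping; the `threats_against` helper and its input format are assumptions made for this sketch.

```python
# Letter -> (threat category, security property the threat violates)
STRIDE = {
    "S": ("Spoofing", "authentication"),
    "T": ("Tampering", "integrity"),
    "R": ("Repudiation", "non-repudiation"),
    "I": ("Information disclosure", "confidentiality"),
    "D": ("Denial of service", "availability"),
    "E": ("Elevation of privilege", "authorization"),
}


def threats_against(required_properties):
    """List the STRIDE categories that threaten the given security properties."""
    wanted = {prop.lower() for prop in required_properties}
    return [threat for threat, prop in STRIDE.values() if prop in wanted]


# Example: a component that must protect data secrecy and stay online.
relevant = threats_against(["Confidentiality", "Availability"])
```

Walking each element of a data flow diagram through a lookup like this is one way to make sure no category is skipped during a review.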

PASTA

PASTA, or the Process for Attack Simulation and Threat Analysis, is a risk-centric threat modeling framework developed in 2012. Focused on communicating risk to strategic-level decision-makers, the framework is designed to bring technical requirements in line with business objectives. Using the seven stages shown in Table 2-3, PASTA encourages analysts to solicit input from operations, governance, architecture, and development.

Table 2-3 PASTA Stages

Threat Intelligence Sharing with Supported Functions

Organizations dedicate significant resources to attracting, training, and retaining security professionals. Integrating threat intelligence concepts enables responders to act more quickly in the face of uncertainty and frees them up to deal with new and unexpected threats when they arise.

Incident Response

Incident responders have some of the most sought-after skills required by any organization because of their ability to rapidly and accurately address potentially wide-ranging issues on a consistent basis. Although many incident responders thrive in stressful environments, the job can be challenging for even the most seasoned security professionals. Looking at the upward trend of security event volume and complexity, there will likely be a constant demand for responders well into the future. Incident response is not usually an entry-level security function because it requires such a diverse skill set, from malware analysis, to forensics, to network traffic analysis. Furthermore, at the core of a responder’s modus operandi is speed—speed to confirm a potential incident, speed to remediate, and speed to address the root cause. When we take a look at what’s required across the skill spectrum from an incident responder, and combine that with the need for speed, it becomes clear why reducing response time and moving toward proactive measures are so important.

As security teams attempt to move away from a reactive nature, they must do whatever they can to prepare themselves for the possibility of a security event. Many teams are using playbooks, or predefined sets of automated actions, more and more in their response efforts. Although it may take some time to understand the company’s IT environment, the entities involved, and the external and internal threats posed against the company, preparation pays off in several ways. Threat intelligence information is a critical part of the preparation phase because it enables teams to more accurately develop strong, consistent processes to cope with issues should they arise. These not only dramatically reduce the time needed to respond, but as repeatable and scalable processes, they reduce the likelihood of an analyst error.
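A playbook in this sense can be pictured as an ordered list of steps selected by incident type. The following is a minimal sketch, not a real orchestration product: every step name, the `PLAYBOOKS` table, and the incident fields are hypothetical, and each step only records the action it would take rather than calling a firewall, endpoint, or ticketing API.

```python
def isolate_host(incident):
    # A real step would call an endpoint tool; here we only record the intent.
    incident["actions"].append(f"isolate host {incident['host']}")


def block_indicators(incident):
    for indicator in incident.get("indicators", []):
        incident["actions"].append(f"block {indicator}")


def open_ticket(incident):
    incident["actions"].append(f"open ticket for {incident['type']} incident")


# Incident type -> ordered steps, defined ahead of time during preparation.
PLAYBOOKS = {
    "malware": [isolate_host, block_indicators, open_ticket],
    "phishing": [block_indicators, open_ticket],
}


def run_playbook(incident):
    """Run every step of the playbook matching the incident type, in order."""
    incident.setdefault("actions", [])
    for step in PLAYBOOKS[incident["type"]]:
        step(incident)
    return incident["actions"]


actions = run_playbook(
    {"type": "malware", "host": "ws-042", "indicators": ["bad.example"]}
)
```

Because the steps and their order are fixed in advance, two analysts handling the same type of incident produce the same sequence of actions, which is the repeatability the paragraph above describes.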

Vulnerability Management

Vulnerability management teams are all about making risk-based decisions. Thinking back to one of the axioms of the Diamond Model of Intrusion Analysis, we’re reminded that “every system, and by extension every victim asset, has vulnerabilities and exposures.” Vulnerabilities seem to be a fact of life, but that doesn’t mean that a team can forsake its responsibility to identify vulnerable assets and deploy patches.

Throughout the book so far, we’ve referred to useful sources for threat data, many of them providing vulnerability information. Another database, NIST’s National Vulnerability Database (NVD), makes it easy for organizations to determine whether they are likely to be affected by disclosed vulnerabilities. But the NVD and other databases miss a key feature that threat intelligence adds. Threat intelligence takes vulnerability management concepts a step further and provides awareness about vulnerabilities in an operational context—that is, answering the question, “Are these vulnerabilities actively being exploited?” Table 2-4 provides a short list of some of the authors’ favorite types of free sources for intelligence related to vulnerabilities.

Table 2-4 Sources of Intelligence Related to Vulnerabilities

Threat intelligence communicates exploitation relevant to the organization instead of general exploitability. This is important, because thinking back to the scale of vulnerabilities across the enterprise, a vulnerability management team’s core function is really prioritization. By identifying what is being exploited versus what can be exploited, these teams can make better decisions about where to place resources.
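The difference between what can be exploited and what is being exploited can be sketched as a sort key. In this illustration the `Finding` fields, the placeholder identifiers, and the scores are all invented; in practice the exploitation flag would come from a threat intelligence source.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    vuln_id: str              # placeholder identifier, not a real CVE
    cvss: float               # general severity (what *can* be exploited)
    actively_exploited: bool  # threat intelligence (what *is* being exploited)


def prioritize(findings):
    """Order findings so actively exploited ones come first, then by CVSS score."""
    return sorted(findings, key=lambda f: (f.actively_exploited, f.cvss), reverse=True)


findings = [
    Finding("VULN-A", 9.8, False),
    Finding("VULN-B", 7.5, True),
    Finding("VULN-C", 5.3, True),
]
queue = [f.vuln_id for f in prioritize(findings)]
```

Here the highest-scoring finding drops to the end of the queue because nothing indicates it is being used in attacks, while two lower-scoring but actively exploited findings move ahead of it.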

Risk Management

As with vulnerability management teams, risk management teams speak the language of risk in terms of impact and probability. If we understand risk to mean the impact to an asset by a threat actor exploiting a vulnerability, we see that the presence of a threat actor is necessary in communicating risk accurately. Drilling down further, three components need to be present for a threat to be accurately described: capability, intent, and opportunity. Providing answers to these three components as they appear at present is exactly what threat intelligence is designed to do. Furthermore, good threat intelligence will also be able to predict what the threat will likely be in the future, or whether more threats are likely to emerge. Figure 2-8 highlights the various components necessary to describe a threat and how it all fits in with defining risk.

Figure 2-8 Relationship of vulnerability, impact, threat, and risk

Predicting the future is what all risk team members want to do, and though that’s not really possible, threat intelligence does provide answers to questions that risk managers and security leaders ask. Primary among these are identifying what types of attacks are becoming more or less prevalent and what assets attackers are likely to target in the future. In terms of quantifying the cost to an organization, risk teams may also want to know which of these attacks are likely to be most costly to the business. The logistics sector, for example, has a completely different cost of downtime than does the automotive industry.

Security Engineering

Security engineers of all flavors, whether on a product security or corporate security team, regularly benefit from threat intelligence data. Threat intelligence gathered from security research or criminal communities can offer insight into the effectiveness of security measures across a company. While the motivations of these two communities are very different, they both can provide a unique outlook on your organization’s security posture. This feedback can then be analyzed and operationalized by your organization’s security engineers.

Detection and Monitoring

As discussed throughout Chapter 1, threat intelligence as applied to security operations is all about enriching internal alerts with the external information and context necessary to make decisions. For analysts working in a security operations center (SOC) to interpret incoming detection alerts, context is critical in enabling them to triage quickly and move on to scoping potential incidents. Because a huge part of a detection analyst’s time is spent interpreting the output of dashboards, identifying relevant inputs early on reduces the cognitive load for the analyst down the line.

Let’s explore a typical workflow for a detection analyst, where threat intelligence can significantly speed up the decision-making cycle. When an analyst receives an alert, she is getting only a headline of the activity and a few artifacts to support the alert condition. Attempting to triage this initial alert without access to enough context will not give the analyst a sense of what the true story is. Even if a repository exists and is made available to the analyst with all information, it would be impractical to perform the manual steps necessary to assimilate and correlate with other data related to the alert. Automated threat intelligence tailored to the needs of the detection team improves the analyst workflow by providing timely details. A great example is enrichment around suspicious domains. A detection team can easily leverage automation techniques to query threat intelligence data to extract reputation information, passive DNS details, and malware associations linked to that domain. Joining this information with what’s already in the alert content provides so much more awareness for the analyst to make a call.
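The domain enrichment described above can be sketched as a lookup that joins threat intelligence onto the alert. Everything here is a stand-in: the in-memory `THREAT_INTEL` store, its field names, and the sample records are assumptions, where a production workflow would query a threat intelligence platform or reputation service instead.

```python
# Hypothetical local intelligence store keyed by domain name.
THREAT_INTEL = {
    "bad.example": {
        "reputation": "malicious",
        "passive_dns": ["203.0.113.10"],  # documentation-range address
        "malware": ["ExampleLoader"],      # made-up family name
    },
}

# Context attached when nothing is known about the domain.
UNKNOWN = {"reputation": "unknown", "passive_dns": [], "malware": []}


def enrich_alert(alert, intel=THREAT_INTEL):
    """Return a copy of the alert with reputation, passive DNS, and malware context."""
    domain = alert["domain"].lower().rstrip(".")  # normalize case and trailing dot
    return {**alert, "intel": intel.get(domain, UNKNOWN)}


enriched = enrich_alert({"id": 1001, "domain": "BAD.example."})
```

The analyst now sees the reputation verdict and related malware alongside the original alert fields, without leaving the alert to search a separate repository.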

Chapter Review

Threat intelligence enables organizations to anticipate, respond to, and remediate threats. In some cases, organizations can use threat intelligence to speed up their decision-making cycle, increasing the cost to attackers conducting malicious activity through continual improvements in their security posture and response efforts. Useful threat intelligence can be generated only after establishing a clear understanding of the organization’s goals, the role of the security operations team within the organization, and the role of threat intelligence in supporting those security teams. Although the threat intelligence team may often reside within or adjacent to a security operations center, it serves customers throughout the organization. Risk managers, vulnerability managers, incident responders, financial analysts, and C-suite members can all benefit from threat intelligence products. Key to providing the best products possible is to have a baseline understanding of adversaries that may target the organization, what their capabilities are, and what motivates them. Understanding these aspects focuses the threat intelligence effort and ensures not only that the value delivered by the security team is in line with organizational goals, but that it is also relevant and actionable.

Questions

1. Which of the following is a commonly utilized four-component framework used to communicate threat actor behavior?

A. Kill chain

B. STIX 2.0

C. The Diamond Model

D. MITRE ATT&CK

2. Developed by Microsoft, which threat modeling technique is suitable for application to logical and physical systems alike?

A. PASTA

B. STRIDE

C. The Diamond Model

D. MITRE ATT&CK

3. Defining what a threat actor might be able to achieve in the event of an attack is also known as determining the actor’s _______________?

A. Means

B. Skillset

C. Intent

D. Capability

4. Details about domains that may include scoring information, blacklist status, and association with malware are also known as what kind of data?

A. Behavioral

B. Threat

C. Reputational

D. Malware

5. Accurately describing a threat includes all but which of the following components?

A. Intent

B. Capability

C. Opportunity

D. Operations

6. What is the term for a predefined set of automated actions that incident responders and security operations center analysts use to enhance their operations?

A. CVSS

B. Playbook

C. Repudiation

D. Detection set

7. Consisting of the two components of data and context, what term describes an artifact that indicates the possibility of an attack?

A. Indicator of compromise

B. Security indicators and event monitor

C. Security information and event management

D. Simulations, indications, and environmental monitors

8. Which of the following is an open framework for communicating the characteristics and severity of software vulnerabilities?

A. STRIDE

B. NVD

C. CVSS

D. CVE

Answers

1. C. The Diamond Model of Intrusion Analysis describes how an adversary uses a capability in an infrastructure against a victim.

2. B. STRIDE is a threat modeling framework that evaluates a system’s design using flow diagrams, system entities, and events related to a system.

3. D. Defining a threat actor’s capability, the ability to use skills and tools to perform an attack, helps indicate what the actor might be able to achieve in the event of an attack.

4. C. Reputational services often assign scores to URLs, domains, and IP addresses across the Internet that are generated based on the entity’s links with malware, spyware, spam, phishing, C2, and data exfiltration servers.

5. D. Operations is not one of the three components that need to be present for a threat to be accurately described. The components are capability, intent, and opportunity.

6. B. Security playbooks are customized, scalable security workflows that use integration with software and hardware platforms, usually via APIs, to automate parts of security operations.

7. A. An indicator of compromise (IOC) is an artifact consisting of context applied to observable data that indicates the possibility of an attack.

8. C. The Common Vulnerability Scoring System (CVSS) is the de facto standard for assessing the severity of vulnerabilities.