CHAPTER 6

Threats and Vulnerabilities Associated with Operating in the Cloud

In this chapter you will learn:

• How to identify vulnerabilities associated with common cloud service models

• Current and emerging cloud deployment models

• Challenges of operating securely in the cloud

• Best practices for securing cloud assets

Bronze Age. Iron Age. We define entire epochs of humanity by the technology they use.

—Reed Hastings

Cloud solutions offer many benefits to an organization looking to develop new applications and services rapidly or to save costs in the setup and maintenance of an IT infrastructure. To make the best cloud solution decisions, however, an organization must be fully informed of the vulnerabilities that exist and the myriad financial, technical, and compliance risks that they may assume by using this technology. Cloud environments, for the most part, are subject to the same types of vulnerabilities faced by any other computing platform. Clouds run software and hardware and use networking devices in much the same way that traditional data centers do. Although the targeting and specific exploit techniques used in the cloud may vary, we can approach defending cloud environments as we would in any other environment. Key requirements in forming a defense plan include understanding what your organization’s exposure is as a result of using cloud technologies, what types of threat actors exist, what capabilities they may leverage against your organization, and what steps you can take to shore up defenses.

Cloud Service Models

Cloud computing enables organizations to access on-demand network, storage, and compute power, usually from a shared pool of resources. Cloud services are characterized by their ease of provisioning, setup, and teardown. There are three primary service models for cloud resources, listed next. All of these services rely on the shared responsibility model of security, which identifies the security requirements of both the service provider and the customer.

• Software as a Service (SaaS) A service offering in which the tasks for providing and managing software and storage are undertaken completely by the vendor. Google Apps, Office 365, iCloud, and Box are all examples of SaaS.

• Platform as a Service (PaaS) A model that offers a platform on which software can be developed and deployed over the Internet.

• Infrastructure as a Service (IaaS) A service model that enables organizations to have direct access to their cloud-based servers and storage as they would with traditional hardware.

Shared Responsibility Model

The shared responsibility model covers how organizations should approach designing their security strategy as it relates to cloud computing, as well as the day-to-day responsibilities involved in executing that strategy. Although your organization may delegate some technical responsibility to your cloud provider, your company is always responsible for the security of your deployments and the associated data, especially if the data relates to customers. You should take a “trust but verify” approach. To complement your cloud provider’s policies and security operations, your organization must have the right tools and procedures in place to manage risks effectively and keep company and user data safe. In each of the following service models, the parts of the technology stack for which the vendor is primarily responsible, and those over which your organization has full control and oversight, differ. All aspects are important, however, and each model offers benefits your company may choose to adopt.

Software as a Service

SaaS is among the most commonly used software delivery models on the market. In the SaaS model, organizations access applications and functionality directly from a service provider with minimal requirements to develop custom code in-house; the vendor takes care of maintaining servers, databases, and application code. To save money on licensing and hardware costs, many companies subscribe to SaaS versions of critical business applications such as graphics design suites, office productivity programs, customer relationship managers, and conferencing solutions. As Figure 6-1 shows, those applications reside at the highest level of the technology stack. The vendor provides the service and all of the supporting technologies beneath it.

Figure 6-1 Technology stack highlighting areas of responsibility in a SaaS service model

SaaS is a cloud subscription service in which the vendor delivers every layer of the technology stack, from hardware provisioning all the way up to the user experience. A full understanding of how these services protect your company’s data at every level will be a primary concern for your security team. Given the popularity of SaaS solutions, providers such as Microsoft, Amazon, Cisco, and Google often dedicate large teams to securing all aspects of their service infrastructure. Increasingly, security problems arise at the data-handling level, where these infrastructure companies have neither the responsibility nor the visibility required to take action. This leaves the burden primarily on the customer to design and enforce protective controls. The most common SaaS vulnerabilities fall into one or more of three areas: visibility, management, and data flow.

As organizations use more and more distributed services, simply gaining visibility into what’s being used at any time becomes a massive challenge. The “McAfee 2019 Cloud Adoption and Risk Report” describes the disconnect between the number of cloud services IT teams believe their users are accessing and the number they are actually accessing. According to the report, the discrepancy is several orders of magnitude, with the average company using nearly 2000 unique services. Because IT teams expect service usage to number in the dozens or hundreds, they are often not prepared to fully map and audit it. This is where you, as a security analyst, can help bring to light the reality of your company’s usage, what it means for the attack surface, and some thoughtful courses of action.

A second major challenge to SaaS security is the complexity of identity and access management (IAM). IAM in practice is a perfect example of the friction between security and usability. IAM services enable data owners or security teams to set access rights across services and provide centralized auditing reports on user privileges, provisioning, and access activity. While robust IAM systems are great for an organization because they offer granular control over every aspect of data access, they are often extremely difficult to understand and manage. If the security team finds the system too difficult to manage, the risk of a misconfiguration or a failure to implement a critical control increases.

The final major SaaS challenge concerns data, the thing your organization hoped that the SaaS solution would enable you to focus on by removing the need to manage infrastructure. SaaS solutions often offer the ability for you to share data widely, both within an organization and with trusted partners. Although collaboration controls can be set on an organizational, directory, or file level, once the data has left the system, it’s impossible to bring it back. Employees, inadvertently or intentionally, will share documents through messaging apps, e-mail, and other mechanisms. Even with clear indication of a sensitive or confidential document, if there are no technical controls to keep it from leaving the network, it will leave the network.

Addressing these vulnerabilities requires a layered approach that includes policy and technical controls. Compliance and auditing policies, coupled with the features provided by SaaS offerings, will give the security team reporting capabilities that may help them determine whether they are in line with larger business policies or with government and industry regulations. From a technical point of view, data loss prevention (DLP) solutions will help protect intellectual property in company communications when used with SaaS products. A number of DLP products enable companies to easily define the boundaries at which DLP may be enforced. DLP is most effective when used in conjunction with a strong encryption method. Data encryption protects data both at rest in the SaaS application and in transit across the cloud from user to user. Some government regulations require encryption for financial information, healthcare data, and personally identifiable information. But even for data that is not required to be encrypted, it’s a good idea to use encryption whenever feasible.
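To make the DLP idea concrete, the following Python sketch shows the kind of content inspection a DLP rule performs: it looks for payment card numbers in outbound text and confirms each candidate with the Luhn checksum to cut false positives. The function names and the block-on-any-match policy are hypothetical; commercial DLP products ship their own detectors and policy engines.

```python
import re

# Candidate card numbers: 13-19 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")

def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    # Walk from the rightmost digit, doubling every second digit.
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0

def find_card_numbers(text: str) -> list[str]:
    """Return Luhn-valid, card-like numbers found in text (digits only)."""
    hits = []
    for match in CARD_PATTERN.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits

def should_block(text: str) -> bool:
    """Hypothetical policy: block outbound sharing if any card number is present."""
    return bool(find_card_numbers(text))
```

A real deployment would combine many such detectors (and document labels) and decide per channel whether to block, quarantine, or merely alert.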

Platform as a Service

PaaS shares many of the functions of SaaS and provides many of the same benefits, in that the service provider manages the foundational technologies of the stack in a manner transparent to the end user. PaaS differs from SaaS primarily in that it offers direct access to a development environment, enabling organizations to build their own solutions on a cloud-based infrastructure rather than providing their own infrastructures, as shown in Figure 6-2. PaaS solutions are therefore optimized to provide value focused on software development. By its very nature, PaaS is designed to provide organizations with tools that interact directly with what may be the most important company asset: its source code.

Figure 6-2 Technology stack highlighting areas of responsibility in a PaaS service model

PaaS offerings typically provide effective role-based access controls to restrict access to your source code, which is a primary security feature of this model. Auditing and account management tools available in PaaS may also help identify inappropriate exposure. Accordingly, protecting administrative access to the PaaS infrastructure helps organizations avoid loss of control and potentially massive impacts on their development process and bottom line.

At the physical infrastructure level, PaaS service providers assume responsibility for maintenance and protection and employ a number of methods to deter successful exploits. This often means requiring trusted sources for hardware, using strong physical security for their data centers, and monitoring access to the physical servers and the connections to and from them. Additionally, PaaS providers often highlight their protection against distributed denial-of-service (DDoS) attacks using network-based technologies that require no additional configuration from the user.

At the higher levels, your organization will have to understand any risks that you explicitly inherit and take steps to address them. You can use any of the threat modeling techniques we discussed earlier to generate solutions. Table 6-1 shows, for each category of a STRIDE (spoofing, tampering, repudiation, information disclosure, denial of service, elevation of privilege) assessment, whether mitigation may be offered by the PaaS provider or can be implemented by your security team.

Table 6-1 STRIDE Model as Applied to PaaS

Infrastructure as a Service

Moving down the technology stack from the application layer to the hardware layer, we next have the cloud service offering of IaaS. By efficiently allocating hardware through a process of constant assignment and reclamation, IaaS offers an effective and affordable way for companies to get all of the benefits of managing their own hardware without incurring the massive overhead costs associated with acquisition, physical storage, and disposal of the hardware. In this service model, shown in Figure 6-3, the vendor provides the hardware, network, and storage resources necessary for the user to install and maintain any operating system, dependencies, and applications they want.

Figure 6-3 Technology stack highlighting areas of responsibility in an IaaS service model

The previously discussed vulnerabilities associated with PaaS and SaaS may exist for IaaS solutions as well, but there are additional opportunities for flaws at this level, because we’re now dealing with hardware resources shared across customers. Any vulnerability that takes advantage of flaws in hard disks, RAM, CPU caches, or GPUs can affect IaaS platforms. One attack scenario affecting IaaS cloud providers could enable a malicious actor to implant persistent backdoors for data theft into bare-metal cloud servers. Either a vulnerability in the hypervisor supporting the virtualization of various tenant systems or a flaw in the firmware of the hardware in use could introduce a vector for this attack. Such an attack would be difficult for the customer to detect, because all services could appear unaffected at higher levels of the technology stack.

In 2019, researchers at Eclypsium, an enterprise firmware security company, described such a scenario using a flaw they uncovered and named Cloudborne. The vulnerability existed in the device’s baseboard management controller (BMC), the component responsible for managing the interface between system software and platform hardware. Usually embedded on the motherboard of a server, BMCs grant total management power over the hardware. One of the most noteworthy parts of Eclypsium’s research was its demonstration of long-term persistence even after the infrastructure had been reclaimed. The research team got initial access to a bare-metal server, verified the BMC, initiated the exploit, and made a few innocuous changes to the firmware. Later, after giving up access to that original server, they attempted to locate and reacquire the same server from the provider. Although surface-level changes had been made to the server in the meantime, the team found that the BMC firmware remained modified, indicating that any malicious code implanted into the firmware would have survived the reclamation process unchanged.

Although the likelihood of a successful exploit of this kind of vulnerability is quite low, vulnerabilities detected at this level can impose significant costs even when no exploit occurs. Take, for example, the 2014 hypervisor update performed by Amazon Web Services (AWS), which forced restarts of a large number of instances in a major cloud offering, the Elastic Compute Cloud (EC2). In response to the discovery of a critical security flaw in the open source Xen hypervisor, Amazon rebooted affected EC2 instances around the world to ensure the patch took effect and that customers remained protected. In most cases, though, as with many other cloud services, attacks against IaaS environments are possible because of misconfiguration on the customer side.

Cloud Deployment Models

Although the public cloud is often built on robust security principles to support the integrity of customer data, the possibility of spillage may exceed the risk appetite of an organization, particularly one that handles large amounts of sensitive data. Additionally, concerns about data remanence, the possibility that data might remain after deletion, can push an organization to adopt a cloud deployment model that is not open to all. Before implementing or adopting a cloud solution, your organization should understand what it’s trying to protect and which model works best to achieve that goal. We’ll discuss four models, along with their security benefits and disadvantages.

Public

In public cloud solutions, the cloud vendor owns the servers, infrastructure, network, and hypervisor used to provide service to your company. As a tenant of the cloud solution provider, your organization borrows a portion of that shared infrastructure to perform whatever operations are required. Services offered by Google, Amazon, and Microsoft are among the most popular cloud-based services worldwide. So, for example, although the data you provide to Gmail may be yours, the hardware used to store and process it is all owned by Google.

Private

In private cloud solutions, organizations accessing the cloud service own all the hardware and underlying functions to provide services. For a number of regulatory or privacy reasons, many healthcare, government, and financial firms opt to use a private cloud in lieu of shared hardware. Using private cloud storage, for example, enables organizations that operate with sensitive data to ensure that their data is stored, processed, and transmitted by trusted parties, and that these operations meet any criteria defined by regulatory guidelines. In this model, the organization is wholly responsible for the operation and upkeep of the physical network, infrastructure, hypervisors, virtual network, operating systems, security devices, configurations, identity and access management, and, of course, data.

Community

In a community cloud, the infrastructure is shared across organizations with a common interest in how the data is stored and processed. These organizations usually belong to the same larger conglomerate or all operate under similar regulatory environments. The focus for this model may be consistency of operation across the various organizations as well as lower operating costs for all concerned parties. While the community cloud model helps address common challenges across partnering organizations, including issues related to information security, it adds complexity to the overall operations process. Many vulnerabilities, therefore, are likely to be related to process and policy rather than being strictly technical. Security teams across these organizations should have, at minimum, standard operating procedures that outline how to communicate security events, indicators of compromise, and remediation efforts to the other partners participating in the community cloud infrastructure.

Hybrid

Hybrid clouds combine on-premises infrastructure with a public cloud, with significant effort placed on managing how data and applications use each environment to achieve organizational goals. Organizations that use a hybrid model can often see the benefits offered by both public and private models while remaining in compliance with any external or internal requirements for data protection. Organizations also often use a public cloud as a failover for their private cloud, allowing the public cloud to absorb increased demand for resources when the private cloud’s capacity is insufficient.

Serverless Architecture

Hosting a service usually means setting up hardware, provisioning and managing servers, defining load management mechanisms, setting up requirements, and running the service. In a serverless architecture, the services offered to end users, such as compute, storage, or messaging, along with their required configuration and management, can be performed without a requirement from the user to set up any server infrastructure. The focus is strictly on functionality. These models are designed primarily for massive scaling and high availability. Additionally, from a cost perspective, they are attractive, because billing occurs based on what cycles are actually used versus what is provisioned in advance. Integrating security mechanisms into serverless models is not as simple as ensuring that the underlying technologies are hardened. Because there is limited visibility into host infrastructure operations, implementing countermeasures for remote code execution or modifying access control lists isn’t as straightforward as it would be with traditional server design. In this model, security analysts are usually restricted to applying controls at the application or function level.

Function as a Service

Function as a Service (FaaS) is a type of cloud computing service that abstracts the requirements for running code even further than PaaS solutions do. FaaS lets developers focus on the action to be performed and scale it in a manner that works best for them. In the FaaS model, logic is executed only when it’s required. Accordingly, cloud service providers invoice based on the time the function runs and the resources required to complete the task. The basic requirement for FaaS is that the code be presented in a manner compatible with the service provider’s platform. FaaS promotes code that is simple, narrowly scoped, and efficiently written. Because it is oriented around functions, code is written to execute a specific task in response to a specific trigger.

AWS Lambda is currently the most popular example of this event-driven, serverless computing framework. As shown in Figure 6-4, the only requirement for initial Lambda function setup is to define the runtime. Amazon supports several programming languages by default, and you can also implement your own runtime in any programming language, provided the code can be compiled and executed in the Amazon Linux environment.

Figure 6-4 AWS Lambda runtime requirements setup screen showing supported runtimes
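For reference, a Python Lambda function is simply a handler that the runtime invokes with two arguments: the triggering event and a context object. The sketch below assumes an API Gateway proxy-style event with a JSON `body` field and returns a proxy-style response; the `lambda_handler` name matches the console default but is configurable, and `_response` is a hypothetical helper. Because serverless customers cannot harden the host, the function validates its own input, which is the function-level control described earlier.

```python
import json

def _response(status: int, payload: dict) -> dict:
    """Build an API Gateway proxy-style response (assumed integration type)."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }

def lambda_handler(event, context):
    """Entry point Lambda calls once per triggering event.

    `event` holds the trigger payload (assumed to be an API Gateway proxy
    event); `context` holds runtime metadata and is unused here.
    """
    # Function-level input validation: the layer the customer controls.
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be JSON"})
    if not isinstance(body, dict):
        return _response(400, {"error": "body must be a JSON object"})
    name = body.get("name", "world")
    if not isinstance(name, str) or not 0 < len(name) <= 64:
        return _response(400, {"error": "name must be 1-64 characters"})
    return _response(200, {"message": f"Hello, {name}"})
```

Because the handler is an ordinary function, it can be exercised locally, for example with `lambda_handler({"body": '{"name": "analyst"}'}, None)`, before it is deployed.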

Infrastructure as Code

The pace at which developers can create code and iterate through various virtual environments for configuration and testing purposes has accelerated, in large part because of the ability to provision hardware and system configurations automatically with a great deal of granularity. The repeatable nature of the provision process through human- and machine-readable code is the core of what is offered by Infrastructure as Code (IaC).

IaC aims to reduce the impact of environment drift on a development cycle. As small changes are made to environments, they transform over time into a state that cannot be easily replicated. By deploying an IaC solution, developers can rely on their predefined environments being the same every time, regardless of external or internal factors. Modern continuous integration and continuous delivery (CI/CD), the standard practice governing how software is shipped today, relies heavily on automation throughout the software development lifecycle (SDLC). Using IaC, developers can orchestrate the creation of these standard platforms and environments to deliver software products more quickly and with fewer problems.

IaC tools generally take one of two approaches: imperative or declarative. In terms of software engineering, these are two ways that a programmer can get a computer to perform functions to achieve an end state. Imperative approaches involve the programmer specifying exactly how a computer is to reach the goal with explicit step-by-step instructions. Declarative approaches, on the other hand, involve describing the desired end state and relying on the computer to perform whatever is necessary to get there. Regardless of which method is used, IaC tools will invoke the intermediate functions and create the abstraction layer necessary to interface with the infrastructure to achieve the end state.
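The difference between the two approaches can be sketched in a few lines of Python. `FakeCloud` is a hypothetical in-memory stand-in for a provider API, not a real SDK. The declarative function plays the role of an IaC engine: it compares desired and actual state and computes the steps itself, which is also how it corrects drift.

```python
from dataclasses import dataclass, field

@dataclass
class FakeCloud:
    """Hypothetical in-memory stand-in for a cloud provider API."""
    servers: set[str] = field(default_factory=set)

    def create(self, name: str) -> None:
        self.servers.add(name)

    def delete(self, name: str) -> None:
        self.servers.discard(name)

def imperative_setup(cloud: FakeCloud) -> None:
    # Imperative: spell out each step. Servers the script never mentions
    # (for example, ones added by hand) are left in place, so drift persists.
    cloud.create("web-1")
    cloud.create("web-2")

def declarative_apply(cloud: FakeCloud, desired: set[str]) -> None:
    # Declarative: state only the end state; the "engine" derives the steps
    # needed to converge (create what is missing, delete what is extra).
    for name in desired - cloud.servers:
        cloud.create(name)
    for name in cloud.servers - desired:  # set difference is a new set, safe to mutate
        cloud.delete(name)
```

Running `declarative_apply` twice with the same desired state changes nothing the second time; that idempotence is what makes declarative definitions repeatable.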

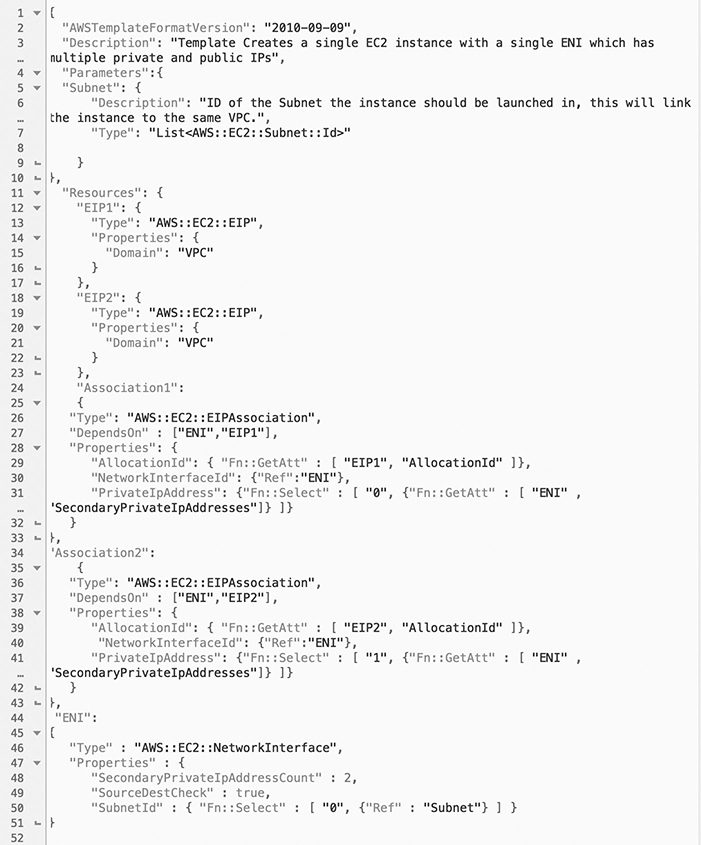

Figure 6-5 is a snapshot of an AWS CloudFormation template used to create an EC2 instance with a network interface that has both public and private IPs. CloudFormation is among the well-supported IaC solutions on the market right now.

Figure 6-5 Amazon CloudFormation template example
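Because a CloudFormation template is structured data (JSON or YAML), it can also be generated programmatically. The Python sketch below builds a template similar in spirit to Figure 6-5, not a copy of it: one EC2 instance whose network interface receives a private IP from its subnet and, through `AssociatePublicIpAddress`, a public IP as well. The AMI ID and instance type are placeholders, and the subnet and security group are supplied as template parameters.

```python
import json

template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        "SubnetId": {"Type": "AWS::EC2::Subnet::Id"},
        "SecurityGroupId": {"Type": "AWS::EC2::SecurityGroup::Id"},
    },
    "Resources": {
        "WebInstance": {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                "ImageId": "ami-0123456789abcdef0",  # placeholder AMI ID
                "InstanceType": "t3.micro",          # placeholder size
                "NetworkInterfaces": [{
                    "DeviceIndex": "0",
                    "SubnetId": {"Ref": "SubnetId"},
                    "GroupSet": [{"Ref": "SecurityGroupId"}],
                    # Private IP comes from the subnet; also request a public IP.
                    "AssociatePublicIpAddress": True,
                }],
            },
        }
    },
}

if __name__ == "__main__":
    print(json.dumps(template, indent=2))
```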

Though IaC is often used to improve a development team’s security posture by ensuring consistent environments that are up-to-date on patches, you should be aware of some issues when using the technology. The vulnerabilities associated with IaC solutions don’t lie in the templates used to create the environments, but in the processes used to spin up services. The templates don’t cause the issue per se, but if a developer trusts a template that then calls an insecure service, or a developer establishes a service with insecure qualities, she unwittingly introduces risk into her operations. So with better processes in place, this potential vulnerability can actually turn into a power tool to standardize security practices across the software development process at an organization.
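One way to make that process standard is to check templates automatically before anything is deployed, for example as a CI/CD pipeline step. The following Python sketch is a deliberately small, hypothetical policy check, not an existing tool: it flags `AWS::EC2::SecurityGroup` resources whose inline ingress rules expose SSH (22) or RDP (3389) to `0.0.0.0/0`. Dedicated policy-as-code scanners cover far more cases.

```python
def find_open_ingress(template: dict, sensitive_ports=(22, 3389)) -> list[str]:
    """Flag security groups that open sensitive ports to the whole Internet.

    Only inline SecurityGroupIngress rules on AWS::EC2::SecurityGroup
    resources are checked; this is an illustrative subset of a real policy.
    """
    findings = []
    for name, resource in template.get("Resources", {}).items():
        if resource.get("Type") != "AWS::EC2::SecurityGroup":
            continue
        rules = resource.get("Properties", {}).get("SecurityGroupIngress", [])
        for rule in rules:
            if rule.get("CidrIp") != "0.0.0.0/0":
                continue
            if str(rule.get("IpProtocol")) == "-1":
                low, high = 0, 65535  # protocol -1 means all traffic
            else:
                low = int(rule.get("FromPort", 0))
                high = int(rule.get("ToPort", 65535))
            for port in sensitive_ports:
                if low <= port <= high:
                    findings.append(f"{name}: port {port} open to 0.0.0.0/0")
    return findings
```

A pipeline could fail the build whenever the returned list is nonempty, so an insecure template never reaches deployment.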

Insecure Application Programming Interface

Nearly all cloud service providers offer a means for users to interface with their services by way of an exposed application programming interface (API). With the correct credentials, users can interact via the API with the service to initiate actions such as provisioning, management, orchestration, and monitoring. Since the API is a potential avenue for unwanted action, it must be protected from abuse, and any API credentials must be protected in the same way as any other secret.

In addition to securing API credentials, you must assess the security of the interfaces themselves. Among the many web security projects run by the Open Web Application Security Project (OWASP) Foundation is the API Security Project, an effort to identify vulnerabilities unique to APIs and provide solutions to mitigate them. We’ll explore its top ten vulnerabilities in the following sections.

Broken Object Level Authorization

When exposing services via API, some servers fail to perform authorization checks on a per-object basis, potentially allowing attackers to access resources they are not authorized to access. In some cases, an attacker can simply change the object identifier in a URI to reference a target resource and gain access. Object level authorization checks should always be implemented, with access granted based on the specific role of the user. Additionally, object identifiers should be randomly generated values, such as random universally unique identifiers (UUIDs), so that an attacker cannot successfully enumerate them.
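A minimal Python sketch of both recommendations follows. The in-memory store, the `Invoice` type, and the ownership rule are hypothetical; a real service would evaluate its own role-based policy where this code compares owners. Identifiers come from `uuid.uuid4()`, which generates random (version 4) UUIDs.

```python
import uuid
from dataclasses import dataclass

@dataclass
class Invoice:
    owner_id: str
    amount: int

class Forbidden(Exception):
    """Raised when the caller may not access the requested object."""

# Hypothetical in-memory store keyed by random UUIDs, which, unlike
# sequential integers, are impractical to guess or enumerate.
INVOICES: dict[str, Invoice] = {}

def create_invoice(owner_id: str, amount: int) -> str:
    invoice_id = str(uuid.uuid4())
    INVOICES[invoice_id] = Invoice(owner_id, amount)
    return invoice_id

def get_invoice(caller_id: str, invoice_id: str) -> Invoice:
    invoice = INVOICES.get(invoice_id)
    # Object-level check on every request: being authenticated is not enough;
    # the caller must be authorized for this specific object. The same error
    # is raised for "missing" and "not yours" so IDs cannot be probed.
    if invoice is None or invoice.owner_id != caller_id:
        raise Forbidden(invoice_id)
    return invoice
```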

Broken User Authentication

Authentication mechanisms exist to give legitimate users access to resources, provided they are able to present the correct credentials. When these mechanisms fail to validate credentials correctly, allow credentials that are too weak, accept credentials in an insecure manner, or allow brute-forcing of credentials, they create conditions that an attacker can take advantage of. To prevent potential issues related to broken authentication, analysts should determine and test all the ways a user can authenticate to all APIs, use short-lived tokens, mandate two-factor authentication where practical, and enforce rate-limiting and password strength policies.
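To illustrate the short-lived token recommendation, here is a Python sketch of an HMAC-signed token that carries its own expiry. The token format, the 15-minute lifetime, and the hard-coded secret are assumptions for illustration only; production APIs should rely on a vetted standard and library (for example, OAuth 2.0 access tokens) rather than a hand-rolled format.

```python
import hashlib
import hmac
import time
from typing import Optional

# Assumption: in practice this secret is random and loaded from a secrets manager.
SECRET = b"replace-with-a-random-server-side-secret"
TOKEN_TTL_SECONDS = 15 * 60  # hypothetical 15-minute lifetime

def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()

def issue_token(user_id: str, now: Optional[float] = None) -> str:
    """Return a token of the form user_id:expiry:signature."""
    issued = time.time() if now is None else now
    payload = f"{user_id}:{int(issued + TOKEN_TTL_SECONDS)}"
    return f"{payload}:{_sign(payload)}"

def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the user ID if the token is authentic and unexpired, else None."""
    try:
        user_id, expiry, signature = token.rsplit(":", 2)
        expires_at = int(expiry)
    except ValueError:
        return None  # malformed token
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(signature, _sign(f"{user_id}:{expiry}")):
        return None
    current = time.time() if now is None else now
    return user_id if current < expires_at else None
```

A stolen token of this kind is useful to an attacker only until it expires, which is the point of keeping lifetimes short.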

Excessive Data Exposure

Many APIs return the full contents of an object and rely on the client to do further filtering. The response may then contain irrelevant data or, worse yet, expose data the user should not have access to. API responses should be as specific as possible while still being complete. This requires thought and effort to create complete descriptions of what each API schema looks like, along with constant monitoring of the contents of response data. If developers expect that an API response may include protected health information (PHI), personally identifiable information (PII), or other sensitive information, they should consider enforcing additional checks or requiring separate API calls for that subset of special data.
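Building responses from an explicit allowlist, rather than returning the stored object and trusting the client to filter it, is one straightforward way to keep responses specific. The field names in this Python sketch are hypothetical.

```python
# Allowlist of fields a user-profile response may contain. Anything not
# listed here (password hashes, government IDs, internal flags) is dropped
# on the server instead of being sent to the client for filtering.
PUBLIC_USER_FIELDS = ("id", "display_name", "avatar_url")

def serialize_user(record: dict) -> dict:
    """Return only the allowlisted fields present in a stored user record."""
    return {name: record[name] for name in PUBLIC_USER_FIELDS if name in record}
```

With this design, a new sensitive column added to the database stays private until someone deliberately adds it to the allowlist, so the failure mode is safe.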

Lack of Resources and Rate Limiting

APIs do not always impose restrictions on the data returned to users after a legitimate request, which may make them vulnerable to resource exhaustion if abused. As with excessive data exposure, this occurs when the API developer expects the client to perform the heavy lifting. The most effective solution to prevent this is rate limiting or throttling. Rate limiting measures are often put in place to protect services from excessive use and to maintain service availability. Even though cloud services are built to be highly scalable and available, they are still susceptible to overconsumption of resources or bandwidth from malicious or unwitting users. Combining this with limitations on payload or the use of pagination will prevent responses from overwhelming clients.
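Rate limiting is commonly implemented with a token bucket, a technique the text does not name but that fits its description. The Python sketch below pairs a per-client token bucket with a capped page size. The rate, capacity, and `MAX_PAGE_SIZE` values are hypothetical, and the clock is injectable so the behavior can be tested deterministically.

```python
import time

class TokenBucket:
    """Per-client limiter: refills `rate` tokens/second, holds at most `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller would typically answer HTTP 429 Too Many Requests

MAX_PAGE_SIZE = 100  # hypothetical cap on items per response

def paginate(items: list, page: int, page_size: int) -> list:
    """Return one page, clamping page_size so a client cannot request everything."""
    size = max(1, min(page_size, MAX_PAGE_SIZE))
    start = max(0, page) * size
    return items[start:start + size]
```

The capacity allows short bursts from legitimate clients, while the refill rate bounds sustained consumption.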

Broken Function Level Authorization

As with object level authorization problems, function level authorization vulnerabilities arise because of the complexity of setting up access policies. The simplest solutions are to take a restrictive, default-deny approach to access and to evaluate each request against the permissions explicitly granted to the caller’s roles and groups.
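A default-deny check can be sketched in Python as a decorator that refuses to run a function unless one of the caller’s roles explicitly grants it. The role names, the permission map, and the `requires` decorator are hypothetical.

```python
import functools

# Hypothetical role-to-function grants. Roles or functions missing from
# this map get no access: the default is deny.
PERMISSIONS = {
    "viewer": {"get_report"},
    "admin": {"get_report", "delete_user"},
}

def is_allowed(roles: set, function_name: str) -> bool:
    """Allow only if at least one of the caller's roles grants the function."""
    return any(function_name in PERMISSIONS.get(role, set()) for role in roles)

def requires(function_name: str):
    """Decorator enforcing function-level authorization on every call."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(caller_roles: set, *args, **kwargs):
            if not is_allowed(caller_roles, function_name):
                raise PermissionError(f"{function_name} denied")
            return fn(caller_roles, *args, **kwargs)
        return wrapper
    return decorator

@requires("delete_user")
def delete_user(caller_roles: set, user_id: str) -> str:
    return f"deleted {user_id}"
```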

Mass Assignment

Mass assignment is a vulnerability that may allow an attacker to guess object fields they should not normally have access to, or to modify server-side variables the web app never intended to expose. In 2012, GitHub experienced a security incident as a result of an exploited mass assignment vulnerability. An unauthorized attacker was able to upload his public key to the platform, giving him read/write access to the Ruby on Rails organization’s code repository. While the attacker did not cause widespread problems, the incident demonstrated the importance of validating inputs and restricting users to read-only access as a default condition.
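The usual defense is to bind request data to server-side objects only through an explicit allowlist of updatable fields. In the following Python sketch, the field names are hypothetical; rejecting unexpected fields outright, rather than silently dropping them, is a design choice that makes probing attempts visible as errors.

```python
# Fields a client may change through a profile-update endpoint. Privileged
# attributes such as "is_admin" or "ssh_keys" are deliberately absent.
UPDATABLE_FIELDS = {"display_name", "email"}

def apply_profile_update(user: dict, payload: dict) -> dict:
    """Return an updated copy of `user`, accepting only allowlisted fields."""
    unexpected = set(payload) - UPDATABLE_FIELDS
    if unexpected:
        # Reject rather than silently drop, so probing attempts surface.
        raise ValueError(f"fields not updatable: {sorted(unexpected)}")
    updated = dict(user)
    updated.update(payload)
    return updated
```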

Security Misconfiguration

Default configurations, open access, open cloud storage, misconfigured headers, and verbose error messages all fall under the security misconfiguration umbrella. Misconfigurations are responsible for a significant number of security issues with APIs, but by following a checklist, you can mitigate vulnerabilities consistently and easily. Automation tools may also assist you in creating deployments that are hardened and well-defined, and that contain the minimal set of features required to provide a service.

Injection

Injection flaws occur when untrusted data is supplied to a system and executed blindly by an interpreter. An attacker’s malicious input can trick the interpreter into executing commands or granting access to otherwise closed-off data. The most common types of API call injection include SQL, LDAP, operating system command, and XML injection. Input sanitization, validation, and filtering are particularly effective against this technique. On the response side, developers should limit API outputs to prevent data leaks.
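For SQL, the most reliable complement to validation is the parameterized query, in which the database driver treats user input strictly as data. The Python sketch below uses the standard library’s `sqlite3` module with a hypothetical `users` table and contrasts an unsafe, string-built query with a parameterized one.

```python
import sqlite3

def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # VULNERABLE: input is pasted into the SQL text, so "x' OR '1'='1"
    # rewrites the WHERE clause to match every row.
    sql = f"SELECT id, username FROM users WHERE username = '{username}' ORDER BY id"
    return conn.execute(sql).fetchall()

def find_user(conn: sqlite3.Connection, username: str) -> list:
    # Parameterized: the ? placeholder binds input as a value, never as SQL.
    sql = "SELECT id, username FROM users WHERE username = ? ORDER BY id"
    return conn.execute(sql, (username,)).fetchall()
```

Against a table containing `alice` and `bob`, the input `x' OR '1'='1` returns both rows from `find_user_unsafe` and no rows from `find_user`.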

Improper Asset Management

At this point, you’ve no doubt realized that asset management plays as important a role as any technical implementation when it comes to managing vulnerabilities. Mismanaged API assets lead to all kinds of data exposure problems. Attackers will often find the data they’re looking for in deprecated, testing, or staging API environments that developers have forgotten about or have failed to harden as they would production environments. Keeping an up-to-date inventory of all API endpoints and retiring those no longer in use will prevent many vulnerabilities related to improper asset management. For testing environments, effective controls include limiting access to training data and maintaining the same level of rigor in the authentication process as in production.

Insufficient Logging and Monitoring

You cannot act on what you can’t see. As with all security events, visibility is important in knowing when traffic, user behavior, and, in this case, API usage deviate from the norm. The lack of proper logging, monitoring, and alerting enables attackers to go unnoticed. This applies not just to the existence of logging capability but to the protections around it as well. Security teams should log failed attempts, denied access, input validation failures, and any other relevant security-related events. Logs should be formatted and normalized so that they are easy to ingest into centralized monitoring systems or to hand off to other tools. Finally, logs should be protected from access and tampering, like any other sensitive data.
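Emitting security events as one JSON object per line is a simple way to make logs easy to normalize and ingest. The Python sketch below uses the standard `logging` module; the logger name, the `fields` convention, and the event fields are hypothetical. A side benefit of `json.dumps` is that newlines in attacker-controlled values are escaped, so a crafted username cannot forge extra log lines.

```python
import json
import logging
import time

class JsonFormatter(logging.Formatter):
    """Format each record as a single line of JSON for centralized ingestion."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime(record.created)),
            "level": record.levelname,
            "event": record.getMessage(),
        }
        # Merge structured fields passed as logger.warning(..., extra={"fields": {...}}).
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry)

logger = logging.getLogger("api.security")
_handler = logging.StreamHandler()
_handler.setFormatter(JsonFormatter())
logger.addHandler(_handler)
logger.setLevel(logging.INFO)

def log_auth_failure(user: str, source_ip: str, reason: str) -> None:
    """Record a failed authentication attempt as a structured event."""
    logger.warning("auth_failure",
                   extra={"fields": {"user": user, "src_ip": source_ip, "reason": reason}})
```

Shipping these lines to a separate, access-controlled log store addresses the tampering concern, which formatting alone does not.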

Improper Key Management

In many cases, data owners cannot physically reach out and touch the hard drives on which their data is stored, and this reality highlights the importance of finding a reliable encryption solution. Regardless of which service or deployment model the organization chooses, encryption provides the means to protect data at every phase of processing, storage, and transport. Even for customers whose security or data residency policies mandate on-premises private cloud solutions, encryption is just as important. Whether it is used to protect against internal threats or unauthorized disclosure, reliable encryption remains a priority.

The distributed and decentralized nature of cloud computing, however, often makes key management and encryption more complex for administrators and defenders. Cloud customers can often take advantage of encryption services offered by the service provider. This often means more reliable integration and better performance than homebrew solutions can offer. Additionally, using a vendor’s solution reduces the amount of cryptographic processing your organization must perform on its end. Some providers also offer hardware-based options, such as a hardware security module (HSM) service, that enable customers to generate and manage their own encryption keys easily in the cloud.

One common method to ensure that sensitive data is never exposed to cloud providers is to encrypt data at the customer interface before shipping it to the cloud. This not only ensures that the data cannot be viewed in transit, but also protects the data if tenancy controls fail. This method is frequently used by organizations that want to enjoy the benefits of the cloud but may not have the resources or personnel to manage their own private setup. The main drawbacks of this method include the upfront cost of architecting such a solution and the increased computational resources required on the customer side to perform the initial encryption.

As with credentials, if an attacker gains access to encryption keys, the fallout can be major. Protecting keys from human error is also crucial. Although some cloud service providers may offer a mechanism to recover lost private keys, an organization that manages its own keys and somehow loses them may lose the data forever.
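
Much of key management is bookkeeping: knowing which key version protected which item, keeping old versions available after rotation, and understanding what happens when a version disappears. Python’s standard library has no symmetric cipher, so the sketch below uses an HMAC tag where a real system would store ciphertext; with real encryption, a destroyed key version means unreadable data, which is the permanent loss described above. `KeyRing`, `MissingKeyError`, and their methods are hypothetical, and in practice the keys would live in a provider key management service or an HSM rather than in process memory.

```python
import hashlib
import hmac
import secrets

class MissingKeyError(LookupError):
    """Raised when the key version that protected an item no longer exists."""

class KeyRing:
    """Hypothetical versioned key store; each protected item records its key version."""

    def __init__(self):
        self._keys = {}
        self.current_version = 0
        self.rotate()

    def rotate(self) -> int:
        # New items use the new key; old versions stay available for existing items.
        self.current_version += 1
        self._keys[self.current_version] = secrets.token_bytes(32)
        return self.current_version

    def destroy(self, version: int) -> None:
        # Models deliberate key destruction or accidental key loss.
        del self._keys[version]

    def protect(self, data: bytes):
        """Return (key_version, tag); HMAC-SHA256 stands in for encryption here."""
        key = self._keys[self.current_version]
        return self.current_version, hmac.new(key, data, hashlib.sha256).digest()

    def check(self, data: bytes, version: int, tag: bytes) -> bool:
        key = self._keys.get(version)
        if key is None:
            raise MissingKeyError(f"key version {version} is gone; its data cannot be processed")
        expected = hmac.new(key, data, hashlib.sha256).digest()
        return hmac.compare_digest(expected, tag)

ring = KeyRing()
version, tag = ring.protect(b"customer record")
ring.rotate()                                        # new items now use version 2
print(ring.check(b"customer record", version, tag))  # True: version 1 was retained
ring.destroy(version)                                # the old key is lost
try:
    ring.check(b"customer record", version, tag)
except MissingKeyError as err:
    print(err)  # key version 1 is gone; its data cannot be processed
```

Recording the version alongside each item is what lets rotation proceed without orphaning older data; deleting a version is safe only once nothing still depends on it.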

Unprotected Storage

Along with malicious activity by internal or external actors, misconfiguration of servers and services is often at the root of security issues. Enterprise data sets can easily be exposed if administrators aren’t aware that they have been made publicly accessible. Cloud storage options such as Amazon Simple Storage Service (S3) are frequent sources of inadvertent exposure. Although these storage services are private by default, permissions may be altered in error, making the data accessible to anyone.

The impact of such leaks can be quite high. Consider, for example, the 2017 discovery of a publicly accessible S3 bucket linked to the defense and intelligence contracting company Booz Allen Hamilton. Analysts at security firm UpGuard determined that the unprotected bucket contained files connected to the US National Geospatial-Intelligence Agency (NGA), the US military’s intelligence agency responsible for providing imagery intelligence. Later that year, the same team discovered data belonging to the US Army Intelligence and Security Command (INSCOM), an organization tasked with gathering intelligence for US military, intelligence, and political leaders. Data from that exposure included details on sensitive communications networks. Exposures of such sensitive information highlight, in part, the difficulty of using cloud storage solutions securely. Surely there is no lack of understanding as to the nature of this data and the impact of its unauthorized disclosure, so how could organizations so experienced with protecting data fall victim?

It’s important to reiterate that these kinds of leaks aren’t always the result of hackers or careless IT admins; more often, they are a product of flaws in business processes that don’t fully consider the access that cloud services provide. Though security through obscurity may have been a marginally viable practice years ago, it will absolutely not fly in today’s highly connected and automated world. Protecting these assets requires full visibility into the real-time state of cloud resources and the ability to remediate flaws as quickly as they are detected.
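
Checking storage permissions for grants to everyone is one small, automatable piece of that visibility. The Python sketch below reads an S3 bucket policy in the standard AWS JSON policy format and reports Allow statements whose principal is anyone (`"*"` or `{"AWS": "*"}`). It is deliberately narrow: it ignores Deny statements, object and bucket ACLs, and S3 Block Public Access settings, all of which also affect real exposure. The function names and sample bucket are hypothetical.

```python
import json

def _allows_everyone(principal) -> bool:
    """True for "*" or {"AWS": "*"} / {"AWS": [..., "*", ...]}."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        aws = principal.get("AWS", [])
        if isinstance(aws, str):
            aws = [aws]
        return "*" in aws
    return False

def public_statements(policy_json: str):
    """Return (statement id, finding) for Allow statements granted to any principal."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may appear without a list
        statements = [statements]
    findings = []
    for index, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow" or not _allows_everyone(stmt.get("Principal")):
            continue
        sid = stmt.get("Sid", f"Statement[{index}]")
        if "Condition" in stmt:
            # Conditions (e.g. source IP restrictions) may narrow access; review by hand.
            findings.append((sid, "any principal, limited by a Condition"))
        else:
            findings.append((sid, "any principal, no Condition: public"))
    return findings

policy = json.dumps({
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "PublicRead", "Effect": "Allow", "Principal": "*",
         "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Sid": "AppRole", "Effect": "Allow",
         "Principal": {"AWS": "arn:aws:iam::111122223333:role/app"},
         "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"},
    ],
})
print(public_statements(policy))  # [('PublicRead', 'any principal, no Condition: public')]
```

Run on a schedule against every bucket, a check like this turns an accidental permission change into an alert rather than a headline.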

Logging and Monitoring

When transitioning to a cloud solution, an organization may lose visibility into certain layers of the technology stack, particularly if it subscribes to PaaS or SaaS solutions. Because responsibility for protecting portions of the stack falls to the service provider, the organization sometimes loses monitoring capabilities, for better or worse. A major byproduct of the shared responsibility model in practice is that it has forced a paradigm shift for security teams. In a manner familiar to many security professionals, we now have to do more with less and learn to monitor and analyze activity related to our data and users without relying on network-based methodologies.
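
One way to monitor without network sensors is to analyze the provider’s control-plane audit records. The Python sketch below scans events shaped like AWS CloudTrail records (the `eventName`, `userIdentity`, `sourceIPAddress`, and `responseElements` fields follow that format) and raises alerts for calls that disable logging or change bucket access, plus repeated failed console logins from one address. The sample events are trimmed-down, made-up records, and the watched-call list and threshold are illustrative assumptions rather than recommended settings.

```python
from collections import Counter

# Calls that change what gets logged or who can reach stored data.
WATCHED_CALLS = {
    "StopLogging": "audit logging stopped",
    "DeleteTrail": "audit trail deleted",
    "PutBucketPolicy": "bucket policy changed",
    "PutBucketAcl": "bucket ACL changed",
}

def review_events(events, failed_login_threshold=3):
    """Return alert strings from a batch of CloudTrail-style event records."""
    alerts = []
    failed_logins = Counter()
    for event in events:
        name = event.get("eventName")
        actor = event.get("userIdentity", {}).get("arn", "unknown")
        if name in WATCHED_CALLS:
            alerts.append(f"{WATCHED_CALLS[name]} by {actor}")
        elif name == "ConsoleLogin":
            # responseElements may be null in real records, hence the `or {}`.
            result = (event.get("responseElements") or {}).get("ConsoleLogin")
            if result == "Failure":
                failed_logins[event.get("sourceIPAddress", "unknown")] += 1
    for source_ip, count in failed_logins.items():
        if count >= failed_login_threshold:
            alerts.append(f"{count} failed console logins from {source_ip}")
    return alerts

sample = [
    {"eventName": "ConsoleLogin", "sourceIPAddress": "203.0.113.7",
     "responseElements": {"ConsoleLogin": "Failure"}},
] * 3 + [
    {"eventName": "StopLogging", "sourceIPAddress": "198.51.100.20",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/ops-admin"}},
    {"eventName": "GetObject", "sourceIPAddress": "198.51.100.20"},
]

for alert in review_events(sample):
    print(alert)
```

Because these records come from the provider rather than from packet capture, this style of analysis keeps working even at layers of the stack the customer no longer controls.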

Chapter Review

A good cloud security strategy begins with implementing the policies and technical controls to identify and authenticate users correctly, the ability to assign users correct access rights, and the ability to create and enforce access control policies for resources. It is important to remember that cloud service providers use a shared responsibility model for security. Although providers accept responsibility for some portion of security, usually as it relates to the part of infrastructure under the provider’s purview, the subscribing organization is also responsible for taking the necessary steps to protect the rest. Throughout this chapter, we’ve provided awareness of the most common vulnerabilities related to cloud technologies and provided steps and resources to assist you in remediating them. Use these as a starting point in crafting your own cloud security strategy or in developing tactical guides on how to address cloud-related security issues that may come up.

Questions

1. Which cloud service model provides customers direct access to hardware, the network, and storage?

A. SaaS

B. IaaS

C. PaaS

D. SECaaS

2. Mechanisms that fail to provide access to resources on a granular basis may fall under which of the following categories?

A. Improper key management

B. Improper asset management

C. Injection

D. Broken object level authorization

3. Rate limiting is most often used as a mitigation to prevent exploitation of what kind of flaw?

A. Excessive data exposure

B. Broken function level authorization

C. Lack of resources

D. Mass assignment

Use the following scenario to answer Questions 4–8:

Your company is looking to cut costs by migrating several internally hosted services to the cloud. The company is primarily interested in providing all global employees access to productivity software and custom company applications, built by internal developers. The CIO has asked you to join the migration planning committee.

4. Which cloud service model do you recommend to enable developers to write custom code while also providing access to all employees, including those in remote offices?

A. SaaS

B. PaaS

C. IaaS

D. SECaaS

5. Pleased with the stability and access that the cloud solution has provided after six months, the CIO is now considering hiring more staff to manage more aspects of the cloud solution, including the underlying hardware, network, and storage resources required to support the company’s custom code. What model would you suggest that the company consider using?

A. SaaS

B. PaaS

C. IaaS

D. SECaaS

6. Although the new team is having success managing cloud-based hardware and software resources, they are looking for a new way to create development environments more efficiently and consistently. What technology do you recommend they adopt?

A. SECaaS

B. FaaS

C. IaC

D. SaaS

7. What is a major security consideration as your organization invests in the cloud service model you recommend?

A. Key management complexity

B. Increased cost of maintenance

C. Hypervisor update management

D. Physical security of new company data center

8. As your organization expands into offering more financial services, it wants to ensure that there is sufficient redundancy to maintain service availability while also allowing for the possibility of on-premises data retention. Which cloud deployment model is most suitable for these goals?

A. Public

B. Private

C. Community

D. Hybrid

Answers

1. B. Infrastructure as a Service (IaaS) offers an effective and affordable way for companies to get all of the benefits of managing their own hardware without massive overhead costs associated with acquisition, physical storage, and disposal of the hardware.

2. D. When exposing services via API, some servers fail to authorize on an object-level basis, potentially creating the opportunity for unauthorized attackers to access resources.

3. C. When an API places no limits on the resources a client can consume, rate limiting measures are often put in place to protect services from excessive use and to maintain service availability.

4. B. PaaS solutions are optimized for software development, offering direct access to a development environment so that an organization can build its own solutions on the provider’s cloud infrastructure rather than supplying its own infrastructure.

5. C. An IaaS solution provides the hardware, network, and storage resources necessary for the customer to install and maintain any operating system, dependencies, and applications they want.

6. C. IaC solutions enable developers to orchestrate the creation of custom development environments to deliver software products more quickly and with fewer problems.

7. A. The nature of cloud computing often makes key management and encryption more complex for administrators, especially as more layers of the stack have to be managed.

8. D. Hybrid clouds combine on-premises infrastructure with public clouds and offer the benefits of both models, while allowing the flexibility to remain in compliance with any external or internal data protection requirements.