Chapter 12

Message Ordering

12.1 Introduction

Distributed programs are difficult to design and test because of their nondeterministic nature, that is, a distributed program may exhibit multiple behaviors on the same external input. This nondeterminism is caused by reordering of messages in different executions. It is sometimes desirable to control this nondeterminism by restricting the possible message ordering in a system.

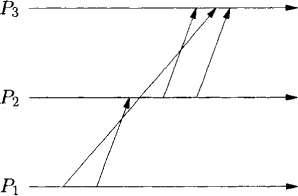

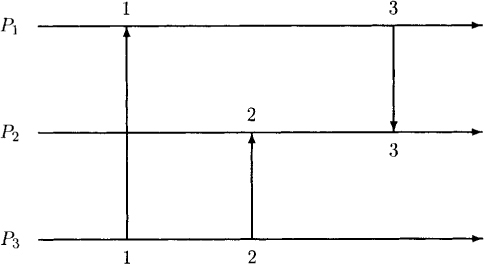

Figure 12.1: A FIFO computation that is not causally ordered

A fully asynchronous computation does not have any restriction on the message ordering. It permits maximum concurrency, but algorithms based on fully asynchronous communication can be difficult to design because they are required to work for all ordering of the messages. Therefore, many systems restrict message delivery to a FIFO order. This results in simplicity in design of distributed algorithms based on the FIFO assumption. For example, we used the FIFO assumption in Lamport’s algorithm for mutual exclusion and Chandy and Lamport’s algorithm for a global snapshot.

A FIFO-ordered computation is implemented generally by using sequence numbers for messages. However, observe that by using FIFO ordering, a program loses some of its concurrency. When a message is received out of order, its processing must be delayed.

A stronger requirement than FIFO is that of causal ordering. Intuitively, causal ordering requires that a single message not be overtaken by a sequence of messages. For example, the computation in Figure 12.1 satisfies FIFO ordering of messages but does not satisfy causal ordering. A sequence of messages from P1 to P2 and from P2 to P3 overtakes a message from P1 to P3 in this example. Causal ordering of messages is useful in many contexts. In Chapter 8, we considered the problem of mutual exclusion. Assume that we use a centralized coordinator for granting requests to the access of the critical section. The fairness property requires that the requests be honored in the order they are made (and not in the order they are received). It is easy to see that if the underlying system guaranteed a causal ordering of messages, then the order in which requests are received cannot violate the happened-before order in which they are made. For another example of the usefulness of causal ordering, see Problem 12.1.

The relationship among various message orderings can be formally specified on the basis of the happened-before relation. For convenience, we denote the receive event corresponding to the send event si by ri and vice versa. The message is represented as (si, ri). We also use si ↝ ri to denote that ri is the receive event corresponding to the send event si. Finally, we use e ≺ f to denote that e occurred before f in the same process.

Now, FIFO and causally ordered computations can be defined as follows:

FIFO: Any two messages from a process Pi to Pj are received in the same order as they were sent. Formally, let s1 and s2 be any two send events and r1 and r2 be the corresponding receive events. Then

s1 ≺ s2 ⇒ ¬(r2 ≺ r1) (FIFO)

Causally Ordered: Let any two send events s1 and s2 in a distributed computation be related such that the first send happened before the second send. Then, the second message cannot be received before the first message by any process. Formally, this can be expressed as

s1 → s2 ⇒ ¬(r2 ≺ r1) (CO)

12.2 Causal Ordering

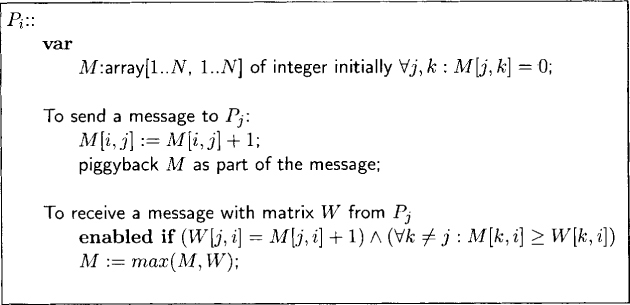

Figure 12.2: An algorithm for causal ordering of messages at Pi

We now describe an algorithm to ensure causal ordering of messages. We assume that a process never sends any message to itself. Each process maintains a matrix M of integers. The entry M[j, k] at Pi records the number of messages sent by process Pj to process Pk as known by process Pi. The algorithm for process Pi is given in Figure 12.2. Whenever a message is sent from Pi to Pj, first the entry M[i, j] is incremented to reflect the fact that one more message is known to be sent from Pi to Pj. The matrix M is piggybacked with the message. Whenever messages are received by the communication system at Pi, they are first checked for eligibility before delivery to Pi. If a message is not eligible, it is simply buffered until it becomes eligible. A message m from Pj is eligible to be received when

1. The entry W[j, i] is one more than the entry M[j, i] that records the number of messages received by Pi from Pj.

2. The number of messages sent from any other process Pk(k ≠ j) to Pi, as indicated by the matrix W in the message, is less than or equal to the number recorded in the matrix M. Formally, this condition is

∀k ≠ j : M[k, i] ≥ W[k, i]

If for some k, W[k, i] > M[k, i], then there is a message that was sent in the causal history of the message and has not arrived yet. Therefore, P, must wait for that message to be delivered before it can accept the message m.

Whenever a message is accepted for delivery, the information at matrix M is updated with the matrix W received in the message.

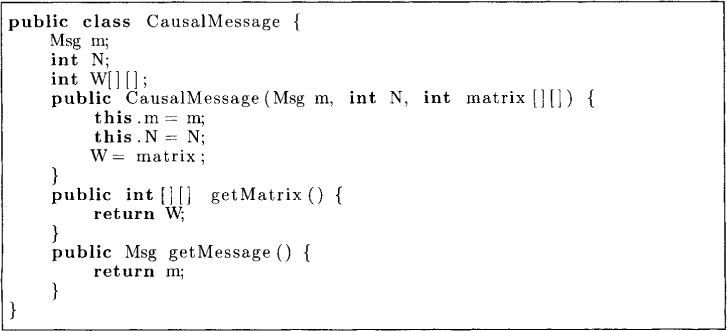

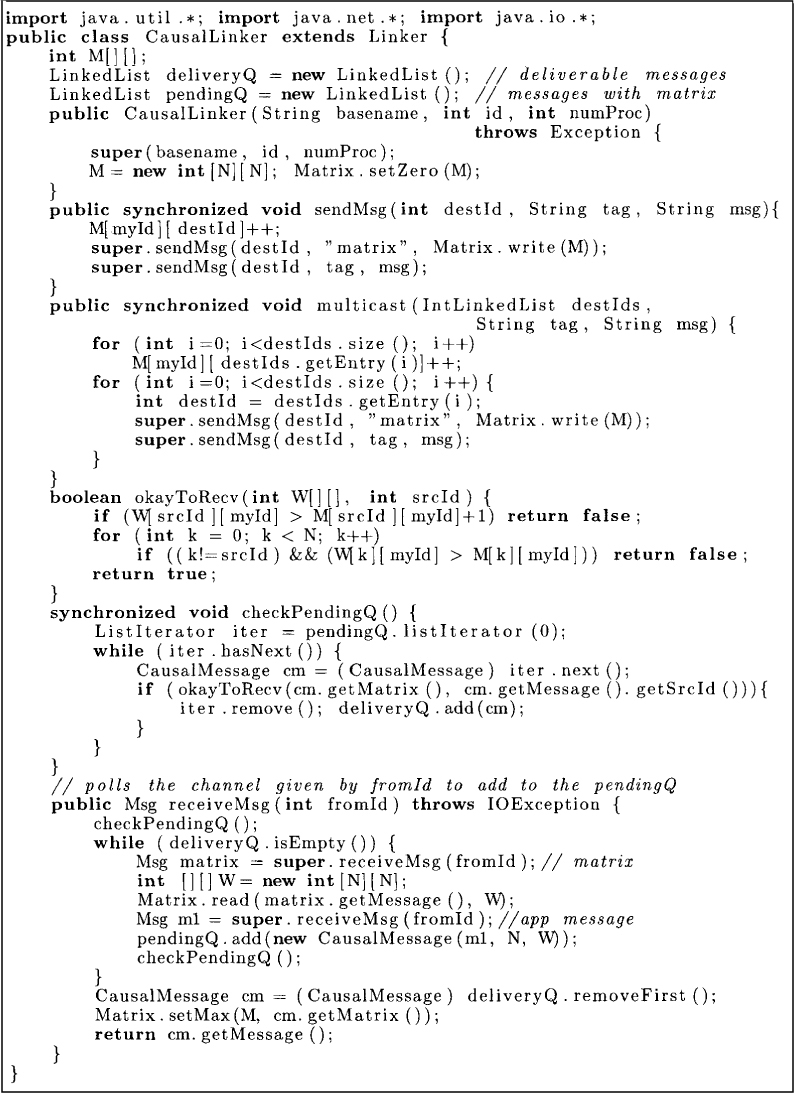

The structure of a causal message is shown in Figure 12.3, and the Java implementation of the causal ordering algorithm is shown in Figure 12.4. The causal ordering algorithm extends the class Linker to include the matrix in outgoing messages. The method sendMsg increments the entry M[myId][destId] to account for this message and attaches the matrix M with it. The method multicast is used for sending a message to multiple sites. In this method, we first increment M[myId][destId] for all destId in the list of destinations. It is this matrix that is sent with every message.

The method okayToReceive determines whether a message can be delivered to the process. The method receiveMsg uses two LinkedList for storing messages. The deliverQ stores all messages that are deliverable to the application layer. The pendingQ stores all messages that are received but are not deliverable. When the application layer asks for a message, the pendingQ is traversed first to check whether some messages are deliverable. Deliverable messages are moved from the pendingQ to the deliveryQ by the method checkPendingQ. If deliveryQ is empty, then we wait for a message to arrive by calling the blocking method super.receiveMsg. On receiving this message, it is put in the pendingQ and the method checkPendingQ is invoked again. If deliveryQ is nonempty, the first message from that queue is delivered and the matrix M updated to record the delivery of this message.

Figure 12.3: Structure of a causal message

Figure 12.4: CausalLinker for causal ordering of messages

12.2.1 Application: Causal Chat

To illustrate an application of causal ordering, we consider a chat application in which a user can send messages to multiple other users. This simple program, shown in Figure 12.5, takes as input from the user a message and the list of destination process identifiers. This message is then multicast to all the process identifiers in the list.

The application takes as an argument the message ordering to be used. The user can verify that if the plain Linker class were used in this application, then the following scenario would be possible. If P0 sends a query to both P1 and P2, and P1 sends a reply to the query to both P0 and P2, then P2 may receive the reply before the query. On the other hand, if the class CausalLinker is used, then such a scenario is not possible.

12.3 Synchronous Ordering

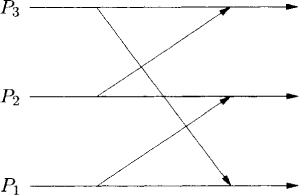

Synchronous ordering is a stronger requirement than causal ordering. A computation satisfies synchronous ordering of messages if it is equivalent to a computation in which all messages are logically instantaneous. Figure 12.6 gives an example of a synchronously ordered computation and Figure 12.7, an example of a computation that does not satisfy synchronous ordering.

Algorithms for synchronous systems are easier to design than those for causally ordered systems. The model of synchronous message passing lets us reason about a distributed program under the assumption that messages are instantaneous or “points” rather then “intervals” (i.e., we can always draw the time diagrams for the distributed programs with the message arrows being vertical). If we assume messages as points instead of intervals, we can order the messages as a partial order and therefore, we can have vector clocks with respect to messages. One application for synchronous ordering of messages is that it enables us to reason about distributed objects as if they were centralized. Assume that a process invokes an operation on a remote object by sending a message. If synchronous ordering of messages is assumed, then all operations on the objects can be ordered according to when the messages are sent because messages can be considered instantaneous.

A computation is synchronous if its time diagram can be drawn such that all message arrows are vertical, that is, all external events can be assigned a timestamp such that time increases within a single process, and for any message its send and receive are assigned the same timestamp. Formally, let ε be the set of all external events. Then, a computation is synchronous iff there exists a mapping T from ε to the set of natural numbers such that for all s, r, e, f ∈ ε

Figure 12.5: A chat program

Figure 12.6: A computation that is synchronously ordered

Figure 12.7: A computation that is not synchronously ordered

s ↝ r ⇒ T(s) = T(r)

and

e ≺ f ⇒ T(e) < T(f)

We call this condition SYNC. It is easy to see that, for any two external events e and f

(e → f) ∧ ¬(e ↝ f) ⇒ T(e) < T(f). (12.1)

We show that the hierarchy associated with the various message orderings is

Synchronous ⊆ causally ordered ⊆ FIFO ⊆ asynchronous.

FIFO ⊆ asynchronous is obvious. A causally ordered computation satisfies FIFO because

s1 ≺ s2 ⇒ s1 → s2.

We only need to show that if a computation is synchronous then it is also causally ordered. Because the communication is synchronous, there exists a function T satisfying SYNC.

For any set of send events s1, s2 and receive events r1, r2 such that s1 ↝ r1, s2 ↝ r2 and s1 → s2:

T(s1) = T(r1, T(s2) = T(r2), and T(s1) < T(s2).

It follows that T(r1) < T(r2). Therefore, (12.1) implies

¬(r2 → r1).

The algorithm for synchronous ordering uses control messages. Note that control messages do not have to be synchronously ordered. Thus ε includes the send and receive events only of application messages. It does not include send and receive of control messages sent by the algorithm to ensure synchronous ordering.

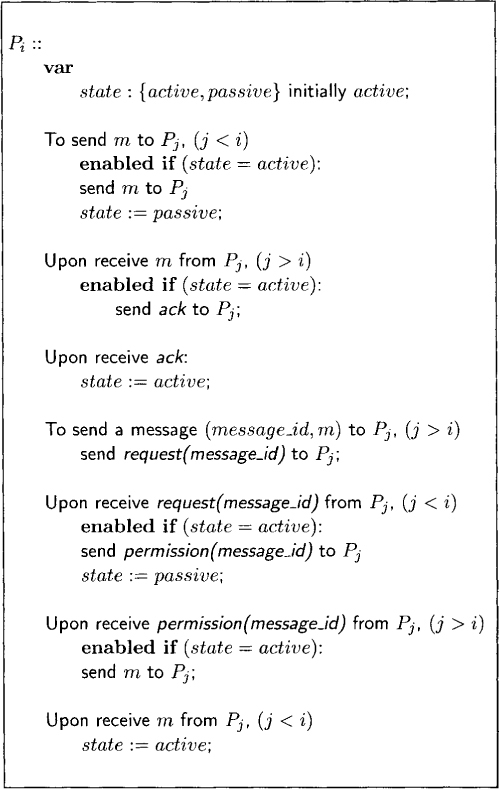

The algorithm shown in Figure 12.8 is for the process Pi. All processes have the same algorithm. Observe that the protocol to implement synchronous message ordering cannot be completely symmetric. If two processes desire to send messages to each other, then there is no symmetric synchronous computation that allows this—one of them must succeed before the other. To introduce asymmetry, we use process numbers to totally order all processes. We classify messages into big messages and small messages. A message sent from a (bigger) process to a smaller process is a big message and a message from a (smaller) process to a bigger process is called a small message. We assume that processes do not send messages to themselves.

In our algorithm, a process can be in two states—active or passive. Every process is initially active. We first consider the algorithm for a big message. A process is allowed to send a message to a smaller process only when it is active. After sending the message, it turns passive until it receives an ack message from the receiver of the message. While passive, it cannot send any other message, nor can it accept any other message. Note that the protocol for a message from a bigger process requires only one control message (ack).

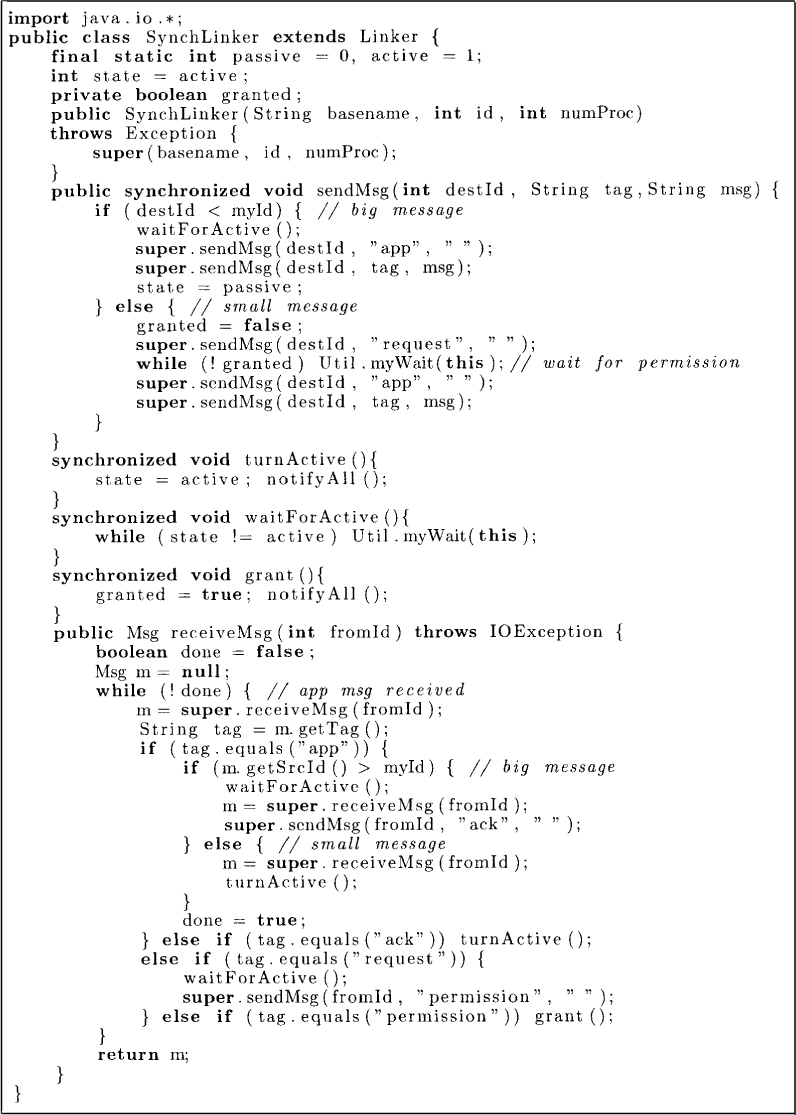

To send a message to a bigger process, say, Pj, process Pi first, needs permission from Pj. Pi can request the permission at any time. Pj can grant permission only when it is active. Furthermore, after granting the permission, Pj turns passive until it receives the message for which it has granted the permission. Thus the protocol for a message from a smaller process requires two control messages (request and permission). The implementation of synchronous ordering in Java is shown in Figure 12.9.

To prove correctness of the algorithm, we show that one can assign timestamps to all messages such that the timestamps of messages are in increasing order for any process. Each process maintains a local variable c that serves as the timestamping function for messages. The rules used by the algorithm are:

1. Timestamp proposal: When a process sends a big message, it increments c and sends c as the proposed timestamp with the message. For a small message, the timestamp is proposed in the permission message sent from the bigger process. Again, to make the proposal c is incremented and sent as a proposal. Thus the proposal of the timestamp is made by the bigger process for both types of messages. Note that as soon as a proposal is made, the process turns passive and cannot make any further proposals. A process can propose a timestamp only if it is active.

2. Timestamp finalization: When a process receives a proposal for a timestamp t, it can finalize the timestamp only if it is active. The timestamp assigned to this message is max(c + 1, t). This timestamp is sent with the ack message or the app message, depending on whether the message is big or small. The new value of c is set to the finalized timestamp. When the proposer receives the final timestamp of the message, it assigns that timestamp to the message and sets its own clock to the maximum of the timestamp received and its own timestamp.

It is easy to verify that

Figure 12.8: The algorithm at Pi for synchronous ordering of messages

Figure 12.9: The algorithm for synchronous ordering of messages

1. No process decreases its clock, and each process increases its clock by at least one for successive messages.

2. The send and receive points of a message have the same timestamp.

12.4 Total Order for Multicast Messages

For synchronous ordering, we had assumed that messages were point-to-point. In applications where a message may be sent to multiple processes, it is often desirable that all messages be delivered in the same order at all processes. For example, consider a server that is replicated at multiple sites for fault tolerance. If a client makes a request to the server, then all copies of the server should handle requests in the same order. The total ordering of messages can be formally specified as follows:

For all messages x and y and all processes P and Q, if x is received at P before y, then y is not received before x at Q. (Total Order)

We require that y not be received before x, rather than that x be received before y, to address the case where x is not sent to Q. Observe that we do not require that a message be broadcast to all processes.

In this section we discuss algorithms for the total ordering of messages. Observe that the property of total order of messages does not imply causal or even FIFO property of messages. Consider the case when P sends messages m1 followed by m2. If all processes receive m2 before m1, then the total order is satisfied even though FIFO is not. If messages satisfy causal order in addition to the total order, then we will call this ordering of messages causal total order.

The algorithms for ensuring total order are very similar to mutual exclusion algorithms. After all, mutual exclusion algorithms ensure that all accesses to the critical section form a total order. If we ensure that messages are received in the “critical section” order, then we are done. We now discuss centralized and distributed algorithms for causal total ordering of messages.

12.4.1 Centralized Algorithm

We first modify the centralized algorithm for mutual exclusion to guarantee causal total ordering of messages. We assume that channels between the coordinator process and other processes satisfy the FIFO property. A process that wants to multicast a message simply sends it to the coordinator. This step corresponds to requesting the lock in the mutual exclusion algorithm. Furthermore, in that algorithm, the coordinator maintains a request queue, and whenever a request by a process becomes eligible, it sends the lock to that process. In the algorithm for total ordering of messages, the coordinator will simply multicast the message corresponding to the request instead of sending the lock. Since all multicast messages originate from the coordinator, and the channels are FIFO, the total-order property holds.

In this centralized algorithm, the coordinator has to perform more work than the other nodes. One way to perform load balancing over time is by suitably rotating the responsibility of the coordinator among processes. This can be achieved through the use of a token. The token assigns sequence numbers to broadcasts, and messages are delivered only in this sequence order.

12.4.2 Lamport’s Algorithm for Total Order

We modify Lamport’s algorithm for mutual exclusion to derive an algorithm for total ordering of messages. As in that algorithm, we assume FIFO ordering of messages. We also assume that a message is broadcast to all processes. To simulate multicast, a process can simply ignore a message that is not meant for it. Each process maintains a logical clock (used for timestamps) and a queue (used for storing undelivered messages). The algorithm is given by the following rules:

• To send a broadcast message, a process sends a timestamped message to all processes including itself. This step corresponds to requesting the critical section in the mutual exclusion algorithm.

• On receiving a broadcast message, the message and its timestamp are stored in the queue, and a timestamped acknowledgment is returned.

• A process can deliver the message with the smallest timestamp, t, in the request queue if it has received a message with timestamp greater than t from every other process. This step corresponds to executing the critical section for the mutual exclusion algorithm.

In this algorithm, the total order of messages delivered is given by the logical clock of send events of the broadcast messages.

12.4.3 Skeen’s Algorithm

Lamport’s algorithm is wasteful when most messages are multicast and not broadcast. Skeen’s algorithm requires messages proportional to the number of recipients of a message and not the total number of processes in the system.

The distributed algorithm of Skeen also assumes that processes have access to Lamport’s logical clock. The algorithm is given by the following rules:

• To send a multicast message, a process sends a timestamped message to all the destination processes.

• On receiving a message, a process marks it as undeliverable and sends the value of the logical clock as the proposed timestamp to the initiator.

• When the initiator has received all the proposed timestamps, it takes the maximum of all proposals and assigns that timestamp as the final timestamp to that message. This value is sent to all the destinations.

• On receiving the final timestamp of a message, it is marked as deliverable.

• A deliverable message is delivered to the site if it has the smallest timestamp in the message queue.

In this algorithm, the total order of message delivery is given by the final timestamps of the messages.

12.4.4 Application: Replicated State Machines

Assume that we are interested in providing a fault-tolerant service in a distributed system. The service is expected to process requests and provide outputs. We would also like the service to tolerate up to t faults where each fault corresponds to a crash of a processor. We can build such a service using t + 1 processors in a distributed system as follows. We structure our service as a deterministic state machine. This means that if each nonfaulty processor starts in the same initial state and executes the requests in the same order, then each will produce the same output. Thus, by combining outputs of the collection, we can get a t fault-tolerant service. The key requirement for implementation is that all state machines process all requests in the same order. The total ordering of messages satisfies this property.

12.5 Problems

12.1. Assume that you have replicated data for fault tolerance. Any file (or a record) may be replicated at more than one site. To avoid updating two copies of the data, assume that a token-based scheme is used. Any site possessing the token can update the file and broadcast the update to all sites that have that file. Show that if the communication is guaranteed to be causally ordered, then the scheme described above will ensure that all updates at all sites happen in the same order.

12.2. Let M be the set of messages in a distributed computation. Given a message x, we use x.s to denote the send event and x.r to denote the receive event. We say that a computation is causally ordered if

∀x, y ∈ M: (x.s → y.s) ⇒ ¬(y.r → x.r).

We say that a computation is mysteriously ordered if

∀x,y ∈ M: (x.s → y.r) ⇒ ¬(y.s → x.r).

(a) Prove or disprove that every causally ordered computation is also mysteriously ordered.

(b) Prove or disprove that every mysteriously ordered computation is also causally ordered.

12.3. Show the relationship between conditions (C1), (C2), and (C3) on message delivery of a system.

s1 → s2 ⇒ ¬(r2 → r1) (C1)

s1 ≺ s2 ⇒ ¬(r2 → r1) (C2)

s1 → s2 ⇒ ¬(r2 ≺ r1) (C3)

where s1 and s2 are sends of any two messages and r1 and r2 are the corresponding receives. Note that a computation satisfies a delivery condition if and only if the condition is true for all pairs of messages.

12.4. Assume that all messages are broadcast messages. How can you simplify the algorithm for guaranteeing causal ordering of messages under this condition?

12.5. Consider a system of N + 1 processes {P0, P1, . . . , PN} in which processes P1 through PN can only send messages to P0 or receive messages from P0. Show that if all channels in the system are FIFO, then any computation on this system is causally ordered.

12.6. In this chapter, we have used the happened-before model for modeling the dependency of one message to the other. Thus all messages within a process are totally ordered. For some applications, messages sent from a process may be independent. Give an algorithm to ensure causal ordering of messages when the send events from a single process do not form a total order.

12.7. Suppose that a system is composed of nonoverlapping groups such that any communication outside the group is always through the group leader, that is, only a group leader is permitted to send or receive messages outside the group. How will you exploit this structure to reduce the overhead in causal ordering of messages?

12.8. Design an algorithm for synchronous ordering for point-to-point messages that does not use a static priority scheme. (Hint: Impose an acyclic directed graph on processes. The edge from Pi to Pj means that Pi is bigger than Pj for the purpose of sending messages. Give a rule by which the direction of edges is reversed, such that acyclicity of the graph is maintained.)

12.9. Prove the correctness of Lamport’s algorithm for providing causal total ordering of messages.

12.10. Prove the correctness of Skeen’s algorithm for providing total ordering of messages.

12.11. Build a multiuser Chat application in Java that guarantees that all users see all messages in the same order.

12.6 Bibliographic Remarks

Causal ordering was first proposed by Birman and Joseph [BJ87]. The algorithm for causal ordering described in this chapter is essentially the same as that described by Raynal, Schiper, and Toueg [RST91]. The algorithm for implementing synchronous ordering is taken from a paper by Murty and Garg [MG95]. For a discussion on total ordering of messages, see the article by Birman and Joseph [BJ87]. The distributed algorithm for causal total ordering of messages is implicit in the replicated state machine construction described by Lamport [Lam78]. Skeen’s algorithm is taken from the reference [Ske82].