Chapter 14

Synchronizers

14.1 Introduction

The design of distributed algorithms is easier if we assume that the underlying network is synchronous rather than asynchronous. A prime example is that of computing a breadth-first search (BFS) tree in a network. In this chapter, we assume that the network has N nodes, E edges, and its diameter is D. Assume that we are given a distinguished node v and our job is to build a breadth-first search tree rooted at v. A synchronous algorithm for this task is quite simple. We build the tree level by level. The node v is initially at level 0. A node at level i is required to send messages to its neighbors at pulse i. A process that receives one or more of these messages, and does not have a level number assigned yet, chooses the source of one of these messages as its parent and assigns itself level number i + 1. It is clear that if the graph is connected, then every node will have its level number assigned in at most D pulses assuming that any message sent at pulse i is received at pulse i + 1.

What if the underlying network is not synchronous? The corresponding problem on an asynchronous network is more difficult. This motivates methods by which a synchronous network can be simulated by an asynchronous network. We show that, in the absence of failures, this is indeed possible using a mechanism called a synchronizer. To simulate the synchronous algorithm on an asynchronous network, all we need is to use one of the synchronizers discussed in this chapter.

A synchronous network can be abstracted with the notion of a pulse, which is a counter at each process with the property that any message sent in pulse i is received at pulse i + 1. A synchronizer is simply a mechanism that indicates to a process when it can generate a pulse. In this chapter we will study synchronizers and their complexity.

To define properties of synchronizers formally, we associate a pulse number with each states on a process. It is initialized to 0 for all processes. A process can go from pulse i to i + 1 only if it knows that it has received and acted on all the messages sent during pulse i – 1.

Given the notion of a pulse, the execution of a synchronous algorithm can be modeled as a sequence of pulses. In each pulse, a process first receives messages from neighbors that were sent in previous round. It then performs internal computation based on the received messages. It also sends messages to its neighbors as required by the application. It can execute the next pulse only when indicated by the synchronizer. Thus a synchronizer can be abstracted by the following interface:

public interface Synchronizer extends MsgHandler {

public void initialize (MsgHandler initProg );

public void sendMessage (int destId, String tag, int msg);

public void nextPulse (); // block for the next pulse

}

There are two aspects of the complexity of a synchronizer—the message complexity and the time complexity. The message complexity indicates the additional number of messages required by the synchronizer to simulate a synchronous algorithm on top of an asynchronous network. The time complexity is the number of time units required to simulate one pulse, where a time unit is defined as the time required for an asynchronous message.

Some synchronizers have a nontrivial initialization cost. Let Minit be the number of messages and Tinit be the time required for initialization of the synchronizer. Let Mpulse and Tpulse respectively be the number of messages and the time required to simulate one pulse of a synchronous algorithm. If a synchronous algorithm requires Tsynch rounds and Msynch messages, then the complexity of the asynchronous protocol based on the synchronizer is given by

Masynch = Minit + Msynch + Mpulse * Tsynch

Tasynch = Tinit + Tpulse * Tsynch

We model the topology of the underlying network as an undirected, connected graph. We assume that processes never fail. It is not possible to simulate a synchronous algorithm on an asynchronous network when processes can fail. In Chapter 15, we show algorithms that can achieve consensus despite process failures in synchronous systems and that consensus is impossible in asynchronous systems when even a single process may fail. This implies that process failures cannot be tolerated in simulating synchrony. We also assume that all channels are reliable. Again, Chapter 15 shows that the consensus problem is impossible to solve when channels are unreliable.

14.2 A Simple Synchronizer

A simple synchronizer can be built using a rule stipulating that every process send exactly one message to all neighbors in each pulse. With this rule, a process can simply wait for exactly one message from each of its neighbors. To implement this rule, even if the synchronous algorithm did not require Pi to send any message to its neighbor Pj in a particular round, it must still send a “null” message to Pj. Furthermore, if the synchronous algorithm required Pi to send multiple messages, then these messages must be packed as a single message and sent to Pj.

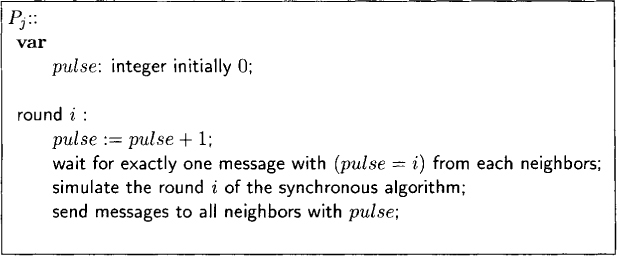

The simple synchronizer generates the next pulse for process p at pulse i when it has received exactly one message sent during pulse i from each of its neighbors. The algorithm is shown in Figure 14.1 and its Java implementation, in Figure 14.2.

Figure 14.1: Algorithm for the simple synchronizer at Pj

The algorithm in Figure 14.2 ensures that a process in pulse i receives only the messages sent in pulse i – 1.

The implementation in Java assumes FIFO and uses the following variables:

• pendingS: list of neighbors who have not been sent any message in this pulse

Figure 14.2: Implementation of the simple synchronizer

• pendingR: list of neighbors from which no message has been received in this pulse

• rcvEnabled[j]: whether the process can receive a message from Pj in this round.

The method initialize sets pendings and pendingR for all neighbors and the variable pulse to 0. We have assumed that the communication topology is given by an undirected graph and that comm.neighbors has the list of all neighbors.

The method handleMsg is implemented as follows. When a message is received at the application, it is determined whether any message has already been received from the source in the current pulse. If there is such a message, then this message belongs to the next pulse and the process waits for rcvEnabled[src] to become true. Otherwise, this message is meant for this pulse and source is removed from the list pendingR. At this point, the tag of the message is checked to see if it is a null message (of type synchNull) used only for the synchronizer. If it is not, the message is passed on to the application. If a message has been received in this pulse from each of the neighbors, that is, pendingR is empty, then the application can continue to the next pulse and the thread that may be blocked in nextPulse is signaled. To send a message, we simply remove the destination from the list pendingS.

Whenever the application layer calls nextPulse, the synchronizer first ensures that every neighbor is sent exactly one message in the last pulse. After incrementing the pulse number, it waits to receive exactly one message from every neighbor. This is achieved bu waiting for the list pendingR to be empty. When this condition becomes true, it is ready for the next pulse.

Note that there is no special requirement for initialization of this synchronizer. When any process starts pulse 1, within D time units all other processes will also start pulse 1. Therefore, the complexity of initializing the simple synchronizer is

Minit = 0; Tinit = D.

Because each pulse requires a message along every link in both directions, we get the complexity of simulating a pulse as

Mpulse = 2E; Tpulse = 1.

14.2.1 Application: BFS Tree Construction

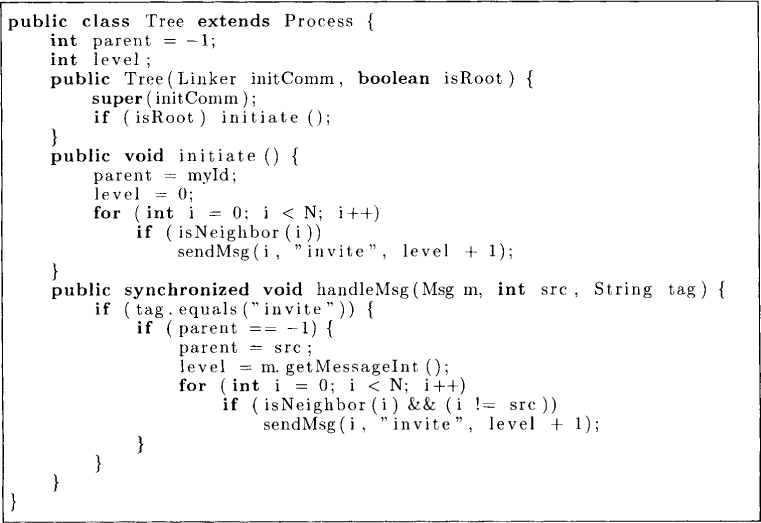

Let us use the simple synchronizer for computing the BFS tree in a network. Figure 14.3 gives an algorithm that will compute the BFS tree on a synchronous network, but not necessarily the BFS tree on an asynchronous network. The algorithm has two methods: initiate and handleMsg. The method initiate is invoked by the root from which we would like to compute the tree. In this method, the root sends an invite message to all its neighbors. Any node that receives an invite message for the first time becomes part of the tree with its parent as the node that sent the invitation. This node in turn sends invitations to all its neighbors. In an asynchronous network, this algorithm may not produce a BFS tree. Figure 14.4 gives an algorithm that runs with the simple synchronizer to ensure that the tree computed is the BFS tree even on asynchronous networks.

Figure 14.3: An algorithm that generates a tree on an asynchronous network

14.3 Synchronizer α

The synchronizer α is very similar to the simple synchronizer. We cover this synchronizer because it is a special case of a more general synchronizer γ that will be covered later. All the synchronizers discussed from now on are based around the concept of safety of a process. Process P is safe for pulse i if it knows that all messages sent from P in pulse i have been received.

Figure 14.4: BFS tree algorithm using a synchronizer

The α synchronizer generates the next pulse at process P if all its neighbors are safe. This is because if all neighbors of P are safe, then all messages sent to process P have been received.

To implement, the α synchronizer, it is sufficient for every process to inform all its neighbors whenever it is safe for a pulse. How can a process determine whether it is safe? This is a simple matter if all messages are required to be acknowledged.

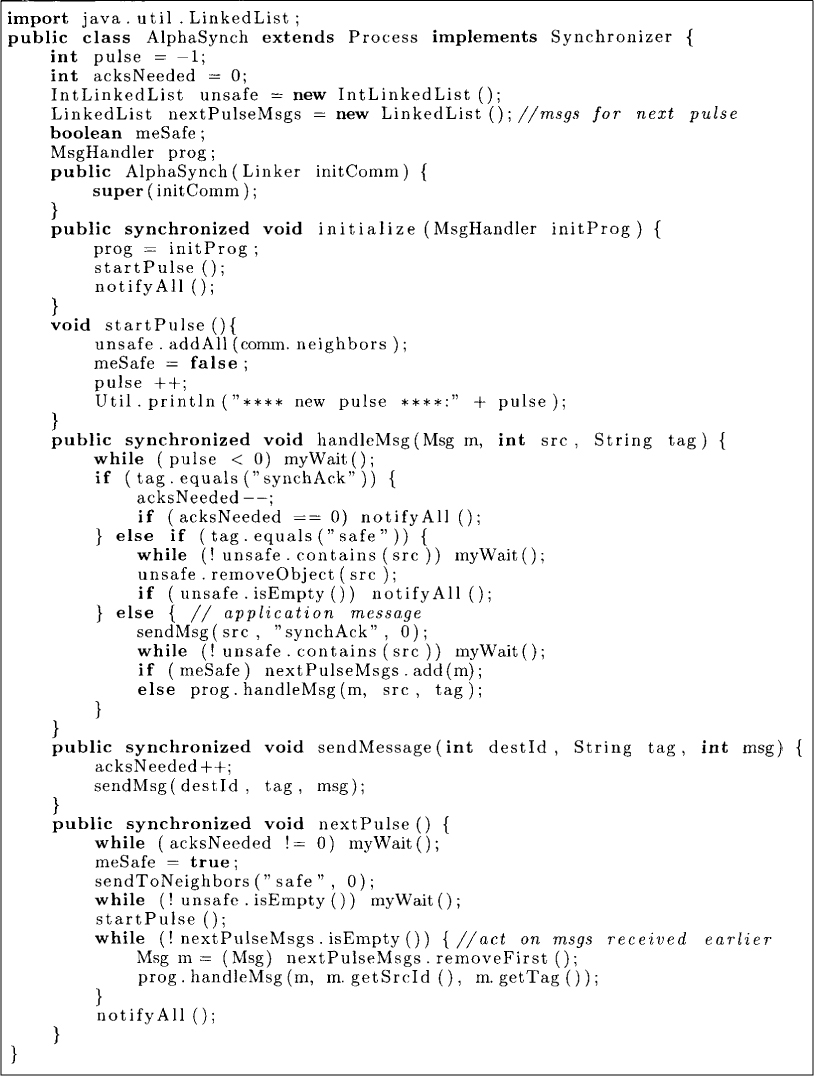

The algorithm for α synchronizer is given in Figure 14.5. We have assumed FIFO ordering of messages. The algorithm maintains a variable acksNeeded that records the number of unacknowledged messages for the current pulse. It also maintains unsafe, the list of neighbors that are unsafe for this node for the current pulse. At the beginning of each pulse, acksNeeded is initialized to 0 and unsafe to the list of all neighbors.

The synchronizer handles two types of messages: synchAck and safe. The synchAck message acknowledges an application message and acksNeeded is decremented whenever the synchAck message is received. The safe message is handled by removing the source of the message from the unsafe list. When an application message is received, it is checked whether a safe message has been received from that neighbor. Since a process sends safe messages only at the end of the pulse, if a safe message has already been received, then this message is meant for the next pulse and is recorded in nextPulseMsgs as in SimpleSynch. Otherwise, an acknowledgment is sent and the message is delivered to the application layer.

The method nextPulse is implemented as follows. First, the node waits for all pending acknowledgments. Once all acknowledgments are received, it knows that it is safe and sends the safe message to all neighbors. It then waits for all its neighbors to be safe. When that condition becomes true, the node is ready for the next pulse. At the beginning of the pulse all the messages in nextPulseMsgs are delivered.

The complexity of synchronizer α is given below:

Tinit = D; Minit = D

Tpulse = D; Mpulse = D

14.4 Synchronizer β

Although the synchronizers discussed so far appear to be efficient, they have high message complexity when the topology of the underlying network is dense. For large networks, where every node may be connected to a large number of nodes, it may be impractical to send a message to all neighbors in every pulse. The message complexity can be reduced at the expense of time complexity as illustrated by the β synchronizer.

Figure 14.5: Alpha synchronizer

The β synchronizer assumes the existence of a rooted spanning tree in the network. A node in the tree sends a message subtree safe when all nodes in its subtree are safe. When the root of the tree is safe and all its children are safe, then we can conclude that all nodes in the tree are safe. Now a simple broadcast of this fact via a pulse message can start the next pulse at all nodes. The broadcast can be done using the rooted spanning tree.

The initialization phase of this synchronizer requires a spanning tree to be built. This can be done using O(N log N + E) messages and O(N) time. For each pulse, we require messages only along the spanning tree. Thus the message complexity for each pulse is O(N). Each pulse also takes time proportional to the height of the spanning tree, which in the worst case is O(N). In summary, the complexity of the β synchronizer is

Tinit = O(N); Minit = O(N log N + E)

Tpulse = O(N); Mpulse = O(N).

14.5 Synchronizer γ

We have seen that the α synchronizer takes O(1) time but has high message complexity O(E), and the β synchronizer has low message complexity O(N) but requires O(N) time per pulse. We now describe the γ synchronizer which is a generalization of both α and β synchronizers. It takes a parameter k such that when k is N – 1, it reduces to the α synchronizer and when k is 2, it reduces to the β synchronizer.

The γ synchronizer is based on clustering. In the initialization phase, the network is divided into clusters. Within each cluster the algorithm is similar to the β synchronizer and between clusters it is similar to the α synchronizer. Thus each cluster has a cluster spanning tree. The root of the cluster spanning tree is called the cluster leader. We say that two clusters are neighboring if there is an edge connecting them. For any two neighboring clusters, we designate one of the edges as the preferred edge.

The algorithm works as follows. There are two phases in each pulse. In both phases, the messages first travel upward in the cluster tree and then travel downward. The goal of the first phase is to determine when the cluster is safe and inform all cluster nodes when it is so. In this phase, subtree safe messages first propagate up the cluster tree. When the root of the cluster gets messages from all its children and it is safe itself, it propagates the cluster safe message down the cluster tree. This phase corresponds to the β synchronizer running on the cluster. We also require that the nodes that are incident on preferred edges also send out our cluster safe (ocs) messages over preferred edges.

The goal of the second phase is to determine whether all neighboring clusters are safe. In this sense, it is like an α synchronizer. It uses two additional message types: neighboring cluster safe (ncs) and pulse. When a leaf in the cluster tree receives the our cluster safe message from all preferred edges incident on it, it sends ncs to its parent. Now consider an internal node in the cluster tree that has received ncs messages from all its children and has received ocs on all preferred edges incident on it. If it is not the cluster leader, then it propagates the ncs message upward; otherwise, it broadcasts the pulse message in its group.

For any clustering scheme c, let Ec denote the number of tree edges and preferred edges and Hc denote the maximum height of a tree in c. The complexity of the γ synchronizer is given by

Mpulse = O(Ec)

Tpulse = O(Hc)

We now show that any graph can be decomposed into clusters so that there is an appropriate tradeoff between the cluster height and the number of tree and preferred edges. In particular, we claim that for each k in the range 2 ≤ k < N, there exists a clustering c such that Ec ≤ kN and Hc, ≤ log N/log N/log k.

We give an explicit construction of the clustering. In this scheme, we add clusters one at a time. Assume that we have already constructed r clusters and there are still some nodes left that are not part of any cluster. We add the next cluster as follows.

Each cluster C consists of multiple layers. For the first layer, any node that is not part of any cluster so far is chosen. Assume that i layers (i ≥ 1) of the cluster C have already been formed. Let S be the set of neighbors of the node in layer i that are not part of any cluster yet. If the size of S is at least (k – 1) times the size of C, then S is added as the next layer of the cluster C; otherwise, C’s construction is finished.

Let us compute Hc and Ec for this clustering scheme. Since each cluster with level i has at least ki–1 nodes, it follows that Hc is at most log N/log k. Ec has two components—tree edges and preferred edges. The tree edges are clearly at most N. To count the preferred edges, we charge a preferred edge between two clusters to the first cluster that is created in our construction process. Note that for a cluster C, its construction is finished only when there are at most (k – 1)|C| neighboring nodes. Thus, for the cluster C, there can be at most (k – 1)|C| preferred edges charged to it. Adding up the contribution from all clusters, we get that the total number of preferred edges is at most (k – 1)N.

14.6 Problems

14.1. Give the Java code for the β synchronizer.

14.2. Give the Java code for the γ synchronizer.

14.3. What is the message complexity of the asynchronous algorithm for constructing a breadth-first search tree when it is obtained by combining the synchronous algorithm with (a) the α synchronizer, (b) the β synchronizer, and (c) the γ(k) synchronizer?

14.4. Show how synchronizers can be used in a distributed algorithm to solve a set of simultaneous equations by an iterative method.

*14.5. (due to Awerbuch[Awe85]) Give a distributed algorithm to carry out the clustering used by the γ synchronizer.

*14.6. (due to Luby[Lub85]) Let G = (V, E) be an undirected graph corresponding to the topology of a network. A set. V' ⊆ V is said to be independent if there is no edge between any two vertices in V'. An independent set is maximal if there is no independent set that strictly contains V'. Give a distributed synchronous randomized algorithm that terminates in O(log |V|) rounds. Also, give an algorithm that works on asynchronous networks.

14.7 Bibliographic Remarks

The concept of synchronizers, and the synchronizers α, β, and γ were introduced by Awerbuch [Awe85]. The reader is referred to the books by Raynal and Helary [RH90] and Tel [Tel94] for more details on synchronizers.