Chapter 20. Regex Table Lexer by Rebecca Parsons

Implement a lexical analyzer using a list of regular expressions.

Parsers primarily deal with the structure of a language, specifically the way components of the language can be combined. The most basic language components—such as keywords, numbers, and names—can clearly be recognized by the parser. However, we generally separate this stage out into a lexical analyzer. By using a separate pass to recognize these terminal symbols, we simplify the construction of the parser.

Directly implementing a lexical analyzer, also referred to as a lexer, is relatively straightforward. Lexical analyzers stay firmly in the space of regular languages, which means we can use standard regular expression APIs to implement them. For a Regex Table Lexer, we use a list of regular expressions, each associated with the particular terminal symbol. We scan the input, relating individual pieces of the input to the proper regular expressions and generating a stream of tokens naming the individual terminal symbols. It is this token stream that is the input to the parser.

20.1 How It Works

When using Syntax-Directed Translation, it’s common to separate lexing into a stage of its own. Take a look at that pattern for details on why we separate lexing, some of the conceptual issues around lexing, and how lexing and parsing fit into the broader picture. In this pattern, we’ll concentrate on implementing a simple lexer.

The basic algorithm is quite simple. The lexer performs a scan of the input string starting from the beginning, matching tokens as it goes along and consuming those characters. Let’s start with a very simple, admittedly ridiculous, example. We have two symbols we want to recognize, the strings Hello and Goodbye. The regular expressions for these symbols are ^Hello and ^Goodbye respectively. The ^ operator is needed to anchor the regular expression match at the start of the string. We’ll call our tokens HOWDY and BYEBYE just to be different. Let’s see how the basic algorithm works on the input string:

HelloGoodbyeHelloHelloCoodbye

The regular expression for Hello matches the beginning of the string, so we generate the HOWDY token and advance our pointer in the string to the first G. The algorithm would return to the beginning of the list of regular expressions, since ordering matters. Thus, the regular expression for Hello would again be checked against the string starting with G. This check fails, of course. So, we try the next regular expression in the list, the one for Goodbye, and this matches. We add the token BYEBYE to our output token stream, reset the string pointer to be at the second H, and continue. The final output stream for the sentence above is HOWDY, BYEBYE, HOWDY, HOWDY, BYEBYE.

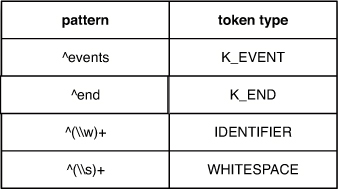

The order of checking the patterns is important so that we can properly handle things like keywords. In the state machine grammar, for example, our keywords also match the rules for identifiers. We order the checks for keywords first, so that the proper token will appear for our keywords. Selection of appropriate tokens is a design decision for the lexical analyzer. In the state machine grammar, we don’t attempt to distinguish between codes and names, using a single identifier token for each. This choice is necessary, since the lexer doesn’t have the context to know that a four-letter name should match the identifier token, if it isn’t in the position where a code is legal. Often, though, the token set includes things like keywords, names, numbers, punctuation, and operators.

We instantiate a particular lexical analyzer by specifying the recognizers, and we use a table or a list to order them. Each recognizer contains the token type, the regular expression to recognize that token, and a Boolean value specifying if this token should be in the output stream. The token type is simply used to identify the token class to the parser. The Boolean allows us to handle things like semantically meaningless whitespace and comments. While these strings are in the input stream and must be handled by the lexer, we don’t pass the corresponding tokens on to the parser. The repeated sequential scan of the table enforces the ordering of the matches, and the table of recognizers also makes it simple to introduce additional token types.

The matcher for individual tokens steps through the table of recognizers; if a match occurs, the corresponding input is consumed from the input string and the token is sent to the output stream, assuming the Boolean output flag is set. We populate the tokenValue field of the output token, whether it is needed or not. Generally, token values are only needed for identifiers, numbers, and sometimes operators, but this approach saves us another Boolean flag and simplifies the code. The main scanner method continually invokes the single match method, checking to make sure that some recognition has been successful. Once the input string is consumed and matching has been successful throughout, the token buffer is sent along for processing by the parser.

To help with error diagnostics, you can add information to the token about where that token was in the character stream—for example, a line number and column position.

20.2 When to Use It

While lexical analysis generators, such as Lex, do exist, there is little need to use them given the prevalence of regular expression APIs. One exception is using ANTLR as the Parser Generator, since the lexical analysis and parsing are more tightly integrated in that tool.

The implementation described here is an obvious one for lexical analysis. Its performance clearly depends on the specifics of the regular expression API used. The only time I would suggest not using Regex Table Lexer would be if there is no acceptable regular expression API available.

Given the simple syntax of many DSLs, it is possible for this approach to be used to recognize the full language. As long as the language is regular, this approach applies for the parser as well.

20.3 Lexing Miss Grant’s Controller (Java)

The lexical analyzer for the state machine grammar is quite typical. We call out tokens for our keywords, punctuation, and a token type for identifiers. We also have a token type for comments and whitespace, which are just consumed by the lexer. We use the java.util.regex API to specify the patterns and do the matching for us. The input to the lexer is the DSL script to be analyzed, and the output is a token buffer including the token types and their related values. This token buffer becomes the input to the parser.

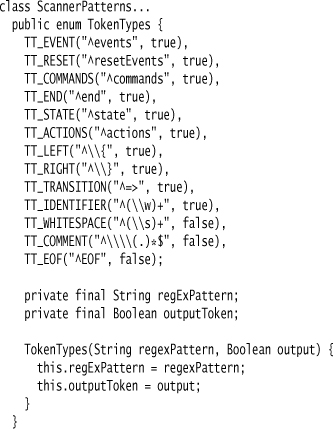

The implementation is split into the specification of the tokens to recognize and the lexical analysis algorithm itself. This approach makes it easy to add additional token types to the lexer. We use an enumerated type to specify a token’s type, with attributes specifying the relevant regular expression and the Boolean value controlling the output of the token. This approach is a clean one in Java, but you could just as easily use a more traditional object. However, the token types do need to be readily available for use in the parser itself.

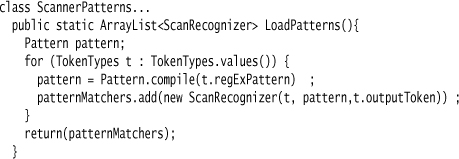

In the lexical analyzer, we instantiate a table of recognition objects, with the compiled recognizers combined with their token types and the Boolean values.

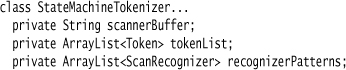

We define a class for our lexer. The instance variables for the lexer include the recognizers, the input string, and the output token list.

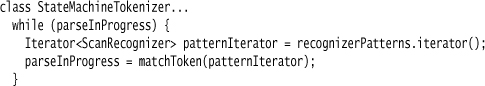

The main processing loop in the lexer is a do-while loop.

This loop continually invokes the token matcher until the buffer is exhausted or no more matches are possible for the remaining buffer.

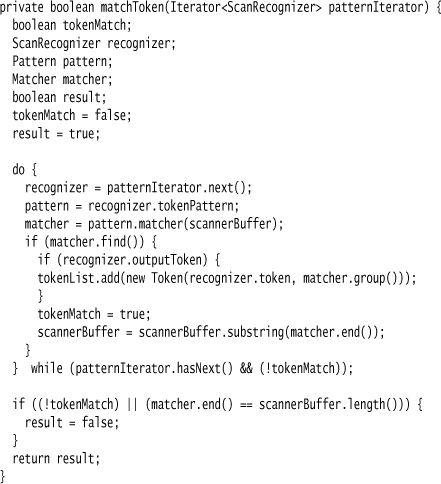

The matchToken method steps through the various recognizers in order and attempts to match a single token.

If a match succeeds, we advance this input buffer to the point just past the match, in this case retrieving the end point using matcher.end(). We check the Boolean for the recognizer that matched and generate the appropriate token if warranted. If no match occurs, we declare failure. The find method in the regex API scans to the end of the string to find matches. If a scan of the remaining string fails to match, then the overall lexical analysis fails.

The outer loop proceeds as long as the inner loop matches a token, going through the input until the end of the string is reached. The iterator is reset for each pass of the inner loop, ensuring all token patterns are matched in each pass. The result of the parse is a token buffer, where each token has a token type and the actual string value matched in the lexical analyzer.