- The Android Game Developer's Handbook

Let's now have a look at the types of rendering pipeline in Android.

In the 2D Android drawing system, all the assets are first drawn onto a Canvas, and the Canvas is then rendered on screen. The graphics engine maps each asset into the finite Canvas area according to its given position.

Developers often draw small assets separately, which causes a mapping instruction to execute for each asset. It is recommended that you use sprite sheets to merge as many small assets as possible. The objects on the Canvas can then be drawn from a single image, which keeps the number of draw calls down.
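As a rough illustration of how one sprite sheet can serve many objects, the sketch below computes the source rectangle of a frame inside a sheet. The class name, the method name, and the uniform grid layout are assumptions made for this example; they do not come from the Android SDK.

```java
// Hypothetical helper for illustration only; assumes every frame in the
// sheet has the same size and frames are laid out row by row.
final class SpriteSheetFrames {

    /** Returns {left, top, right, bottom} of the frame at the given index. */
    static int[] frameRect(int index, int columns, int frameWidth, int frameHeight) {
        if (index < 0 || columns <= 0 || frameWidth <= 0 || frameHeight <= 0) {
            throw new IllegalArgumentException("invalid sheet layout or frame index");
        }
        int column = index % columns; // position within the row
        int row = index / columns;    // which row the frame sits in
        int left = column * frameWidth;
        int top = row * frameHeight;
        return new int[] {left, top, left + frameWidth, top + frameHeight};
    }

    public static void main(String[] args) {
        // Frame 5 in a sheet with 4 columns of 64 x 64 frames.
        int[] rect = frameRect(5, 4, 64, 64);
        System.out.println(java.util.Arrays.toString(rect)); // [64, 64, 128, 128]
    }
}
```

On Android, the four values could fill a `Rect` that is passed as the `src` argument of `Canvas.drawBitmap(Bitmap, Rect, Rect, Paint)`, so that only that region of the sheet is drawn.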

Now, the question is how to create the sprite sheet and what the consequences are. Previously, Android could not support images or sprites larger than 1024 x 1024 pixels. Since Android 2.3, developers can use sprites of up to 4096 x 4096 pixels. However, a sheet that large stays in memory for as long as any of its small assets is in scope, and many low-end Android devices cannot load such large images at all. As a best practice, developers should limit sprite sheets to 2048 x 2048 pixels. This lowers peak memory usage while still eliminating a significant number of draw calls to the canvas.
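To put rough numbers on the memory cost of the sheet sizes mentioned above, the following sketch estimates the uncompressed size of a decoded image. It assumes 4 bytes per pixel, as in the `ARGB_8888` bitmap configuration; the class and method names are made up for this example.

```java
// Illustrative arithmetic only; assumes an uncompressed bitmap with
// 4 bytes per pixel (as in Bitmap.Config.ARGB_8888).
final class SheetMemory {
    static final int BYTES_PER_PIXEL_ARGB_8888 = 4;

    /** Bytes needed to hold a decoded image of the given size. */
    static long bytesFor(int width, int height, int bytesPerPixel) {
        return (long) width * height * bytesPerPixel;
    }

    static double toMiB(long bytes) {
        return bytes / (1024.0 * 1024.0);
    }

    public static void main(String[] args) {
        int[] sides = {1024, 2048, 4096};
        for (int side : sides) {
            long bytes = bytesFor(side, side, BYTES_PER_PIXEL_ARGB_8888);
            // Prints 4.0, 16.0 and 64.0 MiB respectively.
            System.out.println(side + " x " + side + ": " + toMiB(bytes) + " MiB");
        }
    }
}
```

Under this assumption, a 4096 x 4096 sheet occupies four times the memory of a 2048 x 2048 sheet, which is the trade-off behind the 2048 x 2048 recommendation.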

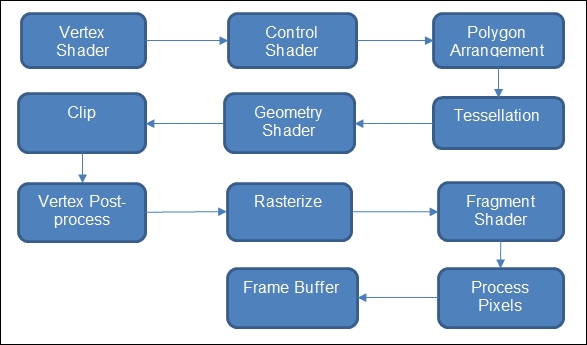

Android uses OpenGL (specifically OpenGL ES) to render 3D assets on the screen. So, the rendering pipeline for Android 3D is basically the OpenGL pipeline.

Let's have a look at the OpenGL rendering system:

Now, let's have a detailed look at each step of the preceding rendering flow diagram:

- The vertex shader processes individual vertices with vertex data.

- The tessellation control shader is responsible for controlling the vertex data of each patch and deciding how finely the patch is tessellated.

- The polygon arrangement system arranges the polygon with each pair of intersecting lines created by vertices. Thus, it creates the edges without repeating vertices.

- Tessellation is the process of tiling the polygons in a shape without overlap or any gaps.

- The geometry shader processes each primitive as a whole and can modify it or emit new primitives. This is where the final triangles are generated.

- After constructing the polygons and shapes, the model is clipped for optimization.

- Vertex post processing is used to filter out unnecessary data.

- The mesh is then rasterized.

- The fragment shader is used to process fragments generated from rasterization.

- After fragment processing, each fragment is mapped to its pixel and combined with the data already stored for that pixel.

- The resulting pixels of the mesh are written to the frame buffer for final rendering.
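The flow above can be approximated with a toy, CPU-side model. The sketch below is plain Java, not OpenGL: it keeps only a vertex stage, rasterization, a fragment stage, and the frame buffer write, and it omits tessellation and geometry shading entirely. All names are hypothetical, and the edge-function coverage test is one common rasterization approach chosen for this example.

```java
// Toy software model of a few pipeline stages; not how OpenGL is called.
final class TinyPipeline {

    // Vertex stage: map normalized device coordinates (-1..1) to pixel space.
    static double[] toScreen(double x, double y, int width, int height) {
        return new double[] {(x + 1) * 0.5 * width, (1 - y) * 0.5 * height};
    }

    // Signed area test: the sign tells on which side of edge a->b the point lies.
    static double edge(double[] a, double[] b, double px, double py) {
        return (b[0] - a[0]) * (py - a[1]) - (b[1] - a[1]) * (px - a[0]);
    }

    // Fragment stage: here every covered fragment simply gets a flat color.
    static int shadeFragment(int x, int y, int color) {
        return color;
    }

    // Rasterizes one triangle and writes shaded fragments to a frame buffer.
    static int[][] draw(double[][] ndcTriangle, int width, int height, int color) {
        int[][] frameBuffer = new int[height][width];
        double[][] v = new double[3][];
        for (int i = 0; i < 3; i++) {
            v[i] = toScreen(ndcTriangle[i][0], ndcTriangle[i][1], width, height);
        }
        // Iterating only over frame buffer pixels clips the triangle implicitly.
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                double px = x + 0.5, py = y + 0.5; // sample at the pixel center
                double e0 = edge(v[0], v[1], px, py);
                double e1 = edge(v[1], v[2], px, py);
                double e2 = edge(v[2], v[0], px, py);
                boolean inside = (e0 >= 0 && e1 >= 0 && e2 >= 0)
                        || (e0 <= 0 && e1 <= 0 && e2 <= 0);
                if (inside) {
                    frameBuffer[y][x] = shadeFragment(x, y, color);
                }
            }
        }
        return frameBuffer;
    }

    public static void main(String[] args) {
        double[][] triangle = {{-1, -1}, {0, -1}, {-1, 0}};
        for (int[] row : draw(triangle, 4, 4, 1)) {
            System.out.println(java.util.Arrays.toString(row));
        }
    }
}
```

On a real device these stages run on the GPU. The sketch is only meant to show the order in which vertex data becomes fragments and fragments become frame buffer pixels.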
