Native 3D game development on Android is complicated. The Android framework does not provide a dedicated 3D game development platform. 2D games are supported directly through Android Canvas, but 3D games require OpenGL support.

Native development is supported by the Android NDK, which lets you write code in C and C++. We will discuss a few constraints of 3D development for Android with OpenGL support.

Android provides OpenGL ES for 3D rendering. Before starting any development, the developer needs to set up the scene, the lighting, and the camera.

A vertex is a point in 3D space. In Android 3D code, a three-component vector type such as Vector3 can be used to define the vertices. Three such vertices form a triangle. Any triangle can be projected onto a 2D plane, and any 3D object can be approximated by a collection of triangles covering its surface.

For example, each face of a cube is made of two triangles. Hence, a cube, which has six faces, is formed of 12 triangles. The number of triangles has a heavy impact on rendering time.

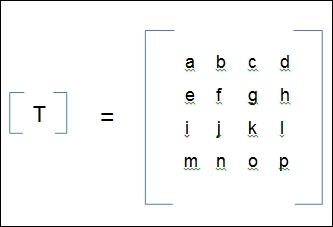
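The triangle count of a cube can be checked with a short sketch. The following C++ (the language used with the NDK) builds a cube as an indexed mesh: 8 shared corner vertices plus a list of triangles that refer to them by index. The `Vec3`, `Mesh`, and `makeCube` names are hypothetical helpers, not Android APIs, and the winding order of the triangles is not considered here.

```cpp
#include <array>
#include <vector>

// A vertex: a point in 3D space (a minimal Vector3-like type).
struct Vec3 {
    float x, y, z;
};

// A triangle stores three indices into the vertex list.
using Triangle = std::array<int, 3>;

struct Mesh {
    std::vector<Vec3> vertices;
    std::vector<Triangle> triangles;
};

// Builds a unit cube centered at the origin: 8 shared corners and
// 2 triangles for each of its 6 faces.
Mesh makeCube() {
    Mesh m;
    for (int i = 0; i < 8; ++i) {
        // Bit 0 selects x, bit 1 selects y, bit 2 selects z.
        m.vertices.push_back({(i & 1) ? 0.5f : -0.5f,
                              (i & 2) ? 0.5f : -0.5f,
                              (i & 4) ? 0.5f : -0.5f});
    }
    // Each face is a quad (four corners in order around its edge),
    // split into two triangles.
    const int faces[6][4] = {
        {0, 1, 3, 2},  // z = -0.5
        {4, 6, 7, 5},  // z = +0.5
        {0, 4, 5, 1},  // y = -0.5
        {2, 3, 7, 6},  // y = +0.5
        {0, 2, 6, 4},  // x = -0.5
        {1, 5, 7, 3},  // x = +0.5
    };
    for (const auto& f : faces) {
        m.triangles.push_back({f[0], f[1], f[2]});
        m.triangles.push_back({f[0], f[2], f[3]});
    }
    return m;
}
```

The result has 8 vertices and 12 triangles, that is, 36 indices. OpenGL ES can draw an index list of this kind with `glDrawElements`; in practice, meshes often duplicate corner vertices per face anyway, because each face needs its own normal and texture coordinates.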

Each 3D object has its own transformation. Vectors can describe its position, scale, and rotation. Generally, these are combined into a single matrix called the transformation matrix, which is 4 x 4 in dimension.

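Let's assume the matrix to be T:

$$
T = \begin{bmatrix}
a & b & c & d \\
e & f & g & h \\
i & j & k & l \\
m & n & o & p
\end{bmatrix}
$$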

Here:

- {a, b, c, e, f, g, i, j, k} represents linear transformation

- {d, h, l} represents perspective transformation

- {m, n, o} represents translations along the x, y, and z axes

- {a, f, k} represents local scaling along the x, y, and z axes

- {p} represents overall scaling

- {f, g, j, k} represents rotation about the x axis, where a = 1

- {a, c, i, k} represents rotation about the y axis, where f = 1

- {a, b, e, f} represents rotation about the z axis, where k = 1

Any 3D object can be transformed by multiplying each of its vertices, written as a 4D vector (x, y, z, 1), by this matrix. Naturally, matrix calculations are heavier than simple 2D calculations. As the number of vertices increases, the number of calculations increases as well, which results in a performance drop.
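Here is a minimal C++ sketch of the matrix T above, assuming the row-vector convention implied by its letter layout: a vertex is written as [x y z 1] and multiplied on the left of T, so the translation sits in m, n, and o. The helper names are hypothetical. The sketch also counts multiplications to make the per-vertex cost visible: 16 for every vertex, every time the object is transformed.

```cpp
#include <array>
#include <cmath>
#include <vector>

// A 4 x 4 transformation matrix T stored row by row:
// {a, b, c, d}, {e, f, g, h}, {i, j, k, l}, {m, n, o, p}.
using Mat4 = std::array<std::array<float, 4>, 4>;

struct Vec3 {
    float x, y, z;
};

Mat4 identity() {
    Mat4 t{};
    for (int r = 0; r < 4; ++r) t[r][r] = 1.0f;
    return t;
}

// Translation is stored in m, n, o.
Mat4 translation(float tx, float ty, float tz) {
    Mat4 t = identity();
    t[3][0] = tx;
    t[3][1] = ty;
    t[3][2] = tz;
    return t;
}

// Rotation about the z axis uses a, b, e, f while k stays 1.
Mat4 rotationZ(float radians) {
    Mat4 t = identity();
    const float c = std::cos(radians);
    const float s = std::sin(radians);
    t[0][0] = c;   // a
    t[0][1] = s;   // b
    t[1][0] = -s;  // e
    t[1][1] = c;   // f
    return t;
}

// Multiplies the row vector [x y z 1] by T, then divides by the resulting w.
// mulCount is increased by the 16 multiplications this costs per vertex.
Vec3 transformPoint(const Mat4& t, const Vec3& v, long& mulCount) {
    const float in[4] = {v.x, v.y, v.z, 1.0f};
    float out[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (int col = 0; col < 4; ++col) {
        for (int row = 0; row < 4; ++row) {
            out[col] += in[row] * t[row][col];
            ++mulCount;
        }
    }
    return {out[0] / out[3], out[1] / out[3], out[2] / out[3]};
}

// Transforms every vertex of a mesh: the cost grows linearly with the vertex count.
std::vector<Vec3> transformAll(const Mat4& t, const std::vector<Vec3>& vertices,
                               long& mulCount) {
    std::vector<Vec3> result;
    result.reserve(vertices.size());
    for (const Vec3& v : vertices) result.push_back(transformPoint(t, v, mulCount));
    return result;
}
```

OpenGL ES and `android.opengl.Matrix` use column vectors instead, but they store matrices in column-major order in a `float[16]`. The translation therefore still lands at array indices 12, 13, and 14, which are the positions of m, n, and o when the array is read in order.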

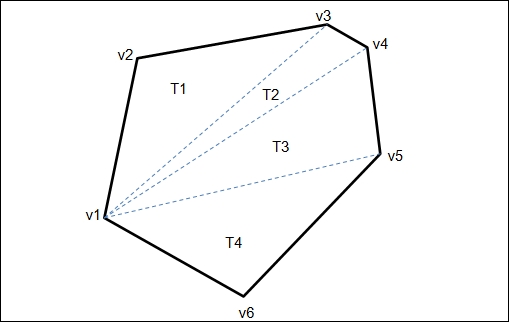

Any 3D model or object has surfaces referred to as polygons. Fewer polygons mean fewer triangles, which directly decreases the vertex count:

This is a simple example of the polygonal distribution of a 3D object's surface. A six-sided polygon is made of four triangles and six vertices. Each vertex is a 3D vector, and the processor spends time on every vertex. It is recommended that you keep track of the total polygon count drawn in each draw cycle. Many games suffer a significant FPS drop because of a high, unmanaged polygon count.
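The relationship between polygons, triangles, and vertices can be sketched as follows, assuming convex polygons, which can be split into a triangle fan. `fanTriangulate` and `verticesPerDrawCycle` are hypothetical helpers; the second one only gives an upper bound, for drawing without vertex sharing.

```cpp
#include <array>
#include <cstddef>
#include <vector>

using Triangle = std::array<int, 3>;

// Splits a convex polygon, given as vertex indices in order around its edge,
// into a triangle fan: (v0, v1, v2), (v0, v2, v3), ...
// A polygon with n vertices always yields n - 2 triangles.
std::vector<Triangle> fanTriangulate(const std::vector<int>& polygon) {
    std::vector<Triangle> triangles;
    for (std::size_t i = 1; i + 1 < polygon.size(); ++i) {
        triangles.push_back({polygon[0], polygon[i], polygon[i + 1]});
    }
    return triangles;
}

// Upper bound on vertices processed per draw cycle when every triangle
// is drawn with its own three vertices (no vertex sharing).
long verticesPerDrawCycle(long triangleCount) {
    return triangleCount * 3;
}
```

For the six-sided polygon above, the fan yields four triangles; drawn without shared vertices, those four triangles cost 12 vertex computations instead of 6.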

Android is primarily a mobile OS, and it usually runs on devices with limited hardware. Managing the polygon count of 3D Android games is therefore a common problem for developers.

Android provides a 3D rendering platform through OpenGL ES, accessible from both the framework and the NDK. The Android framework provides GLSurfaceView and GLSurfaceView.Renderer to render 3D objects: GLSurfaceView provides the drawing surface, and the Renderer draws the model on it. We have already discussed the 3D rendering pipeline through OpenGL.

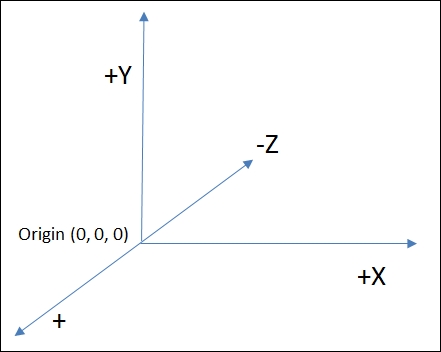

3D rendering maps all objects onto a 3D world coordinate system that follows the right-hand rule:

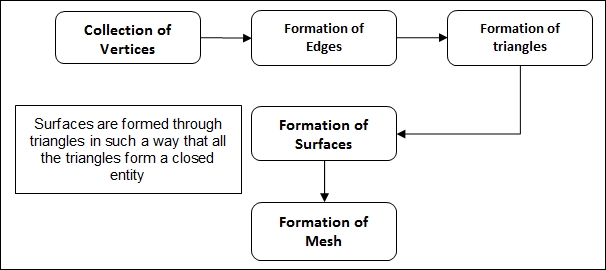
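The right-hand rule can be expressed with the cross product. In the following C++ sketch (hypothetical helper names), crossing the x axis with the y axis gives the z axis, and the same operation gives the normal of a triangle. By default, OpenGL ES treats triangles whose vertices appear counterclockwise on screen as front-facing (`glFrontFace(GL_CCW)`).

```cpp
struct Vec3 {
    float x, y, z;
};

Vec3 sub(const Vec3& a, const Vec3& b) {
    return {a.x - b.x, a.y - b.y, a.z - b.z};
}

// Cross product. In a right-handed system, x cross y = z: point the fingers
// of your right hand along the first vector, curl them toward the second,
// and your thumb points along the result.
Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

// Normal of a triangle whose vertices are listed counterclockwise as seen
// by the viewer; in a right-handed system it points toward the viewer.
Vec3 faceNormal(const Vec3& p0, const Vec3& p1, const Vec3& p2) {
    return cross(sub(p1, p0), sub(p2, p0));
}
```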

A 3D mesh is made of vertices, triangles, and surfaces, and it defines the shape of the object. A texture is then applied to the mesh to create the complete model.

Creating the mesh is the trickiest part of 3D model creation, because this is where basic optimization can be applied.

Here is the procedure for creating the mesh:

A 3D model can contain more than one mesh, and the meshes may even be interchangeable. The mesh determines both the level of detail of the model and its rendering performance. For Android development, it is recommended that you limit the number of vertices and triangles in each mesh to maintain rendering performance.
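One common way to use interchangeable meshes is level of detail (LOD): the renderer swaps in a simpler mesh as the model moves away from the camera. The following C++ sketch of that idea is illustrative only; the `MeshVariant` structure, the distance thresholds, and the triangle budget are hypothetical values chosen by the developer, not Android APIs.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical description of one interchangeable mesh of the same model.
struct MeshVariant {
    int triangleCount;
    float maxDistance;  // use this variant while the camera is at most this far away
};

// Picks the most detailed variant allowed at the given camera distance.
// Assumes a non-empty list sorted from most to least detailed; beyond the
// last limit, the coarsest variant is used.
std::size_t selectVariant(const std::vector<MeshVariant>& variants, float distance) {
    for (std::size_t i = 0; i < variants.size(); ++i) {
        if (distance <= variants[i].maxDistance) return i;
    }
    return variants.size() - 1;
}

// Checks a variant against a per-mesh triangle budget chosen by the developer.
bool withinBudget(const MeshVariant& variant, int triangleBudget) {
    return variant.triangleCount <= triangleBudget;
}
```

For example, with variants of 5,000, 1,200, and 300 triangles limited to 10, 30, and 100 units, a model 20 units away would be drawn with the 1,200-triangle mesh.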

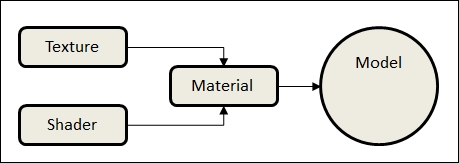

After the model structure is formed through the mesh, a texture is applied to it to create the final model. However, the texture is applied through a material and manipulated by shaders:

Textures are 2D images applied to the model to increase its detail and visual quality. The image is mapped onto the surfaces of the mesh so that each surface renders a particular region of the texture.
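Texture mapping can be sketched in C++ as a lookup from a surface's texture coordinates (u, v) into the 2D image. This is a CPU-side illustration, not how Android uploads or samples textures; on the GPU, the sampler does this work, and the sketch roughly mirrors `GL_NEAREST` filtering with `GL_CLAMP_TO_EDGE` wrapping. The `Texture` type and `sampleNearest` function are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical texture: packed RGBA texels stored row by row.
struct Texture {
    int width;
    int height;
    std::vector<std::uint32_t> texels;  // width * height entries
};

// Nearest-texel lookup. The texture coordinates (u, v) run from 0 to 1 across
// the image; they are scaled to texel indices and clamped to the edges.
// Row 0 corresponds to v = 0 in this sketch.
std::uint32_t sampleNearest(const Texture& tex, float u, float v) {
    int x = static_cast<int>(u * tex.width);
    int y = static_cast<int>(v * tex.height);
    x = std::min(std::max(x, 0), tex.width - 1);
    y = std::min(std::max(y, 0), tex.height - 1);
    return tex.texels[static_cast<std::size_t>(y) * tex.width + x];
}
```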

Shaders are used to manipulate the quality, color, and other attributes of the texture to make it look more realistic, because it is rarely possible to create a texture with all its attributes already set correctly. The visibility of a 3D model depends on the light source, its intensity and color, and the material type.
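As an illustration of what a lighting shader computes, here is the Lambert diffuse model, one classic choice, transcribed into C++. On Android, real shaders are written in GLSL ES and run on the GPU; this sketch only shows the arithmetic, and its function names are hypothetical. It shows how the result depends on the light's color and intensity, the material's color, and the angle between the surface and the light.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

Vec3 normalize(const Vec3& v) {
    const float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// Lambert diffuse lighting:
// color = surfaceColor * lightColor * intensity * max(0, N . L),
// where N is the surface normal and L points from the surface toward the light.
Vec3 lambert(const Vec3& surfaceColor, const Vec3& lightColor, float intensity,
             const Vec3& normal, const Vec3& toLight) {
    const float nDotL = std::max(0.0f, dot(normalize(normal), normalize(toLight)));
    const float k = intensity * nDotL;
    return {surfaceColor.x * lightColor.x * k,
            surfaceColor.y * lightColor.y * k,
            surfaceColor.z * lightColor.z * k};
}
```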

Collision detection for 3D Android games can be categorized into two types:

- Primitive colliders

- Mesh colliders

Primitive colliders consist of basic 3D shapes such as cubes, spheres, cylinders, prisms, and so on. This collision detection system follows well-defined geometric rules, which is why it is less complicated than an arbitrary mesh collider.

Most of the time, the developer assigns primitive colliders to many models to increase the performance of the game. This approach is less accurate than a mesh collider that follows the actual shape.
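Primitive colliders are cheap because their overlap tests are short formulas. The following C++ sketch shows two of them, sphere-sphere and axis-aligned box-box (AABB); the types and function names are hypothetical.

```cpp
struct Vec3 {
    float x, y, z;
};

struct Sphere {
    Vec3 center;
    float radius;
};

// Axis-aligned bounding box: a box collider that is not rotated.
struct AABB {
    Vec3 min;
    Vec3 max;
};

// Spheres overlap when the distance between their centers is at most the sum
// of their radii. Comparing squared values avoids a square root.
bool intersects(const Sphere& a, const Sphere& b) {
    const float dx = a.center.x - b.center.x;
    const float dy = a.center.y - b.center.y;
    const float dz = a.center.z - b.center.z;
    const float r = a.radius + b.radius;
    return dx * dx + dy * dy + dz * dz <= r * r;
}

// Boxes overlap only when their extents overlap on all three axes.
bool intersects(const AABB& a, const AABB& b) {
    return a.min.x <= b.max.x && a.max.x >= b.min.x &&
           a.min.y <= b.max.y && a.max.y >= b.min.y &&
           a.min.z <= b.max.z && a.max.z >= b.min.z;
}
```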

Mesh colliders detect collisions against the actual, arbitrary shape of a mesh. This technique is processing-heavy. There are a few algorithms that reduce the processing overhead; quadtrees, k-d trees, and AABB trees are examples of such techniques. However, even with them, the CPU overhead remains significant.

The oldest but most accurate method is triangle-to-triangle collision detection for each surface. To simplify this method, each block of the mesh is enclosed in a box, and an AABB tree or quadtree is built to reduce the number of vertex checks.

The number of checks can be reduced further with an octree, which groups neighboring box colliders into larger enclosing boxes. In this way, the developer can reduce the number of collision checks and, with it, the CPU overhead.
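The box-based reduction can be sketched as a simple two-level hierarchy: one box around each mesh and one box around each triangle. This is a much simplified stand-in for the AABB trees, quadtrees, and octrees described above, written in C++ with hypothetical names. The exact triangle-to-triangle test is omitted; the sketch only shows how boxes cut down the number of pairs that would need it.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <utility>
#include <vector>

struct Vec3 {
    float x, y, z;
};

struct AABB {
    Vec3 min;
    Vec3 max;
};

using Tri = std::array<Vec3, 3>;

// Grows a box so that it contains point p.
void expand(AABB& b, const Vec3& p) {
    b.min = {std::min(b.min.x, p.x), std::min(b.min.y, p.y), std::min(b.min.z, p.z)};
    b.max = {std::max(b.max.x, p.x), std::max(b.max.y, p.y), std::max(b.max.z, p.z)};
}

AABB triBounds(const Tri& t) {
    AABB b{t[0], t[0]};
    expand(b, t[1]);
    expand(b, t[2]);
    return b;
}

// Box around a whole (non-empty) mesh: the root of the two-level hierarchy.
AABB meshBounds(const std::vector<Tri>& mesh) {
    AABB b = triBounds(mesh[0]);
    for (const Tri& t : mesh) {
        const AABB tb = triBounds(t);
        expand(b, tb.min);
        expand(b, tb.max);
    }
    return b;
}

bool overlaps(const AABB& a, const AABB& b) {
    return a.min.x <= b.max.x && a.max.x >= b.min.x &&
           a.min.y <= b.max.y && a.max.y >= b.min.y &&
           a.min.z <= b.max.z && a.max.z >= b.min.z;
}

// Broad phase: returns the (i, j) triangle pairs whose boxes overlap. Only
// these pairs would go on to an exact triangle-to-triangle test (not shown).
std::vector<std::pair<std::size_t, std::size_t>> candidatePairs(
        const std::vector<Tri>& a, const std::vector<Tri>& b) {
    std::vector<std::pair<std::size_t, std::size_t>> pairs;
    // One box test rejects the whole pair of meshes when they are apart.
    if (a.empty() || b.empty() || !overlaps(meshBounds(a), meshBounds(b))) {
        return pairs;
    }
    for (std::size_t i = 0; i < a.size(); ++i) {
        const AABB boxA = triBounds(a[i]);
        for (std::size_t j = 0; j < b.size(); ++j) {
            if (overlaps(boxA, triBounds(b[j]))) pairs.emplace_back(i, j);
        }
    }
    return pairs;
}
```

Without the boxes, two meshes of 1,000 triangles each would need 1,000,000 exact triangle tests; with them, only the overlapping pairs reach the expensive test.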

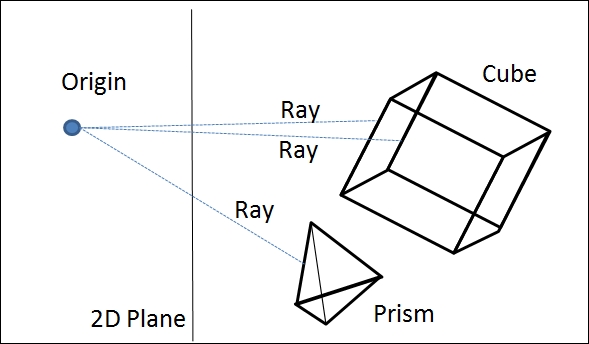

Ray casting is a geometric technique for detecting the surfaces of 3D graphical objects, and it is used to solve many geometric problems in 3D computer graphics. In 3D games, all 3D objects are projected onto a 2D view. Because an electronic display is two-dimensional, a position on the screen alone does not reveal depth; ray casting is used to determine it:

Each ray cast from the origin onto different objects can be used to detect the shape of an object, its distance from the plane, collisions, rotation, scaling, and so on.
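A single ray cast can be sketched with a ray-sphere test, where the sphere stands in for an object's bounding volume (an exact hit on the mesh would use a ray-triangle test instead). The C++ below, with hypothetical names, returns the distance along the ray, which is the depth information a 2D screen position cannot provide.

```cpp
#include <cmath>

struct Vec3 {
    float x, y, z;
};

float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

Vec3 sub(const Vec3& a, const Vec3& b) {
    return {a.x - b.x, a.y - b.y, a.z - b.z};
}

// Casts the ray origin + t * dir (dir must have unit length) against a sphere.
// Solves |origin + t * dir - center|^2 = radius^2 for t and returns the
// distance to the nearest hit in front of the origin, or a negative value
// if the ray misses or the sphere lies entirely behind the origin.
float raySphere(const Vec3& origin, const Vec3& dir, const Vec3& center, float radius) {
    const Vec3 oc = sub(origin, center);
    const float b = dot(oc, dir);
    const float c = dot(oc, oc) - radius * radius;
    const float disc = b * b - c;   // a quarter of the quadratic's discriminant
    if (disc < 0.0f) return -1.0f;  // no intersection
    const float s = std::sqrt(disc);
    float t = -b - s;               // nearer intersection
    if (t < 0.0f) t = -b + s;       // origin is inside the sphere
    return t;
}
```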

In Android games, ray casting is widely used to handle touch input on the screen. Most games use this method to let the player manipulate the 3D objects in the game.
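For touch input, the touch position has to be turned into a ray first. The following C++ sketch assumes pixel coordinates with the origin at the top-left corner and y growing downward (as returned by `MotionEvent.getX()` and `getY()` relative to the view), and a symmetric perspective camera at the eye-space origin looking down -z. `touchToRayDir` is a hypothetical helper; transforming the ray into world space, for example with the inverse of the view matrix computed by `android.opengl.Matrix.invertM`, is left out.

```cpp
#include <cmath>

struct Vec3 {
    float x, y, z;
};

// Converts a touch position in pixels into a unit ray direction in eye space.
// Assumes touch coordinates have their origin at the top-left corner with y
// growing downward, and a symmetric perspective camera at the eye-space
// origin looking down -z with vertical field of view fovYRadians.
Vec3 touchToRayDir(float px, float py, float width, float height, float fovYRadians) {
    const float ndcX = 2.0f * px / width - 1.0f;   // -1 at the left edge, 1 at the right
    const float ndcY = 1.0f - 2.0f * py / height;  // -1 at the bottom edge, 1 at the top
    const float tanHalf = std::tan(fovYRadians / 2.0f);
    const float aspect = width / height;
    const Vec3 d{ndcX * tanHalf * aspect, ndcY * tanHalf, -1.0f};
    const float len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    return {d.x / len, d.y / len, d.z / len};
}
```

A touch in the center of the screen produces a ray straight down the view axis; one ray per touch keeps the cost of this step small.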

From a performance point of view, ray casting is quite costly when used on a large scale. Each ray requires a series of geometric calculations, so the processing overhead grows as the number of rays increases.

It is always best practice to limit the number of rays cast at the same time.

In 3D games, the word "world" refers to a real-time simulation of the actual world within a limited region. The world is created with 3D models that represent actual objects in the real world. The scope of the game world is finite, and the world follows a particular scale, position, and rotation with respect to its cameras.

The concept of camera is a must for simulating such a world. Multiple cameras can be used to render different perspectives of the same world.

In the gaming industry, a game world is created according to the requirements of each game, so the worlds of different games differ. However, a few parameters remain the same. These parameters are as follows:

- Finite elements

- Light source

- Camera

A world consists of the elements required by the game design. Each game may require different elements, but two things are common across games: the sky and the terrain. Most elements are placed on the terrain, and the light source is in the sky. However, many games use different light sources in different parts of the game.

Elements can be divided into two categories: movable objects and static objects. A game's rigid bodies are associated with these elements. Normally, static objects do not take part in motion physics.

Optimizing the objects in the world is necessary for performance. Each object has a certain number of vertices and triangles, and we have already discussed the processing overhead of the vertices of 3D objects. In general, world optimization comes down to optimizing each element in the world.

A game world must have one or more light sources. Lights are used to illuminate the elements in the world. Multiple light sources have a great visual impact on the user experience.

The game development process always requires at least one good lighting artist. Modern games use light maps to improve visual quality. The play of light and shadow in the game world depends entirely on light mapping.

There is no doubt that light is a mandatory element in the game world. However, processing light and shadow is expensive: all the vertices need to be processed for each light source with a particular shader. Using many light sources results in low performance.

Light sources can be of the following types:

- Area light

- Spot light

- Point light

- Directional light

- Ambient light

- Volume light

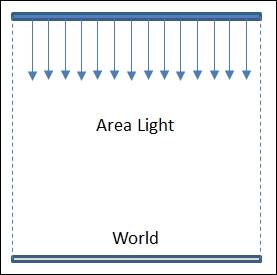

Area light

This kind of light source is used to light a rectangular or circular region. By nature, it is a directional light and lights the area with equal intensity:

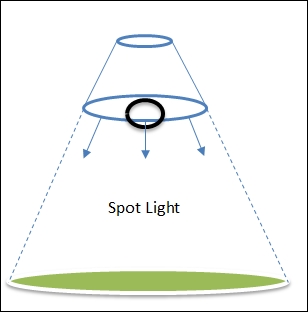

Spot light

A spot light is used to focus on a particular object with a cone of light pointing in one direction:

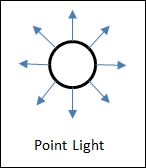

Point light

A point light illuminates in all directions from the source. A typical example is a light bulb:

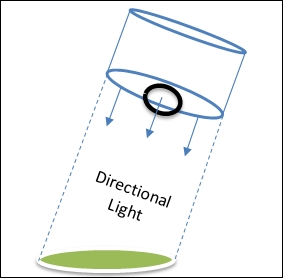

Directional light

A directional light is a set of parallel light beams projected onto a region of the 3D world. A typical example is sunlight:

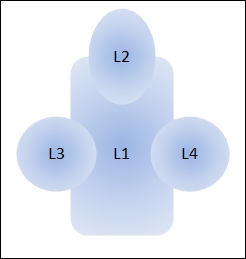

Ambient light

An ambient light is a set of arbitrary light beams in all directions. Usually, the intensity of this kind of light source is low. Because its light beams do not follow a particular direction, it does not generate any shadows:

L1, L2, L3, and L4 are ambient light sources here.

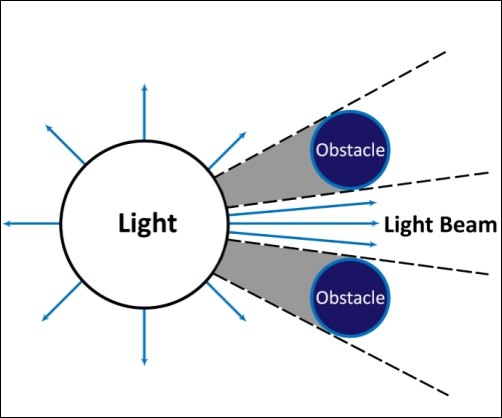
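The light types above differ mainly in how much light reaches a given point. The following C++ sketch models four of them; area and volume lights are left out. The `Light` structure is hypothetical, and the inverse-square falloff for point and spot lights is one common attenuation choice assumed here, not the only one. The surface angle (N . L) is not included.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

Vec3 sub(const Vec3& a, const Vec3& b) {
    return {a.x - b.x, a.y - b.y, a.z - b.z};
}

enum class LightType { Ambient, Directional, Point, Spot };

// Hypothetical light description.
struct Light {
    LightType type;
    float intensity;
    Vec3 position;        // point and spot lights
    Vec3 direction;       // spot lights: axis of the cone (need not be unit length)
    float coneHalfAngle;  // spot lights: half of the cone's opening angle, in radians
};

// Intensity arriving at point p from one light, before the surface angle
// is taken into account.
float intensityAt(const Light& light, const Vec3& p) {
    switch (light.type) {
        case LightType::Ambient:      // uniform everywhere, casts no shadows
        case LightType::Directional:  // parallel rays, like sunlight: no falloff
            return light.intensity;
        case LightType::Point:
        case LightType::Spot: {
            const Vec3 d = sub(p, light.position);
            const float dist2 = dot(d, d);
            if (light.type == LightType::Spot) {
                const float dist = std::sqrt(dist2);
                const float axisLen = std::sqrt(dot(light.direction, light.direction));
                const float cosAngle =
                    dist > 0.0f ? dot(d, light.direction) / (dist * axisLen) : 1.0f;
                if (cosAngle < std::cos(light.coneHalfAngle)) return 0.0f;  // outside the cone
            }
            return light.intensity / std::max(dist2, 1e-6f);  // inverse-square falloff
        }
    }
    return 0.0f;
}
```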

Volume light

A volume light is a modified type of point light. This kind of light source can be converted into a set of light beams within a defined geometrical shape. Any light beam is a perfect example of such a light source:

The camera is the last but most important element of the game world. A camera is responsible for rendering the game screen, and it also determines which elements are added to the rendering pipeline.

There are two types of camera used in a game.

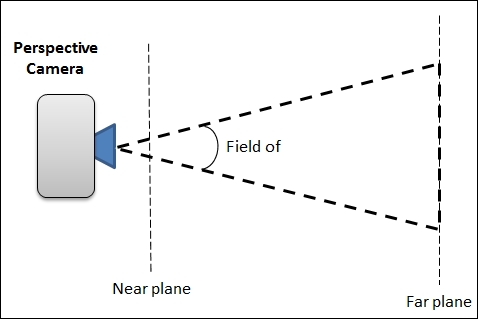

Perspective camera

This type of camera is typically used to render 3D objects. The visible scale and depth depend entirely on this type of camera. The developer manipulates the field of view and the near/far clipping range to control the rendering pipeline:

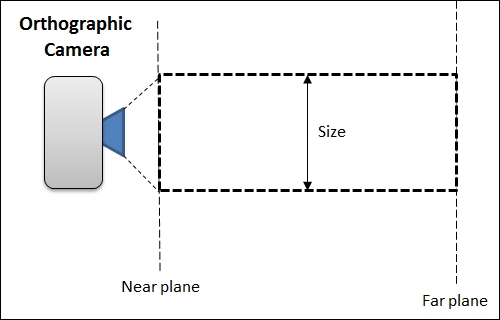

Orthographic camera

This type of camera is used to render objects from a 2D perspective. An orthographic camera renders objects on the same plane, irrespective of their depth. The developer manipulates the effective width and height of the camera to control the 2D rendering pipeline. This camera is typically used for 2D games and to render 2D objects in a 3D game:
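The two camera types correspond to two projection matrices. The C++ below builds them with the standard OpenGL formulas, stored column-major as `android.opengl.Matrix` does. They should correspond to what `Matrix.perspectiveM` (which takes the field of view in degrees) and `Matrix.orthoM` produce, but the function names here are hypothetical, and the code is a sketch rather than those implementations.

```cpp
#include <array>
#include <cmath>

// Column-major 4 x 4 matrix, the storage layout used by OpenGL ES and
// android.opengl.Matrix: element (row, col) lives at index col * 4 + row.
using Mat4 = std::array<float, 16>;

// Perspective camera: field of view and near/far range control what is visible.
Mat4 perspective(float fovYRadians, float aspect, float zNear, float zFar) {
    Mat4 m{};
    const float f = 1.0f / std::tan(fovYRadians / 2.0f);
    m[0] = f / aspect;
    m[5] = f;
    m[10] = (zFar + zNear) / (zNear - zFar);
    m[11] = -1.0f;
    m[14] = 2.0f * zFar * zNear / (zNear - zFar);
    return m;
}

// Orthographic camera: the effective width and height of the view volume
// control what is visible, independently of depth.
Mat4 orthographic(float left, float right, float bottom, float top,
                  float zNear, float zFar) {
    Mat4 m{};
    m[0] = 2.0f / (right - left);
    m[5] = 2.0f / (top - bottom);
    m[10] = -2.0f / (zFar - zNear);
    m[12] = -(right + left) / (right - left);
    m[13] = -(top + bottom) / (top - bottom);
    m[14] = -(zFar + zNear) / (zFar - zNear);
    m[15] = 1.0f;
    return m;
}

// Projects an eye-space point and performs the perspective divide,
// returning normalized device coordinates.
std::array<float, 3> project(const Mat4& m, float x, float y, float z) {
    const float in[4] = {x, y, z, 1.0f};
    float out[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (int row = 0; row < 4; ++row) {
        for (int col = 0; col < 4; ++col) {
            out[row] += m[col * 4 + row] * in[col];
        }
    }
    return {out[0] / out[3], out[1] / out[3], out[2] / out[3]};
}
```

With the perspective matrix, the same sideways offset shrinks as the point moves away from the camera; with the orthographic matrix, it stays the same at every depth, which is why the orthographic camera suits 2D rendering.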

Besides this, game cameras can also be categorized by their nature and purpose. Here are the most common variations.

Fixed camera

A fixed camera does not rotate, translate, or scale during execution. Typically, 2D games use such cameras. A fixed camera is the most efficient camera in terms of processing speed, because it requires no runtime manipulation.

Rotating camera

This camera can rotate at runtime. This type of camera is effective in sports simulation or surveillance simulation games.

Moving camera

A camera is said to be moving when its translation can change at runtime. This type of camera is typically used for an aerial view of the game, in games such as Age of Empires, Company of Heroes, Clash of Clans, and so on.

Third-person camera

This camera is mainly part of the gameplay design. It is a moving camera that follows a particular object or character. That character is usually the player's character, so this camera tracks all of its actions and movements. Usually, this camera can be rotated or pushed according to the actions of the player.
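A third-person camera can be sketched as computing a target position behind and above the character, then easing toward it. The C++ below uses hypothetical names and an assumed heading convention (yaw 0 faces -z).

```cpp
#include <cmath>

struct Vec3 {
    float x, y, z;
};

// Where the camera wants to be: `distance` units behind the character and
// `height` units above it. The character's heading is a yaw angle around the
// y axis, with yaw 0 facing -z (an assumed convention).
Vec3 followPosition(const Vec3& target, float targetYaw, float distance, float height) {
    const float forwardX = -std::sin(targetYaw);
    const float forwardZ = -std::cos(targetYaw);
    return {target.x - forwardX * distance,
            target.y + height,
            target.z - forwardZ * distance};
}

// Moves the camera a fraction t (0..1) of the way toward the desired position,
// so it trails the character smoothly instead of snapping to it every frame.
Vec3 smoothFollow(const Vec3& current, const Vec3& desired, float t) {
    return {current.x + (desired.x - current.x) * t,
            current.y + (desired.y - current.y) * t,
            current.z + (desired.z - current.z) * t};
}
```

The view matrix would then be built to look at the character, for example with `android.opengl.Matrix.setLookAtM`. A fixed fraction `t` per frame makes the smoothing depend on the frame rate; scaling it by the frame time reduces that dependence.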

First-person camera

When the player plays as the main character, this camera is used to show the view through the eyes of the player. The camera moves according to the actions of the player.
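A first-person camera can be sketched as a position at the character's eye level plus yaw and pitch angles that follow the player's input. The C++ below is a hypothetical minimal version; the axis convention (yaw 0 and pitch 0 look down -z) and the pitch limit are assumptions.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 {
    float x, y, z;
};

// View direction from yaw (turning left/right around y) and pitch (looking
// up/down). Yaw 0 and pitch 0 look down -z (an assumed convention).
Vec3 forward(float yaw, float pitch) {
    return {-std::sin(yaw) * std::cos(pitch),
            std::sin(pitch),
            -std::cos(yaw) * std::cos(pitch)};
}

// Hypothetical first-person camera placed at the character's eyes.
struct FirstPersonCamera {
    Vec3 position;
    float yaw;
    float pitch;

    // Turns the view; pitch is clamped so the player cannot look past
    // straight up or straight down (1.5 rad is an arbitrary limit).
    void look(float deltaYaw, float deltaPitch) {
        yaw += deltaYaw;
        pitch = std::min(std::max(pitch + deltaPitch, -1.5f), 1.5f);
    }

    // Walks along the horizontal part of the view direction, so looking
    // up or down does not make the character fly or sink.
    void moveForward(float amount) {
        position.x += -std::sin(yaw) * amount;
        position.z += -std::cos(yaw) * amount;
    }
};
```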