A recent addition to Kubernetes is the Horizontal Pod Autoscaler. This resource type lets us define thresholds at which our application is scaled automatically. Currently, only CPU-based scaling is supported, although there is alpha support for custom application metrics as well.

Let's use the node-js-scale ReplicationController from the beginning of the chapter and add an autoscaling component. Before we start, let's make sure we are scaled back down to one replica using the following command:

$ kubectl scale --replicas=1 rc/node-js-scale

Now we can create a Horizontal Pod Autoscaler. Save the following HPA definition as node-js-scale-hpa.yaml:

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: node-js-scale
spec:
  minReplicas: 1
  maxReplicas: 3
  scaleTargetRef:
    apiVersion: v1
    kind: ReplicationController
    name: node-js-scale
  targetCPUUtilizationPercentage: 20

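Go ahead and create this with the kubectl create -f command. Now we can list the Horizontal Pod Autoscaler (kubectl describe hpa gives a more detailed description):

$ kubectl get hpa

The same autoscaler can also be created directly from the command line, without the YAML file, using kubectl autoscale:

$ kubectl autoscale rc/node-js-scale --min=1 --max=3 --cpu-percent=20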

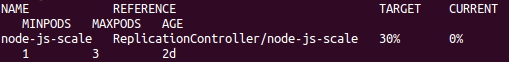

This will show us an autoscaler on node-js-scale ReplicationController with a target CPU of 30%. Additionally, you will see that minimum pods is set to 1 and maximum to 3:

Let's also query our pods to see how many are running right now:

$ kubectl get pods -l name=node-js-scale

We should see only one node-js-scale pod because our Horizontal Pod Autoscaler is showing 0% utilization, so we will need to generate some load. We will use the boom application, which is common in many container demos. The following listing, boomload.yaml, creates continuous load until we hit the CPU threshold for the autoscaler:

apiVersion: v1
kind: ReplicationController
metadata:
  name: boomload
spec:
  replicas: 1
  selector:
    app: loadgenerator
  template:
    metadata:
      labels:
        app: loadgenerator
    spec:
      containers:
      - image: williamyeh/boom
        name: boom
        command: ["/bin/sh","-c"]
        args: ["while true ; do boom http://node-js-scale/ -c 10 -n 100 ; sleep 1 ; done"]

Use the kubectl create -f command with this listing and then be ready to start monitoring the HPA. We can do this with the kubectl get hpa command we used earlier.
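To make the boom flags in the listing concrete, here is a minimal Python sketch of what one pass of the loop amounts to: -n 100 is the total number of requests and -c 10 is how many are in flight at once. It uses only the Python standard library rather than boom itself, and the names fetch_status and run_batch are hypothetical helpers introduced for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from urllib.request import urlopen


def fetch_status(url, timeout=5.0):
    """Issue one GET request; return the HTTP status, or 0 if the request fails."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status
    except OSError:
        # Covers connection errors, timeouts, and HTTP error responses.
        return 0


def run_batch(url, total=100, concurrency=10, fetch=fetch_status):
    """Send `total` requests to `url` with at most `concurrency` in flight.

    The defaults mirror `-n 100 -c 10` from the listing. `fetch` is
    injectable (an assumption of this sketch) so the batching logic
    can be exercised without a network.
    """
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(fetch, [url] * total))
```

Calling run_batch("http://node-js-scale/") repeatedly with a one-second pause between calls would approximate the shell loop in the listing, assuming the code runs somewhere the node-js-scale service name resolves.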

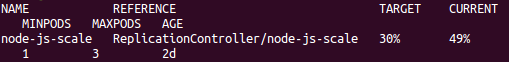

It may take a few moments, but we should start to see the current CPU utilization increase. Once it goes above the 20% threshold we set, the autoscaler will kick in:

Once we see this, we can run kubectl get pod again and see there are now several node-js-scale pods:

$ kubectl get pods -l name=node-js-scale

We can clean up now by killing our load generation pod:

$ kubectl delete rc/boomload

Now, if we watch the hpa, we should start to see the CPU usage drop. It may take a few minutes, but eventually we will go back down to 0% CPU load.
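The scale-up and scale-down behavior seen here can be approximated with the replica formula given in the Kubernetes documentation: the desired count is the current count multiplied by the ratio of observed to target utilization, rounded up and clamped to the configured minimum and maximum. The following is a simplified sketch, not the controller's actual implementation. The function name desired_replicas is hypothetical, the 0.1 tolerance is assumed to match the controller default, and the real autoscaler also averages utilization across pods and delays scale-down.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent,
                     min_replicas=1, max_replicas=3, tolerance=0.1):
    """Approximate the HPA replica calculation for a CPU utilization target.

    The min/max defaults match the node-js-scale autoscaler above; the
    tolerance value is an assumption of this sketch.
    """
    ratio = current_cpu_percent / target_cpu_percent
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below minReplicas or above maxReplicas.
    return max(min_replicas, min(max_replicas, desired))
```

With our 20% target, desired_replicas(1, 45, 20) gives 3, while desired_replicas(3, 0, 20) gives 1, which corresponds to the scale back down once the load generator is removed.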