One of the useful features of the rollout API is the ability to track the deployment history. Let's do one more update before we check the history. Run the kubectl set image command once more and specify version 0.3:

$ kubectl set image deployment/node-js-deploy node-js-deploy=jonbaier/pod-scaling:0.3

deployment "node-js-deploy" image updated

Once again, we'll see text that says deployment "node-js-deploy" image updated displayed on the screen. Now, run the get pods command once more:

$ kubectl get pods -l name=node-js-deploy

Let's also take a look at our deployment history. Run the rollout history command:

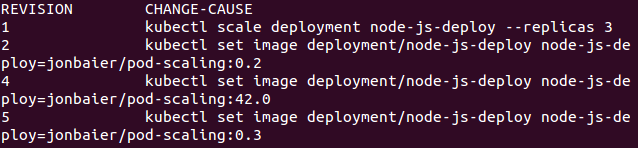

$ kubectl rollout history deployment/node-js-deploy

We should see an output similar to the following:

As we can see, the history shows us the initial deployment creation, our first update to 0.2, and then our final update to 0.3. In addition to status and history, the rollout command also supports the pause, resume, and undo sub-commands. The rollout pause command allows us to pause a deployment while its rollout is still in progress. This can be useful for troubleshooting and also helpful for canary-type launches, where we wish to do final testing of the new version before rolling it out to the entire user base. When we are ready to continue the rollout, we can simply use the rollout resume command.
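As a rough sketch of the canary-style flow described above, the two helper functions below wrap the pause and resume sub-commands. The function names canary_update and canary_resume are hypothetical (they are not part of kubectl), and how many pods are replaced before the pause takes effect depends on timing, so treat this as an illustration rather than a precise canary mechanism:

```shell
# Hypothetical helper: start an image update, then pause the rollout so the
# new version can be checked before it reaches every pod.
canary_update() {
  local deployment="$1"
  local container="$2"
  local image="$3"
  # Kick off the rolling update.
  kubectl set image "deployment/${deployment}" "${container}=${image}"
  # Pause the rollout; the number of pods already replaced depends on timing.
  kubectl rollout pause "deployment/${deployment}"
}

# Hypothetical helper: continue a paused rollout and wait for it to finish.
canary_resume() {
  local deployment="$1"
  kubectl rollout resume "deployment/${deployment}"
  kubectl rollout status "deployment/${deployment}"
}

# Usage (names taken from this chapter's example):
#   canary_update node-js-deploy node-js-deploy jonbaier/pod-scaling:0.3
#   ...test the new pods...
#   canary_resume node-js-deploy
```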

But what if something goes wrong? That is where the rollout undo command and the rollout history itself are really handy. Let's simulate this by trying to update to a version of our pod that is not yet available. We will set the image to version 42.0, which does not exist:

$ kubectl set image deployment/node-js-deploy node-js-deploy=jonbaier/pod-scaling:42.0

We should still see the text that says deployment "node-js-deploy" image updated displayed on the screen. But if we check the status, we will see that it is still waiting:

$ kubectl rollout status deployment/node-js-deploy

Waiting for rollout to finish: 2 out of 3 new replicas have been updated...

Here, we see that the rollout has stalled after updating two of the three pods. Kubernetes knows enough to stop there in order to prevent the entire application from going offline due to the mistake in the container image name. We can press Ctrl + C to kill the status command and then run the get pods command once more:

$ kubectl get pods -l name=node-js-deploy

We should now see an ErrImagePull, as in the following screenshot:

As we expected, it can't pull the 42.0 version of the image because it doesn't exist. This error refers to a container that's stuck in an image pull loop, which is noted as ImagePullBackOff in the latest versions of Kubernetes. We may also have issues with deployments if we run out of resources on the cluster or hit limits that are set for our namespace. Additionally, the deployment can fail for a number of application-related causes, such as health check failures, permission issues, and, of course, application bugs.
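When a pod is stuck like this, it can help to look at the waiting reason and the pod events directly. The following is a minimal sketch, assuming the name=node-js-deploy label used in this example; the helper names waiting_reasons and pod_details are hypothetical:

```shell
# Hypothetical helper: print each matching pod's name followed by the reason
# its containers are waiting (for example ErrImagePull or ImagePullBackOff).
waiting_reasons() {
  local selector="$1"
  kubectl get pods -l "$selector" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.containerStatuses[*].state.waiting.reason}{"\n"}{end}'
}

# Hypothetical helper: show pod details, including recent events such as
# failed image pulls, for the matching pods.
pod_details() {
  local selector="$1"
  kubectl describe pods -l "$selector"
}

# Usage:
#   waiting_reasons name=node-js-deploy
#   pod_details name=node-js-deploy
```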

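It's entirely possible to create deployments that become wholly unavailable during an update if maxUnavailable is equal to spec.replicas. In older API versions, both defaulted to 1; the apps/v1 API now defaults maxUnavailable to 25%, but it is still worth checking that the two values differ!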
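One way to avoid relying on defaults is to set the rolling update strategy explicitly. The sketch below uses kubectl patch to do so; the helper name set_rolling_strategy is hypothetical, it accepts integer values only (percentages would need to be passed as quoted JSON strings), and the values 0 and 1 in the usage line are illustrative choices, not recommendations from this chapter:

```shell
# Hypothetical helper: set maxUnavailable and maxSurge (integers only) on a
# deployment's RollingUpdate strategy.
set_rolling_strategy() {
  local deployment="$1"
  local max_unavailable="$2"
  local max_surge="$3"
  local patch
  # Build the JSON patch for the deployment spec.
  patch=$(printf '{"spec":{"strategy":{"type":"RollingUpdate","rollingUpdate":{"maxUnavailable":%d,"maxSurge":%d}}}}' \
    "$max_unavailable" "$max_surge")
  kubectl patch deployment "$deployment" -p "$patch"
}

# Usage: never take a pod down before its replacement is available.
#   set_rolling_strategy node-js-deploy 0 1
```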

Whenever a failure to roll out happens, we can easily roll back to a previous version using the rollout undo command. This command will take our deployment back to the previous version:

$ kubectl rollout undo deployment/node-js-deploy
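The undo command can also be scripted or pointed at a specific revision. The following is a sketch under a few assumptions: the helper names rollout_or_undo and undo_to_revision are hypothetical, the --timeout flag on rollout status requires a reasonably recent kubectl, and the revision number in the usage line is only an example that should be taken from the actual rollout history:

```shell
# Hypothetical helper: wait for a rollout to finish and undo it if the
# status check fails (for example because the timeout expires).
rollout_or_undo() {
  local deployment="$1"
  local timeout="${2:-60s}"
  if kubectl rollout status "deployment/${deployment}" --timeout="${timeout}"; then
    echo "rollout of ${deployment} completed"
  else
    echo "rollout of ${deployment} did not complete successfully; rolling back" >&2
    kubectl rollout undo "deployment/${deployment}"
  fi
}

# Hypothetical helper: show the details of one revision, then roll back to it.
undo_to_revision() {
  local deployment="$1"
  local revision="$2"
  kubectl rollout history "deployment/${deployment}" --revision="${revision}"
  kubectl rollout undo "deployment/${deployment}" --to-revision="${revision}"
}

# Usage:
#   rollout_or_undo node-js-deploy 90s
#   undo_to_revision node-js-deploy 2   # revision number is an example
```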

After that, we can run the rollout status command once more, and we should see that everything rolled out successfully. Run the kubectl rollout history deployment/node-js-deploy command again, and we'll see both our attempt to roll out version 42.0 and the revert to 0.3: