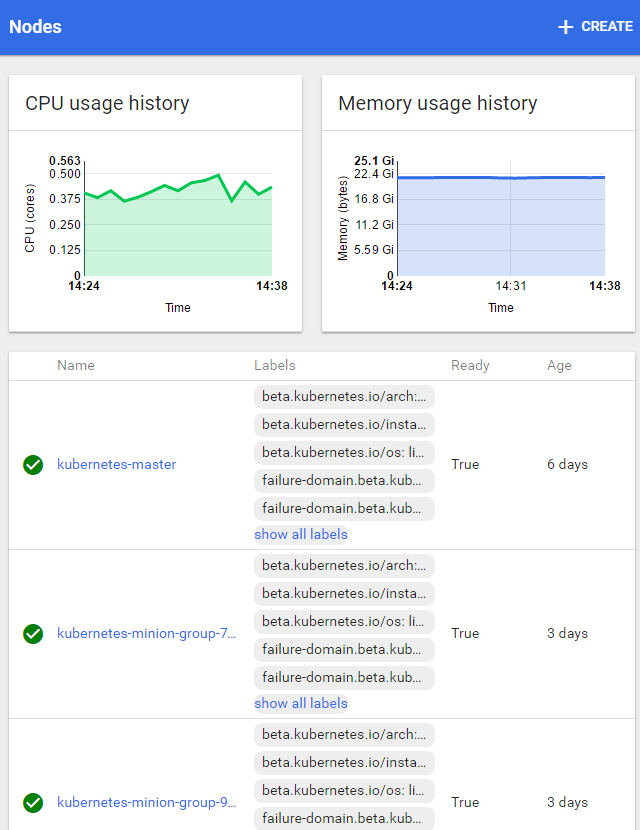

Let's take a look at a quick example of setting some resource limits. The Kubernetes dashboard gives us a quick snapshot of current resource usage on our cluster: open https://<your master ip>/api/v1/proxy/namespaces/kube-system/services/kubernetes-dashboard and click on Nodes in the left-hand side menu.

We'll see a dashboard, as shown in the following screenshot:

This view shows the aggregate CPU and memory across the whole cluster, nodes, and Master. In this case, we have fairly low CPU utilization, but a decent chunk of memory in use.

Let's see what happens when we try to spin up a few more pods, but this time with a memory limit of 512Mi and a CPU limit of 1500m. Because we set only limits and no separate requests, Kubernetes uses the same values as the requests when scheduling. The value 1500m specifies 1.5 CPUs; since each node has only 1 CPU, this should result in failure. Here's an example of the RC definition. Save this file as nodejs-constraints-controller.yaml:

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: node-js-constraints
      labels:
        name: node-js-constraints
    spec:
      replicas: 3
      selector:
        name: node-js-constraints
      template:
        metadata:
          labels:
            name: node-js-constraints
        spec:
          containers:
          - name: node-js-constraints
            image: jonbaier/node-express-info:latest
            ports:
            - containerPort: 80
            resources:
              limits:
                memory: "512Mi"
                cpu: "1500m"

To create the replication controller from the preceding file, use the following command:

$ kubectl create -f nodejs-constraints-controller.yaml

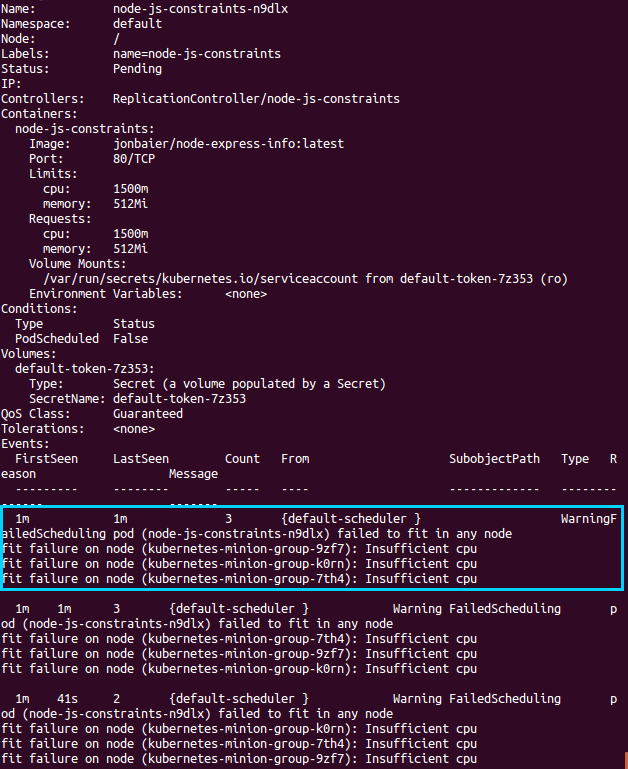

The replication controller is created successfully, but if we run a get pods command, we'll note that the node-js-constraints pods are stuck in the Pending state. If we look a little closer with the describe pods/<pod-id> command, we'll note a scheduling error (for pod-id, use one of the pod names from the first command):

$ kubectl get pods

$ kubectl describe pods/<pod-id>

The following screenshot is the result of the preceding command:

Note, in the Events section at the bottom, that the Warning FailedScheduling event is accompanied by fit failure on node....Insufficient cpu. As you can see, Kubernetes could not find a node in the cluster that met all the constraints we defined.
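The arithmetic behind the Insufficient cpu message can be sketched in a few lines of shell. This is only an illustration of the unit conversion, not how the scheduler is implemented: the helper names to_millicores and fits_on_node are hypothetical, the conversion handles only whole cores and the m suffix, and a real scheduler compares against what is still allocatable on the node rather than its raw capacity.

```shell
#!/bin/sh
# Convert a Kubernetes CPU quantity ("1500m", "1", "2") to millicores.
# Sketch only: whole cores and the "m" suffix, not decimals such as "0.5".
to_millicores() {
  case "$1" in
    *m) echo "${1%m}" ;;            # "1500m" -> 1500
    *)  echo $(( $1 * 1000 )) ;;    # "1"     -> 1000
  esac
}

# Hypothetical helper: does a CPU amount fit within a node's CPU capacity?
# Simplification: ignores CPU already claimed by other pods on the node.
fits_on_node() {
  request=$(to_millicores "$1")
  capacity=$(to_millicores "$2")
  if [ "$request" -le "$capacity" ]; then
    echo "fits"
  else
    echo "Insufficient cpu"
  fi
}

fits_on_node 1500m 1   # our 1.5 CPU constraint against a 1-CPU node
fits_on_node 500m 1    # a 0.5 CPU constraint against the same node
```

The first call prints Insufficient cpu and the second prints fits, which mirrors why no single-CPU node can host a pod constrained to 1500m.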

If we now lower our CPU constraint to 500m and then recreate our replication controller, we should have all three pods running within a few moments.
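One possible way to make that change from the command line is sketched below. It assumes the manifest was saved as nodejs-constraints-controller.yaml with the cpu line written exactly as cpu: "1500m"; the output file name and the lower_cpu_limit helper are hypothetical, and editing the file by hand works just as well. The cluster commands run only if the manifest and kubectl are both present.

```shell
#!/bin/sh
SRC=nodejs-constraints-controller.yaml
DST=nodejs-constraints-controller-500m.yaml   # hypothetical file name

# Print the manifest with only the cpu limit rewritten; other lines are unchanged.
lower_cpu_limit() {
  sed 's/cpu: "1500m"/cpu: "500m"/' "$1"
}

# Only touch the cluster when the manifest and kubectl are both available.
if [ -f "$SRC" ] && command -v kubectl >/dev/null 2>&1; then
  lower_cpu_limit "$SRC" > "$DST"

  # Delete the old controller (its pending pods should be removed with it),
  # then create the controller again from the edited copy.
  kubectl delete rc node-js-constraints
  kubectl create -f "$DST"

  # List the controller's pods by label to watch them reach Running.
  kubectl get pods -l name=node-js-constraints
fi
```

Writing to a separate file keeps the original manifest intact, so the failing configuration can still be compared against the working one.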