Scaling our application up and down as resource demands change is useful in many production scenarios, but what about simple application updates? Any production system will have code updates, patches, and feature additions. These could occur monthly, weekly, or even daily. Having a reliable way to push out these changes without interrupting our users is a paramount consideration.

Once again, we benefit from the years of experience the Kubernetes system is built on. Rolling updates have built-in support as of the 1.0 version. The rolling-update command allows us to update entire ReplicationControllers or just the underlying Docker image used by each replica. We can also specify an update interval, which allows us to update one pod at a time and wait before proceeding to the next.

Let's take our scaling example and perform a rolling update to the 0.2 version of our container image. We will use an update interval of 2 minutes, so we can watch the process as it happens in the following way:

$ kubectl rolling-update node-js-scale --image=jonbaier/pod-scaling:0.2 --update-period="2m"

You should see some text about creating a new ReplicationController named node-js-scale-XXXXX, where the X characters will be a random string of numbers and letters. In addition, you will see the beginning of a loop that starts one replica of the new version and removes one from the existing ReplicationController. This process will continue until the new ReplicationController has the full count of replicas running.

If we want to follow along in real time, we can open another terminal window and use the get pods command, along with a label filter, to see what's happening:

$ kubectl get pods -l name=node-js-scale

This command filters for pods whose name label is set to node-js-scale. If you run it after issuing the rolling-update command, you should see several pods running as Kubernetes creates new versions and removes the old ones one by one.

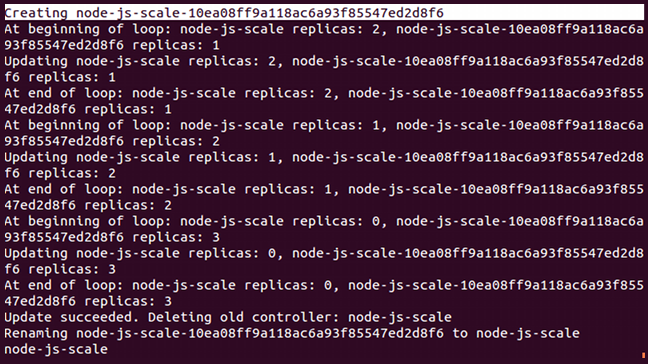

The full output of the previous rolling-update command should look something like this screenshot:

As we can see here, Kubernetes first creates a new ReplicationController named node-js-scale-10ea08ff9a118ac6a93f85547ed28f6. It then loops through the replicas one by one, creating a new pod in the new controller and removing one from the old. This continues until the new controller has the full replica count and the old one is at zero. After this, the old controller is deleted and the new one is renamed with the original controller's name.
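To make the loop easier to picture, here is a minimal sketch that only simulates the replica counts of the two controllers. It does not talk to a cluster or call kubectl; the function name simulate_rolling_update and the printed messages are our own assumptions for illustration, not output from the real command.

```shell
#!/usr/bin/env bash
# Simulation only: no cluster or kubectl is involved.
# simulate_rolling_update REPLICAS prints the replica counts of the old
# and new controllers after each step of the loop described above.
simulate_rolling_update() {
  local old=$1   # replicas still running the old image
  local new=0    # replicas running the new image
  echo "start: old=$old new=$new"
  while [ "$old" -gt 0 ]; do
    new=$((new + 1))   # create one pod in the new controller
    old=$((old - 1))   # then remove one pod from the old controller
    echo "step: old=$old new=$new"
    # The real command would wait for the update period here.
  done
  echo "done: old controller deleted, new controller renamed"
}

simulate_rolling_update 3
```

With three replicas, the sketch prints a start line, three step lines, and a final line, mirroring the one-by-one handover we saw in the command output.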

If you run a get pods command now, you'll notice that the pods all still have a longer name. Alternatively, we could have specified the name of a new controller in the command, and Kubernetes would have created a new ReplicationController and pods using that name. Once again, the controller with the old name simply disappears after the update is completed. I recommend that you specify a new name for the updated controller to avoid confusion in your pod naming down the line. The same update command with this method will look like this:

$ kubectl rolling-update node-js-scale node-js-scale-v2.0 --image=jonbaier/pod-scaling:0.2 --update-period="2m"

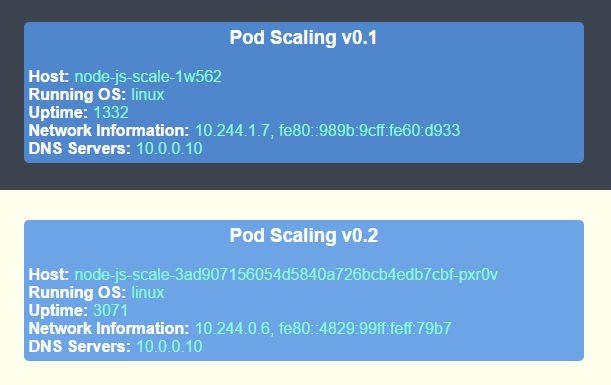

Using the static external IP address from the service we created in the first section, we can open the service in a browser. We should see our standard container information page. However, you'll notice that the title now says Pod Scaling v0.2 and the background is light yellow:

It's worth noting that, during the entire update process, we've only been looking at pods and ReplicationControllers. We didn't do anything with our service, but the service is still running fine and is now directing traffic to the new version of our pods. This is because our service uses label selectors for membership. Because both our old and new replicas use the same labels, the service has no problem using the new pods to service requests. The pods are updated one by one, so the process is seamless for the users of the service.
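The following sketch illustrates why membership by label survives the update. It is a plain shell simulation rather than a query against a cluster: the pod names, controller names, and the select_pods helper are all hypothetical, and only the name=node-js-scale label comes from our example.

```shell
#!/usr/bin/env bash
# Simulation only: each line is "pod-name controller label".
# The pod and controller names are made up for illustration.
pods="node-js-scale-aaaaa old-controller name=node-js-scale
node-js-scale-v2-bbbbb new-controller name=node-js-scale
other-app-ccccc other-controller name=other-app"

# select_pods SELECTOR prints the names of the pods whose label equals
# SELECTOR, ignoring which controller created them.
select_pods() {
  local selector=$1
  printf '%s\n' "$pods" | awk -v sel="$selector" '$3 == sel { print $1 }'
}

select_pods "name=node-js-scale"
```

Both the old and the new pod are selected because they carry the same label, while the pod from the unrelated application is left out. The controller column plays no part in the match, which is the property the service relies on during the update.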