As mentioned previously, we can also schedule DaemonSets to run on only a subset of nodes. This is done with the nodeSelector field, which constrains the nodes a pod can run on by matching node labels. A nodeSelector is simply a set of key-value pairs, and a node is eligible only if its labels contain every one of those pairs. We can add our own labels or use those that are assigned by default.
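As a minimal sketch of a DaemonSet restricted to a subset of nodes, the manifest below assumes that a hypothetical disktype=ssd label has already been applied to the target nodes. The name node-agent and the busybox image are placeholders, not part of the original example. The DaemonSet controller creates a pod only on nodes whose labels include every pair listed under nodeSelector:

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent              # hypothetical name
spec:
  selector:
    matchLabels:
      app: node-agent           # must match the pod template labels below
  template:
    metadata:
      labels:
        app: node-agent
    spec:
      nodeSelector:
        disktype: ssd           # hypothetical label; nodes without it get no pod
      containers:
      - name: node-agent
        image: busybox          # placeholder image
        command: ["sh", "-c", "while true; do sleep 3600; done"]
```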

The default labels are listed in the following table:

| Default node labels | Description |
|---|---|
| kubernetes.io/hostname | The hostname of the underlying instance or machine |
| beta.kubernetes.io/os | The underlying operating system, as reported by the Go runtime (for example, linux) |
| beta.kubernetes.io/arch | The underlying processor architecture, as reported by the Go runtime (for example, amd64) |
| beta.kubernetes.io/instance-type | The instance type of the underlying cloud provider (cloud only) |
| failure-domain.beta.kubernetes.io/region | The region of the underlying cloud provider (cloud only) |
| failure-domain.beta.kubernetes.io/zone | The fault-tolerance zone of the underlying cloud provider (cloud only) |
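Default labels can be combined in a single nodeSelector. The fragment below is a sketch that sits at the spec level of a pod definition and assumes a cloud-hosted cluster with a zone named us-east-1a (a hypothetical value). Because every pair must match, the pod is eligible only for Linux nodes in that zone:

```yaml
# Fragment of a pod spec (goes under spec:). A node qualifies only if its
# labels contain BOTH pairs below.
nodeSelector:
  beta.kubernetes.io/os: linux                         # newer clusters: kubernetes.io/os
  failure-domain.beta.kubernetes.io/zone: us-east-1a   # hypothetical zone; newer clusters: topology.kubernetes.io/zone
```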

We are not limited to DaemonSets: nodeSelector is a field of the pod specification, so it works in any pod definition, including the pod template of a job. Let's take a closer look at a job example (a slight modification of our preceding long-task example).

First, let's look at the labels on the nodes themselves. Start by getting the names of our nodes:

```
$ kubectl get nodes
```

Use a name from the output of the previous command and plug it into this one:

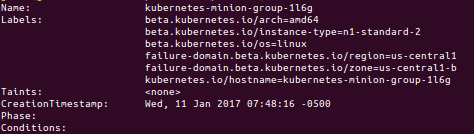

$ kubectl describe node <node-name>

Let's now add a nickname label to this node:

```
$ kubectl label nodes <node-name> nodenickname=trusty-steve
```

If we run the kubectl describe node command again, we will see this label listed alongside the defaults. We can now schedule workloads that target this specific node. The following listing, longtask-nodeselector.yaml, is a modification of our earlier long-running task with a nodeSelector added:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: long-task-ns
spec:
  template:
    metadata:
      name: long-task-ns
    spec:
      containers:
      - name: long-task-ns
        image: docker/whalesay
        command: ["cowsay", "Finishing that task in a jiffy"]
      restartPolicy: OnFailure
      # Schedule only on nodes labeled nodenickname=trusty-steve
      nodeSelector:
        nodenickname: trusty-steve
```

Create the job from this listing with kubectl create -f longtask-nodeselector.yaml.

Once that succeeds, the job creates a pod based on the preceding specification. Because we have defined a nodeSelector, the scheduler places the pod only on a node whose labels match; if no node matches, the pod is not scheduled and stays in the Pending state until a matching node becomes available. We can find the pod by specifying the job name in our query, as follows:

```
$ kubectl get pods -a -l job-name=long-task-ns
```

We use the -a flag to show all pods: a job's pods are short-lived, and once they reach the Completed state, older versions of kubectl omit them from a basic kubectl get pods query. (Newer kubectl releases list completed pods by default and have dropped the -a flag, so it can simply be omitted there.) We also use the -l flag to select only the pods with the job-name=long-task-ns label. This gives us the pod name, which we can plug into the following command:

```
$ kubectl describe pod <Pod-Name-For-Job> | grep Node:
```

The result should show the name of the node this pod was run on. If all has gone well, it should match the node we labeled a few steps earlier with the trusty-steve label.