In this exercise, we're going to unite two clusters using Istio's multicluster feature. Normally, we'd create the two clusters from scratch on two different cloud service providers (CSPs). To explore one isolated concept at a time, though, we're going to use GKE to spin up both clusters so we can focus on the inner workings of Istio's multicluster functionality.

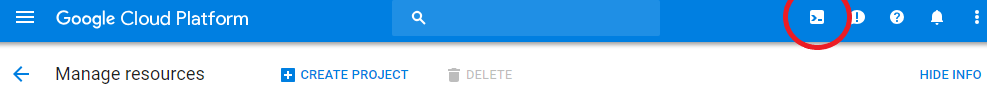

Let's get started by logging in to your Google Cloud project. First, if you haven't already, create a project called gsw-k8s-3 in the Google Cloud Console and point your Google Cloud Shell at it. If your shell is already pointed at that project, you can skip this step.

Click the Activate Cloud Shell button in the console for an easy way to get access to the CLI tools.

Once you've launched the shell, you can point it to your project:

anonymuse@cloudshell:~$ gcloud config set project gsw-k8s-3

Updated property [core/project].

anonymuse@cloudshell:~ (gsw-k8s-3)$

Next, we'll set an environment variable for the project ID, which we can echo back to check:

anonymuse@cloudshell:~ (gsw-k8s-3)$ proj=$(gcloud config list --format='value(core.project)')

anonymuse@cloudshell:~ (gsw-k8s-3)$ echo $proj

gsw-k8s-3

Now, let's create some clusters. Set some variables for the zone and cluster name:

zone="us-east1-b"

cluster="cluster-1"

First, create cluster one:

gcloud container clusters create $cluster --zone $zone --username "admin" \
--cluster-version "1.10.6-gke.2" --machine-type "n1-standard-2" --image-type "COS" --disk-size "100" \
--scopes gke-default \
--num-nodes "4" --network "default" --enable-cloud-logging --enable-cloud-monitoring --enable-ip-alias --async

WARNING: Starting in 1.12, new clusters will not have a client certificate issued. You can manually enable (or disable) the issuance of the client certificate using the `--[no-]issue-client-certificate` flag. This will enable the autorepair feature for nodes. Please see https://cloud.google.com/kubernetes-engine/docs/node-auto-repair for more information on node autorepairs.

WARNING: Starting in Kubernetes v1.10, new clusters will no longer get compute-rw and storage-ro scopes added to what is specified in --scopes (though the latter will remain included in the default --scopes). To use these scopes, add them explicitly to --scopes. To use the new behavior, set container/new_scopes_behavior property (gcloud config set container/new_scopes_behavior true).

NAME TYPE LOCATION TARGET STATUS_MESSAGE STATUS START_TIME END_TIME

cluster-1 us-east1-b PROVISIONING

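If you request a version that GKE no longer supports, the create call fails with an error like this one:

ERROR: (gcloud.container.clusters.create) ResponseError: code=400, message=EXTERNAL: Master version "1.9.6-gke.1" is unsupported.

If that happens, check the GKE release notes at https://cloud.google.com/kubernetes-engine/release-notes, or list the versions available in your zone with gcloud container get-server-config --zone $zone, and adjust --cluster-version accordingly.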

Next, specify cluster-2:

cluster="cluster-2"

Now create it. You'll see the same messages as before, so we'll omit them this time:

gcloud container clusters create $cluster --zone $zone --username "admin" \
--cluster-version "1.10.6-gke.2" --machine-type "n1-standard-2" --image-type "COS" --disk-size "100" \
--scopes gke-default \
--num-nodes "4" --network "default" --enable-cloud-logging --enable-cloud-monitoring --enable-ip-alias --async
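The two create commands differ only in the cluster name. Here is a minimal sketch that wraps the same flags in a function and loops over both names. create_gke_cluster and create_all_clusters are hypothetical helpers, and --cluster-version may need updating as noted above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create one GKE cluster with the flags used in this exercise.
# --async makes gcloud return before provisioning finishes.
create_gke_cluster() {
  local name="$1" zone="$2"
  gcloud container clusters create "$name" --zone "$zone" --username "admin" \
    --cluster-version "1.10.6-gke.2" --machine-type "n1-standard-2" \
    --image-type "COS" --disk-size "100" --scopes gke-default \
    --num-nodes "4" --network "default" --enable-cloud-logging \
    --enable-cloud-monitoring --enable-ip-alias --async
}

# Hypothetical helper: create every named cluster in the given zone,
# stopping at the first failure.
create_all_clusters() {
  local zone="$1"; shift
  local name
  for name in "$@"; do
    create_gke_cluster "$name" "$zone" || return 1
  done
}
```

Usage: create_all_clusters "$zone" cluster-1 cluster-2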

While the clusters are being created, you can open a second Google Cloud Shell tab by clicking the + icon and use it to watch their progress:

In that tab, run gcloud container clusters list under watch so that the list refreshes every second. Once provisioning finishes, you should see output like the following:

watch -n 1 gcloud container clusters list

<snip>

Every 1.0s: gcloud container clusters list cs-6000-devshell-vm-375db789-dcd6-42c6-b1a6-041afea68875: Mon Sep 3 12:26:41 2018

NAME LOCATION MASTER_VERSION MASTER_IP MACHINE_TYPE NODE_VERSION NUM_NODES STATUS

cluster-1 us-east1-b 1.10.6-gke.2 35.237.54.93 n1-standard-2 1.10.6-gke.2 4 RUNNING

cluster-2 us-east1-b 1.10.6-gke.2 35.237.47.212 n1-standard-2 1.10.6-gke.2 4 RUNNING
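If you'd rather script the wait than watch it, here is a minimal sketch that polls the same gcloud command until every named cluster reports RUNNING. The function name and its attempt and interval parameters are our own choices, not part of gcloud:

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll `gcloud container clusters list` until every named
# cluster reports STATUS=RUNNING, or give up after a number of attempts.
# Arguments: attempts, seconds between attempts, then one or more cluster names.
wait_for_clusters() {
  local attempts="$1" interval="$2"; shift 2
  local i name status all_running
  for ((i = 1; i <= attempts; i++)); do
    all_running=true
    for name in "$@"; do
      # value(status) prints only the status column for the filtered cluster
      status=$(gcloud container clusters list \
        --filter="name=${name}" --format='value(status)')
      if [ "$status" != "RUNNING" ]; then
        all_running=false
        break
      fi
    done
    if $all_running; then
      echo "all clusters RUNNING"
      return 0
    fi
    sleep "$interval"
  done
  echo "timed out waiting for clusters" >&2
  return 1
}
```

Usage: wait_for_clusters 60 10 cluster-1 cluster-2 waits up to about ten minutes.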

On the dashboard, it'll look like so:

Next up, we'll grab the cluster credentials. This command will allow us to set a kubeconfig context for each specific cluster:

for clusterid in cluster-1 cluster-2; do gcloud container clusters get-credentials $clusterid --zone $zone; done

Fetching cluster endpoint and auth data.

kubeconfig entry generated for cluster-1.

Fetching cluster endpoint and auth data.

kubeconfig entry generated for cluster-2.

Let's make sure that kubectl can switch to each cluster's context:

anonymuse@cloudshell:~ (gsw-k8s-3)$ kubectl config use-context "gke_${proj}_${zone}_cluster-1"

Switched to context "gke_gsw-k8s-3_us-east1-b_cluster-1".

If you run kubectl get pods --all-namespaces after switching to each cluster's context, you should see something similar to this for each cluster:

anonymuse@cloudshell:~ (gsw-k8s-3)$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system event-exporter-v0.2.1-5f5b89fcc8-2qj5c 2/2 Running 0 14m

kube-system fluentd-gcp-scaler-7c5db745fc-qxqd4 1/1 Running 0 13m

kube-system fluentd-gcp-v3.1.0-g5v24 2/2 Running 0 13m

kube-system fluentd-gcp-v3.1.0-qft92 2/2 Running 0 13m

kube-system fluentd-gcp-v3.1.0-v572p 2/2 Running 0 13m

kube-system fluentd-gcp-v3.1.0-z5wjs 2/2 Running 0 13m

kube-system heapster-v1.5.3-5c47587d4-4fsg6 3/3 Running 0 12m

kube-system kube-dns-788979dc8f-k5n8c 4/4 Running 0 13m

kube-system kube-dns-788979dc8f-ldxsw 4/4 Running 0 14m

kube-system kube-dns-autoscaler-79b4b844b9-rhxdt 1/1 Running 0 13m

kube-system kube-proxy-gke-cluster-1-default-pool-e320df41-4mnm 1/1 Running 0 13m

kube-system kube-proxy-gke-cluster-1-default-pool-e320df41-536s 1/1 Running 0 13m

kube-system kube-proxy-gke-cluster-1-default-pool-e320df41-9gqj 1/1 Running 0 13m

kube-system kube-proxy-gke-cluster-1-default-pool-e320df41-t4pg 1/1 Running 0 13m

kube-system l7-default-backend-5d5b9874d5-n44q7 1/1 Running 0 14m

kube-system metrics-server-v0.2.1-7486f5bd67-h9fq6 2/2 Running 0 13m
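Instead of switching contexts back and forth, you can pass kubectl's global --context flag on each call. Here is a minimal sketch that assumes the gke_PROJECT_ZONE_CLUSTER naming that get-credentials used above. gke_context, for_each_cluster, and the CLUSTERS array are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the kubeconfig context name that
# `gcloud container clusters get-credentials` writes for a zonal cluster.
gke_context() {
  local project="$1" zone="$2" cluster="$3"
  echo "gke_${project}_${zone}_${cluster}"
}

# Cluster names to iterate over (an assumption of this sketch).
CLUSTERS=(cluster-1 cluster-2)

# Run the same kubectl arguments against every cluster without changing
# the current context.
for_each_cluster() {
  local project="$1" zone="$2"; shift 2
  local cluster ctx
  for cluster in "${CLUSTERS[@]}"; do
    ctx=$(gke_context "$project" "$zone" "$cluster")
    echo "== ${ctx}"
    kubectl --context "$ctx" "$@"
  done
}
```

Usage: for_each_cluster "$proj" "$zone" get pods --all-namespaces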

Next, we need to create a Google Cloud firewall rule so that pods in each cluster can reach pods in the other. To do that, we'll gather each cluster's pod CIDR range and the network tags of its nodes, and then create the rule with gcloud. The CIDR ranges will look something like this:

anonymuse@cloudshell:~ (gsw-k8s-3)$ gcloud container clusters list --format='value(clusterIpv4Cidr)'

10.8.0.0/14

10.40.0.0/14

Tags are reported per node, so with four nodes in each cluster you'll get eight lines but only two distinct tags:

anonymuse@cloudshell:~ (gsw-k8s-3)$ gcloud compute instances list --format='value(tags.items.[0])'

gke-cluster-1-37037bd0-node

gke-cluster-1-37037bd0-node

gke-cluster-1-37037bd0-node

gke-cluster-1-37037bd0-node

gke-cluster-2-909a776f-node

gke-cluster-2-909a776f-node

gke-cluster-2-909a776f-node

gke-cluster-2-909a776f-node
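These eight entries contain only two distinct tags, and --target-tags expects a single comma-separated list, so the values have to be deduplicated and joined before gcloud can use them. Here is a self-contained sketch of that transformation on the sample output above. It uses a join_by function defined the same way as in the next step:

```shell
#!/usr/bin/env bash
# Join all arguments after the first, using the first argument as the separator.
join_by() { local IFS="$1"; shift; echo "$*"; }

# Sample tag output copied from above: one line per node, eight lines total.
tags="gke-cluster-1-37037bd0-node
gke-cluster-1-37037bd0-node
gke-cluster-1-37037bd0-node
gke-cluster-1-37037bd0-node
gke-cluster-2-909a776f-node
gke-cluster-2-909a776f-node
gke-cluster-2-909a776f-node
gke-cluster-2-909a776f-node"

# sort | uniq keeps one copy of each distinct tag
unique_tags=$(printf '%s\n' "$tags" | sort | uniq)

# Leaving $unique_tags unquoted splits it into one argument per line
joined=$(join_by , $unique_tags)
echo "$joined"
```

Running it prints gke-cluster-1-37037bd0-node,gke-cluster-2-909a776f-node, which is the form the firewall command needs.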

Now let's run the full set of commands to create the firewall rule. The join_by function is a small Bash idiom that joins its remaining arguments into one string, using its first argument as the separator:

function join_by { local IFS="$1"; shift; echo "$*"; }

ALL_CLUSTER_CIDRS=$(gcloud container clusters list --format='value(clusterIpv4Cidr)' | sort | uniq)

echo $ALL_CLUSTER_CIDRS

ALL_CLUSTER_CIDRS=$(join_by , $(echo "${ALL_CLUSTER_CIDRS}"))

echo $ALL_CLUSTER_CIDRS

ALL_CLUSTER_NETTAGS=$(gcloud compute instances list --format='value(tags.items.[0])' | sort | uniq)

echo $ALL_CLUSTER_NETTAGS

ALL_CLUSTER_NETTAGS=$(join_by , $(echo "${ALL_CLUSTER_NETTAGS}"))

echo $ALL_CLUSTER_NETTAGS

gcloud compute firewall-rules create istio-multicluster-test-pods \
--allow=tcp,udp,icmp,esp,ah,sctp \
--direction=INGRESS \
--priority=900 \
--source-ranges="${ALL_CLUSTER_CIDRS}" \
--target-tags="${ALL_CLUSTER_NETTAGS}"

This creates the firewall rule, which should look similar to this in the GUI when complete:

Next, let's give your user the cluster-admin role, which later steps need. First, set KUBE_USER to the email address associated with your GCP account with KUBE_USER="<YOUR_EMAIL>". Then create a clusterrolebinding:

kubectl create clusterrolebinding gke-cluster-admin-binding \
--clusterrole=cluster-admin \
--user="${KUBE_USER}"

clusterrolebinding "gke-cluster-admin-binding" created
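If you'd rather not type your email by hand, gcloud can report the account Cloud Shell is logged in as. Here is a minimal sketch that assumes that account is the one you want bound to cluster-admin. current_gcp_user is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the active gcloud account (your GCP email),
# or fail if no account is configured.
current_gcp_user() {
  local user
  user=$(gcloud config get-value account 2>/dev/null)
  if [ -z "$user" ]; then
    echo "no active gcloud account; run 'gcloud auth login'" >&2
    return 1
  fi
  echo "$user"
}
```

Usage: KUBE_USER=$(current_gcp_user) && echo "$KUBE_USER"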

Next up, we'll install the Istio control plane with Helm, create a namespace, and deploy Istio using a chart.

Check to make sure you're using cluster-1 as your context with kubectl config current-context. Next, we'll install Helm with these commands:

curl https://raw.githubusercontent.com/kubernetes/helm/master/scripts/get > get_helm.sh

chmod 700 get_helm.sh

./get_helm.sh

Tiller, Helm's server-side component, needs a service account with the right permissions. The manifest for it lives in the Istio repo, so clone that first:

git clone https://github.com/istio/istio.git && cd istio

Now, create the service account for Tiller:

kubectl apply -f install/kubernetes/helm/helm-service-account.yaml

Then initialize Tiller on the cluster with that service account:

helm init --service-account tiller

The output will look something like this:

/home/anonymuse/.helm

Creating /home/anonymuse/.helm/repository

...

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation

Happy Helming!

anonymuse@cloudshell:~/istio (gsw-k8s-3)$

Now, make sure you're on the cluster-1 context, where we'll install the Istio control plane in its own namespace:

kubectl config use-context "gke_${proj}_${zone}_cluster-1"

Render Istio's Helm chart into a manifest file in your home directory:

helm template install/kubernetes/helm/istio --name istio --namespace istio-system > $HOME/istio_master.yaml

Create the istio-system namespace, apply the manifest, and enable automatic sidecar injection for the default namespace:

kubectl create ns istio-system && \
kubectl apply -f $HOME/istio_master.yaml && \
kubectl label namespace default istio-injection=enabled
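The pod IP lookups that follow only work once the Istio pods have been scheduled. Here is a minimal sketch that blocks until every Deployment in istio-system has rolled out. It assumes a kubectl recent enough to support rollout status --timeout. wait_for_deployments is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until every Deployment in a namespace has finished
# rolling out, so later steps that read pod IPs find running pods.
# Arguments: namespace, optional timeout per Deployment (default 300s).
wait_for_deployments() {
  local ns="$1" timeout="${2:-300s}"
  local deploy
  # -o name prints one TYPE/NAME per line, e.g. deployment.apps/istio-pilot
  for deploy in $(kubectl -n "$ns" get deployments -o name); do
    kubectl -n "$ns" rollout status "$deploy" --timeout="$timeout" || return 1
  done
}
```

Usage: wait_for_deployments istio-system 600s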

We'll now set some more environment variables to collect the IPs of the Pilot, policy, StatsD, and telemetry pods:

export PILOT_POD_IP=$(kubectl -n istio-system get pod -l istio=pilot -o jsonpath='{.items[0].status.podIP}')

export POLICY_POD_IP=$(kubectl -n istio-system get pod -l istio-mixer-type=policy -o jsonpath='{.items[0].status.podIP}')

export STATSD_POD_IP=$(kubectl -n istio-system get pod -l istio=statsd-prom-bridge -o jsonpath='{.items[0].status.podIP}')

export TELEMETRY_POD_IP=$(kubectl -n istio-system get pod -l istio-mixer-type=telemetry -o jsonpath='{.items[0].status.podIP}')
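If a label selector matches no pod, the jsonpath lookup prints nothing and the variable ends up empty without any warning, which would produce a broken remote manifest. A small guard, sketched here with a hypothetical require_vars helper, catches that before you render the chart:

```shell
#!/usr/bin/env bash
# Hypothetical helper: fail if any of the named variables is empty,
# reporting every empty one on stderr. Uses Bash indirect expansion.
require_vars() {
  local name missing=0
  for name in "$@"; do
    if [ -z "${!name}" ]; then
      echo "error: ${name} is empty" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: require_vars PILOT_POD_IP POLICY_POD_IP STATSD_POD_IP TELEMETRY_POD_IP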

We can now generate a manifest for our remote cluster, cluster-2:

helm template install/kubernetes/helm/istio-remote --namespace istio-system \
--name istio-remote \
--set global.remotePilotAddress=${PILOT_POD_IP} \
--set global.remotePolicyAddress=${POLICY_POD_IP} \
--set global.remoteTelemetryAddress=${TELEMETRY_POD_IP} \
--set global.proxy.envoyStatsd.enabled=true \
--set global.proxy.envoyStatsd.host=${STATSD_POD_IP} > $HOME/istio-remote.yaml

Now, we'll install the minimal Istio components in our remote cluster, cluster-2, and enable sidecar injection there. Run the following commands in order:

kubectl config use-context "gke_${proj}_${zone}_cluster-2"

kubectl create ns istio-system

kubectl apply -f $HOME/istio-remote.yaml

kubectl label namespace default istio-injection=enabled

Next, we'll build a kubeconfig file that gives the Istio control plane on cluster-1 access to cluster-2. It authenticates with the token of the istio-multi service account that the istio-remote manifest created, so stay on the cluster-2 context for these commands. First, change back into your home directory with cd. The --minify flag makes kubectl config view show only the entries for your current context. Now, enter the following groups of commands:

export WORK_DIR=$(pwd)

CLUSTER_NAME=$(kubectl config view --minify=true -o "jsonpath={.clusters[].name}")

CLUSTER_NAME="${CLUSTER_NAME##*_}"

export KUBECFG_FILE=${WORK_DIR}/${CLUSTER_NAME}

SERVER=$(kubectl config view --minify=true -o "jsonpath={.clusters[].cluster.server}")

NAMESPACE=istio-system

SERVICE_ACCOUNT=istio-multi

SECRET_NAME=$(kubectl get sa ${SERVICE_ACCOUNT} -n ${NAMESPACE} -o jsonpath='{.secrets[].name}')

CA_DATA=$(kubectl get secret ${SECRET_NAME} -n ${NAMESPACE} -o "jsonpath={.data['ca.crt']}")

TOKEN=$(kubectl get secret ${SECRET_NAME} -n ${NAMESPACE} -o "jsonpath={.data['token']}" | base64 --decode)
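Two bits of shell syntax above are worth unpacking. ${CLUSTER_NAME##*_} is Bash parameter expansion that strips everything up to and including the last underscore, turning the full GKE name into the short cluster name. The Secret's token is stored base64-encoded, which is why the TOKEN line pipes it through base64 --decode. Here is a quick demonstration with the context name from this exercise and a made-up token value:

```shell
#!/usr/bin/env bash
# ${var##*_} deletes the longest prefix matching "*_", i.e. everything up to
# and including the last underscore.
full_name="gke_gsw-k8s-3_us-east1-b_cluster-2"
short_name="${full_name##*_}"
echo "$short_name"    # cluster-2

# Secrets store data base64-encoded; decoding recovers the original value.
# "example-token" is a made-up value for illustration only.
encoded=$(printf '%s' "example-token" | base64)
decoded=$(printf '%s' "$encoded" | base64 --decode)
echo "$decoded"       # example-token
```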

Create the file with the following cat command. The heredoc writes its contents, with the variables above substituted, to ${KUBECFG_FILE}, that is, ${WORK_DIR}/${CLUSTER_NAME}:

cat <<EOF > ${KUBECFG_FILE}
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: ${CA_DATA}
    server: ${SERVER}
  name: ${CLUSTER_NAME}
contexts:
- context:
    cluster: ${CLUSTER_NAME}
    user: ${CLUSTER_NAME}
  name: ${CLUSTER_NAME}
current-context: ${CLUSTER_NAME}
kind: Config
preferences: {}
users:
- name: ${CLUSTER_NAME}
  user:
    token: ${TOKEN}
EOF
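Because the heredoc substitutes variables without any checks, an empty CA_DATA, SERVER, or TOKEN produces a kubeconfig that looks fine but can't authenticate. Here is a minimal sketch of a sanity check. It assumes that kubectl version, which contacts the API server, is an adequate connectivity test. check_kubeconfig is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a generated kubeconfig has no empty
# credential fields and that kubectl can reach the API server with it.
check_kubeconfig() {
  local file="$1"
  [ -s "$file" ] || { echo "missing or empty: $file" >&2; return 1; }
  # An empty variable in the heredoc leaves a key with no value, e.g. "token:"
  if grep -Eq '^[[:space:]]*(certificate-authority-data|server|token):[[:space:]]*$' "$file"; then
    echo "kubeconfig has an empty field: $file" >&2
    return 1
  fi
  # kubectl version contacts the server, so a bad URL, CA, or token fails here
  kubectl --kubeconfig "$file" version >/dev/null
}
```

Usage: check_kubeconfig "${KUBECFG_FILE}" && echo "kubeconfig OK"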

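Next, we'll create a Secret from that kubeconfig so that the Istio control plane on cluster-1 (specifically Pilot) can reach cluster-2's Kubernetes API server and discover its services. Switch back to the first cluster, create the Secret, and label it so that Istio recognizes it as a remote cluster: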

anonymuse@cloudshell:~ (gsw-k8s-3)$ kubectl config use-context gke_gsw-k8s-3_us-east1-b_cluster-1

Switched to context "gke_gsw-k8s-3_us-east1-b_cluster-1".

kubectl create secret generic ${CLUSTER_NAME} --from-file ${KUBECFG_FILE} -n ${NAMESPACE}

kubectl label secret ${CLUSTER_NAME} istio/multiCluster=true -n ${NAMESPACE}

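Once we've completed these tasks, let's use all of this machinery to deploy Istio's Bookinfo sample application across both clusters. The sample paths below are relative to the Istio repository, so change back into it first. Then run the rest of these commands against the first cluster. The last one removes reviews-v3 from cluster-1, since we'll run that version on cluster-2 instead:

cd ~/istio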

kubectl config use-context "gke_${proj}_${zone}_cluster-1"

kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml

kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml

kubectl delete deployment reviews-v3

Now, create a file called $HOME/reviews-v3.yaml that deploys the reviews-v3 part of Bookinfo to the remote cluster, along with the ratings and reviews Service definitions it needs. The file contents can be found in the repository directory of this chapter:

##################################################################################################
# Ratings service
##################################################################################################
apiVersion: v1
kind: Service
metadata:
  name: ratings
  labels:
    app: ratings
spec:
  ports:
  - port: 9080
    name: http
---
##################################################################################################
# Reviews service
##################################################################################################
apiVersion: v1
kind: Service
metadata:
  name: reviews
  labels:
    app: reviews
spec:
  ports:
  - port: 9080
    name: http
  selector:
    app: reviews
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: reviews-v3
spec:
  replicas: 1
  # apps/v1 requires an explicit selector that matches the pod template labels
  selector:
    matchLabels:
      app: reviews
      version: v3
  template:
    metadata:
      labels:
        app: reviews
        version: v3
    spec:
      containers:
      - name: reviews
        image: istio/examples-bookinfo-reviews-v3:1.5.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 9080

Let's install this deployment on the remote cluster, cluster-2:

kubectl config use-context "gke_${proj}_${zone}_cluster-2"

kubectl apply -f $HOME/reviews-v3.yaml

Once this is complete, you'll need the external IP of Istio's istio-ingressgateway service to view the Bookinfo product page. The gateway runs on cluster-1, so switch back to that context and look up the service's EXTERNAL-IP with kubectl get svc istio-ingressgateway -n istio-system. Only some requests are routed to reviews-v3 on cluster-2, so you'll need to reload the page dozens of times to see Istio's load balancing take place. Holding down F5 reloads it many times quickly.

You can access http://<GATEWAY_IP>/productpage in order to see the reviews.
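As an alternative to holding down F5, you can generate the traffic from Cloud Shell. Here is a minimal sketch that assumes your current context is cluster-1 and that the gateway's load balancer reports an IP address rather than a hostname. gateway_ip and hit_productpage are hypothetical helpers. The script prints one HTTP status code per request, so it confirms that the page responds. You still need the browser to see which reviews version answered:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the external IP assigned to the ingress gateway.
gateway_ip() {
  kubectl -n istio-system get service istio-ingressgateway \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Hypothetical helper: request the product page repeatedly.
# Arguments: gateway IP, optional number of requests (default 20).
hit_productpage() {
  local ip="$1" count="${2:-20}" i
  for ((i = 1; i <= count; i++)); do
    # -s silences progress, -o discards the body, -w prints just the status code
    curl -s -o /dev/null -w '%{http_code}\n' "http://${ip}/productpage"
  done
}
```

Usage: hit_productpage "$(gateway_ip)" 50 | sort | uniq -c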