CHAPTER 24

The Internet and Other Networks

The Internet is both an engineering marvel, as much at the management layer as in its silicon and glass, and a powerful precursor to many of the possibilities we examine in this book: Whether we consider eBay, or Web video, or globally coordinated social protest, the way the Internet was designed and built establishes the limits of possibility for much of the modern world.

History has been made by a long series of networks. The Nile, the Euphrates, the Ruhr. England's navy and colonies. The railroads. Wires for electricity and for communications. Eisenhower's interstate highways. In the United States, first three television networks, then an explosion of them. FedEx's air freight system. Wal-Mart's trucks, marketing and supply-chain algorithms, and distribution centers.

These networks were built at particular times for particular purposes: The interstates could not haul rail cars and in fact helped drive U.S. passenger rail essentially out of business. Power lines carried electricity, not information. The scale of capital investment often led network owners, whether in the public or private sector, to expand into adjoining economic niches: Railroads often owned hotels, and AT&T not only completed telephone calls but also made both the customer premise equipment and the capital equipment needed to do so. These complementarities were typically physical, logical extensions of the core service.

These networks had clearly visible hubs: London for the British Empire, Chicago for American rail, New York's Broad Street for transatlantic telephone cables, the Strategic Air Command's headquarters near Omaha, Memphis for FedEx, and Louisville for UPS.

To sum up, until the Internet, most major physical (as opposed to social) networks had several defining characteristics:

- A network had a single purpose, or at most two, depending on the level of abstraction: The British used sea power to protect and conduct maritime trade as well as to project military power.

- Networks were expensive to build and operate, so they often became (or were treated as) natural monopolies.

- Networks were physically and geographically delimited. Interconnection (as with airline routes or intercountry phone calls) was possible but typically mapped on a 1:1 basis, with extensive negotiation and customization for each pairing.

- Networks had visible centers of gravity, hubs where multiple links converged.

Legacy Telecom Network Principles

For roughly a century, these characteristics were tangibly embodied by AT&T and its equivalents around the world. Speaking specifically of communications networks, several facts were often taken as axiomatic before the Internet:

- Networks, whether AT&T's premise equipment or British Telecom's transatlantic assets, were closed. U.S. wireline companies in particular controlled their networks with notoriously tight standards, helping ensure reliability at the cost of innovation.

- Because one company, or a tightly linked consortium, had control, network engineering was conducted under known operating assumptions.

- Latency, or systemic delay in signal transmission, was highly predictable.

- Similarly, the parameters of available bandwidth, or carrying capacity, were known with great reliability within the network operations center or its equivalent.

- The location of network elements and their respective characteristics—the overall topology—was known with certainty.

- Network assets were closely administered, in locked-down facilities, by technicians with highly standardized training. If X happened in any facility, the correct response was almost always known to be Y.

- Given these factors, operating costs were reasonably well understood for such a complex system. At the corporate level, however, costs were only partly relevant because revenues were set by regulators in almost every country.

Defense Origins of the Internet

The Internet grew from origins in the U.S. defense community in the early 1960s. It was built to solve the problem of survivable communications: Given the quantity and broad dispersal of nuclear weapons, ground troops, naval assets, and so on, the commander in chief needed to get messages to all relevant parties extremely quickly, no matter what, and those forces then had to be able to coordinate with one another.

Two key design decisions define the Internet's architecture. First, information is digital: It is chopped into binary elements of 1s and 0s rather than carried as a continuous analog representation. Whether the bits represent a pixel in an image, a slice of a musical waveform, or a keyboard character, they can be moved more robustly than an analog signal, which degrades with each reamplification. A Xerox of a Xerox of a Xerox gets successively less legible; a bit is much more likely to survive multiple handoffs intact.
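The Xerox analogy can be made concrete with a toy simulation, offered as a sketch under loudly stated assumptions: every hop adds Gaussian noise with a fixed standard deviation, the "analog" relay simply amplifies and passes on whatever it received, and the "digital" relay regenerates each bit by comparing the received level against a 0.5 threshold. The noise level, hop count, and function names are hypothetical choices for illustration, not a model of any real transmission line.

```python
import random


def relay_analog(samples, hops, noise, rng):
    """Pass samples through `hops` amplifiers; each adds noise that is never removed."""
    for _ in range(hops):
        samples = [s + rng.gauss(0.0, noise) for s in samples]
    return samples


def relay_digital(bits, hops, noise, rng):
    """Send 0/1 bits through `hops` repeaters; each re-decides every bit at a 0.5 threshold."""
    for _ in range(hops):
        received = [b + rng.gauss(0.0, noise) for b in bits]
        bits = [1 if v > 0.5 else 0 for v in received]
    return bits


def compare(hops=20, noise=0.1, n_bits=10_000, seed=42):
    """Return (mean analog drift, number of flipped bits) after the same number of hops."""
    rng = random.Random(seed)
    original = [rng.randint(0, 1) for _ in range(n_bits)]
    analog = relay_analog([float(b) for b in original], hops, noise, rng)
    digital = relay_digital(original, hops, noise, rng)
    drift = sum(abs(a - b) for a, b in zip(analog, original)) / n_bits
    flipped = sum(d != b for d, b in zip(digital, original))
    return drift, flipped


if __name__ == "__main__":
    drift, flipped = compare()
    print(f"analog: mean drift of {drift:.3f} units after 20 hops")
    print(f"digital: {flipped} of 10000 bits flipped after 20 hops")
```

Under these assumed parameters the analog drift grows roughly with the square root of the number of hops, because independent noise keeps piling up, while the regenerated bits almost never flip: a single hop's noise rarely pushes a value across the threshold, and whatever noise it does add is discarded at the next repeater.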

Second, the links in the network were made redundant. If each node has three or more short pathways available and bits can be handed off repeatedly without degradation, a system of multiple short-hop pathways can route around any node that becomes unavailable.
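Routing around a lost node can be sketched as a graph search. The example is hypothetical: a six-node mesh in which every node has exactly three neighbors, and a breadth-first search that finds a fewest-hop path while skipping nodes marked as down. Real Internet routers rely on distributed routing protocols rather than a single global search like this one; the sketch only shows why multiple short pathways make a failed node survivable.

```python
from collections import deque


def shortest_path(links, src, dst, down=frozenset()):
    """Breadth-first search for a fewest-hop path from src to dst that avoids nodes in `down`."""
    if src in down or dst in down:
        return None
    prev = {src: None}  # records how each node was first reached
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk the predecessor chain back to src, then reverse it.
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in links[node]:
            if nxt not in prev and nxt not in down:
                prev[nxt] = node
                queue.append(nxt)
    return None  # every route is blocked


# Hypothetical six-node mesh in which every node has three neighbors.
MESH = {
    "A": ["B", "C", "D"],
    "B": ["A", "C", "E"],
    "C": ["A", "B", "F"],
    "D": ["A", "E", "F"],
    "E": ["B", "D", "F"],
    "F": ["C", "D", "E"],
}

if __name__ == "__main__":
    print(shortest_path(MESH, "A", "F"))
    print(shortest_path(MESH, "A", "F", down={"C"}))
    print(shortest_path(MESH, "A", "F", down={"C", "D"}))
```

With node C down, the search simply takes the detour through D; with both C and D down, it still finds a longer route through B and E. Only when all three of A's neighbors are gone does it return None.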

Given these two design decisions, Paul Baran of the RAND Corporation went to work:

I figured there was no limit on the amount of communications that people thought they needed. So I figured I'd give them so much communications they wouldn't know what the hell to do with it. Then that became the work—to build something with sufficient bandwidth so that there'd be no shortage of communications. The question was, how the hell do you build a network of very high bandwidth for the future? The first realization was that it had to be digital, because we couldn't go through the limited number of analog links.

For redundancy to be cost-effective, the cost of the components had to drop. This directly contradicted AT&T doctrine, in which very expensive, highly engineered pieces at each step of the circuit-switched infrastructure were the norm. Moreover, the “star” topologies of such systems provided little redundancy and presented very vulnerable targets at the network core.
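The vulnerability of a star can be made concrete by counting how many surviving nodes can still reach one another after the most connected node is destroyed. Both topologies below are hypothetical six-node examples, a hub with five leaves and a mesh in which every node has three neighbors, and the function names are illustrative only.

```python
def connected_fraction(links, down):
    """Fraction of pairs of surviving nodes that can still reach each other."""
    alive = [n for n in links if n not in down]
    total = len(alive) * (len(alive) - 1) // 2
    seen, reachable = set(), 0
    for start in alive:
        if start in seen:
            continue
        # Flood-fill the component containing `start`, never stepping on a destroyed node.
        component, stack = {start}, [start]
        while stack:
            node = stack.pop()
            for nxt in links[node]:
                if nxt not in down and nxt not in component:
                    component.add(nxt)
                    stack.append(nxt)
        seen |= component
        k = len(component)
        reachable += k * (k - 1) // 2  # every pair inside one component is connected
    return reachable / total if total else 0.0


def most_connected(links):
    """The node with the most links: the hub of a star, any node of a regular mesh."""
    return max(links, key=lambda n: len(links[n]))


# Hypothetical star: five leaves, each linked only to hub "H".
STAR = {"H": ["1", "2", "3", "4", "5"]}
STAR.update({leaf: ["H"] for leaf in STAR["H"]})

# Hypothetical mesh: six nodes, three links each.
MESH = {
    "A": ["B", "C", "D"], "B": ["A", "C", "E"], "C": ["A", "B", "F"],
    "D": ["A", "E", "F"], "E": ["B", "D", "F"], "F": ["C", "D", "E"],
}

if __name__ == "__main__":
    for name, links in (("star", STAR), ("mesh", MESH)):
        target = most_connected(links)
        print(name, "after losing", target, "->", connected_fraction(links, {target}))
```

Losing the hub leaves none of the star's surviving leaves able to reach one another, while the mesh loses a single node and keeps every remaining pair connected.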

Baran continued:

You can build very, very tough networks—by tough I mean a high probability of being able to communicate if the two end nodes survive—if you had a redundancy level of about 3. The enemy could destroy 50, 60, 70 percent of the targets or more and it would still work. It's very robust. That was the thing that struck me….

If you were going to build a network with redundancy, that tells you right there how many paths you need. There's no choice. At the same time, you don't have to use high-priced stuff anymore. Because in the analog days both ends of the connection had to work in tandem, and the probability of many things working in tandem without failing was so low that you had to make every part nearly perfect. But if you don't care about reliability any more, then the cost of the components goes way down.1

The net result is a more reliable network built from cheaper, less reliable components. The idea of inexpensive redundancy, as opposed to overengineered single-path fault tolerance, has since taken hold elsewhere in computer science, for example in cloud computing and disk storage.
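Baran's point about components working "in tandem" can be expressed as simple probability arithmetic. The sketch assumes, purely for illustration, that every component fails independently with the same probability and that a circuit crosses a fixed, hypothetical number of hops; neither assumption comes from Baran's memoranda. A single path in series works only if every component works, so its reliability is p raised to the number of hops, while a redundant hop fails only if all k of its alternatives fail.

```python
def series_reliability(p, hops):
    """Probability a single path works when all `hops` components (each up with probability p) must work."""
    return p ** hops


def redundant_reliability(p, k, hops):
    """Probability a route works when each hop has k independent alternatives and needs just one."""
    per_hop = 1 - (1 - p) ** k  # a hop fails only if all k alternatives fail
    return per_hop ** hops


if __name__ == "__main__":
    hops = 50  # hypothetical circuit length
    print(f"expensive parts (p=0.99), single path: {series_reliability(0.99, hops):.3f}")
    print(f"cheap parts (p=0.90), single path:     {series_reliability(0.90, hops):.3f}")
    print(f"cheap parts (p=0.90), 3-way redundant: {redundant_reliability(0.90, 3, hops):.3f}")
```

Under these assumed numbers the three lines come out to roughly 0.6, 0.005, and 0.95: with threefold redundancy, components that are ten times more likely to fail outperform a carefully engineered single path.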

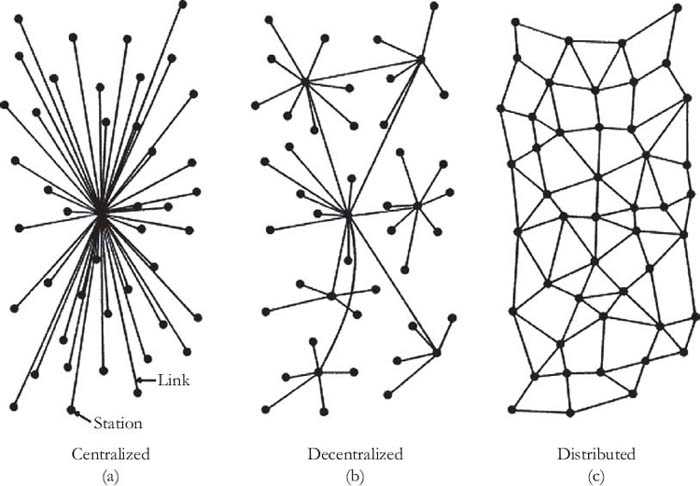

Baran's resulting conceptual picture (Figure 24.1) clearly indicates the robustness of the distributed topology.

FIGURE 24.1 Paul Baran's Original Conceptual Drawing of a Distributed Network Topology

Source: Paul Baran, “On Distributed Communications. I. Introduction to Distributed Communications Networks,” RAND Corporation memorandum RM-3420-PR (August 1964), available at www.rand.org/pubs/research_memoranda/RM3420.html.

Internet Principles

The global Internet that resulted from the work of Baran and other pioneers stood in nearly total contrast to every network built before it. Its closest antecedent, the telephone system, did not prepare anyone for what would soon become possible after the National Science Foundation opened the Internet to commercial use in the early 1990s. The Internet was new in several important ways.

- The Internet was open. Anyone could serve content with minimal investment in infrastructure and a simple, nonproprietary naming convention. Viewing and receiving information was free: Every computer connected to the Internet can in theory connect to any other, independent of distance.

- The Internet's robust, distributed engineering meant that assumptions that held true in the Bell era had to be rethought. Because no single company could build a network of the Internet's scale, it is properly considered a network of networks.

- Accordingly, any given interaction can traverse numerous independently operated networks, so latency cannot be predicted.

- Throughput (often confusingly called bandwidth) varies, sometimes considerably. A household on a cable modem, for example, shares resources with about 150 other homes. After work, traffic can surge as people log on and download movies, and the available bit rate can drop. Similar phenomena occur at other layers in other networks.

- The topology of any given communication is both ad hoc and inconsistent. Packets of information are sent along whatever pathways are available, and a routing decision is made for each packet at each hop in millionths of a second; multiplying the number of packets by the number of “hops” yields a truly large number of decisions for a load the size of a movie. Packets do not necessarily arrive in the order in which they were sent, so reconstructing a coherent human voice, for example, can be more technically challenging than, say, stapling together an e-mail. The location of the desired content relative to its intended recipient thus becomes an important consideration.

- The Internet is not “administered” but rather held together by working understandings and more or less formal technical standards. When things break, finding the source and solution can be difficult, as in denial-of-service and other attacks on universities, governments, and infrastructure.

- The economics of an essentially self-administered network of networks led to considerable business model challenges. In the words of Internet observers David Isenberg and David Weinberger, building on the insight of telecommunications analyst Roxane Googin, “[T]he best network is the hardest one to make money running.”2
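Reassembling packets that arrive out of order can be sketched with sequence numbers. This is a simplified, hypothetical scheme: each packet carries its position and the total packet count, and the receiver orders packets by position and refuses to rebuild a message with gaps. TCP does something similar in spirit, but it numbers bytes rather than packets and also handles acknowledgment and retransmission, which this sketch omits; it also ignores the extra problem of live voice, where a packet that arrives too late is useless.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    seq: int        # position of this chunk in the original message
    total: int      # how many packets make up the whole message
    payload: bytes


def packetize(message, size):
    """Split `message` into chunks of at most `size` bytes, each tagged with its position."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def reassemble(packets):
    """Rebuild the message from packets received in any order; duplicates are ignored."""
    if not packets:
        raise ValueError("no packets received")
    total = packets[0].total
    by_seq = {p.seq: p.payload for p in packets}  # a duplicate simply overwrites its twin
    missing = [seq for seq in range(total) if seq not in by_seq]
    if missing:
        raise ValueError(f"missing packets: {missing}")
    return b"".join(by_seq[seq] for seq in range(total))


if __name__ == "__main__":
    message = b"Packets may take different paths and arrive in any order."
    packets = packetize(message, size=8)
    random.Random(1).shuffle(packets)  # simulate out-of-order arrival
    print([p.seq for p in packets])
    print(reassemble(packets).decode())
```

Sorting by sequence number makes arrival order irrelevant, but a gap is fatal here; a real transport protocol would instead ask the sender to retransmit the missing piece.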

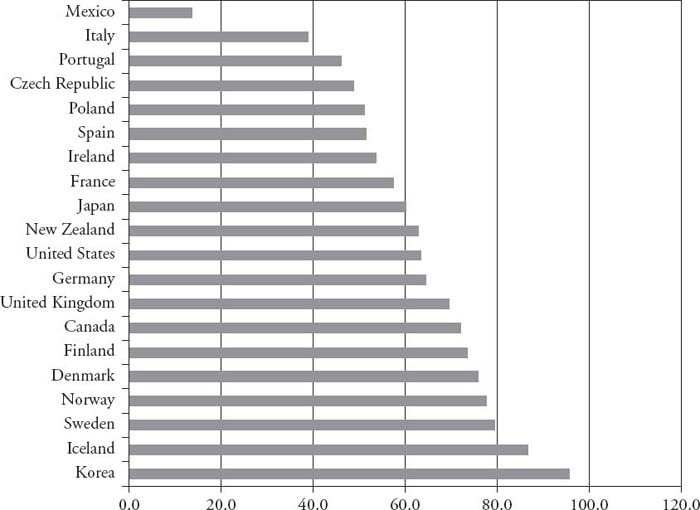

The truth of this paradox can be seen in the difficult path of delivering high-speed Internet access to large numbers of people. Creating the right mix of incentives and protections has been difficult in the United States, which lags most of its peers in deployment and network speed while its consumers pay higher prices. According to OECD data, including the household penetration figures shown in Figure 24.2, the results are consistently disappointing: Relative to other developed countries, the United States has slow speeds, low penetration, and high prices.3

Defenders of the status quo point to low population density, but Iceland, which is less densely populated than the United States, leads the world in deployment. Proponents of government policy point to Korea, though it has roughly the population density of New Jersey and a much more homogeneous culture. Japan has very fast connections, but its economy has not obviously benefited from broadband leadership. Finland, meanwhile, has made broadband a legal right for its citizens and promises 100-megabit-per-second connections by 2015.4 The paradox of the best network is alive and well, as the share prices of Internet carriers attest: No carrier has beaten the Standard & Poor's 500 index in the last five years.

FIGURE 24.2 Wireline Broadband Internet Access, Percent of Households, 2009

Data Source: OECD.

Consequences of Internet Principles

We are still discovering and understanding the many implications of an open, global, digital network of networks. A few of them follow:

- The vast quantity of innovation that resulted from this openness is still stunning, even in retrospect. Without rules or assumptions about how the Internet might be used, people created a wide array of techniques, services, archives, data sets, and other resources.

- The speed of innovation can be understood through analogy. Consider many different railroad companies, each working independently with tracks of slightly different gauges. Network effects are weaker than they could be, and any innovation is stranded on its isolated network. Because the Internet runs on a single set of standards, each innovation can stand on the shoulders of giants and spur still more innovation. The history of search engines in the 1990s is a case in point, as commercial advances in information retrieval far outpaced academic theory. Facebook's leapfrogging of MySpace is another example.

- Vulnerabilities can be truly frightening in their scale. Bots, trojans, and viruses can spread at astonishing speed. Compromised computers from all over the world are mobilized by remote control, out of sight of antivirus software, to invade people's privacy, steal their money, and disable civic and technical infrastructure. Simple human error, such as a command mistyped into the wrong computer, can bring entire systems down.

- Once everything becomes bits, the Internet does a superb job of moving them. Telephone networks transported voice, then fax. Radio stations broadcast music and voice. Television carried video, but in a different way from movie theaters. Now the same edge device (a PC or smartphone, for example) can access vast quantities of news, music, stock trades, books, photographs, maps, or geographic coordinates. Furthermore, these many information types can be readily intermingled.

- Business models for the Internet are particularly tricky. Traditional control of choke points and toll gates, whether through patents, geography, brand, or other means, can be difficult to enact and to enforce. Because the network of networks is inherently robust, it routes around choke points as a matter of course.

- It sounds obvious, but the Internet supports phenomena with strong network effects. E-mail (and spam), social networking of the LinkedIn or Facebook sort, large-scale distributed self-organization (e.g., Wikipedia), and eBay are all examples of phenomena that could not have taken hold on any prior network structure.

- Place and space diverge. The physical location of a resource seldom matters for information purposes, as long as one knows its virtual whereabouts. Facebook and Google operate huge data centers, but their physical locations matter little to the services' users.

Looking Ahead

Despite the problems with wired broadband access, particularly in the United States, the rapid rise of high-speed wireless Internet service promises broader access, more innovation, and new unintended consequences. The science of networks, both social and technical, is advancing rapidly with developments in economics, physics, biology, and sociology.5 Routers* get bigger and faster, fiber-optic cable is still being steadily deployed, innovations in optics are increasing the performance and carrying capacity of the core infrastructure, and innovations in business models mean more people can do more things, connected to more people, each year. For all of its paradoxes, challenges, and vulnerabilities, the Internet remains a cultural and engineering marvel, in many ways the salient fact of modern life across much of the world.

Notes

1. Stewart Brand, “Founding Father,” Wired (March 2001), www.wired.com/wired/archive/9.03/baran.html?pg=1&topic=&topic_set=. Reproduced with permission.

2. David Isenberg and David Weinberger, “The Paradox of the Best Network,” n.d., www.netparadox.com/.

3. OECD Broadband Portal, www.oecd.org/document/54/0,3343,en_2649_34225_38690102_1_1_1_1,00.html.

4. “Finland Makes Broadband a ‘Legal Right,’” BBC News, July 1, 2010, www.bbc.co.uk/news/10461048.

5. See, for example, Albert-Laszlo Barabasi, Linked: The New Science of Networks (New York: Perseus, 2002).

* The big, expensive specialized computers that forward packets across the Internet.