CHAPTER 29

Analytics

Thanks in part to vigorous efforts by vendors (led by IBM) to bring the idea to a wider public, analytics is coming closer to the mainstream. Whether in ESPN ads for fantasy football, or election-night slicing and dicing of vote and poll data, or the ever-broadening influence of quantitative models for stock trading and portfolio development, numbers-driven decisions are no longer the exclusive province of people with hard-core quantitative skills backed by expensive, often proprietary infrastructure.

Not surprisingly, the definition of “analytics” is completely problematic. At the simple end of the spectrum, one Australian firm asserts that “[a]nalytics is basically using existing business data or statistics to make informed decisions.”1 Confronting market confusion, Gartner market researchers settled on a similarly generic and less elegant assertion: “Analytics leverage data in a particular functional process (or application) to enable context-specific insight that is actionable.”2

To avoid terminological conflict, let us merely assert that analytics uses statistical and other methods of processing to tease out business insights and decision cues from masses of data. In order to see the reach of these concepts and methods, consider a few examples drawn at random:

- The “flash crash” of May 2010 focused attention on the many forms and roles of algorithmic trading of equities. While firm numbers on the practice are difficult to find, it is telling that the regulated New York Stock Exchange has fallen from executing 80% of trades in its listed stocks to only 26% in 2010, according to Bloomberg.3 The majority occurs in other trading venues, many of them essentially “lights-out” data centers; high-frequency trading firms, employing a tiny percentage of the people associated with the stock markets, generate 60% of daily U.S. trading volume of roughly 10 billion shares.

- In part because of the broad influence of Michael Lewis's best-selling book Moneyball,4 quantitative performance analysis has moved from its formerly geeky niche at the periphery to become a central facet of many sports. MIT holds an annual conference on sports analytics that draws both sell-out crowds and A-list speakers. Statistics-driven fantasy sports continue to rise in popularity all over the world as soccer, cricket, and rugby join the more familiar U.S. staples of football and baseball.

- Social network analysis, a lightly practiced subspecialty of sociology only two decades ago, has surged in popularity within the intelligence, marketing, and technology industries. Physics, biology, economics, and other disciplines all are contributing to the rapid growth of knowledge in this domain. Facebook, al Qaeda, and countless start-ups all require new ways of understanding cell phone, GPS, and friend-/kin-related traffic.

Why Now?

Perhaps as interesting as the range of its application are the many converging reasons for the rise of interest in analytics. Here are ten, from perhaps a multitude of others:

- Total quality management and six sigma programs trained a generation of production managers to value rigorous application of data. That six sigma has been misapplied and misinterpreted there can be little doubt, but the successes derived from a data-driven approach to decisions are informing today's wider interest in statistically sophisticated forms of analysis within the enterprise.

- Quantitative finance applied ideas from operations research, physics, biology, supply chain management, and elsewhere to problems of money and markets. In a bit of turnabout, many data-intensive techniques, such as portfolio theory, are now migrating out of formal finance into day-to-day management.

- As Google CEO Eric Schmidt said in August 2010, we now create in two days as much information as humanity did from the beginning of recorded history until 2003. That's measuring in bits, obviously, and as such the estimate is skewed by the rise of high-resolution video, but the overall point is valid: People and organizations can create data far faster than any human being or process can assemble, digest, or act on it. Cell phones, seen as both sensor and communication platforms, are a major contributor, as are enterprise systems and image generation. More of the world is instrumented, in increasingly standardized ways, than ever before: Bar codes and radio-frequency identification (RFID) tags on more and more items, Facebook status updates, GPS, and the ZigBee technical specification and other “Internet of things” efforts merely begin a list.

- Even as we as a species generate more data points than ever before, Moore's law and its corollaries (such as Kryder's law of hard discs, which have periods of intensive growth without the long-term stability of microprocessor improvement5) are creating a computational fabric which enables that data to be processed more cost-effectively than ever before. That processing, of course, creates still more data, compounding the glut.

- After the reengineering/ERP push (which generated large quantities of actual rather than estimated operational data in the first place), after the Internet boom, and after the largely failed effort to make services-oriented architectures a business development theme, technology vendors are putting major weight behind analytics. It sells services, hardware, and software; it can be used in every vertical segment; it applies to every size of business; and it connects to other macro-level phenomena: smart electrical grids, carbon footprints, healthcare cost containment, e-government, marketing efficiency, lean manufacturing, and so on. In short, many vendors have good reasons to emphasize analytics in their go-to-market efforts. Investments reinforce the commitment: SAP's purchase of Business Objects was its biggest acquisition ever, while IBM, Oracle, Microsoft, and Google have also spent billions buying capability in this area.

- Despite all the money spent on ERP, on data warehousing, and on “real-time” systems, most managers still cannot fully trust their data. Multiple spreadsheets document the same phenomena through different organizational lenses, data quality in enterprise systems inconsistently inspires confidence, and timeliness of results can vary widely, particularly in multinationals. Executives across industries have the same lament: For all of our systems and numbers, we often don't have a firm sense of what's going on in our company and our markets.

- Related to this lack of confidence in enterprise data, risk awareness is on the rise in many sectors. Whether in product provenance (e.g., Mattel), recall management (Toyota, Cargill, or CVS), exposure to natural disasters (Allstate, Chubb), credit and default risk (anyone), malpractice (any hospital), counterparty risk (Goldman Sachs), disaster management, or fraud (Enron, Satyam, Société Générale, UBS), events of the past decade have sensitized executives and managers to the need for rigorous, data-driven monitoring of complex situations.

- Data from across domains can be correlated through such ready identifiers as GPS location, credit reporting, cell phone number, or even Facebook identity. The “like” button, by itself, serves as a massive spur to interorganizational data analysis of consumer behavior at a scale never before available to sampling-driven marketing analytics. What happens when a “sample” population includes 100 million individuals?

- Visualization is improving. While the spreadsheet is ubiquitous in every organization and will remain so, the quality of information visualization has improved over the past decade. This may result primarily from the law of large numbers (1% of a boatload is bigger than 1% of a handful), or it may reflect the growing influence of a generation of skilled information designers, or it may be that such tools as Mathematica and Adobe Flex are empowering better number pictures, but in any event, the increasing quality of both the tools and the outputs of information visualization reinforces the larger trend toward sophisticated quantitative analysis.

- Software as a service (SaaS) can put analytics into the hands of people who lack the data sets, the computational processing power, and the rich technical training formerly required for hard-core number crunching.

Some examples follow.

Successes, Many Available as SaaS

- Financial charting and modeling continue to migrate down-market: Retail investors can now use Monte Carlo simulations and other tools well beyond the reach of individuals at the dawn of online investing in 1995 or thereabouts.

- Airline ticket prices at Microsoft's Bing search engine are rated against a historical database, so purchasers of a particular route and date are told whether to buy now or wait.

- Customer segmentation can grow richer and more sure-footed as data quality and processing tools both improve. Bananas have, in recent years, been the biggest-selling item at Wal-Mart, for example, so their merchandising has evolved to reflect market basket analysis: Seeing that bananas and breakfast cereal often showed up together, the chain experimented with putting bananas (as well as other items) in multiple locations—adding a display in the cereal aisle, for example.

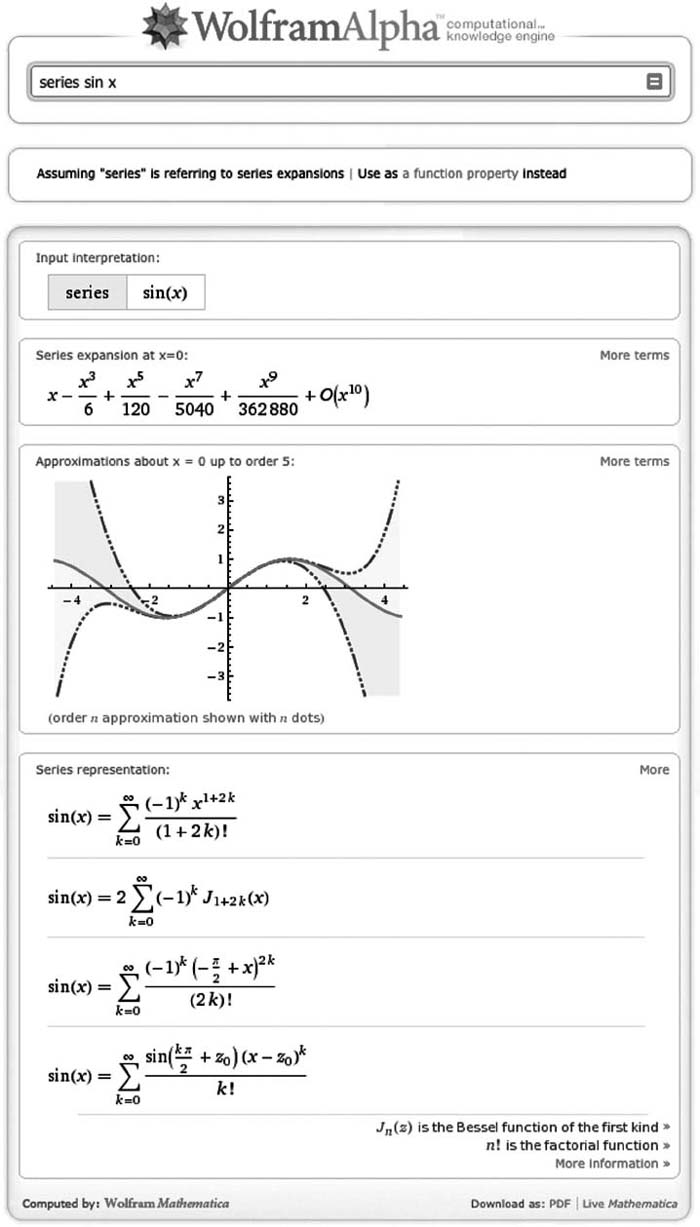

- Wolfram Alpha (Figure 29.1) is taking a search-engine approach to calculated results: A stock's price/earnings ratio is readily presented on a historical chart, for example. Scientific calculations currently are handled more readily than natural-language queries, but the tool's potential is unbelievable.

FIGURE 29.1 Wolfram Alpha Delivers a Computation Rather than a Search Engine Interface

Source: Wolfram Alpha LLC.

- Google Analytics brings marketing tools formerly unavailable anywhere to the owner of the smallest business: Anyone can slice and dice ad- and revenue-related data from dozens of angles, as long as they relate to the search engine in some way.

- Fraud detection through automated, quantitative tools holds great appeal because of both labor savings and rapid payback. Health and auto insurers, telecom carriers, and financial institutions are investing heavily in these technologies.
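The market basket analysis mentioned in the bananas-and-cereal example can be illustrated with a minimal sketch. The baskets below are invented purely for illustration and do not come from any retailer; the function name `pair_metrics` is likewise hypothetical. The sketch computes three common measures for a pair of items: support (how often both appear together), confidence (how often the second appears given the first), and lift (how much more often they co-occur than independence would predict).

```python
# Hypothetical shopping baskets, invented purely for illustration.
BASKETS = [
    {"bananas", "cereal", "milk"},
    {"bananas", "cereal"},
    {"bananas", "bread"},
    {"cereal", "milk"},
    {"bananas", "cereal", "bread"},
    {"milk", "bread"},
]


def pair_metrics(baskets, a, b):
    """Return (support, confidence, lift) for the rule 'a implies b'."""
    n = len(baskets)
    count_a = sum(1 for basket in baskets if a in basket)
    count_b = sum(1 for basket in baskets if b in basket)
    count_ab = sum(1 for basket in baskets if a in basket and b in basket)
    if n == 0 or count_a == 0 or count_b == 0:
        raise ValueError("both items must appear in at least one basket")
    support = count_ab / n              # share of baskets holding both items
    confidence = count_ab / count_a     # share of a-baskets that also hold b
    lift = confidence / (count_b / n)   # above 1 suggests positive association
    return support, confidence, lift


if __name__ == "__main__":
    support, confidence, lift = pair_metrics(BASKETS, "bananas", "cereal")
    print(f"support={support:.2f} confidence={confidence:.2f} lift={lift:.3f}")
```

With these made-up baskets, bananas and cereal appear together in half of all baskets and the lift is slightly above 1, the kind of signal that might prompt a merchandising experiment. A real analysis would need far more data and a test of whether the association is stable.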

Practical Considerations: Why Analytics Is Still Hard

For all the tools, all the data, and all the computing power, getting numbers to tell stories is still difficult. There are a variety of reasons for the current state of affairs.

First, organizational realities mean that different entities collect data for their own purposes, label and format it in often-nonstandard ways, and hold it locally, usually in Excel but also in e-mails, PDFs, or production systems. Data synchronization efforts can be among the most difficult of a chief information officer's tasks, with uncertain payback. Managers in separate but related silos may ask the same question using different terminology or see a cross-functional issue through only one lens.

Second, skills are not yet adequately distributed. Database analysts can type SQL* queries but usually don't have the managerial instincts or experience to probe the root cause of a business phenomenon. Statistical numeracy, often at a high level, remains a requirement for many analytics efforts; knowing the right tool for a given data type, or business event, or time scale takes experience, even assuming a clean data set. For example, correlation does not imply causation, as every first-year statistics student knows, yet temptations to let it do so abound, especially as electronic scenarios outrun human understanding of ground truths.
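The caution that correlation does not imply causation can be made concrete with a small simulation. The sketch below is a constructed example, not drawn from any real data set: a hidden factor (here called store traffic, a hypothetical name) drives two sales series that have no direct effect on each other. The two series correlate strongly, yet the correlation all but vanishes once the hidden factor's known contribution is subtracted.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def simulate(n=5000, seed=42):
    """Return (raw, adjusted) correlations for two confounded series."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    # Hidden common cause: simulated store traffic (an assumption).
    traffic = [rng.gauss(0, 1) for _ in range(n)]
    # Each series depends on traffic plus its own independent noise;
    # neither series influences the other.
    sales_a = [t + rng.gauss(0, 0.5) for t in traffic]
    sales_b = [t + rng.gauss(0, 0.5) for t in traffic]
    raw = pearson(sales_a, sales_b)
    # Remove the confounder's contribution, which is known here
    # only because we built the data ourselves.
    resid_a = [a - t for a, t in zip(sales_a, traffic)]
    resid_b = [b - t for b, t in zip(sales_b, traffic)]
    adjusted = pearson(resid_a, resid_b)
    return raw, adjusted


if __name__ == "__main__":
    raw, adjusted = simulate()
    print(f"raw correlation: {raw:.2f}, after adjusting: {adjusted:.2f}")
```

In practice the confounder is rarely known in advance or measured this cleanly, which is why experience and domain knowledge matter as much as the tooling.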

Third, odd as it sounds in an age of assumed infoglut, getting the right data can be a challenge. Especially in extended enterprises but also in extrafunctional processes, measures are rarely sufficiently consistent, sufficiently rich, or sufficiently current to support robust analytics. Importing data to explain outside factors adds layers of cost, complexity, and uncertainty: Weather, credit, customer behavior, and other exogenous factors can be critically important to either long-term success or day-to-day operations, yet representing these phenomena in a data-driven model can pose substantial challenges. Finally, many forms of data do not readily plug into the available processing tools: Unstructured data, such as e-mails or text messages, is growing at a rapid rate, adding to the complexity of analysis.

Fourth, data often relates to people, and people may not willingly give it up. Loyalty-club bar codes have been shared (often by cashiers), smart electrical metering is being viewed as a privacy invasion in some quarters,6 and tools for online privacy (cookie blockers, adware removers, etc.) are increasingly popular.

In certain situations, the algorithmic sensemaking available in common analytical tools is useful in uncovering and providing relevant information, wherever it may have originated. The cost and availability of such information are improving: In oil and gas, for example, information technology has helped drop the cost of a three-dimensional seismic map of the subsurface from $8 million per square kilometer in 1980, to $1 million in 1990, to $90,000 in 2005. But even the best analytics cannot reliably replace the human intelligence needed to draw the right conclusions from the information. Furthermore, not every quantitative question has a calculable answer.

Looking Ahead

Getting numbers to tell stories requires the ability to ask the right question of the data, assuming the data is clean and trustworthy in the first place. This skill requires a blend of process knowledge, statistical numeracy, time, narrative facility, and both rigor and creativity in proper proportion. Not surprisingly, managers who combine these traits are seldom technicians and are difficult to find in many workplaces. For what analytics actually delivers to match its promise, the biggest breakthroughs will likely come in education and training rather than algorithms or database technology.

Notes

1. www.onlineanalytics.com.au/glossary.

2. Jeremy Kirk, “‘Analytics’ Buzzword Needs Careful Definition,” InfoWorld, February 7, 2006, www.infoworld.com/t/data-management/analytics-buzzword-needs-careful-definition-567.

3. “NYSE May Merge With German Rival,” Bloomberg News, February 10, 2011, www.fa-mag.com/fa-news/6815-nyse-may-merge-with-german-rival.html.

4. Michael Lewis, Moneyball (New York: W.W. Norton & Company, 2011).

5. “Kryder's Law,” MattsComputerTrends.com, www.mattscomputertrends.com/Kryder%27s.html.

6. “PG&E Smart Meter Problem a PR Nightmare,” smartmeters.com, November 21, 2009, www.smartmeters.com/the-news/690-pgae-smart-meter-problem-a-pr-nightmare.html.

*Structured Query Language is the language of database interrogation. An example would be:

    UPDATE a
    SET a.[updated_column] = updatevalue
    FROM articles a
    JOIN classification c
      ON a.articleID = c.articleID
    WHERE c.classID = 1