Chapter 2. Setting Up a Project Using Maven 2

Maven and the Development Build Process

In this chapter, we look at the second major player in the Java build tools arena: Maven.[*] Maven is an increasingly popular open source build management tool for enterprise Java projects, designed to take much of the hard work out of the build process. Maven uses a declarative approach, in which the project structure and contents are described, rather then the task-based approach used in Ant or in traditional Make files or shell scripts. Maven also strongly promotes the use of standard directory structures and a well-defined build lifecycle. This helps enforce company-wide development standards and reduces the time needed to write and maintain build scripts.

Maven’s authors describe Maven as a “project management framework,” and it is indeed much more than just a simple build scripting tool. Maven’s declarative, standards-based approach to project build management simplifies many aspects of the project lifecycle. As well as catering for compiling, building, testing, and deploying your application with a minimum of effort, Maven offers a number of other key advantages:

Project dependencies are declared and managed in a clean, transparent way, which reduces the risk of dependency-related errors and makes for better documentation.

Maven lets you easily generate useful, high-quality, technical documentation and reports about the current state of the project and project team members. Note that we aren’t taking about a good user manual, which is an altogether different issue, but, rather, about technical documentation, written by developers for developers. In many technical projects, decent technical documentation is woefully inadequate. It is nevertheless a vital part of modern software development, especially when dislocated teams are involved.

Maven proposes a clear standard directory layout for source code, project resources and configuration files, generated output, and project documentation. This makes it easier to understand new Maven projects, and also makes the Maven build scripts cleaner and simpler.

Maven integrates smoothly with source code repositories, continuous integration servers, and issue tracking systems.

The Maven build cycle is flexible: it is easy to integrate additional build tasks, using existing Maven plug-ins or by writing Ant scriptlets.

All of these points make Maven an invaluable tool for Java development teams. Indeed, Maven touches so many parts of the SDLC that this book contains two distinct chapters on the subject. In this chapter, we will look at the basics of using Maven in the real world. In Chapter 29, we will focus on how to generate a technical web site for your project using Maven.

Maven and Ant

Without a doubt, the most popular and most well-known build tool in the Java sphere is Ant. Ant (see Chapter 1) is a fine tool and a hugely successful open source project. Millions of Java developers are familiar with it. And, as we will see throughout the rest of the book, there is hardly a Java tool in existence that doesn’t integrate with Ant.

However, when you write a lot of Ant build scripts, you find yourself asking yourself (and other teamg members) the same questions over and over again: Where will the source code go? What about the unit tests? How do we handle dependencies? How will we bundle up the deliverable application? What shall we call the main targets? Individually, Ant lets you deal with each of these tasks with a high degree of flexibility and power. However, you still have to write the tasks from scratch or duplicate and modify an Ant script from a previous project. And when you move to a new project or company, you need to ask these questions once again to (begin to) understand the build process in place.

Many (although not all) projects do follow fairly common and well-known patterns. A lot of what you need to configure in your build process is pretty much run-of-the-mill. It always seems a shame to redo the work again for each new project.

Maven can help you here. Maven takes a lot of the grunt work out of the build process, and tries to lever the combined experience and best practice of a large community of developers. By adhering to a certain number of conventions and best practices, Maven lets you remove the drudgery of all the low-level tasks in your build scripts. In the rest of this chapter, we will see how.

Installing Maven

In this chapter, we will go through how to install Maven 2 on various platforms. The basic installation process is straightforward, and is the same for all platforms. Maven is a pure Java tool, so first of all you need to ensure that there is a recent version of Java (1.4 or later) on your machine. Then, download the latest distribution from the Maven download site[*] and extract it into an appropriate directory. Finally, just add the bin subdirectory to the system path.

If you are familiar with installing Java tools, this should be enough to get you started. In the rest of this chapter, we discuss some more detailed environment-specific considerations.

Installing Maven on a Unix Machine

In this chapter, we run through how to install Maven into a Unix environment.

Installing Maven in a Unix-based environment is a relatively simple task. Download the latest version in the format of your choice, and extract it to an appropriate directory. Conventions vary greatly from one system to another, and from one system administrator to another: I generally place the maven installation in a nonuser-specific directory such as /usr/local, as shown here:

# cd /usr/local # tar xvfz maven-2.0.7-bin.tar.gz # ls

This

will extract the maven installation in a directory called maven-2.0.7. For convenience, on a Unix

system, I generally create a symbolic link to this directory to make

upgrades easier to manage:

# ln -s maven-2.0.7 maven # ls -al total 16 drwxr-xr-x 3 root root 4096 2006-08-06 13:18 . drwxr-xr-x 53 root root 4096 2006-07-20 21:32 .. lrwxrwxrwx 1 root root 11 2006-08-06 13:17 maven -> maven-2.0.7 drwxr-xr-x 6 root root 4096 2006-08-06 13:17 maven-2.0.7

Now just add the maven/bin directory to

your environment path. Typically, you will set this up in one of your

environment initialization scripts (for example, if you are using Bash, you

could place this configuration in the ~/.bashrc file if you just need to set it up for your

account, or in /etc/bashrc if you want to set it up for all users on this

machine). Don’t forget to make sure that the JAVA_HOME environment variable is defined as well. Here is a

typical example:

PATH=$PATH:/usr/local/maven/bin JAVA_HOME=/usr/lib/jvm/java export PATH JAVA_HOME

Now check that it works by running the maven command from the command line:

# mvn --version Maven version: 2.0.7

Installing Maven on a Windows Machine

Installing Maven on a Windows machine is also relatively straightforward, although the application still lacks the graphical installation package familiar to Windows users. First, download and unzip the Maven distribution into an appropriate directory. Most Windows machines will have a graphical compression utility that you can use to extract the ZIP file, although if you are stuck, you can always use the Java jar command-line tool, as shown here:

C:> jar -xf maven-2.0.4-bin.zip

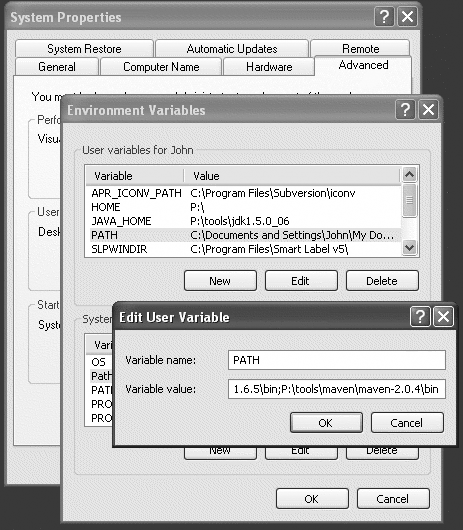

In Figure 2-1, Maven has been installed in the P: oolsmavenmaven-2.0.4 directory, although

of course you can install it anywhere that suits your particular needs. A

more conventional choice might be something like C:Program FilesApache Software Foundationmaven-2.0.4.

Because it is a Java application, Maven also expects the JAVA_HOME environment variable to be correctly

defined.

Next add the Maven bin directory to your PATH user variable (Figure 2-1). You will need to open a new console window to see the new path taken into account.

Now, check that Maven is correctly installed by running mvn --version:

C:>mvn --version Maven version: 2.0.4

Now you should have a working Maven environment ready to go!

Declarative Builds and the Maven Project Object Model

An Introduction to Declarative Build Management

Before we look at how to create and work with projects in Maven, we need to discuss some of the basics. The most fundamental of these is the Maven Project Object Model, or POM, which we will look at in this chapter. In the process, we also will cover some important basic principles of Maven development, as well as a lot of the key features of Maven. As many, if not most, new Maven users are already familiar with Ant, we will look at how the Maven approach differs from the one used by Ant, and how this can help simplify your builds.

For Ant users, the Maven philosophy can take a little getting use to.

Unlike Ant, which is very much task-oriented, Maven uses a highly declarative approach to project builds. In Ant, for example, you

list the tasks that must be performed to compile, test, and deliver your

product. In Maven, by contrast, you describe your

project and your build process, relying on conventions and sensible default

values to do much of the grunt work. The heart of a Maven 2 project, the

POM, describes your project, its structure, and its dependencies. It

contains a detailed description of your project, including information about

versioning and configuration management, dependencies, application and

testing resources, team members and structure, and much more. The POM takes

the form of an XML file (called pom.xml

by default), which is placed in your project home directory.

Let’s look at a practical example. One of the most fundamental parts of any Java build process involves compiling your Java classes. In a typical Ant build, you would use the <javac> task (see Compiling Your Java Code in Ant) to compile your classes. This involves defining the directory or directories containing your Java source code, the directory into which the compiled classes will be placed, and creating a classpath that contains any dependencies needed to compile your classes. Before invoking the compiler, you need to be sure to create the target directory. The corresponding Ant script might look something like this:

<project name="killer-app">

...

<property name="src.dir" location="src/main/java"/>

<property name="target.dir" location="target/classes"/>

...

<path id="compile.classpath">

<fileset dir="lib">

<include name="**/*.jar"/>

</fileset>

</path>

...

<target name="init">

<mkdir directory="${target.dir}"/>

</target>

<target name="compile" depends="init" description="Compile the application classes">

<javac srcdir="${src.dir}"

destdir="${target.dir}"

classpathref="compile.classpath"

source="1.5"

target="1.5"

/>

</target>

</project>To compile your application, you would invoke the “compile” target:

$ ant compile

In Maven, the build file for this project would be somewhat different. First of all, you would not need to declare the source and target directories. If you do not say otherwise, Maven will assume that you intend to respect the standard Maven directory structure (see The Maven Directory Structure), using the well-known principle of “Convention Over Configuration.” Nor do you need to create the target directory manually before compiling your code—Maven will do this for you automatically. In fact, the only thing that we need to specify is that our project code is written using Java 5 language features, for a Java 5 JVM. Maven uses components called plug-ins to do most of the serious work. The plug-in that handles Java compilation is called maven-compiler-plugin. So, to set up Java compilation in our Maven script, all we need to do is to configure this plug-in, which we do as follows:

<project...>

...

<build>

<plug-ins>

<!-- Using Java 5 -->

<plugin>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>1.5</source>

<target>1.5</target>

</configuration>

</plugin>

</plug-ins>

</build>

...

</project>Note that had we been using the default javac source and target values, even this configuration would not have been needed.

The one thing that we glossed over here is the Maven equivalent of the

lib directory. In Ant, the libraries

required by a project are stored in a local project directory, often called

lib. In the above example, we defined

a classpath called compile.classpath,

which included all the JAR files in this directory.

Maven uses a totally different approach. In Maven, JAR files are rarely, if ever, stored in the project directory structure. Instead, dependencies are declared within the build script itself.

An extract from a list of Maven dependencies is shown here:

<project...>

...

<!-- PROJECT DEPENDENCIES -->

<dependencies>

<!-- Hibernate -->

<dependency>

<groupId>org.hibernate</groupId>

<artifactId>hibernate</artifactId>

<version>3.2.4.</version>

</dependency>

<!-- Log4j -->

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.14</version>

</dependency>

...

</dependencies>

</project>Dependency management is a major feature of Maven 2, and we look at it in much more detail in Managing Transitive Dependencies“ in ch02-dependency-management.

The third part of our POM file contains information that is largely irrelevant for the task at hand (compiling our Java class), but will come in handy later on. At the start of each Maven POM file, you will find a list of descriptive elements describing things like the project name, version number, how it is to be packaged, and so on. This is shown here:

<project...>

<!-- PROJECT DESCRIPTION -->

<modelVersion>4.0.0</modelVersion>

<groupId>com.mycompany</groupId>

<artifactId>myapp</artifactId>

<packaging>jar</packaging>

<name>Killer application</name>

<version>1.0</version>

<description>My new killer app</description>

...

</project>Here is the complete corresponding Maven build file:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org

/maven-v4_0_0.xsd">

<!-- PROJECT DESCRIPTION -->

<modelVersion>4.0.0</modelVersion>

<groupId>com.mycompany</groupId>

<artifactId>myapp</artifactId>

<packaging>war</packaging>

<name>Killer application</name>

<version>1.0</version>

<description>My new killer app</description>

<!-- BUILD CONFIGURATION -->

<build>

<plug-ins>

<plugin>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>1.5</source>

<target>1.5</target>

</configuration>

</plugin>

</plug-ins>

</build>

<!-- PROJECT DEPENDENCIES -->

<dependencies>

<!-- Hibernate -->

<dependency>

<groupId>org.hibernate</groupId>

<artifactId>hibernate</artifactId>

<version>3.2.4.</version>

</dependency>

<!-- Log4j -->

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.14</version>

</dependency>

...

</dependencies>

</project>So a Maven build file is not necessarily any shorter than an Ant build file for an equivalent project. But the nature of the information it contains is very different. Ant users will notice that there is no sign of any target-like structures, or any indication of what goals can be run:[*]

$ mvn compile

In

a similar manner, this same build file can be used to run the application’s

unit tests, stored by convention in the src/test/java directory, by invoking the “test” goal:

$ mvn test

And this same build file can be used to bundle up a JAR file containing the compiled classes, via the “package” goal:

$ mvn package

There are many other goals. We will cover the main ones in the remainder of this chapter, and in the other Maven-related chapters of this book.

This illustrates another of Maven’s strong points: all of these goals are standard Maven goals and will work in a similar way on any Maven project.

As can be gleaned here, one of the guiding principles of Maven is to use

sensible default values wherever possible. This is where the Maven

conventions play an important role. Maven projects are expected to respect a

certain number of conventions, such as placing your main source code in the

src/main/java directory and your test

code in the src/main/test directory (see The Maven Directory Structure). These conventions are largely defined in a special POM file, the

so-called Super POM, from which every POM is extended. In practice, this

means that if you respect the standard Maven conventions, you can get away

with surprisingly little in your POM file.

Even so, a typical real-world POM file can get pretty complex. In the remainder of this chapter, we will go through the main areas of the POM file, in order of appearance. This approach is intentionally superficial: because of the central nature of the POM file in all Maven projects, we will be coming back to various sections in much more detail as we look at other topics later on.

Project Context and Artifacts

The first part of a POM file basically introduces the project and its context, including the group and artifact IDs that uniquely identify this project in the Maven world, as well as how the artifact is packaged (jar, war, ear…), and the current version number. This is a small but crucial part of the Maven POM file, in which you define many key aspects of your project. A typical example is shown here:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd"> <modelVersion>4.0.0</modelVersion> <groupId>com.mycompany.accounting</groupId> <artifactId>accounting-core</artifactId> <packaging>jar</packaging> <version>1.1</version> ...

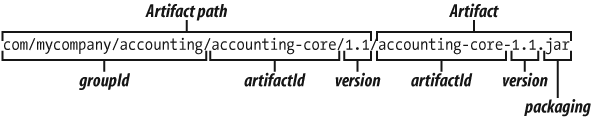

The information in this section is used to identify the project uniquely and, in particular, the artifact that it produces. This is one of the hallmarks of Maven, and it is what enables you to define very precisely your projects dependencies (see Managing Transitive Dependencies,” in Dependency Management in Maven 2). Indeed, the information in this section allows Maven to derive a unique path to the artifact generated by this project. For example, in this case, the unique path to this artifact is illustrated in Figure 2-2.

Let’s look at how Maven does this in a little more detail.

The <groupId> element is supposed to identify a particular project or set of libraries within a company or organization. By convention, it often corresponds to the initial part of the Java package used for the application classes (e.g., “org.apache.maven” for Maven projects, “org.springframework” for the Spring libraries, and so on), although this is not always the case. When the artifact is deployed to a Maven repository, the groupId is split out into a matching directory structure on the repository.

The artifactId represents the actual name of the project. This, combined with the groupId, should uniquely identify the project.

Every project also has a <version> element, which indicates the current version number. This number usually refers to major releases (“Hibernate 3.2.4,” “Spring 2.0.5,” and so on), as opposed to specific build numbers, which are different for each build. Each version has its own directory on the Maven repository, which is a subdirectory of the project directory.

So, in the above example, the generated artifact would be stored on the

Maven repository in a directory called com/mycompany/accounting/accounting-core/1.1.

When it comes to finally generating a deliverable package, Maven supports many different file formats.

At the time of this writing, supported package types included pom, jar,

maven-plugin, ejb, war, ear, rar, and par. As the name suggests, you use the

<packaging> element to indicate the packaging type. For

example, in this listing, Maven will generate a file called accounting-core-1.1.jar. The “jar” extension

comes from the <packaging> element.

Maven saves you the hassle of knowing exactly what files need to go into the

delivered package and what files were delivered. All you need to do is

provide the type and Maven will do the rest.

Finally, there is an optional element called <classifier> that can be used to distinguish different distributions of the same version of a product. For example, you might have a distribution for Java 1.4, and a different distribution for Java 5. The TestNG unit testing library does just this. The project description for the Java 5 version of this product might contain something like this:

<groupId>org.testng</groupId> <artifactId>testng</artifactId> <packaging>jar</packaging> <version>5.5</version> <classifier>jdk15</classifier>

This would produce a file called testng-5.1-jdk15.jar. The equivalent version for Java 1.4

would be testng-5.1-jdk14.jar.

A Human-Readable Project Description

The next section of the POM file is largely for human consumption, and contains information that is primarily used to generate the Maven project web site. It can contain details such as the name of the project, the URL of the project home page (if one exists), details on the issue tracking system, the Continuous Integration system, and/or the SCM system, as well as details such as the year of inception and the development team:

...

<name>Accounting Core API</name>

<url>http://myproject.mycompany.com</url>

<scm>

<connection>scm:svn:http://devserver.mycompany.com/svn/accounting

/accounting-core/trunk/accounting-core</connection>

<developerConnection>scm:svn:http://devserver.mycompany.com/

svn/accounting/accounting-core/trunk/accounting-core</developerConnection>

<url>http://devserver.mycompany.com/trac/accounting-core/browser

/accounting/accounting-core/trunk/accounting-core</url>

</scm>

<issueManagement>

<system>trac</system>

<url>http://devserver.mycompany.com/trac/accounting-core</url>

</issueManagement>

<inceptionYear>2006</inceptionYear>

...Most of this information is project documentation, and it is a recommended practice to make it as complete as possible. Some of it, such as the Issue Tracking and CI system details, may be used by Maven to generate appropriate links in the Maven site. For common version control systems such as CVS and Subversion, Maven uses the SCM section to generate a page of instructions on how to check out the project, which is very useful for new team members. Also, Continuous Integration servers such as Continuum (see Chapter 5) can read the SCM and CI details when you import the project onto the Continuous Integration server.

Declaring your Continuous Integration Server

If your project uses a continuous integration tool of some sort, such as Continuum (see Chapter 5) CruiseControl (see Chapter 6), you can tell people about it in the <ciManagement> tag, as shown in the code below. (If your project does not using such a tool, consider using one!)

<ciManagement>

<system>Continuum</system>

<url>http://integrationserver.wakaleo.com/continuum</url>

<notifiers>

<notifier>

<type>mail</type>

<address>[email protected]</address>

</notifier>

</notifiers>

</ciManagement>Maven 2 integrates well with Continuum: you can install a Maven 2 project

onto a Continuum server just by providing the pom.xml file (see Adding a Maven Project). Notifiers

declare ways that particular users can be sent notification of build results

on the CI server. In Continuum, they can be set up both from the Continuum

administration web site (Setting Up Notifiers) or from within

the Maven POM file.

Defining the Development Team

People like to know who they are working with, especially these days, when a project team can be spread across organizations and continents. In the developers section, you list details about your project team members. The time zone field is useful for international teams; this field is offset from Greenwich Mean Time (GMT), or London time, and lets people see what time it is wherever the team member is located. For example, –5 is for New York time, +1 is for Paris, and +10 is for Sydney.

A typical developer definition is shown here:

...

<developers>

<developer>

<id>smartj</id>

<name>John Smart</name>

<email>[email protected]</email>

<roles>

<role>Developer</role>

</roles>

<organization>ACME NZ</organization>

<timezone>+12</timezone>

</developer>

...

</developers>

...Although totally optional, listing your development team in your POM file can be worthwhile for several reasons. This information will be used to create a team directory page on the Maven generated site. The Maven SCM plug-ins can use the developer id to map changes made in the source code repository against developer names. And, if you are using the Continuum Continuous Integration server (see Chapter 5), Continuum can pick up the developer email addresses and use them for email notifications.

Managing Dependencies

One of the most powerful Maven features is the way it handles dependencies. A typical medium-size Java project can require dozens, or even hundreds, of JAR files. Without a strict dependency management strategy, this can quickly become out of control. It can rapidly become difficult to know exactly what library versions a particular project is using, and conflicting dependency requirements can trigger hard-to-find errors. Maven addresses these issues using a two-pronged approach, based on the notions of declarative dependencies and a central repository of JAR files.

In Maven, a project’s dependencies are declared in the pom.xml file. The <dependencies> section, shown here, lets you list the libraries that your application needs to compile, be tested, and be run. Dependencies are defined using the Maven artifact naming schema (see Project Context and Artifacts,” earlier in this section), which allows you to precisely identify the exact version of each library you need. In addition, you usually only need to list the libraries you need directly to compile your code: with a feature called Transitive Dependencies (see Managing Transitive Dependencies” in Dependency Management in Maven 2) Maven 2 will discover and retrieve any additional libraries that those libraries need to work.

Here is a simple example of the dependencies section in a POM file:

...

<dependencies>

<dependency>

<groupId>org.hibernate</groupId>

<artifactId>hibernate</artifactId>

<version>3.1</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>3.8.1</version>

<scope>test</scope>

</dependency>

</dependencies>

...We are saying that our application requires Hibernate 3.1 (and, implicitly, all the other libraries that this version of Hibernate requires). And, to run our unit tests, we need JUnit 3.8.1.

This section is not only used in the build lifecycle; it also can be used to generate reports listing the project dependencies (see Setting Up Reporting,” later in this section). We will look at dependencies in Maven in more detail in Managing Transitive Dependencies,” in Dependency Management in Maven 2.

Customizing Your Build Process

Although optional, the <build> section is a key part of any but the simplest of POM files. This section is where you tailor your Maven project build process to your exact needs, defining various plug-in configurations and setting up additional tasks that need to be performed at various points in the build lifecycle.

The Maven build process is very flexible, and it is easy to integrate new tasks by using plug-ins. Plug-ins are a powerful way to encapsulate build logic into reusable components, for use in future projects. You may use plug-ins to generate source code from a WSDL file or from Hibernate mappings, for example. Many plug-ins are available, both from the Maven web site and from other third-party providers such as Codehaus.[*]

Because they are used extensively in the standard Maven build lifecycle tasks, you also can use plug-ins to customize existing aspects of the Maven lifecycle. A common example of this type of configuration, shown in the example below, is to configure the maven-compiler-plugin, which compiles the project source code for use with Java 5 (by default, the Maven compiler generates code compatible with JDK 1.3).

The <build> section is also where

resource directories are defined. You also can define resources that will be

bundled into the final package produced by the project, and resources that

need to be on the classpath during unit tests. By default, any files placed

in the src/main/resources will be

packaged into the generated project artifact. Any files in src/test/resources will be made available on

the project classpath during unit tests.

You also can add additional resource directories. In the following example, we set up an additional resource directory for Hibernate mapping files. At build-time, these files automatically will be bundled into the resulting project artifact, along with the compiled classes and other resource files.

The following listing illustrates a typical build section, illustrating these examples:

...

<build>

<plug-ins>

<plugin>

<groupId>org.apache.maven.plug-ins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>1.5</source>

<target>1.5</target>

</configuration>

</plugin>

</plug-ins>

<resources>

<resource>

<directory>src/main/hibernate</directory>

</resource>

</resources>

</build>

...Setting Up Reporting

An important part of any project is internal communication. Although it is not a silver bullet, a centralized technical project web site can go a long way toward improving visibility within the team, especially with large or geographically dispersed teams. The site generation functionality in Maven 2 lets you set up a professional-quality project web site with little effort.

You use the <reporting> section to configure options for Maven site generation. In the absence of any reporting section, Maven will generate a simple site with information about the project derived from the information provided in the POM file. The <reporting> section lets you add many other additional reports, such as javadoc, unit test results, Checkstyle or PMD reports, and so on.

In this example, we add Checkstyle reporting to the generated site:

<reporting>

<plug-ins>

<plugin>

<artifactId>maven-checkstyle-plugin</artifactId>

<configuration>

<configLocation>config/company-checks.xml</configLocation>

<enableRulesSummary>false</enableRulesSummary>

<failsOnError>true</failsOnError>

</configuration>

</plugin>

</reporting>Defining Build Profiles

The final major section of the POM file is the <profiles> section. Profiles are a

useful way to customize the build lifecycle for different environments. They

let you define properties that change depending on your target environment,

such as database connections or filepaths. At compile time, these properties

can be inserted into your project configuration files. For example, you may

need to configure different database connections for different platforms. Suppose JDBC

configuration details are stored in a file called jdbc.properties, stored in the src/main/resources directory. In this file, you would use a

variable expression in the place of the property value, as shown

here:

jdbc.connection.url=${jdbc.connection.url}In this case, we will define two profiles: one for a development database, and one for a test database. The <profiles> section of the POM file would look like this:

<profiles>

<!-- Development environment -->

<profile>

<id>development</id>

<activation>

<activeByDefault>true</activeByDefault>

</activation>

<properties>

<!-- The development database -->

<jdbc.connection.url>jdbc:mysql://localhost/devdb</jdbc.connection.url>

</properties>

</profile>

<!-- Test environment -->

<profile>

<id>test</id>

<properties>

<!-- The test database -->

<jdbc.connection.url>jdbc:mysql://localhost/testdb</jdbc.connection.url>

</properties>

</profile>

</profiles> Each profile has an identifier (<id>) that lets you invoke the profile by name, and a list of property values to be used for variable substitution (in the <properties> section). For variable substitution to work correctly, Maven needs to know which files are likely to contain variables. You do this by activating filtering on resource directories in the <build> section (see Customizing Your Build Process,” earlier in this section). To do this in our case, we need to activate filtering on the resource directory entry in the build section (see Customizing Your Build Process”), as shown here:

...

<build>

<resources>

<resource>

<directory>src/main/resources</directory>

<filtering>true</filtering>

</resource>

</resources>

</build>

...Profiles can be activated in several ways. In this case, we use the activeByDefault property to define the development profile as the default profile. Therefore, running a standard Maven compile with no profiling options will use this profile:

$ mvn compile

In this case, the generated jdbc.properties file in the target/classes directory will look like this:

jdbc.connection.url=jdbc:mysql://localhost/devdb

To activate the test profile, you need to name it explicitly, using the -P command line option as shown here:

$ mvn compile -Ptest

Now, the generated jdbc.properties file, in the target/classes directory, will be configured for the test database:

jdbc.connection.url=jdbc:mysql://localhost/testdb

We look at how to use profiles in more detail in Defining Build Profiles.

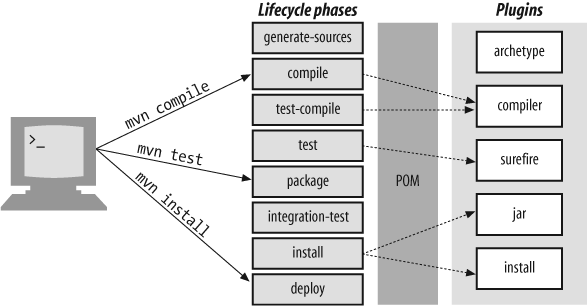

Understanding the Maven 2 Lifecycle

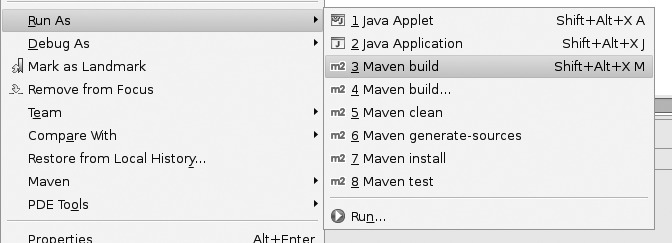

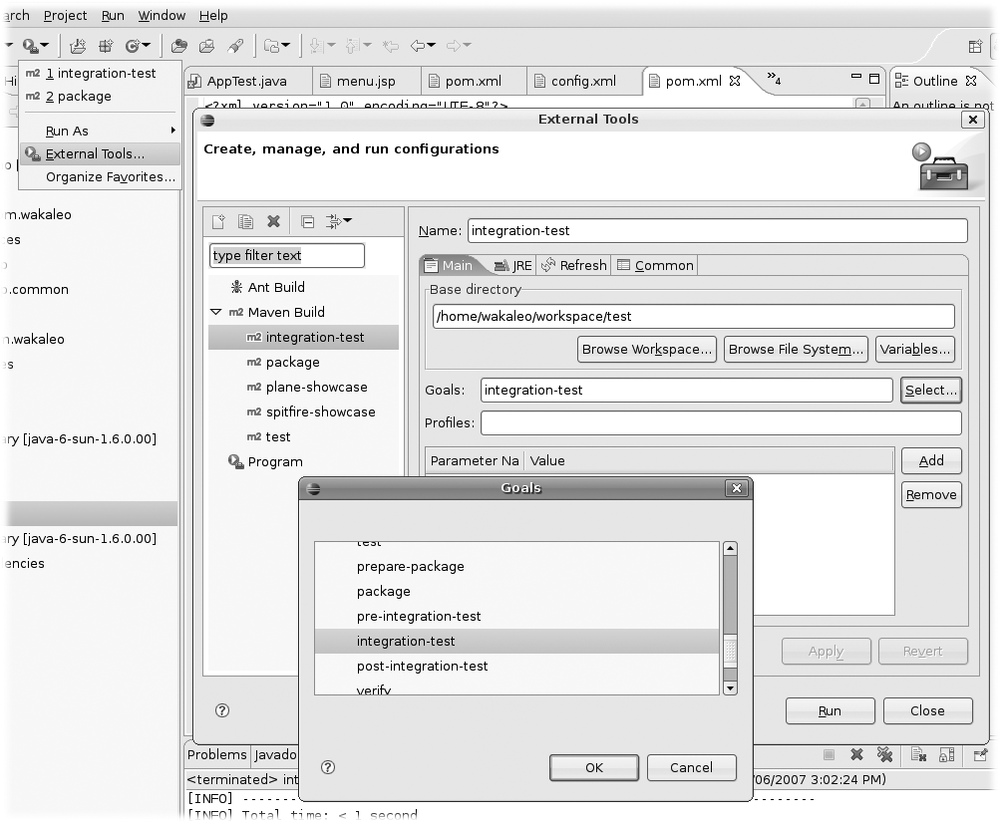

Project lifecycles are central to Maven 2. Most developers are familiar with the notion of build phases such as compile, test, and deploy. Ant build scripts typically have targets with names like these. In Maven 2, this notion is standardized into a set of well-known and well-defined lifecycle phases (see Figure 2-3). Instead of invoking tasks or targets, the Maven 2 developer invokes a lifecycle phase. For example, to compile the application source code, you invoke the “compile” lifecycle phase:

$ mvn compile

Some of the more useful Maven 2 lifecycle phases are the following (see Figure 2-3):

- generate-sources

Generates any extra source code needed for the application, which is generally accomplished using the appropriate plug-ins.

- compile

Compiles the project source code.

- test-compile

Compiles the project unit tests.

- test

Runs the unit tests (typically using JUnit) in the

src/testdirectory. If any tests fail, the build will stop. In all cases, Maven generates a set of test reports in text and XML test reports in thetarget/surefire-reportsdirectory (see Testing Your Code).- package

Packages the compiled code in its distributable format (JAR, WAR, etc.).

- integration-test

Processes and deploys the package if necessary into an environment in which integration tests can be run.

- install

Installs the package into the local repository for use as a dependency in other projects on your local machine.

- deploy

In an integration or release environment, this copies the final package to the remote repository for sharing with other developers and projects.

The full list is much longer than this, and can be found on the Maven web site.[*]

These phases illustrate the benefits of the recommended practices encouraged by Maven 2: once a developer is familiar with the main Maven lifecycle phases, he or she should feel at ease with the lifecycle phases of any Maven project. The lifecycle phase invokes the plug-ins it needs to do the job. Invoking a lifecycle phase automatically invokes any previous lifecycle phases as well. Because the lifecycle phases are limited in number, easy to understand, and well organized, becoming familiar with the lifecycle of a new Maven 2 project is easy.

Understanding the Maven lifecycle is also important when it comes to customizing your build process. When you customize your build process, you basically attach (or “bind,” to use the Maven terminology) plug-ins to various phases in the project lifecycle. This may seem more rigid than Ant, in which you basically can define any tasks you want and arrange them in any order you like. However, once you are familiar with the basic Maven phases, customizing the build lifecycle in this way is easier to understand and to maintain than the relatively arbitrary sequences of tasks that you need to implement in an Ant build process.

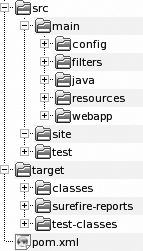

The Maven Directory Structure

Much of Maven’s power comes from the standard practices that it encourages. A developer who has previously worked on a Maven project immediately will feel familiar with the structure and organization of a new one. Time need not be wasted reinventing directory structures, conventions, and customized Ant build scripts for each project. Although you can override any particular directory location for your own specific ends, you really should respect the standard Maven 2 directory structure as much as possible, for several reasons:

It makes your POM file smaller and simpler.

It makes the project easier to understand and makes life easier for the poor guy who must maintain the project when you leave.

It makes it easier to integrate plug-ins.

The standard Maven 2 directory structure is illustrated in Figure 2-4.

The POM (pom.xml) and two

subdirectories go into the project home directory: src for all source code and

target for generated artifacts. The src directory has a number of

subdirectories, each of which has a clearly defined purpose:

src/main/javaYour Java source code goes here (strangely enough!)

src/main/resourcesOther resources your application needs

src/main/filtersResource filters, in the form of properties files, which may be used to define variables only known at runtime

src/main/configConfiguration files

src/main/webappThe web application directory for a WAR project

src/test/javaSource code for unit tests, by convention in a directory structure mirroring the one in your main source code directory

src/test/resourcesResources to be used for unit tests, but that will not be deployed

src/test/filtersResources filters to be used for unit tests, but that will not be deployed

src/siteFiles used to generate the Maven project web site

Configuring Maven to Your Environment

One of the principal aims of Maven is to produce portable project build environments. Nevertheless, each work environment has its particularities, which need to be catered for. In this chapter, we investigate some common areas where you may need to tailor Maven to suit your particular work environment, such as configuring proxy servers, defining enterprise repositories, or specifying usernames and passwords.

When it comes to defining environment-specific configuration details, the most

important tool at your disposal is the settings.xml file. Each user can

have his or her own individual settings.xml

file, which should be placed in the $HOME/.m2

directory. This file is not placed under version control, and therefore can

contain details such as usernames and passwords, which should not be shared in

the source code repository.

Using a Proxy

If you are working in a company, you may well be accessing the

Internet via a proxy. Maven relies heavily on accessing the Internet to

download the libraries that it needs for your projects and for its own

purposes. Therefore, if you are behind a proxy, you will need to tell Maven

about it. Maven stores environment-specific parameters in a file called

$HOME/.m2/settings.xml. You will have

to create this file if it doesn’t already exist. To define a proxy, just add

a <proxy> element in this file, as

follows:

<settings>

<proxies>

<proxy>

<active>true</active>

<protocol>http</protocol>

<host>proxy.mycompany.com</host>

<port>8080</port>

<username>user</username>

<password>password</password>

<nonProxyHosts>*.mycompany.com</nonProxyHosts>

</proxy>

</proxies>

</settings>The <nonProxyHosts> element is useful to define servers that do not need proxy access, such as internal enterprise repositories.

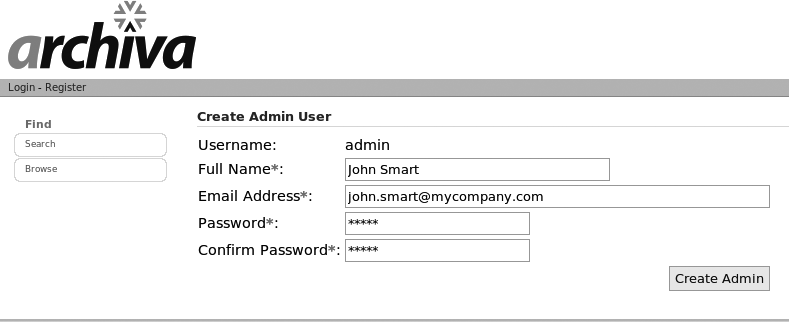

Using a Local Mirror

Another common use of the settings.xml file is to configure mirror servers. This

typically is done to configure an organization-wide repository. Many

organizations use a local repository to store and share internal packages

and to act as a proxy to external repositories. This solution is faster and

more reliable than requiring users to go to the Internet whenever a new

dependency is required.

The following example shows how to configure a Maven installation to use an Artifactory repository exclusively:

<settings>

<mirrors>

<mirror>

<id>artifactory</id>

<mirrorOf>*</mirrorOf>

<url>http://buildserver.mycomany.org:8080/artifactory/repo</url>

<name>Artifactory</name>

</mirror>

</mirrors>

</settings>Changing Your Maven Cache Location

Maven stores downloaded JAR files in a local directory on your

machine, known as the local repository. This directory generally is found at

$HOME/.m2/repository<localRepository> tag in your $HOME/.m2/settings.xml

<settings> <localRepository>C:/maven/repository</localRepository> </settings>

Defining Arbitrary Environment-Specific Variables

The settings.xml file is also

a good place to let users tailor their environment variables if they really

need to. For example, you might need to specify the directory of some

locally installed product, which may vary from machine to machine. You do

this by defining a default profile in the settings.xml

file. Any properties defined here will override property values in the POM

file. Command-line tools like SchemaSpy (see Visualizing a Database Structure with SchemaSpy)

are a good example. This is a tool that needs to be downloaded and installed

on each local machine. Of course, you can get the Maven build process to do

this automatically. However, users who have already installed SchemaSpy, and

may not want to duplicate installations, can override the SchemaSpy-related

parameters by setting up properties in their local settings.xml file. In the following example, a user sets the

installation directory (the schemaspy.home property) to P: oolsschemaspy, which will override any property values

defined in the main POM

file:

<settings>

...

<profiles>

<profile>

<id>development</id>

<activation>

<activeByDefault>true</activeByDefault>

</activation>

<properties>

<schemaspy.home>P: oolsschemaspy</schemaspy.home>

<schemaspy.version>3.1.1</schemaspy.version>

</properties>

</profile>

</profiles>

</settings>Note that we still need to provide sensible default values in the POM file so that customizing your environment becomes optional, not mandatory. Users only need to modify their local file if they really want to (and, presumably, when they know what they are doing!). The best way to set up these default values is by using the <properties> element at the end of your POM file, as shown here:

<project>

...

<properties>

<schemaspy.home>${user.home}/.schemaspy</schemaspy.home>

<schemaspy.version>3.1.1</schemaspy.version>

</properties>

<project>Don’t be tempted to put these default values in a default profile element in your POM file; in this case, the profile in the POM file would override the profile in your local settings.

Dependency Management in Maven 2

Dependency management is one of the more powerful features of Maven

2. Dependencies are the libraries you need to compile, test, and run your

application. In tools such as Ant, these libraries typically are stored in a

special directory (often called lib), and are

maintained either by hand or as project artifacts that are stored in the source

code repository along with the source code. Maven, by contrast, uses a

declarative approach. In a Maven project, you list the libraries your application needs, including the exact version number

of each library. Using this information, Maven will do its best to find,

retrieve, and assemble the libraries it needs during the different stages in the

build lifecycle. In addition, using a powerful feature called Transitive

Dependencies (see Managing Transitive Dependencies,” later in this

section), it will include not only the libraries that you declare but also all

the extra libraries that your declared libraries need to work correctly.

In this chapter, we will look at different aspects of how to handle dependencies in Maven 2.

Declaring Dependencies

One of the most powerful features of Maven 2 is its ability to

handle dependencies in a consistent and reliable manner. In the <dependencies> section of the POM file,

you declare the libraries that you need to compile, test, and run your

application. Dependencies are retrieved from local or remote repositories,

and cached locally on your development machine, in the $HOME/.m2/repository directory structure. If

you use the same jar in two projects, it will only be downloaded (and

stored) once, which saves time and disk space.

In Maven, dependencies are handled declaratively. Suppose that your project needs to use Hibernate, and that your unit tests are written in JUnit. In this case, the dependency section in your POM file might look something like the following:

...

<dependencies>

<dependency>

<groupId>org.hibernate</groupId>

<artifactId>hibernate</artifactId>

<version>3.1</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>3.8.1</version>

<scope>test</scope>

</dependency>

</dependencies>

...Each dependency is uniquely identified, using a Maven-style artifact reference (see Project Context and Artifacts,” in Declarative Builds and the Maven Project Object Model). Dependencies can refer both to other projects within your organization and to publicly available libraries on the public Maven repositories.

In some cases, libraries may have several different versions of a library with the same version number. The TestNG library, for example, has two versions for each release, one compiled for Java 1.4 and another compiled for Java 1.5:

testng-5.1-jdk14.jar 15-Aug-2006 08:55 817K testng-5.1-jdk15.jar 15-Aug-2006 08:55 676K

When you declare your dependencies, Maven needs to know exactly which version you need. You do this by providing the <classifier> element, as shown here:

<dependency>

<groupId>org.testng</groupId>

<artifactId>testng</artifactId>

<version>5.1</version>

<classifier>jdk15</classifier>

<scope>test</scope>

</dependency>Dependencies declarations are not limited to precise version numbers. In fact, Maven is quite flexible about version numbers, and you can use a form of interval notation to define ranges of permissible version numbers. Interval notation comes from set theory, and is one of those things you probably learned at school or university and subsequently forgot. Here is a quick refresher. Interval notation is a flexible and succinct way of defining ranges of values using square brackets and parentheses to indicate boundary values. You use parentheses when the boundary value is not included in the set. For example, the following notation indicates a set of values greater than 1 (noninclusive) and less than 4 (noninclusive):

(1,4)

You use square brackets when the boundary values are included in the set. For example, the following notation indicates a set of values greater than or equal to 1 and less than or equal to 4:

[1,4]

You can combine different types of boundary values in the same expression. For example, this is how you would represent a set of values greater than or equal to 1, and strictly less than 4:

[1,4)

You can leave a value out to leave one side of the set unbounded. Here we include all values greater or equal to 2:

[2,)

You can even define a set made up of multiple intervals, simply by listing the intervals in a comma-separated list. The following example shows how you would define all the values between 1 and 10 inclusive, except for 5:

[1,5),(5,10]

Now that you have mastered the theory, let’s see how it applies to dependency management. By using interval notation, you can give Maven more flexibility in its dependency management, which means that you spend less time chasing the latest API updates. Maven will use the highest available version within the range you provide. For example, the following dependency will use the latest available version of Hibernate, but requires at least Hibernate 3.0:

<dependency>

<groupId>org.hibernate</groupId>

<artifactId>hibernate</artifactId>

<version>[3.0,)</version>

</dependency>Or you may want to limit the versions of an API to a particular range. Using the following dependency, Maven will look for the highest version of the commons-collections in the 2.x series, but will exclude any versions from 3.0 onward:

<dependency>

<groupId>commons-collections</groupId>

<artifactId>commons-collections</artifactId>

<version>[2.0,3.0)</version>

</dependency>Managing Transitive Dependencies

Transitive Dependencies are arguably one of the most useful features of Maven 2. If you have ever used a tool like urpmi or apt-get on a Linux box, you will be familiar with the concept of Transitive Dependencies. Simply put, if you tell Maven 2 that your project needs a particular library, it will try to work out what other libraries this library needs, and retrieve them as well.

Let’s look at how this works with a practical example. Suppose that our project uses Hibernate 3.1. We might declare this dependency as follows:

<dependency>

<groupId>org.hibernate</groupId>

<artifactId>hibernate</artifactId>

<version>3.1</version>

</dependency>Exactly where Maven looks for dependencies will depend on how your

repositories are set up. The default Maven 2 repository is located at

http://repo1.maven.org/maven2 (if in

doubt, this is actually defined in the Super POM file). In this case, Maven

will look for the Hibernate JAR file in the following directory:

http://repo1.maven.org/maven2/org/hibernate/hibernate/3.1/

If you look in this directory, you will see a list of files similar to the following:

hibernate-3.1-sources.jar 10-Jan-2006 07:05 1.2M hibernate-3.1-sources.jar.md5 10-Jan-2006 07:06 148 hibernate-3.1-sources.jar.sha1 10-Jan-2006 07:07 156 hibernate-3.1.jar 15-Dec-2005 11:32 1.8M hibernate-3.1.jar.md5 15-Dec-2005 11:32 32 hibernate-3.1.jar.sha1 15-Dec-2005 11:32 40 hibernate-3.1.pom 26-Dec-2005 06:22 3.8K hibernate-3.1.pom.md5 04-Jan-2006 07:33 138 hibernate-3.1.pom.sha1 04-Jan-2006 07:33 146 maven-metadata.xml 15-Dec-2005 11:32 119 maven-metadata.xml.md5 09-Jul-2006 08:41 130 maven-metadata.xml.sha1 09-Jul-2006 08:41 138

Note that there is much more than just the JAR file: there is also a POM file and (for good measure) digest files that let Maven verify the consistency of the files it downloads. The POM file here is the POM file for the Hibernate project. If your project needs to use Hibernate, it also needs to include all the Hibernate dependencies in its distribution. These secondary dependencies are listed in this POM file. Maven uses the dependencies in this POM to work out what other library it needs to retrieve.

This is the main weakness of Maven Transitive Dependency management: it relies on the accuracy and completeness of the POM files stored on the public repository. However, in some cases, the dependencies in the POM file may not be up-to-date, and, in other cases, the POM file may actually be just an empty POM file with no dependencies at all! In these cases, you will need to supply the dependencies explicitly in your own POM file.

Dependency management can be a complicated beast, and sometimes you will want to understand exactly which libraries Maven is using and why. One option is to use the –X command-line option with any Maven command to produce (among many other things) very detailed dependency information. This option generates a lot of text, so it is useful to redirect output into a text file and to view the file in a text editor, rather than to wrestle with the command line:

$ mvn -X test > out.txt

The resulting output file will contain lines like the following, detailing the resolved dependencies and the corresponding dependency graphs:

[DEBUG] org.hibernate:hibernate:jar:3.1.3:compile (setting version to: 3.1.3 from range: [3.0,)) [DEBUG] org.hibernate:hibernate:jar:3.1.3:compile (selected for compile) [DEBUG] javax.transaction:jta:jar:1.0.1B:compile (selected for compile) [DEBUG] dom4j:dom4j:jar:1.6.1:compile (selected for compile) [DEBUG] cglib:cglib:jar:2.1_3:compile (selected for compile) [DEBUG] asm:asm:jar:1.5.3:compile (selected for compile) [DEBUG] asm:asm-attrs:jar:1.5.3:compile (selected for compile) [DEBUG] asm:asm:jar:1.5.3:compile (selected for compile) [DEBUG] commons-collections:commons-collections:jar:2.1.1:compile (removed - nearer found: 2.1) [DEBUG] antlr:antlr:jar:2.7.6rc1:compile (selected for compile)

This is a representation of the dependency tree: you can see exactly which library versions were requested, and which were retained for the final dependency list. It also indicates which libraries were removed because a nearer dependency was found (look at the “commons-collections” library in the above listing). This can give useful clues if a library is not behaving as expected.

The other useful tool in understanding your project’s dependencies is the

Dependency report. This report is generated by default when you generate the

Maven site, and placed in the target/dependencies.html

file:

$ mvn site

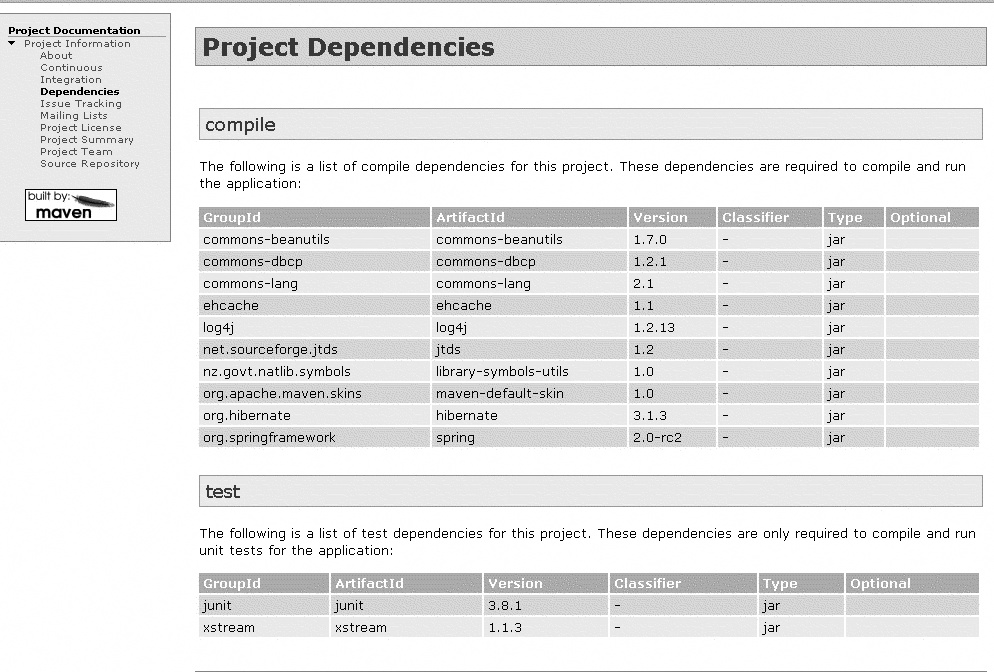

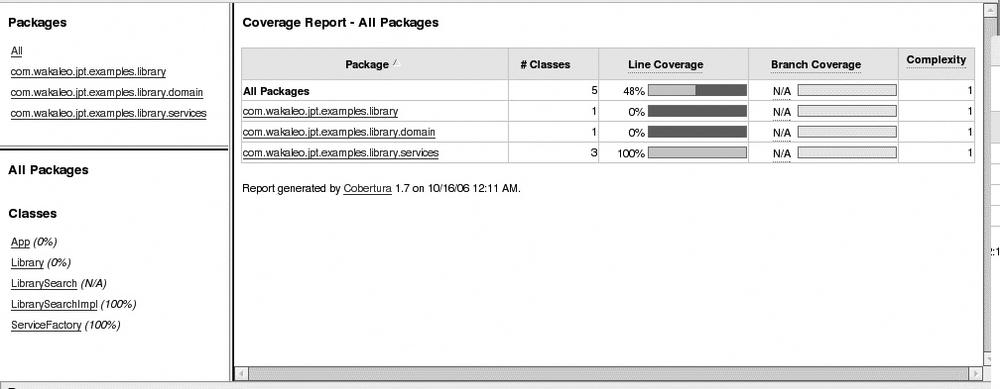

This report displays lists of direct and transitive dependencies for each dependency scope (see Dependency Scope,” later in this section), as well as the full dependency tree (see Figure 2-5).

Dependency Scope

In a real-world enterprise application, you may not need to include all the dependencies in the deployed application. Some JARs are needed only for unit testing, while others will be provided at runtime by the application server. Using a technique called dependency scoping, Maven 2 lets you use certain JARs only when you really need them and excludes them from the classpath when you don’t. Maven provides several dependency scopes.

The default scope is the compile scope. Compile-scope dependencies are available in all phases.

<dependency>

<groupId>org.hibernate</groupId>

<artifactId>hibernate</artifactId>

<version>3.1</version>

</dependency>A provided dependency is used to compile the application but will not be deployed. You would use this scope when you expect the JDK or application server to provide the JAR. The servlet APIs are a good example:

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>servlet-api</artifactId>

<version>2.4</version>

<scope>provided</scope>

</dependency>The runtime dependency scope is used for dependencies that are not needed for compilation, only for execution, such as Java Database Connectivity (JDBC) drivers:

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>3.1.13</version>

<scope>runtime</scope>

</dependency>You use the test dependency scope for dependencies that are only needed to compile and run tests, and that don’t need to be distributed (JUnit or TestNG, for example):

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>3.8.1</version>

<scope>test</scope>

</dependency>In some special cases, you may need to use system dependencies, such as the tools.jar file provided with the Java SDK. For example, you may need to use the Sun Apt or WSGen tools within your build process. You can do this using the system dependency scope. In this case (and in this case only), you need to provide a systemPath value that indicates the absolute path to this file. This is illustrated in the following code extract:

<dependency>

<groupId>com.sun</groupId>

<artifactId>tools</artifactId>

<version>1.5.0</version>

<scope>system</scope>

<systemPath>${java.home}/lib/tools.jar</systemPath>

</dependency>Handling Proprietary Dependencies

For commercial and copyright reasons, not all of the commonly used libraries are available on the public Maven repositories. A common example is the Oracle JDBC Driver, which is available free-of-charge on the Oracle web site,[*] but it cannot be redistributed via a public Maven repository. Another frequently encountered example is the Java Transaction API (JTA), which is notably required by Hibernate. The JTA library is produced by Sun, which requires you to agree to a license agreement before you are able to download the JAR.

If you need to use a proprietary library like these in your Maven project, you will need to add it manually to your local repository. Let’s see how this is done, using the Oracle driver as an example.

First, download the appropriate JAR file from the Oracle web site (for example, odbc14.jar). At the time of this writing, this corresponded to the “Oracle Database 10g Release 2 (10.2.0.2) JDBC Driver.” It is important to note the exact version, as it is not visible from the name of the file. This version number will be used to identify the JAR file in our repository. The dependency declaration would look something like this:

<dependency>

<groupId>oracle</groupId>

<artifactId>oracle-jdbc</artifactId>

<version>10.1.0.2.0</version>

<scope>runtime</scope>

</dependency>To get this to work, we need to copy the JAR into the correct place in our Maven repository. There are several ways to do this. You may first want to test on your development machine before installing the JAR onto the organization repository. You can install the jar into your local repository by using the mvn install:install-file command, as shown here:

mvn install:install-file -DgroupId=oracle

-DartifactId=oracle-jdbc

-Dpackaging=jar

-Dversion=10.1.0.2.0

-DgeneratePom=true

-Dfile=ojdbc14.jarInstalling the JTA jar is similar: download it from the Sun site[†] and use the mvn install command as follows:

mvn install:install-file -DgroupId=javax.transaction

-DartifactId=jta

-Dpackaging=jar

-Dversion=1.0.1B

-DgeneratePom=true

-Dfile=jta-1_0_1B-classes.zipNow you can test the installation, typically by running some unit tests and seeing if Maven correctly finds the dependency.

When you are happy, you can either deploy the file to using the mvn deploy:deploy-file command, or simply copy the appropriate directory onto your company Maven repository. When this is done, this dependency can be seamlessly downloaded by all the team members in exactly the same way as any other new dependency.

Refactoring Your Dependencies Using Properties

In large projects, even with the benefits of transitive dependency management, you will often end up with a lot of dependencies. Sometimes, it is useful to declare key version numbers in a central place, making them easier to find and update if necessary. One good way to do this is by using properties.

We saw in Defining Build Profiles” in Declarative Builds and the Maven Project Object Model

and Configuring Maven to Your Environment the ways in which you can define profile

or environment-specific properties in a profile or in the settings.xml file.

However, you also can declare properties directly at the root level in your

pom.xml file. Like constants

in a Java class, or Ant properties (see Customizing Your Build Script Using Properties) in

an Ant build script, this is a convenient way to define reusable values in

an easy-to-maintain manner. The actual <properties> block can appear anywhere in the build

file, but you may want to put it in an easy-to-find place such as near the

start or right at the end.

Let’s look at an example. Suppose that we are developing a web application using JSP and JSTL. In the following listing, we use two properties, somewhat unimaginatively named servlet-api.version and jstl.version, to identify what version of the Java Servlet and JSTL APIs we are using:

<project>

...

<properties>

...

<servlet-api.version>2.4</servlet-api.version>

<jstl.version>1.1.2</jstl.version>

</properties>

...

</project>These properties can then be used to declare our dependencies in a more flexible manner. Now we can use these properties to declare our Servlet API and JSTL dependencies. Note that this makes it easier to ensure that the JSTL API and JSTL standard taglibs versions stay in sync:

<project>

...

<properties>

...

<servlet-api.version>2.4</servlet-api.version>

<jstl.version>1.1.2</jstl.version>

</properties>

...

<dependencies>

...

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>servlet-api</artifactId>

<version>${servlet-api.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>jstl</artifactId>

<version>${jstl.version}</version>

</dependency>

<dependency>

<groupId>taglibs</groupId>

<artifactId>standard</artifactId>

<version>${jstl.version}</version>

</dependency>

...

</dependencies>

</project>Looking for Dependencies with MvnRepository

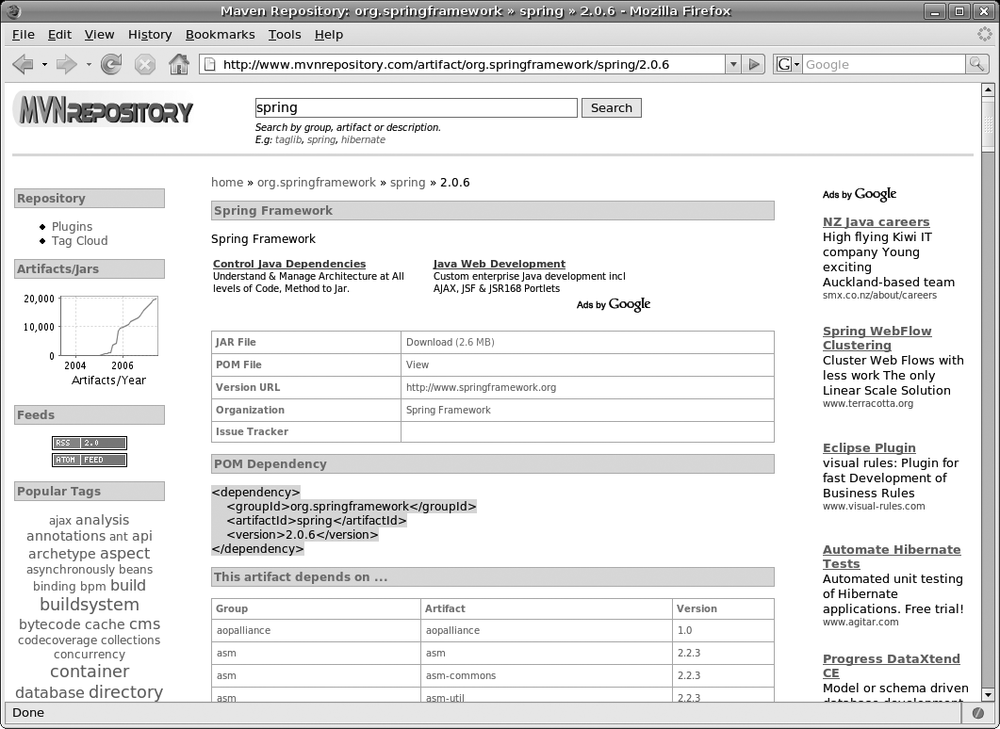

When you are working with Maven, you often need to look up a particular dependency so that you can add it to your POM file. It can be quite tricky to remember and/or hunt down the precise group and artifact names and the latest version numbers for any but the most well-known artifacts. For example, do you remember the exact group and latest version of the Hibernate or Spring MVC libraries?

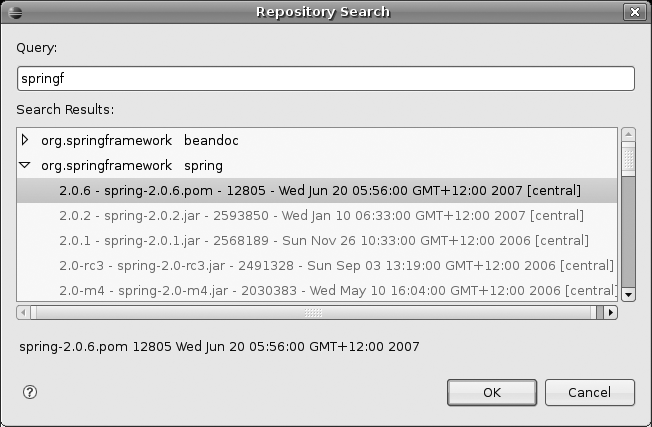

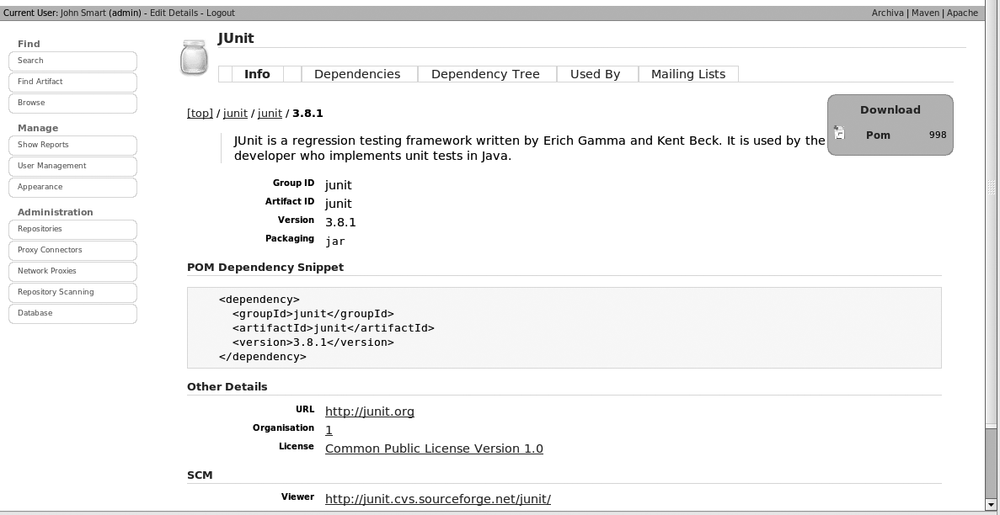

One useful resource that can help out here is the MvnRepository site[*] (see Figure 2-6). Using this site, you can search the central Maven repository for artifacts by name. When you find the version you are looking for, simply copy the displayed dependency block into your POM file. While you’re there, you also can list the dependencies of a particular library, view the latest updates to the repository, or browse the overall structure of the repository.

Project Inheritance and Aggregation

Maven actively encourages you to write your projects as a set of small, flexible, modules rather than as a monolithic block of code. Dependencies are one way that you can create well-defined relationships between a set of modules to form an overall project. Project inheritance is another.

Project inheritance lets you define project-wide properties and values that will be inherited by all of the child projects. This is most easily understood by an example.

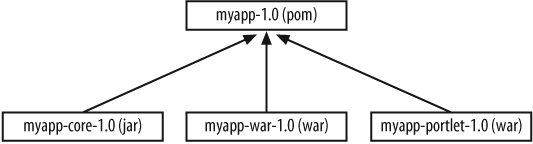

Suppose you are writing a simple web application, which will be deployed both as a traditional web application and as a portlet. One way that you might do this is to define three modules: a core module, containing the application business logic, and two user interface modules, one for each target platform. All three modules would have a common parent POM file, as illustrated in Figure 2-7.

Let’s see how you would implement this project structure.

Parent POM files are very much like any other POM file. The following listing shows a very simple one:

<project> <modelVersion>4.0.0</modelVersion> <groupId>com.mycompany</groupId> <artifactId>myapp</artifactId> <packaging>pom</packaging> <name>Killer application</name> <version>1.0</version> </project>

The main distinguishing factor is the <packaging> element, which is declared as a POM, rather than the WAR or JAR values that we have seen in previous examples. Indeed, all parent POM files must use the pom packaging type.

Then, within each child project, you need to declare a <parent> element that refers, suprisingly enough, to the parent POM file:

<project>

<parent>

<groupId>com.mycompany</groupId>

<artifactId>myapp</artifactId>

<version>1.0</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>debtcalculator-core</artifactId>

...

</project>Note that you don’t need to define the version or groupId of the child project—these values are inherited from the parent.

The parent POM file is an excellent place to define project-wide properties or build configuration details. A typical use is to define the Java compile options in one central place. We can set the Java compiler to Java 1.5. This will be inherited by all the children projects, without any special configuration in their POM files:

<project>

<modelVersion>4.0.0</modelVersion>

<groupId>com.mycompany</groupId>

<artifactId>myapp</artifactId>

<packaging>pom</packaging>

<name>Killer application</name>

<version>1.0</version>

<properties>

<java-api.version>1.5</java-api.version>

</properties>

<build>

<plugins>

<plugin>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>${java-api.version}</source>

<target>${java-api.version}</target>

</configuration>

</plugin>

</plugins>

</build>

</project>In a similar way, you can define project-wide dependencies at this level:

<project>

<modelVersion>4.0.0</modelVersion>

<groupId>com.mycompany</groupId>

<artifactId>myapp</artifactId>

<packaging>pom</packaging>

<name>Killer application</name>

<version>1.0</version>

<properties>

<java-api.version>1.5</java-api.version>

<junit.version>4.4</junit.version>

</properties>

...

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>${junit.version}</version>

<scope>test</scope>

</dependency>

</dependencies>

</project>All the children projects will now be able to use these dependencies without having to list them among their specific dependencies. This is also an excellent way to ensure that all of your children projects use the same versions of particular APIs.

The parent POM file is also an excellent place to set up reporting configurations. This way, you can define and configure the reports that you want generated for all the children projects in one central place.

<project>

<modelVersion>4.0.0</modelVersion>

<groupId>com.mycompany</groupId>

<artifactId>myapp</artifactId>

<packaging>pom</packaging>

<name>Killer application</name>

<version>1.0</version>

...

<reporting>

<plugins>

<plugin>

<artifactId>maven-surefire-report-plugin</artifactId>

</plugin>

<plugin>

<artifactId>maven-checkstyle-plugin</artifactId>

</plugin>

...

</plugins>

</reporting>

</project>Although, at the time of this writing, multimodule reporting is still a bit dodgy, each child project will inherit the reporting configuration defined in the parent POM file, making these files simpler and easier to maintain.

You can also define the subprojects as modules. This is known as aggregation, and allows you to build all the child projects in one go from the parent directory.

<project>

...

<modules>

<module>myapp-core</module>

<module>myapp-war</module>

<module>myapp-portlet</module>

</modules>

...

</project>When you run mvn compile from the parent root directory, all of the child projects also would be compiled:

$ mvn compile [INFO] Scanning for projects... [INFO] Reactor build order: [INFO] Killer App [INFO] Killer App - Core [INFO] Killer App - Portlet [INFO] Killer App - Webapp [INFO] ---------------------------------------------------------------------------- [INFO] Building Killer App [INFO] task-segment: [compile] [INFO] ---------------------------------------------------------------------------- ... [INFO] ---------------------------------------------------------------------------- [INFO] Building Killer App - Core [INFO] task-segment: [compile] [INFO] ---------------------------------------------------------------------------- ... [INFO] ---------------------------------------------------------------------------- [INFO] Building Killer App - Portlet [INFO] task-segment: [compile] [INFO] ---------------------------------------------------------------------------- ... [INFO] ---------------------------------------------------------------------------- [INFO] Building Killer App - Webapp [INFO] task-segment: [compile] [INFO] ---------------------------------------------------------------------------- ... [INFO] ------------------------------------------------------------------------ [INFO] Reactor Summary: [INFO] ------------------------------------------------------------------------ [INFO] Killer App ............................................ SUCCESS [0.317s] [INFO] Killer App - Core ..................................... SUCCESS [1.012s] [INFO] Killer App - Portlet .................................. SUCCESS [0.602s] [INFO] Killer App - Webapp ................................... SUCCESS [0.753s] [INFO] ------------------------------------------------------------------------ [INFO] ------------------------------------------------------------------------ [INFO] BUILD SUCCESSFUL [INFO] ------------------------------------------------------------------------ [INFO] Total time: 4 seconds [INFO] Finished at: Sun Nov 18 02:54:32 GMT 2007 [INFO] Final Memory: 7M/80M [INFO] ------------------------------------------------------------------------

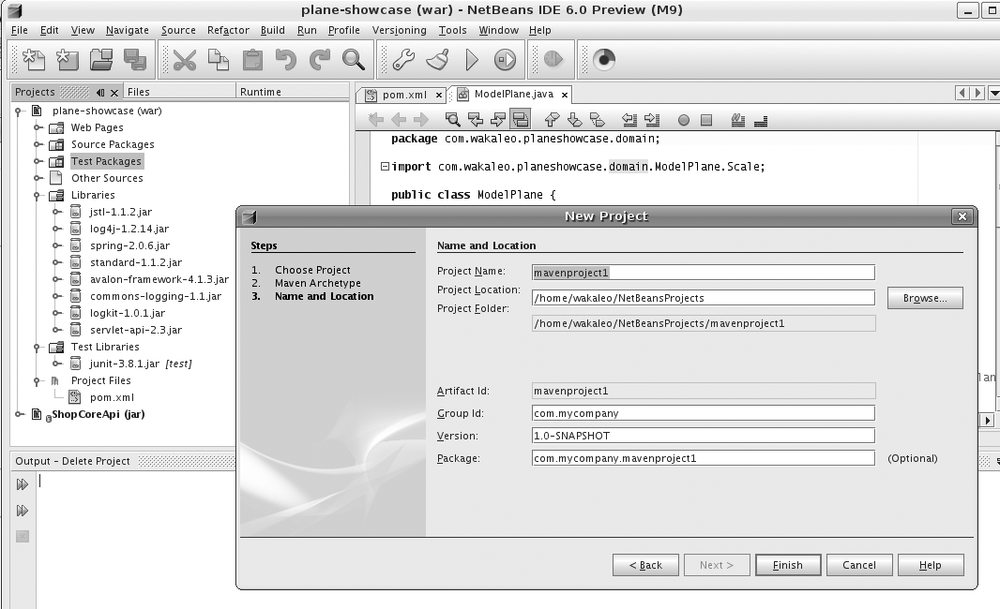

Creating a Project Template with Archetypes

Even with a standardized directory structure, it is tiresome to have to create a full set of empty directories by hand whenever you start a new Maven project. To make life easier, Maven 2 provides the archetype plug-in, which builds an empty Maven 2—compatible project template, containing a standard directory structure as well as some sample files illustrating Maven conventions and best practices. This is an excellent way to get a basic project environment up and running quickly. The default archetype model will produce a JAR library project. Several other artifact types are available for other specific project types, including web applications, Maven plug-ins, and others.

Let’s take a quick tour to see what you can do with Maven Archetypes. Suppose that we want to create an online store using Maven. Following Maven’s recommendations, we will divide the project into several distinct modules. Our backend module will be called ShopCoreApi:

$ mvn archetype:create -DgroupId=com.acme.shop -DartifactId=ShopCoreApi -Dpackagename=com.acme.shop [INFO] Scanning for projects... [INFO] Searching repository for plugin with prefix: 'archetype'. [INFO] ---------------------------------------------------------------------------- [INFO] Building Maven Default Project [INFO] task-segment: [archetype:create] (aggregator-style) [INFO] ---------------------------------------------------------------------------- ... [INFO] Archetype created in dir: /home/john/dev/projects/shop/ShopCoreApi [INFO] ------------------------------------------------------------------------ [INFO] BUILD SUCCESSFUL [INFO] ------------------------------------------------------------------------ [INFO] Total time: 2 seconds [INFO] Finished at: Sun Oct 15 21:50:38 NZDT 2006 [INFO] Final Memory: 4M/8M [INFO] ------------------------------------------------------------------------

This will create a complete, correctly structured, working, albeit minimalist, Maven project, including a simple POM file, a sample class, and a unit test. The POM file looks like this:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi=

"http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0

http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.acme.shop</groupId>

<artifactId>ShopCoreApi</artifactId>

<packaging>jar</packaging>

<version>1.0-SNAPSHOT</version>

<name>ShopCoreApi</name>

<url>http://maven.apache.org</url>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>3.8.1</version>

<scope>test</scope>

</dependency>

</dependencies>

</project>The project will be created in a subdirectory with the same name of the artifact (in this case, “ShopCoreApi”). The groupId and the artifactId are used to identify the artifact produced by the project (see Project Context and Artifacts” in Declarative Builds and the Maven Project Object Model). The packagename is the root package for your project. More often than not, the packagename option will be the same at the groupId: in this case, you can drop the packagename option.

This project is now ready to try out. Switch to this directory and build the project using mvn package:

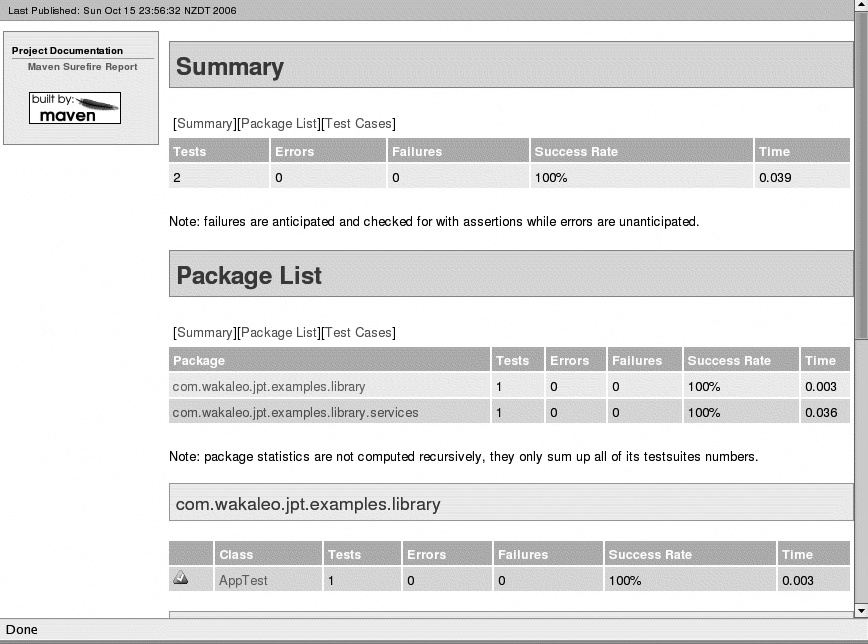

$ ls ShopCoreApi $ cd ShopCoreApi $ mvn package [INFO] Scanning for projects... [INFO] ---------------------------------------------------------------------------- [INFO] Building Maven Quick Start Archetype [INFO] task-segment: [package] [INFO] ---------------------------------------------------------------------------- ... ------------------------------------------------------- T E S T S ------------------------------------------------------- Running com.acme.shop.AppTest Tests run: 1, Failures: 0, Errors: 0, Skipped: 0, Time elapsed: 0.039 sec Results : Tests run: 1, Failures: 0, Errors: 0, Skipped: 0 [INFO] [jar:jar] [INFO] Building jar: /home/john/dev/projects/shop/ShopCoreApi/target /ShopCoreApi-1.0-SNAPSHOT.jar [INFO] ------------------------------------------------------------------------ [INFO] BUILD SUCCESSFUL [INFO] ------------------------------------------------------------------------ [INFO] Total time: 4 seconds [INFO] Finished at: Sun Oct 15 21:52:22 NZDT 2006 [INFO] Final Memory: 4M/10M [INFO] ------------------------------------------------------------------------

So, now you have a working Maven project template, generated in just a few minutes!

The default Archetype template (the

maven-archetype-quickstart archetype) is designed to

produce a JAR file. There are also several other archetypes that can be used to

create templates for different types of projects. You can use a different

archetype by using the archetypeArtifactId

command-line option, as shown

here:

$ mvn archetype:create -DgroupId=com.acme.shop -DartifactId=ShopWeb -DarchetypeArtifactId=maven-archetype-webapp

This example uses the maven-archetype-webapp archetype,

which creates (surprisingly enough!) an empty WAR project. Following Maven’s

recommendations about Separation of Concerns, the WAR project is expected to

contain only dynamic web pages (JSPs), with the actual Java code being written

in another project.

Another useful archetype is the maven-archetype-site

archetype, which creates a template for a Maven web site for an existing project, including a full,

multilingual (well, bilingual) site structure with sample XDoc, APT, and FAQs

content. This archetype is the only one that you run on an existing project.

Although it provides none of the source code-based reporting features, such as

unit test reports, checkstyle reports, and so on (which need to be configured in

the main POM file), it does provide a good starting point for manually added

site

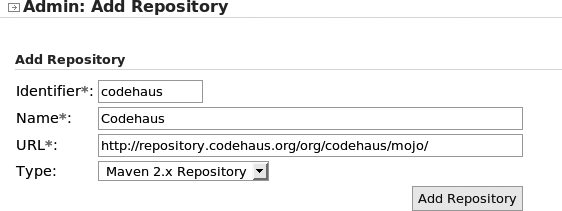

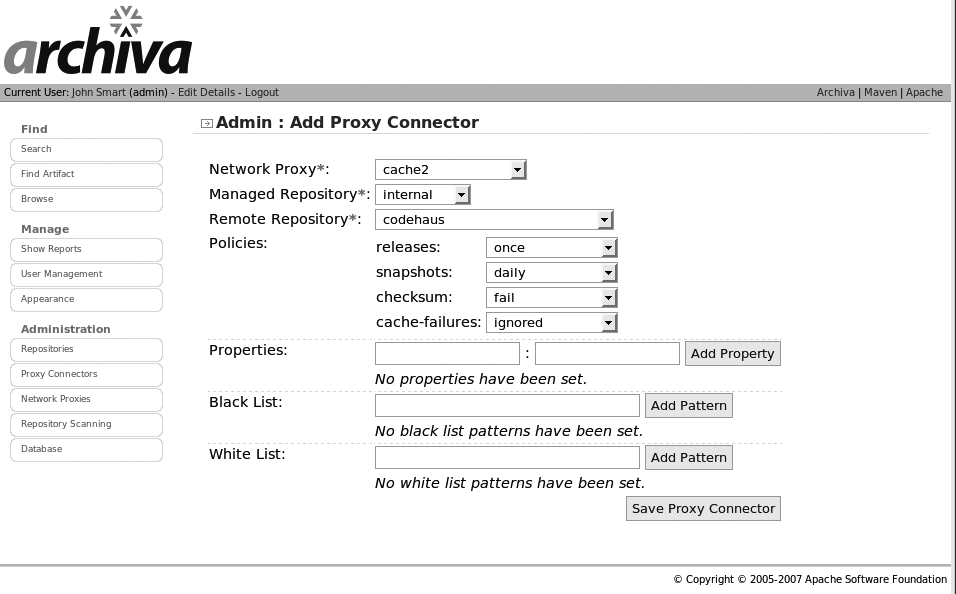

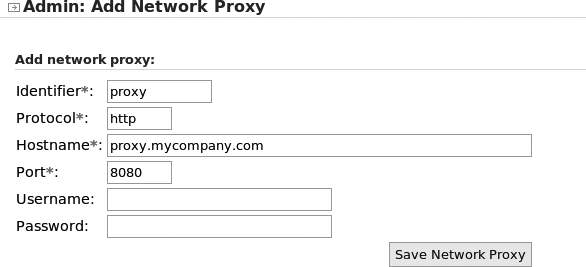

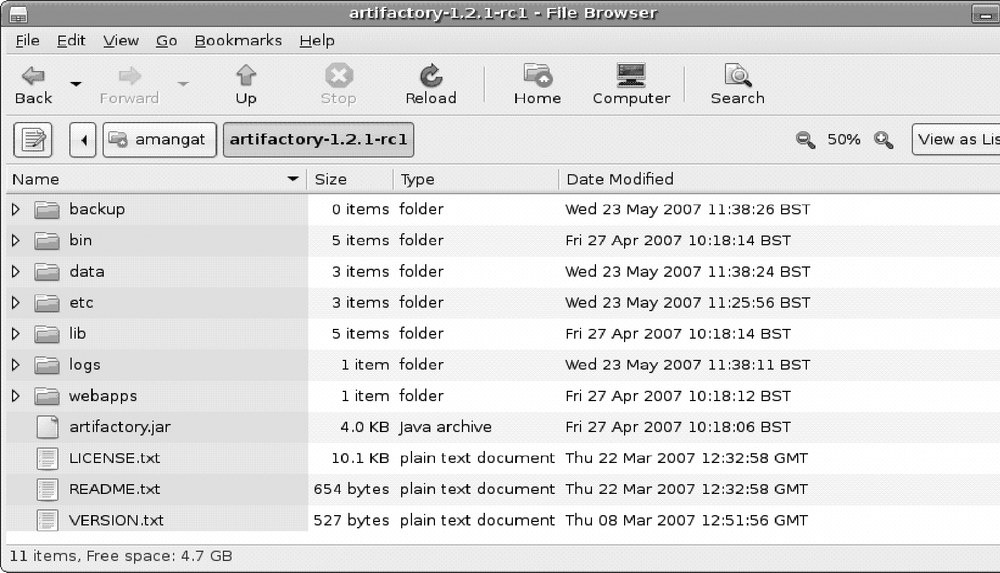

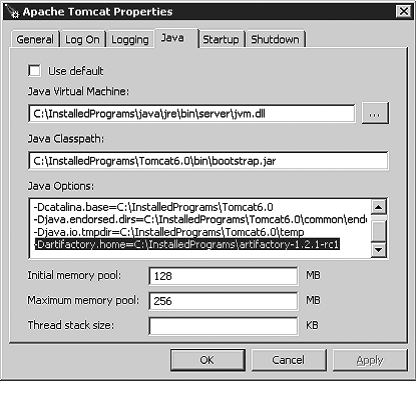

content: