Chapter 26. Using Statistical Functions

In this chapter

Examples of Functions for Descriptive Statistics 642

Examples of Functions for Regression and Forecasting 658

Examples of Functions for Inferential Statistics 682

Using the Analysis Toolpak to Perform Statistical Analysis 711

Statistics in Excel fall into three broad categories:

- Descriptive statistics that describe a dataset—These include measures of central tendency and dispersion.

- Regression tools—These allow you to predict future values based on past values.

- Inferential statistics—This type of statistic allows you to predict the likelihood of an event happening, based on a sample of a population.

Table 26.1 provides an alphabetical list of all of Excel 2007’s statistical functions. Detailed examples of the functions are provided in the remainder of the chapter.

Table 26.1. Alphabetical List of Statistical Functions

Examples of Functions for Descriptive Statistics

Descriptive statistics help describe a population of data. What is the largest? the smallest? the average? Are data points grouped to the left of the average or to the right of the average? How wide is the range of expected values? Do many members of the population have values in the middle, or are they evenly spread throughout the range? All these are measures of descriptive statistics.

Many situations in a business environment involve finding basic information about a dataset such as the largest or smallest values or the rank within a dataset.

Using MIN or MAX to Find the Smallest or Largest Numeric Value

If you have a large dataset and want to find the smallest or largest value in a column, rather than sort the dataset, you can use a function to find the value. To find the smallest numeric value, you use MIN. To find the largest numeric value, you use MAX.

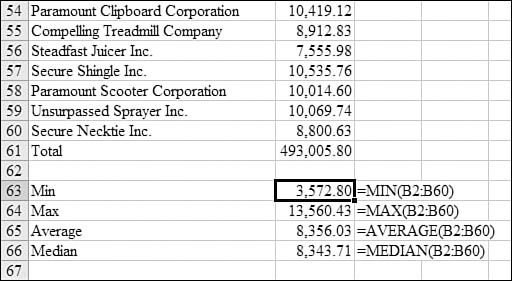

Figure 26.1 shows a list of open receivables, by customer, for 59 customers. Even though the function references says that you can only find the MIN for 255 numbers, a single rectangular reference counts as one of the 255 arguments for the function. To find the smallest value in the range, you use =MIN(B2:B360). To find the largest value in the range, you use =MAX(B2:B360).

Figure 26.1. You use MIN and MAX to find the smallest or largest receivables.

Syntax: =MIN(number1,number2,...)

The MIN function returns the smallest number in a set of values. The arguments number1,number2,... are 1 to 255 numbers for which you want to find the minimum value. You can specify arguments that are numbers, empty cells, logical values, or text representations of numbers. Arguments that are error values or text that cannot be translated into numbers cause errors. If an argument is an array or a reference, only numbers in that array or reference are used. Empty cells, logical values, or text in the array or reference are ignored. If logical values and text should not be ignored, you should use MINA instead. If the arguments contain no numbers, MIN returns 0.

Syntax: =MAX(number1,number2,...)

The MAX function returns the largest value in a set of values. The arguments number1,number2,... are 1 to 255 numbers for which you want to find the maximum value. The remaining rules are similar to those for MIN, described in the preceding section.

Note

If you read the descriptions for MINA and MAXA, you might think that the functions can be used to find the smallest text value in a range. However, here is the Excel Help description for MAXA:

MAXA(value1,value2) returns the largest value in a list of arguments. Text and logical values such as TRUE and FALSE are compared as well as numbers.

The problem, however, is that text values are treated as the number 0 in the compare. It is a struggle to imagine a scenario where this would be mildly useful. If you have a series of positive numbers and want to know if any of them are text, you can use =MINA(A1:A99). If the result is 0, then you know that there is a text value in the range.

Similarly, if you have a range of negative numbers in A1:A99, you could use =MAXA(A1:A99). If any of the values are text, the result will return 0 instead of a negative number.

MINA and MAXA could be used to evaluate a series of TRUE/FALSE values. FALSE values are treated as 0. TRUE values are treated as 1.

Using LARGE to Find the Top N Values in a List of Values

The MAX function discussed in the preceding section finds the single largest value in a list. Sometimes, it is interesting to find the top 10 values in a list. Say that with a list of customer receivables, someone in accounts receivable may want to call the top 10 receivables in an attempt to collect the accounts. The LARGE function can find the first, second, third, and so on largest values in a list.

Syntax: =LARGE(array,k)

The LARGE function returns the kth largest value in a dataset. You can use this function to select a value based on its relative standing. For example, you can use LARGE to return a highest, runner-up, or third-place score. This function takes the following arguments:

array—This is the array or range of data for which you want to determine thekth largest value. Ifarrayis empty,LARGEreturns a#NUM!error.k—This is the position (from the largest) in the array or cell range of data to return. Ifkis less than or equal to 0 or ifkis greater than the number of data points,LARGEreturns a#NUM!error.

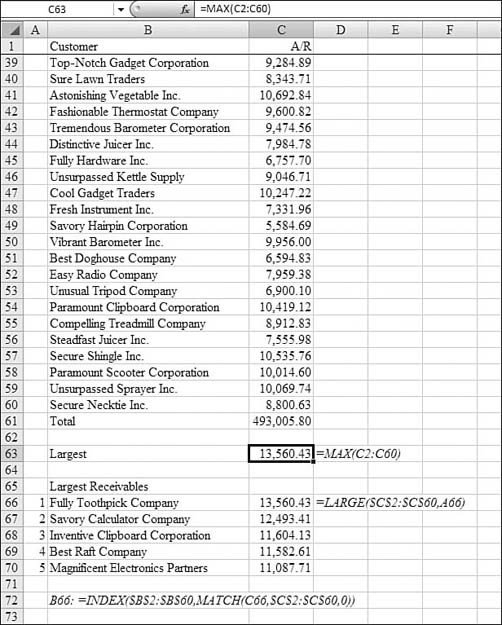

You follow these steps to build a table of the five largest customer receivables:

- Make the second argument of the function the numbers

1through5. Starting from the dataset shown in Figure 26.1, insert a new Column A to hold the values1through5. - In A66:A70, enter the numbers

1through5. - In the column letters above the grid, grab the line between Columns A and B. Drag to the left to make this column narrower. It should be just wide enough to display the numbers in Column A.

- In Column C, Row 66, enter

=LARGE(. Use the mouse or arrow keys to highlight the range of data. After highlighting the data, press the F4 key to add dollar signs to the reference. This allows you to copy the reference to the next several rows while always pointing at the same range. - For the second argument, point to the 1 in Cell A66. Leave this reference as relative (that is, no dollar signs) so that it will change to A67, A68, and so on when copied. The first formula in Cell C66 indicates that the largest value is 13,560.43. So far, you’ve done a lot of work just to find out the same thing that the

MAXfunction could have told you. However, the power comes in the next step. - Select Cell C66. Click the fill handle and drag down to Cell C70. You now have a list of the top five open receivables.

- At this point, you know the amounts of the top receivables, but this immediately brings up the question of which customers have those receivables. Using lookup functions discussed in Chapter 24, “Using Financial Functions,” you can retrieve the name associated with each receivable amount. Note that this method assumes that no two customers in the top five have exactly the same receivable.

- Enter the following intermediate formula in Cell B66:s

=MATCH(C66,$C$2:$C$60,0). This formula tells Excel to take the receivable value in Cell C66 and to find it in the list of open receivables. TheMATCHfunction returns the row number within C2:C60 that has the matching value. For example,13,560.43is found in Cell C9. This is the eighth row in the range of C2:C60, soMATCHreturns the number8. - Finding out that the largest receivable in the eighth row of a range is not useful to a person trying to collect accounts receivables, so to return the name, ask for the eighth value in the range of B2:B66. You can use the

INDEXfunction to do this.=INDEX($B$2:$B$66,8)returns the customer with the largest receivable. - Combine the formulas from step 8 and step 9 into a single formula in Cell B66:

=INDEX($B$2:$B$60,MATCH(C66,$C$2:$C$60,0)). - Copy the formula in Cell B66 down through Cell B70.

As shown in Figure 26.2, the result is a table in A66:A70 that shows the five largest customers. After receiving checks today, you can update the receivable amounts in C2:C60. If Best Raft sent in a check for $10,000, the formulas would automatically move Magnificent Electronics up to the fourth position and move the sixth customer up to the fifth spot.

Figure 26.2. The LARGE function in Column C allows this dynamic table to be built to show the five largest problems.

Using SMALL to Sequence a List in Date Sequence

The MIN function finds the smallest value in a dataset. The SMALL function can find the kth smallest value. This can be great for finding not just the smallest value but the second-smallest, third-smallest, and so on. If n is the number of data points in an array, SMALL(array,1) equals the smallest value, and SMALL(array,n) equals the largest value.

Syntax: =SMALL(array,k)

The SMALL function returns the kth smallest value in a dataset. You use this function to return values with a particular relative standing in a dataset. array is an array or a range of numeric data for which you want to determine the kth smallest value. If array is empty, SMALL returns a #NUM! error. k is the position (from the smallest) in the array or range of data to return. If k is less than or equal to 0 or if k exceeds the number of data points, SMALL returns a #NUM! error.

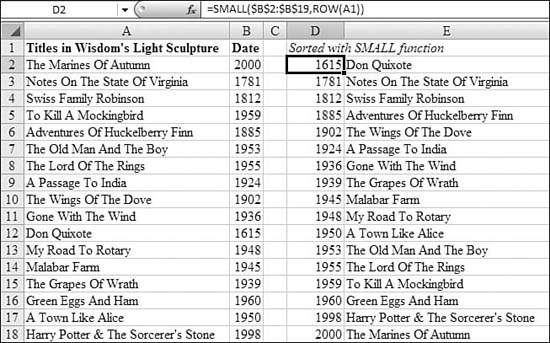

In Figure 26.3, range A2:B19 contains a list of book titles and their publication dates. To find the earliest dates for the books, you use =SMALL().

Figure 26.3. The SMALL function in Column D finds the earliest years in the list.

This example contains a twist that makes the formula easier than in the example for LARGE. In the initial formula in Cell D2, the argument for k was generated using ROW(A1). This function returns the number 1. As the formula is copied from Cell D2 down to the remaining rows, the reference changes to ROW(A2) and so on. This allows each row in Column D to show a successively larger value from array.

The formula in Cell D2 is =SMALL($B$2:$B$19,ROW(A1)). After you have found the year in Column D, the formula in Cell E2 to return the title is =INDEX($A$2:$A$19,MATCH(D2,$B$2:$B$19,0)).

Using MEDIAN, MODE, and AVERAGE to Find the Central Tendency of a Dataset

There are three popular measures to use when trying to find the middle scores in a range:

- Mean—The mean of a dataset is the mathematical average. It is calculated by adding all the values in the range and dividing by the number of values in the set. To calculate a mean in Excel, you use the

AVERAGEfunction. - Median—The median of a dataset is the value in the middle when the set is arranged from high to low. In the dataset, half the values are higher than the median and half the numbers are lower than the median. To calculate a median in Excel, you use the

MEDIANfunction. - Mode—The mode of a dataset is the value that happens most often. To calculate a mode in Excel, you use the

MODEfunction.

Syntax: =AVERAGE(number1,number2,...)

The AVERAGE function returns the average (that is, arithmetic mean) of the arguments. The arguments number1,number2,... are 1 to 255 numeric arguments for which you want the average. The arguments must be either numbers or names, arrays, or references that contain numbers. If an array or a reference argument contains text, logical values, or empty cells, those values are ignored; however, cells containing the value 0 are included.

Caution

When averaging cells, keep in mind the difference between empty cells and those that contain the value 0. This can be particularly troubling if you have unchecked the Show a Zero in Cells That Have a Zero Value setting. You find this setting by clicking the Office icon and then selecting Excel Options, Advanced, Display Options for This Worksheet.

Syntax: =MEDIAN(number1,number2,...)

The MEDIAN function returns the median of the given numbers. The median is the number in the middle of a set of numbers; that is, half the numbers have values that are greater than the median and half have values that are less. If there is an even number of numbers in the set, then MEDIAN calculates the average of the two numbers in the middle.

The arguments number1, number2,... are 1 to 255 numbers for which you want the median. The arguments should be either numbers or names, arrays, or references that contain numbers. Microsoft Excel examines all the numbers in each reference or array argument. If an array or a reference argument contains text, logical values, or empty cells, those values are ignored; however, cells that contain the value 0 are included.

Syntax: =MODE(number1,number2,...)

The MODE function returns the most frequently occurring, or repetitive, value in an array or a range of data. Like MEDIAN, MODE is a location measure. In a set of values, the mode is the most frequently occurring value; the median is the middle value; and the mean is the average value. No single measure of central tendency provides a complete picture of the data. Suppose data is clustered in three areas, half around a single low value, and half around two large values. Both AVERAGE and MEDIAN may return a value in the relatively empty middle, and MODE may return the dominant low value.

The arguments number1, number2,... are 1 to 255 arguments for which you want to calculate the mode. You can also use a single array or a reference to an array instead of arguments separated by commas. The arguments should be numbers, names, arrays, or references that contain numbers. If an array or reference argument contains text, logical values, or empty cells, those values are ignored; however, cells that contain the value 0 are included. If the dataset contains no duplicate data points, MODE returns a #N/A error.

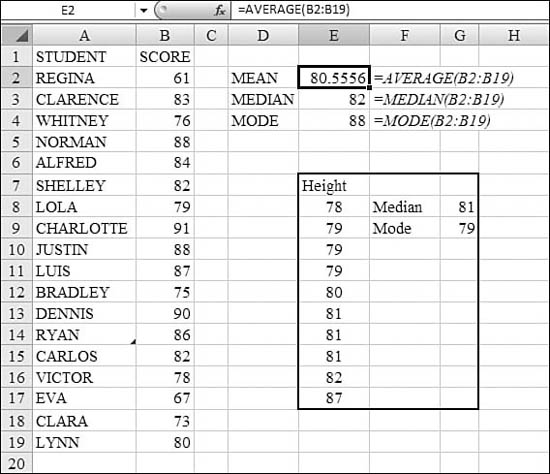

Figure 26.4 shows examples of AVERAGE, MEAN, and MODE. Cell E2 calculates the arithmetic mean of the test scores in Column B: 80.55. The median in Cell E3 is higher: 82. This means that half the students scored above 82 and half scored below 82. The mode in Cell E4 is 88. This is because 88 was the only score that appeared more than once in the class.

Figure 26.4. AVERAGE, MEDIAN, and MODE all describe the central tendencies of a dataset.

The range in E17:G17 demonstrates two anomalies with the median and mode. In this case, there are an even number of entries—10. It is impossible to figure out a median in this case, so Excel takes the average of the two values in the middle—80 and 81—to produce 80.5. This is the only situation in which the median is not a value from the table.

The table contains the height, in inches, of several members of the Cleveland Cavaliers. In this dataset, three players are 79 inches tall, and three players are 81 inches tall. Either answer qualifies as the value that happens most often. Thus, either answer could be the mode. In this case, MODE returns the first of these values it encounters in the dataset. If E7:E17 were sorted high to low, the MODE would report 81 inches.

Using TRIMMEAN to Exclude Outliers from the Mean

Sometimes a dataset includes a few outliers that radically skew the average. For example, say you have a list of gross margin percentages. Most percentages fall in the 45% to 50% range, but there was one deal where for customer satisfaction reasons, the product was given away at a loss. This one data point would skew the average unusually low.

The TRIMMEAN function takes the mean of data points but excludes the n% highest and lowest values. You have to use some care in expressing the n%.

Syntax: =TRIMMEAN(array,percent)

The TRIMMEAN function returns the mean of the interior of a dataset. TRIMMEAN calculates the mean taken by excluding a percentage of data points from the top and bottom tails of a dataset. You can use this function when you want to exclude outlying data from your analysis. This function takes the following arguments:

array—This is the array or range of values to trim and average.percent—This is the fractional number of data points to exclude from the calculation. For example, ifpercentis0.2, 4 points are trimmed from a dataset of 20 points (that is, 20 × 0.2): 2 from the top and 2 from the bottom of the set.

If percent is less than 0 or percent is greater than 1, TRIMMEAN returns a #NUM! error. TRIMMEAN rounds the number of excluded data points down to the nearest multiple of 2. If percent equals 0.1, 10% of 30 data points equals 3 points. For symmetry, TRIMMEAN excludes a single value from the top and bottom of the dataset.

Using GEOMEAN to Calculate Average Growth Rate

Say that your 401(k) plan is invested in a stock market index fund. The stock market goes up 5%, 40%, and 15% in three successive years. Taking the average of these numbers might lead someone to believe that the average increase was 20% per year. This is not correct. The growth rates are all multiplied together to find an ending value of your investment. To find the average growth rate, you need to find a number that, when multiplied together three times, yields the same result as 105% × 140% × 115%. You can calculate this by using GEOMEAN.

To find the geometric mean of 10 numbers, you multiply the 10 numbers together and raise the sum to the 1/10 power. Excel lets you do this quickly with GEOMEAN.

Syntax: =GEOMEAN(number1,number2,...)

The GEOMEAN function returns the geometric mean of an array or a range of positive data. For example, you can use GEOMEAN to calculate average growth rate, given compound interest with variable rates.

The arguments number1,number2,... are 1 to 255 arguments for which you want to calculate the mean. You can also use a single array or a reference to an array instead of arguments separated by commas.

The arguments must be either numbers or names, arrays, or references that contain numbers. If an array or a reference argument contains text, logical values, or empty cells, those values are ignored; however, cells that contain the value 0 are included. If any data point is less than or equal to 0, GEOMEAN returns a #NUM! error.

Using HARMEAN to Find Average Speeds

The typical averaging function fails when you are measuring speeds over a period of time. Say that your exercise regimen is 5 minutes of walking at 2 mph, 25 minutes of running at 5 mph, and then 10 minutes of jogging at 3 mph. If you took the average of (2, 5, 5, 5, 5, 5, 3, 3), you would assume that you averaged 4.125 miles per hour.

The actual calculation for average speed would be to take the reciprocals of each speed, average those values, and then take the reciprocal of the result. In the exercise example, you would average (½, ![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() ,

, ![]() ) to obtain

) to obtain ![]() . The you would take the reciprocal,

. The you would take the reciprocal, ![]() , to find the actual average speed of 3.69 mph.

, to find the actual average speed of 3.69 mph.

Syntax: =HARMEAN(number1,number2,...)

The HARMEAN function returns the harmonic mean of a dataset. The harmonic mean is the reciprocal of the arithmetic mean of reciprocals. The arguments number1,number2,... are 1 to 255 arguments for which you want to calculate the mean. You can also use a single array or a reference to an array instead of arguments separated by commas.

The arguments must be either numbers or names, arrays, or references that contain numbers. If an array or a reference argument contains text, logical values, or empty cells, those values are ignored; however, cells that contain the value 0 are included. If any data point is less than or equal to 0, HARMEAN returns a #NUM! error. The harmonic mean is always less than the geometric mean, which is always less than the arithmetic mean.

Using RANK to Calculate the Position Within a List

There are times when you need to determine the order of values but you are not allowed to sort the data. The RANK function helps with this task. However, there is an anomaly with the function that you should understand.

Let’s say five bowlers scored 187, 185, 185, 170, and 160. The traditional way to rank the players is that two players would have a rank of 2, and the next player would have a rank of 4. There would be no one ranked number 3. Although this is technically correct, it can cause problems if you have lookup values expecting to find a person ranked number 3. The example at the end of this section explains how to overcome such a situation.

Syntax: =RANK(number,ref,order)

The RANK function returns the rank of a number in a list of numbers. The rank of a number is its size relative to other values in a list. (If you were to sort the list, the rank of the number would be its position.) This function takes the following arguments:

number—This is the number whose rank you want to find.ref—This is an array of, or a reference to, a list of numbers. Nonnumeric values in ref are ignored.order—This is a number that specifies how to ranknumber. For a value of0or if this argument is omitted, Excel ranksnumberas ifrefwere a list sorted in descending order. Iforderis any nonzero value, Excel ranksnumberas if ref were a list sorted in ascending order.

RANK gives duplicate numbers the same rank. However, the presence of duplicate numbers affects the ranks of subsequent numbers. For example, in a list of integers, if the number 10 appears twice and has a rank of 5, then 11 would have a rank of 7 (no number would have a rank of 6).

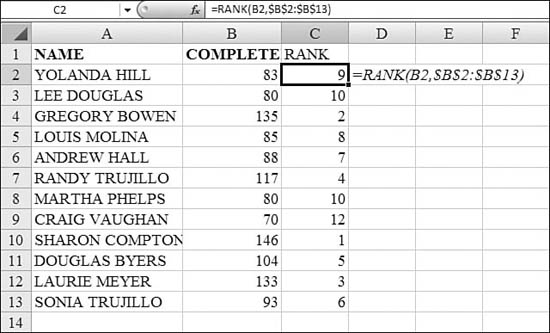

In Figure 26.5, Column B contains a list of scores. The formula for Cell C2 is =RANK(B2,$B$2:$B$13). Notice that the third argument is omitted, so the highest score will be ranked as number 1. Also notice that the second argument is marked as absolute so that the formula can be copied, and it will always point to the same ref range.

Figure 26.5. In this case, RANK works okay. Two students have a rank of 10, and no one is ranked 11.

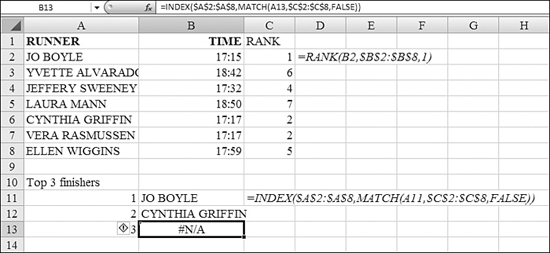

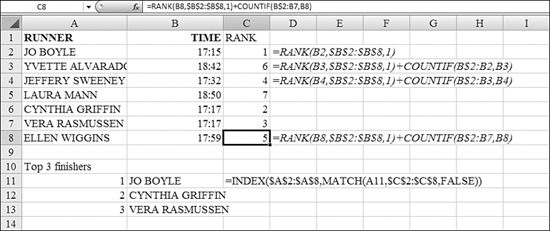

In Figure 26.6, the values in Column B are times in a cross-country race. In this case, the lowest rank should go to the fastest score. The formula in Cell C2 is =RANK(B2,$B$2:$B$8,1). Note that there is a third argument to specify that the lowest value should be ranked 1. In this case, however, there was a tie. The runners in Cells C6 and C7 both had the same time. The RANK function gives both of these values a rank of 2. This causes a problem in Row 13. This table is trying to identify the top three finishers by using lookup formulas. Because no one is ranked number 3, an error occurs.

Figure 26.6. Two runners tied with the same score. This causes the lookup formulas in Row 13 to never find a match.

The generally accepted solution is to use the RANK function and add the COUNTIF of how many times this value occurred previously in the list.

In Figure 26.7, examine the formula in Cell C8. COUNTIF asks how many times the value in Cell B8 was found in B$2:B7. This final reference is an interesting reference. It tells Excel to count always from Row 2 down to the row above the current row. It is easier to build this formula in the final cell of the column and then copy it upwards.

Figure 26.7. You use a COUNTIF to break ties.

Using QUARTILE to Break a Dataset into Quarters

Use QUARTILE to divide populations into groups.

Syntax: =QUARTILE(array,quart)

The QUARTILE function returns the quartile of a dataset. Quartiles are often used in sales and survey data to divide populations into groups. For example, you can use QUARTILE to find the top 25% of incomes in a population. This function takes the following arguments:

array—This is the array or cell range of numeric values for which you want the quartile value. Ifarrayis empty,QUARTILEreturns a#NUM!error.quart—This indicates which value to return. You use0for the minimum value,1for the first quartile (25th percentile),2for the median value (50th percentile),3for the third quartile (75th percentile), and4for the maximum value. Ifquartis not an integer, it is truncated. Ifquartis less than 0 or if quart is greater than 4,QUARTILEreturns a#NUM!error.

MIN, MEDIAN, and MAX return the same value as QUARTILE when quart is equal to 0, 2, and 4, respectively.

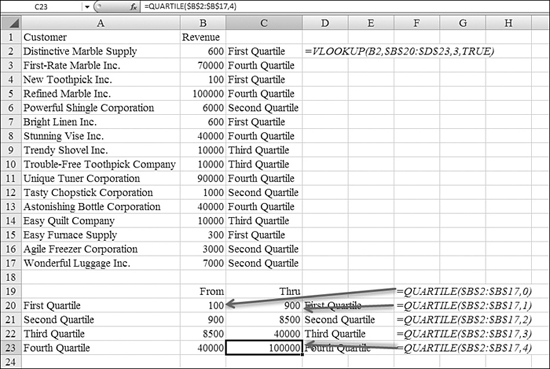

In Figure 26.8, the formulas in B20:C23 break out the limits for each quartile. The formula in Cell B20 is =QUARTILE($B$2:$B$17,0) to find the minimum value. The formula in Cells C20 and B21 is =QUARTILE($B$2:$B$17,1) to define the end of the first quartile and the start of the second quartile.

Figure 26.8. The QUARTILE function can break up a dataset into four equal pieces.

After the QUARTILE functions build the table in B20:C23, the VLOOKUP function returns the text in C2:C17. The formula in Cell C2 is =VLOOKUP(B2,$B$20:$D$23,3,TRUE).

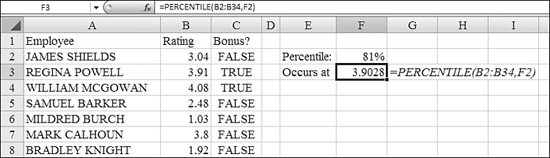

Using PERCENTILE to Calculate Percentile

The QUARTILE function is fine if you are trying to find every record that is in the top 25% of a range. Sometimes, however, you need to find some other percentile. For example, all employees ranked above the 81st percentile may be eligible for a bonus this year. You can use the PERCENTILE function to determine the threshold for any percentile.

Syntax: =PERCENTILE(array,k)

The PERCENTILE function returns the kth percentile of values in a range. You can use this function to establish a threshold of acceptance. For example, you can decide to examine candidates who score above the 90th percentile. This function takes the following arguments:

array—This is the array or range of data that defines relative standing. Ifarrayis empty,PERCENTILEreturns a#NUM!error.k—This is the percentile value in the range 0...1, inclusive. Ifkis nonnumeric,PERCENTILEreturns a#VALUE!error. Ifkis less than 0 or ifkis greater than 1,PERCENTILEreturns a#NUM!error. Ifkis not a multiple of 1 / (n – 1),PERCENTILEinterpolates to determine the value at thekth percentile.

In Figure 26.9, there are 33 employees in Column A. Their ratings on an annual review are shown in Column B. The formula in Cell F3, =PERCENTILE(B2:B34,F2), calculates the level of the 81st percentile. After you determine the particular percentile, you can mark all the qualifying employees by using the formula =B2>=$F$3 in cells C2:C33.

Figure 26.9. Unlike QUARTILE, the PERCENTILE function can determine the breaking point for any particular percentile.

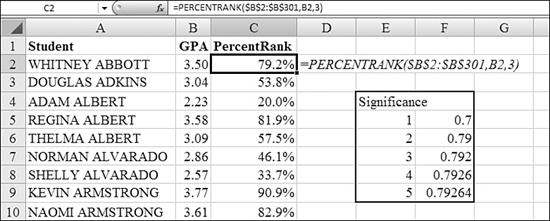

Using PERCENTRANK to Assign a Percentile to Every Record

Say that you have a database of students in a graduating class. Each student has a certain grade point average. To determine each student’s standing in the class, you use the PERCENTRANK function.

Syntax: =PERCENTRANK(array,x,significance)

The PERCENTRANK function returns the rank of a value in a dataset as a percentage of the dataset. This function can be used to evaluate the relative standing of a value within a dataset. For example, you can use PERCENTRANK to evaluate the standing of an aptitude test score among all scores for the test. This function takes the following arguments:

array—This is the array or range of data with numeric values that defines relative standing. Ifarrayis empty,PERCENTRANKreturns a#NUM!error.x—This is the value for which you want to know the rank. Ifxdoes not match one of the values inarray,PERCENTRANKinterpolates to return the correct percentage rank.significance—This is an optional value that identifies the number of significant digits for the returned percentage value. If it is omitted,PERCENTRANKuses three digits (that is, 0.xxx). If significance is less than 1,PERCENTRANKreturns a#NUM!error.

This function is slightly different from RANK, so use caution. Typically, RANK and other functions would ask for x as the first argument and array as the second argument. If you use this function and everyone is assigned to the 100% level, you might have reversed the arguments. The Excel Help is a bit misleading with regard to significance. The Help topic indicates that a significance of 3 generates a value accurate to 0.xxx%. In fact, a significance of 3 returns xx.x%.

In Figure 26.10, the students’ GPAs are in B2:B301. The rank for the first student is =PERCENTRANK($B$2:$B$301,B2,3). Note that PERCENTRANK always starts with the lowest score at the lowest percentile. To find the top students in the class, you use a conditional format to highlight the students with percentiles above 90%.

Figure 26.10. The PERCENTRANK function assigns percentile values to an array of values.

The table in E4:F9 shows the actual behavior of the significance argument. The values in Column F show the PERCENTRANK of Cell B2 to the significance in Column E. You can see that the student ranked at the 79.2th percentile is in the 70th percentile when the significance is 1. A significance of 1 would assign 30 records to be at the 70th percentile.

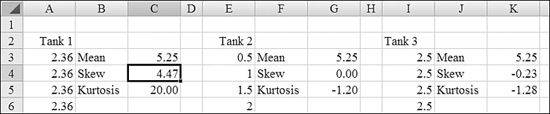

Using AVEDEV, DEVSQ, VAR, and STDEV to Calculate Dispersion

Functions such as AVERAGE tell you about the center of a range of data. Seeing the center is not always the entire picture. The other key element of descriptive statistics is dispersion. If you have a population, the average height might be x. If you look at dispersion, you can find out if every member of the population is tightly grouped around the average or if there is wide variability.

Here are several measures of dispersion:

- Average deviation is calculated by measuring the absolute difference of each data point from the mean and then averaging these values. Say the values in a population are 12, 14, 16, 18, and 20. The mean is 16. Average deviation adds up 4, 2, 0, 2, and 4 and divides the total by 5 to yield 2.4. Excel offers

AVEDEVto calculate this. - Average deviation is not perfect. Say that you have another population of 11, 15, 16, 17, and 21. Again, the mean is 16. The average deviation averages 5, 1, 0, 1, and 5 to yield an average deviation of 2.4. If you want to measure how far from the mean the points range, you can add up the squares of each deviation. In this case, the square of 5 is 25, and it indicates more dispersion than the square of 4. Excel offers

DEVSQto calculate the squares of each deviation. - Variance is a common measurement of dispersion. It averages the square deviations to come up with the variance of a dataset. Here is the one odd thing about variance: Say that you have 20 measurements, and they represent the entire population (for example, the 20 fish in an aquarium). In this case, you divide

DEVSQby 20 to calculate the variance. You useVARPin Excel to do this. However, if your 20 values are a random sample, then variance is calculated by dividingDEVSQby 20 – 1, or 19. You useVARin Excel to calculate this. - The measurement for variance is a square, right? You took all the deviations, squared them, and then averaged (or nearly averaged) them. The final popular measure of dispersion is calculated by taking the square root of the variance. This number is called standard deviation. Excel offers two functions for standard deviation. You use

STDEVPif your dataset represents the entire population, and you useSTDEVif your dataset represents only a sample of the population.

There are many theories about standard deviation. One rule of thumb says that 95% of a population will be located within two standard deviations of the mean. If you extend your range to within three standard deviations of the mean, that range should encompass 99.7% of the population.

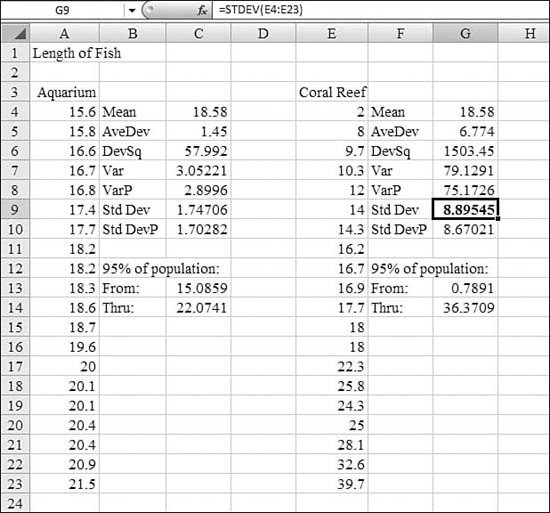

Figure 26.11 shows the lengths of fish. Column A contains the lengths of all 20 fish in one particular tank at a science museum. Column E contains the lengths of 20 random fish observed while snorkeling at a coral reef. Both groups have a mean value of 18.58 inches, as shown in Cells C4 and G4.

Figure 26.11. Although the averages are the same, the dispersion measurements paint a different picture of these populations.

The fish in the museum tank have an average deviation of 1.45 inches from the mean. Cells C6, C8, and C10 walk through the calculation of squares of deviation, variance, and standard deviation. The theory about standard deviation says that of the fish in the tank, 95% will occur between 15.08 inches and 22.07 inches.

The fish at the coral reef have an average deviation of 6.7 inches from the mean. Cells G6, G7, and G9 walk through the calculation of squares of deviation, variance, and standard deviation. The theory about standard deviation says that of the fish at the coral reef, 95% will be between 0.78 inches and 36.37 inches long.

Comparing these two results helps you to picture the likely populations of both locations. Although both have the same mean size, the variety of fish (that is, the measure of dispersion) at the coral reef is much higher than that at the aquarium.

Syntax: =AVEDEV(number1,number2,...)

The AVEDEV function returns the average of the absolute deviations of data points from their mean. AVEDEV is a measure of the variability in a dataset. AVEDEV is influenced by the unit of measurement in the input data.

Syntax: =DEVSQ(number1,number2,...)

The DEVSQ function returns the sum of squares of deviations of data points from their sample mean.

Syntax: =VAR(number1,number2,...)

The VAR function estimates variance based on a sample.

Syntax: =VARP(number1,number2,...)

The VARP function calculates variance based on the entire population.

Syntax: =STDEV(number1,number2,...)

The STDEV function estimates standard deviation based on a sample. The standard deviation is a measure of how widely values are dispersed from the average value (that is, the mean). The standard deviation is calculated using the “nonbiased” or “n – 1” method.

Syntax: =STDEVP(number1,number2,...)

The STDEVP function calculates standard deviation based on the entire population, given as arguments. The standard deviation is a measure of how widely values are dispersed from the average value (that is, the mean). STDEVP assumes that its arguments are the entire population. If your data represents a sample of the population, you can compute the standard deviation by using STDEV. For large sample sizes, STDEV and STDEVP return approximately equal values. The standard deviation is calculated using the “biased” or “n” method.

The arguments number1, number2,... are 1 to 255 arguments for which you want the average of the absolute deviations. You can also use a single array or a reference to an array instead of arguments separated by commas. The arguments must be either numbers or names, arrays, or references that contain numbers. If an array or a reference argument contains text, logical values, or empty cells, those values are ignored; however, cells that contain the value 0 are included.

Logical values (TRUE/FALSE) are ignored in the STDDEV and STDDEVP calculations. There are some statistics for which you need to figure out how many people answered TRUE to a question. In order to count TRUE values as 1 and FALSE values as 0, you use VARA, VARPA, STDEVA, and STDEVPA versions of those four functions.

Examples of Functions for Regression and Forecasting

Regression analysis allows you to predict the future, based on past events. Say that you have observed total sales for the past several years. Regression analysis finds a line that best fits the past data points. You can then use the description of that line to predict results for the future data points.

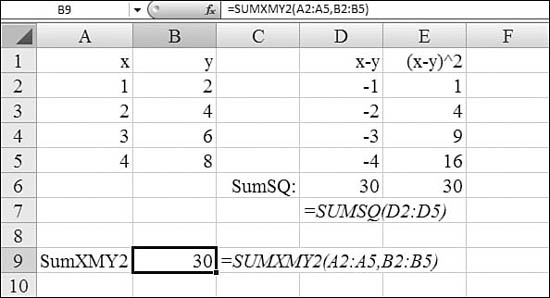

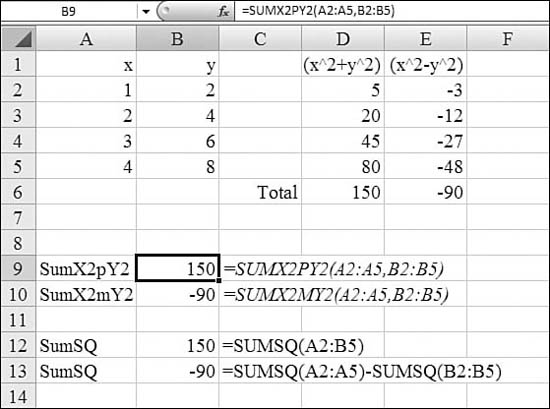

Regression works by finding a line that can best be drawn through existing data points. In real-life data, the data points aren’t arranged exactly in a line. Any line that the computer draws will have errors at any data point. Regression finds the line that minimizes the errors at each data point.

Consider the error in a regression line. The actual data point in Year 1 might by higher than the regression line by 2. In Year 2, the data might be lower by 1, and in Year 3 it might be lower by 1. If you added up these three errors, you would have an error of 0. This is a bad method. If you used this method to judge a line with errors of +400, –300, –100, it would also add up to an error of 0.

Instead, the regression engine sums the square of each error. In this case, the first line would have an error of 2^2 + –1^2+ –1^2 or 4 + 1 + 1, or 6. The second line would have an error of 400^2+ –300^2 + –100^2 or 160,000 + 90,000 + 10,000, or 260,000. With this method, the error for the first line is clearly better than the error for the second line. This method is called the least-squares method.

You might wonder why regression doesn’t add the absolute value of each error. Ideally, the errors around the regression line should be narrow. A line with errors of –4, +4, –4, +4 would results in a sum of squares of 64. A line with errors of –7, 1, 7, –1 would result in a sum of squares of 100. The sum of squares method would deem the earlier line to be better, while using absolute values would call them equal.

You need to consider one question before doing regression analysis. First, is the data series growing linearly or exponentially? Sales for a company might grow linearly. The number of bacteria cells in a Petri dish might grow exponentially. You use LINEST and TREND to predict sales that are growing linearly. You use LOGEST and GROWTH to predict bacteria that are growing exponentially.

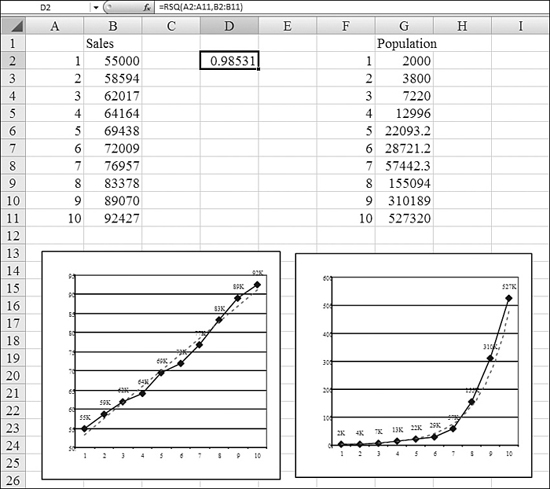

In Figure 26.12, the chart on the left shows sales over time. These sales are growing linearly and could probably be predicted fairly well by a straight line. The dotted line in the chart is the straight-line regression for the dataset. Although each data point is either above or below the regression line, the error at any given data point is fairly small.

Figure 26.12. These two datasets can be accurately predicted using regression.

The chart on the right shows an exponential growth curve. In this chart, the dotted line shows the regression line plotted using LOGEST. Again, although the dotted line does not correlate exactly with the actual data points, it is fairly close.

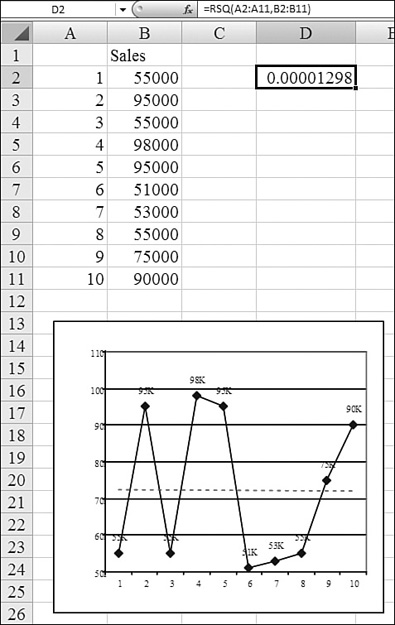

Here is the problem: Regression always finds a line to fit your dataset. In Figure 26.13, there is no apparent correlation between sales and time. Each year, the sales fluctuate wildly up or down. If you asked Excel to use regression, it would gladly predict the dotted line shown in the graph. The problem is that this line has no predictive ability. If you base your future sales on this line, you will get results that will vary greatly from the prediction.

Figure 26.13. This dataset has no correlation to time. LINEST happily predicts a line, but it is severely wrong most of the time.

Part of the results of regression analysis are statistics that tell how well the regression line fits the actual data. You should always check statistics such as r-squared or the standard error to see if the past data shows a relationship between the variables. The r-squared value is a value between 0 and 1. The closer that r-squared is to 1, the better the regression line. The r-squared for the left chart in Figure 26.12 is 0.985. The r-squared for the chart in Figure 26.13 is 0.000001, indicating that there is no correlation.

When you have data like the data in Figure 26.13, it does not mean that you cannot use regression analysis. It means that you need to think about the data to see if other factors could help describe the data. Let’s say that the data represents sales of squares of roofing shingles in Florida. If you add data to the chart that describes the number of category 3+ hurricanes making landfall each year, the sales numbers begin to make sense. The r-squared for predicting sales based on year is nearly 0. The r-squared for predicting sales based on hurricanes is 0.987. Since an r-squared of 1 means almost perfect correlation, you could base prediction of sales on a forecast of hurricanes.

For all the following regression functions, the arguments list generally includes these two arguments (for brevity, they are described here once):

known_y's—This is an array or a cell range of numeric dependent data points. This is the range of data that you want to predict. It might be the actual sales for the past several years or the population of bacteria for the past several hours.known_x's—This is the set of independent data points. These are the values that you think will lead to a prediction of the y values. For a simple time series, this might be a list of year numbers. It might be a list of other independent data points, such as the number of hurricanes making landfall each year.

The arguments must be either numbers or names, arrays, or references that contain numbers. If an array or a reference argument contains text, logical values, or empty cells, those values are ignored; however, cells that contain the value 0 are included. If known_y's and known_x's are empty or have a different number of data points, the function returns a #N/A error.

Functions for Simple Straight-Line Regression: SLOPE and INTERCEPT

With many things in Excel, there is a right way to do something. However, sometimes the powers-that-be decide that the right way is too difficult for Excel customers, so they offer alternative, easier ways to solve problems.

The LINEST function is powerful, and using it is the right way to calculate straight-line regression. However, because the LINEST function returns an array of values, it seemed too difficult, so Microsoft also offers the SLOPE and INTERCEPT functions to retrieve the key results from LINEST.

In mathematical terms, a line is described as y = mx + b:

- y—This is the value you are trying to predict. It could be sales for a given year.

- b—This is called the y-intercept. This is the base level of sales that you can count on year after year after year.

- m—This is the slope of the line. If your sales are going up by 1,000 per year, the slope is 1,000. If your sales are going up by 100,000 per year, the slope is 100,000.

- x—This is a point along the x-axis. In a problem where you are measuring sales over a span of several years, you can assign year numbers 1, 2, 3, and so on to each year. x then corresponds to a year number.

If you have a series of year numbers and sales for each year, you need to calculate both the SLOPE and INTERCEPT in order to describe the line.

Syntax: =SLOPE(known_y's,known_x's)

The SLOPE function returns the slope of the linear regression line through data points in known_y's and known_x's. The slope is the vertical distance divided by the horizontal distance between any two points on the line; in other words, it is the rate of change along the regression line.

Syntax: =INTERCEPT(known_y's,known_x's)

The INTERCEPT function calculates the point at which a line intersects the y-axis by using existing x values and y values. The intercept point is based on a best-fit regression line plotted through the known x values and known y values. You use the intercept when you want to determine the value of the dependent variable when the independent variable is 0.

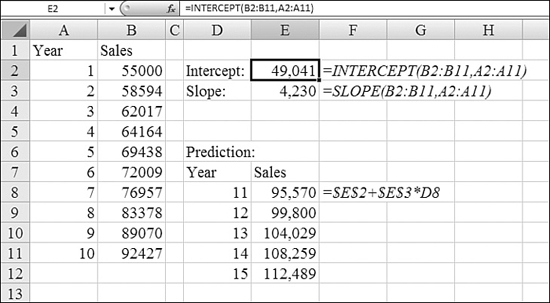

In Figure 26.14, the sales in B2:B11 are the dependent variables. In the language of Excel, these are the known_y's. You are predicting that sales are increasing linearly over time. The year numbers in A2:A11 are the independent variables. In the language of Excel, these are the known_x's.

Figure 26.14. Using the SLOPE and INTERCEPT functions is a simple way to calculate a linear regression line.

The formula in Cell E2 calculates the intercept for the line by using =INTERCEPT(B2:B11,A2:A11). The answer of 49,041 means that the model predicts that your sales in a hypothetical Year 0 would have been 49,041.

The formula in Cell E3 calculates the slope of the line by using =SLOPE(B2:B11,A2:A11). The answer of 4,230 means that the model predicts that your sales are increasing by about 4,230 each year.

When you have the slope and y-intercept, you can build a new table to predict future sales. You enter year numbers 11 through 15 in D8:D12. The formula in Cell E8 needs to multiply the year number by the slope and add the intercept. That formula is =$E$2+$E$3*D8.

The values in Cells E8 through E12 are one prediction of future sales. This assumes that the past trends continue to work over the next five years.

Using LINEST to Calculate Straight-Line Regression with Complete Statistics

Although SLOPE and INTERCEPT would do the job, the more powerful function is LINEST. Here is the difficulty: LINEST returns both the slope and the intercept. In addition, it returns a whole series of statistics. Anytime a function returns several values, you must enter the function by using Ctrl+Shift+Enter. You should also select a large enough range in advance before entering the formula. Figuring out the size of the range in advance is difficult because it varies, depending on the shape of the independent variables and also whether you ask for statistics.

However, LINEST is far more powerful than SLOPE and INTERCEPT. There are additional arguments available in LINEST that are not available in the easier functions.

Syntax: =LINEST(known_y's,known_x's,const,stats)

The LINEST function calculates the statistics for a line by using the least-squares method to calculate a straight line that best fits the data, and it returns an array that describes the line. Because this function returns an array of values, it must be entered as an array formula with Ctrl+Shift+Enter. The equation for the line is y = mx + b or y = m1×1 + m2×2 + ... + b (if there are multiple ranges of x values) where the dependent y value is a function of the independent x values. The m values are coefficients corresponding to each x value, and b is a constant value. Note that y, x, and m can be vectors. The array that LINEST returns is backward from what you would expect. The slope for the last independent variable appears first: {mn,mn-1,...,m1,b}. LINEST can also return additional regression statistics.

The LINEST function takes the following arguments:

known_y's—This is the set of y values you already know in the relationship y = mx + b. If the arrayknown_y'sis in a single column, each column ofknown_x'sis interpreted as a separate variable. If the arrayknown_y'sis in a single row, each row ofknown_x'sis interpreted as a separate variable.known_x's—This is an optional set of x values that you may already know in the relationship y = mx + b. The arrayknown_x'scan include one or more sets of variables. If only one variable is used,known_y'sandknown_x'scan be ranges of any shape, as long as they have equal dimensions. If more than one variable is used,known_y'smust be a vector (that is, a range with a height of one row or a width of one column). Ifknown_x'sis omitted, it is assumed to be the array{1,2,3,...}that is the same size asknown_y's.const—This is a logical value that specifies whether to force the constant b to equal 0. IfconstisTRUEor omitted, b is calculated normally. IfconstisFALSE, b is set equal to 0, and the m values are adjusted to fit y = mx.stats—This is a logical value that specifies whether to return additional regression statistics. IfstatsisTRUE,LINESTreturns the additional regression statistics, so the returned array is{mn,mn-1,...,m1,b;sen,sen-1,...,se1,seb;r2,sey;F,df;ssreg,ssresid}. IfstatsisFALSEor omitted,LINESTreturns only the m coefficients and the constant b. If you specifyTRUEforstats, the additional regression statistics shown in Table 26.2 are possible return values.

Table 26.2. Additional Regression Statistics for LINEST

Figure 26.16, later in this chapter, shows a visual map of the statistics being returned.

The accuracy of the line calculated by LINEST depends on the degree of scatter in the data. The more linear the data, the more accurate the LINEST model. LINEST uses the method of least squares for determining the best fit for the data.

The line- and curve-fitting functions LINEST and LOGEST can calculate the best straight line or exponential curve that fits the data. However, you have to decide which of the two results best fits the data. You can calculate TREND(known_y's,known_x's) for a straight line or GROWTH(known_y's,known_x's) for an exponential curve. These functions, without the known_x's argument, return an array of y values predicted along that line or curve at your actual data points. You can then compare the predicted values with the actual values. You might want to chart them both for a visual comparison.

In regression analysis, Microsoft Excel calculates for each point the squared difference between the y value estimated for that point and its actual y value. The sum of these squared differences is called the residual sum of squares. Microsoft Excel then calculates the sum of the squared differences between the actual y values and the average of the y values, which is called the total sum of squares (that is Regression sum of squares + Residual sum of squares). The smaller the residual sum of squares compared with the total sum of squares, the larger the value of the coefficient of determination, r-squared, which is an indicator of how well the equation resulting from the regression analysis explains the relationship among the variables.

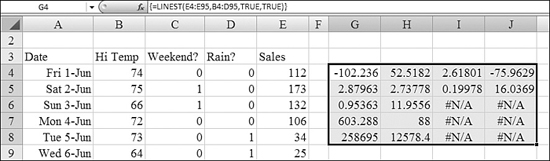

Say that you rent a snowcone cart at a local amusement park. You create a table showing total snow cones sold for each day of last summer. In Figure 26.15, Column E shows the total snow cones sold by day. As you can see, the sales rise and fall sharply from day to day.

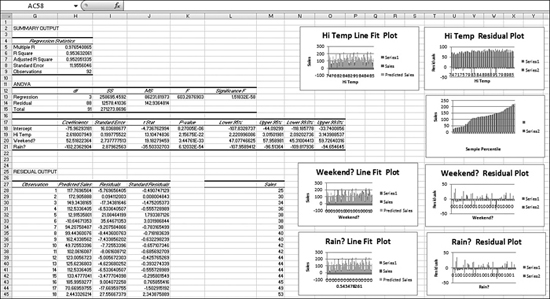

Figure 26.15. The results of the LINEST function in G4:J8 are seemingly meaningless.

The previous manager of the cart had noticed certain trends in the data. Sales were better on the weekends than on weekdays. Sales were horrible when it rained. Sales improved as the weather became hotter in July and August.

Columns B:D in Figure 26.15 contain data related to temperature, weekends, and rain. Note that in Column C, the weekend data is binary data—either 0 or 1. In Column D, the manager could have kept information about the amount of rainfall each day but instead kept this as binary data as well. If the day was predominantly rainy, the manager recorded a 1 to indicate a rainout. If the day had just a spot of rain, the manager recorded it as a non-rainy day.

To perform regression on this data, you follow these steps:

- Total the number of independent variables and add one. This is the number of columns the results of the regression will occupy. In the snow cone cart example, that is four columns.

- Figure out how may rows the result of the regression will occupy. Because you plan on asking for statistics in the snow cone example, this is five rows.

- Off to the side of the data, select a range that is four columns wide by five columns tall. This size is determined by the results of the first two steps.

- Start to type the formula, =

LINEST(. - For the

known_y's, use the sales data in Column E; this would be E4:E95. - For the

known_x's, use the values for temperature, weekend, and rain. This would be B4:D95. Note that the dates in Column A are not being used as an independent variable. The amusement park is an established park: There is nothing to indicate that attendance rises over the course of the season. - User

TRUEfor the next argument, which asks whether the intercept should be forced to be 0. This is not a requirement in the current situation. You want to allow the intercept to be calculated normally. - Use 1 or

TRUEfor thestatsargument. - Although you have now typed the complete formula,

=LINEST(E4:E95,B4:D95,TRUE,TRUE), do not press the Enter key. This is one formula that returns many results. You have to tell Excel to interpret the formula as an array formula. To do this, hold down Ctrl+Shift while pressing Enter. The function returns a seemingly meaningless range of numbers, as shown in Figure 26.15. - Start labeling the regression results in the upper-right corner. The value in the upper-right corner is the y-intercept. This is equivalent to the result of the

INTERCEPTfunction. - Working in the top row from right to left, look at the slopes of the independent variables. These appear backward from how you originally specified them. Your independent variables were temperature, weekend, and rain. The slope for the last independent variable is in the top-left corner of the results. In Figure 26.16, Cell G4 is the slope associated with rain. Cell H4 is the slope associated with weekend. Cell H5 is the slope associated with temperature.

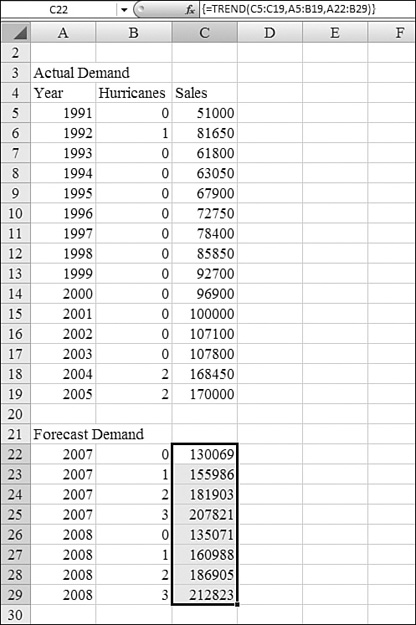

Figure 26.16. When you have the

LINESTresults, there are many more tests and charts that you can perform to test how good the regression model is.

- Take a look at these numbers for a second to see if they make sense. The intercept says you are going to sell –75 snow cones each day. This initially seems wrong. However, the value in Column I says that you will sell 2.6 snow cones for every degree of temperature. Because the lowest minimum high temperature for the summer would be about 60 degrees, the result suggests that you would sell a minimum of (60 × 2.6), or about 156 snow cones, due to temperature. Adding the –75 and 156 gets you to a minimum of 80 snow cones on a sunny day. Cell H4 suggests that you would sell about 52 extra snow cones on a weekend. Cell G4 suggests that you would sell 102 fewer snow cones on a rainy day.

- Fill in the rest of the labels for statistics. The second row of the results shows the standard error for the number above it. The first column of the third row returns the all-important r-squared value. If this value is close to 1, your model is doing a good job of predicting the data. The value of 0.95 shows that this model is fairly good. Row 3, Column 2 shows the standard error of Y. It is normal to have

#N/Ain any additional columns of Row 3. Row 4 contains the F statistic and degrees of freedom. Row 5 contains the sum of squares of the regression and the residual sum of squares. This is the number that Excel is trying to minimize when it fits the line using least squares. - In Column L, build a formula to predict sales with the results of the regression. This formula would be Intercept + Slope temp × Temp + Slope weekend × Weekend + Slope rain × Rain. The formula in Cell L4 is therefore

=$J$4+$I$4*B4+$H$4*C4+$G$4*D4. - To visually compare the data, plot the actuals in Column E and the prediction in Column L on a chart. The chart in rows 12:22 shows that the prediction is tracking fairly well with the actual. There was a cold, rainy weekday near the beginning where the model predicted –10 sales versus an actual of 25.

- For another interesting test, calculate the residual or error for each day. The data in Column M is the difference of Column L minus Column E. Plot this data. You should see many small positive and negative values. The values should swing from positive to negative frequently. The amount of scatter should not vary over time. You should not see many clusters of points that are either positive or negative. The chart in rows 24:34 shows that there are many positive residuals early in the summer, and there are fewer later in the summer. This might mean that the model is less successful at lower June temperatures than at higher August temperatures. Perhaps only real snow cone fans buy the product at temperatures of 60 to 80. Above 80 degrees, more people might buy the product.

Troubleshooting LINEST

Remember that LINEST returns an array of values. In addition, you need to select a large enough range before entering the function, and you need to use Ctrl+Shift+Enter to enter the formula.

If you forget to use Ctrl+Shift+Enter, Excel returns just the top-left cell from the result set. In the dataset in Figure 26.15, this would just be the slope for the final independent variable (–102.236). If you enter LINEST and receive just one value, you should follow these steps:

- Select a range starting with the

LINESTformula in the upper-left corner. The range should be five rows tall. It should be at least two columns wide for models with oneknown_xcolumn. Add additional columns for additionalknown_xseries. - Press the F2 key to edit the current

LINESTformula. - Hold down Ctrl+Shift+Enter to reenter the formula as an array.

Alternatively, you can use the INDEX function to pluck one particular value out of the LINEST function. For example, if you wanted to retrieve the F statistic from Row 4, Column 1, you could use =INDEX(LINEST(E4:E95,B4:D95,TRUE,TRUE),4,1).

In the simpler situation when you have only one independent x variable, you can obtain the slope and y-intercept values directly by using the following formula for slope:

INDEX(LINEST(known_y's,known_x's),1)

You use the following formula for the y-intercept:

INDEX(LINEST(known_y's,known_x's),2)

Using FORECAST to Calculate Prediction for Any One Data Point

When you understand straight-line regression, you can use the FORECAST function to return a prediction for any point in the future.

Syntax: =FORECAST(x,known_y's,known_x's)

The FORECAST function calculates, or predicts, a future value by using existing values. The predicted value is a y value for a given x value. The known values are existing x values and y values, and the new value is predicted by using linear regression. You can use this function to predict future sales, inventory requirements, or consumer trends.

The FORECAST function takes the following arguments:

x—This is the data point for which you want to predict a value. Ifxis nonnumeric,FORECASTreturns a#VALUE!error.known_y's—This is the dependent array or range of data.known_x's—This is the independent array or range of data.

If known_y's and known_x's are empty or contain a different number of data points, FORECAST returns a #N/A error. If the variance of known_x's equals 0, then FORECAST returns a #DIV/0! error.

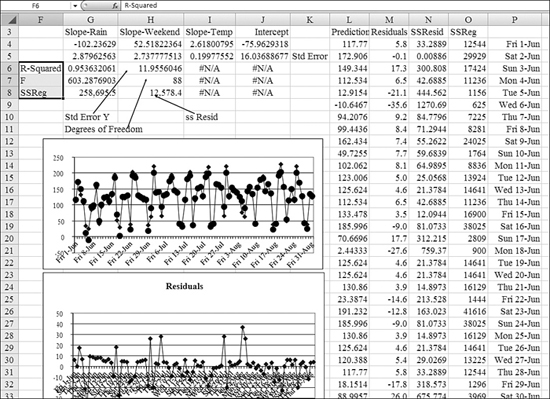

Figure 26.17 shows actual sales data for the past decade. Years are in Column A, and sales are in Column C. The sales data in C2:C12 is the range of known_y's. The years in A2:A12 is the range of known_x's.

Figure 26.17. You use the FORECAST function to find the data point for one future time period.

To predict sales for future periods, you follow these steps:

- Enter future years in A13:A2011.

- In Column B, enter

ActualorForecastfor each row so that the person reading the table understands that the new values are a forecast. - To predict sales for 2007, enter this formula in Cell C13:

=FORECAST(A13,$C$2:$C$12,$A$2:$A$12). - Copy the formula from Cell C13 down to C14:C17.

Note

Note that FORECAST works only for straight-line regression. It also does not offer the ability to force the intercept to be 0. If you need this ability, you have to use LINEST and then build a prediction formula as in step 14 of the previous section or the TREND function as discussed in the next section.

Using TREND to Calculate Many Future Data Points at Once

The TREND function is another array function. This means that it can return many values from a single formula. If you think about the previous use of FORECAST in Figure 26.17, you realize that Excel really had to perform the linear regression multiple times—once for each of the cells in C13:C17. It would be better if you could perform the regression once and have Excel calculate all the values from that regression. The TREND function helps you do this.

Syntax: =TREND(known_y's,known_x's,new_x's,const)

The TREND function returns values along a linear trend. It fits a straight line (using the least-squares method) to the arrays known_y's and known_x's. It returns the y values along that line for the array of new_x's that you specify.

The TREND function takes the following arguments:

known_y's—This is the set of y values you already know in the relationship y = mx + b. If the arrayknown_y'sis in a single column, each column ofknown_x'sis interpreted as a separate variable. If the arrayknown_y'sis in a single row, each row ofknown_x'sis interpreted as a separate variable.known_x's—This is an optional set of x values that you may already know in the relationship y = mx + b. The arrayknown_x'scan include one or more sets of variables. If only one variable is used,known_y'sandknown_x'scan be ranges of any shape, as long as they have equal dimensions. If more than one variable is used,known_y'smust be a vector (that is, a range with a height of one row or a width of one column). Ifknown_x'sis omitted, it is assumed to be the array{1,2,3,...}that is the same size asknown_y's.new_x's—These are new x values for which you wantTRENDto return corresponding y values.new_x'smust include a column (or row) for each independent variable, just asknown_x'sdoes. So, ifknown_y'sis in a single column,known_x'sandnew_x'smust have the same number of columns. Ifknown_y'sis in a single row,known_x'sandnew_x'smust have the same number of rows. If you omitnew_x's, it is assumed to be the same asknown_x's. If you omit bothknown_x'sandnew_x's, they are assumed to be the array{1,2,3,...}that is the same size asknown_y's.const—This is a logical value that specifies whether to force the constant b to equal0. IfconstisTRUEor omitted, b is calculated normally. IfconstisFALSE, b is set equal to0, and the m values are adjusted so that y = mx.

Formulas that return arrays must be entered as array formulas. This means that after entering the formula, you need to hold down Ctrl+Shift while pressing Enter.

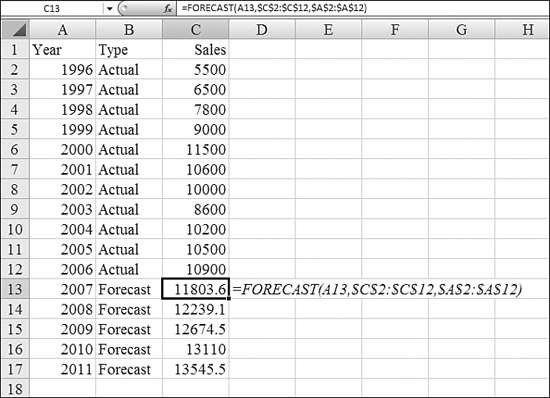

Say that you are responsible for forecasting the material needs for a company that supplies roofing material. You have historical trends of usage by year. You’ve included past hurricane data because those events caused extraordinary demand. Your job is to predict how much roofing material you will sell, assuming that there are no hurricanes, but how much you might want to have lined up in case there are one, two, or three hurricanes. Here’s what you do:

- As in the worksheet shown in Figure 26.18, enter the actual data in A4:C17. Make the sales in Column C the

known_y's.Figure 26.18. The

TRENDfunction is an array formula that can do one regression and return many future data points.

- Make the years and hurricane data in Columns A and B the

known_x's. - Enter a new table in A22:B29. You want to find the forecasted requirements for 2007 and 2008 for the possibility that there are zero, one, two, or three hurricanes. The year and hurricane columns must be in the same format as the

known_x'sin step 2. - Keep in mind that because the

TRENDfunction is an array function, it can return several answers from one formula. Select the range C22:C29. With that range selected, start to type the formula=TREND(. - Enter C5:C19 for

known_y's, which are past sales. Enter A5:A19 forknown_x's. The new x values are the data in A22:B29. - Ensure that your formula is now

=TREND(C5:C19,A5:B19,A22:B29). To finish the formula, hold down Ctrl+Shift while pressing Enter.

The result is shown in C22:C29. The TREND function predicts that you will need a base level of 130 thousand in 2007 with no hurricanes. With two hurricanes in 2007, demand would rise to 182 thousand.

Using LOGEST to Perform Exponential Regression

Some patterns in business follow a linear regression. However, other items are not linear at all. If you are a scientist monitoring the growth of bacteria in a Petri jar, you will see exponential growth in the generations.

If you try to fit an exponential growth to a straight line, you have a large error. If the r-squared from linear regression is too low, you can try using exponential regression to see if the pattern of data matches exponential regression better. For exponential regression, you use the LOGEST function, which is similar to the LINEST function.

Syntax: =LOGEST(known_y's,known_x's,const,stats)

In regression analysis, the LOGEST function calculates an exponential curve that fits the data and returns an array of values that describes the curve. Because this function returns an array of values, it must be entered as an array formula. The equation for the curve is y = b*m^x or y = (b*(m1^x1)*(m2^x2)*_) (if there are multiple x values), where the dependent y value is a function of the independent x values. The m values are bases that correspond to each exponent x value, and b is a constant value.

The LOGEST function takes the following arguments:

known_y's—This is the set of y values you already know in the relationship y = b × m^x. If the arrayknown_y'sis in a single column, each column ofknown_x'sis interpreted as a separate variable. If the arrayknown_y'sis in a single row, each row ofknown_x'sis interpreted as a separate variable.known_x's—This is an optional set of x values you may already know in the relationship y = b × m^x. The arrayknown_x'scan include one or more sets of variables. If only one variable is used,known_y'sandknown_x'scan be ranges of any shape, as long as they have equal dimensions. If more than one variable is used,known_y'smust be a range of cells with a height of one row or a width of one column (which is also known as a vector). Ifknown_x'sis omitted, it is assumed to be the array{1,2,3,...}that is the same size asknown_y's.const—This is a logical value that specifies whether to force the constant b to equal1. IfconstisTRUEor omitted, b is calculated normally. IfconstisFALSE, b is set equal to1, and the m values are fitted to y = m^x.stats—This is a logical value that specifies whether to return additional regression statistics. IfstatsisTRUE,LOGESTreturns the additional regression statistics (refer to Figure 26.16), so the returned array is{mn,mn-1,...,m1,b;sen,sen-1,...,se1,seb;r2,sey; F,df;ssreg,ssresid}. IfstatsisFALSEor omitted,LOGESTreturns only the m coefficients and the constant b.

The more a plot of data resembles an exponential curve, the better the calculated line fits the data. Like LINEST, LOGEST returns an array of values that describes a relationship among the values, but LINEST fits a straight line to the data; LOGEST fits an exponential curve.

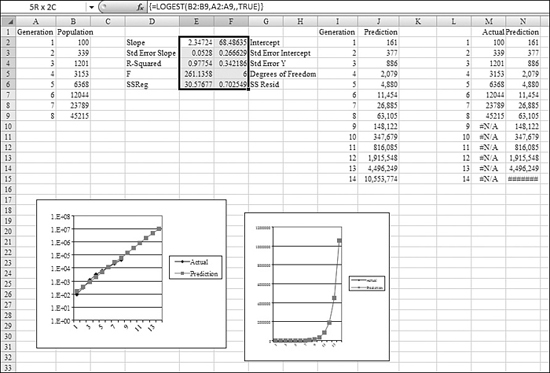

Figure 26.19 shows an estimated population in Column B and the generation in Column A. To perform a exponential regression, you follow these steps:

- Because there is one independent variable, the results from the regression occupy two columns, so find a blank range of the spreadsheet and select a range that is two columns wide by five rows tall, such as E2:F6.

- Enter the beginning of the formula:

=LOGEST(. Enter theknown_y'sas B2:B9 and theknown_x'sas A2:A9. Leave theconstvalue blank. SpecifyTRUEforstatistics. The formula should be=LOGEST(B2:B9,A2:A9,,TRUE). - Do not press Enter for the formula. Instead, hold down Ctrl+Shift while pressing Enter to tell Excel to interpret the result as an array formula and to return a table of values from

LOGEST. - Add some labels to help interpret the statistics. The labels shown in Column D and G are examples.

- To use the results of the regression in a prediction calculation, enter a different formula than with

LINEST. The formula is Intercept × Slope^X. In Figure 26.19, to predict population values for a given generation in Cell I2, use=$F$2*$E$2^I2. Alternatively, you can use theGROWTHfunction, discussed in the next section.

Figure 26.19. When data is growing at an exponential rate, you use LOGEST to perform a regression analysis.

Usign GROWTH to Predict Many Data Points from an Exponential Regression

As the TREND function is able to extrapolate points from a linear regression, the GROWTH function is able to extrapolate points from an exponential regression.

Syntax: =GROWTH(known_y's,known_x's,new_x's,const)

The GROWTH function calculates predicted exponential growth by using existing data. GROWTH returns the y values for a series of new x values that you specify by using existing x values and y values. You can also use the GROWTH worksheet function to fit an exponential curve to existing x values and y values. This function takes the following arguments:

known_y's—This is the set of y values you already know in the relationship y = b × m^x. If the arrayknown_y'sis in a single column, each column ofknown_x'sis interpreted as a separate variable. If the arrayknown_y'sis in a single row, each row ofknown_x'sis interpreted as a separate variable. If any of the numbers inknown_y'sis0or negative,GROWTHreturns a#NUM!error.known_x's—This is an optional set of x values that you may already know in the relationship y = b × m^x. The arrayknown_x'scan include one or more sets of variables. If only one variable is used,known_y'sandknown_x'scan be ranges of any shape, as long as they have equal dimensions. If more than one variable is used,known_y'smust be a vector (that is, a range with a height of one row or a width of one column). Ifknown_x'sis omitted, it is assumed to be the array{1,2,3,...}that is the same size asknown_y's.new_x's—These are new x values for which you wantGROWTHto return corresponding y values.new_x'smust include a column (or row) for each independent variable, just asknown_x'sdoes. So, ifknown_y'sis in a single column,known_x'sandnew_x'smust have the same number of columns. Ifknown_y'sis in a single row,known_x'sandnew_x'smust have the same number of rows. Ifnew_x'sis omitted, it is assumed to be the same asknown_x's. If bothknown_x'sandnew_x'sare omitted, they are assumed to be the array{1,2,3,...}that is the same size asknown_y's.const—This is a logical value that specifies whether to force the constant b to equal1. IfconstisTRUEor omitted, b is calculated normally. IfconstisFALSE, b is set equal to1, and the m values are adjusted so that y = m^x.

When you have formulas that return arrays, you must enter them as array formulas after selecting the correct number of cells. To specify an array formula, you hold down Ctrl+Shift while pressing Enter.

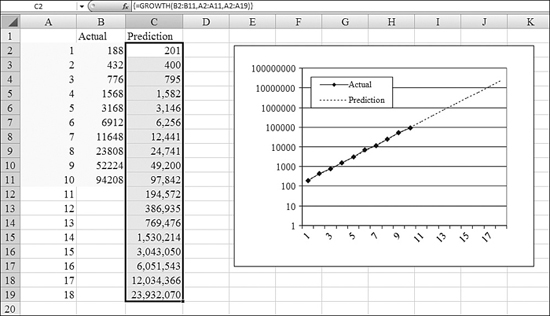

In Figure 26.20, the original data is the population for the first 10 generations in A2:B11.

Figure 26.20. GROWTH performs an exponential regression and extrapolates the results in one step.

It would be interesting to run an exponential regression and see the prediction for future generations but also for the known generations as well. This would allow you to see how well the prediction tracks with current values. To do this, you follow these steps:

- Add new generation numbers in A12:A18. The

GROWTHfunction will use these numbers and return an array of values. - Select the entire range C2:C19 for the results before entering the formula.

- Put the

known_y'sin B2:B11. Theknown_x'sare in A2:A11. Put thenew_x'sin A2:A19. The formula is=GROWTH(B2:B11,A2:A11,A2:A19). - After typing the formula, hold down Ctrl+Shift while pressing Enter. This should cause the formula to return values in each cell in C2:C19.

- To visualize the original data and the prediction, plot A1:C19 on a line chart. Numbers at the end of the progression (24 million) make the scale of the chart so large that you cannot see the detail of the first 12 generations.

- Right-click the numbers along the y-axis and choose Format Axis. On the Scale tab, choose Logarithmic Scale. The resulting chart allows you to examine both the smaller and larger numbers in the chart.

Using PEARSON to Determine Whether a Linear Relationship Exists

Remember that Excel blindly fits a regression line to any dataset. The fact that Excel returns a regression line does not mean that you should use it to make any predictions. The initial question to ask yourself is Does a linear relationship exist in this data?

The Pearson product–moment correlation coefficient, named after Karl Pearson, returns a value from –1.0 to +1.0. The calculation could make your head spin, but the important thing to know is that a PEARSON value closer to 1 or –1 means that a linear relationship exists. A value of 0 indicates no correlation between the independent and dependent variables.

Note

I am somewhat jealous that Microsoft has named an obscure function after fellow Excel consultant Chip Pearson. I am lobbying Microsoft for the inclusion of a JELEN function, possibly used to measure the degree of laid-backness caused by the gel in your shoe insoles. Seriously, Chip Pearson’s website is one of the best established sources of articles on the Web about Excel. To peruse the articles, visit www.cpearson.com.

Syntax: =PEARSON(array1,array2)

The PEARSON function returns the Pearson product–moment correlation coefficient, r, a dimensionless index that ranges from –1.0 to 1.0, inclusive, and reflects the extent of a linear relationship between two datasets.

The PEARSON function takes the following arguments:

array1—This is a set of independent values.array2—This is a set of dependent values.

The arguments must be either numbers or names, array constants, or references that contain numbers. If an array or a reference argument contains text, logical values, or empty cells, those values are ignored; however, cells that contain the value 0 are included. If array1 and array2 are empty or have a different number of data points, PEARSON returns a #N/A error.

The result of PEARSON is also sometimes known as r. Multiplying PEARSON by itself leads to the more famous r-squared test.

Using RSQ to Determine the Strength of a Linear Relationship

r-squared is a popular measure of how well a regression line explains the variability in the y values. It is popular because the values range from 0 to 1. Numbers close to 1 mean that the regression line does a great job of predicting the values. Numbers close to 0 mean that the regression result can’t predict the values at all.

r-squared is the statistic in the third row, first column of a LINEST function. It is also the square of the PEARSON function. You could use =INDEX(LINEST(),3,1) or =PEARSON()^2. But instead, Excel provides the easy-to-remember RSQ function.

Syntax: =RSQ(known_y's,known_x's)

The RSQ function returns the square of the Pearson product–moment correlation coefficient through data points in known_y's and known_x's. (For more information, see the section on the PEARSON function, earlier in this chapter.) The r-squared value can be interpreted as the proportion of the variance in y that is attributable to the variance in x.

The RSQ function takes the following arguments:

known_y's—This is an array or a range of data points.known_x's—This is an array or a range of data points.

The arguments must be either numbers or names, arrays, or references that contain numbers. If an array or a reference argument contains text, logical values, or empty cells, those values are ignored; however, cells that contain the value 0 are included. If known_y's and known_x's are empty or have a different number of data points, RSQ returns a #N/A error.

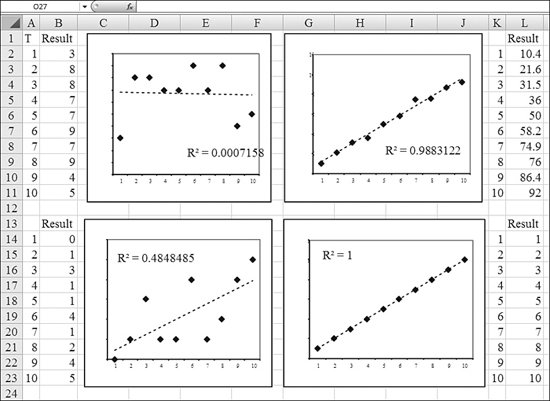

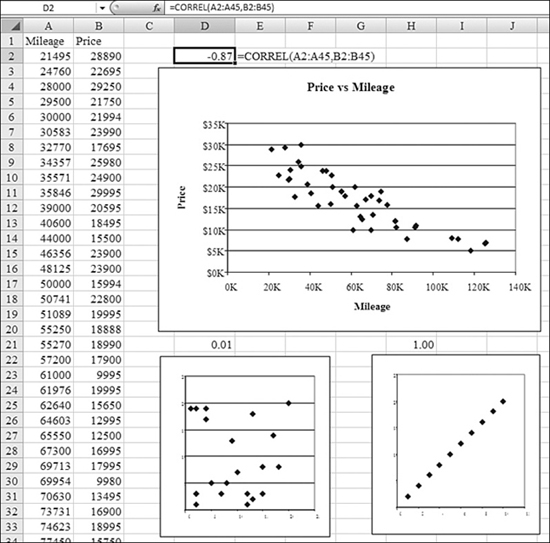

Figure 26.21 shows four datasets and their associated r-squared values:

Figure 26.21. As r-squared approaches 1.0, the predictive ability of the regression line improves.

- The chart in the top-left corner has an r-squared near 0. There is little predictive ability in this regression line. In fact, the regression line is practically a horizontal line drawn through the mean of the data points.

- The chart in the lower-left corner has an r-squared of 0.48. There is a lot of variability in the dots, but they do seem to trend up. There are huge relative errors on certain data points (for example, the value of y = 1 when x = 7).

- The chart in the upper-right corner shows a nearly perfect correlation. The r-squared is appropriately high, at 0.988. This means that most of the variability in y is explained by x. There are some tiny minor variations above or below the line, but the regression is doing a great job.

- The final chart, in the lower right, illustrates a perfect correlation and an r-squared of 1.0. Every occurrence of y falls exactly on the regression line.

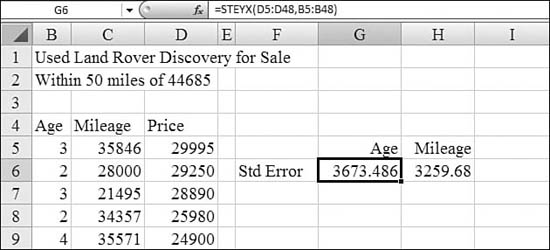

Using STEYX to Calculate Standard Regression Error

Standard error is a measure of the quality of a regression line. In rough terms, the standard error is the size of an error that you might encounter for any particular point on the line. Smaller errors are better, and larger errors are worse. Standard error can also be used to calculate a confidence interval for any point.

Syntax: =STEYX(known_y's,known_x's)

The STEYX function returns the standard error of the predicted y value for each x in the regression. The standard error is a measure of the amount of error in the prediction of y for an individual x.

The STEYX function takes the following arguments:

known_y's—This is an array or a range of dependent data points.known_x's—This is an array or a range of independent data points.

The arguments must be either numbers or names, arrays, or references that contain numbers. If an array or a reference argument contains text, logical values, or empty cells, those values are ignored; however, cells that contain the value 0 are included. If known_y's and known_x's are empty or have a different number of data points, STEYX returns a #N/A error.

To calculate standard error, you square all the residuals and add them together. Then you divide by the number of points, excluding the starting and ending points. Finally, you take the square root of that result to calculate standard error.

In general, a lower standard error is better than a higher one. A standard error of 2,000 when you are trying to predict the price of a $30,000 car isn’t too bad. A standard error of 2,000 when you are trying to predict the price of a $3 jar of pickles is horrible. You need to compare the standard error to the size of the value you are predicting.

In Figure 26.22, two regressions attempt to predict the price of a car based on either mileage or age. The standard error for the mileage method is a little less than the standard error for the age method.

Figure 26.22. Standard error is another measure of the quality of a regression line.

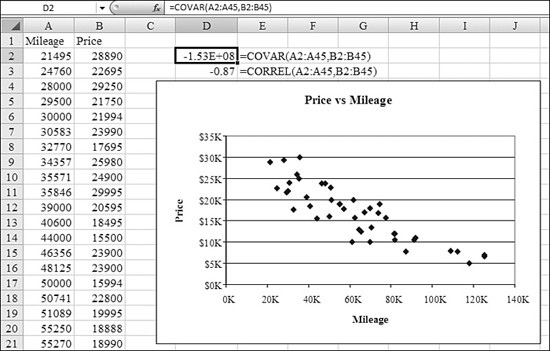

Using COVAR to Determine Whether Two Variables Vary Together

Covariance is a measure of how greatly two variables vary together. If the value is 0, the variables do not appear to be related. For positive values, covariance indicates that as x increases, y also increases. For negative values, covariance indicates that as x increases, y decreases.

Syntax: =COVAR(array1,array2)

The COVAR function returns covariance, the average of the products of deviations for each data point pair. You use covariance to determine the relationship between two datasets. For example, you can examine whether greater income accompanies greater levels of education.

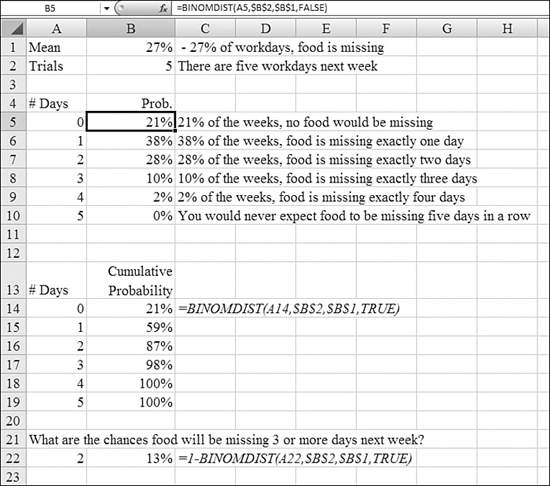

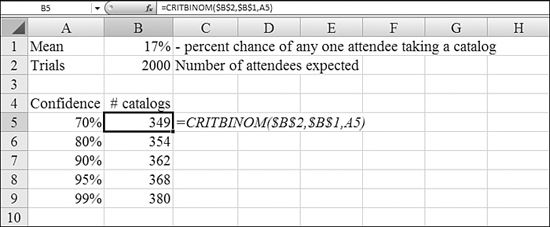

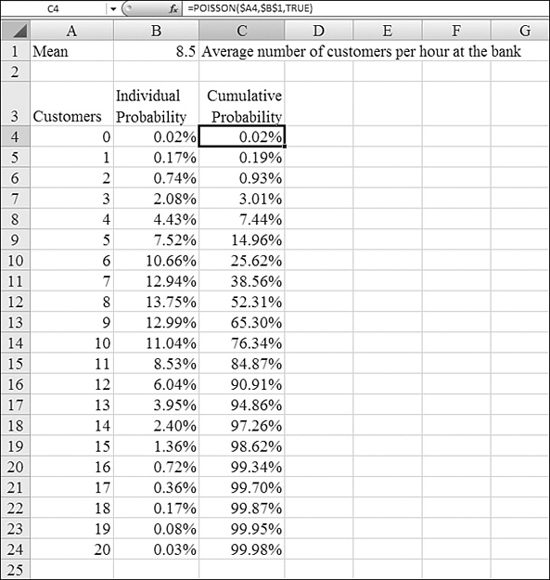

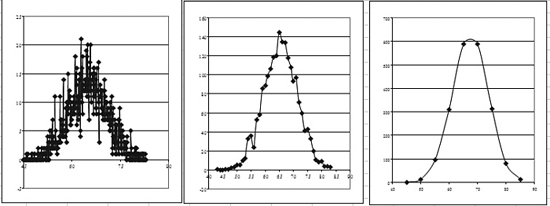

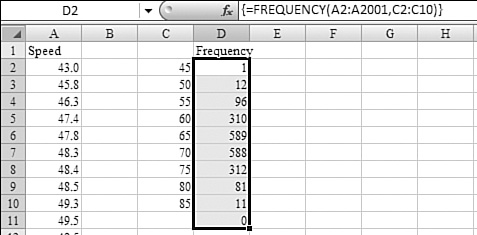

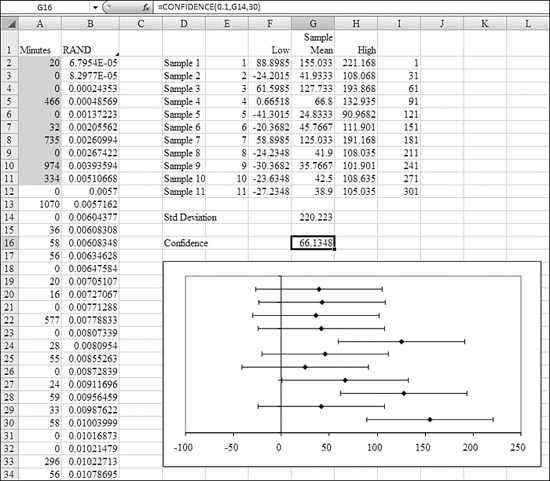

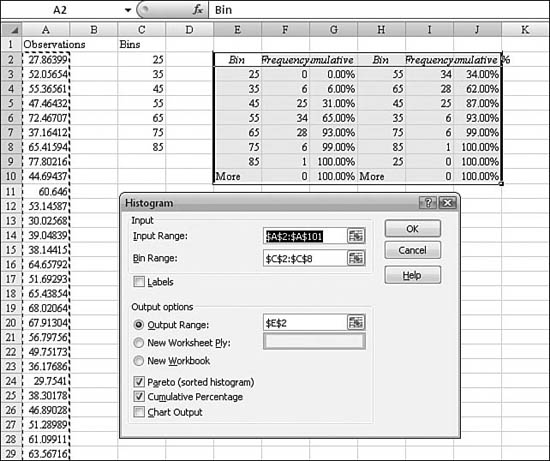

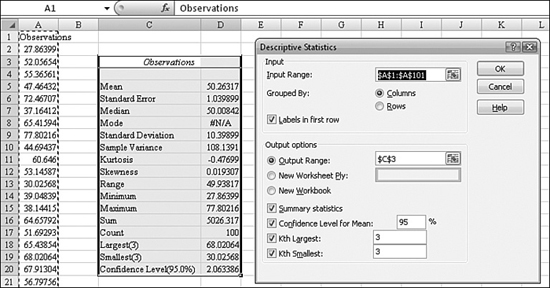

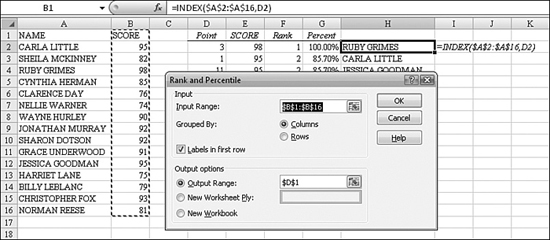

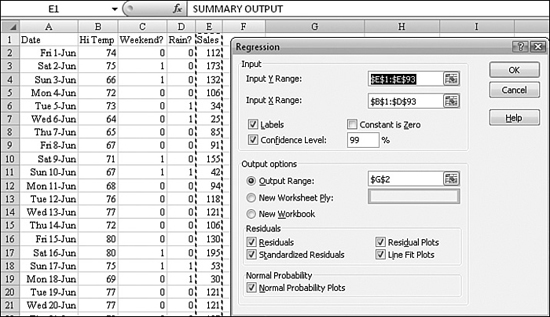

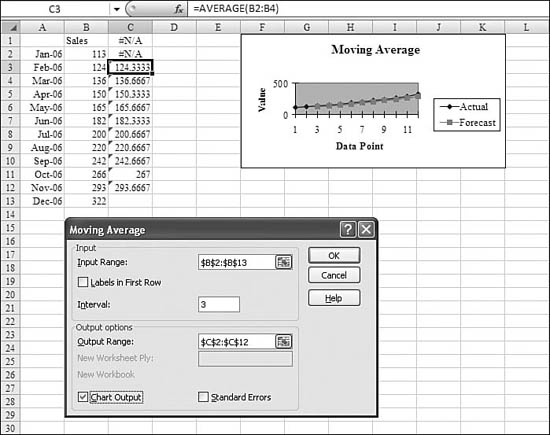

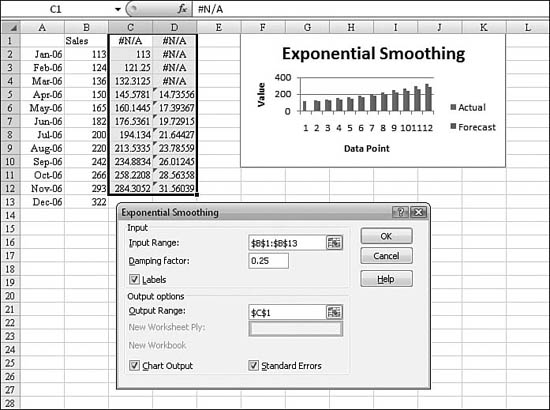

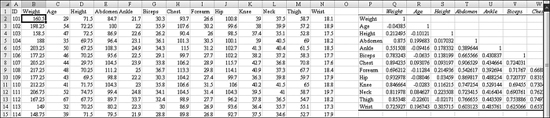

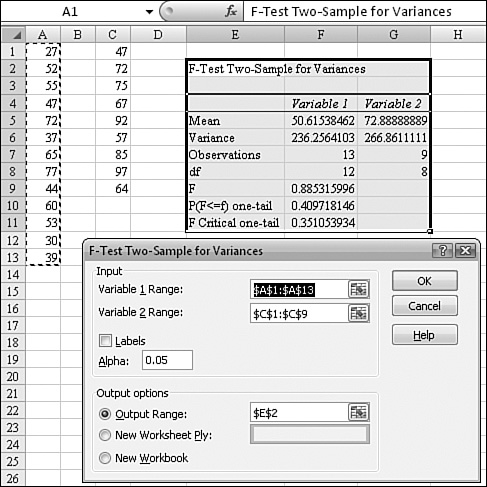

The COVAR function takes the following arguments: