Chapter 31. External DSL Miscellany

At the point in time that I’m writing this chapter, I’m very conscious about how long I’ve spent on this book. As with writing software, there is a point at which you have to cut scope in order to ship your software, and the same is true of book writing—although the bounds of the decision are somewhat different.

This tradeoff is particularly apparent to me in writing about external DSLs. There is a host of topics that are worth further investigation and writing. These are all interesting topics, and probably useful to a reader of this book. But each topic takes time to research and thus delays the book appearing at all, so I felt I needed to leave them unexplored. Despite this, however, I do have some incomplete but hopefully useful thoughts that I felt I could collect here. (Miscellany is, after all, just a fancy sounding name for hodgepodge.)

Remember that my thoughts here are more preliminary than much of the other material in this book. By definition, these are all topics that I haven’t done enough work on to merit a proper treatment.

31.1 Syntactic Indentation

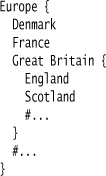

In many languages, there is a strong hierarchic structure of elements. This structure is often encoded through some kind of nested block. So we might describe Europe’s structure using a syntax like this:

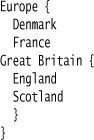

That example shows a common way in which programmers of all stripes indicate the hierarchic structure of their programs. The syntactic information about the structure is contained between delimiters, in this case the curly brackets. However, when you read the structure, you pay more attention to the formatting. The primary form of structure that we read comes from the indentation, not from the delimiters. As a true-blooded Englishman, I might prefer to format the above list like:

Here the indentation is misleading, as it does not match the actual structure shown by the curly brackets. (Although it is informative, as it shows a common British view of the world.)

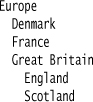

Since we mostly read structure through indentation, there’s an argument that we should use the indentation to actually show the structure. In this case, I can write my European structure like this:

In this way, the indentation defines the structure as well as communicates it to the eye. This approach is most famously used by the Python programming language, it’s also used by YAML—a language for describing data structures.

In terms of usability, the great advantage of syntactic indentation is that the definition and the eye are always in sync—you can’t mislead yourself by altering the formatting without changing the real structure. (Text editors that do automatic formatting remove much of that advantage, but DSLs are less likely to have that kind of support.)

If you use syntactic indentation, be very careful about the interplay between tabs and spaces. Since tab widths vary depending on how you set the editor, mixing tabs and spaces in a file can cause no end of confusion. My recommendation is to follow the approach of YAML and forbid tabs from any language that uses syntactic indentation. Any inconvenience you’ll suffer from not allowing tabs will be much less than the confusion you avoid.

Syntactic indentation is very convenient to use, but presents some real difficulties in parsing. I spent some time looking at Python and YAML parsers and saw plenty of complexity due to the syntactic indentation.

The parsers I looked at handled syntactic indentation in the lexer, since the lexer is the part of a Syntax-Directed Translation system that deals with characters. (Delimiter-Directed Translation is probably not a good companion for syntactic indentation, since syntactic indentation is all about the kind of counting of the block structure that Delimiter-Directed Translation has problems with.)

A common, and I think effective, tactic is to get the lexer to output special “indent” and “dedent” tokens to the parser when it detects an indentation change. Using these imaginary tokens allows you to write the parser using normal techniques for handling blocks—you just use “indent” and “dedent” instead of { and }. Doing this in a conventional lexer, however, is somewhere between hard and impossible. Detecting indentation changes isn’t something that lexers are designed to do, nor are they usually designed to emit imaginary tokens that don’t correspond to particular characters in the input text. As a result, you’ll probably end up having to write a custom lexer. (Although ANTLR can do this, take a look at Parr’s advice for handling Python [parr-antlr].)

Another plausible approach—one that I’d certainly be inclined to try—is to preprocess the input text before it hits the lexer. This preprocessing would only focus on the task of recognizing indentation changes and would insert special textual markers into the text when it finds them. These markers can then be recognized by the lexer in the usual way. You have to pick markers that aren’t going to clash with anything in the language. You also have to cope with how this may interfere with diagnostics that tell you the line and column numbers. But this approach will greatly simplify the lexing of syntactic indentation.

31.2 Modular Grammars

DSLs are the better the more limited they are. Limited expressiveness keeps them easy to understand, use, and process. One of the biggest dangers with a DSL is the desire to add expressiveness—leading to the trap of the language inadvertently becoming general-purpose.

In order to avoid that trap, it’s useful to be able to combine independent DSLs. Doing this requires independently parsing the different pieces. If you’re using Syntax-Directed Translation, this means using separate grammars for different DSLs but being able to weave these grammars into a single overall parser. You want to be able to reference a different grammar from your grammar, so that if that referenced grammar changes you don’t need to change your own grammar. Modular grammars would allow you to use reusable grammars in the same way that we currently use reusable libraries.

Modular grammars, however useful for DSL work, are not a well-understood area in the language world. There are some people exploring this topic, but nothing that’s really mature as I write this.

Most Parser Generators use a separate lexer, which further complicates using modular grammars since a different grammar will usually need a different lexer than the parent grammar. You can work around this by using Alternative Tokenization, but that places constraints on how the child grammar can fit in with the parent. There’s currently a growing feeling that scannerless parsers—those which don’t separate lexical and syntactic analysis—may be more applicable to modular grammars.

For the moment, the simplest way of dealing with separate languages is to treat them as Foreign Code, pulling the text of the child language into a buffer and then parsing that buffer separately.