CHAPTER 16

Appropriate Incident Response Procedures

In this chapter you will learn:

• The major steps of the incident response cycle

• How to prepare for security incidents

• Incident detection and analysis techniques

• How to contain, or reduce the spread of, an incident

• How to eradicate and recover from an incident

• What to do post incident

Predicting rain doesn’t count. Building arks does.

—Warren Buffett

Although we commonly use the terms interchangeably, there are subtle differences between an event, which is any occurrence that can be observed, verified, and documented, and an incident, which is one or more related negative events that compromise an organization’s security posture. Incident response is the process of negating the effects of an incident on an information system.

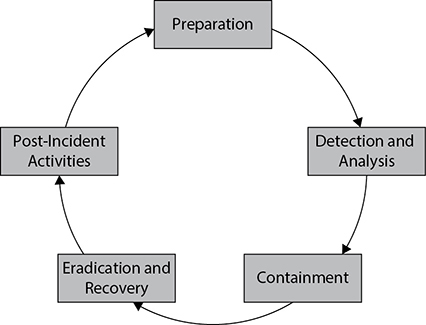

In Chapter 1, we covered the benefits of using the intelligence cycle in enabling us to understand and scale key intelligence tasks by breaking them down into distinct phases. As with generating and sharing intelligence, incident response benefits from using a framework to help us more easily understand an adversary’s activities and respond to them using repeatable, scalable methods. The incident response cycle comprises the major steps required to prepare for an intrusion, identify the activities associated with it, develop and deploy the analytical techniques to understand it, and execute the plans to return the system to an operational state. In this chapter we’ll cover these phases, which are shown in Figure 16-1, and we’ll discuss techniques associated with each step and how these defensive efforts can make our responses more effective.

Figure 16-1 The incident response cycle

There are many incident response models, but all share some basic characteristics. They all require us to take some preparatory actions before anything bad happens, to identify and analyze an event to determine the appropriate counteractions, to correct the problem(s), and finally to keep the incident from happening again. Clearly, efforts to prevent future occurrences tie back to our preparatory actions, to create a cycle.

Preparation

As highlighted several times throughout the book, preparing for security incidents requires a sound methodology that aims to reduce uncertainty as much as possible. By identifying key assets and response priorities and prescribing technical and operational best practices, we can protect critical business functions from compromise and sensitive data from exposure. Though we do as much as we can to be proactive in stopping threats, we must face the reality that at some point, something will get through, so we must be prepared to recognize and remediate it as quickly and completely as possible. In thinking about how a security team might approach preparing for the unknown, we need to keep a few things in mind. Because preparation will likely affect a wide range of teams outside of the security team itself, it’s important that we get buy-in from all levels of the organization as soon as possible.

Preparation will involve many technical and nontechnical steps. For the purpose of the CySA+ exam, we’ll cover three elements of preparation: training, testing, and documentation. Although many of the technical steps associated with each element will not involve everyone in the organization, they will require the cooperation of teams directly impacted by changes to the network and its operations. For example, installing new security devices, deploying detection signatures, and ensuring that patches are applied may not need direct involvement from end users, but the organization’s network architects, systems administrators, and IT support teams must be aware so that they can make the appropriate modifications on their end to enforce and validate these changes. For all users in the organization, the processes and documentation techniques used must be as user-friendly as possible, particularly for a nontechnical audience.

Training

While laying the groundwork for effective response, you’ll need to ensure that the first few steps performed by everyone involved are the right ones. After all, the more quickly and accurately a team can identify an issue, the better off you all will be as you work through the entire incident response (IR) process. Seeing the signs will become easier as you and your team gain experience, but providing good training on the fundamentals can save the organization time and money in the long run. Training can include technical training for the IR team, college courses, or various professional education classes. Several universities well known for their computer science and security programs offer distance learning, which makes it easier for students to access training opportunities.

In many cases, the nontechnical staff make up a majority of the organization, and invariably these users will be the ones who are most exposed to the signs of a potential security incident. Devising a defense against continuous and persistent threats such as phishing is imperative. Key to a collective defense is training users in how to identify such threats and how to handle situations in which they may have been tricked into providing sensitive information. To be clear, training nontechnical staff members who are targets of these types of malicious activities should include training in how to communicate what they observe. This will significantly raise the organization’s level of preparation.

Testing

In IR, as with so many tasks in life, practice makes perfect. And getting to a state of proficiency takes time and discipline. Practice sessions are useful in gauging the team’s ability to respond appropriately, identifying areas for improvement, and increasing team members’ confidence in themselves and in the IR process. Without practice that simulates live conditions, incident responders may not be able to articulate their observations in a realistic manner. Through realistic testing, your team members can validate assumptions and dispel unhelpful preconceptions about the incidents they may face.

There are many parallels between the development and execution of IR and military operations plans. For the last few decades, the US military has participated in a large-scale multinational series of exercises called Cobra Gold. Held annually since 1982, Cobra Gold began as a way for the United States and Thailand to strengthen ties between their militaries. While the focus has changed from year to year, the goal of having a venue for the participating nations to conduct military, humanitarian, and disaster relief exercises has remained. One of the authors had the pleasure of preparing an expeditionary communications team for multiple deployments to Thailand in support of Cobra Gold. As part of the preparation, these military units conducted various types of exercises to evaluate their readiness, or ability to do their jobs. Year after year, the units were evaluated using four major types of exercises during the event. The four, listed next, can also be used to evaluate your organization’s IR plan, procedures, and capabilities. As with military war gaming, the goals of IR exercises are to test strategies, vet procedures, and clarify the effects of deploying countermeasures in a manner that doesn’t put any resources directly in front of the adversary.

• Walkthrough Walkthroughs offer the most basic kind of testing for team members. They often accompany training because they can be performed in a classroom setting, with little or no additional resources required. Walkthroughs are designed to familiarize participants with response steps, crisis communications plans, and their roles and responsibilities as defined in the plans.

• Tabletop exercises Tabletop exercises are live sessions in which members of the security team come together to discuss their roles and responses to hypothetical situations. What separates tabletop exercises from simple walkthroughs is that participants are often guided through a scenario, complete with changes in the environment and simulated actor behaviors. The goal is to highlight, through discussion, how various teams execute their parts of the plan, what improvements may be needed, and what it’s like to operate in uncertainty.

• Functional exercises Functional exercises enable teams to test their understanding of the IR plan by identifying and executing specific technical tasks related to the response effort. In this kind of exercise, a team may be presented with a scenario and be expected to identify the correct tool to use, how to use the tool, and how to report the results. The focus of functional exercises is often to assess an individual’s mastery of a particular technique.

• Full-scale exercises A full-scale exercise is an experience modeled as closely as possible on a real event. Often performed in real time, full-scale exercises test everything from the participants’ detailed understanding of the organization’s IR process to specific individual and team tasks. This type of exercise is useful in measuring performance against program objectives.

Keep in mind when formulating the testing plan that the tests and the testing process should be easy to understand for everyone involved. Understanding that plans are designed with little space for improvisation, the exercises should be designed so that all participants can clearly understand the roles they play and the tasks they are expected to perform. It’s also desirable for key leaders to be involved in testing by providing initial requirements and vision, participating in a tabletop event, observing a full-scale exercise, or simply providing feedback at the conclusion of the assessments. Although the results from training aren’t always immediately apparent, judging the effectiveness of a response strategy by using a well-designed testing plan can tell you if your team is on the right path, and it provides clear steps forward if adjustments are necessary.

Documentation

All good processes need to be recorded so that they can be referenced, shared, and sometimes improved upon. Documentation is arguably the most important nontechnical tool at your team’s disposal. Even for the most seasoned team, it pays to have well-documented steps at the ready. Documentation extends beyond just recording the steps of your organization’s IR process; any information about systems that may be useful to responders throughout the process, such as network configurations, system settings, and system points of contact, should also be included.

Detection and Analysis

Detecting and analyzing events is the first step in putting practice into play. Often referred to as identification, this step in the process may use a number of automated detection techniques to increase team efficiency. An automated detection and analysis process is much more scalable and reliable than manual processes because it can be used consistently to highlight behavior patterns of interest that a human analyst may miss.

Characteristics of Severity Level Classification

It’s important to have a clear reference point to know the true scope of impact during a suspected incident—simply noting that the network seems slow will not be enough to make a good determination regarding what to do next. As responders, it’s important that you lay out a clear set of criteria to determine how to classify a security event. This classification process, sometimes known as the scope of impact, is the formal determination of whether an event is enough of a deviation from normal operations to be called an incident and the degree to which services have been affected. Keep in mind that some actions you perform in the course of your duties as a systems administrator may trigger security devices and appear to be an attack. Documenting these types of legitimate anomalies will reduce the number of false positives and enable you to have more confidence in your alerting system. In the case of a legitimate attack, you must collect as much data as possible from sources throughout your network, such as log files from network devices. Gathering as much information as possible in this step will help you decide on the next steps for your incident responders.
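A written set of classification criteria can be expressed as a small decision function. The following is a minimal sketch only: the field names, the severity labels, and the threshold of three affected services are all illustrative assumptions, not criteria taken from any standard or from the exam objectives.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    description: str
    deviates_from_baseline: bool     # outside normal operations?
    documented_admin_activity: bool  # matches a known, legitimate anomaly?
    services_affected: int           # count of degraded or unavailable services


def classify(obs: Observation) -> str:
    """Apply hypothetical criteria in order, from least to most severe."""
    if obs.documented_admin_activity:
        return "false positive"
    if not obs.deviates_from_baseline:
        return "event"
    if obs.services_affected == 0:
        return "incident (low)"
    if obs.services_affected < 3:    # assumed threshold, tune per organization
        return "incident (medium)"
    return "incident (high)"


scan = Observation("scheduled vulnerability scan", True, True, 0)
outage = Observation("file servers unreachable", True, False, 4)
print(classify(scan), "|", classify(outage))
```

Writing the criteria down in an explicit order like this makes it easier to see which documented administrative activities are being filtered out before an alert is escalated.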

Once an event is confirmed to be legitimate, you should quickly communicate with your team to identify who needs to be contacted outside of your security group and key leadership. Whom you contact may be dictated by your local policy—and in some cases, laws or regulations. Opening communication channels early will also ensure that you get the appropriate support for any major changes to the organization’s resources. We covered the communications process in detail in Chapter 7.

For some organizations, the mere mention of a successful breach can be damaging, regardless of what was compromised. In 2011, for example, security company RSA was the victim of a major breach. RSA’s SecurID, a line of token-based two-factor authentication products, had roughly 40 million tokens in use at the time by organizations securing their own networks. These tokens, which were the cornerstone of RSA’s authentication service, were revealed to have been compromised after a thorough investigation of the incident, prompting the company to offer replacement tokens to its customers. Almost as damaging as the financial cost of replacing the tokens was the damage done to RSA’s reputation after the story made headlines throughout the world. How could a security company be the victim of a hack? In serious cases like this, it’s critical that only decision-makers and those playing a role in the IR be informed of the breach. This will help reduce confusion across the organization as a clear path forward is determined. In addition, you do not want attackers to be tipped off that they have been discovered. As an incident responder, you must be prepared to inform leadership of your technical assessment so that they can make informed decisions in the best interest of the organization.

Downtime

Networks exist to provide resources to those who need them, when they need them. Without a network and services that are available when they need to be, nothing can be accomplished. Every other measure of network performance, such as stability, throughput, scalability, and storage, requires the network to be up. The decision on whether to take a network completely offline to handle a breach is not a small one by any measure. Understanding that a complete shutdown of the network may not be possible, you should isolate infected systems to prevent additional damage. The priority here is to prevent additional losses and minimize impacts on the organization. This is not dissimilar to triage in a hospital emergency room: your team must work quickly to perform triage on your network to determine the extent of the damage and prevent additional harm, all while keeping the organization running.

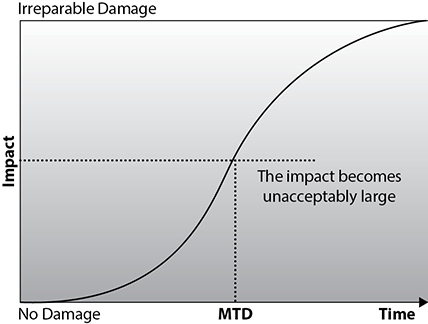

The key is to determine which of the organization’s critical systems are needed for survival and estimate the outage time that can be tolerated by the company as a result of an incident. The outage time that can be endured by an organization is referred to as the maximum tolerable downtime (MTD), which is illustrated in Figure 16-2.

Figure 16-2 Maximum tolerable downtime

Following are some sample MTD estimates for several systems arranged by criticality. These estimates will vary from organization to organization and from business unit to business unit:

• Nonessential 30 days

• Normal 7 days

• Important 72 hours

• Urgent 24 hours

• Critical Minutes to hours

Each business function and asset should be placed in one of these categories, depending on how long the organization can survive without it. These estimates will help you determine how to prioritize response team efforts to restore these assets. The shorter the MTD, the higher the priority of the function in question. Thus, for example, the systems classified as Urgent should be addressed before those classified as Normal.
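The prioritization rule above (shortest MTD first) can be sketched in a few lines. This is an illustration under stated assumptions: the asset names are hypothetical, and the one-hour value for the Critical category is an assumed stand-in for "minutes to hours."

```python
from datetime import timedelta

# Sample MTD estimates from the list above.
MTD_BY_CATEGORY = {
    "Critical": timedelta(hours=1),   # assumed stand-in for "minutes to hours"
    "Urgent": timedelta(hours=24),
    "Important": timedelta(hours=72),
    "Normal": timedelta(days=7),
    "Nonessential": timedelta(days=30),
}


def restoration_order(assets: dict[str, str]) -> list[str]:
    """Return asset names sorted so the shortest MTD comes first."""
    return sorted(assets, key=lambda name: (MTD_BY_CATEGORY[assets[name]], name))


# Hypothetical assets mapped to their assigned categories.
assets = {
    "intranet wiki": "Normal",
    "payment gateway": "Critical",
    "email": "Urgent",
    "archive": "Nonessential",
}
print(restoration_order(assets))
# ['payment gateway', 'email', 'intranet wiki', 'archive']
```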

Recovery Time

Time is money, and the faster you can restore your network to a safe operating condition, the better it is for the organization’s bottom line. Although there may be serious financial implications for every second a network asset is offline, you should not sacrifice completeness for speed. You should keep lines of communication open with organization management to determine acceptable limits to downtime. Having a sense of what the key performance indicators (KPIs) are for detection and remediation will help clear up confusion, manage expectations, and potentially enable you to demonstrate your team’s preparedness when you recover within these limits. Setting recovery time limits may also be useful in the long run for the reputation of the team and in securing additional budgets for training and tools.

The recovery time objective (RTO) is often used, particularly in the context of disaster recovery, to denote the maximum time within which a business process must be restored after an incident to avoid unacceptable consequences associated with a break in business processes. The RTO value is smaller than the MTD value, because the MTD value represents the time after which an inability to recover significant operations will mean severe and perhaps irreparable damage to the organization’s reputation or bottom line. The RTO assumes that there is a period of acceptable downtime. This means that an organization can be out of production for a certain period of time (RTO) and can still get back on its feet. But if it cannot get production up and running within the MTD window, the organization may be sinking too fast to recover properly.
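The relationship between the two thresholds can be made concrete with a short check. This is a sketch, not a standard formula: the function name and the three status labels are assumptions chosen for illustration.

```python
from datetime import timedelta


def recovery_status(downtime: timedelta, rto: timedelta, mtd: timedelta) -> str:
    """Compare elapsed downtime against the RTO and MTD thresholds."""
    if rto >= mtd:
        # The RTO is expected to be smaller than the MTD.
        raise ValueError("RTO must be smaller than MTD")
    if downtime <= rto:
        return "within RTO"
    if downtime <= mtd:
        return "RTO missed, still within MTD"
    return "MTD exceeded"


# Hypothetical process with a 4-hour RTO and a 24-hour MTD.
rto, mtd = timedelta(hours=4), timedelta(hours=24)
for hours in (2, 10, 30):
    print(hours, "hours down:", recovery_status(timedelta(hours=hours), rto, mtd))
```

The middle state is the one worth watching during a response: the objective has been missed, but the organization has not yet reached the point of severe damage.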

Data Integrity

Taking down a network isn’t always the goal of a network intrusion. For malicious actors, tampering with data may be enough to disrupt operations, and it may provide them with the outcome they were looking for. Financial transaction records, personal data, and professional correspondence are types of data that are especially susceptible to data tampering. There are cases when attacks on data are obvious, such as those involving ransomware. In these situations, malware will encrypt data files on a system so the users cannot access them without submitting payment for the decryption keys. However, it may not always be apparent that an attack on data integrity has taken place. It may be that you are able to discover the unauthorized insertion, modification, or deletion of data only after a detailed inspection. This illustrates why it’s critical to back up data and system configurations, and to keep them sufficiently segregated from the network so that they are not themselves affected by the attack. An easily deployable backup solution will allow for very rapid restoration of services. The authors will caution, however, that much like Schrödinger’s cat, the condition of any backup is unknown until a restore is attempted. In other words, having a backup alone isn’t enough; it must be verified over time to ensure that it’s free from corruption and malware.
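One simple way to check a backup for unauthorized insertion, modification, or deletion is to record a cryptographic hash of every file when the backup is made and compare against that record later. The sketch below uses SHA-256 from Python’s standard library; the function names and the manifest layout are assumptions for illustration. It detects changes made after the manifest was recorded, but it does not prove the backup was free of malware when it was taken, and it is no substitute for a test restore.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large backups do not exhaust memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(backup_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to backup_dir) to its SHA-256 hash."""
    return {
        str(p.relative_to(backup_dir)): sha256_of(p)
        for p in sorted(backup_dir.rglob("*"))
        if p.is_file()
    }


def verify(backup_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return paths that were added, changed, or removed since the manifest."""
    current = build_manifest(backup_dir)
    return sorted(
        rel
        for rel in manifest.keys() | current.keys()
        if manifest.get(rel) != current.get(rel)
    )
```

The manifest itself should be stored apart from the backup it describes; otherwise an attacker who can alter the backup can alter the record as well.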

Economic Impacts

It’s difficult to predict the second- and third-order effects of network intrusions. Even if some costs are straightforward to calculate, the complete economic impact of a network breach is difficult to quantify. A fine levied against an organization that had not adequately secured its workers’ personal information is an immediate and obvious cost, but how does one accurately calculate the future losses due to identity theft, or the damage to the reputation of the organization resulting from the lack of confidence? It’s critical to include questions like these in your discussion with stakeholders when determining courses of action for dealing with an incident.

Another consideration in calculating the economic scope of an incident is the value of the assets involved. The value placed on information is relative to the parties involved, what work was required to develop it, how much it costs to maintain, what damage would result if it were lost or destroyed, what enemies would pay for it, and what liability penalties could be endured. If an organization does not know the value of the information and the other assets it is trying to protect, it does not know how much money and time it should spend on protecting or restoring them. If the calculated value of a company’s trade secret is $x, then the total cost of protecting or restoring it should be some value less than $x.

The previous examples refer to assessing the value of information and protecting it, but this logic also applies to an organization’s facilities, systems, and resources. The value of facilities must be assessed, along with all printers, workstations, servers, peripheral devices, supplies, and employees. You do not know how much is in danger of being lost if you don’t know what you have and what it is worth in the first place.

System Process Criticality

As part of your preparation, you must determine what processes are considered essential for the business’s operation. These processes are associated with tasks that must be accomplished with a certain level of consistency for the business to remain competitive. Each business’s list of critical processes will be different, but it’s important to identify those early so that they can be the first to be brought back up during a recovery. The critical process lists aren’t restricted to technical assets only; they should include the essential staff required to get these critical systems back online and keep them operational. It’s important to educate members across the organization as to what these core processes are, how their work directly supports the goals of the processes, and how they benefit from successful operations. This is effective in getting the appropriate level of buy-in required for successfully responding to incidents and recovering from any resulting damage.

Data Correlation

Security information and event management (SIEM) systems do a fantastic job of collecting and presenting massive amounts of data from disparate sources in a single view. They assist the analyst in determining whether an event is indeed malicious and what behaviors may be connected with the event. With a smart policy for how logs are captured and sent to a SIEM, we can spend more time investigating what actually happened versus trying to figure out whether the data we needed was even recorded. SIEMs are critical when it comes to categorizing attacks. Because a primary goal of IR is to enable the organization to figure out what happened and get back to normal operations, it helps to be able to say with a fair degree of certainty that something we observe on the network is tied to some stage of an attack. If we’re able to determine with confidence that an attack is early in its attack cycle, we can prioritize actions to remove it from the network. Compare this with finding evidence of exfiltration after the fact, and you can see why speed is so critical when tying together and cross-referencing massive data sets.
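The core idea of correlation, tying together records from different sources that concern the same host at about the same time, can be illustrated with a toy example. This is not how any particular SIEM product works; the event fields (`host`, `source`, `time`) and the five-minute window are assumptions made for the sketch.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def correlate(events, window=timedelta(minutes=5)):
    """Cluster each host's events by time and keep multi-source clusters.

    Consecutive events for a host fall in the same cluster when they are
    at most `window` apart. Only clusters seen by two or more distinct
    log sources are returned.
    """
    by_host = defaultdict(list)
    for event in events:
        by_host[event["host"]].append(event)

    clusters = []
    for host_events in by_host.values():
        host_events.sort(key=lambda e: e["time"])
        current = [host_events[0]]
        for event in host_events[1:]:
            if event["time"] - current[-1]["time"] <= window:
                current.append(event)
            else:
                clusters.append(current)
                current = [event]
        clusters.append(current)

    return [c for c in clusters if len({e["source"] for e in c}) > 1]


# Hypothetical records from three log sources.
t0 = datetime(2024, 1, 1, 12, 0)
events = [
    {"host": "10.0.0.5", "source": "firewall", "time": t0},
    {"host": "10.0.0.5", "source": "edr", "time": t0 + timedelta(minutes=2)},
    {"host": "10.0.0.5", "source": "proxy", "time": t0 + timedelta(minutes=30)},
    {"host": "10.0.0.9", "source": "firewall", "time": t0},
]
for cluster in correlate(events):
    print(cluster[0]["host"], [e["source"] for e in cluster])
```

In this sample, only the firewall and endpoint records for the first host are grouped, because they are the only pair that share a host and fall within the window.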

Reverse Engineering

Reverse engineering (RE) is the detailed examination of a product to learn what it does and how it works. In the context of IR, RE relates exclusively to malware. The idea is to analyze the binary code to find, for example, the IP addresses or host/domain names it uses for command and control (C2) nodes, the techniques it employs to achieve persistence in an infected host, or the unique characteristics that could be used as a signature for the malware.

Generally speaking, there are two approaches to reverse engineering malware. The first is concerned less with what the binary is than with what the binary does. This approach, sometimes called dynamic code analysis, requires a sandbox in which to execute the malware. This sandbox creates an environment that looks like a real operating system to the malware and provides such things as access to a file system, network interface, memory, and anything else the malware asks for. Each request is carefully documented to establish a timeline of behavior that enables us to understand what it does. The main advantage of dynamic malware analysis is that it tends to be significantly faster and requires less expertise than the alternative (described next). It can be particularly helpful for code that has been heavily obfuscated by its authors. The biggest disadvantage is that it doesn’t reveal all that the malware does, but rather all that it did during its execution in the sandbox. Some malware will actually check to see if it is being run in a sandbox before doing anything interesting. Additionally, some malware doesn’t immediately do anything nefarious, waiting instead for a certain condition to be met (for example, a time bomb that activates only at a particular date and time).

The alternative to dynamic code analysis is, unsurprisingly, static code analysis. In this approach to malware RE, a highly skilled analyst will either disassemble or decompile the binary code to translate its 1s and 0s into either assembly language or whichever higher-level language it was created in. This enables a reverse engineer to see all possible functions of the malware, not just the ones that it exhibited during a limited run in a sandbox. It is then possible, for example, to see all the domains the malware would reach out to given the right conditions, as well as the various ways in which it would permanently insert itself into its host. This last insight enables the IR team to look for evidence that any of the other persistence mechanisms exist in other hosts that were not considered infected up to that point.
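A very basic first pass at static analysis, well short of disassembly, is to pull printable strings out of a binary and look for things that resemble C2 addresses. The sketch below does this with regular expressions. The minimum string length, the short list of top-level domains, and the sample bytes are all illustrative assumptions, and packed or obfuscated malware will often hide such strings entirely.

```python
import re

# Runs of six or more printable ASCII bytes.
PRINTABLE = re.compile(rb"[\x20-\x7e]{6,}")
# Dotted-quad IPv4 addresses with each octet limited to 0-255.
IPV4 = re.compile(
    r"\b(?:(?:25[0-5]|2[0-4]\d|1?\d?\d)\.){3}(?:25[0-5]|2[0-4]\d|1?\d?\d)\b"
)
# Host names ending in a small, assumed set of top-level domains.
DOMAIN = re.compile(r"\b(?:[a-z0-9-]+\.)+(?:com|net|org|info|biz)\b", re.IGNORECASE)


def candidate_indicators(blob: bytes) -> dict[str, list[str]]:
    """Extract candidate IP addresses and domain names from raw bytes."""
    ips, domains = set(), set()
    for match in PRINTABLE.finditer(blob):
        text = match.group().decode("ascii")
        ips.update(IPV4.findall(text))
        domains.update(d.lower() for d in DOMAIN.findall(text))
    return {"ips": sorted(ips), "domains": sorted(domains)}


# Fabricated sample bytes; the address is from a documentation-only range.
sample = (
    b"\x00\x01MZ\x90\x00http://update.example-cdn.net/gate.php"
    b"\x00\xff\xfe203.0.113.7:8443\x00junk"
)
print(candidate_indicators(sample))
# {'ips': ['203.0.113.7'], 'domains': ['update.example-cdn.net']}
```

Anything found this way is only a candidate; an analyst would still need to confirm how the binary actually uses each value.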

Containment

Once you know that a threat agent has compromised the security of your information system, your first order of business is to keep things from getting worse. Containment comprises a set of actions that attempts to deny the threat agent the ability or means to cause further damage. The goal is to prevent or reduce the spread of this incident while you strive to eradicate it. This is akin to confining highly contagious patients in an isolation room of a hospital until they can be cured to keep others from becoming infected. A proper containment process buys the IR team time for a proper investigation and determination of the incident’s root cause. The containment should be based on the category of the attack (that is, whether it was internal or external), the assets affected by the incident, and the criticality of those assets. Containment approaches can be proactive or reactive. Which is the best approach depends on the environment and the category of the attack. In some cases, the best action may be to disconnect the affected system from the network. However, this reactive approach could cause a denial of service or limit functionality of critical systems.

Segmentation

A well-designed security architecture will segment your information systems by some set of criteria such as function (for example, finance or HR) or sensitivity (for example, unclassified or secret). Segmentation divides a network into subnetworks (or segments) so that hosts in different segments are not able to communicate directly with each other. This can be done by either physically wiring separate networks or by logically assigning devices to separate virtual local area networks (VLANs). In either case, traffic between network segments must go through some sort of gateway device, which is oftentimes a router with the appropriate access control lists (ACLs). For example, the accounting division may have its own VLAN that prevents users in the research and development (R&D) division from directly accessing the financial data servers. If certain R&D users had legitimate needs for such access, they would have to be added to the gateway device’s ACL, which could place restrictions based on source/destination addresses, time of day, or even specific applications and data to be accessed.
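The accounting and R&D example can be modeled as an ordered rule list that a gateway checks for each packet. This sketch assumes the first-match, deny-by-default evaluation that many router ACLs use; the subnets, the single permitted R&D address, and the function name are hypothetical, and real ACLs can also match on ports, protocols, and time of day.

```python
import ipaddress

# Hypothetical addressing: accounting is 10.10.0.0/24, R&D is 10.20.0.0/24.
ACL = [
    ("permit", "10.20.0.15/32", "10.10.0.0/24"),  # one R&D user with a legitimate need
    ("deny", "10.20.0.0/24", "10.10.0.0/24"),     # all other R&D hosts
]


def is_permitted(src: str, dst: str, acl=ACL) -> bool:
    """Evaluate rules in order; the first matching rule decides."""
    src_addr = ipaddress.ip_address(src)
    dst_addr = ipaddress.ip_address(dst)
    for action, src_net, dst_net in acl:
        if (src_addr in ipaddress.ip_network(src_net)
                and dst_addr in ipaddress.ip_network(dst_net)):
            return action == "permit"
    return False  # no rule matched: deny by default


print(is_permitted("10.20.0.15", "10.10.0.8"))  # True
print(is_permitted("10.20.0.16", "10.10.0.8"))  # False
```

Rule order matters in this model: placing the broad deny before the specific permit would block the authorized R&D user as well.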

The advantages of network segmentation during IR should be pretty obvious: compromises can be constrained to the network segment in which they started. To be clear, it is still possible to go from one segment to another, as was the case with the R&D users example. Some VLANs may also have vulnerabilities that could enable an attacker to jump from one to another without going through the gateway. Still, segmentation provides an important layer of defense that can help contain an incident. Without it, the resulting “flat” network will make it more difficult to contain an incident.

Isolation

Although it is certainly helpful to segment the network as part of its architectural design, we already saw that this can still allow an attacker to move easily between hosts on the same subnet. As part of your preparations for IR, it is helpful to establish an isolation VLAN, much like hospitals prepare isolation rooms before any contagious patients actually need them. The IR team would then have the ability to move any compromised or suspicious hosts quickly to this VLAN until they can be further analyzed. The isolation VLAN would have no connectivity to the rest of the network, which would prevent the spread of any malware. This isolation would also prevent compromised hosts from communicating with external hosts such as C2 nodes. About the only downside to using isolation VLANs is that some advanced malware can detect this situation and then take steps to eradicate itself from the infected hosts. Although this may sound wonderful from an IR perspective, it does hinder your ability to understand what happened and how the compromise was executed so that you can keep it from happening in the future.

While a host is in isolation, the response team is safely able to observe its behaviors to gain information about the nature of the incident. By monitoring its network traffic, you can discover external hosts (for example, C2 nodes and tool repositories) that may be part of the compromise. This enables you to contact other organizations and get their help in shutting down whatever infrastructure the attackers are using. You can also monitor the compromised host’s running processes and file system to see where the malware resides and what it is trying to do on the live system. All this helps you better understand the incident and come up with the best way to eradicate it. It also enables you to create indicators of compromise (IOCs) that you can then share with others such as the Computer Emergency Readiness Team (CERT) or an Information Sharing and Analysis Center (ISAC).
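Connection attempts captured from an isolated host can be reduced to a short list of candidate IOCs. The sketch below counts attempts to destinations outside an assumed internal range. The `10.0.0.0/8` range, the record fields, and the sample addresses are assumptions; the output is a plain list of dictionaries, not a standard sharing format.

```python
import ipaddress
from collections import Counter

# Assumed internal address space; adjust to the real network.
INTERNAL_NETS = [ipaddress.ip_network("10.0.0.0/8")]


def external_destinations(connections):
    """Summarize attempted connections to hosts outside the internal ranges.

    `connections` is an iterable of (destination_ip, destination_port) pairs.
    Results are ordered from most to least frequently seen.
    """
    counts = Counter()
    for dst_ip, dst_port in connections:
        addr = ipaddress.ip_address(dst_ip)
        if not any(addr in net for net in INTERNAL_NETS):
            counts[(dst_ip, dst_port)] += 1
    return [
        {"type": "ipv4-port", "value": f"{ip}:{port}", "sightings": seen}
        for (ip, port), seen in counts.most_common()
    ]


# Hypothetical capture from a host in the isolation VLAN.
observed = [
    ("203.0.113.7", 8443),
    ("10.0.0.9", 445),
    ("203.0.113.7", 8443),
    ("198.51.100.20", 53),
]
for ioc in external_destinations(observed):
    print(ioc)
```

Each entry would still need analyst review before being shared, since legitimate software on the host may also be trying to reach external services.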

Removal

At some point in the response process, you may have to remove compromised hosts from the network altogether. This can happen after isolation or immediately upon noticing the compromise, depending on the situation. Isolation is ideal if you have the means to study the behaviors and gain actionable intelligence, or if you’re overwhelmed by a large number of potentially compromised hosts that need to be triaged. Still, one way or another, some of the compromised hosts will come off the network permanently.

When you remove a host from the network, you need to decide whether you will keep it powered on, shut it down and preserve it, or simply rebuild it. Ideally, the criteria for making this decision are already spelled out in the IR plan. Here are some of the factors to consider in this situation:

• Threat intelligence value A compromised computer can be a treasure trove of information about the tactics, techniques, and procedures (TTPs) and tools used by an adversary, particularly a sophisticated or unique one. If you have a threat intelligence capability in your organization and can gain new or valuable information from a compromised host, you may want to keep it running until its analysis is completed.

• Crime scene evidence Almost every intentional compromise of a computer system is a criminal act in many countries, including the United States. Even if you don’t plan to pursue a criminal or civil case against the perpetrators, it is possible that future IR activities will change your mind, in which case you would benefit from the evidentiary value of a removed host. If you have the resources, it may be worth your effort to make forensic images of the primary storage (for example, RAM) before you shut it down and of secondary storage (for example, the file system) before or after you power it off.

• Ability to restore It is not a happy moment for anybody in our line of work when we discover that, though we did everything by the book, we removed and disposed of a compromised computer that contained critical business information that was not replicated or backed up anywhere else. If we took and retained a forensic image of the drive, we could mitigate this risk, but otherwise, someone is going to have a bad day. This is yet another reason why you should, to the extent that your resources allow, keep as much of a removed host as possible.
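If you do retain forensic images, a common companion practice is to record a cryptographic hash of each image when it is acquired so that you can later show it has not changed. The following is a minimal sketch of that idea using SHA-256; the function names are our own for illustration, and a real forensic workflow would rely on purpose-built imaging tools and documented procedures.

```python
import hashlib


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Chunked reads keep memory use flat even for very large images.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def image_unchanged(path, recorded_hash):
    """Compare the image's current hash with the one recorded at acquisition."""
    return sha256_of_file(path) == recorded_hash.strip().lower()
```

Recording the hash in your case notes at acquisition time, and rechecking it before any analysis, gives you a simple integrity check on the preserved copy.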

The removal process should be well documented in the IR plan so that the right issues are considered by the right people at the right time. We address chain of custody and related issues in Chapter 18, but for now, suffice it to say that what you do with a removed computer can come back to haunt you if you don’t do it properly.

Eradication and Recovery

Once the incident is contained, you turn your attention to the eradication process, in which you return all systems to a known-good state. It is important that you gather evidence before you recover systems, because in many cases you won’t know that you need legally admissible evidence until days, weeks, or even months after an incident. It pays, then, to treat each incident as if it will eventually end up in a court of justice.

Once all relevant evidence is captured, you can fix all that was broken. The aim is to restore full, trustworthy functionality to the organization. For hosts that were compromised, the best practice is simply to reinstall the system from a gold master image and then restore data from the most recent backup that occurred prior to the attack.

Recovery in an IR is focused on ensuring that you have identified the corresponding attack vectors and implemented effective countermeasures against them. This stage presumes that you have analyzed the incident and verified the manner in which it was conducted. This analysis can be a separate post-mortem activity or can take place in parallel with the response.

Vulnerability Mitigation

Recovering from an incident means not only getting things back to where they were, but also not allowing your environment to be disrupted in the same way again. Mitigation involves eradicating the cause of any event or incident once it’s been accurately identified. By definition, every incident occurs because a threat actor exploits a vulnerability and compromises the security of an information system. It stands to reason, then, that after recovering from an incident, you would want to scan your systems for other instances of that same (or a related) vulnerability.

Although it is true that you will never be able to protect against every vulnerability, it is also true that you have a responsibility to mitigate those that have been successfully exploited, whether or not you thought they posed a high risk before the incident. The reason is that you now know that the probability of a threat actor exploiting it is 100 percent, because it already happened. And if it happened once, it is likely to happen again absent a change in your controls. The inescapable conclusion is that, after an incident, you need to implement a control that will prevent a recurrence of the exploitation and develop a plug-in for your favorite scanner that will test all systems for any residual vulnerabilities.
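A real scanner plug-in depends on the product you use, so we won't guess at any vendor's API here. The underlying check, though, is often as simple as comparing what is installed against the version that fixes the flaw. The sketch below assumes a hypothetical inventory that maps hostnames to purely numeric, dot-separated version strings; both the data shape and the function names are illustrative.

```python
def parse_version(text):
    """Turn a dotted numeric version such as '2.4.10' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def residual_vulnerable_hosts(inventory, fixed_version):
    """Return hosts whose installed version is older than the fixed version.

    inventory: dict of hostname -> installed version string (hypothetical format).
    fixed_version: first version that contains the fix.
    """
    fixed = parse_version(fixed_version)
    return sorted(
        host
        for host, version in inventory.items()
        if parse_version(version) < fixed
    )
```

Comparing tuples rather than raw strings matters: as text, "2.4.10" sorts before "2.4.2", which would wrongly flag a patched host.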

For the best results, this process will require coordination with your vulnerability management process, since vulnerability mitigation activities will likely change your environment. Your team will need a method to determine, first, if the vulnerability was sufficiently addressed and, second, if any of these changes introduce new conditions that may make the system more vulnerable to attack. Your vulnerability management team will often be a great ally to advise IR efforts related to vulnerability mitigation, since they will likely have the most experience and resources related to identifying, prioritizing, and remediating software flaws.

Sanitization

According to NIST Special Publication 800-88 Revision 1, Guidelines for Media Sanitization, sanitization refers to the process by which access to data on a given medium is made infeasible for a given level of effort. These levels of effort, in the context of IR, can be cursory or sophisticated. What we call cursory sanitization can be accomplished by simply reformatting a drive. It may be sufficient against run-of-the-mill attackers who look for large groups of easy victims and don’t put too much effort into digging their hooks deeply into any one victim. On the other hand, there are sophisticated attackers who may have deliberately targeted your organization and will go to great lengths to persist in your systems or, if repelled, compromise them again. This class of threat actor requires more advanced approaches to sanitization.

The challenge, of course, is that you don’t always know which kind of attacker is responsible for the incident. For this reason, simply reformatting a drive is a risky approach. Instead, we recommend one of the following techniques, listed in increasing level of effectiveness at ensuring the adversary is definitely denied access to data on the medium:

• Overwriting Overwriting data entails replacing the 1’s and 0’s that represent it on storage media with random or fixed patterns of 1’s and 0’s to render the original data unrecoverable. This should be done at least once (for example, overwriting the medium with 1’s, 0’s, or a pattern of these), but it may have to be done more than that to ensure that all data is destroyed.

• Encryption Many mobile devices take this quick and secure approach to render data unusable. The data stored on the medium is encrypted using a strong key. To render the data unrecoverable, the system securely deletes the encryption key, which is many times faster than deleting the encrypted data. Recovering the data in this scenario is typically computationally infeasible.

• Degaussing This is the process of removing or reducing the magnetic field patterns on conventional disk drives or tapes. In essence, a powerful magnetic force is applied to the media, which results in the wiping of the data and sometimes the destruction of the motors that drive the platters. Note that degaussing typically renders the drive unusable.

• Physical destruction Perhaps the best way to combat data remanence is simply to destroy the physical media. The two most commonly used approaches to destroying media are to shred them or expose them to caustic or corrosive chemicals. Another approach is incineration.
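To make the overwriting technique concrete, here is a minimal sketch that replaces the contents of a single file with random bytes. It is a teaching aid only and rests on a big assumption: that writes to the file land on the same physical locations as the original data. That is often not true on solid-state drives, journaling or copy-on-write file systems, or anywhere snapshots exist, so it should not be mistaken for media sanitization. The function name and parameters are our own.

```python
import os


def overwrite_file(path, passes=1, chunk_size=1024 * 1024):
    """Overwrite a file's contents in place with random bytes.

    Illustrative only: this works at the file level, not the media level.
    """
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        for _ in range(passes):
            f.seek(0)
            remaining = size
            while remaining > 0:
                n = min(chunk_size, remaining)
                f.write(os.urandom(n))
                remaining -= n
            # Push the new data out of Python and OS buffers to the device.
            f.flush()
            os.fsync(f.fileno())
```

For actual sanitization, use a tool or device feature designed for the specific medium and verify the result, as the NIST guidance describes.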

Reconstruction

Once a compromised host’s media is sanitized, your next step is to rebuild the host to its pristine state. The best approach to doing this is to ensure you have created known-good, hardened images of the various standard configurations for hosts on your network. These images are sometimes called gold masters and facilitate the process of rebuilding a compromised host. This reconstruction is significantly more difficult if you manually reinstall the operating system, configure it so it is hardened, and then install the various applications and/or services that were in the original host. We don’t know anybody who, having gone through this dreadful process once, doesn’t invest the time to build and maintain gold images thereafter.

Another aspect of reconstruction is the restoration of data to the host. Again, there is one best practice here, which is to ensure you have up-to-date backups of the system data files. This is also key for quickly and inexpensively dealing with ransomware incidents. Sadly, in too many organizations, backups are the responsibility of individual users. If your organization does not enforce centrally managed backups of all systems, your only other hope is to ensure that data is maintained in a managed data store such as a file server.

Secure Disposal

When you’re disposing of media or devices as a result of an IR, any of the four techniques covered earlier (overwriting, encryption, degaussing, or physical destruction) may work, depending on the device. Overwriting is usually feasible only with regard to hard disk drives and may not be available on some solid-state drives. Encryption-based purging can be found in multiple workstation, server, and mobile operating systems, but not in all. Degaussing works on magnetic media only, but some of the most advanced magnetic drives use stronger fields to store data and may render older degaussers inadequate. Note that we have not mentioned network devices such as switches and routers, which typically don’t offer any of these alternatives. In the end, the only way to dispose of these devices securely is by physically destroying them using an accredited process or service provider. This physical destruction involves the shredding, pulverizing, disintegration, or incineration of the device. Although this may seem extreme, it is sometimes the only secure alternative left.

Patching

Many of the most damaging incidents are the result of an unpatched software flaw. This vulnerability can exist for a variety of reasons, including failure to patch a known vulnerability or the existence of a heretofore unknown vulnerability, also known as a zero-day. As part of the IR, the team must determine which cause is the case. The first would indicate an internal failure to keep patches updated, whereas the second would all but require notification to the vendor of the product that was exploited so a patch can be developed.

Many organizations rely on endpoint protection that is not centrally managed, particularly in a “bring-your-own-device” (BYOD) environment. As a result, if a user or device fails to download and install an available patch, it causes a vulnerability, which can become an incident. If this is the case in your organization and you are unable to change the policy to require centralized patching, you should also assume that some number of endpoints will fail to be patched, so you should develop compensatory controls elsewhere in your security architecture. For example, by implementing network access control (NAC), you can test any device attempting to connect to the network for patching, updates, antimalware, and any other policies you want to enforce. If the endpoint fails any of the checks, it is placed in a quarantine network that may allow Internet access (particularly for downloading patches) but keeps the device from joining the organizational network and potentially spreading malware.
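The NAC decision described above boils down to a posture check followed by a network assignment. The sketch below models that logic in the abstract; the `DevicePosture` fields, the seven-day signature threshold, and the network names are assumptions for illustration and do not reflect any particular NAC product.

```python
from dataclasses import dataclass


@dataclass
class DevicePosture:
    """Hypothetical posture attributes reported by a connecting endpoint."""
    hostname: str
    patches_current: bool
    antimalware_running: bool
    signatures_age_days: int


def assign_network(device, max_signature_age_days=7):
    """Return (network, failed_checks) for a device requesting access."""
    failed = []
    if not device.patches_current:
        failed.append("patches")
    if not device.antimalware_running:
        failed.append("antimalware")
    if device.signatures_age_days > max_signature_age_days:
        failed.append("signatures")
    # Any failed check sends the device to quarantine until it is remediated.
    network = "quarantine" if failed else "production"
    return network, failed
```

Returning the list of failed checks alongside the decision lets the quarantine network tell users exactly what they need to fix.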

If, on the other hand, your organization uses centralized patches and updates, the vulnerability was known, and still it was successfully exploited, this points to a failure within whatever system or processes you are using for patching. Part of the response would be to identify the failure, correct it, and then validate that the fix is effective at preventing a repeated incident in the future.

Restoration of Permissions

For many organizations, recovery is an effort to restore business operations quickly and efficiently, and it also addresses the issues that contributed to that incident. Although recovery operations will ideally leave you in a better place than before the incident, just getting access back at the same level is a win for many security teams. This often means that a reliable backup solution is in place and that backups have been tested and verified as part of the overall IR process. The rebuilding phase is not the time to discover that a backup you relied on was never verified.

Validating Permissions

There are two principal reasons for validating permissions before you wrap up your IR activities. The first is that inappropriately elevated permissions may have been a cause of the incident in the first place. It is not uncommon for organizations to allow excessive privileges for their users. One of the most common reasons we’ve heard is that if the users don’t have administrative privileges on their devices, they won’t be able to install whatever applications they’d like to try out in the name of improving their efficiency. Of course, we know better, but this may be an organizational culture issue that is beyond your power to change. Still, documenting the incidents (and their severity) that are the direct result of excessive privileges may, over time, move the needle in the direction of common sense.

Not all permissions issues can be blamed on end users. Time and again, we’ve seen system or domain admins who do all their work (including surfing the Web) using their admin account. Furthermore, most of us have heard of (or had to deal with) the discovery that a system admin who left the organization months or even years ago still has a valid account. The aftermath of an IR provides a great opportunity to double-check on issues like these.

The second reason is that interactive attackers very commonly create or hijack administrative accounts so that they can do their nefarious deeds undetected. Although it may be odd to see an anonymous user in Russia accessing sensitive resources on your network, you probably wouldn’t get too suspicious if you saw one of your fellow admin staff members moving those files around. If there is any evidence that the incident leveraged an administrative account, it would be a good idea to delete that account and, if necessary, issue a new one to the victimized administrator. While you’re at it, you may want to validate that all other accounts are needed and protected.
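A post-incident account review along these lines can start as a simple cross-check of the account list against the current staff roster. The sketch below assumes a hypothetical export in which each account is a dictionary with `username`, `owner`, `is_admin`, and `last_login` keys, and it uses an arbitrary 90-day idle threshold; adjust both to whatever your directory service actually provides.

```python
from datetime import date


def accounts_to_review(accounts, current_staff, today, max_idle_days=90):
    """Flag accounts that deserve a closer look after an incident.

    accounts: iterable of dicts with keys 'username', 'owner', 'is_admin',
              and 'last_login' (a datetime.date). Hypothetical export format.
    current_staff: set of owner identifiers who are still with the organization.
    """
    findings = {}
    for acct in accounts:
        reasons = []
        if acct["owner"] not in current_staff:
            reasons.append("owner no longer on staff")
        idle_days = (today - acct["last_login"]).days
        if acct["is_admin"] and idle_days > max_idle_days:
            reasons.append("admin account idle")
        if reasons:
            findings[acct["username"]] = reasons
    return findings
```

The output is a review list, not a deletion list. Someone who knows the environment should confirm each finding before an account is disabled.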

Restoration of Services and Verification of Logging

There are two key aspects of restoring services that the security team will be directly involved in. The first is developing and executing the processes for network service validation and testing to certify that all systems are operational and provide the requisite level of service. The second, less obvious aspect is to ensure that, along with certifying that any vulnerable services are taken offline, the team has the necessary visibility and logging to detect any future issues.
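One simple way to verify logging after services are restored is to confirm that every service you expect to hear from has actually produced a recent log entry. The sketch below assumes you can obtain, per service, the timestamp of the last log record received by your collector; the mapping, the service names in the usage note, and the 15-minute threshold are hypothetical.

```python
from datetime import datetime, timedelta


def silent_services(last_log_seen, now, max_silence=timedelta(minutes=15)):
    """Return services with no log entry within the allowed window.

    last_log_seen: dict of service name -> datetime of last log record,
                   or None if nothing has ever been received.
    """
    return sorted(
        name
        for name, seen in last_log_seen.items()
        if seen is None or now - seen > max_silence
    )
```

For example, given last-seen times for "smtp", "imap", and "webmail", any service that has been quiet longer than the window, or that has never reported, shows up in the returned list for follow-up.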

Post-Incident Activities

No effective business process would be complete without some sort of introspection or opportunity to learn from and adapt to our experiences. This is the role of the corrective actions phase of an incident response. It is here that we apply the lessons learned and information gained from the process to improve our posture in the future.

Lessons-Learned Report

In our time in the Army, it was virtually unheard of to conduct any sort of operation (training or real world), or run any event of any size, without having a “hotwash” (a quick huddle immediately after the event to discuss the good, the bad, and the ugly) and/or an after-action review (AAR) to document issues and recommendations formally. It has been very heartening to see the same diligence in most nongovernmental organizations in the aftermath of incidents. Although there is no single best way to capture lessons learned, we’ll present one that has served us well in a variety of situations and sectors.

The general approach is that every participant in the operation is encouraged or required to provide his or her observations in the following format:

• Issue A brief (usually a single sentence) label for an important (from the participant’s perspective) issue that arose during the operation

• Discussion A (usually a paragraph long) description of what was observed and why it is important to remember or learn from it for the future

• Recommendation A recommendation that usually starts with a “sustain” or “improve” label if the contributor felt the team’s response was effective or ineffective (respectively)
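If your team collects these observations electronically, the three-part format maps naturally onto a small record type. The sketch below is one possible representation; it assumes, purely for illustration, that contributors write the label as a prefix followed by a colon (for example, "Improve: ...").

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One participant's input in issue/discussion/recommendation form."""
    issue: str
    discussion: str
    recommendation: str

    def label(self):
        """Return 'sustain' or 'improve' if the recommendation is labeled."""
        first = self.recommendation.split(":", 1)[0].strip().lower()
        return first if first in ("sustain", "improve") else None


def group_by_label(observations):
    """Organize observations by label ahead of the review session."""
    groups = {"sustain": [], "improve": [], "unlabeled": []}
    for obs in observations:
        groups[obs.label() or "unlabeled"].append(obs)
    return groups
```

Grouping the inputs this way before the session makes it easier for the facilitator to spot repeated items and to balance what went well against what did not.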

Every participant’s input is collected and organized before the AAR. Usually all inputs are discussed during the review session, but occasionally the facilitator will choose to disregard some if he or she thinks they are repetitive (of others’ inputs) or would be detrimental to the session. As the issues are discussed, they are refined and updated with other team members’ inputs. At the conclusion of the AAR, the group (or the person in charge) decides which issues deserve to be captured as lessons learned, and those find their way into a final report. Depending on your organization, these lessons-learned reports may be sent to management, kept locally, and/or sent to a higher echelon clearinghouse.

Change Control Process

During the lessons learned or AAR process, the team will discuss and document important recommendations for changes. Although these changes may make perfect sense to the IR team, you must be careful about assuming that they should automatically be made. Every organization should have some sort of change control process. Oftentimes, this mechanism takes the form of a change control board (CCB), which consists of representatives of the various business units as well as other relevant stakeholders. Whether or not there is a board, the process is designed to ensure that no significant changes are made to any critical systems without careful consideration by all who may be affected.

Going back to an earlier example about an incident triggered by a BYOD policy in which every user could control software patching on their own devices, it is possible that the incident response team will determine that this is an unacceptable state of affairs and recommend that all devices on the network be centrally managed. This decision makes perfect sense from an information security perspective, but it would probably face some challenges in the legal and human resources departments. The change control process is the appropriate way to consider all perspectives and arrive at sensible and effective changes to the systems.

Updates to Response Plan

Regardless of whether the change control process implements any of the recommendations from the IR team, the response plan should be reviewed and, if appropriate, updated. Whereas the change control process implements organization-wide changes, the response team has much more control over the response plan. Absent sweeping changes, some compensation can happen at the IR team level.

As shown earlier in Figure 16-1, incident management is a process. In the aftermath of an event, we take actions that enable us to prepare better for future incidents, which starts the process all over again. Any changes to this lifecycle should be considered from the perspectives of the stakeholders with which we started this chapter. This will ensure that the IR team is creating changes that make sense in the broader organizational context. To get stakeholders’ perspectives, establishing and maintaining positive communications is paramount.

Summary Report

The post-incident report can be a very short one-pager or a lengthy treatise; it all depends on the severity and impact of the incident. Whatever the case, you must consider who will read the report and what interests and concerns will shape the manner in which they interpret it. Before you even begin to write it, you should consider one question: What is the purpose of this report? If the goal is to ensure that the IR team remembers some of the technical details of the response that worked (or didn’t), then you may want to write it in a way that persuades future responders to consider these lessons. This report would be very different from a report intended to persuade senior management to modify a popular BYOD policy to enhance security even if some are unhappy as a result. In the first case, the report would likely be technologically focused, whereas in the latter case, it would focus on the business’s bottom line.

Indicator of Compromise Generation

We already mentioned the creation of IOCs as part of isolation efforts in the containment phase of the response. Now you can leverage those IOCs by incorporating them into your network monitoring plan. Most organizations would add these indicators to rules in their intrusion detection or prevention system (IDS/IPS). You can also cast a wider net by providing the IOCs to business partners or even competitors in your sector. This is where organizations such as the US-CERT and the ISACs can be helpful in keeping large groups of organizations protected against known attacks.
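In an IDS/IPS, IOCs are expressed in the product's own rule language, which we won't attempt to reproduce here. The matching idea itself is straightforward, and the sketch below shows it against generic log events. The event fields (`dst_ip`, `sha256`, `host`) and the IOC layout are assumptions for the example, and indicator values are expected to be stored in lowercase.

```python
def match_iocs(events, iocs):
    """Return (event, field) pairs where an event field matches a known IOC.

    events: iterable of dicts with string values (hypothetical log format).
    iocs: dict of field name -> set of lowercase indicator values.
    """
    hits = []
    for event in events:
        for field, bad_values in iocs.items():
            value = event.get(field)
            # Normalize case so hash values match however they were logged.
            if value is not None and value.lower() in bad_values:
                hits.append((event, field))
    return hits
```

Keeping the indicators in a plain field-to-values structure like this also makes them easy to export when you share them with partners or an ISAC.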

Monitoring

So you have successfully responded to the incident, implemented new controls, and run updated vulnerability scans to ensure that everything is on the up and up. These are all important preventive measures, but you still need to ensure that you improve your ability to react to a return by the same (or a similar) actor. Armed with all the information on the adversary’s TTPs, you now need to update your monitoring plan to help you better detect similar attacks.

Chapter Review

This chapter sets the stage for the rest of our discussion on incident responses. It started and ended with a focus on the interpersonal element of IR. Even before we discussed the technical process itself, we discussed the preparatory steps that you as a security analyst, along with your greater security team, must take. Many an incident has turned into an RGE (resume-generating event) for highly skilled responders who did not understand the importance of practice, execution, and communication.

The technical part, by comparison, is a lot more straightforward. The incident recovery and post-incident response process consists of five discrete phases: preparation, detection and analysis, containment, eradication and recovery, and post-incident activities. Your effectiveness in this process is largely dictated by the amount of preparation you and your teammates put into it. If you have a good grasp on the risks facing your organization, develop a sensible plan, and rehearse it with all the key players periodically, you will likely do very well when your adversaries breach your defenses. In the next few chapters, we get into the details of the key areas of technical response.

Questions

1. The process of dissecting a sample of malicious software to determine its purpose is referred to as what?

A. Segmentation

B. Frequency analysis

C. Traffic analysis

D. Reverse engineering

2. During the IR process, when is a good time to perform a vulnerability scan to determine the effectiveness of corrective actions?

A. Change control process

B. Reverse engineering

C. Removal

D. Eradication and recovery

3. What is the key goal of the containment stage of an IR process?

A. To limit further damage from occurring

B. To get services back up and running

C. To communicate goals and objectives of the IR plan

D. To prevent follow-on actions by the adversary, such as data exfiltration

Use the following scenario to answer Questions 4–8:

You receive an alert about a compromised device on your network. Users are reporting that they are receiving strange messages in their inboxes and having problems sending e-mails. Your technical team reports unusual network traffic from the mail server. The team has analyzed the associated logs and confirmed that a mail server has been infected with malware.

4. You immediately remove the server from the network and route all traffic to a backup server. What stage are you currently operating in?

A. Preparation

B. Containment

C. Eradication

D. Validation

5. Now that the device is no longer on the production network, you want to restore services. Before you rebuild the original server to a known-good condition, you want to preserve the current condition of the server for later inspection. What is the first step you want to take?

A. Format the hard drive.

B. Reinstall the latest operating systems and patches.

C. Make a forensic image of all connected media.

D. Update the antivirus definitions on the server and save all configurations.

6. Your team has identified the strain of malware that took advantage of a bug in your mail server version to gain elevated privileges. Because you cannot be sure what else was affected on that server, what is your best course of action?

A. Immediately update the mail server software.

B. Reimage the server’s hard drive.

C. Write additional firewall rules to allow only e-mail–related traffic to reach the server.

D. Submit a request for next-generation antivirus for the mail server.

7. Your team believes it has eradicated the malware from the primary server. You attempt to bring affected systems back into the production environment in a responsible manner. Which of the following tasks will not be a part of this phase?

A. Applying the latest patches to server software

B. Monitoring network traffic on the server for signs of compromise

C. Determining the best time to phase in the primary server into operations

D. Using a newer operating system with different server software

8. Your team has successfully restored services on the original server and verified that it is free from malware. What activity should be performed as soon as practical?

A. Preparing the lessons-learned report

B. Notifying law enforcement to press charges

C. Notifying industry partners about the incident

D. Notifying the press about the incident

Answers

1. D. Reverse engineering is the process of decomposing malware to understand what it does and how it works.

2. D. Additional scanning should be performed during validation, part of the recovery process, to ensure that no additional vulnerabilities exist after remediation.

3. A. The goal of containment is to prevent or reduce the spread of this incident while you strive to eradicate it.

4. B. Containment is the set of actions that attempts to deny the threat agent the ability or means to cause further damage.

5. C. Since unauthorized access of computer systems is a criminal act in many areas, it may be useful to take a snapshot of the device in its current state using forensic tools to preserve evidence.

6. B. Generally, the most effective means of disposing of an infected system is a complete reimaging of a system’s storage to ensure that any malicious content was removed and to prevent reinfection.

7. D. The goal of the IR process is to get services back to normal operation as quickly and safely as possible. Introducing completely new and untested software may introduce significant challenges to this goal.

8. A. Preparing the lessons-learned report is a vital stage in the process after recovery. It should be performed as soon as possible after the incident to record as much information as possible and to complete any documentation that may be useful for any prosecution arising from the incident and for preventing future incidents.