26.4MediumBlockAllocator 419

A major improvement is to record the largest block that can be allocated in

the

Tail structure of the page, along with the bit index of where that block is lo-

cated. Armed with this information, the memory manager can simply keep a table

of page lists, ordered by big block size, so that allocation is always attempted on

a page that is known to have enough space. Thus, no searching is required once a

page is located, and pages can be located very quickly. More details are provided

on this later.

The second wrinkle is that allocation can work with the data as specified, but

we cannot free a block of memory without knowing how many blocks are in each

allocation. And without knowing how long the allocation is, there’s no way to

embed a length variable at the end of each allocation. Most competing memory

managers embed the length variable just before the pointer address in memory,

but we reject that option since it means wasting the first block in any given page

(which would precede any allocation on the page). That’s much too wasteful.

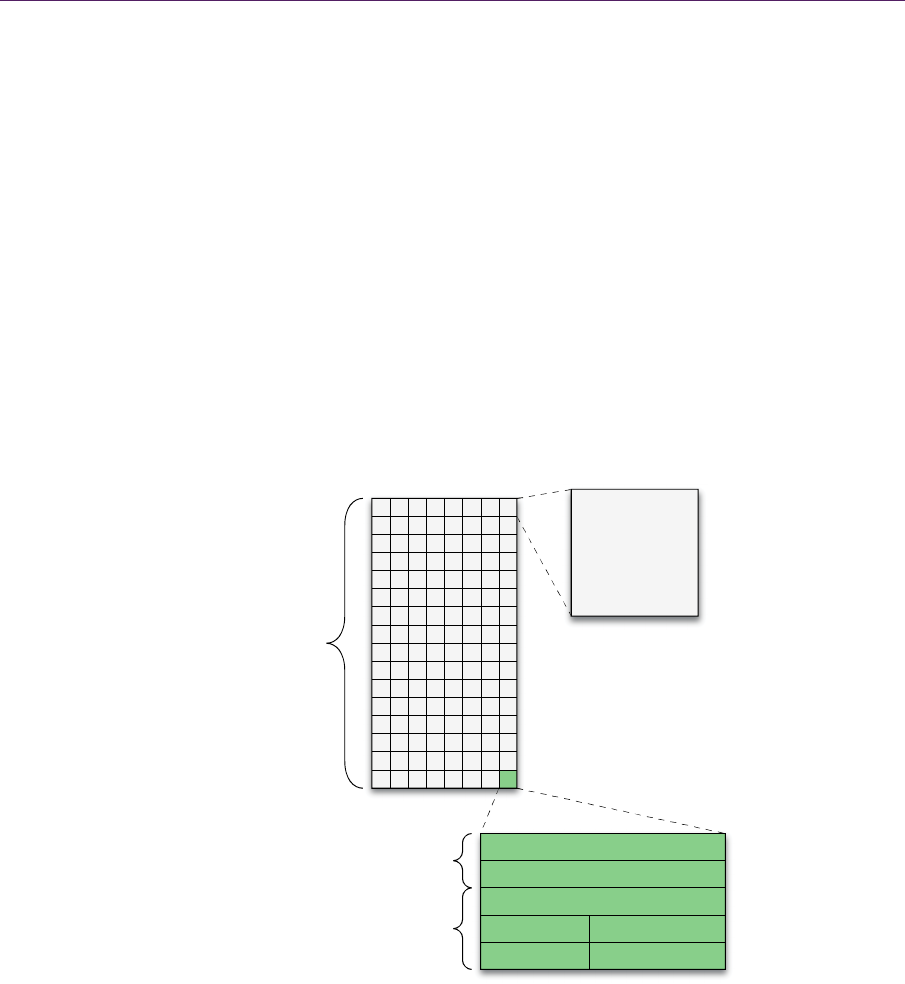

Figure 26.3. This is the memory layout of an MBA page. This particular example is a

16 kB page with 128-byte blocks. All of the management metadata fits within a single

block at the end of the page.

128 bytes/block

FreeBlockBitMask

EndofAllocBitMask

BigBlockIndex

FreeBlockCountBigBlockSize

Prev Page Next Page

MediumBlockAllocator P

a

ge

16kB Page

16 byteseach

4byteseach

420 26.AHighlyOptimizedPortableMemoryManager

Instead, we could store a value in an array at the end of the page, similar to how

the free block bit mask is stored, although that is quite expensive as well. Con-

sidering most of the allocations are larger than one block, many of the entries in

this array would be unused at any given time. Even if we stored the length per

block in one byte, it would be far too wasteful!

The solution: store a single bit in a bit mask that identifies whether a block is

at the end of an allocation. The pointer passed in to the

Free() function identi-

fies the start of the block already. Using these two pieces of information, we can

extrapolate the length of an allocation simply by scanning the bit mask forward

looking for a set bit. As a matter of standards, in the code provided, unallocated

blocks have their end-of-allocation bit set to zero, but it won’t ever be checked so

it shouldn’t matter. See Figure 26.3 for a more visual explanation.

HowAlloc()Works

Once a page is found, the MBA simply looks up the large block index in the

Tail structure, marks the range of bits as allocated in the free block bit mask,

and clears all the bits except the last one in the end-of-allocation bit mask. This is

enough information to allow the

Free() function to determine the length of the

original allocation given only the pointer address.

Now that the big block in the page has been at least partially allocated, we

have to find the new big block in the page. This entails scanning the free block

bit mask and finding the longest run of set bits, remembering the index and size

of the longest one. Once this is done, it is stored in the

Tail structure in the page

for future use.

The pointer to the allocation is returned to the memory manager. The

memory manager then must grab the big block size from the page and rearrange

that page to a different list based on the size of its big block, so future allocations

do not need to skip over insufficient memory pages.

HowFree()Works

Once the page manager has identified the page, the MBA begins by scanning the

end-of-allocation bit mask at the end of the page and counting 0 bits until a 1 bit

is found. This marks the end of the allocation and implicitly defines the length of

the allocation. We set the free block bit mask to one for all the blocks between

the start and end of the allocation, and clear the end-of-allocation bit to zero

(though this is not strictly necessary).

Since the memory region that was just released plus any adjacent free blocks

might sum to a larger big block, we update the big block size, just as was done in

26.4MediumBlockAllocator 421

the Alloc() function. Again, the memory manager requests this updated infor-

mation from the page and reorders its internal structures based on the big block

size in this page to optimize the next allocation.

PerformanceAnalysis

Allocation and deallocation are very fast. The act of taking possession of the re-

quested memory once a page is found is simply a few pointer additions and a

couple of memory dereferences. Setting a few bits in the free block bit mask is a

relatively quick

3

2

n operation when using 32-bit bitset operations, where n is

the number of blocks that the allocation spans.

Free() performs the same opera-

tions as

Alloc(), except that it reads bits from the free block bit mask rather than

sets them. However, searching for the big block takes

32m memory accesses,

where m is the number of blocks in the page. Since m is a constant for a given

page and block size, technically both of these analyses are bound by a constant

time (

1O ), meaning the number of cycles that is required can be computed at

compile time. Practically speaking, it is very fast, requiring only one L1 cache

line to be loaded if page and block sizes are carefully chosen for your architec-

ture.

Here’s a specific example: given a 16-kB page with 128 bytes per block,

there are 127 allocatable blocks per page plus one reserved block for the

Tail

structure. This is 127 bits for the bit mask, which fits in only four 32-bit words. A

good target is to make all MBA pages at least two times larger than the maxi-

mum medium block allocation size to improve memory performance when allo-

cating close to the maximum. In the worst case, a single allocation might be half

the size of a page, thus writing 64 bits, 32 bits at a time. The first load causes a

delay as a cache line is flushed and another is pulled into the L2 and L1 caches,

but the second load takes only one or two cycles on typical hardware.

MemoryOverhead

The MBA scheme is almost as efficient as the SBA approach, given that the

memory requirements are very similar with an extra bit mask and a couple more

words stored in the

Tail structure. For any page, the Tail structure is 20 bytes in

size. There is a fixed amount of overhead for the two bit masks, which is two bits

per block, aligned and rounded up to the next 32-bit word. For a 16-kB page

with 128 bytes per block, this is 32 bytes for bit masks. Management overhead

for this example is under three bits per block, but since allocations tend to span

many blocks, this could add up. The largest reasonable allocation in this example

page size would be 8 kB (half the page size), which covers 64 blocks. At two bits

422 26.AHighlyOptimizedPortableMemoryManager

per block, this worst-case allocation overhead is 16 bytes. dlmalloc appears to

have 16 bytes of overhead per allocation, regardless of its size, and those bytes

are spread out in memory causing random cache misses. In our system, the cache

lines involved in storing memory management metadata are far more likely to be

accessed again in the future.

26.5LargeBlockAllocator

There are many techniques for making memory management faster, but none so

easy as handling a few large allocations. In general, dealing with a few large al-

locations is the simplest of all possible cases and, thus, is really not worth putting

effort into. For this, feel free to substitute any allocation strategy that is suitably

efficient. dlmalloc can be configured to have an

mspace heap constrained within

the address range from which your large blocks are allocated. This is quite suffi-

cient.

If you plan to write your own LBA, a simple linked list of free blocks and a

separate linked list of allocated blocks would work fine. Best-fit strategies tend to

fragment more over time, but if your memory use tends to be generational (e.g.,

many allocations have matching lifetimes and are freed at the same time), almost

any method works fine. For very specific cases, you might explicitly carve out

memory for all large allocations or even use a simple stack allocator, where the

entire heap is reset with a single pointer being reset to the top of the stack. Use

what makes sense for your team and project.

The one point that should be made is that the system should have some way

of deciding whether a pointer is an LBA pointer or whether it belongs to the SBA

or MBA. The simplest way is to check the SBA and MBA first, although this

may become a performance problem if those operations are slow. Alternatively,

keeping a map, a hash table, or even a simple array of allocations may be fine.

There shouldn’t be too many large allocations, and unless your project allocates

and deallocates them frequently, any slowdown here is acceptable.

26.6PageManagementintheMemoryManager

One of the early performance bottlenecks of our system was the handling and

searching of pages inside the memory manager. Once each allocation strategy is

fast inside a single page, it is all the more critical to make sure the memory man-

ager itself can quickly figure out from what page to allocate memory. Finding the

right page to free memory from is really an operation that is performed by the

26.6PageManagementintheMemoryManager 423

OSAPI because it may have special knowledge of determining that based on the

page handling implementation.

SBAPages

Page management for small block pages is relatively straightforward. Since each

page has a specific block size that it can allocate, the memory manager simply

keeps two tables of linked lists of pages. One table contains pages that have abso-

lutely no free space in them (and are thus useless when trying to allocate

memory). The other table contains pages that have at least one free block availa-

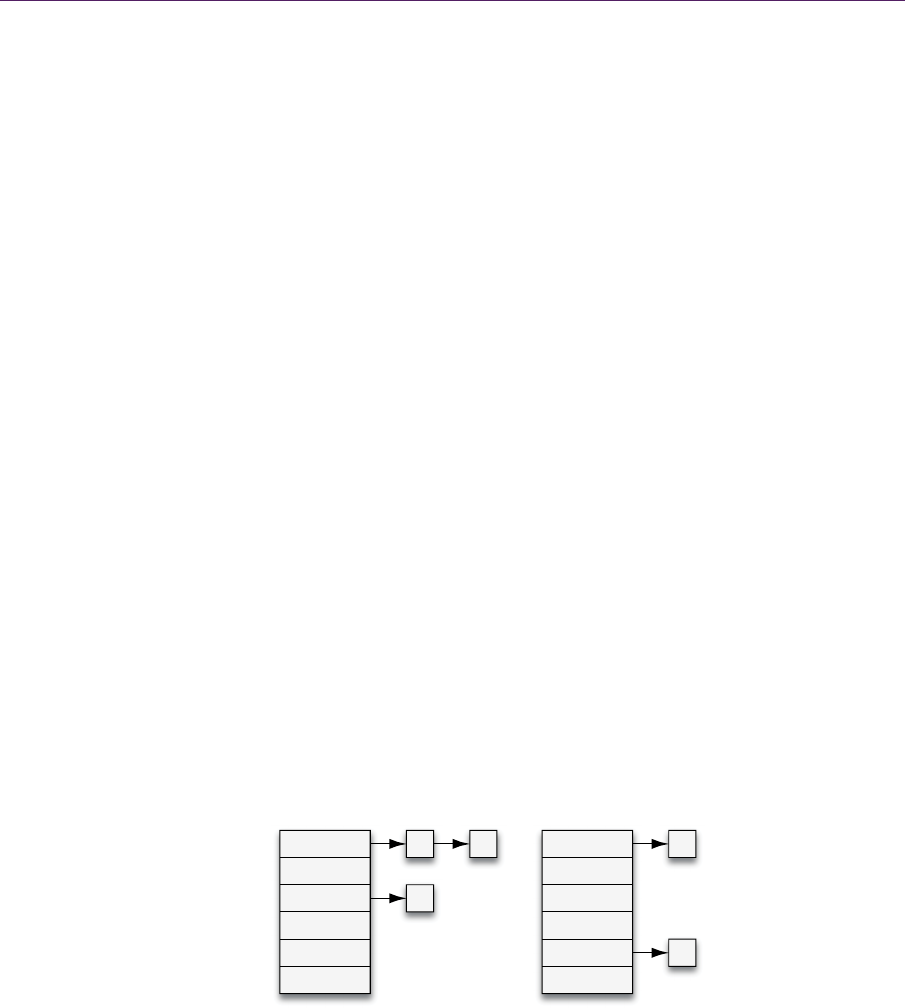

ble. As shown in Figure 26.4, each list contains pages that have equal block sizes,

so whenever a page fills up, it can be moved to the full table, and the next page in

the available list will service the next request. Any time a block is freed from a

page in the full list, that page is moved back to the available list for that block

size. Because of this, no searching for an allocation is ever required!

It should be noted that the current implementation of the page management

feature for SBA always requires allocations be as tightly fitting as possible. As a

result, any time there are no pages of exactly the correct block size, a new page

must be allocated for that block size and added to the available list. In low-

memory conditions, however, this could cause an early failure to allocate because

larger block sizes might be available but not considered for allocation. You may

wish to implement this as a fallback in case of page allocation failure to extend

the life of your game in the event of memory exhaustion. Alternatively, you

might always return a piece of memory that is already in an available page rather

than allocating a new page when a block size runs out of pages, which may help

utilize memory more effectively (although it is quite wasteful) because it will

Figure 26.4. The SBA always allocates a single sized object from each list of pages. So

to prevent searching, full pages are removed from the list from which we allocate.

16 bytes

24 bytes

32 bytes

40 bytes

48 bytes

56 bytes

16 bytes

24 bytes

32 bytes

40 bytes

48 bytes

56 bytes

P

a

geswithBlocks Available Full

P

a

ges

..................Content has been hidden....................

You can't read the all page of ebook, please click here login for view all page.