97

7

ASpatialandTemporalCoherence

FrameworkforReal‐TimeGraphics

Michał Drobot

Reality Pump Game Development Studios

With recent advancements in real-time graphics, we have seen a vast improve-

ment in pixel rendering quality and frame buffer resolution. However, those

complex shading operations are becoming a bottleneck for current-generation

consoles in terms of processing power and bandwidth. We would like to build

upon the observation that under certain circumstances, shading results are tempo-

rally or spatially coherent. By utilizing that information, we can reuse pixels in

time and space, which effectively leads to performance gains.

This chapter presents a simple, yet powerful, framework for spatiotemporal

acceleration of visual data computation. We exploit spatial coherence for geome-

try-aware upsampling and filtering. Moreover, our framework combines motion-

aware filtering over time for higher accuracy and smoothing, where required.

Both steps are adjusted dynamically, leading to a robust solution that deals suffi-

ciently with high-frequency changes. Higher performance is achieved due to

smaller sample counts per frame, and usage of temporal filtering allows conver-

gence to maximum quality for near-static pixels.

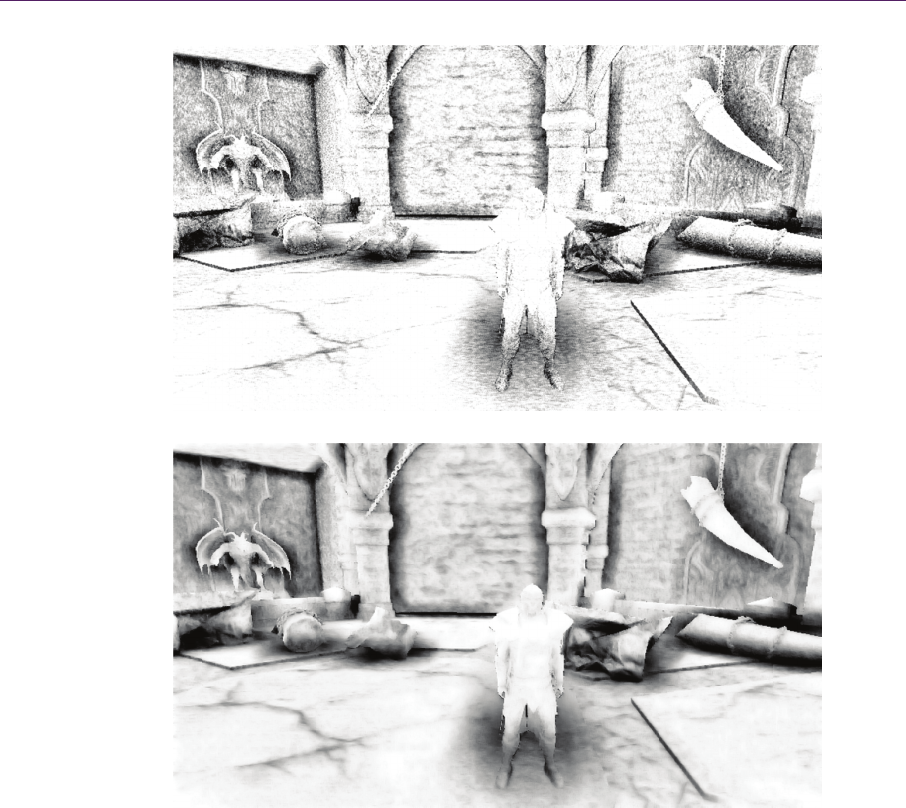

Our method has been fully production proven and implemented in a multi-

platform engine, allowing us to achieve production quality in many rendering

effects that were thought to be impractical for consoles. An example comparison

of screen-space ambient occlusion (SSAO) implementations is shown in Figure

7.1. Moreover, a case study is presented, giving insight to the framework usage

and performance with some complex rendering stages like screen-space ambient

occlusion, shadowing, etc. Furthermore, problems of functionality, performance,

and aesthetics are discussed, considering the limited memory and computational

power of current-generation consoles.

98

7.

1

(a)

(b)

Figure 7

.

spatiote

m

1

Introd

u

The mo

s

provem

e

duced,

l

7.ASp

.

1. (a) A conv

e

m

poral framew

o

u

ction

s

t recen

t

ge

n

e

nts in grap

h

l

ike deferred

atialandTe

m

e

ntional four-t

a

o

r

k

.

n

eration of g

a

h

ics renderin

g

lighting, p

e

m

poralCoher

e

a

p SSAO pas

s

a

me console

s

g

quality. Se

v

e

numbral so

ft

e

nceFrame

w

s

. (b) A fou

r

-t

a

s

has

b

roug

h

v

eral new te

ft

shadows,

s

w

orkforReal

‐

a

p SSAO pass

h

t some dra

m

chniques we

r

s

creen-space

‐

TimeGraphi

c

using the

m

atic im-

r

e int

r

o-

ambient

c

s

7.1Introduction 99

occlusion, and even global illumination approximations. Renderers have touched

the limit of current-generation home console processing power and bandwidth.

However, expectations are still rising. Therefore, we should focus more on the

overlooked subject of computation and bandwidth compression.

Most pixel-intensive computations, such as shadows, motion blur, depth of

field, and global illumination, exhibit high spatial and temporal coherency. With

ever-increasing resolution requirements, it becomes attractive to utilize those

similarities between pixels [Nehab et al. 2007]. This concept is not new, as it is

the basis for motion picture compression.

If we take a direct stream from our rendering engine and compress it to a lev-

el perceptually comparable with the original, we can achieve a compression ratio

of at least 10:1. What that means is that our rendering engine is calculating huge

amounts of perceptually redundant data. We would like to build upon that.

Video compressors work in two stages. First, the previous frames are ana-

lyzed, resulting in a motion vector field that is spatially compressed. The previ-

ous frame is morphed into the next one using the motion vectors. Differences

between the generated frame and the actual one are computed and encoded again

with compression. Because differences are generally small and movement is

highly stable in time, compression ratios tend to be high. Only keyframes (i.e.,

the first frame after a camera cut) require full information.

We can use the same concept in computer-generated graphics. It seems at-

tractive since we don’t need the analysis stage, and the motion vector field is eas-

ily available. However, computation dependent on the final shaded pixels is not

feasible for current rasterization hardware. Current pixel-processing pipelines

work on a per-triangle basis, which makes it difficult to compute per-pixel differ-

ences or even decide whether the pixel values have changed during the last frame

(as opposed to ray tracing, where this approach is extensively used because of the

per-pixel nature of the rendering). We would like to state the problem in a differ-

ent way.

Most rendering stages’ performance to quality ratio are controlled by the

number of samples used per shaded pixel. Ideally, we would like to reuse as

much data as possible from neighboring pixels in time and space to reduce the

sampling rate required for an optimal solution. Knowing the general behavior of

a stage, we can easily adopt the compression concept. Using a motion vector

field, we can fetch samples over time, and due to the low-frequency behavior, we

can utilize spatial coherency for geometry-aware upsampling. However, there are

several pitfalls to this approach due to the interactive nature of most applications,

particularly video games.

100 7.ASpatialandTemporalCoherenceFrameworkforReal‐TimeGraphics

This chapter presents a robust framework that takes advantage of spatiotem-

poral coherency in visual data, and it describes ways to overcome the associated

problems. During our research, we sought the best possible solution that met our

demands of being robust, functional, and fast since we were aiming for

Xbox 360- and PlayStation 3-class hardware. Our scenario involved rendering

large outdoor scenes with cascaded shadow maps and screen-space ambient oc-

clusion for additional lighting detail. Moreover, we extensively used advanced

material shaders combined with multisampling as well as a complex postpro-

cessing system. Several applications of the framework were developed for vari-

ous rendering stages. The discussion of our final implementation covers several

variations, performance gains, and future ideas.

7.2TheSpatiotemporalFramework

Our spatiotemporal framework is built from two basic algorithms, bilateral up-

sampling and real-time reprojection caching. (Bilateral filtering is another useful

processing stage that we discuss.) Together, depending on parameters and appli-

cation specifics, they provide high-quality optimizations for many complex ren-

dering stages, with a particular focus on low-frequency data computation.

BilateralUpsampling

We can assume that many complex shader operations are low-frequency in na-

ture. Visual data like ambient occlusion, global illumination, and soft shadows

tend to be slowly varying and, therefore, well behaved under upsampling opera-

tions. Normally, we use bilinear upsampling, which averages the four nearest

samples to a point being shaded. Samples are weighted by a spatial distance func-

tion. This type of filtering is implemented in hardware, is extremely efficient, and

yields good quality. However, a bilinear filter does not respect depth discontinui-

ties, and this creates leaks near geometry edges. Those artifacts tend to be dis-

turbing due to the high-frequency changes near object silhouettes. The solution is

to steer the weights by a function of geometric similarity obtained from a high-

resolution geometry buffer and coarse samples [Kopf et al. 2007]. During inter-

polation, we would like to choose certain samples that have a similar surface ori-

entation and/or a small difference in depth, effectively preserving geometry

edges. To summarize, we weight each coarse sample by bilinear, normal-

similarity, and depth-similarity weights.

Sometimes, we can simplify bilateral upsampling to account for only depth

discontinuities when normal data for coarse samples is not available. This solu-

7.2TheSpatiotemporalFramework 101

for (int i = 0; i < 4; i++)

{

normalWeights[i] = dot(normalsLow[i], normalHi);

normalWeights[i] = pow(vNormalWeights[i], contrastCoef);

}

for (int i = 0; i < 4; i++)

{

float depthDiff = depthHi – depthLow[i];

depthWeights[i] = 1.0 / (0.0001F + abs(depthDiff));

}

for (int i = 0; i < 4; i++)

{

float sampleWeight = normalWeights[i] * depthWeights[i] *

bilinearWeights[texelNo][i];

totalWeight += sampleWeight;

upsampledResult += sampleLow[i] * fWeight;

}

upsampledResult /= totalWeight;

Listing 7.1. Pseudocode for bilateral upsampling.

tion is less accurate, but it gives plausible results in most situations when dealing

with low-frequency data.

Listing 7.1 shows pseudocode for bilateral upsampling. Bilateral weights are

precomputed for a

22

coarse-resolution tile, as shown in Figure 7.2. Depending

on the position of the pixel being shaded (shown in red in Figure 7.2), the correct

weights for coarse-resolution samples are chosen from the table.

ReprojectionCaching

Another optimization concept is to reuse data over time [Nehab et al. 2007]. Dur-

ing each frame, we would like to sample previous frames for additional data, and

thus, we need a history buffer, or cache, that stores the data from previous

frames. With each new pixel being shaded in the current frame, we check wheth-

er additional data is available in the history buffer and how relevant it is. Then,

..................Content has been hidden....................

You can't read the all page of ebook, please click here login for view all page.