
21

Data-Driven Sound Pack Loading and Organization

Simon Franco

The Creative Assembly

21.1 Introduction

Typically, a game’s audio data, much like all other game data, cannot be stored in its entirety within the available memory of a gaming device. Therefore, we need to develop strategies for managing the loading of our audio data so that we only store in memory the audio data that the game currently needs. Large audio files that are too big to fit into memory, such as a piece of music or a long sound effect that only has one instance playing at a time, can potentially be streamed in. Streaming a file in this way means that only the currently needed portion of the file is loaded into memory. However, streaming does not solve the problem of audio files that may need several instances playing at the same time, such as gunfire or footsteps.

Sound effects such as these need to be fully loaded into memory. This allows the sound engine to play multiple copies of the same sound effect, often at slightly different times, without needing to stream the same file multiple times and use up the limited bandwidth of the storage media.

To minimize the number of file operations performed, we typically organize our audio files into sound packs. Each sound pack is a collection of audio files that need to be played either together at the same time or within a short time period of each other.

Previously, we would package up our audio files into simple sound packs. These would typically have been organized into a global sound pack, character sound packs, and level sound packs. The global sound pack would contain all audio files used by global sound events that occur across all levels of a game; these would typically have been player and user interface sound events. Character sounds would typically be organized so that there was one sound pack per type of character, containing all the audio files used by that character. Level sound packs would contain only the audio files used by sound events found on that particular level.

However, this method of organization is no longer sufficient, as a single level’s worth of audio data can easily exceed the amount of sound RAM available. Therefore, we must break up our level sound packs into several smaller packs so that we can fit the audio data needed by the current section of the game into memory. Each of these smaller sound packs contains audio data for a small, well-defined portion of the game. We then load these smaller sound packs and release them from memory as the player progresses through the game. An example of a small sound pack would be one containing woodland sounds, such as bird calls, trees rustling in the wind, and forest animals.

The first problem with these smaller sound packs is deciding which audio files are to be stored in each sound pack. Typically, sound packs such as the example woodland sound pack are hand-organized in a logical manner by the sound designer. However, this can waste memory, as sounds are grouped by their perceived relation to each other rather than by an actual requirement that they be bound together into a sound pack.

The second problem is deciding when to load a sound pack. Previously, a designer would place a load request for a sound pack into the game script. This would then be triggered either when the player walks into an area or after a particular event happens. An example of this would be loading a burning noises sound pack so that it is ready to be used after the player has finished a cut-scene in which they set fire to a building.

This chapter discusses methods to automate both of these processes. These methods allow the sound designer to place sounds into the world and have the system generate the sound packs and loading triggers. The system also alerts the sound designer if they have overpopulated an area and need to reduce either the number of variations of a sound or the sample quality.

21.2 Constructing a Sound Map

We examine the game world’s sound emitters and their associated sound events in order to generate a sound map. The sound map contains the information we require to build a sound event table. The sound event table provides us with the information needed to construct our sound packs and their loading triggers.


Sound emitters are typically points within 3D space representing the positions from which a sound will play. As well as having a position, an emitter also contains a reference to a sound event. A sound event is a collection of data dictating which audio file or files to play, along with information controlling how these audio files are played. For example, a sound event would typically store information on playback volume, pitch shifts, and any fade-in or fade-out durations.
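The emitter and event data described above can be pictured as two small records. The following is a minimal C++ sketch; every field name (audioFiles, pitchShiftSemitones, fadeInSeconds, audibleRange, and so on) is a hypothetical choice for illustration, and a real engine’s sound event format will carry more data.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical sound event record: which audio files to play and how to play them.
struct SoundEvent {
    std::string name;                     // e.g. "wooden_door_open"
    std::vector<std::string> audioFiles;  // one or more variations to choose from
    float volume = 1.0f;                  // linear playback volume
    float pitchShiftSemitones = 0.0f;     // pitch shift applied at playback
    float fadeInSeconds = 0.0f;
    float fadeOutSeconds = 0.0f;
    float audibleRange = 10.0f;           // distance beyond which the event cannot be heard
};

struct Vec3 {
    float x = 0.0f, y = 0.0f, z = 0.0f;
};

// A sound emitter is a point in 3D space that references a sound event.
struct SoundEmitter {
    Vec3 position;
    const SoundEvent* event = nullptr;  // non-owning: many emitters can share one event
};
```

Storing a pointer (or an ID) to a shared event, rather than a copy, keeps the emitter small, since a level may contain many emitters of the same event.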

Sound designers typically populate the game world with sound emitters in order to build up a game’s soundscape. Sound emitters may be scripted directly by the sound designer or may be automatically generated by other game objects. For example, an animating door may automatically generate wooden_door_open and wooden_door_close sound emitters.

Once all the sound emitters have been placed within the game world, we can begin our data collection. This should be done offline, as part of the process for building a game’s level or world data.

Each sound event has an audible range defined by its sound event data. By comparing the emitter’s distance from the listener’s position against this range, we calculate both the volume of the sound and whether the sound can be heard at all. The listener is the logical representation of the player’s ear in the world; it is typically at the same position as the game’s camera. We also use the audible range of each sound emitter to see which sound emitters overlap.
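A minimal sketch of the listener test follows. The chapter does not specify an attenuation curve, so the linear falloff in attenuatedVolume is purely an assumption for illustration; the function names are likewise hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float distanceBetween(const Vec3& a, const Vec3& b) {
    const float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// True if the listener lies within the emitter's audible range.
bool isAudible(const Vec3& emitter, float audibleRange, const Vec3& listener) {
    return distanceBetween(emitter, listener) <= audibleRange;
}

// ASSUMED linear falloff: full volume at the emitter, silent at the edge of the audible range.
float attenuatedVolume(const Vec3& emitter, float audibleRange, float baseVolume,
                       const Vec3& listener) {
    assert(audibleRange > 0.0f);
    const float d = distanceBetween(emitter, listener);
    if (d >= audibleRange) return 0.0f;
    return baseVolume * (1.0f - d / audibleRange);
}
```

Engines often use logarithmic or designer-authored curves instead; only the in-range test matters for building the sound map.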

We construct a sound map that stores an entry for each sound emitter found within the level data. The sound map can be thought of as a three-dimensional space representing the game world, but it contains only the sound emitters we found when processing the level data. Each sound emitter is stored in the sound map as a sphere whose radius is the audible range defined by the emitter’s sound event data.
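Because each emitter is a sphere, two emitters overlap exactly when the distance between their centers is no greater than the sum of their radii. The sketch below (type and function names are hypothetical) stores the sound map as a flat list of spheres and finds every overlapping pair with a brute-force pass, which is acceptable for an offline build step.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

struct Vec3 { float x, y, z; };

// One sound map entry: an emitter stored as a sphere whose radius is its event's audible range.
struct SoundSphere {
    std::string eventName;
    Vec3 center;
    float radius;
};

// Spheres overlap if the center distance is at most the sum of the radii.
// Comparing squared values avoids a square root.
bool overlaps(const SoundSphere& a, const SoundSphere& b) {
    const float dx = a.center.x - b.center.x;
    const float dy = a.center.y - b.center.y;
    const float dz = a.center.z - b.center.z;
    const float r = a.radius + b.radius;
    return dx * dx + dy * dy + dz * dz <= r * r;
}

// O(n^2) pass returning every overlapping pair of sound map indices (i < j).
std::vector<std::pair<std::size_t, std::size_t>> findOverlaps(
        const std::vector<SoundSphere>& soundMap) {
    std::vector<std::pair<std::size_t, std::size_t>> pairs;
    for (std::size_t i = 0; i < soundMap.size(); ++i)
        for (std::size_t j = i + 1; j < soundMap.size(); ++j)
            if (overlaps(soundMap[i], soundMap[j])) pairs.emplace_back(i, j);
    return pairs;
}
```

For very large levels, bucketing the spheres into a uniform grid or octree before testing would cut the number of pair tests; the overlap test itself stays the same.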

Once the sound map is generated, we can construct an event table containing

an entry for each type of sound event found in the level. For each entry in the

table, we must mark how many instances of that sound event there are within the

sound map, which other sound events overlap with them (including other in-

stances of the same sound event), and the number of instances in which those

events overlap. For example, if a single

Bird_Chirp sound emitter overlaps with

two other sound emitters playing the

Crickets sound event, then that would be

recorded as a single occurrence of an overlap between

Bird_Chirp and Crick-

ets

for the Bird_Chirp entry. For the Crickets entry, it would be recorded as

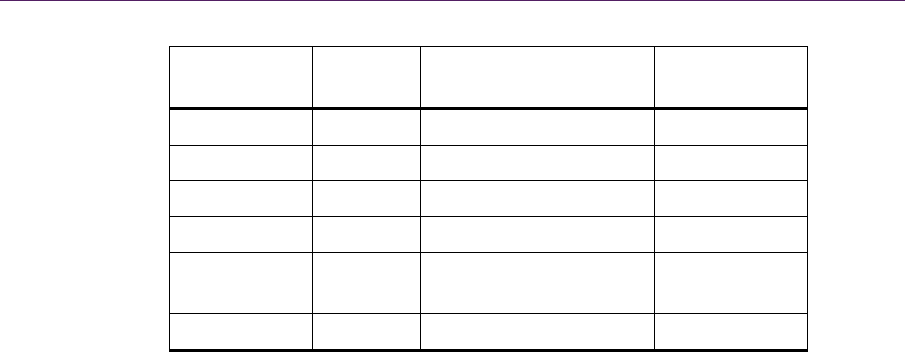

two instances of an overlap. An example table generated for a sample sound map

is shown in Table 21.1. From this data, we can begin constructing our sound

packs.


| Sound Event | Instance Count | Overlapped Events | Occurrences of Overlap |
|---|---|---|---|
| Bird_Chirp | 5 | Crickets, Bird_Chirp | 4, 2 |
| Waterfall | 1 | None | 0 |
| Water_Mill | 1 | NPC_Speech1 | 1 |
| NPC_Speech1 | 1 | Water_Mill | 1 |
| Crickets | 10 | Bird_Chirp, Crickets, Frogs | 7, 6, 1 |
| Frogs | 5 | Crickets, Frogs | 5, 5 |

Table 21.1. Sound events discovered within a level and details of any other overlapped events.
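The counting rule from the Bird_Chirp/Crickets example can be expressed directly: for an entry’s event E and another event F, count the emitters of E that overlap at least one emitter of F. The C++ sketch below builds such a table from a list of spheres; it is one reading of the rule that agrees with the example and with Table 21.1, and the type and function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <set>
#include <string>
#include <vector>

struct Vec3 { float x, y, z; };
struct SoundSphere { std::string eventName; Vec3 center; float radius; };

struct EventTableEntry {
    int instanceCount = 0;
    // Other event name -> number of this event's emitters overlapping at least one emitter of it.
    std::map<std::string, int> overlapOccurrences;
};

bool overlaps(const SoundSphere& a, const SoundSphere& b) {
    const float dx = a.center.x - b.center.x;
    const float dy = a.center.y - b.center.y;
    const float dz = a.center.z - b.center.z;
    const float r = a.radius + b.radius;
    return dx * dx + dy * dy + dz * dz <= r * r;
}

std::map<std::string, EventTableEntry> buildEventTable(const std::vector<SoundSphere>& soundMap) {
    std::map<std::string, EventTableEntry> table;
    for (std::size_t i = 0; i < soundMap.size(); ++i) {
        EventTableEntry& entry = table[soundMap[i].eventName];
        ++entry.instanceCount;
        // Collect the distinct events this emitter overlaps, so each counts once per emitter.
        std::set<std::string> overlapped;
        for (std::size_t j = 0; j < soundMap.size(); ++j)
            if (j != i && overlaps(soundMap[i], soundMap[j]))
                overlapped.insert(soundMap[j].eventName);
        for (const std::string& other : overlapped) ++entry.overlapOccurrences[other];
    }
    return table;
}
```

Using a set per emitter is what makes one Bird_Chirp overlapping two Crickets count as a single occurrence, while each of the two Crickets emitters contributes its own occurrence.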

21.3 Constructing Sound Packs by Analyzing the Event Table

The information in our newly generated event table allows us to start planning the construction of our sound packs. We use it to decide which sound events have their audio data built into sound packs that are loaded into memory and which sound events should be streamed instead.

There are several factors to consider when choosing whether to integrate a sound event’s audio data into a sound pack or to stream the data for that sound event while it is playing. For example, we must consider how our storage medium is used by other game systems. This is vital because it determines what streaming resources remain available for audio. If many other systems are streaming data from the storage medium, then this reduces the number of streamed samples we can play and places greater emphasis on making sounds part of loaded sound packs, even if those sound packs only contain a single audio file that is played once.

Determining Which Sound Events to Stream

Our first pass through the event table identifies sound events that should be streamed. If we decide to stream a sound event, then it is removed from the event table and added to a streamed event table. Entries in the streamed event table are formatted in a manner similar to those of the original event table: each contains the name of a streamed sound event and a list of other streamed sound events whose sound emitters overlap with an emitter of this sound event. To decide whether a sound event should be streamed, we take the following rules into account:

■ Is there only one instance of the sound event in the table? If so, then streaming it would be more efficient. The exception is when the audio file used by the event is too small, in which case we should load the audio file instead so that our streaming resources are reserved for more suitable files. A file is considered too small if it is smaller than one of our streaming audio buffers.

■ Does the sound event overlap with other copies of itself? If not, then it can be streamed: because the listener can never be within audible range of two of its instances at once, its audio data only needs to be streamed once at any given time.

■ Does the audio file used by the sound event have a file size bigger than the available amount of audio RAM? If so, then it must be streamed.

■ Are sufficient streaming resources, such as read bandwidth to the storage media, available to stream data for this sound event? This test is done after the other tests have been passed because we need to see which other streamed sound events may be playing at the same time. If too many are playing, then we need to prioritize the sound events: larger files should be streamed, and smaller audio files should be loaded into memory.
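The rules above can be sketched as two small functions: one applying the first three per-event rules, and one applying the fourth rule to a group of candidates that may play at the same time. This is a hedged illustration, not the chapter’s implementation; the struct fields, the byte-based budget, and the idea of a fixed maxConcurrentStreams limit are all assumptions standing in for whatever the target platform actually measures.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Facts about one sound event, gathered from the event table (hypothetical field names).
struct StreamCandidate {
    std::string eventName;
    int instanceCount;
    bool overlapsItself;   // does any instance overlap another instance of the same event?
    std::size_t fileSize;  // size of the event's audio file in bytes
};

// Hypothetical platform figures.
struct AudioBudget {
    std::size_t audioRamSize;      // audio RAM available for loaded sound packs
    std::size_t streamBufferSize;  // size of one streaming audio buffer
};

// First three rules: returns true if the event is a candidate for streaming.
bool wantsToStream(const StreamCandidate& c, const AudioBudget& budget) {
    if (c.fileSize > budget.audioRamSize) return true;  // cannot fit in audio RAM: must stream
    if (c.instanceCount == 1)
        return c.fileSize >= budget.streamBufferSize;   // single instance, unless too small
    return !c.overlapsItself;                           // copies can never be heard at once
}

struct StreamPlan {
    std::vector<StreamCandidate> streamed;
    std::vector<StreamCandidate> loaded;
};

// Fourth rule: among candidates that may play at the same time, stream only the
// maxConcurrentStreams largest files and load the smaller remainder into memory.
StreamPlan limitConcurrentStreams(std::vector<StreamCandidate> group,
                                  std::size_t maxConcurrentStreams) {
    std::stable_sort(group.begin(), group.end(),
                     [](const StreamCandidate& a, const StreamCandidate& b) {
                         return a.fileSize > b.fileSize;  // largest first
                     });
    StreamPlan plan;
    for (std::size_t i = 0; i < group.size(); ++i) {
        if (i < maxConcurrentStreams) plan.streamed.push_back(group[i]);
        else plan.loaded.push_back(group[i]);
    }
    return plan;
}
```

The small-file exception is applied only to the single-instance rule, as the text states it. The sketch also does not handle a demoted file that is itself too large for audio RAM; such a case would need to be reported to the sound designer rather than silently loaded.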

Using the data from Table 21.1, we can extract a streamed sound event table similar to that shown in Table 21.2. Table 21.2 lists three sound events that we believe to be suitable candidates for streaming; each has only a single instance in the level. Since Water_Mill and NPC_Speech1 overlap, we should make sure that the audio files for these events are placed close to each other on the storage media. This reduces seek times when reading the audio data for these events.

| Sound Event | Instance Count | Overlapped Events | Occurrences of Overlap |
|---|---|---|---|
| Waterfall | 1 | None | 0 |
| Water_Mill | 1 | NPC_Speech1 | 1 |
| NPC_Speech1 | 1 | Water_Mill | 1 |

Table 21.2. Sound events found suitable for streaming.
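One way to honor the placement advice is to compute a file order in which every group of overlapping streamed events is contiguous. The sketch below groups events with a union-find structure (our choice of technique; the chapter does not prescribe one) and returns an ordered list of event names. The function names are hypothetical, and how such an order is handed to a platform’s disc layout tool is platform specific and not shown.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <numeric>
#include <string>
#include <utility>
#include <vector>

// Minimal union-find (disjoint set) over event indices.
struct DisjointSet {
    std::vector<std::size_t> parent;
    explicit DisjointSet(std::size_t n) : parent(n) {
        std::iota(parent.begin(), parent.end(), std::size_t{0});  // each event starts alone
    }
    std::size_t find(std::size_t x) {
        while (parent[x] != x) {
            parent[x] = parent[parent[x]];  // path halving keeps trees shallow
            x = parent[x];
        }
        return x;
    }
    void unite(std::size_t a, std::size_t b) { parent[find(a)] = find(b); }
};

// Returns the streamed events ordered so that each group of (transitively) overlapping
// events is contiguous. Groups appear in order of their first member in `events`,
// and members keep their relative input order.
std::vector<std::string> layoutOrder(
        const std::vector<std::string>& events,
        const std::vector<std::pair<std::string, std::string>>& overlapPairs) {
    std::map<std::string, std::size_t> indexOf;
    for (std::size_t i = 0; i < events.size(); ++i) indexOf[events[i]] = i;

    DisjointSet sets(events.size());
    for (const auto& overlap : overlapPairs)
        sets.unite(indexOf.at(overlap.first), indexOf.at(overlap.second));

    std::map<std::size_t, std::size_t> groupOfRoot;  // set root -> position in `groups`
    std::vector<std::vector<std::string>> groups;
    for (std::size_t i = 0; i < events.size(); ++i) {
        const std::size_t root = sets.find(i);
        auto it = groupOfRoot.find(root);
        if (it == groupOfRoot.end()) {
            it = groupOfRoot.emplace(root, groups.size()).first;
            groups.emplace_back();
        }
        groups[it->second].push_back(events[i]);
    }

    std::vector<std::string> order;
    for (const auto& group : groups) order.insert(order.end(), group.begin(), group.end());
    return order;
}
```

For the events in Table 21.2 listed as Water_Mill, Waterfall, NPC_Speech1, this yields Water_Mill, NPC_Speech1, Waterfall, keeping the two overlapping files adjacent.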
