
[Equation lost in extraction; surviving symbols: max, N_x, N_y, N_z, e, R, x, y, z.]

This means that in the worst case, it takes 4 multiplications + 4 additions + 1 maximum + 1 comparison. When checking for back-facing triangles in both views, this improves the efficiency of the test by around 33 percent.
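As a rough illustration of how a single test can stand in for two, the sketch below assumes that both eyes share the same y and z coordinates and differ only in x, and that `d = dot(normal, vertex)` has been precomputed for the triangle. The function and parameter names are hypothetical, and this is not necessarily the chapter's exact formulation, so its operation count may differ from the one quoted above.

```python
def back_facing(normal, d, eye):
    """Classic single-eye test: the triangle faces away when N.E <= N.P."""
    nx, ny, nz = normal
    ex, ey, ez = eye
    return nx * ex + ny * ey + nz * ez <= d


def back_facing_for_both_eyes(normal, d, eye_left_x, eye_right_x, eye_y, eye_z):
    """Return True when the triangle faces away from both eyes.

    Assumption: the eyes differ only in x, so only the x term of N.E
    changes between them, and one max() picks the less favorable eye.
    """
    nx, ny, nz = normal
    worst_x = max(nx * eye_left_x, nx * eye_right_x)
    return worst_x + ny * eye_y + nz * eye_z <= d
```

A triangle can be culled for the whole stereo frame only when this returns `True`; a triangle that is back-facing for just one eye still has to be submitted.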

11.8 Supporting Both Monoscopic and Stereoscopic Versions

If a game supports both stereoscopic and monoscopic rendering, the stereoscopic version may have to reduce the scene complexity by using more aggressive levels of detail (geometry, assets, shaders) and possibly disabling some small objects or postprocessing effects. Thankfully, the human visual system does not judge depth only from the differences between what each eye sees; it also uses the similarities to improve the perceived resolution. In other words, reducing the video resolution has less of an impact in stereoscopic games than in monoscopic games, as long as the texture and antialiasing filters are kept optimal.

In this chapter, we focus on creating 3D scenes by using distinct cameras. It is also possible to reconstruct the 3D scene using a single camera and generate the parallax from the depth map and color separation, which can be performed very efficiently on modern GPUs¹ [Carucci and Schobel 2010]. The stereoscopic image can be created by rendering an image from one view-projection matrix and then projecting it, pixel by pixel, to a second view-projection matrix using both the depth map and the color information generated by the single view. One of the motivations behind this approach is to keep the cost of stereoscopic rendering as low as possible and so avoid compromises in resolution, detail, or frame rate.
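The pixel-by-pixel projection can be pictured with a small scanline sketch. It assumes a simple parallax model in which a pixel at depth `z` moves by `max_parallax_px * (1 - convergence / z)` pixels, so points at the convergence depth stay put. The function name, the parameters, and the sign convention are illustrative assumptions, not taken from the cited work.

```python
def reproject_scanline(colors, depths, max_parallax_px, convergence):
    """Forward-warp one scanline of the rendered view into the second view.

    colors: per-pixel colors of the rendered view
    depths: per-pixel view-space depth (> 0), same length as colors
    max_parallax_px: assumed parallax, in pixels, of infinitely distant points
    convergence: depth at which a pixel does not move (zero parallax)
    Returns the warped scanline; None marks holes nothing projected to.
    """
    width = len(colors)
    out_colors = [None] * width
    out_depths = [float("inf")] * width
    for x in range(width):
        z = depths[x]
        shift = round(max_parallax_px * (1.0 - convergence / z))
        target = x + shift
        # Keep the nearest surface when several pixels land on the same target.
        if 0 <= target < width and z < out_depths[target]:
            out_colors[target] = colors[x]
            out_depths[target] = z
    return out_colors
```

The `None` entries are disocclusion holes, areas the second eye should see but the single rendered view never captured. A real implementation has to fill them, for example from neighboring background pixels.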

11.9 Visual Quality

Some of the techniques used in monoscopic games to improve run-time performance, such as view-dependent billboards, might not work very well for close objects, as the left and right quads would face their own cameras at different angles. It is also important to avoid scintillating pixels as much as possible. Therefore, keeping good texture filtering and antialiasing ensures a good correlation between both images by reducing the difference in pixel intensities. The human visual system extracts depth information by interpreting 3D cues from a 2D picture, such as a difference in contrast. This means that large untextured areas lack such information; a sky filled with many clouds produces a better result than a uniform blue sky, for instance. Moreover, and this is more of an issue with active shutter glasses, a large localized contrast is likely to produce ghosting in the image (crosstalk).

¹ For example, see http://www.trioviz.com/.

Stereo Coherence

Both images need to be coherent in order to avoid side effects. For instance, if the two images differ in contrast, which can happen with postprocessing effects such as tone mapping, then there is a risk of producing the Pulfrich effect. This phenomenon is due to the signal for the darker image being received later by the brain than the signal for the brighter image; the difference in timing creates a parallax that introduces a depth component. Figure 11.10 illustrates this behavior with a circle rotating at the screen distance and one eye looking through a filter (such as sunglasses). Here, the eye that looks through the filter receives the image with a slight delay, leading to the impression that the circle rotates around the up axis.
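To see why a timing difference turns into parallax, consider a point that oscillates horizontally in the screen plane. The sketch below is only a toy model with hypothetical parameter names: the filtered eye is assumed to perceive the position the point had `delay` seconds earlier, and the difference between the two perceived positions acts like a stereo disparity.

```python
import math


def pulfrich_disparity(amplitude, angular_speed, delay, t):
    """Horizontal offset between what the unfiltered and filtered eyes see.

    The point moves as x(t) = amplitude * sin(angular_speed * t); the
    filtered eye is modeled as seeing x(t - delay).
    """
    x_now = amplitude * math.sin(angular_speed * t)
    x_delayed = amplitude * math.sin(angular_speed * (t - delay))
    return x_now - x_delayed
```

For small delays the result is close to velocity times delay, so it changes sign when the point reverses direction. The point therefore seems to pass in front of the screen one way and behind it the other way, which reads as a rotation in depth.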

Other effects, such as view-dependent reflections, can also alter the stereo coherence.

Figure 11.10. The Pulfrich effect.

Fast Action

The brain needs some time to accommodate to stereoscopic viewing before it registers the effect. As a result, very fast-moving objects might be difficult to interpret and can cause discomfort to the user.

3D Slider

A 3D slider allows the user to reduce the stereoscopic effect to a comfortable level. The slider controls the camera's interaxial distance and convergence. For instance, reducing the interaxial distance makes foreground objects move farther away, toward the comfortable area (see Figure 11.4), while reducing the convergence moves the objects closer. Users might adjust it to suit the screen size, their distance from the screen, or simply personal taste.
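One simple way to drive both camera properties from a single control is to blend between two presets. The sketch below is an assumption about how such a slider might be wired up: `StereoSettings`, `apply_slider`, and the two presets are hypothetical names, and the units are whatever the engine already uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StereoSettings:
    interaxial: float   # distance between the left and right cameras
    convergence: float  # convergence setting, in the engine's own units


def apply_slider(comfortable, full, slider):
    """Blend between a mild preset and the full-strength preset.

    slider is clamped to [0, 1]: 0 gives `comfortable`, 1 gives `full`.
    """
    s = min(1.0, max(0.0, slider))

    def lerp(a, b):
        return a + (b - a) * s

    return StereoSettings(
        interaxial=lerp(comfortable.interaxial, full.interaxial),
        convergence=lerp(comfortable.convergence, full.convergence),
    )
```

Setting the mild preset's interaxial distance to zero gives a slider whose lowest position is effectively monoscopic.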

Color Enhancement

With active shutter glasses, the complete image is generally darkened due to the LCD brightness level available on current 3D glasses. It is possible to minimize this problem by implementing a fullscreen postprocessing pass that enhances the colors, but it has to be handled with care because the increased contrast can increase the crosstalk between both images.

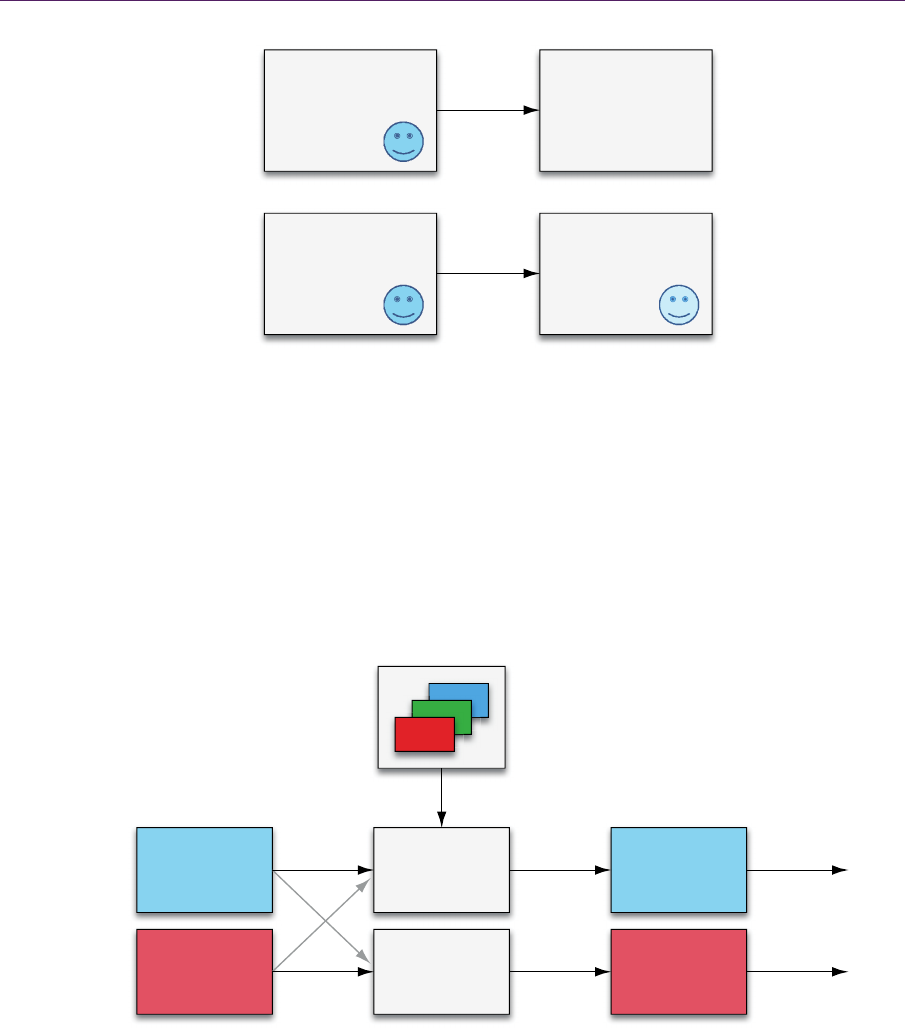

Crosstalk Reduction

Crosstalk is a side effect where the left and right image channels leak into each other, as shown in Figure 11.11. Some techniques reduce this problem by analyzing the color intensities of each image and subtracting the expected leakage from the frame buffer before sending the picture to the screen. This is done by creating calibration matrices that can be used to correct the picture. The concept consists of fetching the left and right scenes in order to extract the desired and unintended color intensities so that we can counterbalance the expected intensity leakage, as shown in Figure 11.12. This can be implemented using multiple render targets during a fullscreen postprocessing pass. This usually produces good results, but unfortunately, the matrices need to be tuned for each display, making the technique difficult to implement on a wide range of devices.
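The counterbalancing step can be sketched for a single pixel pair. This is a deliberately simplified first-order model: it replaces the calibration matrices with one hypothetical leakage fraction per color channel and assumes that a display shows `desired + leakage * unintended`. The function name and parameters are illustrative only.

```python
def reduce_crosstalk(left, right, leakage):
    """Pre-compensate one pixel pair for the expected intensity leakage.

    left, right: (r, g, b) tuples in [0, 1] for the same screen pixel
    leakage: assumed per-channel fraction of the other eye's image that
             leaks through (stands in for a per-display calibration)
    Returns the corrected (left, right) colors.
    """
    def compensate(desired, unintended):
        return tuple(
            min(1.0, max(0.0, d - k * u))
            for d, u, k in zip(desired, unintended, leakage)
        )

    return compensate(left, right), compensate(right, left)
```

The clamp at zero exposes a limit of subtractive correction: a black pixel cannot be made darker, so leakage into very dark areas stays visible unless the black level of the whole image is raised.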


Figure 11.11. Intensity leakage, where a remnant from the left image is visible in the right view. [Panels: left and right views with no crosstalk; left and right views with intensity leakage.]

Figure 11.12. Crosstalk reduction using a set of calibration matrices. [Diagram labels: left and right scene textures; crosstalk reduction L-R and R-L; corrected left and right scenes; input texture, calibration texture (R, G, B), frame buffer; desired and unintended intensities for display L and display R.]


Figure 11.13. Ortho-stereo viewing with head-tracked VR.

11.10 Going One Step Further

Many game devices now offer support for a front-facing camera. This opens new possibilities through face tracking, where “ortho-stereo” viewing with head-tracked virtual reality (VR) can be produced, as shown in Figure 11.13. This provides the ability to follow the user so that we can adapt the 3D scene based on the user's current position. We can also consider retargeting stereoscopic 3D by adopting a nonlinear disparity mapping based on the visual importance of scene elements [Lang et al. 2010]. In games, elements such as the main character could be provided to the system to improve the overall experience. The idea is to improve the 3D experience by ensuring that areas where the user is likely to focus are in the comfortable area and also to avoid divergence on faraway objects. For instance, it is possible to fix the depth for some objects or to apply depth of field to objects outside the convergence zone.
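For the head-tracked case, the view frustum has to follow the viewer, which makes it asymmetric whenever the head is off-center. The sketch below computes the near-plane bounds under simple assumptions: the screen is a rectangle centered at the origin, and the tracked eye position is expressed relative to it, in the same units as the screen size. All names are hypothetical.

```python
def head_tracked_frustum(screen_width, screen_height, eye, near):
    """Return (left, right, bottom, top) at the near plane for a tracked eye.

    eye: (x, y, z) of the viewer relative to the screen center, with z > 0
         being the distance from the screen plane.
    """
    ex, ey, ez = eye
    scale = near / ez  # similar triangles: screen plane -> near plane
    left = (-screen_width / 2 - ex) * scale
    right = (screen_width / 2 - ex) * scale
    bottom = (-screen_height / 2 - ey) * scale
    top = (screen_height / 2 - ey) * scale
    return left, right, bottom, top
```

The four values are the bounds that a `glFrustum`-style off-center projection expects. The view matrix must also be translated by the same eye position so that the screen rectangle stays fixed in the world as the head moves.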

11.11 Conclusion

Converting a monoscopic game to stereoscopic 3D requires less than twice the amount of processing, but some optimization is needed to absorb the additional rendering overhead. However, run-time performance is only one component of a stereoscopic 3D game, and more effort is needed to ensure the experience is comfortable to watch. A direct port of a monoscopic game might not create the best experience, and ideally, a stereoscopic version would be conceived in the early stages of development.

[Figure 11.13 labels: asymmetric field of view; dynamic view frustum; viewer; screen.]
