7. A Spatial and Temporal Coherence Framework for Real-Time Graphics

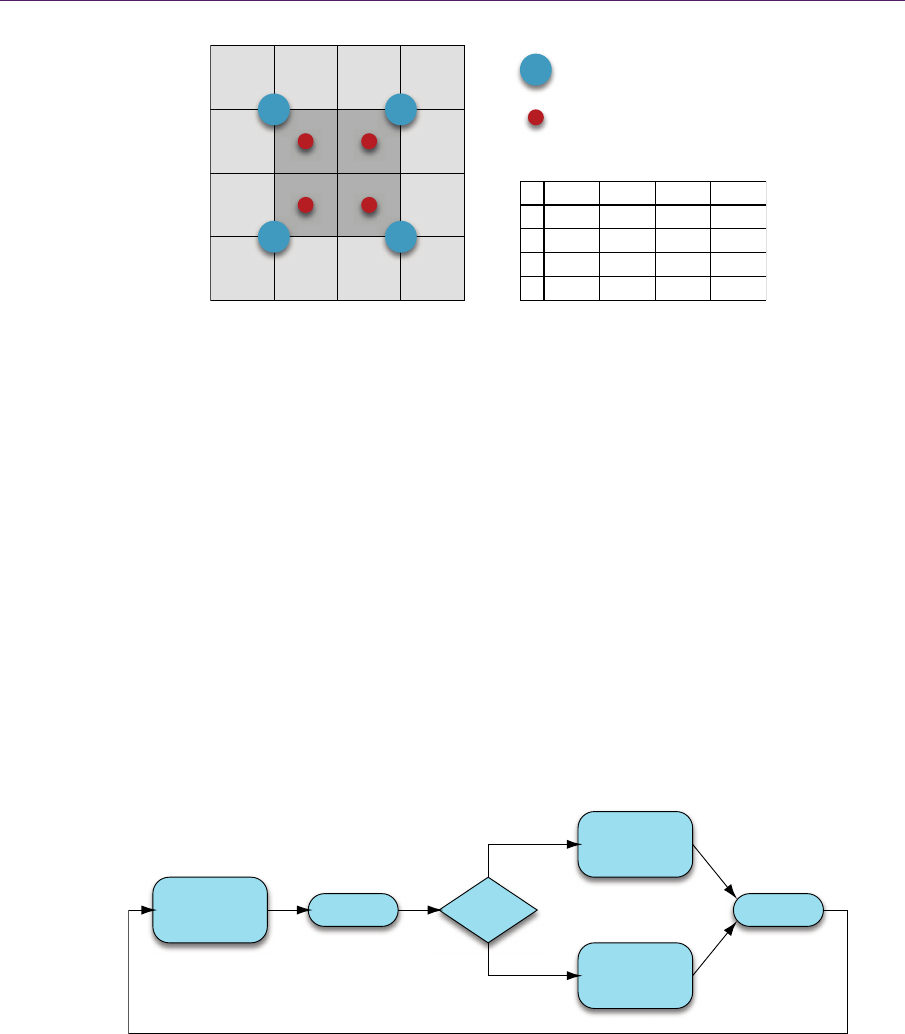

Figure 7.2. Bilinear filtering with a weight table.

we decide if we want to use that data, reject it, or perform some additional com-

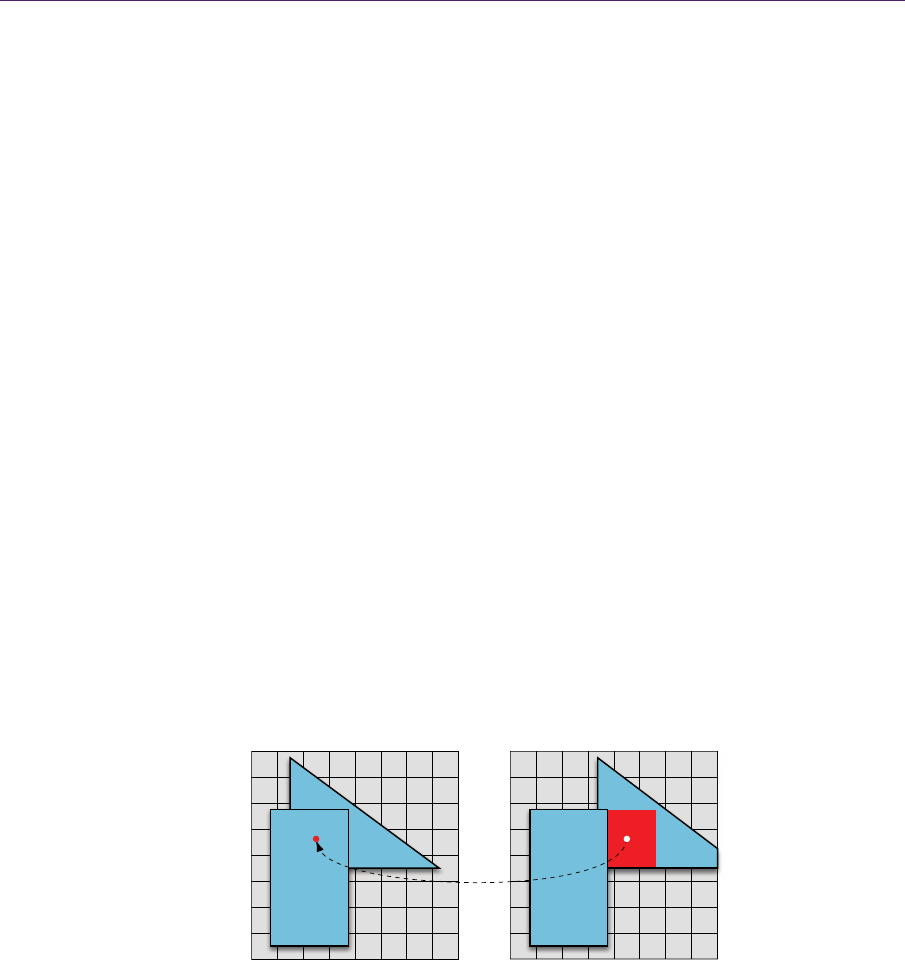

putation. Figure 7.3 presents a schematic overview of the method.

For each pixel being shaded in the current frame, we need to find a corresponding pixel in the history buffer. To find correct cache coordinates, we need the pixel displacement between frames. Pixel movement results from object and camera transformations, so the solution is to reproject the current pixel coordinates using a motion vector. Coordinates must be treated with special care: results must be accurate, or repeated invalid cache resampling will leave flawed values in the history. Therefore, we perform the computation in full precision, and we account for any offsets involved when working with render targets, such as the half-pixel offset in DirectX 9.
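A minimal sketch of the cache-coordinate computation, written in C++ rather than shader code so it can run on the CPU. It assumes a motion vector measured in pixels that points from the previous frame's position to the current one, so the history sample lies at the pixel center minus the motion. The hypothetical `centerBias` parameter captures the half-pixel issue: Direct3D 9 rasterizes pixel centers at integer coordinates (for example, in `VPOS`), while texel centers sit at (i + 0.5) / size, so an integer pixel position needs +0.5 before normalization; Direct3D 10 and later report the center directly in `SV_Position`.

```cpp
#include <cassert>

// Hypothetical 2D vector type for illustration.
struct Float2 { float x, y; };

// Texture coordinate of the history (cache) sample for one pixel.
//  pixelPos     : position reported by the rasterizer for this pixel
//  centerBias   : 0.5f if pixelPos is the integer pixel index (Direct3D 9 VPOS),
//                 0.0f if pixelPos already holds the center (Direct3D 10+)
//  motionPixels : screen-space motion from the previous frame to this one,
//                 in pixels (assumed convention)
//  width/height : history buffer size in pixels
Float2 CacheCoord(Float2 pixelPos, float centerBias, Float2 motionPixels,
                  float width, float height)
{
    assert(width > 0.0f && height > 0.0f);
    // Center of the current pixel, in pixel units.
    const float cx = pixelPos.x + centerBias;
    const float cy = pixelPos.y + centerBias;
    // Step back along the motion vector, then normalize to [0, 1].
    return { (cx - motionPixels.x) / width, (cy - motionPixels.y) / height };
}
```

Both conventions land on the same texel center: pixel (3, 1) of an 8 × 4 buffer maps to (0.4375, 0.375) whether it arrives as (3, 1) with a bias of 0.5 or as (3.5, 1.5) with no bias.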

Coordinates of static objects can change only due to camera movement, so calculating their pixel motion vectors is straightforward. We reconstruct the pixel's position from its depth and project it back to screen space using the previous frame's camera and projection matrices (as in Listing 7.2). This calculation is fast and can be done in one fullscreen pass, but it does not handle moving geometry.

Figure 7.3. Schematic diagram of a simple reprojection cache. (Diagram labels: Calculate cache coord, Lookup, Check, Hit, Miss, Reuse/Accumulate, Reject/Recompute, Update.)

(Figure 7.2 labels: low-resolution samples 0 to 3 and high-resolution samples, with bilinear weights 9/16, 3/16, 3/16, and 1/16.)
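The camera-only reprojection for static geometry can be sketched on the CPU as follows. It mirrors the order of operations in Listing 7.2 (world-space position, previous camera matrix, previous projection matrix, perspective divide, NDC-to-texture mapping with a flipped y axis) and uses HLSL's row-vector convention, `mul(v, M)`. `Mat4`, `Mul`, and `ReprojectStatic` are illustrative names, and the half-pixel adjustment is omitted.

```cpp
#include <array>
#include <cassert>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;  // row-vector convention

// Row vector times matrix, matching HLSL's mul(v, M).
Vec4 Mul(const Vec4& v, const Mat4& m)
{
    Vec4 r{0.0f, 0.0f, 0.0f, 0.0f};
    for (int c = 0; c < 4; ++c)
        for (int k = 0; k < 4; ++k)
            r[c] += v[k] * m[k][c];
    return r;
}

struct Reprojected { float u, v, prevViewDepth; };

// Camera-only reprojection of a reconstructed world-space position.
Reprojected ReprojectStatic(float x, float y, float z,
                            const Mat4& prevView, const Mat4& prevProj)
{
    // Previous frame's camera space; its z is the depth to compare later.
    const Vec4 viewPos = Mul({x, y, z, 1.0f}, prevView);
    const Vec4 clip = Mul(viewPos, prevProj);
    assert(clip[3] != 0.0f);
    // Perspective divide to NDC, then NDC [-1, 1] -> texture [0, 1], y flipped.
    const float ndcX = clip[0] / clip[3];
    const float ndcY = clip[1] / clip[3];
    return { ndcX * 0.5f + 0.5f, ndcY * -0.5f + 0.5f, viewPos[2] };
}
```

With identity matrices, the point (0.5, 0.5, 0.3) lands at texture coordinate (0.75, 0.25); a previous camera translated by 0.5 along x moves it to (0.5, 0.25), which is the displacement the cache lookup must follow.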

Dynamic objects require per-pixel velocities, calculated by comparing the current camera-space position to the previous one on a per-object basis, taking skinning and transformations into account. In engines that calculate a frame-to-frame motion field (e.g., for per-object motion blur [Lengyel 2010]), we can reuse that data if it is pixel-correct. However, when that information is not available, or when the performance cost of an additional geometry pass for velocity calculation is too high, we can resort to camera reprojection. This, of course, produces incorrect cache coordinates for objects in motion, but depending on the application, that might be acceptable. Moreover, there are several situations in which pixels may not have a history, so we need a mechanism to detect them.
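For dynamic objects, one way to obtain the velocity is to transform the same object-space point (after skinning, if any) with this frame's and the previous frame's object-to-clip matrices and subtract the projected positions. In a renderer this would run per vertex, with the rasterizer interpolating the result per pixel. The sketch assumes both matrices are available per object and uses a row-vector `Mat4` matching HLSL's `mul(v, M)`; all names are hypothetical.

```cpp
#include <array>
#include <cassert>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;  // row-vector convention

struct Float2 { float x, y; };

// Projects an object-space point to texture space [0, 1] (y flipped).
Float2 ProjectToUV(const Vec4& p, const Mat4& objectToClip)
{
    Vec4 c{0.0f, 0.0f, 0.0f, 0.0f};
    for (int col = 0; col < 4; ++col)
        for (int k = 0; k < 4; ++k)
            c[col] += p[k] * objectToClip[k][col];
    assert(c[3] != 0.0f);
    return { c[0] / c[3] * 0.5f + 0.5f, c[1] / c[3] * -0.5f + 0.5f };
}

// Per-object motion in texture units, pointing from the previous position
// to the current one. Subtracting it from the current UV gives the cache UV.
Float2 ObjectVelocityUV(const Vec4& objectPos,
                        const Mat4& currObjectToClip, const Mat4& prevObjectToClip)
{
    const Float2 curr = ProjectToUV(objectPos, currObjectToClip);
    const Float2 prev = ProjectToUV(objectPos, prevObjectToClip);
    return { curr.x - prev.x, curr.y - prev.y };
}
```

An object that moved by 0.5 in clip-space x between frames yields a velocity of 0.25 in texture space, while a static object yields zero, which is the case camera reprojection alone already handles.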

Cache misses occur when there is no history for the pixel under consideration. The first and most obvious cause is sampling outside the history buffer, which often occurs near screen edges due to camera movement or objects moving into the view frustum. We simply check whether the cache coordinates are out of bounds and count such cases as misses.

The second reason for a cache miss is the case when a point p visible at time

t was occluded at time

1

t

by another point q. We can assume that it is impossi-

ble for the depths of

q and p to match at time 1

t

. We know the expected depth

of

p at time 1

t

through reprojection, and we compare that with the cached depth

at

q. If the difference is bigger than an epsilon, then we consider it a cache miss.

The depth at

q is reconstructed using bilinear filtering, when possible, to account

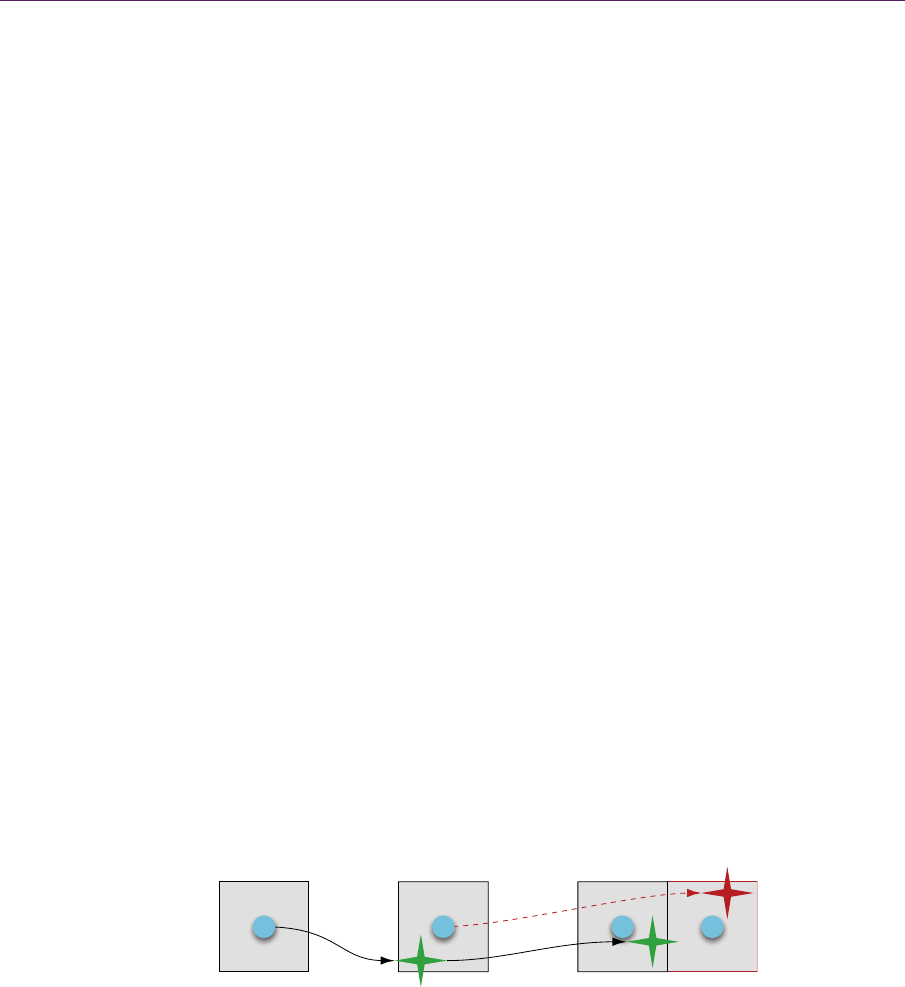

for possible false hits at depth discontinuities, as illustrated in Figure 7.4.

Figure 7.4. Possible cache-miss situation. The red area lacks history data due to occlu-

sion in the previous frame. Simple depth comparison between projected p and q from

1

t

is sufficient to confirm a miss.

qp

tt − 1
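Both miss tests can be combined into one function, sketched here under the assumption that the cached depth is available as a plain CPU array (`DepthBuffer` and the function names are hypothetical). The bilinear fetch is what matters at silhouettes: across a depth discontinuity it returns an intermediate value that matches neither surface, so the comparison fails instead of reporting a false hit from the wrong side of the edge.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical CPU stand-in for the cached depth (row-major texels).
struct DepthBuffer {
    int width;
    int height;
    std::vector<float> texels;
    float At(int x, int y) const { return texels[y * width + x]; }
};

// Bilinear depth fetch at (u, v) in [0, 1], texel centers at (i + 0.5) / size,
// clamp-to-edge addressing (as a hardware sampler would do).
float SampleDepthBilinear(const DepthBuffer& d, float u, float v)
{
    assert(d.width > 0 && d.height > 0);
    const float fx = u * d.width - 0.5f;
    const float fy = v * d.height - 0.5f;
    const int x0 = static_cast<int>(std::floor(fx));
    const int y0 = static_cast<int>(std::floor(fy));
    const float tx = fx - x0;
    const float ty = fy - y0;
    auto cx = [&](int x) { return x < 0 ? 0 : (x >= d.width ? d.width - 1 : x); };
    auto cy = [&](int y) { return y < 0 ? 0 : (y >= d.height ? d.height - 1 : y); };
    const float s00 = d.At(cx(x0), cy(y0)), s10 = d.At(cx(x0 + 1), cy(y0));
    const float s01 = d.At(cx(x0), cy(y0 + 1)), s11 = d.At(cx(x0 + 1), cy(y0 + 1));
    const float top = s00 + (s10 - s00) * tx;
    const float bottom = s01 + (s11 - s01) * tx;
    return top + (bottom - top) * ty;
}

// Cache miss: lookup outside the history buffer, or the expected depth of p
// differs from the cached depth at q by more than epsilon.
bool IsCacheMiss(const DepthBuffer& cache, float u, float v,
                 float expectedDepth, float epsilon)
{
    if (u < 0.0f || u > 1.0f || v < 0.0f || v > 1.0f)
        return true;
    const float cachedDepth = SampleDepthBilinear(cache, u, v);
    return std::fabs(expectedDepth - cachedDepth) > epsilon;
}
```

With a two-texel buffer holding depths 1 and 5, a lookup exactly between the texels returns 3, so a pixel expecting depth 1 is treated as a miss rather than picking up the background's history.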


If there is no cache miss, we sample the history buffer. In general, pixels do not map to individual cache samples, so some form of resampling is needed. Since the history buffer is coherent, we can generally treat it as a normal texture and leverage hardware support for linear and point filtering, choosing the method that suits the type of data being cached. Low-frequency data can be sampled using the nearest-neighbor method without significant loss of detail. On the other hand, point filtering may lead to discontinuities in the cache samples, as shown in Figure 7.5. Linear filtering correctly handles these artifacts, but repeated bilinear resampling over time smooths the data: with each iteration, the pixel neighborhood influencing the result grows, and high-frequency details may be lost. Last but not least, a solution that guarantees high quality uses a higher-resolution history buffer with nearest-neighbor sampling at the subpixel level. However, we cannot use it on consoles because of the additional memory requirements.
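The trade-off between point and linear filtering can be reproduced in one dimension. In this sketch (the helper names are hypothetical), a signal is shifted by half a pixel and back again, as cached data would be under back-and-forth subpixel motion. Linear interpolation, which is what bilinear filtering does along one axis, returns the impulse blurred to {0, 0.25, 0.5, 0.25, 0}, while nearest-neighbor sampling keeps it sharp but leaves it one pixel away from where it started.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Resamples a 1D signal at positions i + offset with linear interpolation
// and clamp-to-edge addressing.
std::vector<float> ResampleLinear(const std::vector<float>& s, float offset)
{
    const int n = static_cast<int>(s.size());
    std::vector<float> out(s.size());
    for (int i = 0; i < n; ++i) {
        const float pos = i + offset;
        const int i0 = static_cast<int>(std::floor(pos));
        const float t = pos - i0;
        const int a = std::min(std::max(i0, 0), n - 1);
        const int b = std::min(std::max(i0 + 1, 0), n - 1);
        out[i] = s[a] + (s[b] - s[a]) * t;
    }
    return out;
}

// Same resampling with nearest-neighbor (point) filtering.
std::vector<float> ResampleNearest(const std::vector<float>& s, float offset)
{
    const int n = static_cast<int>(s.size());
    std::vector<float> out(s.size());
    for (int i = 0; i < n; ++i) {
        const int j = static_cast<int>(std::floor(i + offset + 0.5f));
        out[i] = s[std::min(std::max(j, 0), n - 1)];
    }
    return out;
}
```

Each further round trip widens the linear result, which is the growing neighborhood described above; the point-filtered result never blurs, but its position error is the discontinuity of Figure 7.5.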

Motion, changes in surface parameters, and repeated resampling inevitably degrade the quality of the cached data, so we need a way to refresh it. We would like to minimize the shading error efficiently by setting the refresh rate proportional to the time difference between frames, and the update scheme should depend on the cached data.

If our data requires explicit refreshes, we have to guarantee a full cache update every n frames. That can easily be done by updating one of n parts of the buffer every frame in sequence. A simple tile-based approach or jittering could be used, but without additional processing such as bilateral upsampling or filtering, pattern artifacts may occur.
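One way to guarantee a full refresh every n frames is an interleaved round-robin pattern, sketched below. The k × k block layout and the function names are assumptions for illustration; refreshing one contiguous tile per frame would satisfy the same guarantee. Without filtering across the blocks, this fixed refresh order is exactly what can show up as the pattern artifacts mentioned above.

```cpp
#include <cassert>

// The screen is covered by k x k pixel blocks; on each frame, one position
// inside every block is recomputed, so every pixel is refreshed exactly once
// every n = k * k frames.
bool NeedsRefresh(int x, int y, int frame, int k)
{
    assert(k > 0 && x >= 0 && y >= 0 && frame >= 0);
    const int n = k * k;
    const int slot = (y % k) * k + (x % k);  // position inside the block
    return slot == frame % n;
}

// Counts how often pixel (x, y) is refreshed during frames [first, first + count).
int RefreshCount(int x, int y, int first, int count, int k)
{
    int hits = 0;
    for (int f = first; f < first + count; ++f)
        hits += NeedsRefresh(x, y, f, k) ? 1 : 0;
    return hits;
}
```

With k = 2, any four consecutive frames refresh every pixel exactly once, regardless of the starting frame.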

The reprojection cache seems to be more effective with accumulation func-

tions. In computer graphics, many results are based on stochastic processes that

combine randomly distributed function samples, and the quality is often based on

the number of computed samples. With reprojection caching, we can easily

amortize that complex process over time, gaining in performance and accuracy.

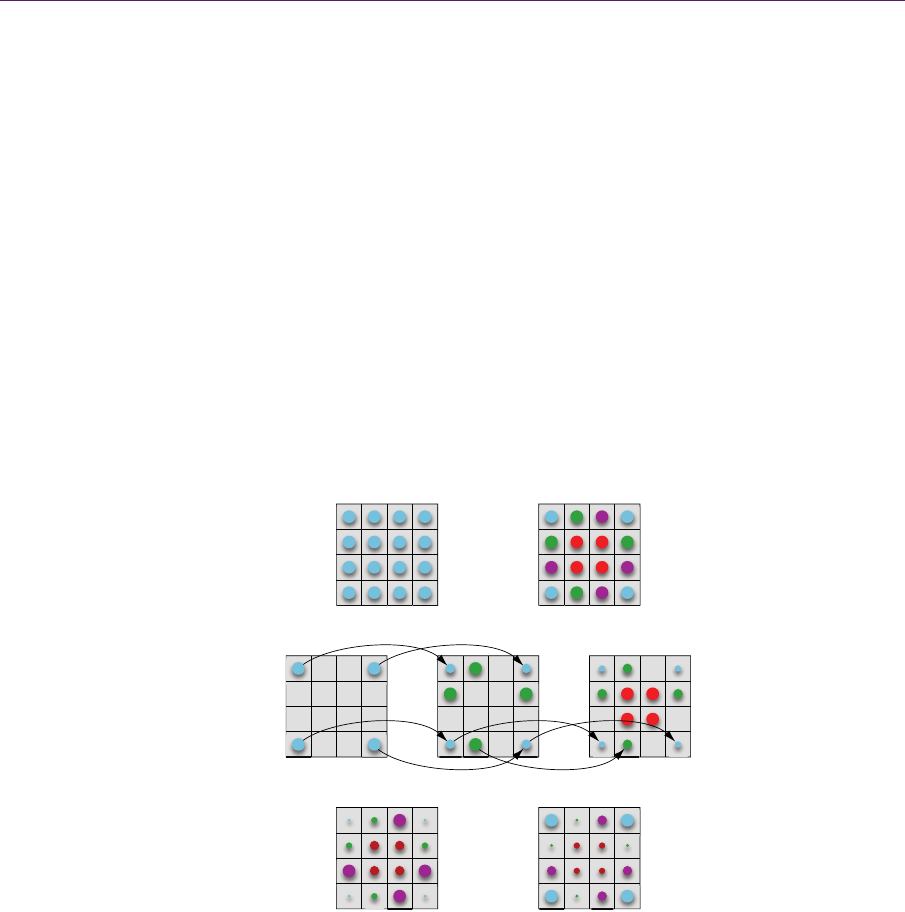

Figure 7.5. Resampling artifacts arising in point filtering. A discontinuity occurs when

the correct motion flow (yellow star) does not match the approximating nearest-neighbor

pixel (red star).

t = −1t = 0 t = −2

7.2TheSpatiotemporalFramework 105

This method is best suited for rendering stages based on multisampling and low-

frequency or slowly varying data.
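Amortizing a sampling process over time can be sketched with a running mean: each frame evaluates only a few samples, and the cached value is the average of all per-frame estimates since the last miss. This is a simpler accumulator than the exponential history described next, and the `Accumulator` type is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Accumulator {
    float mean = 0.0f;  // cached (history) value
    int frames = 0;     // frames accumulated since the last reset

    // Adds one frame's worth of stochastic samples for this pixel.
    void AddFrame(const std::vector<float>& frameSamples)
    {
        assert(!frameSamples.empty());
        float sum = 0.0f;
        for (float s : frameSamples) sum += s;
        const float frameEstimate = sum / static_cast<float>(frameSamples.size());
        ++frames;
        // Incremental mean: equal to averaging every frame's estimate.
        mean += (frameEstimate - mean) / static_cast<float>(frames);
    }

    // A cache miss discards the history.
    void Reset() { mean = 0.0f; frames = 0; }
};
```

After three frames with two samples each, the cached value equals the average over all six samples, although each frame only paid for two.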

When processing frames, we accumulate valid samples over time. This leads to an exponential history buffer that contains data integrated over several frames back in time. With each new set of accumulated values, the buffer is automatically updated, and we control the convergence through a dynamic factor and an exponential function. Variance is related to the number of frames contributing to the current result; controlling that number lets us decide whether response time or quality is preferred.
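The link between the convergence factor and variance can be made concrete with a short derivation under two assumptions: the history is updated as $h_t = h_{t-1}\,w + r_t\,(1-w)$ with a constant history weight $0 \le w < 1$, and the new values $r_t$ are independent with equal variance $\sigma^2$. Unrolling the recursion shows that a sample $k$ frames old carries weight $(1-w)\,w^k$:

$$
\begin{aligned}
h_t &= (1-w)\sum_{k=0}^{\infty} w^k\, r_{t-k},\\
\operatorname{Var}(h_t) &= (1-w)^2 \sum_{k=0}^{\infty} w^{2k}\,\sigma^2
  = \frac{(1-w)^2}{1-w^2}\,\sigma^2
  = \frac{1-w}{1+w}\,\sigma^2 .
\end{aligned}
$$

The filtered value therefore has the variance of a plain average of $N_{\text{eff}} = (1+w)/(1-w)$ samples; for example, $w = 0.9$ gives $N_{\text{eff}} = 19$. Raising $w$ lowers noise but lengthens the response time, which is the trade-off described above.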

We update the exponential history buffer using the equation

$$h_t = h_{t-1}\,w + r\,(1 - w),$$

where $h_t$ represents a value in the history buffer at time $t$, $w$ is the convergence weight, and $r$ is a newly computed value. We would like to steer the convergence weight according to changes in the scene, and this could be done based on the per-pixel velocity. This would provide a solution for temporal ghosting artifacts and high-quality convergence for near-static elements.

Figure 7.6. We prepare the expected sample distribution set. The sample set is divided into N subsets (four in this case), one for each consecutive frame. With each new frame, missing subsets are sampled from previous frames by exponential history buffer lookup. Older samples lose weight with each iteration, so the sampling pattern must repeat after N frames to guarantee continuity.

(Figure 7.6 labels: distribution set; per-frame subsets for frames t − 3, t − 2, t − 1, t, and t + 1.)
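The history update, together with the velocity-based steering suggested for the convergence weight, can be sketched as follows. The linear falloff in `ConvergenceWeight` and its `wStatic` and `maxSpeed` parameters are assumptions; the text only proposes deriving the weight from per-pixel velocity. Fast-moving pixels get a low history weight and lean on fresh data, which limits ghosting, while static pixels keep converging.

```cpp
#include <algorithm>
#include <cassert>

// Exponential history update: h_t = h_{t-1} * w + r * (1 - w).
float UpdateHistory(float history, float fresh, float w)
{
    assert(w >= 0.0f && w <= 1.0f);
    return history * w + fresh * (1.0f - w);
}

// Hypothetical steering: full weight wStatic for static pixels, falling
// linearly to zero at maxSpeed (pixels per frame) and beyond.
float ConvergenceWeight(float speedPixels, float wStatic, float maxSpeed)
{
    assert(maxSpeed > 0.0f);
    const float t = std::min(std::max(speedPixels / maxSpeed, 0.0f), 1.0f);
    return wStatic * (1.0f - t);
}
```

With w = 0.5 and a constant fresh value of 1, a history starting at 0 goes to 0.5, 0.75, and 0.875 over three frames; a larger w converges more slowly but averages over more frames.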

Special care must be taken when new samples are calculated. We do not want stale data in the cache, so each new frame must bring additional information to the buffer. When rendering with multisampling, we want to use a different set of samples with each new frame. However, sample sets should repeat after N frames, where N is the number of frames being cached (see Figure 7.6), which improves temporal stability. With all these improvements, we obtain a highly efficient reprojection caching scheme. Listing 7.2 presents a simplified solution used during our SSAO step.

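```hlsl
float4 ReproCache(vertexOutput IN)
{
    // Current frame: fresh (SSAO) value in .x; .w is passed to
    // WorldSpaceFast as the depth for position reconstruction.
    float4 ActiveFrame = tex2D(gSampler0PT, IN.UV.xy);
    float freshData = ActiveFrame.x;
    float3 WorldSpacePos = WorldSpaceFast(IN.WorldEye, ActiveFrame.w);

    // Transform into the previous frame's camera space and keep that depth.
    float4 LastClipSpacePos = mul(float4(WorldSpacePos.xyz, 1.0),
                                  IN_CameraMatrixPrev);
    float lastDepth = LastClipSpacePos.z;

    // Project with the previous projection; map NDC [-1, 1] to UV [0, 1].
    LastClipSpacePos = mul(float4(LastClipSpacePos.xyz, 1.0),
                           IN_ProjectionMatrixPrev);
    LastClipSpacePos /= LastClipSpacePos.w;
    LastClipSpacePos.xy *= float2(0.5, -0.5);
    LastClipSpacePos.xy += float2(0.5, 0.5);

    // History: cached value in .x, cached depth in .w.
    float4 reproCache = tex2D(gSampler1PT, LastClipSpacePos.xy);
    float reproData = reproCache.x;

    // Depth test. Despite its name, missRate ends up as the history weight:
    // 1 for a hit, falling toward 0 as the depth error exceeds the threshold.
    float missRate = abs(lastDepth - reproCache.w) - IN_MissThreshold;
    missRate = clamp(missRate, 0.0, 100.0);
    missRate = 1.0 / (1.0 + missRate * IN_MissSlope);

    // Cache coordinates outside the history buffer are always misses.
    if (LastClipSpacePos.x < 0.0 || LastClipSpacePos.x > 1.0 ||
        LastClipSpacePos.y < 0.0 || LastClipSpacePos.y > 1.0)
        missRate = 0.0;

    // Blend the fresh value toward the history.
    missRate = saturate(missRate * IN_ConvergenceTime);
    freshData += (reproData - freshData) * missRate;
    // ... (remainder of Listing 7.2 not included in this excerpt)
```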
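For inspecting the weighting curve of Listing 7.2 outside a shader, here is a CPU transcription of its final steps. Parameter names mirror the listing's `IN_MissThreshold`, `IN_MissSlope`, and `IN_ConvergenceTime` constants; the function names are hypothetical, and the values in the example are arbitrary.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// History weight as computed in Listing 7.2: 1 means full reuse, 0 a miss.
float HistoryWeight(float lastDepth, float cachedDepth, float u, float v,
                    float missThreshold, float missSlope, float convergenceTime)
{
    float m = std::fabs(lastDepth - cachedDepth) - missThreshold;
    m = std::min(std::max(m, 0.0f), 100.0f);          // clamp(m, 0, 100)
    m = 1.0f / (1.0f + m * missSlope);                // smooth depth falloff
    if (u < 0.0f || u > 1.0f || v < 0.0f || v > 1.0f)
        m = 0.0f;                                     // off-screen: miss
    return std::min(std::max(m * convergenceTime, 0.0f), 1.0f);  // saturate
}

// freshData += (reproData - freshData) * weight
float BlendWithHistory(float freshData, float reproData, float weight)
{
    return freshData + (reproData - freshData) * weight;
}
```

With a threshold of 0.5, a slope of 10, and a convergence factor of 0.9, an exact depth match yields a history weight of 0.9, while a depth error of 1.5 lowers it to 0.9 / 11 ≈ 0.082.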

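The per-frame sample subsets of Figure 7.6 can be sketched as an interleaved split of the full distribution set: frame f uses every N-th sample starting at f mod N. The interleaving is one possible partition (the text does not prescribe one), and `Sample2` and `FrameSubset` are hypothetical names. Because the subset depends only on f mod N, the pattern repeats after N frames, and any N consecutive frames together cover the whole set exactly once.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Sample2 { float x, y; };

// Returns the subset of the distribution set used on a given frame.
std::vector<Sample2> FrameSubset(const std::vector<Sample2>& distribution,
                                 int frame, int N)
{
    assert(N > 0 && frame >= 0);
    const std::size_t phase = static_cast<std::size_t>(frame % N);
    const std::size_t stride = static_cast<std::size_t>(N);
    std::vector<Sample2> subset;
    for (std::size_t i = phase; i < distribution.size(); i += stride)
        subset.push_back(distribution[i]);
    return subset;
}
```

With eight samples and N = 4, frame 0 uses samples 0 and 4, frame 5 reuses frame 1's samples 1 and 5, and frames 2 through 5 together touch each sample once.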