409

26

AHighlyOptimizedPortable

MemoryManager

Jason Hughes

Steel Penny Games, Inc.

26.1Introduction

Every game has a memory manager of some sort. On PCs, this tends to be Mi-

crosoft’s C run-time library memory manager. On the various consoles, it’s likely

to either be the platform-specific memory manager that was written by the hard-

ware vendor or the one provided by the compiler company in its run-time library.

Do they all work the same way? No. Do they exhibit the same performance char-

acteristics? Absolutely no. Some allow the heap to become fragmented very

quickly, while others may be very slow for small allocations or when the number

of allocations becomes quite large, and still others may have a high per-allocation

overhead that invisibly eats away at your memory.

Memory allocation is a fundamental operation, thus, it has to satisfy a wide

number of use cases robustly and efficiently. This is a serious technical chal-

lenge. Even a good implementation can harm a game’s performance if exercised

in just the wrong way. A naive implementation can utterly cripple performance

or cause crashes due to artificial low-memory situations (e.g., fragmentation or

overhead). The good news is that most of the provided memory managers are

relatively efficient and work well enough for simple cases with few allocations.

After enough experiences where cross-platform stability and performance

came down strictly to the memory manager, however, you may be tempted to

implement your own that is scalable and easy to tune for best performance. These

days, with so many platforms to support, it’s a mark of quality for an engine to

run well across all machines.

410 26.AHighlyOptimizedPortableMemoryManager

AlternativestoRollingYourOwn

Surely someone has already written a good heap. Doug Lea’s dlmalloc is one of

the best and is typically the memory manager of choice on Linux. There are

many derivatives on the internet that claim some improvements, but there are

many flavors to choose from. dlmalloc is a good choice primarily because it’s

very stable and well-tested, it’s public domain code that is freely available, and it

runs on 32-bit and 64-bit systems of either endianness. Very compelling.

The main gripes with dlmalloc is that it is a mess to look at, it’s horrible to

debug in case of heap corruption, and it’s easily corrupted in case of a buffer

overrun. As for usability, there’s no way to create one-off heaps with it that have

been optimized for special use cases, where you can predict sizes and counts of

allocations. dlmalloc also has a fairly high per-allocation overhead that chews up

memory. As general-purpose heaps go, it’s excellent, but for games, we some-

times need custom solutions with more bells and whistles.

DesirableProperties

After writing about eight or nine different memory managers, a list of priorities

emerges from recognizing the importance of certain attributes that make a

memory manager good. Here are a few:

1. Must not thrash the CPU cache. High performance comes with limiting the

number of bytes that have to interact with RAM. Whatever is touched should

fit within a minimum number of cache lines, both to reduce memory latency

and to limit the amount of cache disruption to the rest of the program.

2. No searching for free space. The naive implementation of a memory manag-

er is a linked list of blocks that are marked free or empty. Scanning this list is

hugely expensive and slow under all circumstances. Good memory managers

have lookup tables of some sort that point to places where free blocks of

memory of various sizes are.

3. Minimum overhead per allocation. Reducing overhead in a memory manager

is almost like adding compression to memory—you can fit more data in the

same physical space.

4. Should be easy to debug. Sometimes memory problems happen, and it’s im-

portant to consider the difficulty in tracking down such issues. Sometimes

this means temporarily adding features to the memory manager. Ideally, the

debug build should do some basic instrumentation as it runs that determines

whether memory has been trampled without slowing down the system.

5. Should resist corruption. Most memory managers are organized such that

blocks of program data are sandwiched between heap tracking information.

26.2Overview 411

This is particularly fragile because buffer overruns tend to be utterly fatal to

the system, but not immediately. The system keeps running until the memory

manager has to allocate or free memory in that region and attempts to use a

pointer that is garbage. However, determining what exactly is smashing the

heap is a challenge, but one made less critical if the memory manager itself

doesn’t crash in response to a programming error. Ideally, program data

should only be able to trample other program data, and heap tracking infor-

mation is separated and thus protected.

6. Should not fragment easily. Fragmentation leads to situations where a small

allocation in the middle of memory prevents a large allocation from succeed-

ing, even though the total number of available bytes (if you add the separate

free chunks together) is more than enough to accommodate the allocation.

It’s also a common failure of memory managers to spend a lot of run-time

cycles reducing existing fragmentation or actively attempting to prevent it.

The wrong approach is creating complex policies that define where alloca-

tions should go, in what order allocations should be placed in the heap, or

how to coalesce adjacent free blocks. Some systems even go to the extent of

relocating memory to manually defragment the heap, similar to Java’s

memory model. In the end, preventing fragmentation should be a design cri-

terion that is inherent in the system, not paid for at run time.

26.2Overview

The following design is one such system that satisfies the minimum criteria, as

specified in the introduction, though there are many others possible. There are

certain trade-offs that are often made during large design processes that ultimate-

ly shape the final software product. Although we describe a specific instance of

the memory manager, the version found on the website is actually a template-

based solution that allows reconfiguration of many parameters so you can easily

experiment with what is right for your specific situation. In fact, it would be easy

to capture all allocations and deletions in a log file with your current memory

manager, then replay the operations in a unit test setting to measure exactly what

performance and memory fragmentation would be under realistic heap

conditions.

FightingFragmentationIsJob#1

From experience, the greatest problem that needs to be addressed by a games

memory manager is fragmentation. There are two types of fragmentation: inter-

nal and external.

412 26.AHighlyOptimizedPortableMemoryManager

■ Internal fragmentation occurs when a single allocation is requested for M

bytes, but the memory manager cannot allocate exactly M bytes, so it rounds

up by an extra N bytes, so

MN

bytes are reserved for the allocation. Inter-

nal fragmentation can be considered a factor of systemic overhead, and is

tolerable if kept to a minimum, because all that unusable memory will be ful-

ly reclaimed when the block is freed.

■

External fragmentation occurs when memory is known to be free and availa-

ble by the memory manager but has been subdivided by allocations so that it

is not contiguous and, thus, cannot be allocated in a single large block. The

degree of fragmentation can be measured in many ways, but developers are

traditionally concerned with the “big block,” that is, the single largest alloca-

tion possible at any given time. The ratio of the big block to total available

memory is another meaningful way to express how fragmented memory is.

As that ratio tends toward zero, the number of free regions increases while

the sizes of free spaces decrease, and the largest single allocation possible

becomes a fraction of total available memory.

External fragmentation, henceforth referred to simply as fragmentation, is the

source of many apparent stability problems in otherwise well-written programs.

For games, this is particularly challenging because in this industry, memory tends

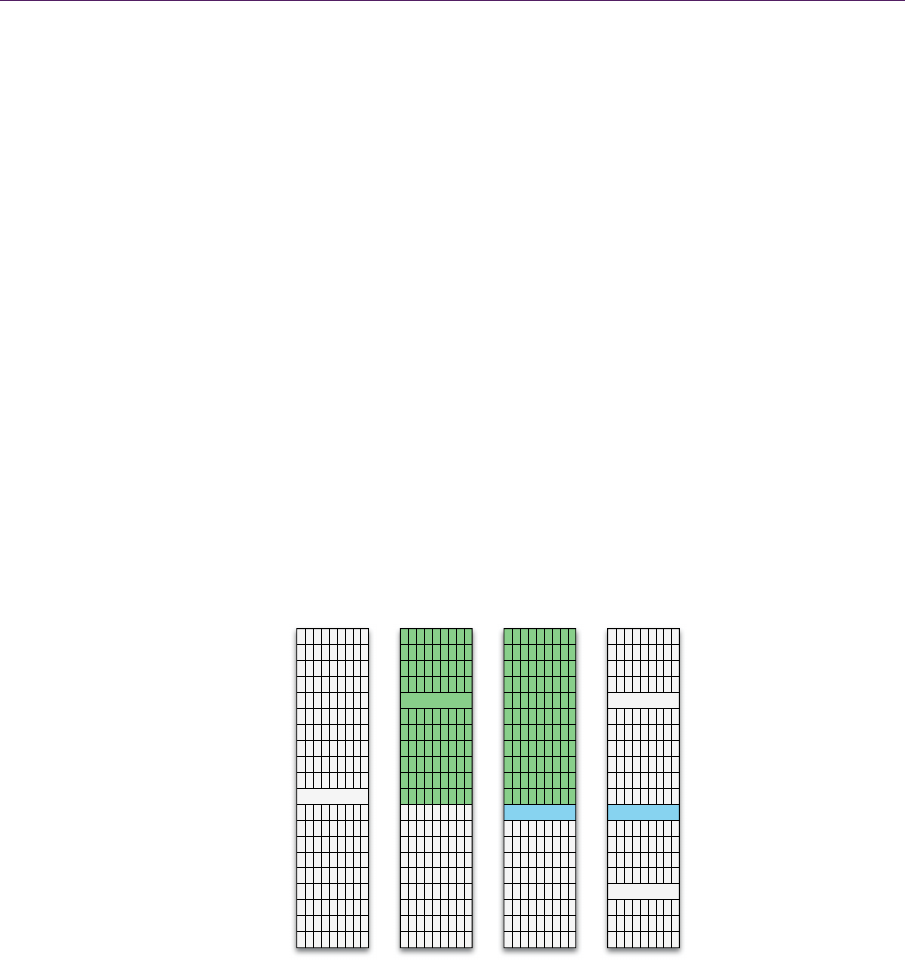

Figure 26.1. From left to right: the heap is free and unfragmented, one large allocation is

made, one small allocation is made, the large allocation is freed. In the rightmost dia-

gram, the heap is partitioned in half and exhibits an approximately 0.5 fragmentation

ratio.

FreeSpace

LargeAlloc

SmallAlloc Sm allAlloc

FreeSpace

FreeSpace

26.2Overview 413

to be considerably more limited, and players are more likely to play the game

without resetting for hours or even days on end. Thus, the dreaded “uptime

crash” is almost unavoidable without special precautions, careful planning, and

perhaps a custom memory management strategy that attempts to avoid fragmen-

tation automatically.

For further clarification of how fragmentation can occur within a program,

see Figure 26.1. Fragmentation can be demonstrated in three simple operations:

allocate twice and free once. If one allocation is quite large and is followed by a

small allocation, then once you release the large block back to the system, the

memory manager now has an approximate 0.5 fragmentation ratio. If the next

allocation is slightly larger than 50 percent of the total available memory, it will

fail.

Most decent memory managers have allocation strategies that react different-

ly based on the size of the allocation. Often, there is a specific handler for tiny

allocations (under 256 bytes, for instance) as well as a general allocation method

for everything larger. The idea behind this is primarily to reduce overhead in al-

locating very small blocks of memory that tend to represent the lion’s share of

allocations in most C/C++ programs. One happy consequence is that it prevents

that group of allocations from possibly splitting the big block in half and causing

fragmentation by preventing them from coming from the same memory

pool.

By extending this understanding to the design of a memory manager, it is

possible to reduce external fragmentation, at some small expense of internal

fragmentation. To illuminate by exaggeration, if you round up all of your alloca-

tions to the largest size you’ll ever allocate, then no external fragmentation is

important because no allocation will ever fail due to a fragmented heap, and any

allocation that gets freed can always fit any other allocation that may come in the

future. Of course, all of your memory would be exhausted before that could hap-

pen! Preposterous as it is, scaling back the idea and applying it to smaller alloca-

tions, where rounding up the size is less significant, makes the concept viable.

The trick is to make it fast and limit the amount of internal fragmentation (i.e.,

wasted space) the strategy produces.

Our approach is to make a very fast small block allocator (SBA) for alloca-

tions under 256 bytes, a reasonably fast medium block allocator (MBA) for allo-

cations that are larger than 256 bytes but smaller than a large allocation, and a

large block allocator (LBA) for any allocation of at least 4096 bytes. As men-

tioned above, the code on the website is templatized, so these numbers can be

modified trivially for your purposes, and when set to equal sizes, you can com-

pletely remove the SBA, the MBA, or both.

..................Content has been hidden....................

You can't read the all page of ebook, please click here login for view all page.